Author's home page: 政安晨

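Part of the column: TensorFlow与Keras机器学习实战

I hope this blog is useful to you. If anything falls short, corrections are welcome in the comments!

Goal of this article: predict income level using gated residual and variable selection networks.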

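Introduction

This example demonstrates how to use Gated Residual Networks (GRN) and Variable Selection Networks (VSN), proposed by Bryan Lim et al. in Temporal Fusion Transformers (TFT) for Interpretable Multi-horizon Time Series Forecasting, for structured data classification. GRNs give the model the flexibility to apply non-linear processing only where it is needed. VSNs allow the model to softly remove any unnecessary noisy inputs that could hurt performance. Together, these techniques help improve the learning capacity of deep neural network models.

Note that this example implements only the GRN and VSN components described in the paper, not the whole TFT model, because GRN and VSN are useful on their own for structured data learning tasks.

(To run the code, you need TensorFlow 2.3 or higher.)

Dataset

This example uses the United States Census Income Dataset provided by the UC Irvine Machine Learning Repository. The task is binary classification: determine whether a person earns more than 50K a year.

The dataset contains about 300K instances with 41 input features: 7 numerical features and 34 categorical features.

(Note from 政安晨: dear readers, you can browse the data yourselves at the UCI Machine Learning Repository.)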

Setup

import math
import numpy as np
import pandas as pd
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

Data preparation

First, we load the data from the UCI Machine Learning Repository into a Pandas DataFrame.

# Column names.
CSV_HEADER = [
    "age",
    "class_of_worker",
    "detailed_industry_recode",
    "detailed_occupation_recode",
    "education",
    "wage_per_hour",
    "enroll_in_edu_inst_last_wk",
    "marital_stat",
    "major_industry_code",
    "major_occupation_code",
    "race",
    "hispanic_origin",
    "sex",
    "member_of_a_labor_union",
    "reason_for_unemployment",
    "full_or_part_time_employment_stat",
    "capital_gains",
    "capital_losses",
    "dividends_from_stocks",
    "tax_filer_stat",
    "region_of_previous_residence",
    "state_of_previous_residence",
    "detailed_household_and_family_stat",
    "detailed_household_summary_in_household",
    "instance_weight",
    "migration_code-change_in_msa",
    "migration_code-change_in_reg",
    "migration_code-move_within_reg",
    "live_in_this_house_1_year_ago",
    "migration_prev_res_in_sunbelt",
    "num_persons_worked_for_employer",
    "family_members_under_18",
    "country_of_birth_father",
    "country_of_birth_mother",
    "country_of_birth_self",
    "citizenship",
    "own_business_or_self_employed",
    "fill_inc_questionnaire_for_veterans_admin",
    "veterans_benefits",
    "weeks_worked_in_year",
    "year",
    "income_level",
]

data_url = "https://archive.ics.uci.edu/ml/machine-learning-databases/census-income-mld/census-income.data.gz"
data = pd.read_csv(data_url, header=None, names=CSV_HEADER)

test_data_url = "https://archive.ics.uci.edu/ml/machine-learning-databases/census-income-mld/census-income.test.gz"
test_data = pd.read_csv(test_data_url, header=None, names=CSV_HEADER)

print(f"Data shape: {data.shape}")
print(f"Test data shape: {test_data.shape}")

Output:

Data shape: (199523, 42)
Test data shape: (99762, 42)

We convert the target column from string to integer.

# Label 0 for incomes below 50K, 1 otherwise.
data["income_level"] = data["income_level"].apply(
    lambda x: 0 if x == " - 50000." else 1
)
test_data["income_level"] = test_data["income_level"].apply(
    lambda x: 0 if x == " - 50000." else 1
)

Then, we split the dataset into training and validation sets.

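# Randomly keep roughly 85% of the rows for training; the rest is for validation.
random_selection = np.random.rand(len(data.index)) <= 0.85
train_data = data[random_selection]
valid_data = data[~random_selection]

Finally, we store the training, validation, and test splits locally as CSV files.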

train_data_file = "train_data.csv"
valid_data_file = "valid_data.csv"
test_data_file = "test_data.csv"

train_data.to_csv(train_data_file, index=False, header=False)
valid_data.to_csv(valid_data_file, index=False, header=False)
test_data.to_csv(test_data_file, index=False, header=False)

Define dataset metadata

Here, we define the metadata of the dataset that will be used to read and parse the data into input features, and to encode the input features according to their types.

# Target feature name.
TARGET_FEATURE_NAME = "income_level"
# Weight column name.
WEIGHT_COLUMN_NAME = "instance_weight"
# Numeric feature names.
NUMERIC_FEATURE_NAMES = [
    "age",
    "wage_per_hour",
    "capital_gains",
    "capital_losses",
    "dividends_from_stocks",
    "num_persons_worked_for_employer",
    "weeks_worked_in_year",
]
# Categorical features and their vocabulary lists.
# Note that every categorical value is converted with str() so that
# numeric-looking codes are treated as strings.
CATEGORICAL_FEATURES_WITH_VOCABULARY = {
    feature_name: sorted([str(value) for value in list(data[feature_name].unique())])
    for feature_name in CSV_HEADER
    if feature_name
    not in list(NUMERIC_FEATURE_NAMES + [WEIGHT_COLUMN_NAME, TARGET_FEATURE_NAME])
}
# All features names.
FEATURE_NAMES = NUMERIC_FEATURE_NAMES + list(
    CATEGORICAL_FEATURES_WITH_VOCABULARY.keys()
)
# Feature default values.
COLUMN_DEFAULTS = [
    [0.0]
    if feature_name in NUMERIC_FEATURE_NAMES + [TARGET_FEATURE_NAME, WEIGHT_COLUMN_NAME]
    else ["NA"]
    for feature_name in CSV_HEADER
]

Create a tf.data.Dataset for training and evaluation

We create an input function that reads and parses the files, and converts features and labels into a [tf.data.Dataset](https://www.tensorflow.org/api_docs/python/tf/data/Dataset) for training and evaluation.

from tensorflow.keras.layers import StringLookup


def process(features, target):
    for feature_name in features:
        if feature_name in CATEGORICAL_FEATURES_WITH_VOCABULARY:
            # Cast categorical feature values to string.
            features[feature_name] = tf.cast(features[feature_name], tf.dtypes.string)
    # Get the instance weight.
    weight = features.pop(WEIGHT_COLUMN_NAME)
    return features, target, weight


def get_dataset_from_csv(csv_file_path, shuffle=False, batch_size=128):
    dataset = tf.data.experimental.make_csv_dataset(
        csv_file_path,
        batch_size=batch_size,
        column_names=CSV_HEADER,
        column_defaults=COLUMN_DEFAULTS,
        label_name=TARGET_FEATURE_NAME,
        num_epochs=1,
        header=False,
        shuffle=shuffle,
    ).map(process)

    return dataset
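The `(features, target, weight)` triple returned by `process` matters because Keras `fit` and `evaluate` interpret the third element of a dataset tuple as per-example sample weights. This is probably also why the loss values reported during training are in the hundreds rather than below 1. The following is a minimal pure-Python sketch of the weight-handling step only; `split_weight` and the two-row batch are hypothetical stand-ins, and real batches hold tensors rather than lists.

```python
# Hypothetical stand-in for one parsed batch; the values are made up.
WEIGHT_COLUMN_NAME = "instance_weight"


def split_weight(features, target):
    """Pop the weight column out of the features, as `process` does."""
    features = dict(features)  # copy so the caller's dict is left untouched
    weight = features.pop(WEIGHT_COLUMN_NAME)
    return features, target, weight


batch_features = {
    "age": [39.0, 52.0],
    "education": ["Bachelors degree(BA AB BS)", "High school graduate"],
    "instance_weight": [1700.09, 1053.55],
}
batch_target = [0, 1]

x, y, sample_weight = split_weight(batch_features, batch_target)
print(sorted(x))       # feature names only, weight column removed
print(sample_weight)   # passed on as per-example weights
```

Because the weight column is removed from the feature dictionary, it never reaches the model as an input.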

Create model inputs

def create_model_inputs():
    inputs = {}
    for feature_name in FEATURE_NAMES:
        if feature_name in NUMERIC_FEATURE_NAMES:
            inputs[feature_name] = layers.Input(
                name=feature_name, shape=(), dtype=tf.float32
            )
        else:
            inputs[feature_name] = layers.Input(
                name=feature_name, shape=(), dtype=tf.string
            )
    return inputs

Encode input features

For categorical features, we encode them using layers.Embedding, with encoding_size as the embedding dimension. For numerical features, we apply a linear transformation using layers.Dense to project each feature into an encoding_size-dimensional vector. This way, all encoded features have the same dimensionality.
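To make the two encoding paths concrete, here is a small pure-Python sketch, not the Keras implementation. The vocabulary, the random weights, and the helper names `encode_categorical` and `encode_numeric` are all hypothetical and chosen for illustration only.

```python
import random

random.seed(0)
encoding_size = 4  # illustrative value; the article uses 16 later on

# Categorical path: string -> vocabulary index -> row of an embedding table.
vocabulary = sorted(["Female", "Male"])  # hypothetical vocabulary
embedding_table = [
    [random.uniform(-1, 1) for _ in range(encoding_size)] for _ in vocabulary
]


def encode_categorical(value):
    index = vocabulary.index(value)  # conceptually what StringLookup does
    return embedding_table[index]    # conceptually what Embedding does


# Numeric path: scalar -> linear projection to encoding_size values.
dense_kernel = [random.uniform(-1, 1) for _ in range(encoding_size)]
dense_bias = [0.0] * encoding_size


def encode_numeric(value):
    return [value * w + b for w, b in zip(dense_kernel, dense_bias)]


encoded_sex = encode_categorical("Male")
encoded_age = encode_numeric(39.0)
print(len(encoded_sex), len(encoded_age))  # both equal encoding_size
```

Since both paths produce vectors of the same length, the features can later be stacked and combined by the variable selection network.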

def encode_inputs(inputs, encoding_size):
    encoded_features = []
    for feature_name in inputs:
        if feature_name in CATEGORICAL_FEATURES_WITH_VOCABULARY:
            vocabulary = CATEGORICAL_FEATURES_WITH_VOCABULARY[feature_name]
            # Create a lookup to convert string values to integer indices.
            # Since we are not using a mask token nor expecting any out of vocabulary
            # (oov) token, we set mask_token to None and num_oov_indices to 0.
            index = StringLookup(
                vocabulary=vocabulary, mask_token=None, num_oov_indices=0
            )
            # Convert the string input values into integer indices.
            value_index = index(inputs[feature_name])
            # Create an embedding layer with the specified dimensions.
            embedding_encoder = layers.Embedding(
                input_dim=len(vocabulary), output_dim=encoding_size
            )
            # Convert the index values to embedding representations.
            encoded_feature = embedding_encoder(value_index)
        else:
            # Project the numeric feature to encoding_size using linear transformation.
            encoded_feature = tf.expand_dims(inputs[feature_name], -1)
            encoded_feature = layers.Dense(units=encoding_size)(encoded_feature)
        encoded_features.append(encoded_feature)
    return encoded_features

Implement the Gated Linear Unit

Gated Linear Units (GLUs) provide the flexibility to suppress input that is not relevant for a given task.
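As a rough numerical illustration of that suppression, the sketch below computes a GLU by hand in pure Python: a linear branch multiplied element-wise by a sigmoid gate. The weights are hand-picked assumptions (an identity linear branch and a gate driven only by its bias), not values a trained model would necessarily learn.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(x, kernel, bias):
    """Plain dense layer: one output per column of `kernel`."""
    return [
        sum(xi * kernel[i][j] for i, xi in enumerate(x)) + bias[j]
        for j in range(len(bias))
    ]


def gated_linear_unit(x, lin_kernel, lin_bias, gate_kernel, gate_bias):
    linear = dense(x, lin_kernel, lin_bias)
    gate = [sigmoid(z) for z in dense(x, gate_kernel, gate_bias)]
    return [l * g for l, g in zip(linear, gate)]


x = [1.0, -2.0]
identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]

# Gate pre-activations of +20 and -20: the first unit passes almost
# unchanged, the second is almost fully suppressed.
out = gated_linear_unit(x, identity, [0.0, 0.0], zeros, [20.0, -20.0])
print(out)
```

A gate value close to 1 lets the linear branch through, while a value close to 0 removes that unit's contribution.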

class GatedLinearUnit(layers.Layer):
    def __init__(self, units):
        super().__init__()
        self.linear = layers.Dense(units)
        self.sigmoid = layers.Dense(units, activation="sigmoid")

    def call(self, inputs):
        # Element-wise product of the linear branch and the sigmoid gate.
        return self.linear(inputs) * self.sigmoid(inputs)

Implement the Gated Residual Network

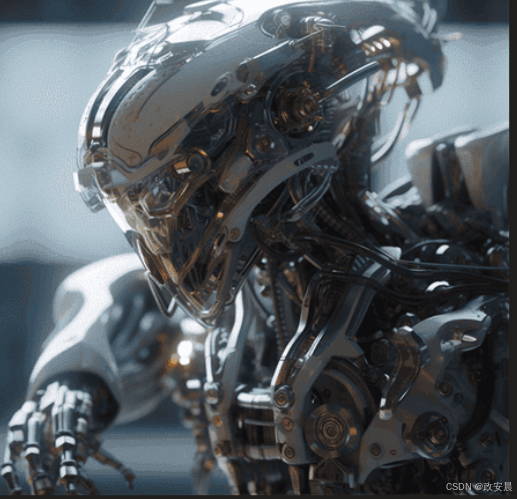

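The Gated Residual Network (GRN) works as follows:

1. Applies the non-linear ELU transformation to the inputs.
2. Applies a linear transformation followed by dropout.
3. Applies the GLU and adds the original inputs to the output of the GLU to perform a skip (residual) connection.
4. Applies layer normalization and produces the output.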
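The four steps can be written compactly as follows. The symbols are assumed notation introduced here for illustration: $a$ is the input, the $W_i$ and $b_i$ are the weights and biases of the individual dense layers, $\sigma$ is the sigmoid, and $\odot$ is the element-wise product. The optional context input used in the paper is left out, as this example does not use it.

```latex
\begin{aligned}
\eta_2 &= \mathrm{ELU}(W_2\, a + b_2) \\
\eta_1 &= \mathrm{Dropout}(W_1\, \eta_2 + b_1) \\
\mathrm{GLU}(\eta_1) &= (W_4\, \eta_1 + b_4) \odot \sigma(W_5\, \eta_1 + b_5) \\
\mathrm{GRN}(a) &= \mathrm{LayerNorm}\big(a + \mathrm{GLU}(\eta_1)\big)
\end{aligned}
```

When the dimension of $a$ differs from the number of units, the residual term cannot be added directly, so $a$ is first passed through an extra linear projection to the right size.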

class GatedResidualNetwork(layers.Layer):
    def __init__(self, units, dropout_rate):
        super().__init__()
        self.units = units
        self.elu_dense = layers.Dense(units, activation="elu")
        self.linear_dense = layers.Dense(units)
        self.dropout = layers.Dropout(dropout_rate)
        self.gated_linear_unit = GatedLinearUnit(units)
        self.layer_norm = layers.LayerNormalization()
        self.project = layers.Dense(units)

    def call(self, inputs):
        x = self.elu_dense(inputs)
        x = self.linear_dense(x)
        x = self.dropout(x)
        # Project the inputs so that the residual addition has matching shapes.
        if inputs.shape[-1] != self.units:
            inputs = self.project(inputs)
        x = inputs + self.gated_linear_unit(x)
        x = self.layer_norm(x)
        return x

Implement the Variable Selection Network

The Variable Selection Network (VSN) works as follows:

1. Applies a GRN to each feature individually.
2. Applies a GRN to the concatenation of all the features, followed by a softmax to produce feature weights.
3. Produces a weighted sum of the outputs of the individual GRNs.

Note that the output of the VSN is [batch_size, encoding_size], regardless of the number of input features.
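The weighting in steps 2 and 3 can be sketched for a single example in pure Python. This is only an illustration of the softmax-weighted sum: the per-feature vectors and the scores are made-up numbers standing in for GRN outputs, and `weighted_sum` is a hypothetical helper.

```python
import math


def softmax(scores):
    shifted = [s - max(scores) for s in scores]  # subtract max for stability
    exps = [math.exp(s) for s in shifted]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(feature_vectors, scores):
    """Combine per-feature vectors using softmax weights over the features."""
    weights = softmax(scores)
    size = len(feature_vectors[0])
    combined = [
        sum(w * vec[k] for w, vec in zip(weights, feature_vectors))
        for k in range(size)
    ]
    return combined, weights


# Three hypothetical per-feature outputs (encoding_size = 2) for one example.
vectors = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
# A very low score for the third feature "softly removes" it.
combined, weights = weighted_sum(vectors, [2.0, 2.0, -30.0])
print(weights)
print(combined)
```

The combined vector has length `encoding_size` whether there are three features or forty, which is the shape property noted above.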

class VariableSelection(layers.Layer):
    def __init__(self, num_features, units, dropout_rate):
        super().__init__()
        self.grns = list()
        # Create a GRN for each feature independently
        for _ in range(num_features):
            grn = GatedResidualNetwork(units, dropout_rate)
            self.grns.append(grn)
        # Create a GRN for the concatenation of all the features
        self.grn_concat = GatedResidualNetwork(units, dropout_rate)
        self.softmax = layers.Dense(units=num_features, activation="softmax")

    def call(self, inputs):
        # Feature weights, shape [batch_size, num_features, 1].
        v = layers.concatenate(inputs)
        v = self.grn_concat(v)
        v = tf.expand_dims(self.softmax(v), axis=-1)

        # Per-feature GRN outputs, shape [batch_size, num_features, units].
        x = []
        for idx, feature in enumerate(inputs):
            x.append(self.grns[idx](feature))
        x = tf.stack(x, axis=1)

        # Weighted sum over the features, shape [batch_size, units].
        outputs = tf.squeeze(tf.matmul(v, x, transpose_a=True), axis=1)
        return outputs

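Create the Gated Residual and Variable Selection Networks model

def create_model(encoding_size):
    inputs = create_model_inputs()
    feature_list = encode_inputs(inputs, encoding_size)
    num_features = len(feature_list)

    # dropout_rate is read from the module-level hyperparameter defined below,
    # so it must be set before create_model is called.
    features = VariableSelection(num_features, encoding_size, dropout_rate)(
        feature_list
    )

    outputs = layers.Dense(units=1, activation="sigmoid")(features)
    model = keras.Model(inputs=inputs, outputs=outputs)
    return model

Compile, train, and evaluate the model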

learning_rate = 0.001
dropout_rate = 0.15
batch_size = 265
num_epochs = 20
encoding_size = 16

model = create_model(encoding_size)
model.compile(
    optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
    loss=keras.losses.BinaryCrossentropy(),
    metrics=[keras.metrics.BinaryAccuracy(name="accuracy")],
)

# Create an early stopping callback.
early_stopping = tf.keras.callbacks.EarlyStopping(
    monitor="val_loss", patience=5, restore_best_weights=True
)

print("Start training the model...")
train_dataset = get_dataset_from_csv(
    train_data_file, shuffle=True, batch_size=batch_size
)
valid_dataset = get_dataset_from_csv(valid_data_file, batch_size=batch_size)
model.fit(
    train_dataset,
    epochs=num_epochs,
    validation_data=valid_dataset,
    callbacks=[early_stopping],
)
print("Model training finished.")

print("Evaluating model performance...")
test_dataset = get_dataset_from_csv(test_data_file, batch_size=batch_size)
_, accuracy = model.evaluate(test_dataset)
print(f"Test accuracy: {round(accuracy * 100, 2)}%")

Start training the model...

Epoch 1/20

640/640 [==============================] - 31s 29ms/step - loss: 253.8570 - accuracy: 0.9468 - val_loss: 229.4024 - val_accuracy: 0.9495

Epoch 2/20

640/640 [==============================] - 17s 25ms/step - loss: 229.9359 - accuracy: 0.9497 - val_loss: 223.4970 - val_accuracy: 0.9505

Epoch 3/20

640/640 [==============================] - 17s 25ms/step - loss: 225.5644 - accuracy: 0.9504 - val_loss: 222.0078 - val_accuracy: 0.9515

Epoch 4/20

640/640 [==============================] - 16s 25ms/step - loss: 222.2086 - accuracy: 0.9512 - val_loss: 218.2707 - val_accuracy: 0.9522

Epoch 5/20

640/640 [==============================] - 17s 25ms/step - loss: 218.0359 - accuracy: 0.9523 - val_loss: 217.3721 - val_accuracy: 0.9528

Epoch 6/20

640/640 [==============================] - 17s 26ms/step - loss: 214.8348 - accuracy: 0.9529 - val_loss: 210.3546 - val_accuracy: 0.9543

Epoch 7/20

640/640 [==============================] - 17s 26ms/step - loss: 213.0984 - accuracy: 0.9534 - val_loss: 210.2881 - val_accuracy: 0.9544

Epoch 8/20

640/640 [==============================] - 17s 26ms/step - loss: 211.6379 - accuracy: 0.9538 - val_loss: 209.3327 - val_accuracy: 0.9550

Epoch 9/20

640/640 [==============================] - 17s 26ms/step - loss: 210.7283 - accuracy: 0.9541 - val_loss: 209.5862 - val_accuracy: 0.9543

Epoch 10/20

640/640 [==============================] - 17s 26ms/step - loss: 209.9062 - accuracy: 0.9538 - val_loss: 210.1662 - val_accuracy: 0.9537

Epoch 11/20

640/640 [==============================] - 16s 25ms/step - loss: 209.6323 - accuracy: 0.9540 - val_loss: 207.9528 - val_accuracy: 0.9552

Epoch 12/20

640/640 [==============================] - 16s 25ms/step - loss: 208.7843 - accuracy: 0.9544 - val_loss: 207.5303 - val_accuracy: 0.9550

Epoch 13/20

640/640 [==============================] - 21s 32ms/step - loss: 207.9983 - accuracy: 0.9544 - val_loss: 206.8800 - val_accuracy: 0.9557

Epoch 14/20

640/640 [==============================] - 18s 28ms/step - loss: 207.2104 - accuracy: 0.9544 - val_loss: 216.0859 - val_accuracy: 0.9535

Epoch 15/20

640/640 [==============================] - 16s 25ms/step - loss: 207.2254 - accuracy: 0.9543 - val_loss: 206.7765 - val_accuracy: 0.9555

Epoch 16/20

640/640 [==============================] - 16s 25ms/step - loss: 206.6704 - accuracy: 0.9546 - val_loss: 206.7508 - val_accuracy: 0.9560

Epoch 17/20

640/640 [==============================] - 19s 30ms/step - loss: 206.1322 - accuracy: 0.9545 - val_loss: 205.9638 - val_accuracy: 0.9562

Epoch 18/20

640/640 [==============================] - 21s 31ms/step - loss: 205.4764 - accuracy: 0.9545 - val_loss: 206.0258 - val_accuracy: 0.9561

Epoch 19/20

640/640 [==============================] - 16s 25ms/step - loss: 204.3614 - accuracy: 0.9550 - val_loss: 207.1424 - val_accuracy: 0.9560

Epoch 20/20

640/640 [==============================] - 16s 25ms/step - loss: 203.9543 - accuracy: 0.9550 - val_loss: 206.4697 - val_accuracy: 0.9554

Model training finished.

Evaluating model performance...

377/377 [==============================] - 4s 11ms/step - loss: 204.5099 - accuracy: 0.9547

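Test accuracy: 95.47%

You should achieve more than 95% accuracy on the test set.

To increase the learning capacity of the model, you can try increasing the encoding_size value, or stacking multiple GRN layers on top of the VSN layer. This may also require increasing the dropout_rate value to avoid overfitting.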
