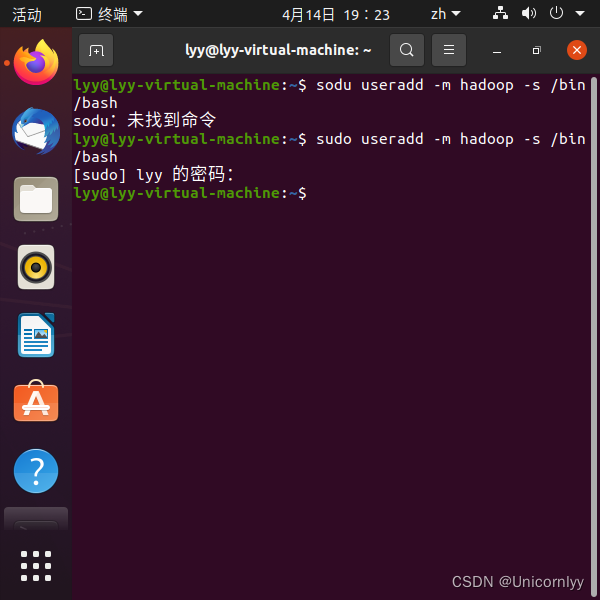

A、添加hadoop用户

1、添加用户组

[root@hadoop00 ~]# groupadd hadoop

2、添加用户并分配用户组

[root@hadoop00 ~]# useradd -g hadoop hadoop

3、修改hadoop用户密码

[root@hadoop00 ~]# passwd hadoop

B、配置本地YUM源

1、上传系统安装镜像到虚拟机服务器的/root目录

2、新建挂载点目录

[root@hadoop00 ~]# mkdir /mnt/centos

3、挂载镜像

[root@hadoop00 ~]# mount -o loop /root/CentOS-6.8-x86_64-bin-DVD1.iso /mnt/centos

4、切换目录

[root@hadoop00 ~]# cd /etc/yum.repos.d

5、新建备份目录

[root@hadoop00 ~]# mkdir bakup

6、备份文件,把所有以Cent开头的文件全部备份移动到bakup目录中

[root@hadoop00 ~]# mv Cent* bakup

7、新建YUM源文件,添加如下内容

[root@hadoop00 ~]# vi local.repo

[local]

name=local

baseurl=file:///mnt/centos

enabled=1

gpgcheck=0

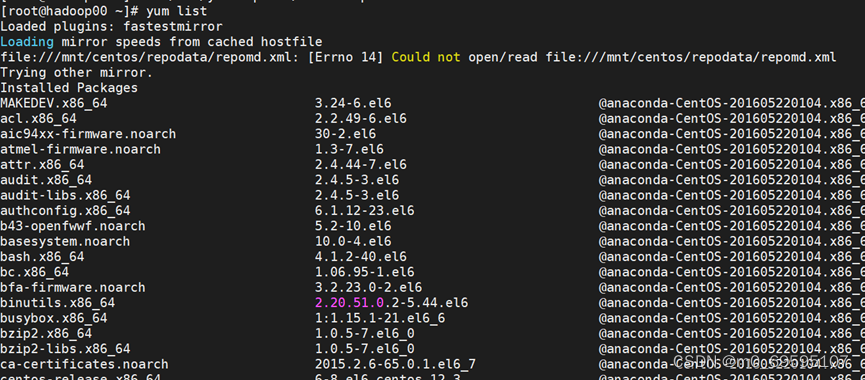

8、验证YUM源,出现如下可用包,表示本地YUM源配置成功。

[root@hadoop00 ~]# yum list

C、SSH无密码配置

1、查看软件openssh与rsync安装状态

[root@hadoop00 ~]# rpm -qa|grep openssh

[root@hadoop00 ~]# rpm -qa|grep rsync

2、安装openssh与rsync

[root@hadoop00 ~]# yum -y install openssh

[root@hadoop00 ~]# yum install openssh-clients.x86_64 -y

[root@hadoop00 ~]# yum -y install rsync

3、切换到hadoop用户

[root@hadoop00 ~]# su - hadoop

4、生成SSH密码对

[root@hadoop00 ~]# ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

5、将id_dsa.pub追加到授权的key文件中

[root@hadoop00 ~]# cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

6、设置授权key文件权限

[root@hadoop00 ~]# chmod 600 ~/.ssh/authorized_keys

7、测试ssh连接

[root@hadoop00 ~]# ssh hadoop00

无需输入密码,即可完成登录,表示SSH配置成功。

D、Hadoop安装与配置

1、将Hadoop安装压缩包hadoop-2.7.3.tar.gz,上传至/root目录

2、将压缩包解压至/usr目录

[root@hadoop00 ~]# tar zxvf /root/hadoop-2.7.3.tar.gz -C /usr/

3、修改文件夹名称

[root@hadoop00 ~]# mv /usr/hadoop-2.7.3/ /usr/hadoop

4、将hadoop文件夹授权给hadoop用户

[root@hadoop00 ~]# chown -R hadoop:hadoop /usr/hadoop/

5、设置环境变量

[root@hadoop00 ~]# vi /etc/profile

(文档末尾追加如下内容)

export HADOOP_HOME=/usr/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

6、使环境变量生效

[root@hadoop00 ~]# source /etc/profile

7、测试环境变量设置

[root@hadoop00 ~]# hadoop version

E、配置HDFS

1、切换至Hadoop用户

[root@hadoop00 ~]# su - hadoop

2、修改hadoop-env.sh

[hadoop@hadoop00 ~]$ cd /usr/hadoop/etc/hadoop/

[hadoop@hadoop00 ~]$ vi hadoop-env.sh

(文档末尾追加如下内容)

export JAVA_HOME=/usr/java/jdk1.8.0_162

3、修改core-site.xml

[hadoop@hadoop00 ~]$ vi core-site.xml

(添加如下内容,注意标签对的匹配及唯一性)

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop00:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/hadoop/tmp/</value>

<description>A base for other temporary directories.</description>

</property>

</configuration>

4、修改hdfs-site.xml

[hadoop@hadoop00 ~]$ vi hdfs-site.xml

(添加如下内容)

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

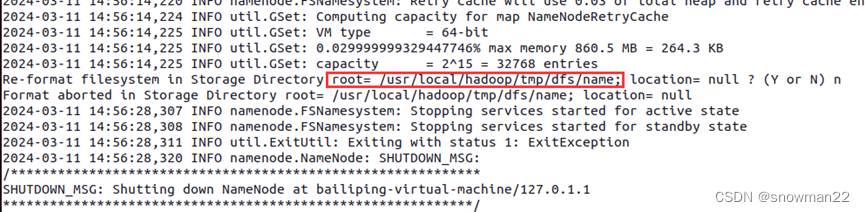

5、格式化hdfs

[hadoop@hadoop00 ~]$ hdfs namenode -format

注:出现Exiting with status 0即为成功

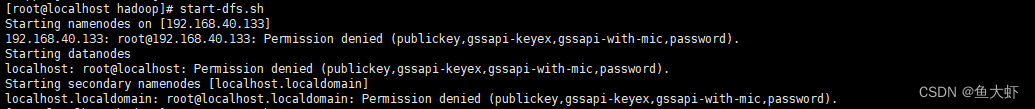

6、启动hdfs

[hadoop@hadoop00 ~]$ start-dfs.sh

(停止命令)# stop-dfs.sh

注:输出如下内容,则启动成功

15/09/21 18:09:13 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting namenodes on [Hadoop.Master]

Hadoop.Master: starting namenode, logging to /usr/hadoop/logs/hadoop-hadoop-namenode-Hadoop.Master.out

Hadoop.Master: starting datanode, logging to /usr/hadoop/logs/hadoop-hadoop-datanode-Hadoop.Master.out

Starting secondary namenodes [0.0.0.0]

The authenticity of host '0.0.0.0 (0.0.0.0)' can't be established.

RSA key fingerprint is b5:96:b2:68:e6:63:1a:3c:7d:08:67:4b:ae:80:e2:e3.

Are you sure you want to continue connecting (yes/no)? yes

0.0.0.0: Warning: Permanently added '0.0.0.0' (RSA) to the list of known hosts.

0.0.0.0: starting secondarynamenode, logging to /usr/hadoop/logs/hadoop-hadoop-secondarynamenode-Hadoop.Master.out

15/09/21 18:09:45 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicab

7、查看进程

[hadoop@hadoop00 ~]$ jps

注:输出类似如下内容

1763 NameNode

1881 DataNode

2146 Jps

2040 SecondaryNameNode

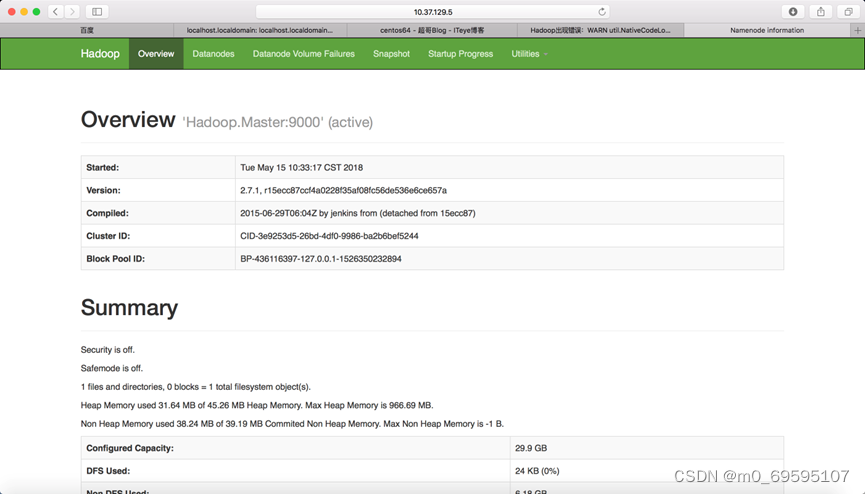

8、使用web浏览器查看Hadoop运行状态

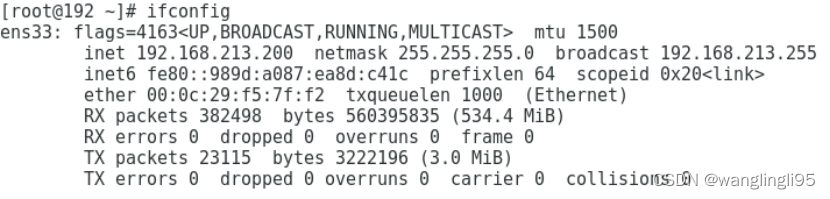

http://你的服务器ip地址:50070/

9、在HDFS上运行WordCount:

1)创建HDFS用户目录

[hadoop@hadoop00 ~]$ hdfs dfs -mkdir /user

[hadoop@hadoop00 ~]$ hdfs dfs -mkdir /user/hadoop #根据自己的情况调整

2)复制输入文件(要处理的文件)到HDFS上

[hadoop@hadoop00 ~]$ hdfs dfs -put /usr/hadoop/etc/hadoop/ /input

3)查看我们复制到HDFS上的文件

[hadoop@hadoop00 ~]$ hdfs dfs -ls /input

4)运行单词检索(grep)程序

[hadoop@hadoop00 ~]$ hadoop jar /usr/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar grep /input output 'dfs[a-z.]+'

(WordCount

[hadoop@hadoop00 ~]$ hadoop jar /usr/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount /input output

#说明:output文件夹如已经存在则需要删除或指定其他文件夹。)

5)查看运行结果

[hadoop@hadoop00 ~]$ hdfs dfs -cat output/*

10、配置YARN

1)修改mapred-site.xml

[hadoop@hadoop00 ~]$ cd /usr/hadoop/etc/hadoop/

[root@hadoop00 ~]# cp mapred-site.xml.template mapred-site.xml

[hadoop@hadoop00 ~]$ vi mapred-site.xml

(添加如下内容)

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

2)修改yarn-site.xml

[hadoop@hadoop00 ~]$ vi yarn-site.xml

(添加如下内容)

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

3)启动YARN

[hadoop@hadoop00 ~]$ start-yarn.sh

(停止yarn stop-yarn.sh)

4)查看当前java进程

[hadoop@hadoop00 ~]$ jps

5)(输出如下)

4918 ResourceManager

1663 NameNode

1950 SecondaryNameNode

5010 NodeManager

5218 Jps

1759 DataNode

6)运行你的mapReduce程序

配置好如上配置再运行mapReduce程序时即是yarn中运行

7)使用web查看Yarn运行状态

http://你的服务器ip地址:8088/

F、HDFS常用命令参考

1、创建HDFS文件夹

1)在根目录创建input文件夹

[hadoop@hadoop00 ~]$ hdfs dfs -mkdir -p /input

2)在用户目录创建input文件夹

说明:如果不指定“/目录”,则默认在用户目录创建文件夹

[hadoop@hadoop00 ~]$ hdfs dfs -mkdir -p input

(等同于 hdfs dfs -mkdir -p /user/hadoop/input)

2、查看HDFS文件夹

1)查看HDFS根文件夹

[hadoop@hadoop00 ~]$ hdfs dfs -ls /

2)查看HDFS用户目录文件夹

[hadoop@hadoop00 ~]$ hdfs dfs -ls

3)查看HDFS用户目录文件夹下input文件夹

[hadoop@hadoop00 ~]$ hdfs dfs -ls input

(等同与 hdfs dfs -ls /user/hadoop/input)

3、复制文件到HDFS

[hadoop@hadoop00 ~]$ hdfs dfs -put /usr/hadoop/etc/hadoop input

4、删除文件夹

[hadoop@hadoop00 ~]$ hdfs dfs -rm -r input

![【C++】C++中规范[ 类型转换标准 ] 的四种形式](https://img-blog.csdnimg.cn/direct/a07ba229478e4ed9942f45048b56249e.png)