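When learning Hadoop, you should not stay stuck in theory; theory has to be combined with practice. That means you need a Hadoop environment in which you can run all kinds of operations and verify what you have learned from books.

The minimum setup is a single Linux server running the simplest possible single-node deployment, so here we deploy a pseudo-distributed cluster.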

1. Hardware and software preparation

A pseudo-distributed cluster is a Hadoop cluster deployed on a single Linux server, that is, a single-node deployment.

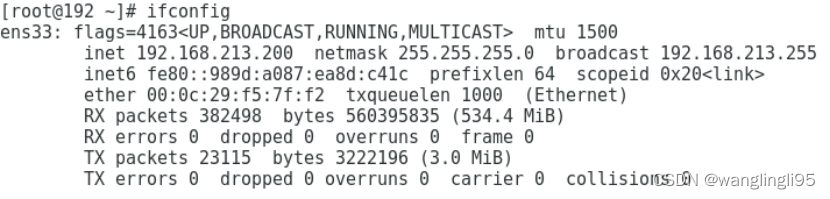

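This installation uses Ubuntu 16 with the IP address set to 192.168.1.11. The system resources are:

CPU: 4 cores; memory: 32 GB; disk: 1 TB for the / partition and 3.6 TB for the /data partition.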

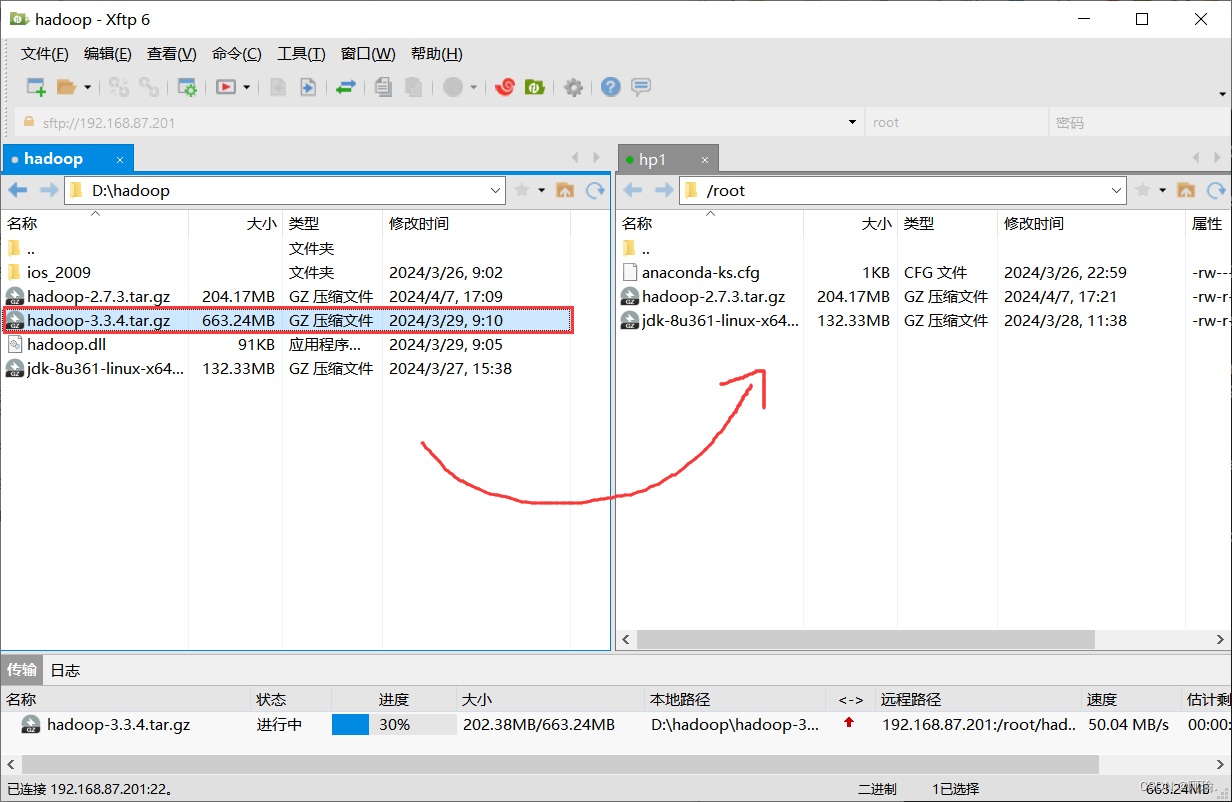

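Download the installation package from the Hadoop website. This guide uses hadoop-2.10.0.

Download hadoop-2.10.0.tar.gz from: https://archive.apache.org/dist/hadoop/common/hadoop-2.10.0/

Upload it to the target Linux server and create the installation directory:

mkdir /opt/bigdata

Put hadoop-2.10.0.tar.gz into /opt/bigdata and extract it there:

cd /opt/bigdata

tar zxvf hadoop-2.10.0.tar.gz

cd hadoop-2.10.0/

Create the required subdirectories: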

mkdir dfs

mkdir logs

mkdir tmp
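The directory preparation above can be wrapped in a small helper. This is only a sketch: the function name prepare_hadoop_dirs is hypothetical, and the demo runs against a temporary directory instead of /opt/bigdata/hadoop-2.10.0 so that it can be tried without touching a real installation.

```shell
# Hypothetical helper: create the dfs, logs and tmp subdirectories
# under a given Hadoop installation directory.
prepare_hadoop_dirs() {
  install_dir=$1
  for sub in dfs logs tmp; do
    # -p makes the call safe to repeat if the directory already exists
    mkdir -p "$install_dir/$sub" || return 1
  done
}

# Demo against a throwaway directory (stand-in for /opt/bigdata/hadoop-2.10.0)
demo_dir=$(mktemp -d)
prepare_hadoop_dirs "$demo_dir"
ls "$demo_dir"
```

On the real server the call would be prepare_hadoop_dirs /opt/bigdata/hadoop-2.10.0.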

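2. Configuration

All configuration files and environment scripts live in the etc/hadoop/ directory. Change into it:

cd /opt/bigdata/hadoop-2.10.0/etc/hadoop/

There are many configuration files here, but only four .xml configuration files and two .sh scripts need to be modified: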

core-site.xml

hdfs-site.xml

mapred-site.xml

yarn-site.xml

hadoop-env.sh

yarn-env.sh

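The default template files are essentially empty, so they have to be filled in according to the official documentation and the actual installation. To make deployment easier, the six files used in the test environment are provided below; when deploying to production, it is simpler to start from these files and adjust them.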

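2.1 core-site.xml

Two items mainly need to be changed:

Set the installation path to the actual directory, for example /opt/bigdata/hadoop-2.10.0. Note that hadoop.home is not a built-in Hadoop setting; it is defined here so that later values can refer to it as ${hadoop.home}.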

<property>

<name>hadoop.home</name>

<value>/opt/bigdata/hadoop-2.10.0</value>

</property>

Set the HDFS IP address and port:

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.1.11:9000</value>

</property>

The complete configuration file is as follows:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>hadoop.home</name>

<value>/opt/bigdata/hadoop-2.10.0</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.1.11:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>${hadoop.home}/tmp/hdfs</value>

</property>

</configuration>
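After editing, it helps to read a value back and confirm that the file says what you intended. The following is a minimal sketch, not a real XML parser: get_prop is a hypothetical helper that assumes one tag per line (as in the files shown here), skips XML comments, and does not expand ${...} references.

```shell
# Hypothetical helper: print the <value> of one property in a *-site.xml file.
# Line-oriented: it skips XML comments but does not expand ${...} references.
get_prop() {
  conf_file=$1
  prop_name=$2
  awk -v key="$prop_name" '
    /<!--/ { in_comment = 1 }
    in_comment { if ($0 ~ /-->/) in_comment = 0; next }
    index($0, "<name>" key "</name>") { found = 1 }
    found && /<value>/ {
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }
  ' "$conf_file"
}

# Demo on a cut-down copy of the core-site.xml shown above
sample=$(mktemp)
cat > "$sample" <<'EOF'
<configuration>
<property>
<name>fs.defaultFS</name>
<value>hdfs://192.168.1.11:9000</value>
</property>
<property>
<name>hadoop.tmp.dir</name>
<value>${hadoop.home}/tmp/hdfs</value>
</property>
</configuration>
EOF
get_prop "$sample" fs.defaultFS
get_prop "$sample" hadoop.tmp.dir
```

Once the installation is complete, bin/hdfs getconf -confKey fs.defaultFS reports the value as Hadoop itself resolves it, which is the more reliable check.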

2.2 hdfs-site.xml

Two items mainly need to be changed:

Set the installation path to the actual directory, for example /opt/bigdata/hadoop-2.10.0:

<property>

<name>hadoop.home</name>

<value>/opt/bigdata/hadoop-2.10.0</value>

</property>

Set the node IP address:

<property>

<name>namenode.ip</name>

<value>192.168.1.11</value>

</property>

The complete configuration file is as follows:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>hadoop.home</name>

<value>/opt/bigdata/hadoop-2.10.0</value>

</property>

<property>

<name>namenode.ip</name>

<value>192.168.1.11</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>${hadoop.home}/dfs/name</value>

</property>

<property>

<name>dfs.hosts</name>

<value></value>

</property>

<property>

<name>dfs.hosts.exclude</name>

<value></value>

</property>

<property>

<name>dfs.blocksize</name>

<value>134217728</value>

</property>

<property>

<name>dfs.namenode.handler.count</name>

<value>10</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>${hadoop.home}/dfs/data</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.http.address</name>

<value>${namenode.ip}:50070</value>

<description>Web UI address of the HDFS NameNode</description>

</property>

<property>

<name>dfs.secondary.http.address</name>

<value>${namenode.ip}:50090</value>

<description>Web UI address of the HDFS SecondaryNameNode (SNN)</description>

</property>

</configuration>
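The dfs.blocksize value is given in bytes, which is easy to misread. As a small worked example (the helper name hdfs_block_count is hypothetical), the arithmetic below converts it to MiB and shows how many blocks a file of a given size occupies; with dfs.replication set to 1, each block is stored exactly once.

```shell
# Number of HDFS blocks needed for a file: ceil(file_size / block_size)
hdfs_block_count() {
  file_bytes=$1
  block_bytes=$2
  echo $(( (file_bytes + block_bytes - 1) / block_bytes ))
}

block_size=134217728                      # dfs.blocksize from the file above
echo "block size in MiB: $(( block_size / 1024 / 1024 ))"
echo "blocks for a 1 GiB file: $(hdfs_block_count 1073741824 "$block_size")"
echo "blocks for 1 GiB + 1 byte: $(hdfs_block_count 1073741825 "$block_size")"
```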

2.3 mapred-site.xml

The main change is the IP address:

<property>

<name>master.ip</name>

<value>192.168.1.11</value>

</property>

The complete configuration file is as follows:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>master.ip</name>

<value>192.168.1.11</value>

</property>

<!-- Framework used to run MapReduce -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- RPC address of the JobHistory server -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>${master.ip}:10020</value>

</property>

<!-- Web UI address of the JobHistory server -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>${master.ip}:19888</value>

</property>

</configuration>

2.4 yarn-site.xml

Set the node IP address:

<property>

<name>yarn.resourcemanager.hostname</name>

<value>192.168.1.11</value>

</property>

Set the installation path to the actual directory, for example /opt/bigdata/hadoop-2.10.0:

<property>

<name>hadoop.home</name>

<value>/opt/bigdata/hadoop-2.10.0</value>

</property>

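Change the port numbers of the following five items only if they are already taken by another service; otherwise leave them as they are: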

<property>

<name>yarn.resourcemanager.address</name>

<value>${yarn.resourcemanager.hostname}:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>${yarn.resourcemanager.hostname}:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>${yarn.resourcemanager.hostname}:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>${yarn.resourcemanager.hostname}:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>${yarn.resourcemanager.hostname}:8088</value>

</property>
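Before starting YARN, you can check whether any of these five ports already has a listener. The sketch below assumes the ss tool from iproute2 is available on the server; busy_ports is a hypothetical helper that reads the output of ss -ltn on standard input, and the sample listing is illustrative rather than captured from a real host.

```shell
# Hypothetical helper: read `ss -ltn` output on stdin and print every
# port from the argument list that already has a listener.
busy_ports() {
  listing=$(cat)
  for port in "$@"; do
    # Column 4 of `ss -ltn` is "Local Address:Port"; match the port suffix
    if printf '%s\n' "$listing" |
       awk -v p="$port" '$4 ~ (":" p "$") { hit = 1 } END { exit !hit }'; then
      echo "$port"
    fi
  done
}

# Illustrative sample of `ss -ltn` output (not captured from a real host)
sample_listing='State  Recv-Q Send-Q Local Address:Port Peer Address:Port
LISTEN 0      128    0.0.0.0:22         0.0.0.0:*
LISTEN 0      128    192.168.1.11:8088  0.0.0.0:*'

printf '%s\n' "$sample_listing" | busy_ports 8030 8031 8032 8033 8088
```

On the server itself the call would be ss -ltn | busy_ports 8030 8031 8032 8033 8088; any port it prints needs a different value in yarn-site.xml.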

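Resource scheduling policy: 1) CapacityScheduler allocates resources by queue; 2) FairScheduler shares resources evenly.

This is an important setting, so make sure you understand how each scheduler works.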

<property>

<description>The class to use as the resource scheduler.</description>

<name>yarn.resourcemanager.scheduler.class</name>

<!--

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>

-->

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

</property>

Minimum memory, in MB, that a single container can request from the ResourceManager:

<property>

<description>The minimum allocation for every container request at the RM

in MBs. Memory requests lower than this will be set to the value of this

property. Additionally, a node manager that is configured to have less memory

than this value will be shut down by the resource manager.</description>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

</property>

Maximum memory, in MB, that a single container can request from the ResourceManager:

<property>

<description>The maximum allocation for every container request at the RM

in MBs. Memory requests higher than this will throw an

InvalidResourceRequestException.</description>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>8192</value>

</property>

Minimum number of virtual CPU cores that a single container can request from the ResourceManager:

<property>

<description>The minimum allocation for every container request at the RM

in terms of virtual CPU cores. Requests lower than this will be set to the

value of this property. Additionally, a node manager that is configured to

have fewer virtual cores than this value will be shut down by the resource

manager.</description>

<name>yarn.scheduler.minimum-allocation-vcores</name>

<value>1</value>

</property>

Maximum number of virtual CPU cores that a single container can request from the ResourceManager:

<property>

<description>The maximum allocation for every container request at the RM

in terms of virtual CPU cores. Requests higher than this will throw an

InvalidResourceRequestException.</description>

<name>yarn.scheduler.maximum-allocation-vcores</name>

<value>4</value>

</property>

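Maximum memory available to containers on a NodeManager node. Set it according to the physical memory actually installed in the machine.

Upper bound on the number of containers per NodeManager node:

max(Container) = yarn.nodemanager.resource.memory-mb / yarn.scheduler.minimum-allocation-mb

Dividing by yarn.scheduler.maximum-allocation-mb instead gives the number of maximum-size containers that fit on the node.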

<property>

<description>Amount of physical memory, in MB, that can be allocated

for containers. If set to -1 and

yarn.nodemanager.resource.detect-hardware-capabilities is true, it is

automatically calculated(in case of Windows and Linux).

In other cases, the default is 8192MB.

</description>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>24576</value>

</property>
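As a worked example with the values used in this file, the arithmetic below estimates how many containers the node can hold by memory alone. It is a rough sketch: it ignores the vcore limits and any scheduler-specific rounding of requests.

```shell
node_mem_mb=24576     # yarn.nodemanager.resource.memory-mb
min_alloc_mb=1024     # yarn.scheduler.minimum-allocation-mb
max_alloc_mb=8192     # yarn.scheduler.maximum-allocation-mb

# Most containers the node can hold: every container at the minimum size
max_containers=$(( node_mem_mb / min_alloc_mb ))
# How many containers fit if every one asks for the maximum size
largest_containers=$(( node_mem_mb / max_alloc_mb ))

echo "upper bound on containers (by memory): $max_containers"
echo "containers of maximum size that fit:   $largest_containers"
```

With these settings the node holds at most 24 minimum-size containers, or 3 containers of the maximum size.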

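Number of virtual CPU cores that YARN may use on this node. The default is 8; the recommended setting is the number of physical cores.

If the node has fewer than 8 cores, lower this value, because with this configuration YARN does not detect the physical core count on its own. On a powerful machine it can be set to twice the number of physical cores. The sample value below, 30, is well above that guidance for the 4-core test machine, so adjust it to your own hardware.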

<property>

<description>Number of vcores that can be allocated

for containers. This is used by the RM scheduler when allocating

resources for containers. This is not used to limit the number of

CPUs used by YARN containers. If it is set to -1 and

yarn.nodemanager.resource.detect-hardware-capabilities is true, it is

automatically determined from the hardware in case of Windows and Linux.

In other cases, number of vcores is 8 by default.</description>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>30</value>

</property>

The complete configuration file is as follows:

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>192.168.1.11</value>

</property>

<property>

<name>hadoop.home</name>

<value>/opt/bigdata/hadoop-2.10.0</value>

</property>

<property>

<name>yarn.acl.enable</name>

<value>false</value>

</property>

<property>

<name>yarn.admin.acl</name>

<value>*</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>false</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>${yarn.resourcemanager.hostname}:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>${yarn.resourcemanager.hostname}:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>${yarn.resourcemanager.hostname}:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>${yarn.resourcemanager.hostname}:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>${yarn.resourcemanager.hostname}:8088</value>

</property>

<property>

<description>A comma separated list of services where service name should only

contain a-zA-Z0-9_ and can not start with numbers</description>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<description>The class to use as the resource scheduler.</description>

<name>yarn.resourcemanager.scheduler.class</name>

<!--

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>

-->

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

</property>

<property>

<description>The minimum allocation for every container request at the RM

in MBs. Memory requests lower than this will be set to the value of this

property. Additionally, a node manager that is configured to have less memory

than this value will be shut down by the resource manager.</description>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

</property>

<property>

<description>The maximum allocation for every container request at the RM

in MBs. Memory requests higher than this will throw an

InvalidResourceRequestException.</description>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>8192</value>

</property>

<property>

<description>The minimum allocation for every container request at the RM

in terms of virtual CPU cores. Requests lower than this will be set to the

value of this property. Additionally, a node manager that is configured to

have fewer virtual cores than this value will be shut down by the resource

manager.</description>

<name>yarn.scheduler.minimum-allocation-vcores</name>

<value>1</value>

</property>

<property>

<description>The maximum allocation for every container request at the RM

in terms of virtual CPU cores. Requests higher than this will throw an

InvalidResourceRequestException.</description>

<name>yarn.scheduler.maximum-allocation-vcores</name>

<value>4</value>

</property>

<property>

<description>Amount of physical memory, in MB, that can be allocated

for containers. If set to -1 and

yarn.nodemanager.resource.detect-hardware-capabilities is true, it is

automatically calculated(in case of Windows and Linux).

In other cases, the default is 8192MB.

</description>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>24576</value>

</property>

<property>

<description>Number of vcores that can be allocated

for containers. This is used by the RM scheduler when allocating

resources for containers. This is not used to limit the number of

CPUs used by YARN containers. If it is set to -1 and

yarn.nodemanager.resource.detect-hardware-capabilities is true, it is

automatically determined from the hardware in case of Windows and Linux.

In other cases, number of vcores is 8 by default.</description>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>30</value>

</property>

<property>

<description>Ratio between virtual memory to physical memory when

setting memory limits for containers. Container allocations are

expressed in terms of physical memory, and virtual memory usage

is allowed to exceed this allocation by this ratio.

</description>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>${hadoop.home}/tmp</value>

</property>

<property>

<name>yarn.log.dir</name>

<value>${hadoop.home}/logs/yarn</value>

</property>

<property>

<description>List of directories to store localized files in. An

application's localized file directory will be found in:

${yarn.nodemanager.local-dirs}/usercache/${user}/appcache/application_${appid}.

Individual containers' work directories, called container_${contid}, will

be subdirectories of this.

</description>

<name>yarn.nodemanager.local-dirs</name>

<value>${hadoop.tmp.dir}/yarn/nm-local-dir</value>

</property>

<property>

<description>

Where to store container logs. An application's localized log directory

will be found in ${yarn.nodemanager.log-dirs}/application_${appid}.

Individual containers' log directories will be below this, in directories

named container_{$contid}. Each container directory will contain the files

stderr, stdin, and syslog generated by that container.

</description>

<name>yarn.nodemanager.log-dirs</name>

<value>${yarn.log.dir}/userlogs</value>

</property>

<property>

<description>Time in seconds to retain user logs. Only applicable if

log aggregation is disabled

</description>

<name>yarn.nodemanager.log.retain-seconds</name>

<value>10800</value>

</property>

<property>

<description>Whether physical memory limits will be enforced for

containers.</description>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<property>

<description>Whether virtual memory limits will be enforced for

containers.</description>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

</configuration>

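2.5 hadoop-env.sh

Adjust the paths in the following settings to match your environment. JAVA_HOME must point to an existing JDK; the sample assumes jdk1.8.0_144 unpacked under /opt/bigdata.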

HOME=/opt/bigdata

export JAVA_HOME=$HOME/jdk1.8.0_144

export HADOOP_PREFIX=$HOME/hadoop-2.10.0

export HADOOP_HOME=$HADOOP_PREFIX

export HADOOP_YARN_HOME=$HADOOP_PREFIX

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HADOOP_LOG_DIR=${HADOOP_HOME}/logs/hdfs
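A wrong JAVA_HOME is a common reason for the daemons failing to start, so it is worth checking the path before going further. The helper below is a hypothetical sketch (check_java_home is not part of Hadoop), and the demo builds a fake JDK layout in a temporary directory so it runs without a real JDK.

```shell
# Hypothetical helper: succeed only if <dir>/bin/java exists and is executable.
check_java_home() {
  [ -x "$1/bin/java" ]
}

# Demo with a fake JDK layout in a temporary directory
fake_jdk=$(mktemp -d)
mkdir -p "$fake_jdk/bin"
printf '#!/bin/sh\n' > "$fake_jdk/bin/java"
chmod +x "$fake_jdk/bin/java"

if check_java_home "$fake_jdk"; then echo "JAVA_HOME looks usable"; fi
if ! check_java_home "$fake_jdk/missing"; then echo "no java under missing/"; fi
```

On the real server the check would be check_java_home /opt/bigdata/jdk1.8.0_144.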

The complete script is as follows:

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# Set Hadoop-specific environment variables here.

# The only required environment variable is JAVA_HOME. All others are

# optional. When running a distributed configuration it is best to

# set JAVA_HOME in this file, so that it is correctly defined on

# remote nodes.

# The java implementation to use.

#export JAVA_HOME=${JAVA_HOME}

HOME=/opt/bigdata

export JAVA_HOME=$HOME/jdk1.8.0_144

export HADOOP_PREFIX=$HOME/hadoop-2.10.0

export HADOOP_HOME=$HADOOP_PREFIX

export HADOOP_YARN_HOME=$HADOOP_PREFIX

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

# The jsvc implementation to use. Jsvc is required to run secure datanodes

# that bind to privileged ports to provide authentication of data transfer

# protocol. Jsvc is not required if SASL is configured for authentication of

# data transfer protocol using non-privileged ports.

#export JSVC_HOME=${JSVC_HOME}

HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-"/etc/hadoop"}

# Extra Java CLASSPATH elements. Automatically insert capacity-scheduler.

for f in $HADOOP_HOME/contrib/capacity-scheduler/*.jar; do

if [ "$HADOOP_CLASSPATH" ]; then

export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$f

else

export HADOOP_CLASSPATH=$f

fi

done

# The maximum amount of heap to use, in MB. Default is 1000.

#export HADOOP_HEAPSIZE=

#export HADOOP_NAMENODE_INIT_HEAPSIZE=""

# Enable extra debugging of Hadoop's JAAS binding, used to set up

# Kerberos security.

# export HADOOP_JAAS_DEBUG=true

# Extra Java runtime options. Empty by default.

# For Kerberos debugging, an extended option set logs more invormation

# export HADOOP_OPTS="-Djava.net.preferIPv4Stack=true -Dsun.security.krb5.debug=true -Dsun.security.spnego.debug"

export HADOOP_OPTS="$HADOOP_OPTS -Djava.net.preferIPv4Stack=true"

# Command specific options appended to HADOOP_OPTS when specified

export HADOOP_NAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_NAMENODE_OPTS"

export HADOOP_DATANODE_OPTS="-Dhadoop.security.logger=ERROR,RFAS $HADOOP_DATANODE_OPTS"

export HADOOP_SECONDARYNAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_SECONDARYNAMENODE_OPTS"

export HADOOP_NFS3_OPTS="$HADOOP_NFS3_OPTS"

export HADOOP_PORTMAP_OPTS="-Xmx512m $HADOOP_PORTMAP_OPTS"

# The following applies to multiple commands (fs, dfs, fsck, distcp etc)

export HADOOP_CLIENT_OPTS="$HADOOP_CLIENT_OPTS"

# set heap args when HADOOP_HEAPSIZE is empty

if [ "$HADOOP_HEAPSIZE" = "" ]; then

export HADOOP_CLIENT_OPTS="-Xmx512m $HADOOP_CLIENT_OPTS"

fi

#HADOOP_JAVA_PLATFORM_OPTS="-XX:-UsePerfData $HADOOP_JAVA_PLATFORM_OPTS"

# On secure datanodes, user to run the datanode as after dropping privileges.

# This **MUST** be uncommented to enable secure HDFS if using privileged ports

# to provide authentication of data transfer protocol. This **MUST NOT** be

# defined if SASL is configured for authentication of data transfer protocol

# using non-privileged ports.

export HADOOP_SECURE_DN_USER=${HADOOP_SECURE_DN_USER}

# Where log files are stored. $HADOOP_HOME/logs by default.

#export HADOOP_LOG_DIR=${HADOOP_LOG_DIR}/$USER

export HADOOP_LOG_DIR=${HADOOP_HOME}/logs/hdfs

# Where log files are stored in the secure data environment.

#export HADOOP_SECURE_DN_LOG_DIR=${HADOOP_LOG_DIR}/${HADOOP_HDFS_USER}

###

# HDFS Mover specific parameters

###

# Specify the JVM options to be used when starting the HDFS Mover.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# export HADOOP_MOVER_OPTS=""

###

# Router-based HDFS Federation specific parameters

# Specify the JVM options to be used when starting the RBF Routers.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# export HADOOP_DFSROUTER_OPTS=""

###

###

# Advanced Users Only!

###

# The directory where pid files are stored. /tmp by default.

# NOTE: this should be set to a directory that can only be written to by

# the user that will run the hadoop daemons. Otherwise there is the

# potential for a symlink attack.

export HADOOP_PID_DIR=${HADOOP_PID_DIR}

export HADOOP_SECURE_DN_PID_DIR=${HADOOP_PID_DIR}

# A string representing this instance of hadoop. $USER by default.

export HADOOP_IDENT_STRING=$USER

2.6 yarn-env.sh

Adjust the paths in the following settings to match your environment:

HOME=/opt/bigdata

export JAVA_HOME=$HOME/jdk1.8.0_144

export HADOOP_PREFIX=$HOME/hadoop-2.10.0

export HADOOP_HOME=$HADOOP_PREFIX

export HADOOP_YARN_HOME=$HADOOP_PREFIX

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

HADOOP_YARN_USER=test

YARN_CONF_DIR=$HADOOP_YARN_HOME/etc/hadoop

YARN_LOG_DIR=$HADOOP_YARN_HOME/logs/yarn

The complete script is as follows:

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

HOME=/opt/bigdata

export JAVA_HOME=$HOME/jdk1.8.0_144

export HADOOP_PREFIX=$HOME/hadoop-2.10.0

export HADOOP_HOME=$HADOOP_PREFIX

export HADOOP_YARN_HOME=$HADOOP_PREFIX

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

# User for YARN daemons

HADOOP_YARN_USER=test

export HADOOP_YARN_USER=${HADOOP_YARN_USER:-yarn}

# resolve links - $0 may be a softlink

YARN_CONF_DIR=$HADOOP_YARN_HOME/etc/hadoop

export YARN_CONF_DIR="${YARN_CONF_DIR:-$HADOOP_YARN_HOME/conf}"

# some Java parameters

# export JAVA_HOME=/home/y/libexec/jdk1.6.0/

if [ "$JAVA_HOME" != "" ]; then

#echo "run java in $JAVA_HOME"

JAVA_HOME=$JAVA_HOME

fi

if [ "$JAVA_HOME" = "" ]; then

echo "Error: JAVA_HOME is not set."

exit 1

fi

JAVA=$JAVA_HOME/bin/java

JAVA_HEAP_MAX=-Xmx1000m

# For setting YARN specific HEAP sizes please use this

# Parameter and set appropriately

# YARN_HEAPSIZE=1000

# check envvars which might override default args

if [ "$YARN_HEAPSIZE" != "" ]; then

JAVA_HEAP_MAX="-Xmx""$YARN_HEAPSIZE""m"

fi

# Resource Manager specific parameters

# Specify the max Heapsize for the ResourceManager using a numerical value

# in the scale of MB. For example, to specify an jvm option of -Xmx1000m, set

# the value to 1000.

# This value will be overridden by an Xmx setting specified in either YARN_OPTS

# and/or YARN_RESOURCEMANAGER_OPTS.

# If not specified, the default value will be picked from either YARN_HEAPMAX

# or JAVA_HEAP_MAX with YARN_HEAPMAX as the preferred option of the two.

#export YARN_RESOURCEMANAGER_HEAPSIZE=1000

# Specify the max Heapsize for the timeline server using a numerical value

# in the scale of MB. For example, to specify an jvm option of -Xmx1000m, set

# the value to 1000.

# This value will be overridden by an Xmx setting specified in either YARN_OPTS

# and/or YARN_TIMELINESERVER_OPTS.

# If not specified, the default value will be picked from either YARN_HEAPMAX

# or JAVA_HEAP_MAX with YARN_HEAPMAX as the preferred option of the two.

#export YARN_TIMELINESERVER_HEAPSIZE=1000

# Specify the JVM options to be used when starting the ResourceManager.

# These options will be appended to the options specified as YARN_OPTS

# and therefore may override any similar flags set in YARN_OPTS

#export YARN_RESOURCEMANAGER_OPTS=

# Node Manager specific parameters

# Specify the max Heapsize for the NodeManager using a numerical value

# in the scale of MB. For example, to specify an jvm option of -Xmx1000m, set

# the value to 1000.

# This value will be overridden by an Xmx setting specified in either YARN_OPTS

# and/or YARN_NODEMANAGER_OPTS.

# If not specified, the default value will be picked from either YARN_HEAPMAX

# or JAVA_HEAP_MAX with YARN_HEAPMAX as the preferred option of the two.

#export YARN_NODEMANAGER_HEAPSIZE=1000

# Specify the JVM options to be used when starting the NodeManager.

# These options will be appended to the options specified as YARN_OPTS

# and therefore may override any similar flags set in YARN_OPTS

#export YARN_NODEMANAGER_OPTS=

# so that filenames w/ spaces are handled correctly in loops below

IFS=

# default log directory & file

if [ "$YARN_LOG_DIR" = "" ]; then

YARN_LOG_DIR="$HADOOP_YARN_HOME/logs/yarn"

fi

if [ "$YARN_LOGFILE" = "" ]; then

YARN_LOGFILE='yarn.log'

fi

# default policy file for service-level authorization

if [ "$YARN_POLICYFILE" = "" ]; then

YARN_POLICYFILE="hadoop-policy.xml"

fi

# restore ordinary behaviour

unset IFS

YARN_OPTS="$YARN_OPTS -Dhadoop.log.dir=$YARN_LOG_DIR"

YARN_OPTS="$YARN_OPTS -Dyarn.log.dir=$YARN_LOG_DIR"

YARN_OPTS="$YARN_OPTS -Dhadoop.log.file=$YARN_LOGFILE"

YARN_OPTS="$YARN_OPTS -Dyarn.log.file=$YARN_LOGFILE"

YARN_OPTS="$YARN_OPTS -Dyarn.home.dir=$YARN_COMMON_HOME"

YARN_OPTS="$YARN_OPTS -Dyarn.id.str=$YARN_IDENT_STRING"

YARN_OPTS="$YARN_OPTS -Dhadoop.root.logger=${YARN_ROOT_LOGGER:-INFO,console}"

YARN_OPTS="$YARN_OPTS -Dyarn.root.logger=${YARN_ROOT_LOGGER:-INFO,console}"

if [ "x$JAVA_LIBRARY_PATH" != "x" ]; then

YARN_OPTS="$YARN_OPTS -Djava.library.path=$JAVA_LIBRARY_PATH"

fi

YARN_OPTS="$YARN_OPTS -Dyarn.policy.file=$YARN_POLICYFILE"

###

# Router specific parameters

###

# Specify the JVM options to be used when starting the Router.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# See ResourceManager for some examples

#

#export YARN_ROUTER_OPTS=

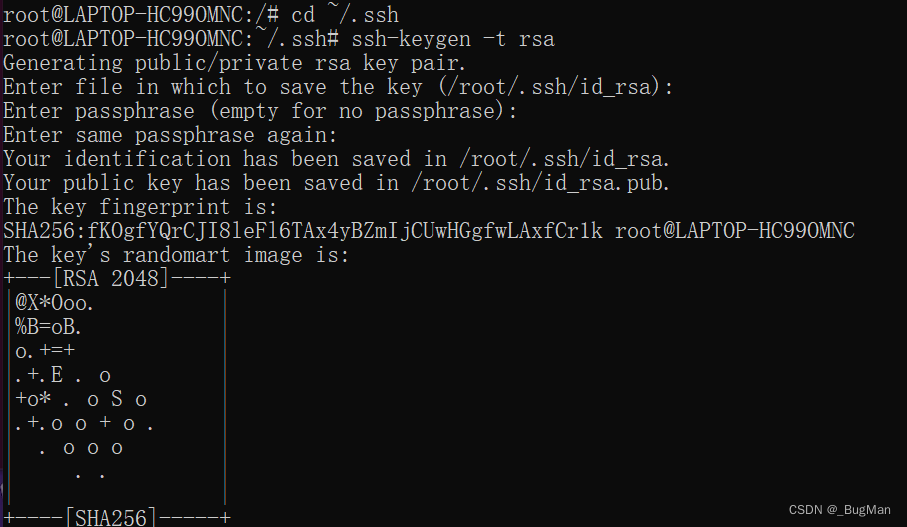

3. Set up passwordless SSH login

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys
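sshd typically ignores an authorized_keys file whose permissions are too open, which is why the chmod step matters. The sketch below checks for mode 0600; owner_only_rw is a hypothetical helper, and the demo uses a temporary file rather than the real ~/.ssh/authorized_keys.

```shell
# Hypothetical helper: succeed if the file is readable and writable
# by its owner only (mode 0600).
owner_only_rw() {
  perms=$(ls -ld "$1" | cut -c1-10)
  [ "$perms" = "-rw-------" ]
}

# Demo on a temporary file standing in for ~/.ssh/authorized_keys
demo_keys=$(mktemp)
chmod 0600 "$demo_keys"
owner_only_rw "$demo_keys" && echo "mode 0600: ok"
chmod 0644 "$demo_keys"
owner_only_rw "$demo_keys" || echo "mode 0644: too open"
```

To confirm that key-based login really works, ssh -o BatchMode=yes localhost true should return without asking for a password, once the host key of localhost has been accepted.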

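4. Format HDFS

Run this and the following commands from the installation directory, /opt/bigdata/hadoop-2.10.0: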

bin/hdfs namenode -format
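Formatting is a one-time step: running it again on a NameNode directory that is already in use wipes the file system metadata. A formatted NameNode directory contains a current/VERSION file, so a guard along the following lines can be used first. The helper name namenode_formatted is hypothetical, and the demo uses temporary directories in place of ${hadoop.home}/dfs/name.

```shell
# Hypothetical helper: succeed if the NameNode directory already holds
# formatted metadata (a current/VERSION file).
namenode_formatted() {
  [ -f "$1/current/VERSION" ]
}

# Demo with temporary directories standing in for ${hadoop.home}/dfs/name
empty_dir=$(mktemp -d)
formatted_dir=$(mktemp -d)
mkdir -p "$formatted_dir/current"
: > "$formatted_dir/current/VERSION"

namenode_formatted "$empty_dir" || echo "not formatted yet: safe to format"
namenode_formatted "$formatted_dir" && echo "already formatted: do not format again"
```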

5. Start/stop the Hadoop NameNode daemon and DataNode daemon

To start the services, run: sbin/start-dfs.sh

After a successful start, the management UI is available at: http://192.168.1.11:50070/

To stop the services, run: sbin/stop-dfs.sh

6. Start/stop the ResourceManager daemon and NodeManager daemon

To start the services, run: sbin/start-yarn.sh

After a successful start, the management UI is available at: http://192.168.1.11:8088/

To stop the services, run: sbin/stop-yarn.sh
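Besides opening the web UIs, the JDK's jps tool lists the running Java processes, which gives a quick way to confirm that all five daemons are up. The sketch below is a hypothetical helper (missing_daemons) that reads jps output on standard input; the sample output is made up for illustration and deliberately omits NodeManager.

```shell
# Hypothetical helper: read `jps` output on stdin and print each expected
# daemon name (given as arguments) that is not listed.
missing_daemons() {
  listing=$(cat)
  for name in "$@"; do
    # Compare the second column exactly, so NameNode does not match SecondaryNameNode
    if ! printf '%s\n' "$listing" |
         awk -v n="$name" '$2 == n { hit = 1 } END { exit !hit }'; then
      echo "$name"
    fi
  done
}

# Illustrative jps output (PIDs are made up); NodeManager is absent on purpose
sample_jps='2101 NameNode
2250 DataNode
2467 SecondaryNameNode
2689 ResourceManager
3120 Jps'

printf '%s\n' "$sample_jps" |
  missing_daemons NameNode DataNode SecondaryNameNode ResourceManager NodeManager
```

On the server the call would be jps | missing_daemons NameNode DataNode SecondaryNameNode ResourceManager NodeManager; no output means every daemon is running, and any name printed points to the log directory to inspect (logs/hdfs or logs/yarn in this setup).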