上课笔记

如果一个网络全都是由线性层串联起来(torch.nn.Linear(xx, yy)),就叫他全连接的网络(左边节点到右边节点任意两个都存在权重)

先看一下吴恩达或者李宏毅老师的视频了解一下卷积

通过卷积层保留图像的空间特征(结构)

张量的维度是(b, c, w, h) batch, channel, width, height

经过5 * 5的卷积层变成一个4 * 24 * 24的特征图,经过2*2的下采样(减少元素数量,降低运算需求)变成4 * 12 * 12的特征图,再做5 * 5 的卷积, 2 * 2的下采样,变成8 * 4 * 4的特征图(前面是特征提取层),展开成1维向量,最后线性变换映射成10维的输出,用softmax计算分布(分类器)

必须知道输入输出的尺寸

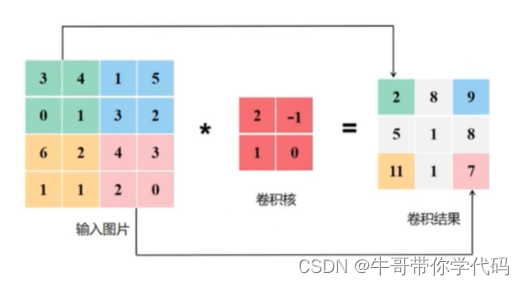

卷积的例子:

通道内的每个位置和卷积核的同一位置进行内积,计算后的尺寸大小为原来的长宽-卷积核长宽+1,最后把所有通道计算的结果进行加法得到输出。

卷积核通道数 = 卷积输入层的通道数;卷积输出层通道数 = 卷积核(组)的个数。

m个卷积核进行运算后将结果拼接,输出尺寸为m * w * h

w权重的维度为: m * n * w * h

默认情况下缩小的圈数为卷积核大小/2向下取整,比如(1,28,28)进行5 * 5卷积后是(1, 24, 24) 5 / 2 = 2 缩小两圈等于宽高-4

padding:在输入图像外面周围进行填充0,如果对于3*3的卷积核想让输入输出大小相同设置padding=1,如果对于5 * 5的卷积核想让输入输出大小相同设置padding=2

stride步长,每次滑动的长度

Stride的作用:是成倍缩小尺寸,而这个参数的值就是缩小的具体倍数,比如步幅为2,输出就是输入的1/2;步幅为3,输出就是输入的1/3

下采样:常用的max pooling,最大池化层,运算后通道数量不变,如果是2 * 2的maxpooling,输出尺寸变原来的一半:torch.nn.MaxPool2d(kernel

_size=2)

class Net(torch.nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)

self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)

self.pooling = torch.nn.MaxPool2d(2)

self.fc = torch.nn.Linear(320, 10)

def forward(self, x):

# flatten data from (n,1,28,28) to (n, 784)

batch_size = x.size(0)

x = F.relu(self.pooling(self.conv1(x)))

x = F.relu(self.pooling(self.conv2(x)))

x = x.view(batch_size, -1) # view()函数用来转换size大小。x = x.view(batchsize, -1)中batchsize指转换后有几行,而-1指根据原tensor数据和batchsize自动分配列数。 -1 此处自动算出的是x平摊的元素值/batch_size=320

x = self.fc(x)#用交叉熵损失所以最后一层不用激活

return x

model = Net()

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model.to(device)

代码实现

1、torch.nn.Conv2d(1,10,kernel_size=3,stride=2,bias=False)

1是指输入的Channel,灰色图像是1维的;10是指输出的Channel,也可以说第一个卷积层需要10个卷积核;kernel_size=3,卷积核大小是3x3;stride=2进行卷积运算时的步长,默认为1;bias=False卷积运算是否需要偏置bias,默认为False。padding = 0,卷积操作是否补0。

2、self.fc = torch.nn.Linear(320, 10),这个320获取的方式,可以通过x = x.view(batch_size, -1) # print(x.shape)可得到(64,320),64指的是batch,320就是指要进行全连接操作时,输入的特征维度。

import torch

from torchvision import transforms

from torchvision import datasets

from torch.utils.data import DataLoader

import torch.nn.functional as F

import torch.optim as optim

# prepare dataset

batch_size = 64

transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])

train_dataset = datasets.MNIST(root='../dataset/mnist/', train=True, download=True, transform=transform)

train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)

test_dataset = datasets.MNIST(root='../dataset/mnist/', train=False, download=True, transform=transform)

test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)

# design model using class

class Net(torch.nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)

self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)

self.pooling = torch.nn.MaxPool2d(2)

self.fc = torch.nn.Linear(320, 10)

def forward(self, x):

# flatten data from (n,1,28,28) to (n, 784)

batch_size = x.size(0)

x = F.relu(self.pooling(self.conv1(x)))

x = F.relu(self.pooling(self.conv2(x)))

x = x.view(batch_size, -1) # -1 此处自动算出的是320

x = self.fc(x)

return x

model = Net()

# construct loss and optimizer

criterion = torch.nn.CrossEntropyLoss()

optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

# training cycle forward, backward, update

def train(epoch):

running_loss = 0.0

for batch_idx, data in enumerate(train_loader, 0):

inputs, target = data

inputs, target = inputs.to(device), target.to(device)

optimizer.zero_grad()

outputs = model(inputs)

loss = criterion(outputs, target)

loss.backward()

optimizer.step()

running_loss += loss.item()

if batch_idx % 300 == 299:

print('[%d, %5d] loss: %.3f' % (epoch+1, batch_idx+1, running_loss/300))

running_loss = 0.0

def test():

correct = 0

total = 0

with torch.no_grad():

for data in test_loader:

images, labels = data

outputs = model(images)

_, predicted = torch.max(outputs.data, dim=1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

print('accuracy on test set: %d %% ' % (100*correct/total))

if __name__ == '__main__':

for epoch in range(10):

train(epoch)

test()

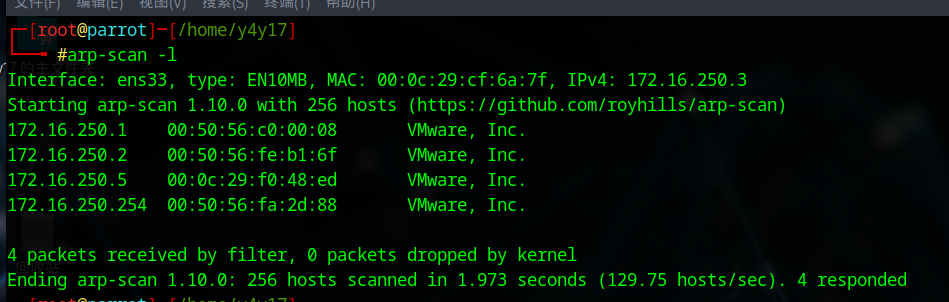

显卡计算

1:model后面迁移至gpu

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

model.to(device)

2:训练和测试的输入输出数据也迁移至gpu

inputs, target = inputs.to(device), target.to(device)

作业实现

卷积层用三个,relu三个,池化三个,线性层三个,对比性能

input(batch, 1, 28, 28) -> conv2d -> relu -> pooling -> conv2d -> relu -> pooling -> conv2d -> relu -> pooling -> linear layer -> linear -> output(batch, 10)

(batch, 1, 28, 28) ->卷积(1, 10, 5)->(10, 24, 24) ->下采样2->(10, 12, 12)->卷积(10, 20, 5)->(20, 8, 8)->下采样2->(20, 4, 4)->卷积(20, 10, 5, padding=2) ->(10, 4 ,4)->下采样(10,2,2)摊平view(batch_size, -1)->l1(40, 32)->l2(32, 16)->l3(16, 10)

(10,12,12)-》(20,6, 6)-》(10, 3, 3)

import torch

from torchvision import transforms

from torchvision import datasets

from torch.utils.data import DataLoader

import matplotlib.pyplot as plt

import torch.nn.functional as F

# 1.数据集准备

batch_size = 64

transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])

train_dataset = datasets.MNIST(root='../dataset/minist/', train=True, download=True, transform=transform)

train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size, num_workers=2)

test_dataset = datasets.MNIST(root='../dataset/minist/', train=False, download=True, transform=transform)

test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size, num_workers=2)

# 2.模型构建

class CNNNet2(torch.nn.Module):

def __init__(self):

super(CNNNet2, self).__init__()

self.cov1 = torch.nn.Conv2d(1, 10,5)

self.cov2 = torch.nn.Conv2d(10, 20,5)

self.cov3 = torch.nn.Conv2d(20, 10, 3, padding=1)

self.pool = torch.nn.MaxPool2d(2)

self.l1 = torch.nn.Linear(40, 32)

self.l2 = torch.nn.Linear(32, 16)

self.l3 = torch.nn.Linear(16, 10)

def forward(self, x):

batch_size = x.size(0) # B,C,W,H(之前的transform已经将图像转为tensor张量了,取第一个维度就是batch)

x = self.pool(torch.relu(self.cov1(x)))

x = self.pool(torch.relu(self.cov2(x)))

x = self.pool(torch.relu(self.cov3(x)))

x = x.view(batch_size, -1) # batch是不变的,把CWH拉长

x = torch.relu(self.l1(x))

x = torch.relu(self.l2(x))

return self.l3(x)

class Net(torch.nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)

self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)

self.pooling = torch.nn.MaxPool2d(2)

self.fc = torch.nn.Linear(320, 10)

def forward(self, x):

# flatten data from (n,1,28,28) to (n, 784)

batch_size = x.size(0)

x = F.relu(self.pooling(self.conv1(x)))

x = F.relu(self.pooling(self.conv2(x)))

x = x.view(batch_size, -1) # -1 此处自动算出的是320

x = self.fc(x)

return x

model1 = Net()

device = torch.device('cuda'if torch.cuda.is_available() else 'cpu')

model1.to(device)

model2 = CNNNet2()

model2.to(device)

# 3.损失值和优化器

criterion = torch.nn.CrossEntropyLoss()

optimizer1 = torch.optim.SGD(model1.parameters(), lr=0.01, momentum=0.5)

optimizer2 = torch.optim.SGD(model2.parameters(), lr=0.01, momentum=0.5)

# 4.训练循环

def train(epoch):

l1 = 0.0

l2 = 0.0

for batch_index, (x, y) in enumerate(train_loader):

x, y = x.to(device), y.to(device)

y_pred1 = model1(x)

optimizer1.zero_grad()

loss1 = criterion(y_pred1, y)

l1 += loss1.item()

loss1.backward()

optimizer1.step()

y_pred2 = model2(x)

optimizer2.zero_grad()

loss2 = criterion(y_pred2, y)

l2 += loss2.item()

loss2.backward()

optimizer2.step()

if batch_index % 300 == 299:

print(f'[epoch{epoch+1}---------batch={batch_index+1}---------loss1={round(100*l1/300, 3)}]')

l1 = 0.0 # 输出完记得置为0

print(f'[epoch{epoch + 1}---------batch={batch_index + 1}---------loss2={round(100 * l2 / 300, 3)}]')

l2 = 0.0 # 输出完记得置为0

def test():

size = 0

acc1 = 0

acc2 = 0

with torch.no_grad():

for (x, y) in test_loader:

x, y = x.to(device), y.to(device)

y_pred1 = model1(x)

_, predict1 = torch.max(y_pred1.data, dim=1) # 0列1行,注意这里取的是data(用到张量的时候要格外小心)

size += predict1.size(0)

acc1 += (predict1 == y).sum().item() # 与标签进行比较

print('test accuracy1= %.3f %%' % (100 * acc1 / size))

size = 0

with torch.no_grad():

for (x, y) in test_loader:

x, y = x.to(device), y.to(device)

y_pred2 = model2(x)

_, predict2 = torch.max(y_pred2.data, dim=1) # 0列1行,注意这里取的是data(用到张量的时候要格外小心)

size += predict2.size(0)

acc2 += (predict2 == y).sum().item() # 与标签进行比较

print('test accuracy2= %.3f %%' % (100 * acc2 / size))

return (acc1 / size, acc2 / size)

if __name__ == "__main__":

epoch_list = []

acc_list1 = []

acc_list2 = []

for epoch in range(10):

train(epoch)

acc1, acc2 = test()

epoch_list.append(epoch)

acc_list1.append(acc1)

acc_list2.append(acc2)

plt.plot(epoch_list, acc_list1)

plt.plot(epoch_list, acc_list2)

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.show()