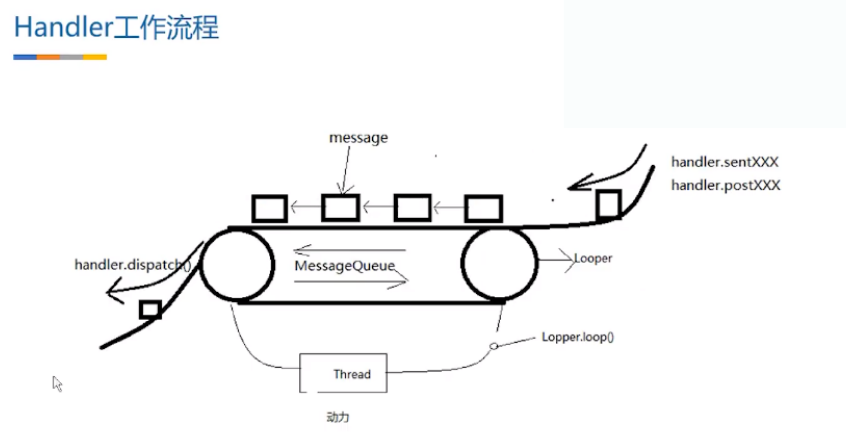

Any discussion of Handler has to touch on epoll.

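The core epoll workflow is:

1) Call epoll_create() to create an epoll object (the kernel allocates resources for this handle in the epoll file system).

2) Call epoll_ctl to register with the epoll object the sockets (file descriptors) whose events we want to watch.

3) Call epoll_wait to collect the connections on which events have occurred.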
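The three steps above can be sketched in a few lines of C++. This is only a minimal illustration under assumptions of our own: it watches a pipe rather than a socket, and the function name epollThreeSteps and the 1000 ms timeout are made up for the example.

```cpp
#include <cassert>
#include <sys/epoll.h>
#include <unistd.h>

// Returns the byte read from the pipe once epoll reports it readable,
// or -1 if any step fails. The name and the pipe are illustrative only.
int epollThreeSteps() {
    int pipeFds[2];
    if (pipe(pipeFds) != 0) return -1;

    // Step 1: create the epoll instance.
    int epollFd = epoll_create1(EPOLL_CLOEXEC);
    if (epollFd < 0) {
        close(pipeFds[0]);
        close(pipeFds[1]);
        return -1;
    }

    // Step 2: register interest in "readable" on the pipe's read end.
    epoll_event interest{};
    interest.events = EPOLLIN;
    interest.data.fd = pipeFds[0];
    int result = -1;
    if (epoll_ctl(epollFd, EPOLL_CTL_ADD, pipeFds[0], &interest) == 0) {
        // Make the descriptor readable so the wait has something to report.
        const char payload = 'x';
        if (write(pipeFds[1], &payload, 1) == 1) {
            // Step 3: collect ready events, waiting at most 1000 ms.
            epoll_event ready[4];
            int count = epoll_wait(epollFd, ready, 4, 1000);
            if (count == 1 && ready[0].data.fd == pipeFds[0]) {
                assert(ready[0].events & EPOLLIN);
                char received = 0;
                if (read(pipeFds[0], &received, 1) == 1) result = received;
            }
        }
    }
    close(epollFd);
    close(pipeFds[0]);
    close(pipeFds[1]);
    return result;
}
```

Calling epollThreeSteps() should hand back the byte that was written, which shows that epoll_wait only reports descriptors that were registered in step 2 and have actually become ready.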

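Starting with Android 2.3, Google changed Handler's block/wake mechanism from Object#wait()/notify() to one built on Linux epoll. The reason is that the native layer also needed a message-management mechanism that C/C++ developers could use, while the existing block/wake scheme was designed for the Java layer and supported only Java. So from Android 2.3 onward a new block/wake scheme was implemented in the native layer; the Java layer dropped Object#wait()/notify() and instead enters the blocked state by calling into native code through JNI.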

Next, let's walk through the code to understand this.

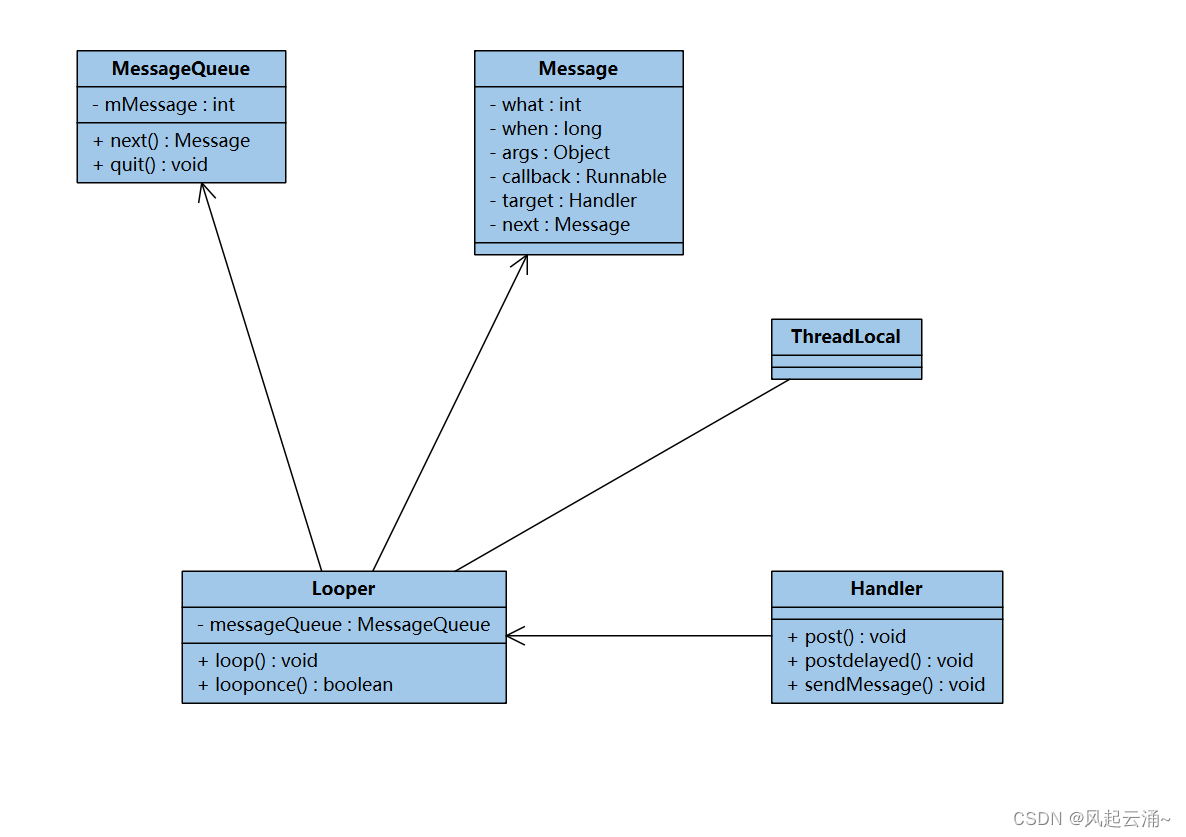

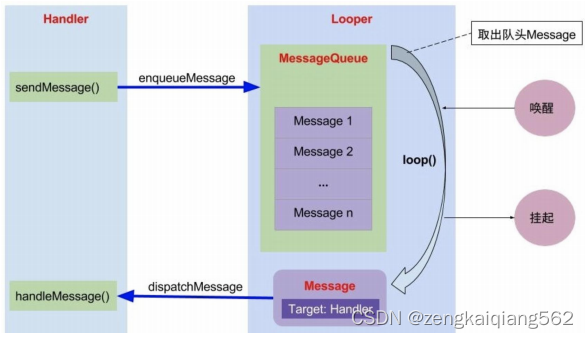

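First of all, a message queue has to be created to hold the messages.

Let's look at where this is prepared: the main method of the ActivityThread class.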

public static void main(String[] args) {

Trace.traceBegin(Trace.TRACE_TAG_ACTIVITY_MANAGER, "ActivityThreadMain");

// Install selective syscall interception

AndroidOs.install();

// CloseGuard defaults to true and can be quite spammy. We

// disable it here, but selectively enable it later (via

// StrictMode) on debug builds, but using DropBox, not logs.

CloseGuard.setEnabled(false);

Environment.initForCurrentUser();

// Make sure TrustedCertificateStore looks in the right place for CA certificates

final File configDir = Environment.getUserConfigDirectory(UserHandle.myUserId());

TrustedCertificateStore.setDefaultUserDirectory(configDir);

// Call per-process mainline module initialization.

initializeMainlineModules();

Process.setArgV0("<pre-initialized>");

Looper.prepareMainLooper();

Looper.prepareMainLooper(); is the call we care about:

public static void prepareMainLooper() {

prepare(false);

synchronized (Looper.class) {

if (sMainLooper != null) {

throw new IllegalStateException("The main Looper has already been prepared.");

}

sMainLooper = myLooper();

}

}

This method mainly guarantees that the main Looper is set up only once. Next, let's look at the prepare method:

private static void prepare(boolean quitAllowed) {

if (sThreadLocal.get() != null) {

throw new RuntimeException("Only one Looper may be created per thread");

}

sThreadLocal.set(new Looper(quitAllowed));

}
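To make the "one Looper per thread" rule concrete, here is a rough C++ analogue of the ThreadLocal check in prepare(). It is a sketch, not Android code: ThreadLooper, tLooper and secondPrepareIsRejected are hypothetical names, and a thread_local smart pointer stands in for sThreadLocal.

```cpp
#include <cassert>
#include <memory>
#include <stdexcept>

// Hypothetical stand-in for android.os.Looper; only the per-thread slot matters.
struct ThreadLooper {
    explicit ThreadLooper(bool quitAllowed) : quitAllowed(quitAllowed) {}
    bool quitAllowed;
};

// One slot per thread, playing the role of sThreadLocal.
static thread_local std::unique_ptr<ThreadLooper> tLooper;

// Mirrors prepare(): the first call installs a looper, a second call throws.
void prepare(bool quitAllowed) {
    if (tLooper != nullptr) {
        throw std::runtime_error("Only one Looper may be created per thread");
    }
    tLooper.reset(new ThreadLooper(quitAllowed));
}

// Mirrors myLooper(): this thread's looper, or nullptr if none was prepared.
ThreadLooper* myLooper() { return tLooper.get(); }

// Usage sketch: prepares once if needed, then checks that another attempt is rejected.
bool secondPrepareIsRejected() {
    if (myLooper() == nullptr) prepare(false);
    assert(myLooper() != nullptr);
    try {
        prepare(false);
    } catch (const std::runtime_error&) {
        return true;
    }
    return false;
}
```

Because the slot is thread_local, a different thread would start with an empty slot and could prepare its own looper, which is the same per-thread behaviour ThreadLocal gives the Java code.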

Now let's look at the Looper constructor:

private Looper(boolean quitAllowed) {

mQueue = new MessageQueue(quitAllowed);

mThread = Thread.currentThread();

}

Next, the MessageQueue constructor:

MessageQueue(boolean quitAllowed) {

mQuitAllowed = quitAllowed;

mPtr = nativeInit();

}

This calls a native method. Here is its implementation:

static jlong android_os_MessageQueue_nativeInit(JNIEnv* env, jclass clazz) {

NativeMessageQueue* nativeMessageQueue = new NativeMessageQueue();

if (!nativeMessageQueue) {

jniThrowRuntimeException(env, "Unable to allocate native queue");

return 0;

}

nativeMessageQueue->incStrong(env);

return reinterpret_cast<jlong>(nativeMessageQueue);

}

NativeMessageQueue::NativeMessageQueue() :

mPollEnv(NULL), mPollObj(NULL), mExceptionObj(NULL) {

mLooper = Looper::getForThread();

if (mLooper == NULL) {

mLooper = new Looper(false);

Looper::setForThread(mLooper);

}

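}

From the header file we can also see that the Looper used here is the C++ implementation, not the Java one. On the first call Looper::getForThread() returns NULL, so the constructor below is invoked: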

Looper::Looper(bool allowNonCallbacks)

: mAllowNonCallbacks(allowNonCallbacks),

mSendingMessage(false),

mPolling(false),

mEpollRebuildRequired(false),

mNextRequestSeq(WAKE_EVENT_FD_SEQ + 1),

mResponseIndex(0),

mNextMessageUptime(LLONG_MAX) {

mWakeEventFd.reset(eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC));

LOG_ALWAYS_FATAL_IF(mWakeEventFd.get() < 0, "Could not make wake event fd: %s", strerror(errno));

AutoMutex _l(mLock);

rebuildEpollLocked();

}

void Looper::rebuildEpollLocked() {

// Close old epoll instance if we have one.

if (mEpollFd >= 0) {

#if DEBUG_CALLBACKS

ALOGD("%p ~ rebuildEpollLocked - rebuilding epoll set", this);

#endif

mEpollFd.reset();

}

// Allocate the new epoll instance and register the WakeEventFd.

mEpollFd.reset(epoll_create1(EPOLL_CLOEXEC));

LOG_ALWAYS_FATAL_IF(mEpollFd < 0, "Could not create epoll instance: %s", strerror(errno));

epoll_event wakeEvent = createEpollEvent(EPOLLIN, WAKE_EVENT_FD_SEQ);

int result = epoll_ctl(mEpollFd.get(), EPOLL_CTL_ADD, mWakeEventFd.get(), &wakeEvent);

LOG_ALWAYS_FATAL_IF(result != 0, "Could not add wake event fd to epoll instance: %s",

strerror(errno));

for (const auto& [seq, request] : mRequests) {

epoll_event eventItem = createEpollEvent(request.getEpollEvents(), seq);

int epollResult = epoll_ctl(mEpollFd.get(), EPOLL_CTL_ADD, request.fd, &eventItem);

if (epollResult < 0) {

ALOGE("Error adding epoll events for fd %d while rebuilding epoll set: %s",

request.fd, strerror(errno));

}

}

}

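epoll_create1 is called here, so this is where the epoll instance is created; the wake eventfd is registered with it right afterwards through epoll_ctl.

Once the instance exists it is time to use it. Following the flow, Looper.loop() is what gets called to wait for messages: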
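The constructor and rebuildEpollLocked() boil down to "create an eventfd, create an epoll instance, register the eventfd". A stripped-down sketch of that plumbing might look like the following. WakeablePoller, wake() and poll() are names invented for this example, and it stores the descriptor in data.fd instead of the sequence number used by createEpollEvent.

```cpp
#include <cassert>
#include <cstdint>
#include <sys/epoll.h>
#include <sys/eventfd.h>
#include <unistd.h>

// Hypothetical miniature of the native Looper's wake plumbing.
class WakeablePoller {
public:
    WakeablePoller() {
        // Same eventfd flags as the Looper constructor shown above.
        mWakeEventFd = eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC);
        assert(mWakeEventFd >= 0);
        mEpollFd = epoll_create1(EPOLL_CLOEXEC);
        assert(mEpollFd >= 0);
        // Register the wake descriptor for "readable" events.
        epoll_event wakeEvent{};
        wakeEvent.events = EPOLLIN;
        wakeEvent.data.fd = mWakeEventFd;
        int result = epoll_ctl(mEpollFd, EPOLL_CTL_ADD, mWakeEventFd, &wakeEvent);
        assert(result == 0);
        (void)result;  // silences the unused warning when asserts are compiled out
    }
    ~WakeablePoller() {
        close(mEpollFd);
        close(mWakeEventFd);
    }
    WakeablePoller(const WakeablePoller&) = delete;
    WakeablePoller& operator=(const WakeablePoller&) = delete;

    // Adds 1 to the eventfd counter, which makes the descriptor readable.
    bool wake() {
        const uint64_t increment = 1;
        return write(mWakeEventFd, &increment, sizeof(increment)) ==
               static_cast<ssize_t>(sizeof(increment));
    }

    // Waits up to timeoutMillis; returns true if the wake descriptor fired.
    // Reading the counter resets it to zero, so the next poll blocks again.
    bool poll(int timeoutMillis) {
        epoll_event ready[1];
        int count = epoll_wait(mEpollFd, ready, 1, timeoutMillis);
        if (count != 1) return false;
        uint64_t counter = 0;
        return read(mWakeEventFd, &counter, sizeof(counter)) ==
               static_cast<ssize_t>(sizeof(counter));
    }

private:
    int mWakeEventFd = -1;
    int mEpollFd = -1;
};
```

With this sketch, poll(0) reports nothing until wake() has been called, reports the wake once, and then goes quiet again because the counter was drained. A real implementation would handle failures explicitly rather than rely on assert.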

public static void loop() {

final Looper me = myLooper();

if (me == null) {

throw new RuntimeException("No Looper; Looper.prepare() wasn't called on this thread.");

}

if (me.mInLoop) {

Slog.w(TAG, "Loop again would have the queued messages be executed"

+ " before this one completed.");

}

me.mInLoop = true;

// Make sure the identity of this thread is that of the local process,

// and keep track of what that identity token actually is.

Binder.clearCallingIdentity();

final long ident = Binder.clearCallingIdentity();

// Allow overriding a threshold with a system prop. e.g.

// adb shell 'setprop log.looper.1000.main.slow 1 && stop && start'

final int thresholdOverride =

SystemProperties.getInt("log.looper."

+ Process.myUid() + "."

+ Thread.currentThread().getName()

+ ".slow", -1);

me.mSlowDeliveryDetected = false;

for (;;) {

if (!loopOnce(me, ident, thresholdOverride)) {

return;

}

}

}

Let's look at the implementation of loopOnce:

@SuppressWarnings({"UnusedTokenOfOriginalCallingIdentity",

"ClearIdentityCallNotFollowedByTryFinally"})

private static boolean loopOnce(final Looper me,

final long ident, final int thresholdOverride) {

Message msg = me.mQueue.next(); // might block

if (msg == null) {

// No message indicates that the message queue is quitting.

return false;

}

// This must be in a local variable, in case a UI event sets the logger

final Printer logging = me.mLogging;

if (logging != null) {

logging.println(">>>>> Dispatching to " + msg.target + " "

+ msg.callback + ": " + msg.what);

}

// Make sure the observer won't change while processing a transaction.

final Observer observer = sObserver;

final long traceTag = me.mTraceTag;

long slowDispatchThresholdMs = me.mSlowDispatchThresholdMs;

long slowDeliveryThresholdMs = me.mSlowDeliveryThresholdMs;

final boolean hasOverride = thresholdOverride >= 0;

if (hasOverride) {

slowDispatchThresholdMs = thresholdOverride;

slowDeliveryThresholdMs = thresholdOverride;

}

final boolean logSlowDelivery = (slowDeliveryThresholdMs > 0 || hasOverride)

&& (msg.when > 0);

final boolean logSlowDispatch = (slowDispatchThresholdMs > 0 || hasOverride);

final boolean needStartTime = logSlowDelivery || logSlowDispatch;

final boolean needEndTime = logSlowDispatch;

if (traceTag != 0 && Trace.isTagEnabled(traceTag)) {

Trace.traceBegin(traceTag, msg.target.getTraceName(msg));

}

final long dispatchStart = needStartTime ? SystemClock.uptimeMillis() : 0;

final long dispatchEnd;

Object token = null;

if (observer != null) {

token = observer.messageDispatchStarting();

}

long origWorkSource = ThreadLocalWorkSource.setUid(msg.workSourceUid);

try {

msg.target.dispatchMessage(msg);

if (observer != null) {

observer.messageDispatched(token, msg);

}

dispatchEnd = needEndTime ? SystemClock.uptimeMillis() : 0;

} catch (Exception exception) {

if (observer != null) {

observer.dispatchingThrewException(token, msg, exception);

}

throw exception;

} finally {

ThreadLocalWorkSource.restore(origWorkSource);

if (traceTag != 0) {

Trace.traceEnd(traceTag);

}

}

if (logSlowDelivery) {

if (me.mSlowDeliveryDetected) {

if ((dispatchStart - msg.when) <= 10) {

Slog.w(TAG, "Drained");

me.mSlowDeliveryDetected = false;

}

} else {

if (showSlowLog(slowDeliveryThresholdMs, msg.when, dispatchStart, "delivery",

msg)) {

// Once we write a slow delivery log, suppress until the queue drains.

me.mSlowDeliveryDetected = true;

}

}

}

if (logSlowDispatch) {

showSlowLog(slowDispatchThresholdMs, dispatchStart, dispatchEnd, "dispatch", msg);

}

if (logging != null) {

logging.println("<<<<< Finished to " + msg.target + " " + msg.callback);

}

// Make sure that during the course of dispatching the

// identity of the thread wasn't corrupted.

final long newIdent = Binder.clearCallingIdentity();

if (ident != newIdent) {

Log.wtf(TAG, "Thread identity changed from 0x"

+ Long.toHexString(ident) + " to 0x"

+ Long.toHexString(newIdent) + " while dispatching to "

+ msg.target.getClass().getName() + " "

+ msg.callback + " what=" + msg.what);

}

msg.recycleUnchecked();

return true;

}
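Stripped of logging, tracing and identity checks, loop() and loopOnce() reduce to "fetch the next message, stop if there is none, otherwise dispatch it". The sketch below shows only that control flow. TaskQueue and Task are hypothetical, and unlike the real MessageQueue.next() this queue does not block: an empty queue is simply treated as the quit signal.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <utility>

// Hypothetical message: a callback standing in for msg.target.dispatchMessage(msg).
using Task = std::function<void()>;

class TaskQueue {
public:
    void enqueue(Task task) {
        // An empty task would be mistaken for the quit signal returned by next().
        assert(task);
        mTasks.push_back(std::move(task));
    }

    // Simplification: returns an empty Task when the queue is drained, which
    // plays the role of the null message that means "quitting".
    Task next() {
        if (mTasks.empty()) return Task();
        Task task = std::move(mTasks.front());
        mTasks.pop_front();
        return task;
    }

private:
    std::deque<Task> mTasks;
};

// Mirrors loopOnce(): false means "stop looping".
bool loopOnce(TaskQueue& queue) {
    Task task = queue.next();
    if (!task) return false;
    task();
    return true;
}

// Mirrors loop(): returns how many tasks were dispatched before quitting.
int loop(TaskQueue& queue) {
    int dispatched = 0;
    while (loopOnce(queue)) ++dispatched;
    return dispatched;
}
```

The point of the design is that the loop itself never decides when to sleep; that decision lives entirely inside next(), which is where epoll enters the picture.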

epoll comes into play when messages are retrieved. Let's look at how a message is obtained, starting from Message msg = me.mQueue.next();

@UnsupportedAppUsage

Message next() {

// Return here if the message loop has already quit and been disposed.

// This can happen if the application tries to restart a looper after quit

// which is not supported.

final long ptr = mPtr;

if (ptr == 0) {

return null;

}

int pendingIdleHandlerCount = -1; // -1 only during first iteration

int nextPollTimeoutMillis = 0;

for (;;) {

if (nextPollTimeoutMillis != 0) {

Binder.flushPendingCommands();

}

nativePollOnce(ptr, nextPollTimeoutMillis);

synchronized (this) {

// Try to retrieve the next message. Return if found.

final long now = SystemClock.uptimeMillis();

Message prevMsg = null;

Message msg = mMessages;

if (msg != null && msg.target == null) {

// Stalled by a barrier. Find the next asynchronous message in the queue.

do {

prevMsg = msg;

msg = msg.next;

} while (msg != null && !msg.isAsynchronous());

}

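if (msg != null) {

The excerpt of next() stops here. The important part is the call to nativePollOnce, which blocks when the next message has not yet reached its scheduled time: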
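How long nativePollOnce should block depends on the head of the queue. As far as we understand the part of next() that the excerpt cuts off, the timeout is -1 when there is no message, and otherwise the remaining delay capped at the largest int. The helper below is a hypothetical restatement of that rule, not the framework's code; returning 0 for a message that is already due is our simplification, since the real method returns the message instead of polling again.

```cpp
#include <cassert>
#include <climits>
#include <cstdint>

// Timeout conventions shared with epoll_wait.
constexpr int kNoWait = 0;        // return immediately
constexpr int kWaitForever = -1;  // block until woken

// hasMessage: whether the queue has a head message.
// whenMillis: the time at which that message is due.
// nowMillis:  the current time on the same clock.
int computePollTimeoutMillis(bool hasMessage, int64_t whenMillis, int64_t nowMillis) {
    if (!hasMessage) return kWaitForever;          // nothing queued: sleep until woken
    if (nowMillis >= whenMillis) return kNoWait;   // already due: do not block
    int64_t delay = whenMillis - nowMillis;
    assert(delay > 0);
    // Cap the delay so it fits the int timeout that the poll call accepts.
    return delay > INT_MAX ? INT_MAX : static_cast<int>(delay);
}
```

For example, a message due at 150 with the clock at 100 yields a 50 ms timeout, while an empty queue yields -1, which is why an idle main thread can sleep indefinitely without consuming CPU.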

static void android_os_MessageQueue_nativePollOnce(JNIEnv* env, jobject obj,

jlong ptr, jint timeoutMillis) {

NativeMessageQueue* nativeMessageQueue = reinterpret_cast<NativeMessageQueue*>(ptr);

nativeMessageQueue->pollOnce(env, obj, timeoutMillis);

}

void NativeMessageQueue::pollOnce(JNIEnv* env, jobject pollObj, int timeoutMillis) {

mPollEnv = env;

mPollObj = pollObj;

mLooper->pollOnce(timeoutMillis);

mPollObj = NULL;

mPollEnv = NULL;

if (mExceptionObj) {

env->Throw(mExceptionObj);

env->DeleteLocalRef(mExceptionObj);

mExceptionObj = NULL;

}

}

This finally calls into the native Looper:

int Looper::pollOnce(int timeoutMillis, int* outFd, int* outEvents, void** outData) {

int result = 0;

for (;;) {

while (mResponseIndex < mResponses.size()) {

const Response& response = mResponses.itemAt(mResponseIndex++);

int ident = response.request.ident;

if (ident >= 0) {

int fd = response.request.fd;

int events = response.events;

void* data = response.request.data;

#if DEBUG_POLL_AND_WAKE

ALOGD("%p ~ pollOnce - returning signalled identifier %d: "

"fd=%d, events=0x%x, data=%p",

this, ident, fd, events, data);

#endif

if (outFd != nullptr) *outFd = fd;

if (outEvents != nullptr) *outEvents = events;

if (outData != nullptr) *outData = data;

return ident;

}

}

if (result != 0) {

#if DEBUG_POLL_AND_WAKE

ALOGD("%p ~ pollOnce - returning result %d", this, result);

#endif

if (outFd != nullptr) *outFd = 0;

if (outEvents != nullptr) *outEvents = 0;

if (outData != nullptr) *outData = nullptr;

return result;

}

result = pollInner(timeoutMillis);

}

}

Once any pending responses have been handled, the work is handed over to pollInner:

int Looper::pollInner(int timeoutMillis) {

#if DEBUG_POLL_AND_WAKE

ALOGD("%p ~ pollOnce - waiting: timeoutMillis=%d", this, timeoutMillis);

#endif

// Adjust the timeout based on when the next message is due.

if (timeoutMillis != 0 && mNextMessageUptime != LLONG_MAX) {

nsecs_t now = systemTime(SYSTEM_TIME_MONOTONIC);

int messageTimeoutMillis = toMillisecondTimeoutDelay(now, mNextMessageUptime);

if (messageTimeoutMillis >= 0

&& (timeoutMillis < 0 || messageTimeoutMillis < timeoutMillis)) {

timeoutMillis = messageTimeoutMillis;

}

#if DEBUG_POLL_AND_WAKE

ALOGD("%p ~ pollOnce - next message in %" PRId64 "ns, adjusted timeout: timeoutMillis=%d",

this, mNextMessageUptime - now, timeoutMillis);

#endif

}

// Poll.

int result = POLL_WAKE;

mResponses.clear();

mResponseIndex = 0;

// We are about to idle.

mPolling = true;

struct epoll_event eventItems[EPOLL_MAX_EVENTS];

int eventCount = epoll_wait(mEpollFd.get(), eventItems, EPOLL_MAX_EVENTS, timeoutMillis);

// No longer idling.

mPolling = false;

// Acquire lock.

mLock.lock();

In the end we reach the key call, epoll_wait, which does the actual waiting.
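The timeoutMillis argument decides how epoll_wait behaves: 0 returns immediately, a positive value blocks for at most that many milliseconds, and -1 blocks until an event arrives. The sketch below measures the first two cases on an eventfd that nobody signals. WaitOutcome and waitWithoutEvents are names made up for this example, and passing -1 to it would block forever.

```cpp
#include <cassert>
#include <chrono>
#include <sys/epoll.h>
#include <sys/eventfd.h>
#include <unistd.h>

struct WaitOutcome {
    int eventCount;      // return value of epoll_wait
    long elapsedMillis;  // how long the call blocked
};

// Waits on an eventfd that is never written to, so only the timeout can
// end the wait. Do not pass -1 here: nothing would ever wake the call.
WaitOutcome waitWithoutEvents(int timeoutMillis) {
    int wakeFd = eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC);
    int epollFd = epoll_create1(EPOLL_CLOEXEC);
    assert(wakeFd >= 0 && epollFd >= 0);

    epoll_event interest{};
    interest.events = EPOLLIN;
    interest.data.fd = wakeFd;
    int added = epoll_ctl(epollFd, EPOLL_CTL_ADD, wakeFd, &interest);
    assert(added == 0);
    (void)added;  // silences the unused warning when asserts are compiled out

    auto start = std::chrono::steady_clock::now();
    epoll_event ready[1];
    int count = epoll_wait(epollFd, ready, 1, timeoutMillis);
    auto elapsed = std::chrono::duration_cast<std::chrono::milliseconds>(
            std::chrono::steady_clock::now() - start);

    close(epollFd);
    close(wakeFd);
    return {count, static_cast<long>(elapsed.count())};
}
```

Both waitWithoutEvents(0) and waitWithoutEvents(50) should report zero events, but only the second one spends roughly 50 ms inside the kernel. During that time the thread is parked rather than spinning, which is what lets the message loop stay idle cheaply.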