全球DeepFake攻防挑战赛&DataWhale AI 夏令营——图像赛道

赛题背景

随着人工智能技术的迅猛发展,深度伪造技术(Deepfake)正成为数字世界中的一把双刃剑。这项技术不仅为创意内容的生成提供了新的可能性,同时也对数字安全构成了前所未有的挑战。Deepfake技术可以通过人工智能算法生成高度逼真的图像、视频和音频内容,这些内容看起来与真实的毫无二致。然而,这也意味着虚假信息、欺诈行为和隐私侵害等问题变得更加严重和复杂。

Deepfake是一种使用人工智能技术生成的伪造媒体,特别是视频和音频,它们看起来或听起来非常真实,但实际上是由计算机生成的。这种技术通常涉及到深度学习算法,特别是生成对抗网络(GANs),它们能够学习真实数据的特征,并生成新的、逼真的数据。

Deepfake技术虽然在多个领域展现出其创新潜力,但其滥用也带来了一系列严重的危害。在政治领域,Deepfake可能被用来制造假新闻或操纵舆论,影响选举结果和政治稳定。经济上,它可能破坏企业形象,引发市场恐慌,甚至操纵股市。法律体系也面临挑战,因为伪造的证据可能误导司法判断。此外,深度伪造技术还可能加剧身份盗窃的风险,成为恐怖分子的新工具,煽动暴力和社会动荡,威胁国家安全。

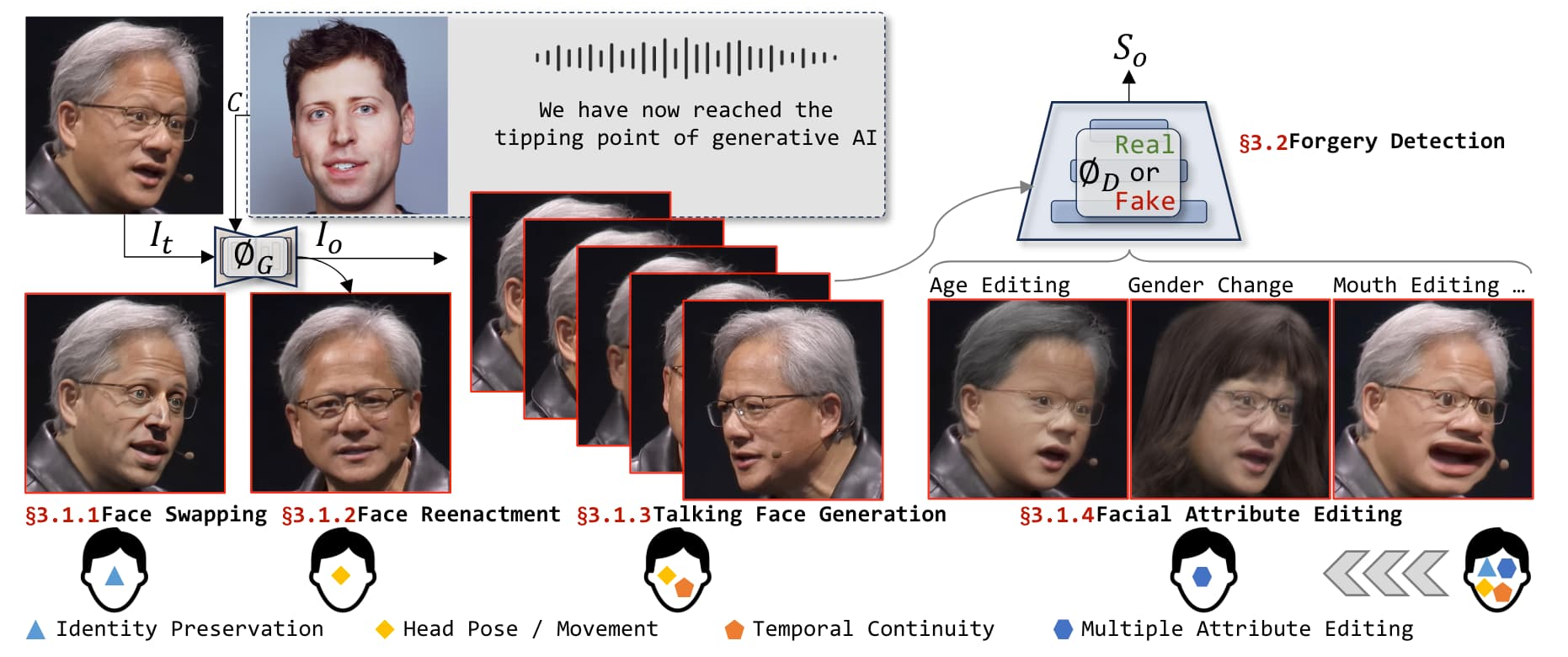

深度伪造技术通常可以分为四个主流研究方向:

- 面部交换专注于在两个人的图像之间执行身份交换;

- 面部重演强调转移源运动和姿态;

- 说话面部生成专注于在角色生成中实现口型与文本内容的自然匹配;

- 面部属性编辑旨在修改目标图像的特定面部属性;

深度学习与Deepfake

深度学习是一种强大的机器学习技术,它通过模拟人脑处理信息的方式,使计算机能够从大量数据中自动学习和识别模式。深度学习模型,尤其是卷积神经网络(CNN),能够识别图像和视频中的复杂特征。在Deepfake检测中,模型可以学习识别伪造内容中可能存在的微妙异常。

为了训练有效的Deepfake检测模型,需要构建包含各种Deepfake和真实样本的数据集(本次比赛的数据集就是按照这种方式进行组织)。深度学习模型通过这些数据集学习区分真假内容。

赛题任务

在这个赛道中,比赛任务是判断一张人脸图像是否为Deepfake图像,并输出其为Deepfake图像的概率评分。参赛者需要开发和优化检测模型,以应对多样化的Deepfake生成技术和复杂的应用场景,从而提升Deepfake图像检测的准确性和鲁棒性。

赛题数据集

可以使用command命令进行下载

curl 'http://zoloz-open.oss-cn-hangzhou.aliyuncs.com/waitan2024_deepfake_challenge%2F_%E8%B5%9B%E9%81%931%E5%AF%B9%E5%A4%96%E5%8F%91%E5%B8%83%E6%95%B0%E6%8D%AE%E9%9B%86%2Fphase1.tar.gz?Expires=1726603663&OSSAccessKeyId=LTAI5tAfcZDV5eCa1BBEJL9R&Signature=wFrzBHn5bhULqWzlZP7Z74p1g9c%3D' -o multiFFDI-phase1.tar.gz

训练集样例:

img_name,target

3381ccbc4df9e7778b720d53a2987014.jpg,1

63fee8a89581307c0b4fd05a48e0ff79.jpg,0

7eb4553a58ab5a05ba59b40725c903fd.jpg,0

…

验证集样例;

img_name,target

cd0e3907b3312f6046b98187fc25f9c7.jpg,1

aa92be19d0adf91a641301cfcce71e8a.jpg,0

5413a0b706d33ed0208e2e4e2cacaa06.jpg,0

…

提交的预测文件:

img_name,y_pred

cd0e3907b3312f6046b98187fc25f9c7.jpg,1

aa92be19d0adf91a641301cfcce71e8a.jpg,0.5

5413a0b706d33ed0208e2e4e2cacaa06.jpg,0.5

…

Baseline

Datawhale提供的baseline使用了ResNet,ResNet是一种残差网络,由于网络的加深会造成梯度爆炸和梯度消失的问题,因此何恺明了新的架构ResNet。

对kaggle中的baseline进行拆分,拆分为model、dataset和run三个部分

数据集的加载

from torch.utils.data.dataset import Dataset from PIL import Image import torch import numpy as np import pandas as pd class FFDIDataset(Dataset): def __init__(self, img_path, img_label, transform=None): self.img_path = img_path self.img_label = img_label if transform is not None: self.transform = transform else: self.transform = None def __getitem__(self, index): img = Image.open(self.img_path[index]).convert('RGB') if self.transform is not None: img = self.transform(img) return img, torch.from_numpy(np.array(self.img_label[index])) def __len__(self): return len(self.img_path) def read_labels():#此处注意path修改 train_label = pd.read_csv("phase1/trainset_label.txt") val_label = pd.read_csv("phase1/valset_label.txt") train_label['path'] = "phase1/trainset/" + train_label['img_name'] val_label['path'] = "phase1/valset/" + val_label['img_name'] return train_label, val_label模型架构

import timm model = timm.create_model('resnet18', pretrained=True, num_classes=2)#baseline使用resnet,同时加载预训练模型,分类为2类模型的训练和验证

import torch import dataset torch.manual_seed(0) torch.backends.cudnn.deterministic = False torch.backends.cudnn.benchmark = True import torchvision.models as models import torchvision.transforms as transforms import torchvision.datasets as datasets import torch.nn as nn import torch.nn.functional as F import torch.optim as optim from torch.autograd import Variable from torch.utils.data.dataset import Dataset from dataset import FFDIDataset import timm import time from Model import model import pandas as pd import numpy as np import cv2 from PIL import Image from tqdm import tqdm class AverageMeter(object): """Computes and stores the average and current value""" def __init__(self, name, fmt=':f'): self.name = name self.fmt = fmt self.reset() def reset(self): self.val = 0 self.avg = 0 self.sum = 0 self.count = 0 def update(self, val, n=1): self.val = val self.sum += val * n self.count += n self.avg = self.sum / self.count def __str__(self): fmtstr = '{name} {val' + self.fmt + '} ({avg' + self.fmt + '})' return fmtstr.format(**self.__dict__) class ProgressMeter(object): def __init__(self, num_batches, *meters): self.batch_fmtstr = self._get_batch_fmtstr(num_batches) self.meters = meters self.prefix = "" def pr2int(self, batch): entries = [self.prefix + self.batch_fmtstr.format(batch)] entries += [str(meter) for meter in self.meters] print('\t'.join(entries)) def _get_batch_fmtstr(self, num_batches): num_digits = len(str(num_batches // 1)) fmt = '{:' + str(num_digits) + 'd}' return '[' + fmt + '/' + fmt.format(num_batches) + ']' def validate(val_loader, model, criterion):#验证集进行验证 batch_time = AverageMeter('Time', ':6.3f') losses = AverageMeter('Loss', ':.4e') top1 = AverageMeter('Acc@1', ':6.2f') progress = ProgressMeter(len(val_loader), batch_time, losses, top1) # switch to evaluate mode model.eval() with torch.no_grad(): end = time.time() for i, (input, target) in tqdm(enumerate(val_loader), total=len(val_loader)): input = input.cuda() target = target.cuda() # compute output output = model(input)#模型进行处理 loss = criterion(output, target)#损失函数 # measure accuracy and record loss acc = (output.argmax(1).view(-1) == target.float().view(-1)).float().mean() * 100#计算acc losses.update(loss.item(), input.size(0)) top1.update(acc, input.size(0)) # measure elapsed time batch_time.update(time.time() - end) end = time.time() # TODO: this should also be done with the ProgressMeter print(' * Acc@1 {top1.avg:.3f}' .format(top1=top1)) return top1 def predict(test_loader, model, tta=10):#模型进行预测 # switch to evaluate mode model.eval() test_pred_tta = None for _ in range(tta): test_pred = [] with torch.no_grad(): end = time.time() for i, (input, target) in tqdm(enumerate(test_loader), total=len(test_loader)): input = input.cuda() target = target.cuda() # compute output output = model(input) output = F.softmax(output, dim=1)#softmax进行处理 output = output.data.cpu().numpy() test_pred.append(output) test_pred = np.vstack(test_pred) if test_pred_tta is None: test_pred_tta = test_pred else: test_pred_tta += test_pred return test_pred_tta def train(train_loader, model, criterion, optimizer, epoch): batch_time = AverageMeter('Time', ':6.3f') losses = AverageMeter('Loss', ':.4e') top1 = AverageMeter('Acc@1', ':6.2f') progress = ProgressMeter(len(train_loader), batch_time, losses, top1) # switch to train mode model.train() end = time.time() for i, (input, target) in enumerate(train_loader): input = input.cuda(non_blocking=True) target = target.cuda(non_blocking=True) # compute output output = model(input) loss = criterion(output, target) # measure accuracy and record loss losses.update(loss.item(), input.size(0)) acc = (output.argmax(1).view(-1) == target.float().view(-1)).float().mean() * 100 top1.update(acc, input.size(0)) # compute gradient and do SGD step optimizer.zero_grad() loss.backward() optimizer.step() # measure elapsed time batch_time.update(time.time() - end) end = time.time() if i % 100 == 0: progress.pr2int(i) if __name__ == '__main__': train_label, val_label = dataset.read_labels() train_loader = torch.utils.data.DataLoader(#加载数据,同时进行数据增强 FFDIDataset(train_label['path'].head(10), train_label['target'].head(10), transforms.Compose([ transforms.Resize((256, 256)), transforms.RandomHorizontalFlip(), transforms.RandomVerticalFlip(), transforms.ToTensor(), transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]) ]) ), batch_size=40, shuffle=True, num_workers=4, pin_memory=True ) val_loader = torch.utils.data.DataLoader( FFDIDataset(val_label['path'].head(10), val_label['target'].head(10), transforms.Compose([ transforms.Resize((256, 256)), transforms.ToTensor(), transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]) ]) ), batch_size=40, shuffle=False, num_workers=4, pin_memory=True ) model = model.cuda() criterion = nn.CrossEntropyLoss().cuda()#交叉熵 optimizer = torch.optim.Adam(model.parameters(), 0.005)#Adam优化器 scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=4, gamma=0.85) best_acc = 0.0 for epoch in range(1): print('Epoch: ', epoch) train(train_loader, model, criterion, optimizer, epoch) val_acc = validate(val_loader, model, criterion) optimizer.step() scheduler.step() if val_acc.avg.item() > best_acc: best_acc = round(val_acc.avg.item(), 2) torch.save(model.state_dict(), f'./model_{best_acc}.pt') test_loader = torch.utils.data.DataLoader( FFDIDataset(val_label['path'].head(10), val_label['target'].head(10), transforms.Compose([ transforms.Resize((256, 256)), transforms.ToTensor(), transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]) ]) ), batch_size=40, shuffle=False, num_workers=4, pin_memory=True ) val = val_label.head(10).copy() val['y_pred'] = predict(test_loader,model,1)[:,1] val[['img_name','y_pred']].to_csv('submit.csv',index=None)