Project link

https://gitee.com/chenjian0502/yanzhaowang

Features

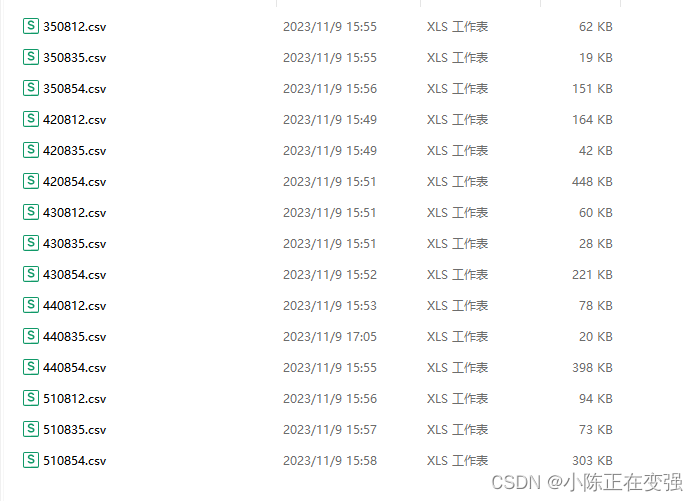

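This scraper collects information from 研招网 (yz.chsi.com.cn, China's official graduate admissions site). At present, given a province code and a discipline code, it retrieves the matching programs: enrollment numbers, major, department, university, exam subjects, and so on.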

Code

```python
import re
import time

import pandas as pd
import requests
from bs4 import BeautifulSoup


class Graduate:
    def __init__(self, province, category):
        self.head = {
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 "
                          "(KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"
        }
        self.data = []
        self.province = province  # province code (ssdm), e.g. "44"
        self.category = category  # first-level discipline code (yjxkdm), e.g. "0812"

    def get_list_fun(self, url, name):
        """Fetch one code list (JSON) and save it to <name>.txt, one item per line."""
        response = requests.get(url, headers=self.head, timeout=10)
        items = response.json()
        # Write UTF-8 explicitly so the Chinese names survive on any OS
        with open("{}.txt".format(name), "w", encoding="utf-8") as f:
            for x in items:
                f.write(str(x))
                f.write("\n")

    def get_list(self):
        """Save the province, discipline-category and major code lists to .txt files."""
        self.get_list_fun("https://yz.chsi.com.cn/zsml/pages/getSs.jsp", "province")
        self.get_list_fun("https://yz.chsi.com.cn/zsml/pages/getMl.jsp", "category")
        self.get_list_fun("https://yz.chsi.com.cn/zsml/pages/getZy.jsp", "major")

    def get_school_url(self):
        """
        POST the search form and return the relative URLs of the matching schools.

        The province is required; discipline category and major are optional.
        xxfs="1" restricts results to full-time programs; change it if needed.
        """
        url = "https://yz.chsi.com.cn/zsml/queryAction.do"
        data = {
            "ssdm": self.province,    # province code
            "yjxkdm": self.category,  # first-level discipline code
            "xxfs": "1",              # study mode: 1 = full-time
        }
        # Alternatively, fill in the whole form directly:
        # data = {"ssdm": "44", "dwmc": "", "mldm": "08", "mlmc": "",
        #         "yjxkdm": "0812", "zymc": "", "xxfs": "1"}  # , "pageno": "1"
        response = requests.post(url, data=data, headers=self.head, timeout=10)
        html = response.text
        # Each result is a table row; collect the school links inside those rows
        rows = re.findall(r"<tr>.*?</tr>", html, re.S)
        schools_url = re.findall(r'<a href="([^"]*)" target="_blank">.*?</a>',
                                 "".join(rows), re.S)
        return schools_url

    def get_college_data(self, url):
        """Return the relative URLs of all matching department entries of one school."""
        response = requests.get(url, headers=self.head, timeout=10)
        html = response.text
        colleges_url = re.findall(
            r'<td class="ch-table-center"><a href="(.*?)" target="_blank">查看</a>', html)
        return colleges_url

    def get_final_data(self, url):
        """Append one row (one school / department / major) to self.data."""
        temp = []
        response = requests.get(url, headers=self.head, timeout=10)
        soup = BeautifulSoup(response.text, features="lxml")
        # Summary cells (school, department, major, enrollment, ...) as bs4 Tags
        summary = soup.find_all("td", {"class": "zsml-summary"})
        # Exam-subject table: keep only the Chinese characters and digits of each cell
        subject = soup.find_all("tbody", {"class": "zsml-res-items"})
        results = []
        for tr in subject:
            for td in tr.find_all("td"):
                results.append(re.findall(r"[\u4e00-\u9fa5\d]+", td.text))

        for x in list(summary) + results:
            if isinstance(x, list):
                temp.append(x)  # exam subjects, already split into fragments
                continue
            text = x.get_text()
            temp.append(text)
            # Enrollment cells: every case requires "不含推免" (recommended students excluded)
            if "不含推免" not in text:
                continue
            if "专业" in text or "研究方向" in text:
                label = "专业" if "专业" in text else "研究方向"
                if "不区分" in text or "不分" in text:
                    continue
            elif "一级学科" in text:
                label = "一级学科"
            else:
                continue
            # Record the scope label and the first number found in the cell
            temp.append(label)
            match = re.search(r"\d+", text)
            if match:
                temp.append(match.group())
        self.data.append(temp)

    def get_schools_data(self):
        """Crawl every matching school, then every matching department in it."""
        url = "https://yz.chsi.com.cn"
        schools_url = self.get_school_url()
        amount = len(schools_url)
        for i, school_url in enumerate(schools_url, start=1):
            # All department pages of this school that match the query
            colleges_url = self.get_college_data(url + school_url)
            print("Finished school {}/{}".format(i, amount))
            time.sleep(1)  # throttle requests to the server
            for college_url in colleges_url:
                self.get_final_data(url + college_url)

    def get_data_frame(self):
        """Convert the collected rows to a DataFrame and save <province><category>.csv."""
        data = pd.DataFrame(self.data)
        # utf_8_sig adds a BOM so that Excel displays the Chinese text correctly
        data.to_csv(self.province + self.category + ".csv", encoding="utf_8_sig")
        # To write an Excel file instead:
        # data.to_excel(self.province + self.category + ".xlsx", sheet_name="1")


if __name__ == "__main__":
    # Example codes:
    # province: "44" Guangdong, "43" Hunan, "42" Hubei, "33" Zhejiang
    # category: "0835" Software Engineering, "0812" Computer Science and Technology,
    #           "0854" Electronic Information, "0501" Chinese Language and Literature
    #
    # Batch mode: crawl several provinces and disciplines in one run
    # provinceList = ['42', '43', '44', '35', '51', '31', '32', '33', '34', '45']
    # categoryList = ['0812', '0835', '0854']
    # for province in provinceList:
    #     for category in categoryList:
    #         print("Start crawling " + category + " in " + province)
    #         spyder = Graduate(province, category)
    #         spyder.get_schools_data()
    #         spyder.get_data_frame()
    #         print(province + category + " written")

    province = input("Province code of the schools to query: ")
    category = input("Discipline code to query: ")
    print("Start crawling " + category + " in " + province)
    spyder = Graduate(province, category)
    spyder.get_schools_data()
    spyder.get_data_frame()
    print(province + category + " written")
```
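The branching at the end of `get_final_data` is the hardest part to follow. Below is a minimal standalone sketch of the same rule, written as a hypothetical `parse_summary` function (not part of the project): a cell only counts if it contains 不含推免 (excluding students admitted by recommendation without the exam), the first scope found among 专业 / 研究方向 / 一级学科 decides the branch, 专业 and 研究方向 cells marked 不区分 or 不分 are skipped, and the first run of digits is taken as the number. The sample strings below are made up; the real cell text on yz.chsi.com.cn may be formatted differently.

```python
import re

# Scope labels, in the order get_final_data() checks them.
LABELS = ("专业", "研究方向", "一级学科")


def parse_summary(text):
    """Return (label, number) for one summary-cell text, or None if it does not count.

    Hypothetical helper sketching the rule in get_final_data(); number is the
    first run of digits in the text, or None if the text has no digits.
    """
    # Every branch of the original requires the "不含推免" marker
    if "不含推免" not in text:
        return None
    for label in LABELS:
        if label not in text:
            continue
        # Only the first matching label is considered (the original uses if/elif)
        if label != "一级学科" and ("不区分" in text or "不分" in text):
            return None
        match = re.search(r"\d+", text)
        return (label, match.group() if match else None)
    return None
```

For instance, the made-up text `"拟招生人数：专业：10(不含推免)"` gives `("专业", "10")`, while `"专业：不区分(不含推免)"` gives `None`. Returning a tuple instead of appending to a shared list makes the rule easy to check in isolation before wiring it back into the crawler.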

Usage

```python
self.get_list_fun("https://yz.chsi.com.cn/zsml/pages/getSs.jsp", "province")
self.get_list_fun("https://yz.chsi.com.cn/zsml/pages/getMl.jsp", "category")
self.get_list_fun("https://yz.chsi.com.cn/zsml/pages/getZy.jsp", "major")
```

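These three endpoints return the lists of provinces, discipline categories, and majors, and `get_list()` saves them to province.txt, category.txt and major.txt.

The script never calls `get_list()` itself, so call it once on any `Graduate` instance (for example `Graduate("44", "0812").get_list()`), look up the codes you need, and then enter them at the prompts or substitute them into the code.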
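`get_list_fun` writes each JSON item with `str(x)`, so every line of province.txt, category.txt and major.txt is a Python dict literal. Below is a minimal sketch for turning such a file back into a code-to-name lookup with `ast.literal_eval`. It assumes (this post does not confirm it) that each item stores its code under `"dm"` and its name under `"mc"`; open your own province.txt to check. `parse_code_lines` and `load_code_table` are hypothetical helpers. Pass the encoding the file was actually written with: UTF-8 if it was written with an explicit `encoding="utf-8"`, otherwise the platform default, such as cp936 on Chinese-locale Windows.

```python
import ast


def parse_code_lines(lines, code_key="dm", name_key="mc"):
    """Build {code: name} from lines that each hold one str(dict) item.

    ASSUMPTION: the key names "dm" (code) and "mc" (name) are guesses;
    adjust code_key/name_key to match what your .txt file contains.
    """
    table = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        item = ast.literal_eval(line)  # safely parse the dict literal
        table[item[code_key]] = item[name_key]
    return table


def load_code_table(path, encoding="utf-8"):
    """Read a file written by get_list_fun() into {code: name}."""
    with open(path, encoding=encoding) as f:
        return parse_code_lines(f)
```

Usage, after running `get_list()`: `provinces = load_code_table("province.txt")`, then look up or print the codes to find the value to enter at the prompt. `ast.literal_eval` is used instead of `eval` because it only accepts literals, so a malformed or unexpected line raises an error instead of executing code.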

For more details, see https://gitee.com/chenjian0502/yanzhaowang. Feel free to ask if you have any questions.