1. Goal

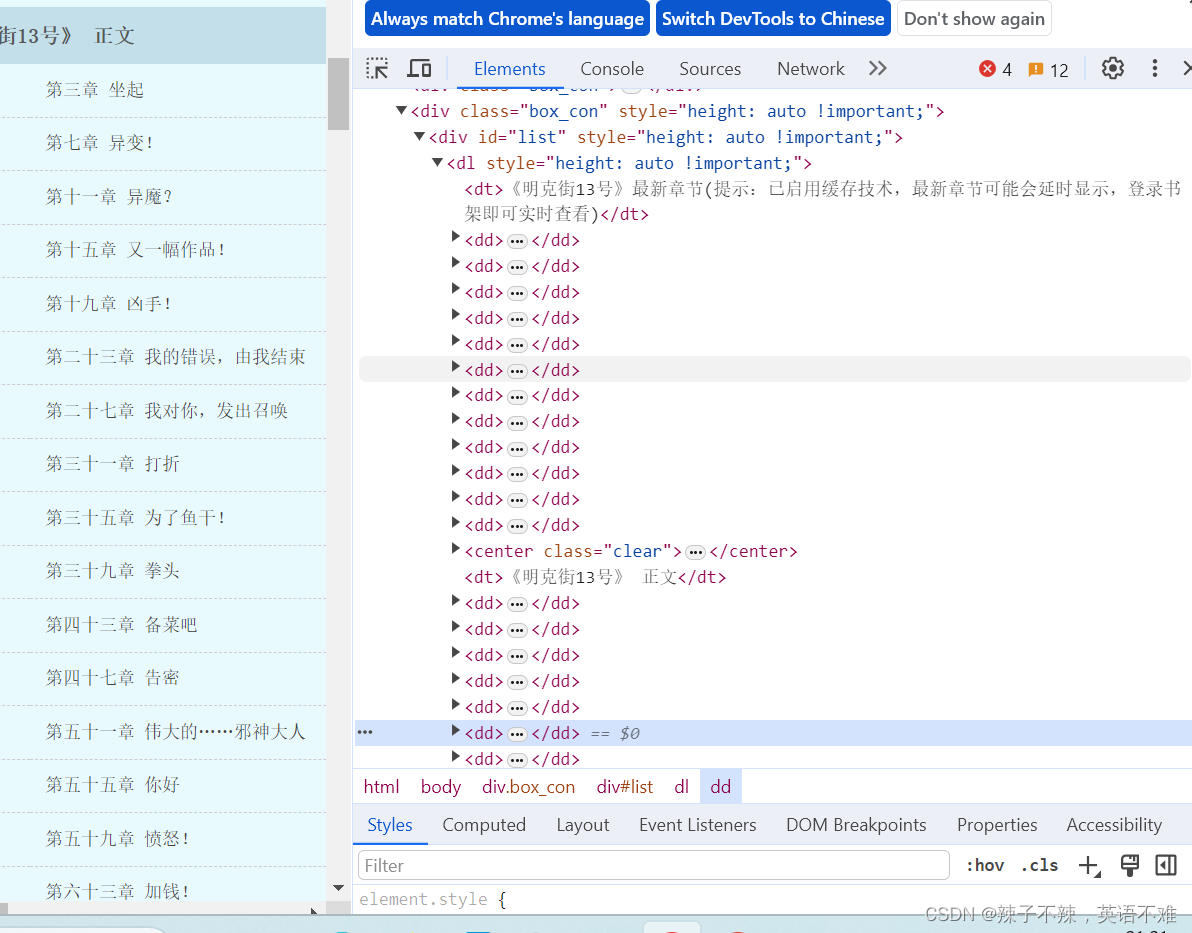

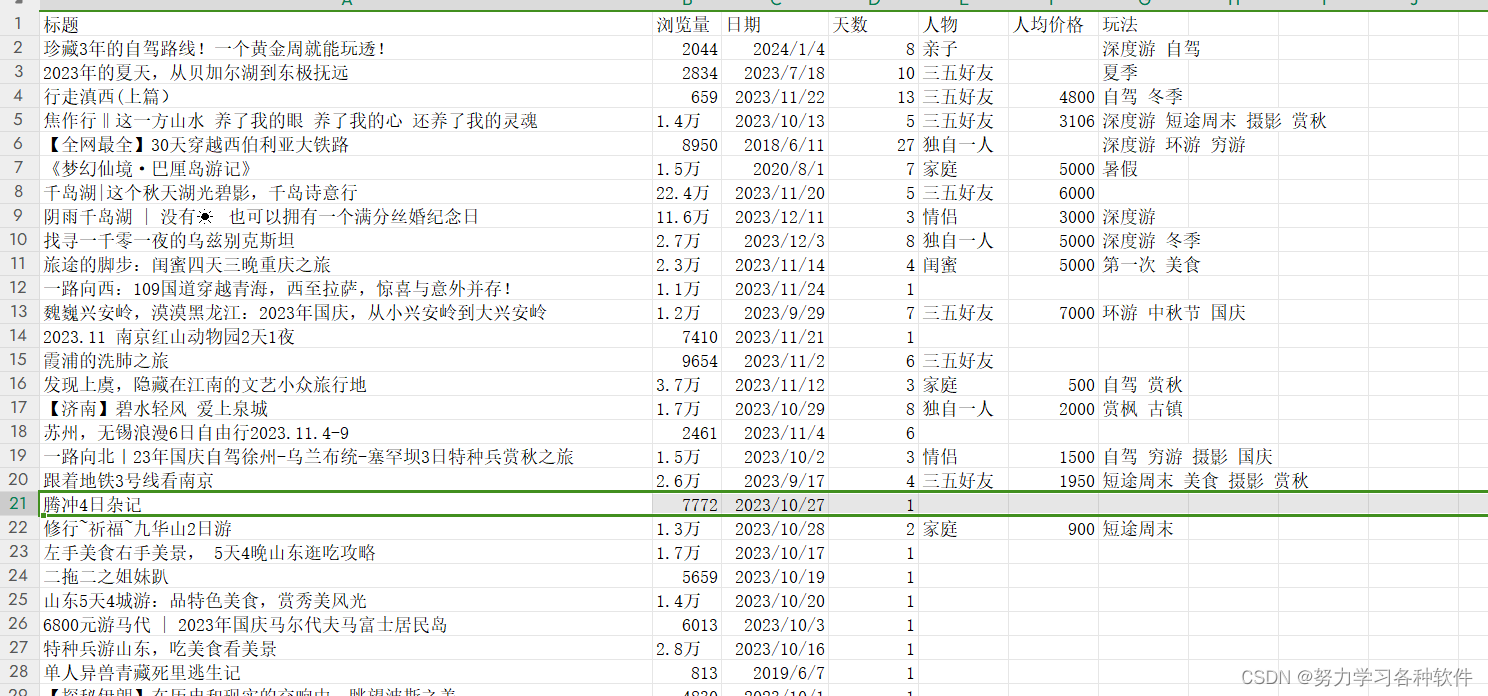

Crawl the "My Bookshelf" page of 17K小说网 (17k.com) and store each book's category, title, latest chapter, and author in a MySQL database.

2. Analysis

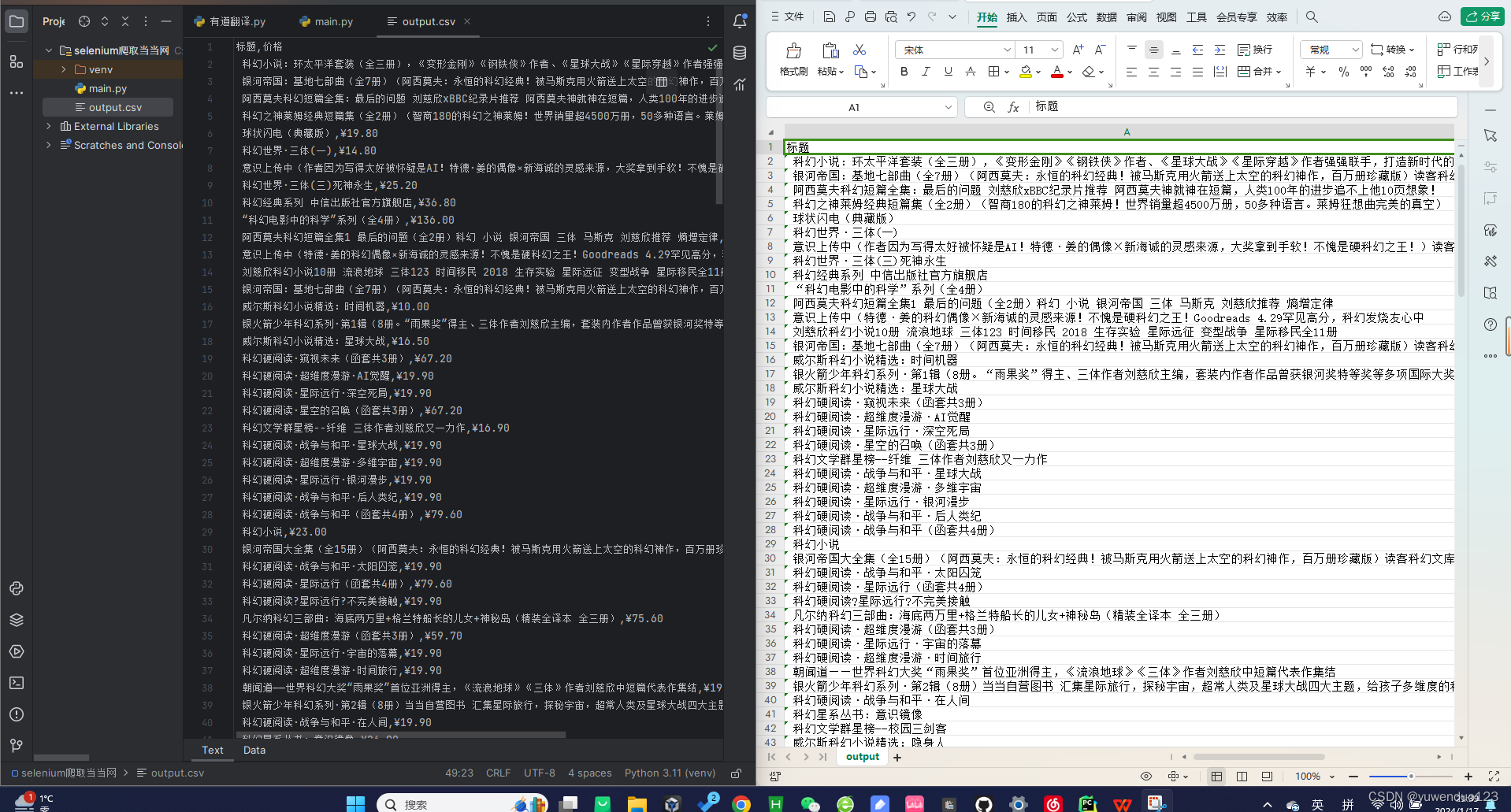

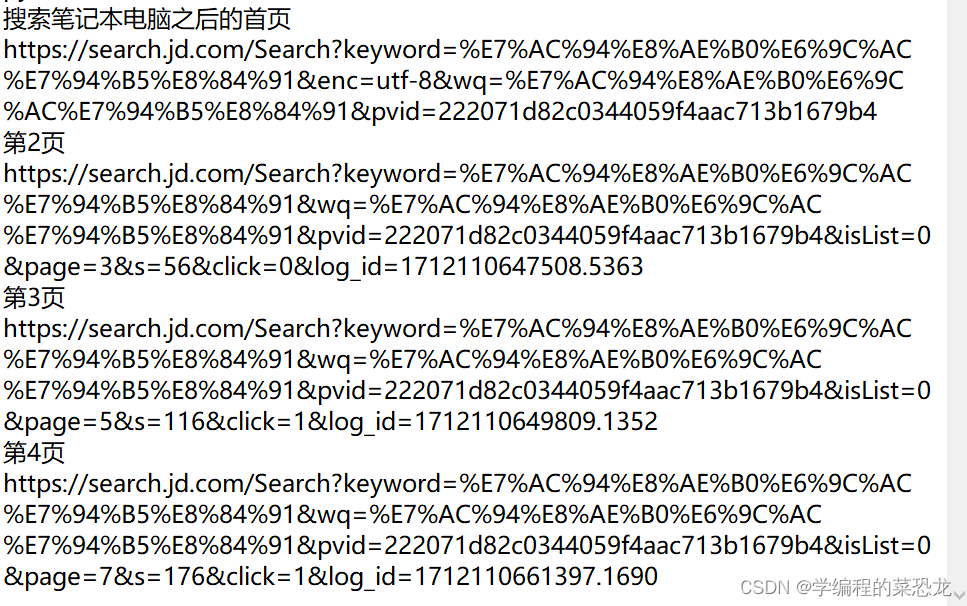

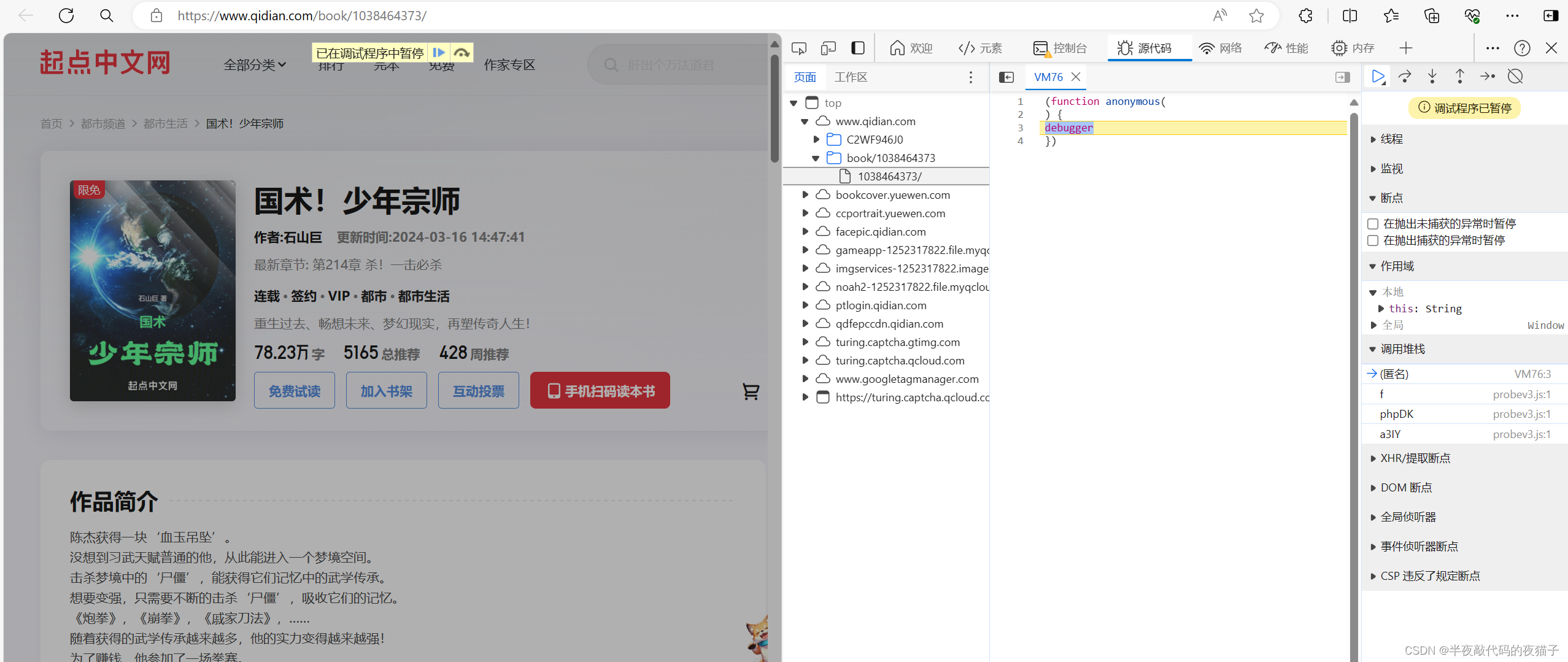

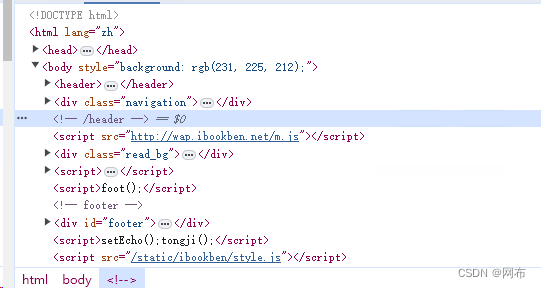

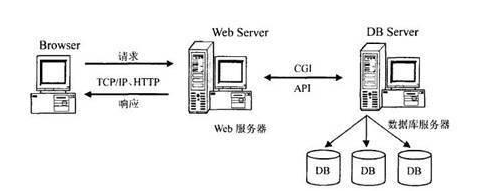

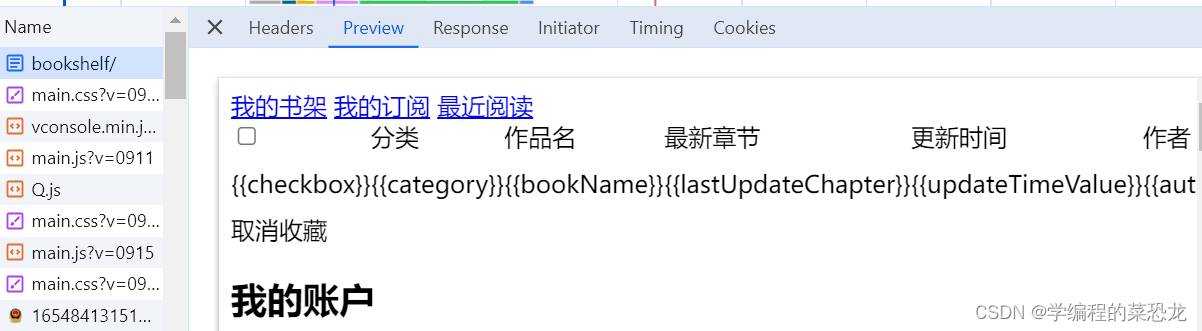

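The page can only be opened after logging in, so Cookies and a Session will be needed. After logging in, right-click and choose Inspect --> click Network, then press Ctrl+R to reload the page and capture the requests (if the page was already reloaded, click the red button at the top twice). Open the request shown below: the bookshelf information does not appear in it. (The browser used here is Google Chrome.)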
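Since the bookshelf is only visible after login, one way to carry the login state is a `requests.Session` that attaches the same cookies to every request. The following is a minimal sketch under assumptions, not the code used later in this post: `build_session` is a hypothetical helper, and the cookie value is a placeholder rather than a real token. The request is only prepared, never sent, so the sketch runs without network access.

```python
import requests


def build_session(cookies, user_agent):
    """Return a Session that sends the given cookies and User-Agent on every request."""
    session = requests.Session()
    session.headers.update({"User-Agent": user_agent})
    # Cookies stored on the session are attached automatically to matching requests.
    for name, value in cookies.items():
        session.cookies.set(name, value, domain="user.17k.com")
    return session


# Placeholder value: replace it with the cookie copied from the browser.
session = build_session({"accessToken": "PLACEHOLDER"}, "Mozilla/5.0")

# Prepare (but do not send) a request to inspect the headers that would be sent.
request = requests.Request(
    "GET", "https://user.17k.com/ck/author2/shelf?page=1&appKey=2406394919"
)
prepared = session.prepare_request(request)
print(prepared.headers.get("Cookie"))
```

Calling `session.get(url)` instead of preparing the request by hand would send it with the same cookies and headers.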

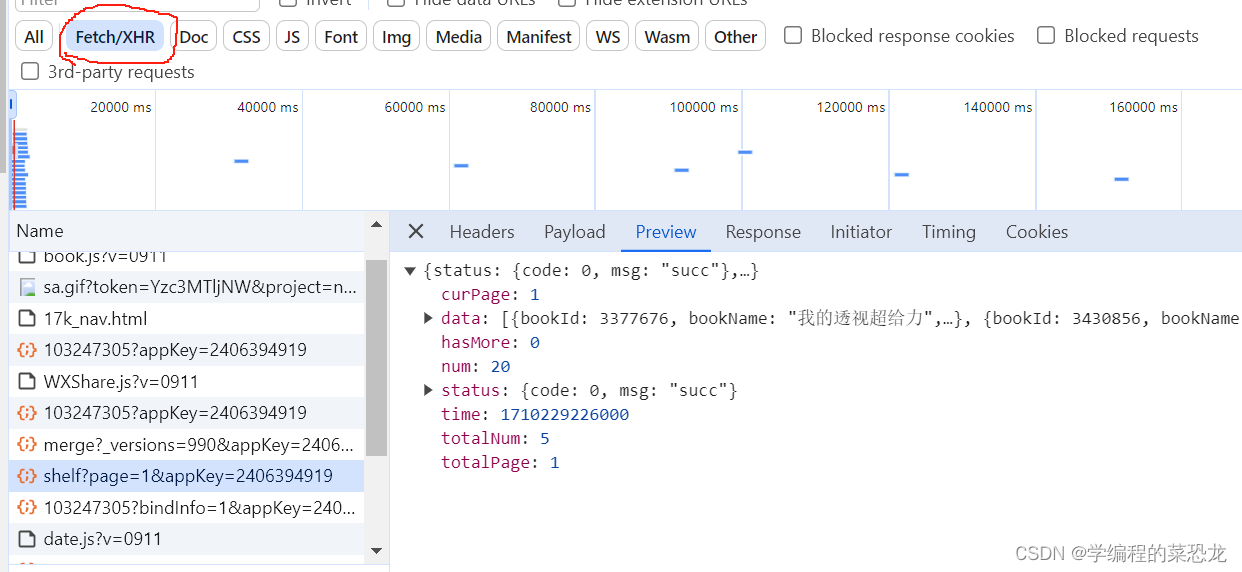

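At this point, consider Ajax data first: click the item marked in red below and look through the requests until you find the one that carries the data. For background on Ajax, see the CSDN blog post "5.2 Ajax 数据爬取实战".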

Once the structure of the data in the response is understood, the crawling can begin.
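To make the response analysis concrete, here is a small sketch that flattens one page of the JSON into one flat record per book. The field names are the ones used by the crawler code later in this post; the sample payload is invented for illustration and is not real data from the site, and `extract_books` is a hypothetical helper.

```python
import json

# Invented sample with the same shape the crawler code in this post relies on.
SAMPLE_RESPONSE = """
{
  "data": [
    {
      "bookName": "Example Book",
      "authorPenName": "Example Author",
      "bookCategory": {"name": "Fantasy"},
      "lastUpdateChapter": {"name": "Chapter 10"}
    }
  ]
}
"""


def extract_books(payload):
    """Flatten the nested JSON into one flat dict per book."""
    books = []
    for item in payload.get("data", []):
        books.append({
            "bookName": item["bookName"],
            "authorPenName": item["authorPenName"],
            # Category and latest chapter are nested objects; keep only their names.
            "bookCategory": item["bookCategory"]["name"],
            "lastUpdateChapter": item["lastUpdateChapter"]["name"],
        })
    return books


books = extract_books(json.loads(SAMPLE_RESPONSE))
print(books)
```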

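Because the crawl happens after login, the request needs headers, cookies, and so on. These can be copied directly from the Headers tab of the request shown in the figure above.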
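The Cookie value copied from the Headers tab is one long `name=value; name=value` string. It can be sent unchanged in the `Cookie` header, or split into a dict for the `cookies=` argument of `requests.get`. Below is a minimal sketch of the split; `parse_cookie_header` is a hypothetical helper name, and the `token` entry in the sample string is made up.

```python
def parse_cookie_header(raw):
    """Split a browser 'Cookie' header value into a {name: value} dict."""
    cookies = {}
    for part in raw.split(";"):
        part = part.strip()
        if not part:
            continue
        # Split on the first '=' only, because values may themselves contain '='.
        name, _, value = part.partition("=")
        cookies[name] = value
    return cookies


raw_cookie = "c_channel=0; c_csc=web; token=abc=="
cookies = parse_cookie_header(raw_cookie)
print(cookies)
```

The resulting dict could then be passed as `requests.get(url, headers=headers, cookies=cookies)`, with the `Cookie` entry left out of `headers`.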

3. Code

import requests
import pymysql

def create_connection():  # create the database (schema)
    conn = pymysql.connect(host='localhost', user='root',
                           passwd='1141477238', port=3306)
    cursor = conn.cursor()
    sql = "create database if not exists train default character set utf8mb4"
    cursor.execute(sql)
    conn.commit()
    cursor.close()
    conn.close()

def creat_table():  # create the table
    conn = pymysql.connect(host='localhost', user='root',
                           passwd='1141477238', port=3306, db='train')
    cursor = conn.cursor()
    sql = ('create table if not exists 7ktrain(id INT AUTO_INCREMENT PRIMARY KEY,'
           'bookName VARCHAR(100),'
           'authorPenName VARCHAR(100),'
           'bookCategory VARCHAR(100),'
           'lastUpdateChapter TEXT)')
    cursor.execute(sql)
    cursor.close()
    conn.close()

def insert_data(data_dict):  # insert one row into the database
    conn = pymysql.connect(host='localhost', user='root',
                           passwd='1141477238', port=3306, db='train')
    cursor = conn.cursor()
    # column names come from the dict keys; values are bound as parameters
    keys = ','.join(data_dict.keys())
    values = ','.join(['%s'] * len(data_dict))
    sql = f'INSERT INTO 7ktrain({keys}) VALUES ({values})'
    cursor.execute(sql, tuple(data_dict.values()))
    conn.commit()
    cursor.close()
    conn.close()

def crawl():  # crawl the data
    url = 'https://user.17k.com/ck/author2/shelf?page=1&appKey=2406394919'
    headers = {
        "Accept": "*/*",
        # br and zstd responses can only be decoded if the optional brotli / zstandard packages are installed
        "Accept-Encoding": "gzip, deflate, br, zstd",
        "Accept-Language": "zh-CN,zh;q=0.9",
        "Connection": "keep-alive",
        # one string literal per cookie; Python joins adjacent literals into a single value
        "Cookie": (
            "GUID=9802c882-bf61-4b1b-976e-251e41179b2b; "
            "Hm_lvt_9793f42b498361373512340937deb2a0=1710160603,1710163379,1710227157; "
            "c_channel=0; "
            "c_csc=web; "
            "accessToken=nickname%3D%25E6%25AC%25A2%25E5%2598%25BB%25E5%2598%25BB%26avatarUrl%3Dhttps%253A%252F%252Fcdn.static.17k.com%252Fuser%252Favatar%252F05%252F05%252F73%252F103247305.jpg-88x88%253Fv%253D1710227281000%26id%3D103247305%26e%3D1725779751%26s%3D253acd6a39fc074d; "
            "sensorsdata2015jssdkcross=%7B%22distinct_id%22%3A%22103247305%22%2C%22%24device_id%22%3A%2218e2d83e716753-0cfefcab8777bf-26001b51-921600-18e2d83e7175f3%22%2C%22props%22%3A%7B%22%24latest_traffic_source_type%22%3A%22%E7%9B%B4%E6%8E%A5%E6%B5%81%E9%87%8F%22%2C%22%24latest_referrer%22%3A%22%22%2C%22%24latest_referrer_host%22%3A%22%22%2C%22%24latest_search_keyword%22%3A%22%E6%9C%AA%E5%8F%96%E5%88%B0%E5%80%BC_%E7%9B%B4%E6%8E%A5%E6%89%93%E5%BC%80%22%7D%2C%22first_id%22%3A%229802c882-bf61-4b1b-976e-251e41179b2b%22%7D; "
            "acw_sc__v2=65f0060446cd22255a80bd085b0d8aca6b981e32; "
            "ssxmod_itna=YqfxnD9iDQ0=eqBpxeTm9AL2AOBzDuexGuRPDsWerDSxGKidDqxBWWjhOn0KqOqWQKrOGoW=SoC24Wo8frcodDU4i8DCdnGbrDeW=D5xGoDPxDeDADYojDAqiOD7qDdvk5Hz8DbxYpuDWPDYxDrvPKDRxi7DDydFx07DQyOYnw8dDDzQ2exDmqKVrAaY45mUP4hWi2OD7vmDlPx0U0OQKMjd75hEU8sAeKDXPQDvo5CyhPmDB+8B=Hsl7D3q7iev7WeeAbYWi+vKjiYY7Z5NQ0Bzbiqre+vFbG4Km0P6sZcDDaqzzNP4D===; "
            "ssxmod_itna2=YqfxnD9iDQ0=eqBpxeTm9AL2AOBzDuexGu4ikvWWqdDlrr8Dj4Mi=MdglRC+kwOD8hRjFUYQjYKHDROB=3YC6T7nK0SrjKoHCKIm4xXPPbdIH84qNUqB87q1TBtIsW+DUdqhWFs8YornTQOK4a=0baYqP=gbzeeeTWiq4goPQMCAzqmHThhaduGisQo3xVxq4xcgQ=eR4rS+iNrW17OIbb+pF7WuW8DaEDFPfSPbsIxfHgO900mCYvhaOsa7RLAvio59WkLm97LZ=ZGgFpwdgVtkOdptBPfzllj/gwqVMkhljf5bHZtfxB+znqE=d8WtX6q4hLSePXPjKE=E7muD2kDLo+2dhLGOGppdqiAEnqe005cjkne3cDxbecY56EK3ljCren=dx246PmHFX8gL4cj7A=1Ud/hvS=58f56mfOt=fAmLDwgY3Q2n62rRQme0o67GeSP6Y=Zin61pTDG2zxDFqD+6DxD=; "
            "tfstk=eo6XQPZ7Gq0jYHGmPrZPAIKMSbv_fZwe5ctOxGHqXKpxWVIpzn3AuGL15a8l5CqD7FL17GC44JyFmivMBNaULJ-YRtn38bJT61jDIdUyzJlQuibEX1gdYLgtdYeGczMqnAvYNy4KlH7OFnO8aiL-oBBJcITdDTHtBntXGeIvPgyrLe_25fiWtAtW8uZSsfmp6Y7OPX6C0IKkqDr7VXlMM3xWyuZSs4Ovq3qYVuGHA; "
            "Hm_lpvt_9793f42b498361373512340937deb2a0=1710229226"
        ),
        "Host": "user.17k.com",
        "Referer": "https://user.17k.com/www/bookshelf/",
        "Sec-Ch-Ua": "\"Chromium\";v=\"122\", \"Not(A:Brand\";v=\"24\", \"Google Chrome\";v=\"122\"",
        "Sec-Ch-Ua-Mobile": "?0",
        "Sec-Ch-Ua-Platform": "\"Windows\"",
        "Sec-Fetch-Dest": "empty",
        "Sec-Fetch-Mode": "cors",
        "Sec-Fetch-Site": "same-origin",
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36"
    }
    response = requests.get(url, headers=headers)
    response.raise_for_status()  # stop early on an HTTP error instead of parsing an error page
    content = response.json()
    data = content['data']
    for item in data:
        # build a fresh dict per book so rows never share state
        book_dict = {
            'bookName': item['bookName'],
            'authorPenName': item['authorPenName'],
            'bookCategory': item['bookCategory']['name'],
            'lastUpdateChapter': item['lastUpdateChapter']['name'],
        }
        insert_data(book_dict)

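create_connection()  # create the database
creat_table()  # create the table
crawl()  # crawl the bookshelf and store each book

For database-related background, see the CSDN blog post "4.4 MySQL存储".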
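`insert_data` above opens a new connection for every book. One possible alternative is to build the statement once and hand all rows to pymysql's `cursor.executemany` over a single connection. The sketch below only builds the SQL string and the parameter rows, so it runs without a database; `build_insert` is a hypothetical helper and the sample row is invented.

```python
COLUMNS = ("bookName", "authorPenName", "bookCategory", "lastUpdateChapter")


def build_insert(table, rows, columns=COLUMNS):
    """Return (sql, params) suitable for cursor.executemany(sql, params)."""
    placeholders = ",".join(["%s"] * len(columns))
    sql = f"INSERT INTO {table}({','.join(columns)}) VALUES ({placeholders})"
    # One tuple per row, in the same column order as the statement.
    params = [tuple(row[col] for col in columns) for row in rows]
    return sql, params


# Invented sample row, not real bookshelf data.
rows = [
    {
        "bookName": "Example Book",
        "authorPenName": "Example Author",
        "bookCategory": "Fantasy",
        "lastUpdateChapter": "Chapter 10",
    },
]
sql, params = build_insert("7ktrain", rows)
print(sql)

# With an open pymysql connection `conn`, the batch could be written as:
#     with conn.cursor() as cursor:
#         cursor.executemany(sql, params)
#     conn.commit()
```

The column list is fixed in code rather than taken from the crawled data, so only the values travel as bound parameters.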

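That is the end of this article. I am a beginner, so corrections are welcome if you spot mistakes, and discussion is welcome if you have questions. If the article was useful to you, a like would be encouraging. Thanks, everyone, and let's keep at it together!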