perming

The author's GitHub account is linjing-lab, and the PyPI account is DeeGLMath.

perming: Perceptron Models Are Training on Windows Platform with Default GPU Acceleration.

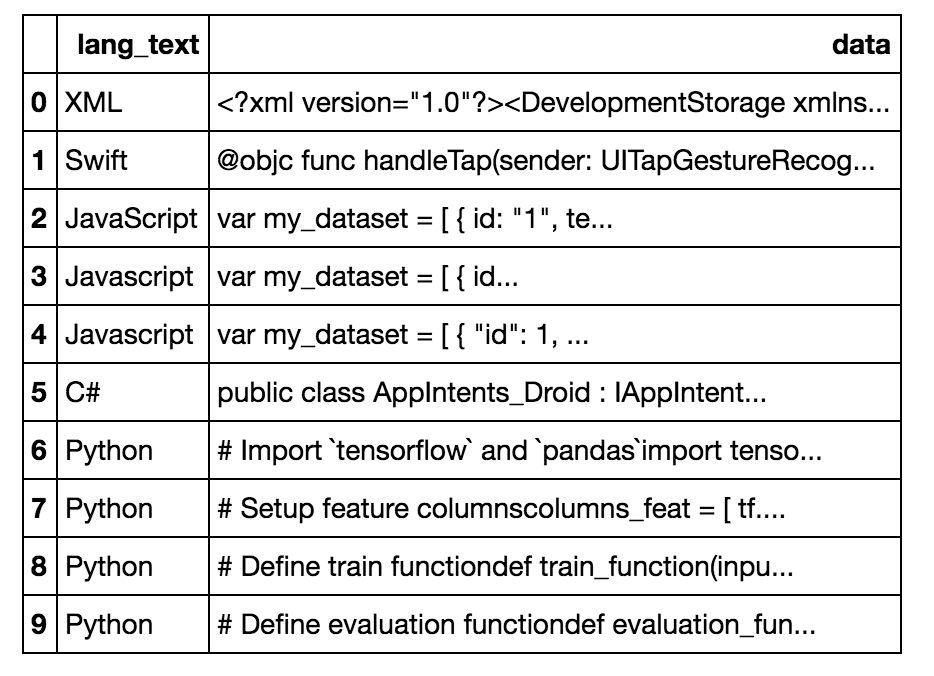

- p: use polars or pandas to read the dataset.

- per: the perceptron algorithm is used as the base model.

- m: models include Box, Regressier, Binarier, Mutipler and Ranker.

- ing: training on the Windows platform with strong GPU acceleration.

init backend

Refer to https://pytorch.org/get-started/locally/ and choose a PyTorch build with CUDA support that is compatible with your Windows setup.

Tested with: PyTorch 1.7.1+cu101
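Because device defaults to 'cuda', it can help to confirm that the installed PyTorch build actually sees a GPU before building a model. The helper below is a minimal sketch: pick_device is a hypothetical name, not part of perming, and only torch.cuda.is_available() comes from PyTorch itself. It falls back to 'cpu' when PyTorch is missing or reports no CUDA device.

```python
import importlib.util


def pick_device(preferred: str = 'cuda') -> str:
    """Hypothetical helper: return 'cuda' only if PyTorch is installed and sees a GPU."""
    # Respect an explicit CPU request, and avoid importing torch if it is absent.
    if preferred == 'cpu' or importlib.util.find_spec('torch') is None:
        return 'cpu'
    import torch
    # True only when this PyTorch build has CUDA support and a usable device.
    return 'cuda' if torch.cuda.is_available() else 'cpu'


print(pick_device())
```

The returned string can then be passed as the device argument described under parameters.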

advice

- If users want to avoid CUDA out-of-memory errors raised from joblib.parallel, the best solution is to install v1.9.2 or a version earlier than v1.6.1.

- If users do not plan to retrain the full network when tuning a model, the best solution is to install a version after v1.8.0, which supports set_freeze.

- If users are not running experiments in Jupyter, installing a version after v1.7.* will speed up the train_val process and reduce redundancy.
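Since each tip above depends on which release is installed, the installed version can be read with the Python standard library, and a specific release can be pinned with pip (for example, pip install perming==1.9.2). The sketch below uses importlib.metadata; installed_version is a hypothetical helper name, not part of perming.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package: str = 'perming') -> str:
    """Hypothetical helper: installed version string of a package, or 'not installed'."""
    try:
        return version(package)
    except PackageNotFoundError:
        return 'not installed'


print('perming', installed_version())
```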

parameters

init:

- input_: int, number of feature dimensions of the tabular dataset after it has been extracted, transformed, and loaded from any data source.

- num_classes: int, number of classes or outputs, determined by the type of task the user defines for the output layer.

- hidden_layer_sizes: Tuple[int]=(100,), number and sizes of the hidden layers, used to enhance model representation.

- device: str='cuda', device used for training and validation, passed as a torch.device option: 'cuda' or 'cpu'.

- activation: str='relu', activation function suited to the learning task. See _activate in the open models.

- inplace_on: bool=False, whether to enable inplace=True on the activation function: False or True. (Set manually in Box.)

- criterion: str='CrossEntropyLoss', loss criterion compatible with the output of the learning task. See _criterion in the open models.

- solver: str='adam', inner optimizer that serves as the solver for the learning task. See _solver in _utils/BaseModel.

- batch_size: int=32, batch size used when iterating over the loaded dataset in each training epoch. Any int value > 0 (powers of 2 preferred).

- learning_rate_init: float=1e-2, initial learning rate passed to the solver, checked by an internal assertion to lie in (1e-6, 1.0).

- lr_scheduler: Optional[str]=None, learning-rate decay scheduler for compatible use. See _scheduler in _utils/BaseModel.
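To make the init parameters concrete, the sketch below shows how input_, hidden_layer_sizes and num_classes would chain into the (in_features, out_features) shapes of a plain multilayer perceptron, and mirrors the documented value constraints as assertions. Both helpers are hypothetical: they assume a plain stack of linear layers and read (1e-6, 1.0) as an open interval, and they are not perming's actual source.

```python
from typing import List, Tuple


def layer_shapes(input_: int, num_classes: int,
                 hidden_layer_sizes: Tuple[int, ...] = (100,)) -> List[Tuple[int, int]]:
    """Hypothetical: (in_features, out_features) of each linear layer in a plain MLP."""
    sizes = [input_, *hidden_layer_sizes, num_classes]
    # Each layer maps the previous width to the next one.
    return list(zip(sizes[:-1], sizes[1:]))


def check_init(batch_size: int = 32, learning_rate_init: float = 1e-2,
               device: str = 'cuda') -> None:
    """Hypothetical: mirror the documented constraints; raises AssertionError on failure."""
    assert batch_size > 0, 'batch_size must be > 0'
    assert 1e-6 < learning_rate_init < 1.0, 'learning_rate_init must lie in (1e-6, 1.0)'
    assert device in ('cuda', 'cpu'), "device must be 'cuda' or 'cpu'"


print(layer_shapes(8, 29, (60,)))
check_init(batch_size=256, learning_rate_init=0.01)
```

Under this assumption, input_=8, num_classes=29 and hidden_layer_sizes=(60,) give one 8-to-60 layer followed by a 60-to-29 output layer.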

data_loader:

- features: TabularData, provided manually by users.

- target: TabularData, provided manually by users.

- ratio_set: Dict[str, int]={'train': 8, 'test': 1, 'val': 1}, defined by users.

- worker_set: Dict[str, int]={'train': 8, 'test': 2, 'val': 1}, set manually according to users' needs.

- random_seed: Optional[int]=None, set manually to any int value to fix the random sequence.
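The ratio_set weights are relative, so {'train': 8, 'test': 1, 'val': 1} splits the rows 80/10/10. The sketch below shows one way such a split with a fixed random_seed could work. split_indices is a hypothetical helper; its rounding rule (floor the test and val sizes, give the remainder to train) is an assumption and may differ from what perming does internally.

```python
import random
from typing import Dict, List, Optional


def split_indices(n: int,
                  ratio_set: Optional[Dict[str, int]] = None,
                  random_seed: Optional[int] = None) -> Dict[str, List[int]]:
    """Hypothetical: shuffle n row indices and cut them by integer ratios.

    Assumes ratio_set has exactly the keys 'train', 'test' and 'val'.
    """
    if ratio_set is None:
        ratio_set = {'train': 8, 'test': 1, 'val': 1}
    total = sum(ratio_set.values())
    # Floor the 'test' and 'val' sizes; 'train' absorbs any remainder.
    sizes = {k: n * v // total for k, v in ratio_set.items() if k != 'train'}
    sizes['train'] = n - sum(sizes.values())
    indices = list(range(n))
    random.Random(random_seed).shuffle(indices)  # same seed -> same order
    splits, start = {}, 0
    for key in ('train', 'test', 'val'):
        splits[key] = indices[start:start + sizes[key]]
        start += sizes[key]
    return splits


parts = split_indices(1000, random_seed=0)
print({k: len(v) for k, v in parts.items()})
```

Passing the same random_seed reproduces the same split, while None draws a fresh shuffle each call.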

set_freeze:

- require_grad: Dict[int, bool], manually set frozen layers by their index in self.model. (If users set require_grad to {0: False}, the first layer of self.model is frozen.)
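In PyTorch, an nn.Sequential can be indexed like a list, and each module's parameters() yields tensors with a requires_grad flag, so freezing layers by index can be sketched as below. This is an assumption about how require_grad is applied, not perming's source; apply_freeze is a hypothetical name. The helper relies only on that duck-typed interface, so it does not import torch itself.

```python
from typing import Dict, Sequence


def apply_freeze(layers: Sequence, require_grad: Dict[int, bool]) -> None:
    """Hypothetical: set requires_grad on every parameter of the indexed layers.

    layers is anything indexable whose items expose parameters(),
    such as a torch.nn.Sequential.
    """
    for index, flag in require_grad.items():
        for param in layers[index].parameters():
            # False excludes this tensor from gradient computation.
            param.requires_grad = flag
```

For example, calling apply_freeze on torch.nn.Sequential(torch.nn.Linear(8, 60), torch.nn.ReLU(), torch.nn.Linear(60, 29)) with {0: False} would stop gradient updates to the first Linear layer. Activation modules such as nn.ReLU have no parameters, so which index counts as a trainable layer depends on how self.model is laid out.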

train_val:

- num_epochs: int=2, number of epochs in the main training loop. Any int value > 0.

- interval: int=100