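Preface

I recently found some time and plan to do a more complete source-code analysis of IJKPLAYER, recording the key implementation details as technical notes. The topics include hardware decoding and AudioTrack/OpenSL playback on Android, hardware decoding and AudioUnit playback on iOS, OpenGL rendering, and image display on both Android and iOS, among others.

This article focuses on the mediacodec hardware decoding implementation on Android. Because audio goes through the ffmpeg software decoding path, only hardware decoding of video with mediacodec is covered here.

Introduction to mediacodec

A first look at mediacodec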

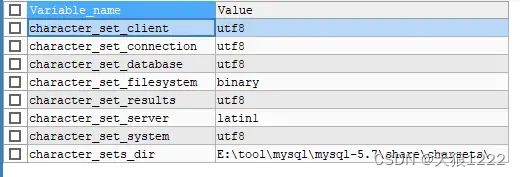

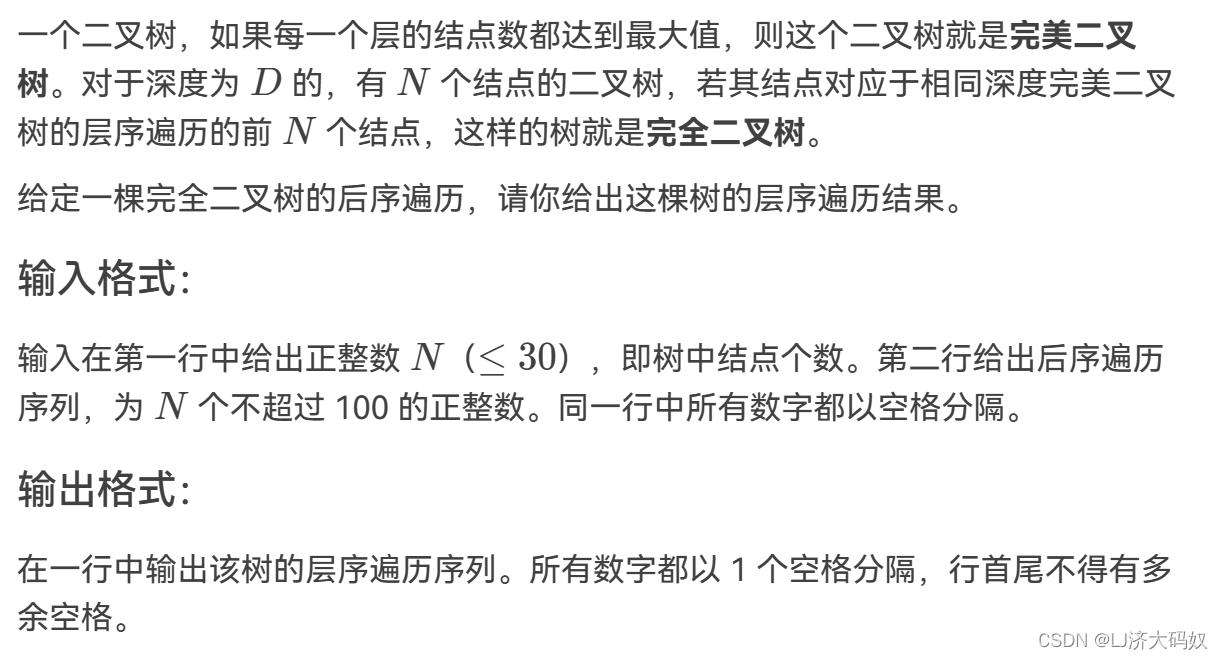

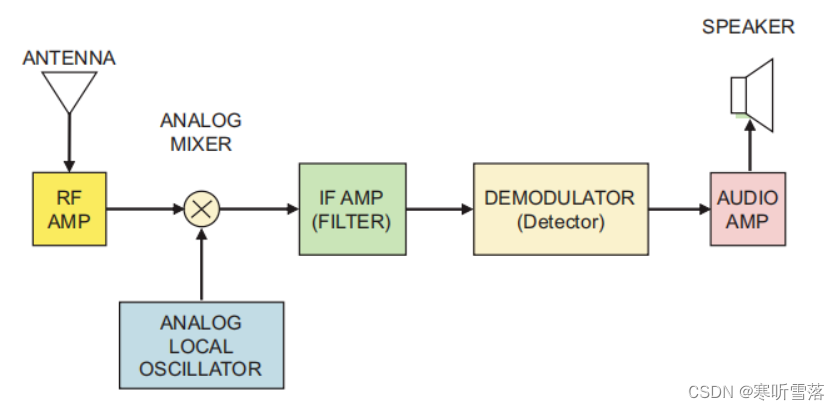

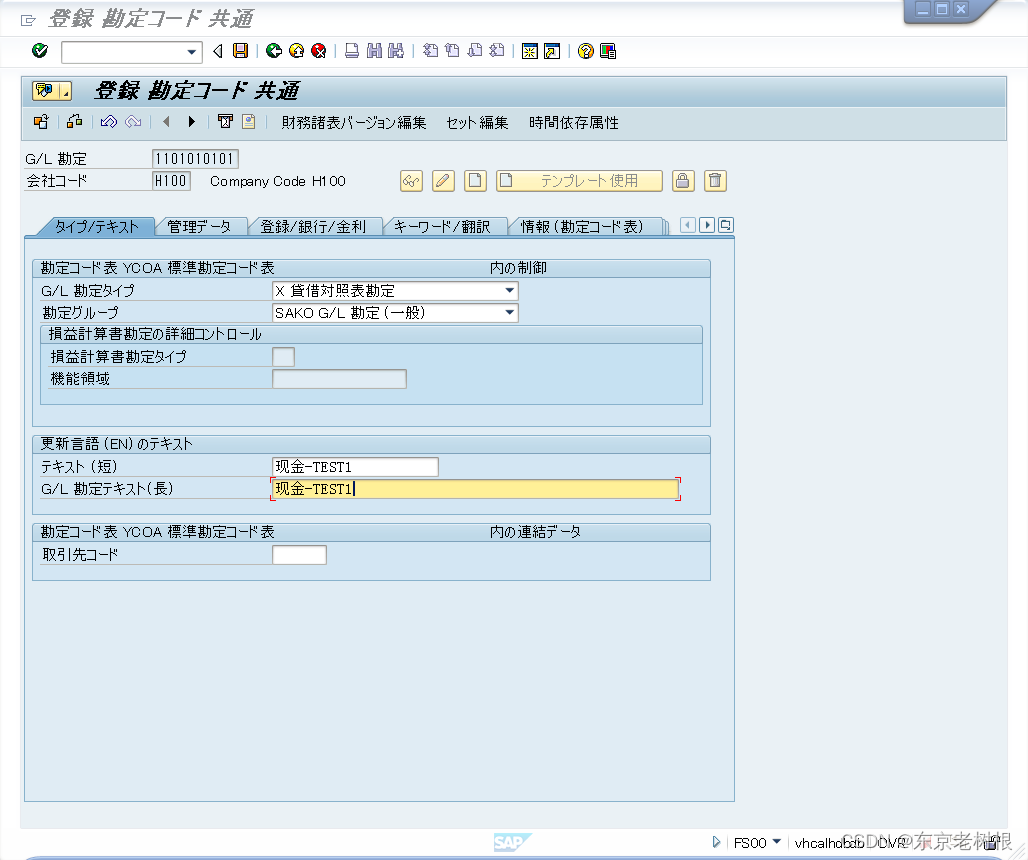

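mediacodec is Android's standard interface for hardware audio/video encoding and decoding, implemented by each hardware vendor. It is often used together with MediaExtractor/MediaSync/MediaMuxer/MediaCrypto/MediaDrm/Image/Surface/AudioTrack. See the official Google documentation for details.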

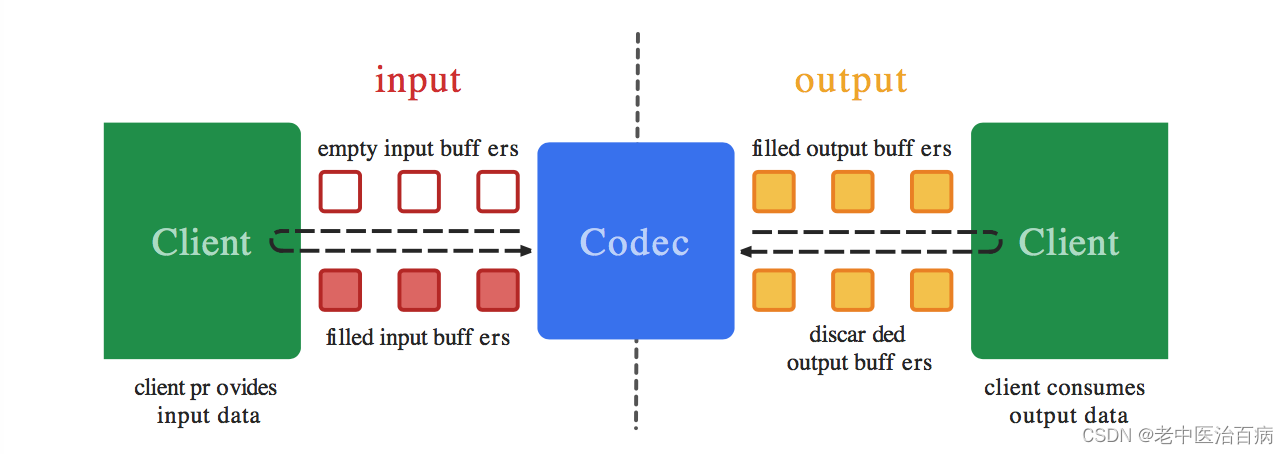

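As the figure above shows, in short, there is one input side and one output side: data submitted through an input buffer is processed asynchronously by the codec, and the result is delivered through an output buffer, which is then returned to the codec. If the input is raw video/audio data, the output is encoded video/audio data; if the input is encoded video/audio data, the output is raw video/audio data.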

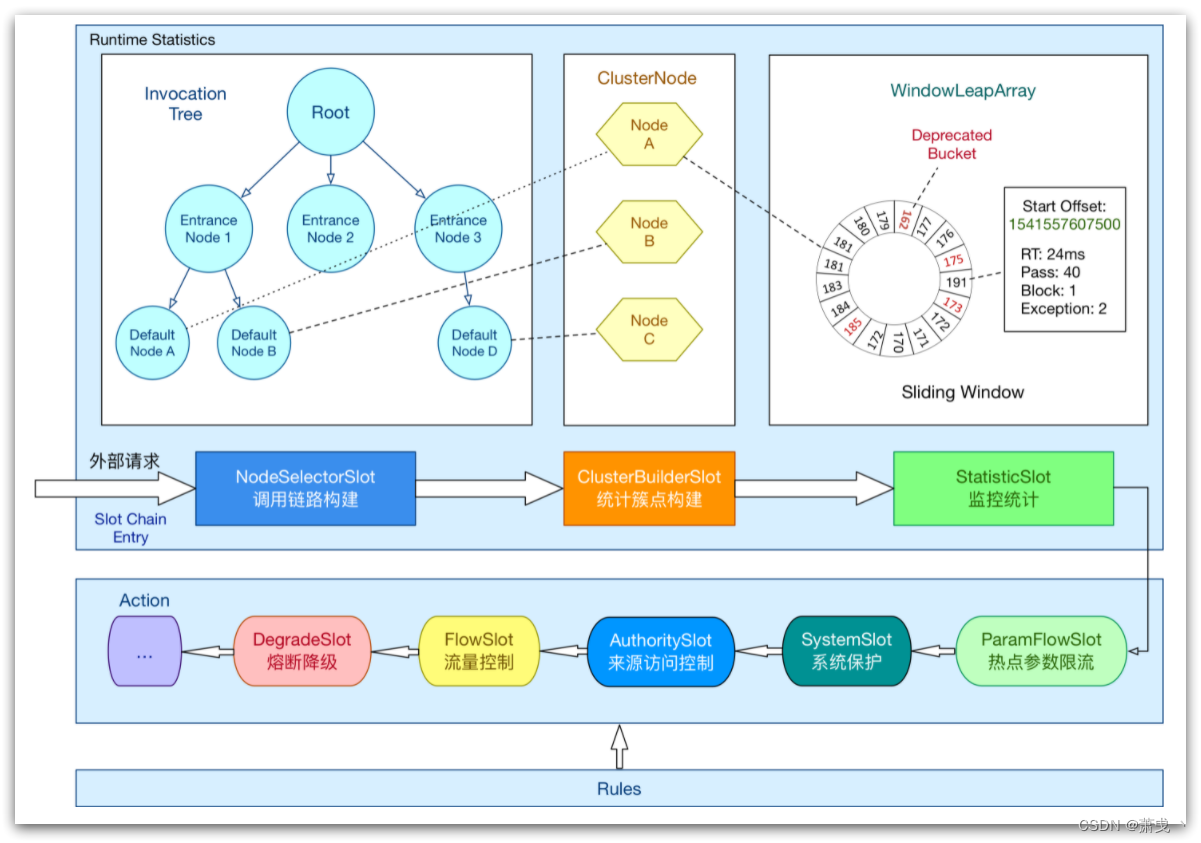

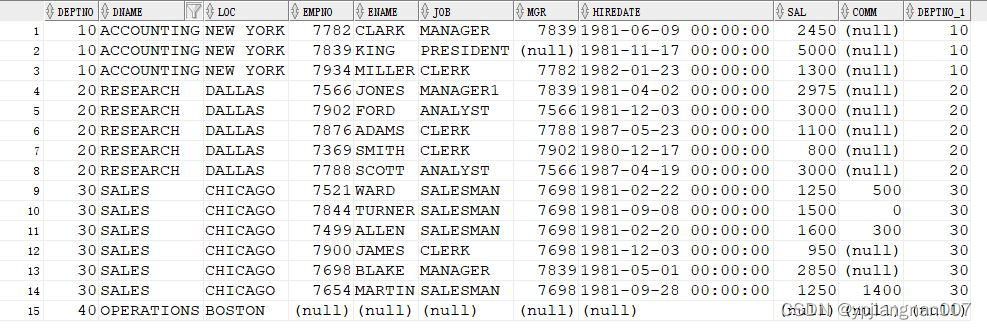

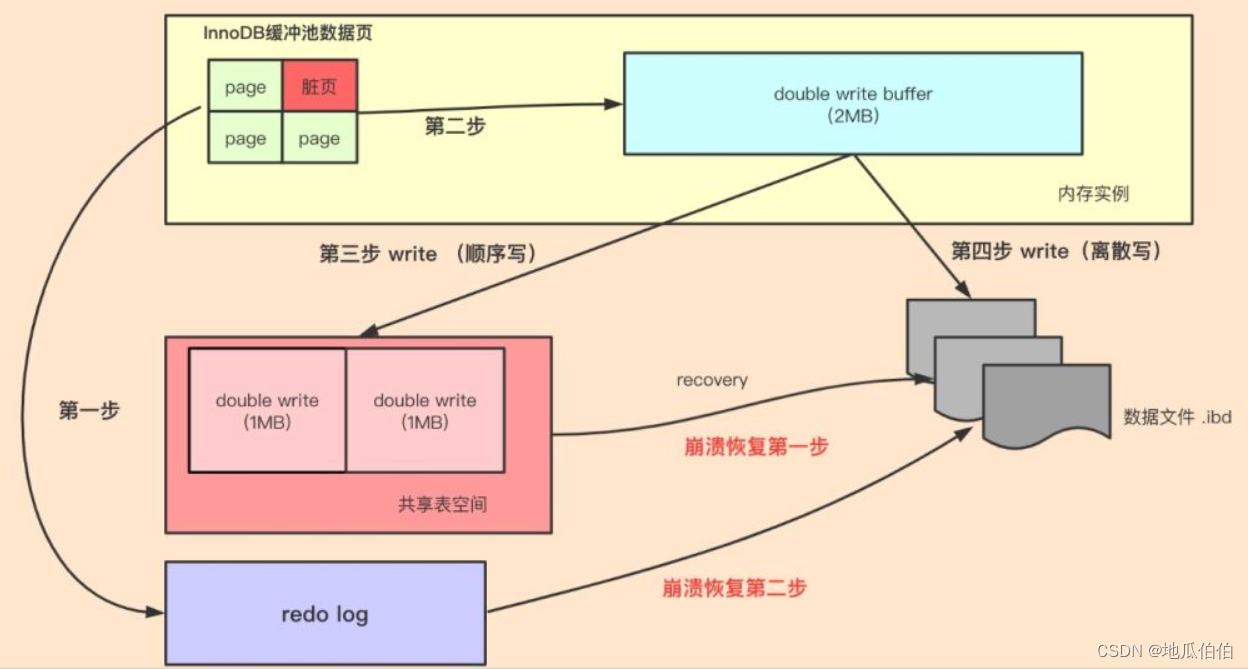

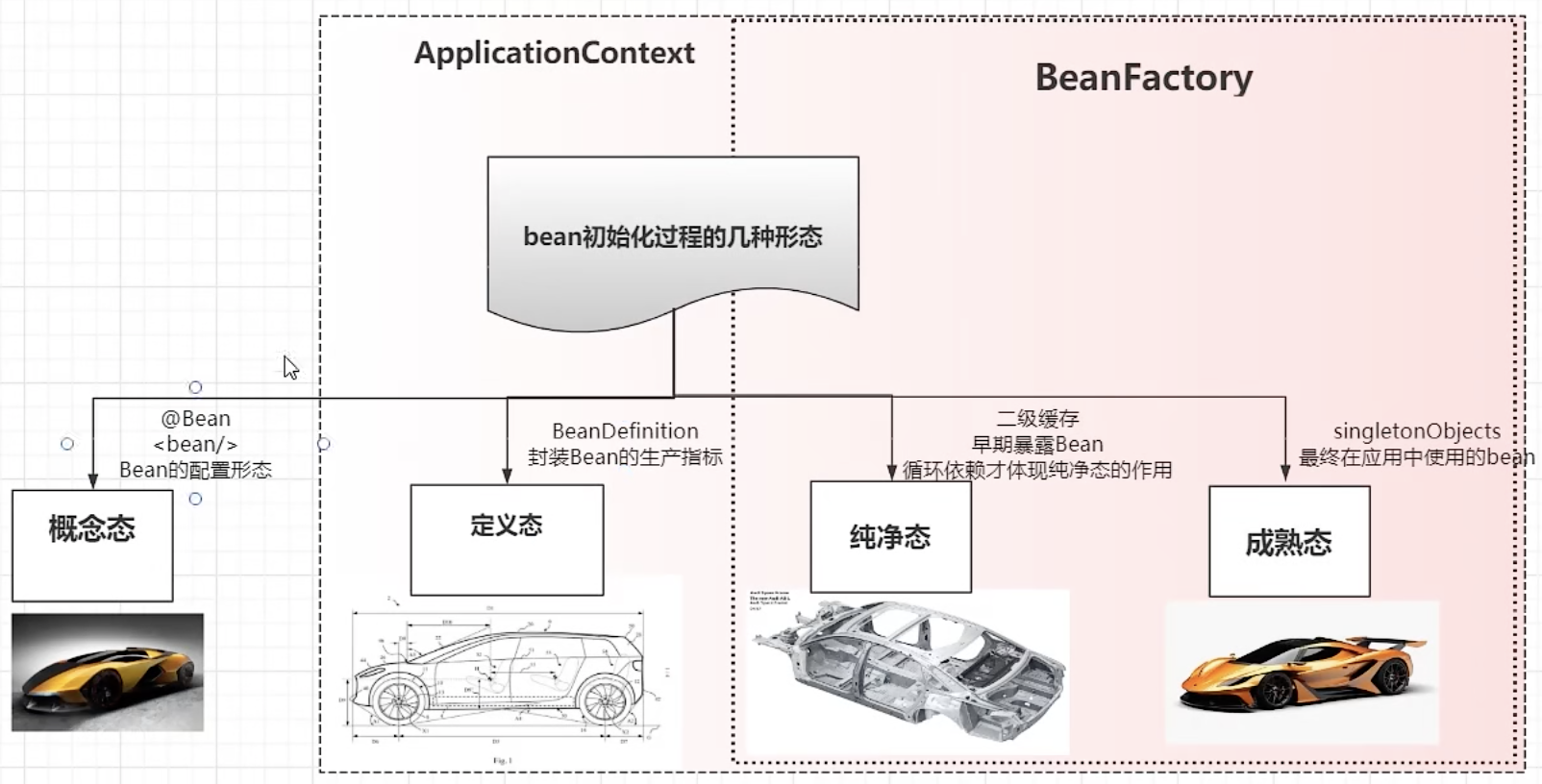

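The mediacodec state machine

As the figure above shows, the Stopped state contains three sub-states: Uninitialized/Configured/Error; Executing likewise contains three sub-states: Flushed/Running/End-of-Stream.

- mediacodec must be used according to the flow shown in the figure above, otherwise errors occur.

- Taking decoding as an example: once a mediacodec has been created as a decoder it is in the Uninitialized state; calling configure moves it to Configured, and calling start then moves it to Executing.

- On entering Executing, the codec is first in the Flushed sub-state, where it holds all of the buffers. As soon as the first input buffer is dequeued it moves to Running, so Flushed lasts only briefly. From then on, call dequeueInputBuffer and getInputBuffer to obtain an available buffer, fill it, and submit it to the decoder with queueInputBuffer; the decoder spends most of its time in Running.

- When an input buffer flagged end-of-stream is queued, the codec moves to End-of-Stream. It then accepts no further input, but the data already queued, and the end-of-stream marker itself, are still processed to completion. While in Executing, flush can be called at any time to return to Flushed.

- Calling stop returns mediacodec to Uninitialized, after which configure can be called again to begin another cycle. When the mediacodec is no longer needed, release must be called to free all of its resources.

- In some cases, for example while dequeuing a buffer index, mediacodec can fail and enter the Error state. Calling reset then returns it to Uninitialized, or release can be called to end decoding.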
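The transitions listed above can be written down as a small table-like function. The following is only a sketch of the state machine as this article describes it (synchronous mode); the `mc_state`, `mc_event`, and `mc_transition` names are hypothetical and are not part of any Android API.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical model of the states described above; not an Android API. */
typedef enum {
    MC_UNINITIALIZED, MC_CONFIGURED, MC_FLUSHED, MC_RUNNING,
    MC_END_OF_STREAM, MC_ERROR, MC_RELEASED
} mc_state;

typedef enum {
    MC_EV_CONFIGURE, MC_EV_START, MC_EV_DEQUEUE_INPUT, MC_EV_QUEUE_EOS,
    MC_EV_FLUSH, MC_EV_STOP, MC_EV_RESET, MC_EV_RELEASE, MC_EV_FAIL
} mc_event;

/* Flushed, Running and End-of-Stream are the sub-states of Executing. */
static bool mc_is_executing(mc_state s)
{
    return s == MC_FLUSHED || s == MC_RUNNING || s == MC_END_OF_STREAM;
}

/* Returns true and updates *s if the transition is legal; otherwise *s is unchanged. */
static bool mc_transition(mc_state *s, mc_event ev)
{
    assert(s != NULL);
    mc_state cur = *s;
    if (cur == MC_RELEASED)
        return false;                       /* nothing is legal after release */

    switch (ev) {
    case MC_EV_RELEASE: *s = MC_RELEASED;      return true;
    case MC_EV_RESET:   *s = MC_UNINITIALIZED; return true;
    case MC_EV_FAIL:    *s = MC_ERROR;         return true;
    case MC_EV_CONFIGURE:
        if (cur != MC_UNINITIALIZED) return false;
        *s = MC_CONFIGURED;
        return true;
    case MC_EV_START:
        if (cur != MC_CONFIGURED) return false;
        *s = MC_FLUSHED;                    /* Executing always begins in Flushed */
        return true;
    case MC_EV_DEQUEUE_INPUT:
        if (cur == MC_FLUSHED) { *s = MC_RUNNING; return true; }
        return cur == MC_RUNNING;
    case MC_EV_QUEUE_EOS:
        if (cur != MC_RUNNING) return false;
        *s = MC_END_OF_STREAM;
        return true;
    case MC_EV_FLUSH:
        if (!mc_is_executing(cur)) return false;
        *s = MC_FLUSHED;
        return true;
    case MC_EV_STOP:
        if (!mc_is_executing(cur)) return false;
        *s = MC_UNINITIALIZED;
        return true;
    }
    return false;
}
```

Calling `mc_transition()` with `MC_EV_START` on a codec that is still Uninitialized returns false, which mirrors the rule that configure must come before start.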

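input

Taking decoding as an example, the usual rules are:

- The data submitted to mediacodec through an inputBuffer should be one complete frame;

- If it is not a complete frame, the decoded picture shows mosaic artifacts or is otherwise corrupted. From API 26 onward, the flag BUFFER_FLAG_PARTIAL_FRAME can be passed to queueInputBuffer to tell mediacodec that the buffer holds a partial frame, and mediacodec assembles the parts into one complete frame before decoding;

output

- releaseOutputBuffer(codec, output_buffer_index, render) controls whether this frame is displayed, and returns the buffer at output_buffer_index to the codec;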

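Hardware decoding

What hardware decoding is

Software decoding means the CPU performs the decoding computation, with the GPU used only to accelerate video rendering. Hardware decoding means using the decoding capability of dedicated hardware on the mobile device, such as a DSP.

It should also be noted that the mediacodec hardware decoding supported by IJKPLAYER works by calling the Java-layer mediacodec from the native layer, reflection-style, through JNI.

Why not use the NDK's mediacodec? Because the NDK's hardware decoding API is only available from API 21 (Android 5.0) onward.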

Hardware decoding with the Android SDK

Getting a feel for it

To give a feel for mediacodec, here is some pseudocode (written as Java-style API calls) that roughly describes decoding and playback with the Java mediacodec:

// pseudocode: using the mediacodec api

void decodeWithMediacodec() {

// create the h26x decoder by MIME type

MediaCodec codec = MediaCodec.createDecoderByType("video/avc");

// configure

codec.configure(android_media_format, android_surface, crypto, flags);

// start

codec.start();

// dequeueInputBuffer

int input_buffer_index = codec.dequeueInputBuffer(timeout);

// write copy_size bytes of compressed data into the buffer at input_buffer_index

......

// queueInputBuffer

codec.queueInputBuffer(input_buffer_index, 0, copy_size, time_stamp, queue_flags);

// dequeueOutputBuffer

int output_buffer_index = codec.dequeueOutputBuffer(bufferInfo, timeout);

// releaseOutputBuffer

codec.releaseOutputBuffer(output_buffer_index, true);

// flush

codec.flush();

// stop / release

codec.stop(); // or codec.release();

}

Annex-B format

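Before going into the hardware decoding flow, let's look at H.26x bitstreams in Annex-B format:

- An Annex-B bitstream is a sequence of NALUs separated by the start code 0x00000001 or 0x000001; it is one way of organizing an H.26x bitstream;

- For example: | start_code | nalu header | nalu payload | start_code | nalu header | nalu payload | ......

- The data mediacodec decodes must be NALU data that carries the start_code, i.e. | start_code | nalu header | nalu payload;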
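As an illustration of the layout described above, the sketch below scans a buffer for 3-byte and 4-byte start codes and reads the H.264 nal_unit_type (the low 5 bits of the NALU header byte) that follows each one. The function names are hypothetical and are not taken from IJKPLAYER; the sketch also assumes H.264, since HEVC uses a two-byte NALU header.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Returns the length (3 or 4) of the start code at p, or 0 if there is none. */
static size_t annexb_start_code_len(const uint8_t *p, size_t remaining)
{
    if (remaining >= 4 && p[0] == 0 && p[1] == 0 && p[2] == 0 && p[3] == 1)
        return 4;
    if (remaining >= 3 && p[0] == 0 && p[1] == 0 && p[2] == 1)
        return 3;
    return 0;
}

/*
 * Walks an Annex-B buffer and records the H.264 nal_unit_type of each NALU.
 * Returns the number of NALUs found; only the first max_types are stored.
 */
static size_t annexb_list_h264_nal_types(const uint8_t *buf, size_t size,
                                         uint8_t *types, size_t max_types)
{
    assert(buf != NULL || size == 0);
    size_t count = 0;
    size_t i = 0;

    while (i < size) {
        size_t sc = annexb_start_code_len(buf + i, size - i);
        if (sc == 0) {
            i++;                    /* payload byte, keep scanning */
            continue;
        }
        i += sc;                    /* skip the start code */
        if (i < size) {
            if (count < max_types)
                types[count] = buf[i] & 0x1F;   /* NALU header byte */
            count++;
        }
    }
    return count;
}
```

For a typical key frame this reports type 7 (SPS), then 8 (PPS), then 5 (IDR slice).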

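Hardware decoding flow

The previous section used pseudocode to sketch basic use of mediacodec; now we can look at how IJKPLAYER actually uses it. In summary, the steps are:

- First, create the appropriate decoder and configure the surface and related settings;

- Call start() to start mediacodec decoding;

- Call dequeueInputBuffer() to get the index of an empty input buffer, and write the data into it;

- Submit it to mediacodec for decoding with queueInputBuffer();

- Get an output buffer index with dequeueOutputBuffer();

- Draw the video frame with releaseOutputBuffer(codec, output_buffer_index, true);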
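The buffer hand-off in these steps can be tried out without a device using a stand-in codec. The sketch below is a toy model, not MediaCodec: every `fake_*` name is hypothetical, and "decoding" simply marks the submitted slot as ready for output. Its only purpose is to show who owns a buffer at each step.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define FAKE_SLOTS     2
#define FAKE_CAP       16
#define FAKE_TRY_AGAIN (-1)

/* Ownership of one buffer slot in the toy codec. */
typedef enum { SLOT_FREE, SLOT_INPUT_OWNED, SLOT_OUTPUT_READY, SLOT_OUTPUT_OWNED } slot_state;

typedef struct {
    slot_state    state[FAKE_SLOTS];
    unsigned char data[FAKE_SLOTS][FAKE_CAP];
    size_t        size[FAKE_SLOTS];
    int           rendered;             /* frames released with render != 0 */
} fake_codec;

/* Hands a free slot to the client, or reports that none is available. */
static int fake_dequeue_input(fake_codec *c)
{
    for (int i = 0; i < FAKE_SLOTS; i++)
        if (c->state[i] == SLOT_FREE) { c->state[i] = SLOT_INPUT_OWNED; return i; }
    return FAKE_TRY_AGAIN;
}

/* Client submits n bytes; the toy codec "decodes" them immediately. */
static int fake_queue_input(fake_codec *c, int idx, const void *src, size_t n)
{
    if (idx < 0 || idx >= FAKE_SLOTS || c->state[idx] != SLOT_INPUT_OWNED || n > FAKE_CAP)
        return -1;
    memcpy(c->data[idx], src, n);
    c->size[idx] = n;
    c->state[idx] = SLOT_OUTPUT_READY;
    return 0;
}

/* Hands a decoded slot to the client, or reports that none is ready. */
static int fake_dequeue_output(fake_codec *c)
{
    for (int i = 0; i < FAKE_SLOTS; i++)
        if (c->state[i] == SLOT_OUTPUT_READY) { c->state[i] = SLOT_OUTPUT_OWNED; return i; }
    return FAKE_TRY_AGAIN;
}

/* Returns the slot to the codec; render decides whether the frame is shown. */
static int fake_release_output(fake_codec *c, int idx, int render)
{
    if (idx < 0 || idx >= FAKE_SLOTS || c->state[idx] != SLOT_OUTPUT_OWNED)
        return -1;
    if (render)
        c->rendered++;
    c->state[idx] = SLOT_FREE;
    return 0;
}

/* Runs the dequeue / queue / dequeue / release cycle; returns frames rendered so far. */
static int fake_decode_loop(fake_codec *c, int frames)
{
    assert(c != NULL);
    const unsigned char frame[4] = {0, 0, 0, 1};
    for (int n = 0; n < frames; n++) {
        int in = fake_dequeue_input(c);
        if (in == FAKE_TRY_AGAIN)
            break;
        if (fake_queue_input(c, in, frame, sizeof(frame)) != 0)
            break;
        int out = fake_dequeue_output(c);
        if (out != FAKE_TRY_AGAIN)
            fake_release_output(c, out, 1);
    }
    return c->rendered;
}
```

If the client never releases its output buffers, `fake_dequeue_input()` soon returns `FAKE_TRY_AGAIN`, which is the same starvation a real decoder shows when output buffers are not returned.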

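Once the application enables the mediacodec option, the mediacodec video decoder is created in the read_thread thread, after the video parameters have been obtained through avformat_find_stream_info():

read_thread() => stream_component_open() => ffpipeline_open_video_decoder() => func_open_video_decoder() => ffpipenode_create_video_decoder_from_android_mediacodec()

Here, depending on the options set by the application, either ffmpeg software decoding or mediacodec hardware decoding is selected: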

static IJKFF_Pipenode *func_open_video_decoder(IJKFF_Pipeline *pipeline, FFPlayer *ffp)

{

IJKFF_Pipeline_Opaque *opaque = pipeline->opaque;

IJKFF_Pipenode *node = NULL;

// Use mediacodec if the application has enabled any mediacodec option

if (ffp->mediacodec_all_videos || ffp->mediacodec_avc || ffp->mediacodec_hevc || ffp->mediacodec_mpeg2)

node = ffpipenode_create_video_decoder_from_android_mediacodec(ffp, pipeline, opaque->weak_vout);

if (!node) {

node = ffpipenode_create_video_decoder_from_ffplay(ffp);

}

return node;

}

Create the mediacodec decoder, configure the surface, and start the decoder:

IJKFF_Pipenode *ffpipenode_create_video_decoder_from_android_mediacodec(FFPlayer *ffp, IJKFF_Pipeline *pipeline, SDL_Vout *vout)

{

ALOGD("ffpipenode_create_video_decoder_from_android_mediacodec()\n");

if (SDL_Android_GetApiLevel() < IJK_API_16_JELLY_BEAN)

return NULL;

if (!ffp || !ffp->is)

return NULL;

IJKFF_Pipenode *node = ffpipenode_alloc(sizeof(IJKFF_Pipenode_Opaque));

if (!node)

return node;

VideoState *is = ffp->is;

IJKFF_Pipenode_Opaque *opaque = node->opaque;

JNIEnv *env = NULL;

int ret = 0;

jobject jsurface = NULL;

node->func_destroy = func_destroy;

if (ffp->mediacodec_sync) {

node->func_run_sync = func_run_sync_loop;

} else {

node->func_run_sync = func_run_sync;

}

node->func_flush = func_flush;

opaque->pipeline = pipeline;

opaque->ffp = ffp;

opaque->decoder = &is->viddec;

opaque->weak_vout = vout;

opaque->codecpar = avcodec_parameters_alloc();

if (!opaque->codecpar)

goto fail;

ret = avcodec_parameters_from_context(opaque->codecpar, opaque->decoder->avctx);

if (ret)

goto fail;

// Derive the profile, the level, and the mime_type the Java layer needs to create the decoder (e.g. "video/avc") from opaque->codecpar->codec_id; the related code is omitted here

......

if (JNI_OK != SDL_JNI_SetupThreadEnv(&env)) {

ALOGE("%s:create: SetupThreadEnv failed\n", __func__);

goto fail;

}

opaque->acodec_mutex = SDL_CreateMutex();

opaque->acodec_cond = SDL_CreateCond();

opaque->acodec_first_dequeue_output_mutex = SDL_CreateMutex();

opaque->acodec_first_dequeue_output_cond = SDL_CreateCond();

opaque->any_input_mutex = SDL_CreateMutex();

opaque->any_input_cond = SDL_CreateCond();

if (!opaque->acodec_cond || !opaque->acodec_cond || !opaque->acodec_first_dequeue_output_mutex || !opaque->acodec_first_dequeue_output_cond) {

ALOGE("%s:open_video_decoder: SDL_CreateCond() failed\n", __func__);

goto fail;

}

// Create the input format and pass the SPS and PPS to mediacodec through setBuffer

ret = recreate_format_l(env, node);

if (ret) {

ALOGE("amc: recreate_format_l failed\n");

goto fail;

}

if (!ffpipeline_select_mediacodec_l(pipeline, &opaque->mcc) || !opaque->mcc.codec_name[0]) {

ALOGE("amc: no suitable codec\n");

goto fail;

}

jsurface = ffpipeline_get_surface_as_global_ref(env, pipeline);

// Create the decoder, configure the surface, and start mediacodec decoding

ret = reconfigure_codec_l(env, node, jsurface);

J4A_DeleteGlobalRef__p(env, &jsurface);

if (ret != 0)

goto fail;

ffp_set_video_codec_info(ffp, MEDIACODEC_MODULE_NAME, opaque->mcc.codec_name);

opaque->off_buf_out = 0;

if (opaque->n_buf_out) {

int i;

opaque->amc_buf_out = calloc(opaque->n_buf_out, sizeof(*opaque->amc_buf_out));

assert(opaque->amc_buf_out != NULL);

for (i = 0; i < opaque->n_buf_out; i++)

opaque->amc_buf_out[i].pts = AV_NOPTS_VALUE;

}

SDL_SpeedSamplerReset(&opaque->sampler);

ffp->stat.vdec_type = FFP_PROPV_DECODER_MEDIACODEC;

return node;

fail:

ffpipenode_free_p(&node);

return NULL;

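}

To decode, mediacodec must first be given video parameter information such as the SPS/PPS. There are two ways to do this:

- Before configure, set the SPS and PPS on the media format through setBuffer (SDL_AMediaFormat_setBuffer in IJKPLAYER, MediaFormat.setByteBuffer in Java), using the csd-0 or csd-1 key;

- After start, submit the SPS and PPS through queueInputBuffer() with the codec-config flag ORed in: flag |= AMEDIACODEC__BUFFER_FLAG_CODEC_CONFIG;

It is worth noting that if the video resolution changes during playback, the codec must be configured again and the new SPS and PPS submitted.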
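To make the csd-0 layout concrete, here is a minimal sketch of turning H.264 avcC extradata into the start_code|sps|start_code|pps form. It is written from the avcC box layout, not copied from IJKPLAYER's convert_sps_pps(); the function names and the fixed 4-byte start code are assumptions of this sketch.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Appends one parameter set to out, prefixed by a 4-byte start code. Returns 0 on success. */
static int append_with_start_code(uint8_t *out, size_t out_cap, size_t *out_len,
                                  const uint8_t *nal, size_t nal_len)
{
    static const uint8_t start_code[4] = {0, 0, 0, 1};
    if (*out_len + 4 + nal_len > out_cap)
        return -1;
    memcpy(out + *out_len, start_code, 4);
    memcpy(out + *out_len + 4, nal, nal_len);
    *out_len += 4 + nal_len;
    return 0;
}

/*
 * Converts avcC extradata into Annex-B parameter sets.
 * On success returns 0, stores the output length in *out_len and the size of
 * the per-NALU length prefix used by the stream's packets in *nal_length_size.
 */
static int avcc_to_annexb_csd(const uint8_t *in, size_t in_len,
                              uint8_t *out, size_t out_cap,
                              size_t *out_len, size_t *nal_length_size)
{
    assert(in != NULL && out != NULL && out_len != NULL && nal_length_size != NULL);
    if (in_len < 7 || in[0] != 1)           /* configurationVersion must be 1 */
        return -1;

    *out_len = 0;
    *nal_length_size = (size_t)(in[4] & 0x03) + 1;  /* lengthSizeMinusOne + 1 */

    size_t pos = 5;
    for (int pass = 0; pass < 2; pass++) {  /* pass 0: SPS list, pass 1: PPS list */
        if (pos >= in_len)
            return -1;
        unsigned count = in[pos++];
        if (pass == 0)
            count &= 0x1F;                  /* the SPS count uses only the low 5 bits */
        for (unsigned i = 0; i < count; i++) {
            if (pos + 2 > in_len)
                return -1;
            size_t nal_len = ((size_t)in[pos] << 8) | in[pos + 1];
            pos += 2;
            if (pos + nal_len > in_len)
                return -1;
            if (append_with_start_code(out, out_cap, out_len, in + pos, nal_len) != 0)
                return -1;
            pos += nal_len;
        }
    }
    return 0;
}
```

The returned `nal_length_size` plays the same role as `opaque->nal_size` in the code that follows: it says how many bytes of length prefix precede each NALU in the packets, which is needed later when those packets are converted to Annex-B.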

IJKPLAYER uses the first approach:

static int recreate_format_l(JNIEnv *env, IJKFF_Pipenode *node)

{

IJKFF_Pipenode_Opaque *opaque = node->opaque;

FFPlayer *ffp = opaque->ffp;

int rotate_degrees = 0;

ALOGI("AMediaFormat: %s, %dx%d\n", opaque->mcc.mime_type, opaque->codecpar->width, opaque->codecpar->height);

SDL_AMediaFormat_deleteP(&opaque->output_aformat);

opaque->input_aformat = SDL_AMediaFormatJava_createVideoFormat(env, opaque->mcc.mime_type, opaque->codecpar->width, opaque->codecpar->height);

if (opaque->codecpar->extradata && opaque->codecpar->extradata_size > 0) {

if ((opaque->codecpar->codec_id == AV_CODEC_ID_H264 && opaque->codecpar->extradata[0] == 1)

|| (opaque->codecpar->codec_id == AV_CODEC_ID_HEVC && opaque->codecpar->extradata_size > 3

&& (opaque->codecpar->extradata[0] == 1 || opaque->codecpar->extradata[1] == 1))) {

#if AMC_USE_AVBITSTREAM_FILTER

// The h264_mp4toannexb or hevc_mp4toannexb filter is used here to convert avcC data to Annex-B; the related code is omitted

......

#else

size_t sps_pps_size = 0;

size_t convert_size = opaque->codecpar->extradata_size + 20;

uint8_t *convert_buffer = (uint8_t *)calloc(1, convert_size);

if (!convert_buffer) {

ALOGE("%s:sps_pps_buffer: alloc failed\n", __func__);

goto fail;

}

if (opaque->codecpar->codec_id == AV_CODEC_ID_H264) {

if (0 != convert_sps_pps(opaque->codecpar->extradata, opaque->codecpar->extradata_size,

convert_buffer, convert_size,

&sps_pps_size, &opaque->nal_size)) {

ALOGE("%s:convert_sps_pps: failed\n", __func__);

goto fail;

}

} else {

if (0 != convert_hevc_nal_units(opaque->codecpar->extradata, opaque->codecpar->extradata_size,

convert_buffer, convert_size,

&sps_pps_size, &opaque->nal_size)) {

ALOGE("%s:convert_hevc_nal_units: failed\n", __func__);

goto fail;

}

}

// Pass the SPS/PPS to mediacodec through csd-0, laid out as start_code|sps|start_code|pps

SDL_AMediaFormat_setBuffer(opaque->input_aformat, "csd-0", convert_buffer, sps_pps_size);

for(int i = 0; i < sps_pps_size; i+=4) {

ALOGE("csd-0[%d]: %02x%02x%02x%02x\n", (int)sps_pps_size, (int)convert_buffer[i+0], (int)convert_buffer[i+1], (int)convert_buffer[i+2], (int)convert_buffer[i+3]);

}

free(convert_buffer);

#endif

} else if (opaque->codecpar->codec_id == AV_CODEC_ID_MPEG4) {

// ...... MPEG4 handling omitted here

} else {

// Codec specific data

// SDL_AMediaFormat_setBuffer(opaque->aformat, "csd-0", opaque->codecpar->extradata, opaque->codecpar->extradata_size);

ALOGE("csd-0: naked\n");

}

} else {

ALOGE("no buffer(%d)\n", opaque->codecpar->extradata_size);

}

// Get the rotation angle through ffmpeg and set it on mediacodec so that mediacodec rotates the video automatically

rotate_degrees = ffp_get_video_rotate_degrees(ffp);

if (ffp->mediacodec_auto_rotate &&

rotate_degrees != 0 &&

SDL_Android_GetApiLevel() >= IJK_API_21_LOLLIPOP) {

ALOGI("amc: rotate in decoder: %d\n", rotate_degrees);

opaque->frame_rotate_degrees = rotate_degrees;

SDL_AMediaFormat_setInt32(opaque->input_aformat, "rotation-degrees", rotate_degrees);

ffp_notify_msg2(ffp, FFP_MSG_VIDEO_ROTATION_CHANGED, 0);

} else {

ALOGI("amc: rotate notify: %d\n", rotate_degrees);

ffp_notify_msg2(ffp, FFP_MSG_VIDEO_ROTATION_CHANGED, rotate_degrees);

}

return 0;

fail:

return -1;

}

With everything in place, data can now be fed to mediacodec:

static int feed_input_buffer(JNIEnv *env, IJKFF_Pipenode *node, int64_t timeUs, int *enqueue_count)

{

IJKFF_Pipenode_Opaque *opaque = node->opaque;

FFPlayer *ffp = opaque->ffp;

IJKFF_Pipeline *pipeline = opaque->pipeline;

VideoState *is = ffp->is;

Decoder *d = &is->viddec;

PacketQueue *q = d->queue;

sdl_amedia_status_t amc_ret = 0;

int ret = 0;

ssize_t input_buffer_index = 0;

ssize_t copy_size = 0;

int64_t time_stamp = 0;

uint32_t queue_flags = 0;

if (enqueue_count)

*enqueue_count = 0;

if (d->queue->abort_request) {

ret = 0;

goto fail;

}

if (!d->packet_pending || d->queue->serial != d->pkt_serial) {

#if AMC_USE_AVBITSTREAM_FILTER

#else

H264ConvertState convert_state = {0, 0};

#endif

// Take one frame of compressed video data from the AVPacket queue

AVPacket pkt;

do {

if (d->queue->nb_packets == 0)

SDL_CondSignal(d->empty_queue_cond);

if (ffp_packet_queue_get_or_buffering(ffp, d->queue, &pkt, &d->pkt_serial, &d->finished) < 0) {

ret = -1;

goto fail;

}

if (ffp_is_flush_packet(&pkt) || opaque->acodec_flush_request) {

// request flush before lock, or never get mutex

opaque->acodec_flush_request = true;

SDL_LockMutex(opaque->acodec_mutex);

if (SDL_AMediaCodec_isStarted(opaque->acodec)) {

if (opaque->input_packet_count > 0) {

// flush empty queue cause error on OMX.SEC.AVC.Decoder (Nexus S)

SDL_VoutAndroid_invalidateAllBuffers(opaque->weak_vout);

SDL_AMediaCodec_flush(opaque->acodec);

opaque->input_packet_count = 0;

}

// If codec is configured in synchronous mode, codec will resume automatically

// SDL_AMediaCodec_start(opaque->acodec);

}

opaque->acodec_flush_request = false;

SDL_CondSignal(opaque->acodec_cond);

SDL_UnlockMutex(opaque->acodec_mutex);

d->finished = 0;

d->next_pts = d->start_pts;

d->next_pts_tb = d->start_pts_tb;

}

} while (ffp_is_flush_packet(&pkt) || d->queue->serial != d->pkt_serial);

av_packet_split_side_data(&pkt);

av_packet_unref(&d->pkt);

d->pkt_temp = d->pkt = pkt;

d->packet_pending = 1;

if (opaque->ffp->mediacodec_handle_resolution_change &&

opaque->codecpar->codec_id == AV_CODEC_ID_H264) {

// Resolution-change handling goes here; the related code is omitted

......

}

#if AMC_USE_AVBITSTREAM_FILTER

// d->pkt_temp->data could be allocated by av_bitstream_filter_filter

// The av_bitstream_filter_filter filter is used here to convert avcC data to Annex-B

......

#else

// Convert avcC data to Annex-B

if (opaque->codecpar->codec_id == AV_CODEC_ID_H264 || opaque->codecpar->codec_id == AV_CODEC_ID_HEVC) {

convert_h264_to_annexb(d->pkt_temp.data, d->pkt_temp.size, opaque->nal_size, &convert_state);

int64_t time_stamp = d->pkt_temp.pts;

if (!time_stamp && d->pkt_temp.dts)

time_stamp = d->pkt_temp.dts;

if (time_stamp > 0) {

time_stamp = av_rescale_q(time_stamp, is->video_st->time_base, AV_TIME_BASE_Q);

} else {

time_stamp = 0;

}

}

#endif

}

if (d->pkt_temp.data) {

// reconfigure surface if surface changed

// NULL surface cause no display

// This is where the surface is reconfigured and the decoder restarted; the related code is omitted

......

// Get the index of an empty mediacodec input buffer

queue_flags = 0;

input_buffer_index = SDL_AMediaCodec_dequeueInputBuffer(opaque->acodec, timeUs);

if (input_buffer_index < 0) {

if (SDL_AMediaCodec_isInputBuffersValid(opaque->acodec)) {

// timeout

ret = 0;

goto fail;

} else {

// enqueue fake frame

queue_flags |= AMEDIACODEC__BUFFER_FLAG_FAKE_FRAME;

copy_size = d->pkt_temp.size;

}

} else {

SDL_AMediaCodecFake_flushFakeFrames(opaque->acodec);

// Write the video data into the empty input buffer

copy_size = SDL_AMediaCodec_writeInputData(opaque->acodec, input_buffer_index, d->pkt_temp.data, d->pkt_temp.size);

if (!copy_size) {

ALOGE("%s: SDL_AMediaCodec_getInputBuffer failed\n", __func__);

ret = -1;

goto fail;

}

}

time_stamp = d->pkt_temp.pts;

if (time_stamp == AV_NOPTS_VALUE && d->pkt_temp.dts != AV_NOPTS_VALUE)

time_stamp = d->pkt_temp.dts;

if (time_stamp >= 0) {

time_stamp = av_rescale_q(time_stamp, is->video_st->time_base, AV_TIME_BASE_Q);

} else {

time_stamp = 0;

}

// ALOGE("queueInputBuffer, %lld\n", time_stamp);

// Submit the contents of the buffer at input_buffer_index to mediacodec

amc_ret = SDL_AMediaCodec_queueInputBuffer(opaque->acodec, input_buffer_index, 0, copy_size, time_stamp, queue_flags);

if (amc_ret != SDL_AMEDIA_OK) {

ALOGE("%s: SDL_AMediaCodec_getInputBuffer failed\n", __func__);

ret = -1;

goto fail;

}

// ALOGE("%s: queue %d/%d", __func__, (int)copy_size, (int)input_buffer_size);

opaque->input_packet_count++;

if (enqueue_count)

++*enqueue_count;

}

if (copy_size < 0) {

d->packet_pending = 0;

} else {

d->pkt_temp.dts =

d->pkt_temp.pts = AV_NOPTS_VALUE;

if (d->pkt_temp.data) {

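// One AVPacket may not fit into a single mediacodec input buffer; the remainder goes into the following input buffer(s) until all of it has been submitted,

// otherwise decoding may fail and the picture may show mosaic artifacts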

d->pkt_temp.data += copy_size;

d->pkt_temp.size -= copy_size;

if (d->pkt_temp.size <= 0)

d->packet_pending = 0;

} else {

// FIXME: detect if decode finished

// if (!got_frame) {

d->packet_pending = 0;

// End of file reached; decoding is finished

d->finished = d->pkt_serial;

// }

}

}

fail:

return ret;

}

Consume the decoded pixel data from mediacodec and control the display of the video:

static int drain_output_buffer2(JNIEnv *env, IJKFF_Pipenode *node, int64_t timeUs, int *dequeue_count, AVFrame *frame, AVRational frame_rate)

{

IJKFF_Pipenode_Opaque *opaque = node->opaque;

FFPlayer *ffp = opaque->ffp;

VideoState *is = ffp->is;

AVRational tb = is->video_st->time_base;

int got_frame = 0;

int ret = -1;

double duration;

double pts;

while (ret) {

got_frame = 0;

// Consume mediacodec's output buffer and control display

ret = drain_output_buffer2_l(env, node, timeUs, dequeue_count, frame, &got_frame);

if (opaque->decoder->queue->abort_request) {

if (got_frame && frame->opaque)

SDL_VoutAndroid_releaseBufferProxyP(opaque->weak_vout, (SDL_AMediaCodecBufferProxy **)&frame->opaque, false);

return ACODEC_EXIT;

}

if (ret != 0) {

if (got_frame && frame->opaque)

SDL_VoutAndroid_releaseBufferProxyP(opaque->weak_vout, (SDL_AMediaCodecBufferProxy **)&frame->opaque, false);

}

}

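// Frames flagged AMEDIACODEC__BUFFER_FLAG_FAKE_FRAME appear to matter only here

if (got_frame) {

// If the application has enabled the framedrop option, frame-dropping logic runs here; the related code is omitted

......

// Same render path as ffmpeg software decoding: the AVFrame filled from mediacodec output is queued for rendering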

ret = ffp_queue_picture(ffp, frame, pts, duration, av_frame_get_pkt_pos(frame), is->viddec.pkt_serial);

if (ret) {

if (frame->opaque)

SDL_VoutAndroid_releaseBufferProxyP(opaque->weak_vout, (SDL_AMediaCodecBufferProxy **)&frame->opaque, false);

}

av_frame_unref(frame);

}

return ret;

}

Consume the output buffer data and fill the AVFrame, keeping the render logic unified with ffmpeg software decoding:

static int drain_output_buffer2_l(JNIEnv *env, IJKFF_Pipenode *node, int64_t timeUs, int *dequeue_count, AVFrame *frame, int *got_frame)

{

IJKFF_Pipenode_Opaque *opaque = node->opaque;

FFPlayer *ffp = opaque->ffp;

SDL_AMediaCodecBufferInfo bufferInfo;

ssize_t output_buffer_index = 0;

if (dequeue_count)

*dequeue_count = 0;

if (JNI_OK != SDL_JNI_SetupThreadEnv(&env)) {

ALOGE("%s:create: SetupThreadEnv failed\n", __func__);

return ACODEC_RETRY;

}

// Get the output buffer index, which is used to control rendering

output_buffer_index = SDL_AMediaCodecFake_dequeueOutputBuffer(opaque->acodec, &bufferInfo, timeUs);

if (output_buffer_index == AMEDIACODEC__INFO_OUTPUT_BUFFERS_CHANGED) {

ALOGD("AMEDIACODEC__INFO_OUTPUT_BUFFERS_CHANGED\n");

return ACODEC_RETRY;

} else if (output_buffer_index == AMEDIACODEC__INFO_OUTPUT_FORMAT_CHANGED) {

ALOGD("AMEDIACODEC__INFO_OUTPUT_FORMAT_CHANGED\n");

SDL_AMediaFormat_deleteP(&opaque->output_aformat);

// The video output format has changed; log it here

opaque->output_aformat = SDL_AMediaCodec_getOutputFormat(opaque->acodec);

if (opaque->output_aformat) {

int width = 0;

int height = 0;

int color_format = 0;

int stride = 0;

int slice_height = 0;

int crop_left = 0;

int crop_top = 0;

int crop_right = 0;

int crop_bottom = 0;

SDL_AMediaFormat_getInt32(opaque->output_aformat, "width", &width);

SDL_AMediaFormat_getInt32(opaque->output_aformat, "height", &height);

SDL_AMediaFormat_getInt32(opaque->output_aformat, "color-format", &color_format);

SDL_AMediaFormat_getInt32(opaque->output_aformat, "stride", &stride);

SDL_AMediaFormat_getInt32(opaque->output_aformat, "slice-height", &slice_height);

SDL_AMediaFormat_getInt32(opaque->output_aformat, "crop-left", &crop_left);

SDL_AMediaFormat_getInt32(opaque->output_aformat, "crop-top", &crop_top);

SDL_AMediaFormat_getInt32(opaque->output_aformat, "crop-right", &crop_right);

SDL_AMediaFormat_getInt32(opaque->output_aformat, "crop-bottom", &crop_bottom);

// TI decoder could crash after reconfigure

// ffp_notify_msg3(ffp, FFP_MSG_VIDEO_SIZE_CHANGED, width, height);

// opaque->frame_width = width;

// opaque->frame_height = height;

ALOGI(

"AMEDIACODEC__INFO_OUTPUT_FORMAT_CHANGED\n"

" width-height: (%d x %d)\n"

" color-format: (%s: 0x%x)\n"

" stride: (%d)\n"

" slice-height: (%d)\n"

" crop: (%d, %d, %d, %d)\n"

,

width, height,

SDL_AMediaCodec_getColorFormatName(color_format), color_format,

stride,

slice_height,

crop_left, crop_top, crop_right, crop_bottom);

}

return ACODEC_RETRY;

// continue;

} else if (output_buffer_index == AMEDIACODEC__INFO_TRY_AGAIN_LATER) {

return 0;

// continue;

} else if (output_buffer_index < 0) {

return 0;

} else if (output_buffer_index >= 0) {

ffp->stat.vdps = SDL_SpeedSamplerAdd(&opaque->sampler, FFP_SHOW_VDPS_MEDIACODEC, "vdps[MediaCodec]");

if (dequeue_count)

++*dequeue_count;

// Compatibility handling for Galaxy Nexus, Ti OMAP4460 phones

if (opaque->n_buf_out) {

// mediacodec compatibility handling for the phones above; the related code is omitted

......

} else {

// Fill the AVFrame from the decoded data at output_buffer_index

amc_fill_frame(node, frame, got_frame, output_buffer_index, SDL_AMediaCodec_getSerial(opaque->acodec), &bufferInfo);

}

}

return 0;

}

Rendering finally happens here. Because mediacodec outputs to a surface through ANativeWindow, no further OpenGL ES rendering is needed in the native layer:

static int func_display_overlay_l(SDL_Vout *vout, SDL_VoutOverlay *overlay)

{

SDL_Vout_Opaque *opaque = vout->opaque;

ANativeWindow *native_window = opaque->native_window;

if (!native_window) {

if (!opaque->null_native_window_warned) {

opaque->null_native_window_warned = 1;

ALOGW("func_display_overlay_l: NULL native_window");

}

return -1;

} else {

opaque->null_native_window_warned = 1;

}

if (!overlay) {

ALOGE("func_display_overlay_l: NULL overlay");

return -1;

}

if (overlay->w <= 0 || overlay->h <= 0) {

ALOGE("func_display_overlay_l: invalid overlay dimensions(%d, %d)", overlay->w, overlay->h);

return -1;

}

switch(overlay->format) {

case SDL_FCC__AMC: {

// only ANativeWindow support

IJK_EGL_terminate(opaque->egl);

// Rendering for mediacodec hardware decoding happens in this function

return SDL_VoutOverlayAMediaCodec_releaseFrame_l(overlay, NULL, true);

}

case SDL_FCC_RV24:

case SDL_FCC_I420:

case SDL_FCC_I444P10LE: {

// only GLES support

if (opaque->egl)

return IJK_EGL_display(opaque->egl, native_window, overlay);

break;

}

case SDL_FCC_YV12:

case SDL_FCC_RV16:

case SDL_FCC_RV32: {

// both GLES & ANativeWindow support

if (vout->overlay_format == SDL_FCC__GLES2 && opaque->egl)

return IJK_EGL_display(opaque->egl, native_window, overlay);

break;

}

}

// fallback to ANativeWindow

IJK_EGL_terminate(opaque->egl);

return SDL_Android_NativeWindow_display_l(native_window, overlay);

}

The call chain after that:

SDL_VoutOverlayAMediaCodec_releaseFrame_l() => SDL_VoutAndroid_releaseBufferProxyP_l() => SDL_VoutAndroid_releaseBufferProxy_l()

Finally, rendering ends up here:

static int SDL_VoutAndroid_releaseBufferProxy_l(SDL_Vout *vout, SDL_AMediaCodecBufferProxy *proxy, bool render)

{

SDL_Vout_Opaque *opaque = vout->opaque;

if (!proxy)

return 0;

AMCTRACE("%s: [%d] -------- proxy %d: vout: %d idx: %d render: %s fake: %s",

__func__,

proxy->buffer_id,

proxy->acodec_serial,

SDL_AMediaCodec_getSerial(opaque->acodec),

proxy->buffer_index,

render ? "true" : "false",

(proxy->buffer_info.flags & AMEDIACODEC__BUFFER_FLAG_FAKE_FRAME) ? "YES" : "NO");

ISDL_Array__push_back(&opaque->overlay_pool, proxy);

if (!SDL_AMediaCodec_isSameSerial(opaque->acodec, proxy->acodec_serial)) {

ALOGW("%s: [%d] ???????? proxy %d: vout: %d idx: %d render: %s fake: %s",

__func__,

proxy->buffer_id,

proxy->acodec_serial,

SDL_AMediaCodec_getSerial(opaque->acodec),

proxy->buffer_index,

render ? "true" : "false",

(proxy->buffer_info.flags & AMEDIACODEC__BUFFER_FLAG_FAKE_FRAME) ? "YES" : "NO");

return 0;

}

if (proxy->buffer_index < 0) {

ALOGE("%s: [%d] invalid AMediaCodec buffer index %d\n", __func__, proxy->buffer_id, proxy->buffer_index);

return 0;

} else if (proxy->buffer_info.flags & AMEDIACODEC__BUFFER_FLAG_FAKE_FRAME) {

proxy->buffer_index = -1;

return 0;

}

// This call decides whether the frame is finally rendered

sdl_amedia_status_t amc_ret = SDL_AMediaCodec_releaseOutputBuffer(opaque->acodec, proxy->buffer_index, render);

if (amc_ret != SDL_AMEDIA_OK) {

ALOGW("%s: [%d] !!!!!!!! proxy %d: vout: %d idx: %d render: %s, fake: %s",

__func__,

proxy->buffer_id,

proxy->acodec_serial,

SDL_AMediaCodec_getSerial(opaque->acodec),

proxy->buffer_index,

render ? "true" : "false",

(proxy->buffer_info.flags & AMEDIACODEC__BUFFER_FLAG_FAKE_FRAME) ? "YES" : "NO");

proxy->buffer_index = -1;

return -1;

}

proxy->buffer_index = -1;

return 0;

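}

Resolution change

// TODO: to be added

On seek

As discussed in the article "IJKPLAYER源码分析-点播原理" (CSDN blog), on seek the mediacodec decoder's buffers should be flushed so that the video frames after the seek point can be rendered: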

for (;;) {

// Unrelated code omitted here

......

if (is->seek_req) {

int64_t seek_target = is->seek_pos;

int64_t seek_min = is->seek_rel > 0 ? seek_target - is->seek_rel + 2: INT64_MIN;

int64_t seek_max = is->seek_rel < 0 ? seek_target - is->seek_rel - 2: INT64_MAX;

// FIXME the +-2 is due to rounding being not done in the correct direction in generation

// of the seek_pos/seek_rel variables

ffp_toggle_buffering(ffp, 1);

ffp_notify_msg3(ffp, FFP_MSG_BUFFERING_UPDATE, 0, 0);

ret = avformat_seek_file(is->ic, -1, seek_min, seek_target, seek_max, is->seek_flags);

if (ret < 0) {

av_log(NULL, AV_LOG_ERROR,

"%s: error while seeking\n", is->ic->filename);

} else {

if (is->audio_stream >= 0) {

packet_queue_flush(&is->audioq);

packet_queue_put(&is->audioq, &flush_pkt);

// TODO: clear invaild audio data

// SDL_AoutFlushAudio(ffp->aout);

}

if (is->subtitle_stream >= 0) {

packet_queue_flush(&is->subtitleq);

packet_queue_put(&is->subtitleq, &flush_pkt);

}

if (is->video_stream >= 0) {

if (ffp->node_vdec) {

// Flush the mediacodec hardware decoder's buffers

ffpipenode_flush(ffp->node_vdec);

}

packet_queue_flush(&is->videoq);

packet_queue_put(&is->videoq, &flush_pkt);

}

}

}

}

Call chain:

ffpipenode_flush(node) => SDL_AMediaCodecJava_flush(codec)

Here the call goes through JNI to the Java layer and invokes mediacodec's flush method, emptying the decoder's buffers:

static sdl_amedia_status_t SDL_AMediaCodecJava_flush(SDL_AMediaCodec* acodec)

{

SDLTRACE("%s", __func__);

JNIEnv *env = NULL;

if (JNI_OK != SDL_JNI_SetupThreadEnv(&env)) {

ALOGE("%s: SetupThreadEnv failed", __func__);

return SDL_AMEDIA_ERROR_UNKNOWN;

}

jobject android_media_codec = SDL_AMediaCodecJava_getObject(env, acodec);

// Flush the mediacodec decoder's buffers

J4AC_MediaCodec__flush(env, android_media_codec);

if (J4A_ExceptionCheck__catchAll(env)) {

ALOGE("%s: flush", __func__);

return SDL_AMEDIA_ERROR_UNKNOWN;

}

acodec->object_serial = SDL_AMediaCodec_create_object_serial();

return SDL_AMEDIA_OK;

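}

Exception handling

For mediacodec exception handling, this article mainly discusses feeding partial frames to the decoder and dequeueInputBuffer failures.

Partial frames

When feeding data to the mediacodec decoder, the input buffer may be too small to hold one complete frame. IJKPLAYER handles this by submitting the frame in parts, in order, until all of it has been submitted:

Call chain:

feed_input_buffer() => SDL_AMediaCodec_writeInputData() => SDL_AMediaCodecJava_writeInputData()

static ssize_t SDL_AMediaCodecJava_writeInputData(SDL_AMediaCodec* acodec, size_t idx, const uint8_t *data, size_t size)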

{

AMCTRACE("%s", __func__);

ssize_t write_ret = -1;

jobject input_buffer_array = NULL;

jobject input_buffer = NULL;

JNIEnv *env = NULL;

if (JNI_OK != SDL_JNI_SetupThreadEnv(&env)) {

ALOGE("%s: SetupThreadEnv failed", __func__);

return -1;

}

SDL_AMediaCodec_Opaque *opaque = (SDL_AMediaCodec_Opaque *)acodec->opaque;

input_buffer_array = J4AC_MediaCodec__getInputBuffers__catchAll(env, opaque->android_media_codec);

if (!input_buffer_array)

return -1;

int buffer_count = (*env)->GetArrayLength(env, input_buffer_array);

if (J4A_ExceptionCheck__catchAll(env) || idx < 0 || idx >= buffer_count) {

ALOGE("%s: idx(%d) < count(%d)\n", __func__, (int)idx, (int)buffer_count);

goto fail;

}

input_buffer = (*env)->GetObjectArrayElement(env, input_buffer_array, idx);

if (J4A_ExceptionCheck__catchAll(env) || !input_buffer) {

ALOGE("%s: GetObjectArrayElement failed\n", __func__);

goto fail;

}

{

jlong buf_size = (*env)->GetDirectBufferCapacity(env, input_buffer);

void *buf_ptr = (*env)->GetDirectBufferAddress(env, input_buffer);

// The input buffer may be too small for one complete frame, in which case the frame is submitted in several parts

write_ret = size < buf_size ? size : buf_size;

memcpy(buf_ptr, data, write_ret);

}

fail:

SDL_JNI_DeleteLocalRefP(env, &input_buffer);

SDL_JNI_DeleteLocalRefP(env, &input_buffer_array);

// Return the number of bytes actually copied into the mediacodec input buffer

return write_ret;

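}

d->pkt_temp is then advanced by the number of bytes submitted to mediacodec, and the remaining data is fed to the decoder on the next call to feed_input_buffer():

// SDL_AMediaCodec_queueInputBuffer() submits the input buffer data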

......

if (copy_size < 0) {

d->packet_pending = 0;

} else {

d->pkt_temp.dts =

d->pkt_temp.pts = AV_NOPTS_VALUE;

if (d->pkt_temp.data) {

// One AVPacket may not fit into a single mediacodec input buffer; the remainder goes into the following input buffer(s) until all of it has been submitted

d->pkt_temp.data += copy_size;

d->pkt_temp.size -= copy_size;

if (d->pkt_temp.size <= 0)

d->packet_pending = 0;

} else {

// FIXME: detect if decode finished

// if (!got_frame) {

d->packet_pending = 0;

// End of file reached; decoding is finished

d->finished = d->pkt_serial;

// }

}

}

......
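The pointer bookkeeping above can be exercised on its own. The sketch below mirrors the min(size, capacity) copy in SDL_AMediaCodecJava_writeInputData() and the data/size advance in feed_input_buffer(), but it is not IJKPLAYER code: the function names are hypothetical, and a plain `sink` array stands in for the codec so the result can be checked.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Copies as much of the packet as fits into one input buffer; returns the bytes copied. */
static size_t write_input_chunk(uint8_t *input_buf, size_t input_cap,
                                const uint8_t *data, size_t size)
{
    size_t n = size < input_cap ? size : input_cap;
    memcpy(input_buf, data, n);
    return n;
}

/*
 * Feeds a whole packet through an input buffer of input_cap bytes.
 * Each loop iteration corresponds to one call of feed_input_buffer().
 * Returns the number of submissions, or -1 on invalid arguments or sink overflow.
 */
static int feed_packet_in_chunks(const uint8_t *pkt, size_t pkt_size,
                                 uint8_t *input_buf, size_t input_cap,
                                 uint8_t *sink, size_t sink_cap)
{
    assert(pkt != NULL && input_buf != NULL && sink != NULL);
    if (input_cap == 0)
        return -1;

    int submissions = 0;
    size_t sunk = 0;
    while (pkt_size > 0) {
        size_t copied = write_input_chunk(input_buf, input_cap, pkt, pkt_size);
        if (sunk + copied > sink_cap)
            return -1;
        memcpy(sink + sunk, input_buf, copied);  /* stands in for queueInputBuffer() */
        sunk += copied;
        pkt += copied;        /* same as d->pkt_temp.data += copy_size */
        pkt_size -= copied;   /* same as d->pkt_temp.size -= copy_size */
        submissions++;
    }
    return submissions;
}
```

With a 10-byte packet and a 4-byte input buffer, the packet goes in as three submissions of 4, 4, and 2 bytes, and the bytes reach the sink in their original order.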