Using Scrapy extensions (extension middleware)

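1. A spider statistics extension

During one crawling project, the stakeholders suddenly came up with a new requirement: they wanted to know how many items the spider for a given source collected each day.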

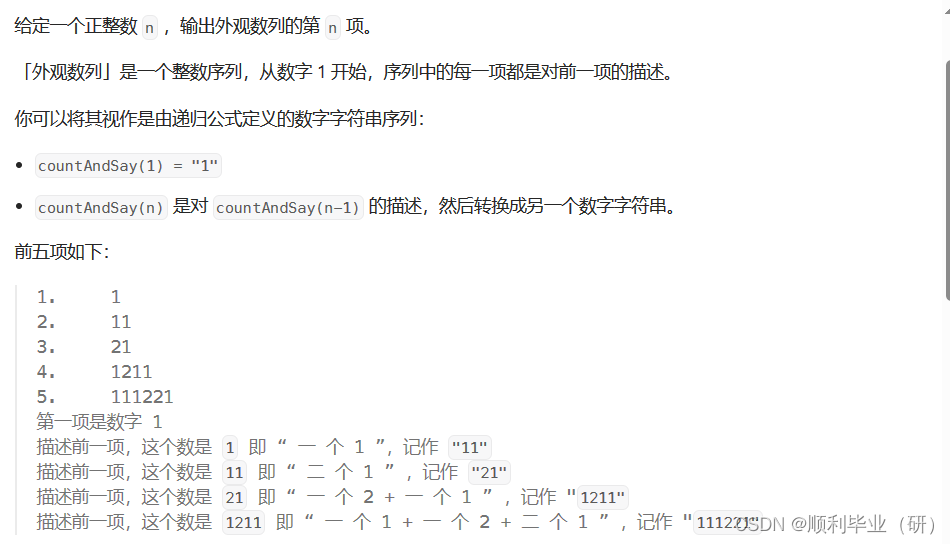

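First, some analysis: Scrapy's log output already contains the information we want:

item_scraped_count: the total number of items scraped by the spider

finish_time: the time the spider finished

elapsed_time_seconds: how long the spider ran, in seconds

In the Scrapy source code there is a file named logstats.py: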

    import logging

    from twisted.internet import task

    from scrapy.exceptions import NotConfigured
    from scrapy import signals

    logger = logging.getLogger(__name__)


    class LogStats:
        """Log basic scraping stats periodically"""

        def __init__(self, stats, interval=60.0):
            self.stats = stats
            self.interval = interval
            self.multiplier = 60.0 / self.interval
            self.task = None

        @classmethod
        def from_crawler(cls, crawler):
            interval = crawler.settings.getfloat('LOGSTATS_INTERVAL')
            if not interval:
                raise NotConfigured
            o = cls(crawler.stats, interval)
            crawler.signals.connect(o.spider_opened, signal=signals.spider_opened)
            crawler.signals.connect(o.spider_closed, signal=signals.spider_closed)
            return o

        def spider_opened(self, spider):
            self.pagesprev = 0
            self.itemsprev = 0

            self.task = task.LoopingCall(self.log, spider)
            self.task.start(self.interval)

        def log(self, spider):
            items = self.stats.get_value('item_scraped_count', 0)
            pages = self.stats.get_value('response_received_count', 0)
            irate = (items - self.itemsprev) * self.multiplier
            prate = (pages - self.pagesprev) * self.multiplier
            self.pagesprev, self.itemsprev = pages, items

            msg = ("Crawled %(pages)d pages (at %(pagerate)d pages/min), "
                   "scraped %(items)d items (at %(itemrate)d items/min)")
            log_args = {'pages': pages, 'pagerate': prate,
                        'items': items, 'itemrate': irate}
            logger.info(msg, log_args, extra={'spider': spider})

        def spider_closed(self, spider, reason):
            if self.task and self.task.running:
                self.task.stop()

The code above is what produces the periodic crawl statistics in the log.
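The per-minute rates in that log line come from scaling the change in a counter over one interval by `60.0 / interval`. A minimal sketch of that arithmetic, outside Scrapy; the function name `per_minute_rate` and the sample counts are made up for illustration:

```python
def per_minute_rate(current, previous, interval_seconds):
    """Scale a counter delta over one interval to a per-minute rate.

    Mirrors `(items - self.itemsprev) * self.multiplier` in LogStats.log.
    """
    multiplier = 60.0 / interval_seconds
    return (current - previous) * multiplier


# Hypothetical readings of item_scraped_count taken 30 seconds apart.
rate = per_minute_rate(current=150, previous=100, interval_seconds=30.0)
print(rate)  # 100.0
```

With the default interval of 60 seconds the multiplier is 1, so the rate is simply the number of new items since the previous log line.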

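Note also that corestats.py sets fields such as elapsed_time_seconds, finish_time, and finish_reason. So if we want to collect statistics about a crawl, all we need to do is write a new class that inherits from CoreStats.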
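To make the shape of these values concrete, here is a minimal sketch that builds a summary record from a plain stats dict, such as the one `crawler.stats.get_stats()` returns. The names `build_summary` and `to_local_string`, the sample values, and the fixed UTC+8 offset are assumptions for illustration, not part of Scrapy:

```python
from datetime import datetime, timedelta, timezone

# Assumption: reports should be shown in UTC+8.
LOCAL_TZ = timezone(timedelta(hours=8))


def to_local_string(value):
    """Format a UTC datetime from the stats as a UTC+8 string.

    Naive datetimes are treated as UTC, on the assumption that the
    core stats were recorded in UTC.
    """
    if value.tzinfo is None:
        value = value.replace(tzinfo=timezone.utc)
    return value.astimezone(LOCAL_TZ).strftime("%Y-%m-%d %H:%M:%S")


def build_summary(stats):
    """Pick the fields of interest out of a Scrapy-style stats dict."""
    return {
        "total_items": stats.get("item_scraped_count", 0),
        "start_time": to_local_string(stats["start_time"]),
        "finish_time": to_local_string(stats["finish_time"]),
        "elapsed_seconds": stats.get("elapsed_time_seconds"),
        "finish_reason": stats.get("finish_reason"),
    }


# Hypothetical sample values, shaped like the stats dumped at the end of a crawl.
sample_stats = {
    "item_scraped_count": 1200,
    "start_time": datetime(2021, 5, 1, 1, 0, 0),
    "finish_time": datetime(2021, 5, 1, 1, 30, 0),
    "elapsed_time_seconds": 1800.0,
    "finish_reason": "finished",
}
summary = build_summary(sample_stats)
print(summary)
```

Converting through a timezone object rather than adding a fixed `timedelta` keeps the result correct whether the stored datetime is naive or timezone-aware.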

2. Implementing our own statistics component

I define my own extension, named SpiderStats, to collect statistics about the crawl.

It also saves the collected information to MongoDB. The code is as follows:

    """
    author:tyj
    """
    import logging
    from datetime import timedelta
    from urllib.parse import quote_plus

    from pymongo import MongoClient
    from scrapy.extensions.corestats import CoreStats
    from scrapy.utils.conf import get_config

    logger = logging.getLogger(__name__)


    class SpiderStats(CoreStats):
        """Save a per-run summary of the crawl to MongoDB when the spider closes."""

        batch = None
        sources = None

        def item_scraped(self, item, spider):
            # No super() call here: the built-in CoreStats extension (enabled
            # by default) already increments item_scraped_count.
            batch = item.get("batch")
            if batch:
                self.batch = batch
            if item.get("sources"):
                self.sources = item.get("sources")

        def spider_closed(self, spider, reason):
            # Let CoreStats record finish_time, elapsed_time_seconds and
            # finish_reason before we read them back.
            super().spider_closed(spider, reason)

            fmt = '%Y-%m-%d %H:%M:%S'
            # The times are recorded in UTC; shift them to UTC+8 for the report.
            offset = timedelta(hours=8)
            result_ = {
                "total_items": self.stats.get_value('item_scraped_count', 0),
                "start_time": (self.stats.get_value('start_time') + offset).strftime(fmt),
                "finish_time": (self.stats.get_value('finish_time') + offset).strftime(fmt),
                "batch": self.batch,
                "sources": self.sources,
            }
            logger.info("spider stats: %s", result_)

            # Connection settings come from the [mongo_cfg_prod] section of scrapy.cfg.
            cfg = get_config()
            section = "mongo_cfg_prod"
            MONGO_HOST = cfg.get(section, 'MONGO_HOST', fallback='')
            MONGO_DB = cfg.get(section, 'MONGO_DB', fallback='')
            MONGO_USER = cfg.get(section, 'MONGO_USER', fallback='')
            MONGO_PSW = cfg.get(section, 'MONGO_PSW', fallback='')
            AUTH_SOURCE = cfg.get(section, 'AUTH_SOURCE', fallback='')

            # Percent-escape the credentials so special characters do not break the URI.
            mongo_url = 'mongodb://{0}:{1}@{2}/?authSource={3}&replicaSet=rs01'.format(
                quote_plus(MONGO_USER), quote_plus(MONGO_PSW), MONGO_HOST, AUTH_SOURCE)

            client = MongoClient(mongo_url)
            try:
                db = client.get_database(MONGO_DB)
                coll = db["ware_detail_price_statistic"]
                # insert_one replaces Collection.insert, which was removed in PyMongo 4.
                coll.insert_one(result_)
            finally:
                client.close()
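For the extension to run, it has to be registered in the project's `EXTENSIONS` setting. A minimal sketch, assuming the class above is saved in `myproject/extensions.py` (a hypothetical path; adjust the dotted name to your own project):

```python
# settings.py (sketch)
# Assumption: SpiderStats lives in myproject/extensions.py.
# The value is the loading order; 500 is an arbitrary choice here.
EXTENSIONS = {
    "myproject.extensions.SpiderStats": 500,
}
```

Since the class reads its MongoDB settings through `get_config()`, the `[mongo_cfg_prod]` section with `MONGO_HOST`, `MONGO_DB`, `MONGO_USER`, `MONGO_PSW`, and `AUTH_SOURCE` also needs to be present in `scrapy.cfg`.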

You can add extensions of your own in the same way to fit the needs of your business scenario.