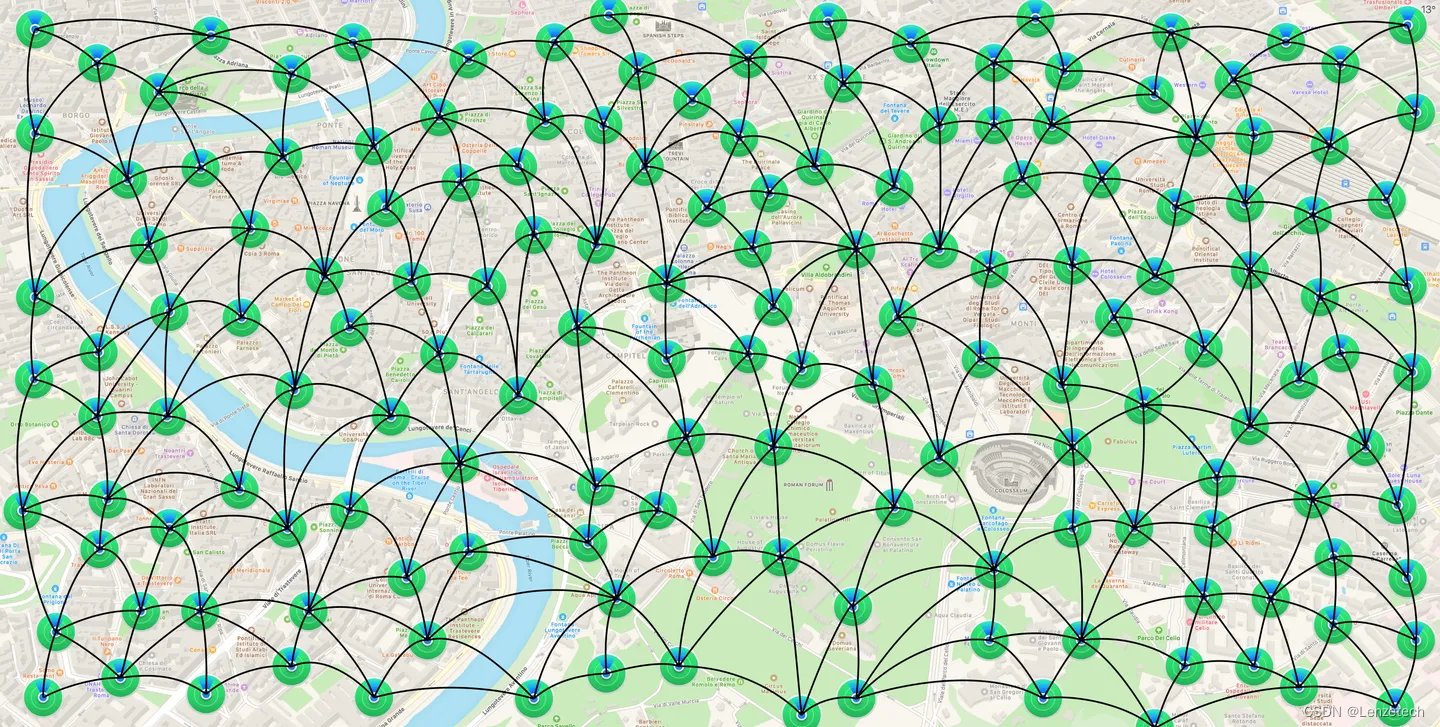

尝试搭建双节点clickhouse,以做数据存储

环境准备

#创建clickhouse用户与用户组

sudo groupadd clickhouse

sudo useradd -m clickhouse -g clickhouse

#密码为clickhouse

sudo passwd clickhouse

#赋予权限

chmod -R 777 /opt/comm_app

#配置使用sudo命令的用户

vim /etc/sudoers

clickhouse ALL=(ALL:ALL) ALL

下载&安装

#切换为clickhouse操作

export LATEST_VERSION=22.3.20.29

#21.2.xx 之后的tgz包路径变成https://repo.clickhouse.com/tgz/stable下

#21.2.xx 之前的还在https://repo.clickhouse.com/tgz下

curl -O https://packages.clickhouse.com/tgz/stable/clickhouse-common-static-$LATEST_VERSION-amd64.tgz

curl -O https://packages.clickhouse.com/tgz/stable/clickhouse-common-static-dbg-$LATEST_VERSION-amd64.tgz

curl -O https://packages.clickhouse.com/tgz/stable/clickhouse-server-$LATEST_VERSION-amd64.tgz

curl -O https://packages.clickhouse.com/tgz/stable/clickhouse-client-$LATEST_VERSION-amd64.tgz

tar -xzvf clickhouse-common-static-$LATEST_VERSION-amd64.tgz

sudo clickhouse-common-static-$LATEST_VERSION/install/doinst.sh

tar -xzvf clickhouse-common-static-dbg-$LATEST_VERSION-amd64.tgz

sudo clickhouse-common-static-dbg-$LATEST_VERSION/install/doinst.sh

tar -xzvf clickhouse-server-$LATEST_VERSION-amd64.tgz

sudo clickhouse-server-$LATEST_VERSION/install/doinst.sh

tar -xzvf clickhouse-client-$LATEST_VERSION-amd64.tgz

sudo clickhouse-client-$LATEST_VERSION/install/doinst.sh

配置调整

1.配置开放外网访问

vim /etc/clickhouse-server/config.d/listen.xml

<clickhouse>

<listen_host>0.0.0.0</listen_host>

</clickhouse>

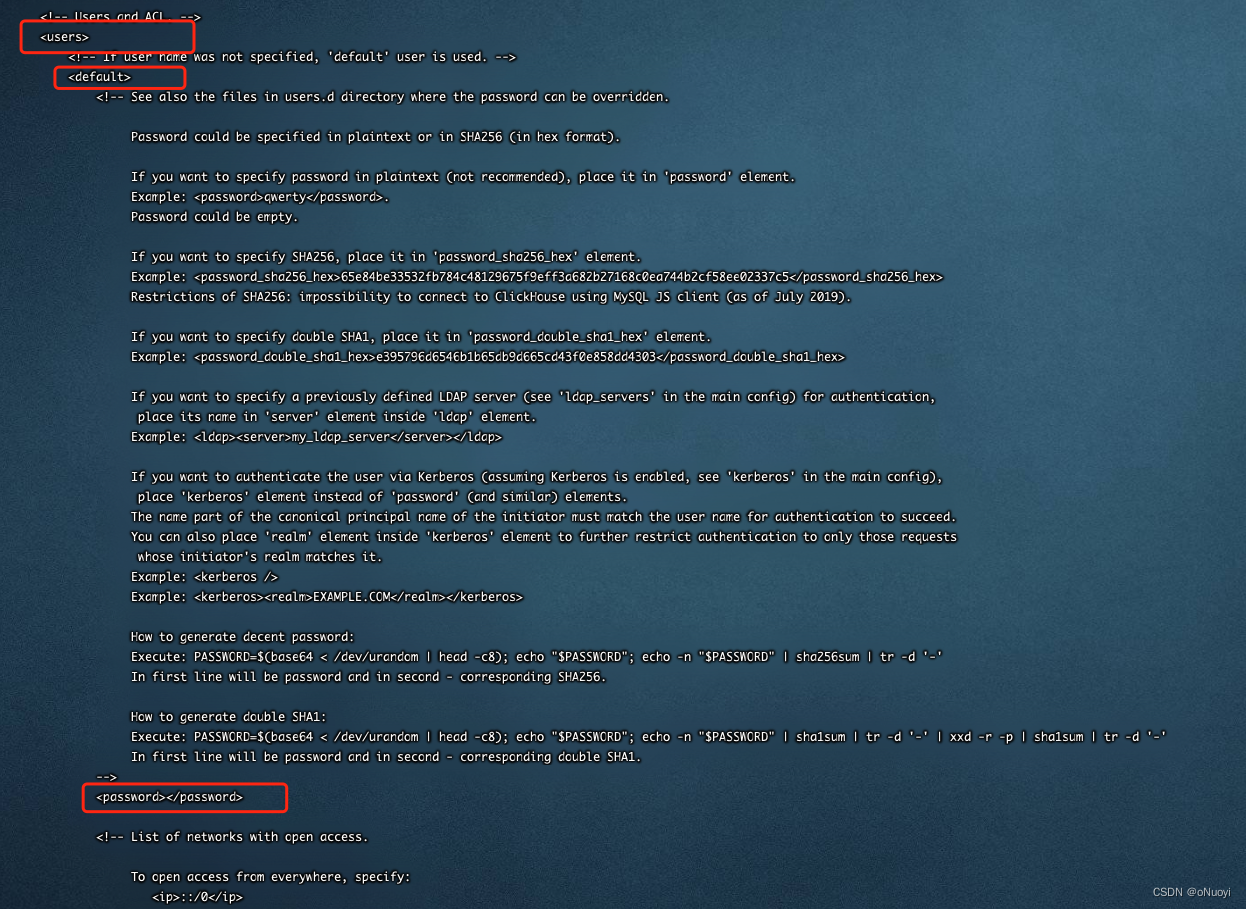

2.配置修改登录密码

vim /etc/clickhouse-server/users.d/default-password.xml

<clickhouse>

<users>

<default>

<password>EqcT3KF4kQ?v</password>

</default>

</users>

</clickhouse>

3.基础配置

3.1 集群分配配置(host password port 需调整为正式配置值)在remote_servers标签下

vim /etc/clickhouse-server/config.xml

<transport_cluster>

<shard>

<!-- 权重:新增一条数据的时候有多大的概率落入该分片,默认值:1 -->

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>10.22.1.116</host>

<port>1200</port>

<user>default</user>

<password>clickhouse</password>

<compression>true</compression>

</replica>

</shard>

<shard>

<!-- 权重:新增一条数据的时候有多大的概率落入该分片,默认值:1 -->

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>10.22.1.117</host>

<port>1200</port>

<user>default</user>

<password>clickhouse</password>

<compression>true</compression>

</replica>

</shard>

</transport_cluster>

3.2 zookeeper配置

<zookeeper>

<node>

<host>10.22.3.217</host>

<port>2181</port>

</node>

<node>

<host>172.27.2.140</host>

<port>2180</port>

</node>

<node>

<host>172.27.2.140</host>

<port>2179</port>

</node>

</zookeeper>

3.3配置分片名称 每个机器下分别配置自己的ip与编号 01 02 即可

# 10.22.1.116 服务器

<macros>

<shard>01</shard>

<replica>10.22.1.116</replica>

</macros>

# 10.22.1.117 服务器

<macros>

<shard>02</shard>

<replica>10.22.1.117</replica>

</macros>

3.4修改默认端口

<tcp_port>1200</tcp_port>

服务启动

## 服务启动

sudo service clickhouse-server start

# 服务重启

sudo service clickhouse-server restart

# 服务停止

sudo service clickhouse-server stop

验证

# 查看日志与错误日志

tail -f /var/log/clickhouse-server/clickhouse-server.log

tail -f /var/log/clickhouse-server/clickhouse-server.err.log

# 使用client链接数据库进行验证

clickhouse-client -h 10.22.1.116 --port 1200 -u default --password EqcT3KF4kQ?v -m -n

clickhouse-client -h 10.22.1.117 --port 1200 -u default --password EqcT3KF4kQ?v -m -n

select 1

# 查询数据文件大小

SELECT

database, table,

formatReadableSize(sum(bytes_on_disk)) AS sizeFROM system.partsWHERE active = 1GROUP BY

database, tableORDER BY sum(bytes_on_disk) DESC;

# 查询表数据量

SELECT

database,

table,

sum(rows) as rows FROM system.parts

WHERE active = 1 GROUP BY

database,

table ORDER BY rows DESC;

地址:

jdbc:clickhouse://172.27.2.140:8123

default

clickhouse

异常以及处理办法

- 在查看分片表时,报出该异常;原因是分配连接需要使用tcp配置的端口进行连接,由于配置与设定不一致导致;

2024-02-22 17:36:42] Code: 279. DB::NetException: All connection tries failed. Log:

[2024-02-22 17:36:42] Code: 210. DB::NetException: Connection refused (172.27.2.140:1200). (NETWORK_ERROR) (version 22.3.20.29 (official build))

[2024-02-22 17:36:42] Code: 210. DB::NetException: Connection refused (172.27.2.140:1200). (NETWORK_ERROR) (version 22.3.20.29 (official build))

[2024-02-22 17:36:42] Code: 210. DB::NetException: Connection refused (172.27.2.140:1200). (NETWORK_ERROR) (version 22.3.20.29 (official build))

[2024-02-22 17:36:42] : While executing Remote. (ALL_CONNECTION_TRIES_FAILED) (version 22.3.20.29 (official build))

[2024-02-22 17:36:42] , server ClickHouseNode [uri=http://172.27.2.147:8123/default, options={session_id=DataGrip_846de358-2c8b-47be-9e5e-193b1bb7a7b7}]@-496462918

解决办法:

在配置文件中修改port中的值,确保与tcp_port 标签中的端口一致

<remote_servers>

<tranport_cluster>

<shard>

<!-- 权重:新增一条数据的时候有多大的概率落入该分片,默认值:1 -->

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>172.27.2.140</host>

<port>1200</port>

<user>default</user>

<password>clickhouse</password>

<compression>true</compression>

</replica>

</shard>

<shard>

<!-- 权重:新增一条数据的时候有多大的概率落入该分片,默认值:1 -->

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>172.27.2.147</host>

<port>1200</port>

<user>default</user>

<password>clickhouse</password>

<compression>true</compression>

</replica>

</shard>

</tranport_cluster>

</remote_servers>

- 在删除表后重新建立表的时候报

[2024-02-22 17:33:14] Code: 253. DB::Exception: Replica /clickhouse/tables/01/location_cluster2/replicas/172.27.2.140 already exists. (REPLICA_IS_ALREADY_EXIST) (version 22.3.20.29 (official build))

[2024-02-22 17:33:14] , server ClickHouseNode [uri=http://172.27.2.140:8123/default, options={session_id=DataGrip_ac9993a4-2991-4d5f-9e1f-96cec00436f2}]@381624111

解决办法:

Clickhouse drop table on cluster but not delete on zookeeper - xibuhaohao - 博客园 (cnblogs.com)

- 日志中报 由于上述删除zk节点,导致找不到节点无法再分布式表中插入数据。

2024.02.22 19:23:51.001232 [ 19421 ] {} <Error> void DB::AsynchronousMetrics::update(std::chrono::system_clock::time_point): Cannot get replica delay for table: default.location_cluster2: Code: 999. Coordination::Exception: No node, path: /clickhouse/tables/02/location_cluster2/replicas. (KEEPER_EXCEPTION), Stack trace (when copying this message, always include the lines below):

DB::Exception::Exception(std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, int, bool) @ 0xb3ac1da in /usr/bin/clickhouse

Coordination::Exception::Exception(std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, Coordination::Error, int) @ 0x16bd55b5 in /usr/bin/clickhouse

解决办法:

重新建立表,建立zk节点

- 分布式表中无法进行mutations操作,删除,修改等,需要到原数据表进行删除。

[07000][48] Code: 48. DB::Exception: Table engine Distributed doesn't support mutations. (NOT_IMPLEMENTED) (version 22.3.20.29 (official build)) , server ClickHouseNode [uri=http://172.27.2.140:8123/default, options={session_id=DataGrip_717b36fd-bd96-448e-9292-a0de15ad3a22}]@-1614401145

解决办法:

到源数据表中进行数据删除,修改等