Official GitHub project of the datasets package: huggingface/datasets: 🤗 The largest hub of ready-to-use datasets for ML models with fast, easy-to-use and efficient data manipulation tools

The datasets package can load many public datasets and preprocess them.

The design of datasets draws on the TFDS project: tensorflow/datasets: TFDS is a collection of datasets ready to use with TensorFlow, Jax, …

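This introduction was originally part of my post "Fine-tuning a pretrained model on a text classification task with huggingface.transformers.AutoModelForSequenceClassification". I have split it out for clarity. For concrete applications of datasets, you can still refer to that post and to my other posts on using transformers.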


1. Installing datasets

If you use anaconda as your package-management environment and have already installed the transformers package with pip, you can install datasets directly with pip:

```bash
pip install datasets
```

For other installation methods, see the datasets documentation: https://huggingface.co/docs/datasets/installation

2. Getting started with datasets

The functions used in this part follow the README of the official GitHub project.

- 所有可用的数据集:

all_available_datasets=datasets.list_datasets()

列出前5条:all_available_datasets[:5]

输出:['assin', 'ar_res_reviews', 'ambig_qa', 'bianet', 'ag_news'] - 加载数据集:

datasets.load_dataset(dataset_name, **kwargs)

以本教程所使用的"yelp_review_full"数据集为例:dataset=datasets.load_dataset("yelp_review_full") - 所有可用的指标:

datasets.list_metrics() - 加载指标:

datasets.load_metric(metric_name, **kwargs) - 查看数据集:

- 获取数据集中的样本:

dataset['train'][123456]

- 查看数据词典:

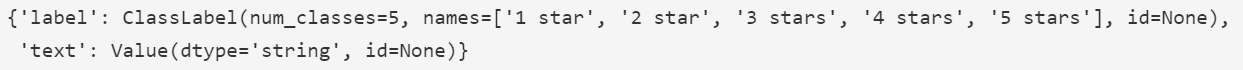

dataset['train'].features

3. Example of loading and preprocessing a dataset: the Yelp Reviews classification dataset

Official page of the dataset on Hugging Face: yelp_review_full · Datasets at Hugging Face

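This is a dataset for English short-text classification (sentiment classification). It consists of reviews from Yelp (an American review site) (text) and their star ratings of 1 to 5 stars (label), stored as label values 0 to 4.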

It was extracted from the Yelp Dataset Challenge 2015 data and comes from the paper: (2015 NIPS) Character-level Convolutional Networks for Text Classification

Load and inspect the dataset:

```python
from datasets import load_dataset

dataset = load_dataset("yelp_review_full")

dataset["train"][100]
```

Output:

```
{'label': 0,
 'text': 'My expectations for McDonalds are t rarely high. But for one to still fail so spectacularly...that takes something special!\\nThe cashier took my friends\'s order, then promptly ignored me. I had to force myself in front of a cashier who opened his register to wait on the person BEHIND me. I waited over five minutes for a gigantic order that included precisely one kid\'s meal. After watching two people who ordered after me be handed their food, I asked where mine was. The manager started yelling at the cashiers for \\"serving off their orders\\" when they didn\'t have their food. But neither cashier was anywhere near those controls, and the manager was the one serving food to customers and clearing the boards.\\nThe manager was rude when giving me my order. She didn\'t make sure that I had everything ON MY RECEIPT, and never even had the decency to apologize that I felt I was getting poor service.\\nI\'ve eaten at various McDonalds restaurants for over 30 years. I\'ve worked at more than one location. I expect bad days, bad moods, and the occasional mistake. But I have yet to have a decent experience at this store. It will remain a place I avoid unless someone in my party needs to avoid illness from low blood sugar. Perhaps I should go back to the racially biased service of Steak n Shake instead!'}
```

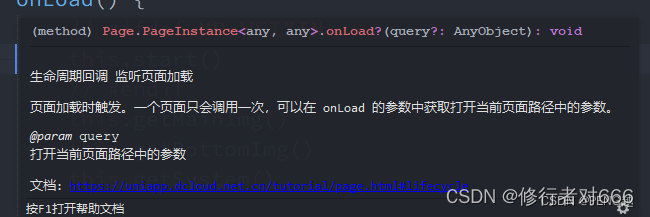

Use the datasets map function (docs: https://huggingface.co/docs/datasets/process.html#map) to tokenize the values of the raw text column and convert them into a format the model can read (the tokenizer's output):

```python
from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("mypath/bert-base-cased")

def tokenize_function(examples):
    # batched=True: examples["text"] is a list of strings
    return tokenizer(examples["text"], padding="max_length", truncation=True, max_length=512)

tokenized_datasets = dataset.map(tokenize_function, batched=True)
```

Output (the converted dataset):

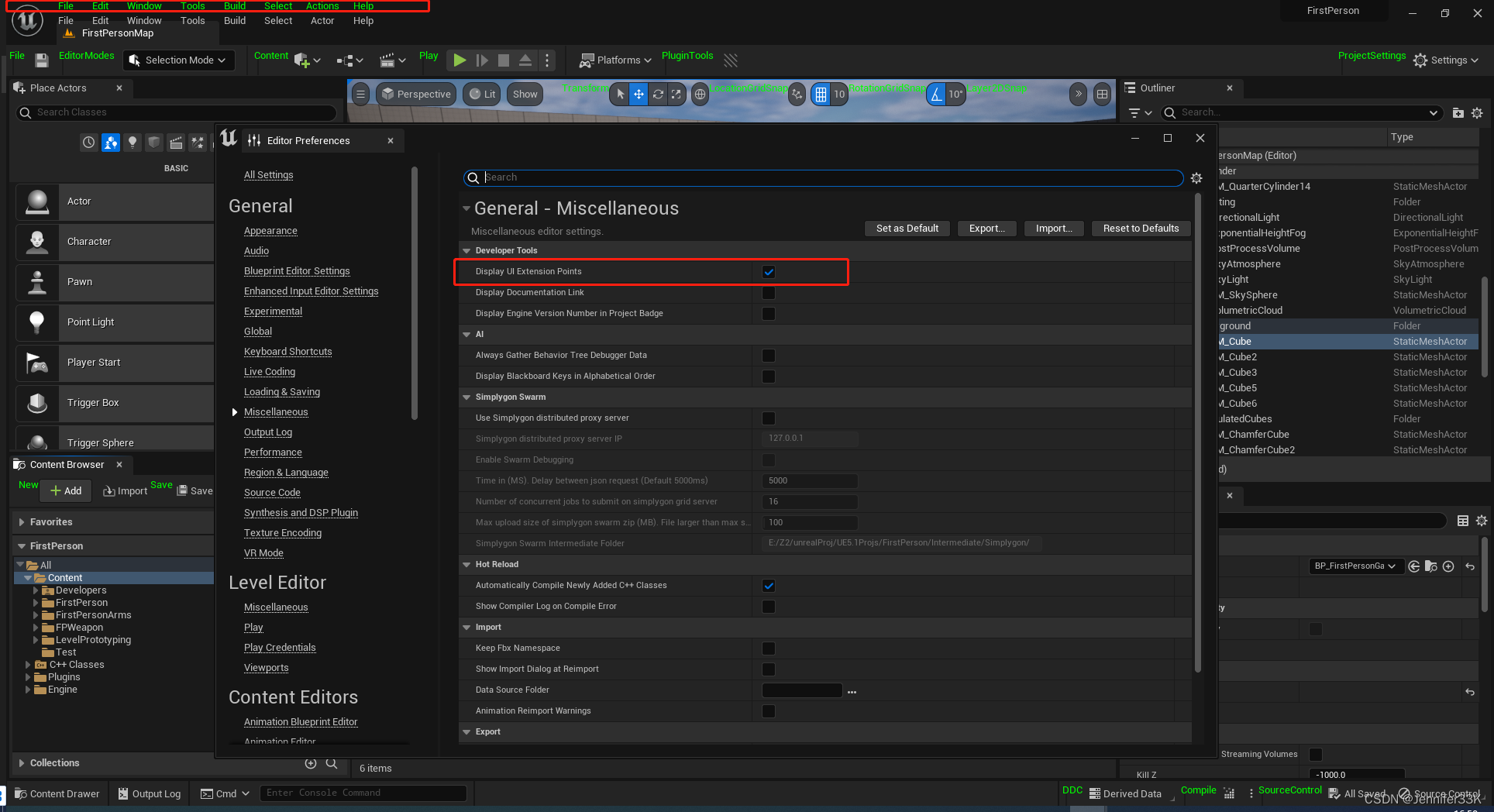

Note that the tokenizer call in the original tutorial has no max_length argument. Without it, this step prints the following warnings:

```
Asking to pad to max_length but no maximum length is provided and the model has no predefined maximum length. Default to no padding.
Asking to truncate to max_length but no maximum length is provided and the model has no predefined maximum length. Default to no truncation.
```

and training later fails with this error:

```
Traceback (most recent call last):
  File "mypath/huggingfacedatasets1.py", line 47, in <module>
    trainer.train()
  File "myenv/lib/python3.8/site-packages/transformers/trainer.py", line 1396, in train
    for step, inputs in enumerate(epoch_iterator):
  File "myenv/lib/python3.8/site-packages/torch/utils/data/dataloader.py", line 517, in __next__
    data = self._next_data()
  File "myenv/lib/python3.8/site-packages/torch/utils/data/dataloader.py", line 557, in _next_data
    data = self._dataset_fetcher.fetch(index)  # may raise StopIteration
  File "myenv/lib/python3.8/site-packages/torch/utils/data/_utils/fetch.py", line 47, in fetch
    return self.collate_fn(data)
  File "myenv/lib/python3.8/site-packages/transformers/data/data_collator.py", line 66, in default_data_collator
    return torch_default_data_collator(features)
  File "myenv/lib/python3.8/site-packages/transformers/data/data_collator.py", line 130, in torch_default_data_collator
    batch[k] = torch.tensor([f[k] for f in features])
ValueError: expected sequence of length 72 at dim 1 (got 118)
```

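This bug occurs because the sequences in the same batch have different lengths: the default data collator calls torch.tensor on the list of sequences, which only works when they are all the same length. That is why the max_length argument has to be added.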
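Passing `max_length` fixes the lengths once, at `map` time. An alternative is to pad each batch only to its own longest sequence at collate time, which is what transformers' `DataCollatorWithPadding` does. The pure-Python sketch below is not the library's code: `strict_collate` and `pad_to_longest_collate` are hypothetical helpers that only contrast the two behaviors on a ragged batch, assuming every feature value is a list of ids.

```python
def strict_collate(features):
    """Simplified stand-in for default_data_collator: all values under a key
    must have the same length, since they become one rectangular tensor."""
    batch = {}
    for key in features[0]:
        rows = [f[key] for f in features]
        lengths = sorted({len(r) for r in rows})
        if len(lengths) > 1:
            raise ValueError(f"{key!r}: sequences of different lengths {lengths}")
        batch[key] = rows
    return batch

def pad_to_longest_collate(features, pad_id=0):
    """Dynamic padding: pad each sequence to the longest one in this batch.
    Assumes every value is a list of ids (e.g. input_ids, attention_mask)."""
    batch = {}
    for key in features[0]:
        rows = [list(f[key]) for f in features]
        longest = max(len(r) for r in rows)
        batch[key] = [r + [pad_id] * (longest - len(r)) for r in rows]
    return batch

ragged = [{"input_ids": [101, 7, 102]}, {"input_ids": [101, 7, 8, 9, 102]}]

try:
    strict_collate(ragged)
except ValueError as err:
    print("strict_collate failed:", err)

print(pad_to_longest_collate(ragged))
# {'input_ids': [[101, 7, 102, 0, 0], [101, 7, 8, 9, 102]]}
```

Dynamic padding wastes less computation on short batches; fixed `max_length` padding keeps every batch the same shape.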

Oddly, though, when I tried it on Colab it ran without any changes… but I could not find where the model's max_length itself is defined.

I used 512 because, when running on Colab, I checked len(tokenized_datasets['train'][0]['input_ids']) and got 512, which means the default max_length is 512 there. As for why my setup needs it set manually, who knows.

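At first I suspected this was because the pretrained_model_name_or_path I passed was a local path rather than one of the tokenizer's predefined model names (keys).

Testing suggests that is not the cause. I compared three cases:

- passing max_length manually;

- not passing max_length;

- modifying max_model_input_sizes and not passing max_length.

So I'm honestly baffled about what that attribute is even for…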

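Digging through dir(tokenizer), I found another attribute whose name also looked like a match, model_max_length. Testing showed that this one is the attribute that actually matters.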

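I did not rerun the full experiment with this finding, because I don't think it is necessary: the two cases are equivalent. (See section 2.2 of my earlier post, huggingface.transformers glossary on 诸神缄默不语's CSDN blog: tokenizer(batch_sentences, padding='max_length', truncation=True, max_length=512) fixes every sequence at length 512, while tokenizer(batch_sentences, padding='max_length', truncation=True) fixes every sequence at the model's max_length. When the model's max_length is 512, the two are equivalent.) In fact, I think passing max_length manually is probably better, since it makes the code's behavior explicit and easier to control.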
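The behavior described above can be summarized as a small decision rule. The function below is a simplified sketch, not transformers' actual code. It assumes, as in recent transformers versions, that a tokenizer loaded without a configured limit reports a huge sentinel value (`int(1e30)`) as `model_max_length`, which is what produces the "no predefined maximum length" warnings quoted earlier. `resolve_pad_length` is a hypothetical name.

```python
VERY_LARGE_INTEGER = int(1e30)  # assumed sentinel meaning "no predefined maximum length"

def resolve_pad_length(model_max_length, max_length=None):
    """Simplified sketch of how padding="max_length" picks its target length.
    Returns None when no padding would happen."""
    if max_length is not None:
        return max_length  # an explicit argument always wins
    if model_max_length >= VERY_LARGE_INTEGER:
        # matches the warning "... Default to no padding."
        return None
    return model_max_length  # fall back to tokenizer.model_max_length

print(resolve_pad_length(512))  # 512
print(resolve_pad_length(VERY_LARGE_INTEGER))  # None
print(resolve_pad_length(VERY_LARGE_INTEGER, max_length=512))  # 512
```

The first and third calls agree, which is the equivalence argued above; the explicit argument also keeps working when `model_max_length` was never set.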

To speed up training in the example code, we sample a smaller dataset:

```python
small_train_dataset = tokenized_datasets["train"].shuffle(seed=42).select(range(1000))
small_eval_dataset = tokenized_datasets["test"].shuffle(seed=42).select(range(1000))
```

4. Converting a custom dataset to the datasets format

For more details, see the official datasets documentation: https://huggingface.co/docs/datasets/v2.0.0/en/loading

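(I wrote this section mainly so that, when I use Trainer later, I can conveniently convert custom datasets to datasets.Dataset. Trainer's *_dataset arguments accept either a datasets.Dataset or a torch dataset. With a datasets.Dataset, Trainer appears to feed the columns to the model by name as the inputs it needs, and judging from this tutorial's example, list-valued columns work directly. Trainer automatically removes the other columns (the training log shows this later). With a torch dataset, I would have to work out what inputs it expects, which is a hassle. Converting directly to datasets.Dataset avoids that.)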

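Here I use the yelp_review_full data in the form of an in-memory dict object as the example (other in-memory data is handled similarly; I have not yet considered data too large to load into memory at once, and will deal with that when I run into it). Documentation for Dataset.from_dict(): https://huggingface.co/docs/datasets/v2.0.0/en/package_reference/main_classes#datasets.Dataset.from_dict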

① Convert the small_train_dataset obtained above into a dict object (keys are column names, values are the column values as lists) to use as example data:

```python
example_dict = {'label': small_train_dataset['label'], 'text': small_train_dataset['text']}
```

② Convert this dict into a Dataset:

```python
import datasets

example_dataset = datasets.Dataset.from_dict(example_dict)
example_dataset
```

```
Dataset({
    features: ['label', 'text'],
    num_rows: 1000
})
```

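A DatasetDict is essentially a dict of Datasets (typically one per split). Calling a method such as map() on a DatasetDict applies it to every Dataset it contains and returns a new DatasetDict; the original splits are not modified in place.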

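To combine Datasets into a DatasetDict (convert2Dataset is your own helper, shown here as a thin wrapper around Dataset.from_dict; train_data, valid_data and test_data are your own splits):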

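```python
from datasets import Dataset, DatasetDict

def convert2Dataset(data):  # your own helper; assumed here: dict of column name -> list
    return Dataset.from_dict(data)

# Don't name this dict `datasets`: that would shadow the datasets package
dataset_dict = DatasetDict({
    'train': convert2Dataset(train_data),
    'valid': convert2Dataset(valid_data),
    'test': convert2Dataset(test_data),
})
```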

5. Common bugs

If datasets and metrics cannot be loaded from mainland China, see my earlier post for solutions: Solutions for huggingface.datasets failing to load datasets (诸神缄默不语's CSDN blog)