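1. Introduction

The previous article covered the hardware architecture of memory. This one looks at how the kernel represents physical memory pages under the different architectures.

2. Flat Memory Model

In this model physical memory is contiguous, so physical addresses, and therefore page frame numbers, are contiguous as well. The kernel manages all of physical memory through a single global array, struct page *mem_map, in which each struct page describes the attributes of one page frame. The structure is defined as follows: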

struct page{

unsigned long flags; /* Atomic flags, some possibly

* updated asynchronously */

/*

* Five words (20/40 bytes) are available in this union.

* WARNING: bit 0 of the first word is used for PageTail(). That

* means the other users of this union MUST NOT use the bit to

* avoid collision and false-positive PageTail().

*/

union {

struct { /* Page cache and anonymous pages */

/**

* @lru: Pageout list, eg. active_list protected by

* lruvec->lru_lock. Sometimes used as a generic list

* by the page owner.

*/

union {

struct list_head lru;

/* Or, for the Unevictable "LRU list" slot */

struct {

/* Always even, to negate PageTail */

void *__filler;

/* Count page's or folio's mlocks */

unsigned int mlock_count;

};

/* Or, free page */

struct list_head buddy_list;

struct list_head pcp_list;

};

/* See page-flags.h for PAGE_MAPPING_FLAGS */

struct address_space *mapping;

union {

pgoff_t index; /* Our offset within mapping. */

unsigned long share; /* share count for fsdax */

};

/**

* @private: Mapping-private opaque data.

* Usually used for buffer_heads if PagePrivate.

* Used for swp_entry_t if PageSwapCache.

* Indicates order in the buddy system if PageBuddy.

*/

unsigned long private;

};

struct { /* page_pool used by netstack */

/**

* @pp_magic: magic value to avoid recycling non

* page_pool allocated pages.

*/

unsigned long pp_magic;

struct page_pool *pp;

unsigned long _pp_mapping_pad;

unsigned long dma_addr;

union {

/**

* dma_addr_upper: might require a 64-bit

* value on 32-bit architectures.

*/

unsigned long dma_addr_upper;

/**

* For frag page support, not supported in

* 32-bit architectures with 64-bit DMA.

*/

atomic_long_t pp_frag_count;

};

};

struct { /* Tail pages of compound page */

unsigned long compound_head; /* Bit zero is set */

/* First tail page only */

unsigned char compound_dtor;

unsigned char compound_order;

atomic_t compound_mapcount;

atomic_t subpages_mapcount;

atomic_t compound_pincount;

#ifdef CONFIG_64BIT

unsigned int compound_nr; /* 1 << compound_order */

#endif

};

struct { /* Second tail page of transparent huge page */

unsigned long _compound_pad_1; /* compound_head */

unsigned long _compound_pad_2;

/* For both global and memcg */

struct list_head deferred_list;

};

struct { /* Second tail page of hugetlb page */

unsigned long _hugetlb_pad_1; /* compound_head */

void *hugetlb_subpool;

void *hugetlb_cgroup;

void *hugetlb_cgroup_rsvd;

void *hugetlb_hwpoison;

/* No more space on 32-bit: use third tail if more */

};

struct { /* Page table pages */

unsigned long _pt_pad_1; /* compound_head */

pgtable_t pmd_huge_pte; /* protected by page->ptl */

unsigned long _pt_pad_2; /* mapping */

union {

struct mm_struct *pt_mm; /* x86 pgds only */

atomic_t pt_frag_refcount; /* powerpc */

};

#if ALLOC_SPLIT_PTLOCKS

spinlock_t *ptl;

#else

spinlock_t ptl;

#endif

};

struct { /* ZONE_DEVICE pages */

/** @pgmap: Points to the hosting device page map. */

struct dev_pagemap *pgmap;

void *zone_device_data;

/*

* ZONE_DEVICE private pages are counted as being

* mapped so the next 3 words hold the mapping, index,

* and private fields from the source anonymous or

* page cache page while the page is migrated to device

* private memory.

* ZONE_DEVICE MEMORY_DEVICE_FS_DAX pages also

* use the mapping, index, and private fields when

* pmem backed DAX files are mapped.

*/

};

/** @rcu_head: You can use this to free a page by RCU. */

struct rcu_head rcu_head;

};

union { /* This union is 4 bytes in size. */

/*

* If the page can be mapped to userspace, encodes the number

* of times this page is referenced by a page table.

*/

atomic_t _mapcount;

/*

* If the page is neither PageSlab nor mappable to userspace,

* the value stored here may help determine what this page

* is used for. See page-flags.h for a list of page types

* which are currently stored here.

*/

unsigned int page_type;

};

/* Usage count. *DO NOT USE DIRECTLY*. See page_ref.h */

atomic_t _refcount;

#ifdef CONFIG_MEMCG

unsigned long memcg_data;

#endif

/*

* On machines where all RAM is mapped into kernel address space,

* we can simply calculate the virtual address. On machines with

* highmem some memory is mapped into kernel virtual memory

* dynamically, so we need a place to store that address.

* Note that this field could be 16 bits on x86 ... ;)

*

* Architectures with slow multiplication can define

* WANT_PAGE_VIRTUAL in asm/page.h

*/

#if defined(WANT_PAGE_VIRTUAL)

void *virtual; /* Kernel virtual address (NULL if

not kmapped, ie. highmem) */

#endif /* WANT_PAGE_VIRTUAL */

#ifdef CONFIG_KMSAN

/*

* KMSAN metadata for this page:

* - shadow page: every bit indicates whether the corresponding

* bit of the original page is initialized (0) or not (1);

* - origin page: every 4 bytes contain an id of the stack trace

* where the uninitialized value was created.

*/

struct page *kmsan_shadow;

struct page *kmsan_origin;

#endif

#ifdef LAST_CPUPID_NOT_IN_PAGE_FLAGS

int _last_cpupid;

#endif

};

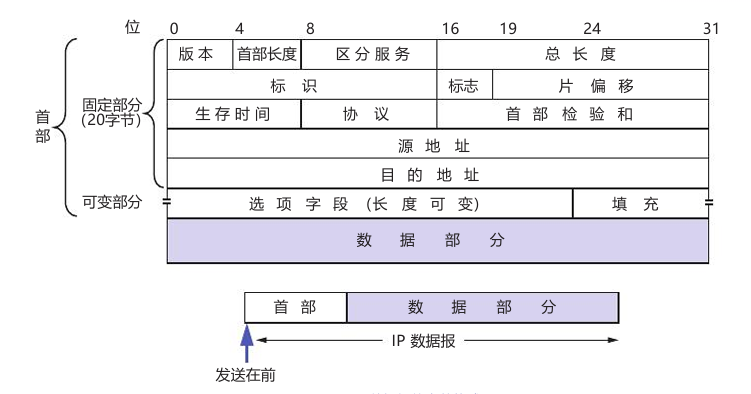
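Because mem_map is one contiguous array, converting between a page frame number (PFN) and its struct page is plain array arithmetic. The following is a minimal user-space sketch of that idea, not kernel code: the one-field struct page, NR_PAGES, and the zero ARCH_PFN_OFFSET are assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Toy stand-in for the kernel's struct page; only the array layout matters. */
struct page {
    unsigned long flags;
};

#define NR_PAGES        16UL  /* hypothetical: a tiny machine with 16 page frames */
#define ARCH_PFN_OFFSET 0UL   /* assumed PFN of the first frame in mem_map */

static struct page mem_map[NR_PAGES];

/* Page frame number -> descriptor: plain array indexing. */
static struct page *pfn_to_page(unsigned long pfn)
{
    /* A PFN below the offset wraps around and fails this check too. */
    assert(pfn - ARCH_PFN_OFFSET < NR_PAGES);
    return mem_map + (pfn - ARCH_PFN_OFFSET);
}

/* Descriptor -> page frame number: pointer subtraction. */
static unsigned long page_to_pfn(const struct page *page)
{
    return (unsigned long)(page - mem_map) + ARCH_PFN_OFFSET;
}
```

Both directions are constant-time, which is the main attraction of the flat model. The cost is that every frame in the range needs a descriptor, including frames that fall into holes.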

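2.1 unsigned long flags:

The word is split into several fields. The upper bits hold the SECTION number, the NUMA node number, and the zone number (plus, depending on configuration, further fields such as the last CPU/PID), while the low bits hold the per-page flags. The flags are defined by enum pageflags in page-flags.h and are set and tested through the accessors declared there. NR_PAGEFLAGS is the number of flag bits defined by that enum, that is, how many of the low bits of flags are taken up by page flags. It is not the width of the word: flags is an unsigned long (32 or 64 bits), and whatever NR_PAGEFLAGS leaves over is what the section, node, and zone fields have to fit into. The exact value depends on the kernel configuration.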

| FIELD | ... | FLAGS |
N-1           ^       0
         (NR_PAGEFLAGS)
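To make the layout concrete, here is a small sketch that packs a node id and a zone id into the top of a 64-bit flags word and leaves the low flag bits alone. The field widths (6 and 3 bits) and all macro and function names are made up for this example; the kernel derives the real widths and shifts from its configuration.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical field widths; the kernel computes the real ones at build time. */
#define FLAGS_BITS  64
#define NODES_WIDTH 6
#define ZONES_WIDTH 3

/* Fields are packed from the top of the word downwards. */
#define NODES_SHIFT_IN_FLAGS (FLAGS_BITS - NODES_WIDTH)
#define ZONES_SHIFT_IN_FLAGS (NODES_SHIFT_IN_FLAGS - ZONES_WIDTH)
#define NODES_FIELD_MASK ((UINT64_C(1) << NODES_WIDTH) - 1)
#define ZONES_FIELD_MASK ((UINT64_C(1) << ZONES_WIDTH) - 1)

/* Store node and zone in the upper bits without touching the low flag bits. */
static uint64_t flags_set_node_zone(uint64_t flags, unsigned node, unsigned zone)
{
    flags &= ~(NODES_FIELD_MASK << NODES_SHIFT_IN_FLAGS);
    flags &= ~(ZONES_FIELD_MASK << ZONES_SHIFT_IN_FLAGS);
    flags |= ((uint64_t)node & NODES_FIELD_MASK) << NODES_SHIFT_IN_FLAGS;
    flags |= ((uint64_t)zone & ZONES_FIELD_MASK) << ZONES_SHIFT_IN_FLAGS;
    return flags;
}

static unsigned flags_to_node(uint64_t flags)
{
    return (unsigned)((flags >> NODES_SHIFT_IN_FLAGS) & NODES_FIELD_MASK);
}

static unsigned flags_to_zone(uint64_t flags)
{
    return (unsigned)((flags >> ZONES_SHIFT_IN_FLAGS) & ZONES_FIELD_MASK);
}
```

Keeping node and zone inside flags means the kernel can find the zone a page belongs to from the struct page alone, without an extra pointer per page.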

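2.2 The first union:

Its members are split by how the page is being used. The notes below follow the comments in the source.

2.2.1 Page cache and anonymous pages:

These are the pages behind the file mappings, anonymous mappings, and heap described in the first article.

The inner union: records which list the page currently sits on, whether an LRU list, the unevictable "LRU list" slot, a buddy system free list, or a per-CPU page (pcp) list. These are all ways the kernel tracks pages; each applies in a different situation.

struct address_space *mapping: points to the address space the page belongs to, either that of a file or of an anonymous memory region. It is central to memory mapping (mmap), page cache management, and the virtual file system (VFS).

When a file is opened, the kernel builds a struct inode for it in memory, and the inode's i_mapping field points to an address_space. Each file therefore corresponds to one address_space, and an address_space together with an offset identifies one page in the page cache or swap cache.

The second union: the offset used together with address_space. index is the page's offset within the mapping, counted in pages.

unsigned long private: opaque data whose meaning depends on how the page is being used, as indicated by the page flags. It holds buffer_heads if PagePrivate is set, a swp_entry_t if PageSwapCache is set, and the block order in the buddy system if PageBuddy is set.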

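2.2.2 page_pool used by netstack

page_pool is a kernel memory pool for efficiently managing the pages that hold network packets, and it is widely used by the network stack (netstack). It gives drivers a fast way to allocate and recycle pages for packet processing, which matters most in high-throughput, heavily loaded network environments.

Its main job is to hand out pages that serve as DMA buffers for packet data. The layout distinguishes between 32-bit and 64-bit DMA addresses; as part two also noted, DMA differs between architectures.

struct page_pool *pp: points to the page_pool that owns the page. The page_pool structure holds all the state and management functions of the pool.

unsigned long dma_addr: the DMA address of the page, that is, the address the device uses to reach it. The network stack relies on DMA transfers, and this field holds the address handed to the hardware. Because it is an unsigned long, it is only 32 bits wide on 32-bit architectures.

unsigned long dma_addr_upper: used only on 32-bit architectures that need 64-bit DMA addresses. It holds the upper half of the address, so that pages whose DMA address exceeds the 32-bit limit can still be described.

atomic_long_t pp_frag_count: shares a union with dma_addr_upper and supports page fragments, where one page is split into smaller pieces that are handed out separately. It is an atomic type (atomic_long_t) so that it can be updated safely from concurrent contexts, and it counts the fragments of the page that are still in use. Because it occupies the same storage as dma_addr_upper, fragment support is not available on 32-bit architectures with 64-bit DMA.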
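The split between dma_addr and dma_addr_upper can be illustrated with a short sketch. It models a 32-bit machine by using uint32_t for both words; struct pp_page and the two helper names are hypothetical and are not the page_pool API.

```c
#include <assert.h>
#include <stdint.h>

/* Models the two words on a 32-bit machine, where unsigned long is 32 bits. */
struct pp_page {
    uint32_t dma_addr;        /* lower 32 bits of the DMA address */
    uint32_t dma_addr_upper;  /* upper 32 bits, needed only for 64-bit DMA */
};

/* Split a 64-bit DMA address across the two 32-bit words. */
static void pp_set_dma_addr(struct pp_page *page, uint64_t addr)
{
    page->dma_addr = (uint32_t)addr;
    page->dma_addr_upper = (uint32_t)(addr >> 32);
}

/* Reassemble the full 64-bit DMA address. */
static uint64_t pp_get_dma_addr(const struct pp_page *page)
{
    return ((uint64_t)page->dma_addr_upper << 32) | page->dma_addr;
}
```

On a 64-bit machine the whole address fits into dma_addr, which is why the second word is then free to hold pp_frag_count.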

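2.2.3 Tail pages of compound page

A compound page is a group of physically contiguous pages that the kernel treats as one large page. It is used wherever an allocation needs a large block of contiguous physical memory. The first page is the head page; the rest are tail pages.

unsigned long compound_head: in a tail page, holds the address of the head page's struct page (not a physical address) with the least significant bit (bit 0) set to 1. The bit marks the structure as a tail page, so by testing it the kernel can tell a tail page from an ordinary one, and clearing it recovers the pointer to the head.

unsigned char compound_dtor: present only in the first tail page. It is not a pointer but a small index that selects the destructor ("dtor") to call when the compound page is freed, so that any necessary cleanup is done.

unsigned char compound_order: present only in the first tail page. Stores the order n of the compound page, which consists of 2^n pages.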
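The bit-0 trick in compound_head is easy to try out in user space. The sketch below is a simplified model under stated assumptions: the two-field struct page is a toy (in the kernel these fields overlay other union members), uintptr_t replaces unsigned long for portability, and prep_compound_page here only mimics the idea of the kernel function of the same name.

```c
#include <assert.h>
#include <stdint.h>

/* Toy descriptor with only the two fields discussed above. */
struct page {
    uintptr_t compound_head;       /* tail pages: head address with bit 0 set */
    unsigned char compound_order;  /* meaningful in the first tail page only */
};

/* A page is a tail page exactly when bit 0 of compound_head is set. */
static int page_is_tail(const struct page *page)
{
    return (int)(page->compound_head & 1);
}

/* Return the head page; a head or ordinary page is its own head. */
static struct page *compound_head(struct page *page)
{
    uintptr_t head = page->compound_head;

    return (head & 1) ? (struct page *)(head - 1) : page;
}

/* Turn 2^order consecutive descriptors into one compound page (order >= 1). */
static void prep_compound_page(struct page *pages, unsigned order)
{
    unsigned long i, nr = 1UL << order;

    assert(order >= 1);
    pages[0].compound_head = 0;
    pages[1].compound_order = (unsigned char)order;
    for (i = 1; i < nr; i++)
        pages[i].compound_head = (uintptr_t)&pages[0] | 1;
}

/* Number of base pages in the compound page that contains 'page'. */
static unsigned long compound_nr(struct page *page)
{
    return 1UL << compound_head(page)[1].compound_order;
}
```

The trick works because struct page is aligned to at least a word, so bit 0 of its address is always zero and can be borrowed as a flag.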

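2.2.4 Second tail page of transparent huge page

Transparent Huge Pages (THP) let the kernel back large memory regions with huge pages without the application asking for them. Fewer, larger mappings mean fewer TLB misses and less page table overhead, which improves performance. A transparent huge page is built from many base pages (typically 4 KB) that together form a larger page (for example 2 MB). In memory it exists as a compound page with one head page and many tail pages. The second tail page carries deferred_list, which links the huge page into a deferred split queue.

2.2.5 Second tail page of hugetlb page

hugetlb pages are the kernel's mechanism for explicitly managed huge pages, which make large allocations more efficient. A hugetlb page consists of a head page and many tail pages, and the tail pages store bookkeeping for the huge page. The second tail page holds the subpool pointer, the cgroup pointers, and the hardware-poison information.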

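2.2.6 Page table pages

This member describes pages that themselves hold page tables. Page tables map virtual addresses to physical addresses. The fields here carry the bookkeeping for such a page, and which of them are used depends on the architecture and the kernel configuration.

pgtable_t pmd_huge_pte: despite the name, its type is pgtable_t, a handle to a page table rather than a single entry. It is used in handling huge pages mapped at the PMD level and is protected by page->ptl, which keeps access to it safe under concurrency.

union:

pt_mm: on x86, points to the mm_struct (the memory descriptor of a process) that the page table belongs to. Per the source comment it is used for x86 pgds only.

pt_frag_refcount: on powerpc, an atomic reference count for page table fragments, used to track how many fragments of the page are still in use.

spinlock_t *ptl and spinlock_t ptl: the page table lock protects the page table against concurrent access. Which form is used depends on ALLOC_SPLIT_PTLOCKS, which is tested with #if and so selects on its value rather than on whether it is defined:

When ALLOC_SPLIT_PTLOCKS is non-zero: ptl is a pointer to a separately allocated lock. This is the case when spinlock_t is too large to fit in the space available, for example with lock debugging enabled.

When ALLOC_SPLIT_PTLOCKS is zero: the spinlock_t is embedded directly in struct page.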

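2.2.7 ZONE_DEVICE pages

ZONE_DEVICE pages describe memory that belongs to a device rather than ordinary system RAM, for example persistent memory exposed through DAX or device private memory. They let the kernel map and manage such memory through the usual struct page machinery.

struct dev_pagemap *pgmap: points to the dev_pagemap that hosts the page. dev_pagemap describes the device memory region the page belongs to and carries the details needed to manage and access it.

void *zone_device_data: a pointer to device-specific data; what it holds depends on the type of device memory and how it is used.

The three words that follow hold the mapping, index, and private fields. ZONE_DEVICE private pages count as mapped, so while a page is migrated to device private memory these words keep the values from the source anonymous or page cache page. MEMORY_DEVICE_FS_DAX pages use the same fields when pmem-backed DAX files are mapped.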

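2.3 The second union:

Holds one of two pieces of information, depending on the type and use of the page.

atomic_t _mapcount: an atomic counter of how many page table entries reference the page. It is meaningful for pages that can be mapped into user space, and it helps the kernel decide when a page is no longer mapped and can be reclaimed.

unsigned int page_type: if the page is neither a slab page (PageSlab) nor mappable into user space, this field records what the page is used for. The page types stored here are listed in page-flags.h.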
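One detail about _mapcount is easy to miss: the stored value is biased by one, so an unmapped page holds -1 and the number of mappings is the stored value plus 1. The sketch below models that with C11 atomics. page_mapcount_reset and page_mapcount borrow the names of kernel helpers, while toy_map_page and toy_unmap_page are hypothetical stand-ins for the reverse-mapping code that really updates the counter.

```c
#include <assert.h>
#include <stdatomic.h>

/* Toy descriptor holding only the mapping counter. */
struct page {
    atomic_int _mapcount;  /* number of page table references, minus one */
};

/* An unmapped page stores -1, not 0. */
static void page_mapcount_reset(struct page *page)
{
    atomic_store(&page->_mapcount, -1);
}

/* The visible mapping count undoes the -1 bias. */
static int page_mapcount(struct page *page)
{
    return atomic_load(&page->_mapcount) + 1;
}

/* Hypothetical helper: one more page table entry points at the page. */
static void toy_map_page(struct page *page)
{
    atomic_fetch_add(&page->_mapcount, 1);
}

/* Hypothetical helper: one page table entry was removed. */
static void toy_unmap_page(struct page *page)
{
    atomic_fetch_sub(&page->_mapcount, 1);
}
```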

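2.4 atomic_t _refcount:

The usage (reference) count: the number of references the kernel holds to the page. Code that wants to use the page takes a reference, raising the count by 1, and drops it when done, lowering it by 1. When the count reaches 0 nothing refers to the page any more and it can be freed, which is what memory reclaim relies on. The field must not be modified directly; use the helper functions defined in page_ref.h.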
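The take-a-reference, drop-a-reference pattern can be sketched with C11 atomics. The function names below mirror helpers from page_ref.h, but the bodies are simplified user-space stand-ins written for this example, not the kernel implementations.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Toy descriptor holding only the reference count. */
struct page {
    atomic_int _refcount;
};

/* Initialise the count, e.g. to 1 for a freshly allocated page. */
static void set_page_count(struct page *page, int v)
{
    atomic_store(&page->_refcount, v);
}

static int page_ref_count(struct page *page)
{
    return atomic_load(&page->_refcount);
}

/* Take a reference before using the page. */
static void page_ref_inc(struct page *page)
{
    atomic_fetch_add(&page->_refcount, 1);
}

/*
 * Drop a reference. Returns true when this was the last one, in which
 * case the caller is responsible for freeing the page.
 */
static bool page_ref_dec_and_test(struct page *page)
{
    /* atomic_fetch_sub returns the value before the subtraction. */
    return atomic_fetch_sub(&page->_refcount, 1) == 1;
}
```

Doing the decrement and the zero test in one atomic step matters: if they were separate, two CPUs dropping their references at the same time could both, or neither, conclude that they held the last one.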

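2.5 void *virtual:

Stores the kernel virtual address of the page. On machines where all RAM is mapped into the kernel address space the address can simply be calculated, so no field is needed. On highmem machines part of memory is not permanently mapped but is mapped into kernel virtual memory dynamically, so the address has to be stored somewhere; the field is NULL while such a page is not mapped. The field exists only when WANT_PAGE_VIRTUAL is defined, which architectures with slow multiplication can also do as an optimization to avoid the calculation.

2.6 struct page *kmsan_shadow and struct page *kmsan_origin:

Compiled in only with CONFIG_KMSAN. The Kernel Memory Sanitizer (KMSAN) is a tool that detects uses of uninitialized memory in the kernel and helps developers track such bugs down. These two fields point to its metadata for the page: in the shadow page every bit says whether the corresponding bit of the original page is initialized (0) or not (1), and in the origin page every 4 bytes hold an id of the stack trace where the uninitialized value was created.

2.7 int _last_cpupid:

Stores last_cpupid, an integer that encodes the CPU and process ID that last accessed the page. NUMA balancing uses it when deciding where a page should be placed. The field exists only when LAST_CPUPID_NOT_IN_PAGE_FLAGS is defined, that is, when the value does not fit into flags.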

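3. Sparse Memory Model

Under the SPARSEMEM model, physical memory is divided into fixed-size sections, each described by a struct mem_section that carries the management information for that block. A section that contains no memory needs no struct page array, which is what lets this model cope with holes in the physical address space.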

struct mem_section {

/*

* This is, logically, a pointer to an array of struct

* pages. However, it is stored with some other magic.

* (see sparse.c::sparse_init_one_section())

*

* Additionally during early boot we encode node id of

* the location of the section here to guide allocation.

* (see sparse.c::memory_present())

*

* Making it a UL at least makes someone do a cast

* before using it wrong.

*/

unsigned long section_mem_map;

struct mem_section_usage *usage;

#ifdef CONFIG_PAGE_EXTENSION

/*

* If SPARSEMEM, pgdat doesn't have page_ext pointer. We use

* section. (see page_ext.h about this.)

*/

struct page_ext *page_ext;

unsigned long pad;

#endif

/*

* WARNING: mem_section must be a power-of-2 in size for the

* calculation and use of SECTION_ROOT_MASK to make sense.

*/

};

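3.1 unsigned long section_mem_map:

Logically a pointer to the section's array of struct page, but stored in encoded form (see sparse.c::sparse_init_one_section()). During early boot it also encodes the node id of the section, to guide allocation (see sparse.c::memory_present()). Declaring it as unsigned long forces a cast before use, which makes it harder to use wrongly by accident.

3.2 struct mem_section_usage *usage:

Points to a mem_section_usage structure that describes how the section is being used.

3.3 struct page_ext *page_ext:

Under SPARSEMEM, pgdat has no page_ext pointer, so the pointer is kept in the section instead. It points to the page_ext structures, which hold extended per-page management information. The field exists only with CONFIG_PAGE_EXTENSION.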
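The lookup that replaces the single mem_map array can be sketched as a two-step translation: the upper bits of the PFN select a section, and the lower bits index into that section's own struct page array. The geometry (16 pages per section, 8 sections) and the helper toy_add_section are assumptions for illustration, and the sketch stores a plain pointer where the kernel stores the encoded section_mem_map.

```c
#include <assert.h>
#include <stddef.h>

/* Toy stand-in for the kernel's struct page. */
struct page {
    unsigned long flags;
};

/* Hypothetical toy geometry: 16 pages per section, 8 sections in total. */
#define PFN_SECTION_SHIFT 4
#define PAGES_PER_SECTION (1UL << PFN_SECTION_SHIFT)
#define NR_MEM_SECTIONS   8UL

/* Simplified section: a plain pointer instead of the encoded unsigned long. */
struct mem_section {
    struct page *mem_map;  /* NULL when the section contains no memory */
};

static struct mem_section sections[NR_MEM_SECTIONS];

static unsigned long pfn_to_section_nr(unsigned long pfn)
{
    return pfn >> PFN_SECTION_SHIFT;
}

/* A PFN is valid only if its section exists and has a page array. */
static int pfn_valid(unsigned long pfn)
{
    unsigned long nr = pfn_to_section_nr(pfn);

    return nr < NR_MEM_SECTIONS && sections[nr].mem_map != NULL;
}

/* Two steps: pick the section, then index into that section's array. */
static struct page *pfn_to_page(unsigned long pfn)
{
    if (!pfn_valid(pfn))
        return NULL;
    return sections[pfn_to_section_nr(pfn)].mem_map +
           (pfn & (PAGES_PER_SECTION - 1));
}

/* Hypothetical helper: register the page array of one populated section. */
static void toy_add_section(unsigned long nr, struct page *map)
{
    assert(nr < NR_MEM_SECTIONS);
    sections[nr].mem_map = map;
}
```

Only populated sections pay for a struct page array, so a hole costs one empty section entry rather than a run of unused descriptors.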

四、CPU和物理内存架构

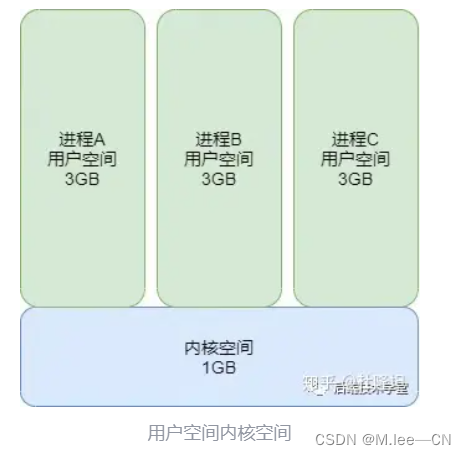

从宏观上来看,NUMA架构就是一堆UMA架构访问模型组成的一个完整的CPU访问架构。所以和物理模型一样,可以使用一个大的数组来表示UMA。通过UMA的各内存区域来表示NUMA。NUMA架构的描述结构体是struct pglist_data。

typedef struct pglist_data {

/*

* node_zones contains just the zones for THIS node. Not all of the

* zones may be populated, but it is the full list. It is referenced by

* this node's node_zonelists as well as other node's node_zonelists.

*/

struct zone node_zones[MAX_NR_ZONES];

/*

* node_zonelists contains references to all zones in all nodes.

* Generally the first zones will be references to this node's

* node_zones.

*/

struct zonelist node_zonelists[MAX_ZONELISTS];

int nr_zones; /* number of populated zones in this node */

#ifdef CONFIG_FLATMEM /* means !SPARSEMEM */

struct page *node_mem_map;

#ifdef CONFIG_PAGE_EXTENSION

struct page_ext *node_page_ext;

#endif

#endif

#if defined(CONFIG_MEMORY_HOTPLUG) || defined(CONFIG_DEFERRED_STRUCT_PAGE_INIT)

/*

* Must be held any time you expect node_start_pfn,

* node_present_pages, node_spanned_pages or nr_zones to stay constant.

* Also synchronizes pgdat->first_deferred_pfn during deferred page

* init.

*

* pgdat_resize_lock() and pgdat_resize_unlock() are provided to

* manipulate node_size_lock without checking for CONFIG_MEMORY_HOTPLUG

* or CONFIG_DEFERRED_STRUCT_PAGE_INIT.

*

* Nests above zone->lock and zone->span_seqlock

*/

spinlock_t node_size_lock;

#endif

unsigned long node_start_pfn;

unsigned long node_present_pages; /* total number of physical pages */

unsigned long node_spanned_pages; /* total size of physical page

range, including holes */

int node_id;

wait_queue_head_t kswapd_wait;

wait_queue_head_t pfmemalloc_wait;

/* workqueues for throttling reclaim for different reasons. */

wait_queue_head_t reclaim_wait[NR_VMSCAN_THROTTLE];

atomic_t nr_writeback_throttled;/* nr of writeback-throttled tasks */

unsigned long nr_reclaim_start; /* nr pages written while throttled

* when throttling started. */

#ifdef CONFIG_MEMORY_HOTPLUG

struct mutex kswapd_lock;

#endif

struct task_struct *kswapd; /* Protected by kswapd_lock */

int kswapd_order;

enum zone_type kswapd_highest_zoneidx;

int kswapd_failures; /* Number of 'reclaimed == 0' runs */

#ifdef CONFIG_COMPACTION

int kcompactd_max_order;

enum zone_type kcompactd_highest_zoneidx;

wait_queue_head_t kcompactd_wait;

struct task_struct *kcompactd;

bool proactive_compact_trigger;

#endif

/*

* This is a per-node reserve of pages that are not available

* to userspace allocations.

*/

unsigned long totalreserve_pages;

#ifdef CONFIG_NUMA

/*

* node reclaim becomes active if more unmapped pages exist.

*/

unsigned long min_unmapped_pages;

unsigned long min_slab_pages;

#endif /* CONFIG_NUMA */

/* Write-intensive fields used by page reclaim */

CACHELINE_PADDING(_pad1_);

#ifdef CONFIG_DEFERRED_STRUCT_PAGE_INIT

/*

* If memory initialisation on large machines is deferred then this

* is the first PFN that needs to be initialised.

*/

unsigned long first_deferred_pfn;

#endif /* CONFIG_DEFERRED_STRUCT_PAGE_INIT */

#ifdef CONFIG_TRANSPARENT_HUGEPAGE

struct deferred_split deferred_split_queue;

#endif

#ifdef CONFIG_NUMA_BALANCING

/* start time in ms of current promote rate limit period */

unsigned int nbp_rl_start;

/* number of promote candidate pages at start time of current rate limit period */

unsigned long nbp_rl_nr_cand;

/* promote threshold in ms */

unsigned int nbp_threshold;

/* start time in ms of current promote threshold adjustment period */

unsigned int nbp_th_start;

/*

* number of promote candidate pages at start time of current promote

* threshold adjustment period

*/

unsigned long nbp_th_nr_cand;

#endif

/* Fields commonly accessed by the page reclaim scanner */

/*

* NOTE: THIS IS UNUSED IF MEMCG IS ENABLED.

*

* Use mem_cgroup_lruvec() to look up lruvecs.

*/

struct lruvec __lruvec;

unsigned long flags;

#ifdef CONFIG_LRU_GEN

/* kswap mm walk data */

struct lru_gen_mm_walk mm_walk;

#endif

CACHELINE_PADDING(_pad2_);

/* Per-node vmstats */

struct per_cpu_nodestat __percpu *per_cpu_nodestats;

atomic_long_t vm_stat[NR_VM_NODE_STAT_ITEMS];

#ifdef CONFIG_NUMA

struct memory_tier __rcu *memtier;

#endif

} pg_data_t;

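4.1 struct zone node_zones[MAX_NR_ZONES]:

The memory zones of this node. Every node has an array of MAX_NR_ZONES zone structures; it is the full list, although not every zone in it need be populated.

4.2 struct zonelist node_zonelists[MAX_ZONELISTS]:

References to all zones in all nodes, that is, this node's own zones followed by the fallback NUMA nodes and their zones. The entries are ordered by access distance, nearest first, so that when the local node cannot satisfy an allocation the cheapest alternative is tried next.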
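The effect of that ordering is that an allocation walks the list front to back and takes the first zone that can satisfy it. Here is a minimal sketch of that fallback walk. The struct layouts, MAX_ZONELIST_ENTRIES, and toy_alloc_from_zonelist are invented for this example, and the free-page test is a simplification; the real allocator compares against zone watermarks instead.

```c
#include <assert.h>
#include <stddef.h>

/* Toy zone: just enough state to decide whether an allocation fits. */
struct zone {
    const char *name;
    int node;
    unsigned long free_pages;
};

#define MAX_ZONELIST_ENTRIES 8  /* hypothetical capacity */

/* NULL-terminated list of zones, ordered nearest node first. */
struct zonelist {
    struct zone *zones[MAX_ZONELIST_ENTRIES + 1];
};

/*
 * Walk the list in order and take pages from the first zone that has
 * enough free ones. Returns the zone used, or NULL if none can serve.
 */
static struct zone *toy_alloc_from_zonelist(struct zonelist *zl,
                                            unsigned long nr_pages)
{
    for (size_t i = 0; zl->zones[i] != NULL; i++) {
        struct zone *z = zl->zones[i];

        if (z->free_pages >= nr_pages) {
            z->free_pages -= nr_pages;
            return z;
        }
    }
    return NULL;
}
```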

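4.3 struct page *node_mem_map:

Under the flat memory model, this array is used directly to manage the node's pages. Under the sparse memory model the field is absent and the per-section management information lives in mem_section; a zone is tied to its mem_section entries indirectly, through page frame numbers (PFNs).

4.4 unsigned long node_start_pfn:

The first page frame number (PFN) of the node's memory.

4.5 unsigned long node_present_pages:

The number of physical pages actually present in the node, excluding holes.

4.6 unsigned long node_spanned_pages:

The total size of the node's physical page range, in pages, including holes.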
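The relationship between the three fields is simple arithmetic, and a worked example makes the difference between "spanned" and "present" concrete. The sketch below computes them from a list of memory ranges. The types and toy_calc_node_span are hypothetical, and the input is assumed to be sorted and non-overlapping.

```c
#include <assert.h>
#include <stddef.h>

/* One contiguous run of page frames, as the half-open range [start, end). */
struct pfn_range {
    unsigned long start_pfn;
    unsigned long end_pfn;
};

struct node_span {
    unsigned long node_start_pfn;
    unsigned long node_spanned_pages;  /* first to last frame, holes included */
    unsigned long node_present_pages;  /* frames that really exist */
};

/* Ranges are assumed to be sorted by start_pfn and non-overlapping. */
static struct node_span toy_calc_node_span(const struct pfn_range *r, size_t n)
{
    struct node_span s = {0, 0, 0};

    if (n == 0)
        return s;
    s.node_start_pfn = r[0].start_pfn;
    s.node_spanned_pages = r[n - 1].end_pfn - r[0].start_pfn;
    for (size_t i = 0; i < n; i++)
        s.node_present_pages += r[i].end_pfn - r[i].start_pfn;
    return s;
}
```

For a node with frames 0x100 to 0x1ff and 0x300 to 0x37f, the span is 0x280 pages but only 0x180 are present; the difference of 0x100 pages is the hole between the two ranges.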

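5. Memory Zones

struct zone describes a memory zone. Every NUMA node contains several zones, such as the DMA zone and the normal zone, and the fields and flags in struct zone manage and track the state and attributes of each one.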

struct zone {

/* Read-mostly fields */

/* zone watermarks, access with *_wmark_pages(zone) macros */

unsigned long _watermark[NR_WMARK];

unsigned long watermark_boost;

unsigned long nr_reserved_highatomic;

/*

* We don't know if the memory that we're going to allocate will be

* freeable or/and it will be released eventually, so to avoid totally

* wasting several GB of ram we must reserve some of the lower zone

* memory (otherwise we risk to run OOM on the lower zones despite

* there being tons of freeable ram on the higher zones). This array is

* recalculated at runtime if the sysctl_lowmem_reserve_ratio sysctl

* changes.

*/

long lowmem_reserve[MAX_NR_ZONES];

#ifdef CONFIG_NUMA

int node;

#endif

struct pglist_data *zone_pgdat;

struct per_cpu_pages __percpu *per_cpu_pageset;

struct per_cpu_zonestat __percpu *per_cpu_zonestats;

/*

* the high and batch values are copied to individual pagesets for

* faster access

*/

int pageset_high;

int pageset_batch;

#ifndef CONFIG_SPARSEMEM

/*

* Flags for a pageblock_nr_pages block. See pageblock-flags.h.

* In SPARSEMEM, this map is stored in struct mem_section

*/

unsigned long *pageblock_flags;

#endif /* CONFIG_SPARSEMEM */

/* zone_start_pfn == zone_start_paddr >> PAGE_SHIFT */

unsigned long zone_start_pfn;

/*

* spanned_pages is the total pages spanned by the zone, including

* holes, which is calculated as:

* spanned_pages = zone_end_pfn - zone_start_pfn;

*

* present_pages is physical pages existing within the zone, which

* is calculated as:

* present_pages = spanned_pages - absent_pages(pages in holes);

*

* present_early_pages is present pages existing within the zone

* located on memory available since early boot, excluding hotplugged

* memory.

*

* managed_pages is present pages managed by the buddy system, which

* is calculated as (reserved_pages includes pages allocated by the

* bootmem allocator):

* managed_pages = present_pages - reserved_pages;

*

* cma pages is present pages that are assigned for CMA use

* (MIGRATE_CMA).

*

* So present_pages may be used by memory hotplug or memory power

* management logic to figure out unmanaged pages by checking

* (present_pages - managed_pages). And managed_pages should be used

* by page allocator and vm scanner to calculate all kinds of watermarks

* and thresholds.

*

* Locking rules:

*

* zone_start_pfn and spanned_pages are protected by span_seqlock.

* It is a seqlock because it has to be read outside of zone->lock,

* and it is done in the main allocator path. But, it is written

* quite infrequently.

*

* The span_seq lock is declared along with zone->lock because it is

* frequently read in proximity to zone->lock. It's good to

* give them a chance of being in the same cacheline.

*

* Write access to present_pages at runtime should be protected by

* mem_hotplug_begin/done(). Any reader who can't tolerant drift of

* present_pages should use get_online_mems() to get a stable value.

*/

atomic_long_t managed_pages;

unsigned long spanned_pages;

unsigned long present_pages;

#if defined(CONFIG_MEMORY_HOTPLUG)

unsigned long present_early_pages;

#endif

#ifdef CONFIG_CMA

unsigned long cma_pages;

#endif

const char *name;

#ifdef CONFIG_MEMORY_ISOLATION

/*

* Number of isolated pageblock. It is used to solve incorrect

* freepage counting problem due to racy retrieving migratetype

* of pageblock. Protected by zone->lock.

*/

unsigned long nr_isolate_pageblock;

#endif

#ifdef CONFIG_MEMORY_HOTPLUG

/* see spanned/present_pages for more description */

seqlock_t span_seqlock;

#endif

int initialized;

/* Write-intensive fields used from the page allocator */

CACHELINE_PADDING(_pad1_);

/* free areas of different sizes */

struct free_area free_area[MAX_ORDER];

/* zone flags, see below */

unsigned long flags;

/* Primarily protects free_area */

spinlock_t lock;

/* Write-intensive fields used by compaction and vmstats. */

CACHELINE_PADDING(_pad2_);

/*

* When free pages are below this point, additional steps are taken

* when reading the number of free pages to avoid per-cpu counter

* drift allowing watermarks to be breached

*/

unsigned long percpu_drift_mark;

#if defined CONFIG_COMPACTION || defined CONFIG_CMA

/* pfn where compaction free scanner should start */

unsigned long compact_cached_free_pfn;

/* pfn where compaction migration scanner should start */

unsigned long compact_cached_migrate_pfn[ASYNC_AND_SYNC];

unsigned long compact_init_migrate_pfn;

unsigned long compact_init_free_pfn;

#endif

#ifdef CONFIG_COMPACTION

/*

* On compaction failure, 1<<compact_defer_shift compactions

* are skipped before trying again. The number attempted since

* last failure is tracked with compact_considered.

* compact_order_failed is the minimum compaction failed order.

*/

unsigned int compact_considered;

unsigned int compact_defer_shift;

int compact_order_failed;

#endif

#if defined CONFIG_COMPACTION || defined CONFIG_CMA

/* Set to true when the PG_migrate_skip bits should be cleared */

bool compact_blockskip_flush;

#endif

bool contiguous;

CACHELINE_PADDING(_pad3_);

/* Zone statistics */

atomic_long_t vm_stat[NR_VM_ZONE_STAT_ITEMS];

atomic_long_t vm_numa_event[NR_VM_NUMA_EVENT_ITEMS];

} ____cacheline_internodealigned_in_smp;

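The comments in this structure are thorough, and its layout closely resembles the structures above. Two details are worth a closer look: CACHELINE_PADDING and ____cacheline_internodealigned_in_smp.

5.1 CACHELINE_PADDING:

The CACHELINE_PADDING macro inserts padding so that the groups of fields on either side of it start on different cache lines, which avoids false sharing between them. False sharing occurs when CPUs write to different variables that happen to share a cache line: each write invalidates the line in the other CPUs' caches, and the resulting cache-coherence traffic hurts performance even though no data is actually shared.

5.2 ____cacheline_internodealigned_in_smp:

Aligns the structure itself to a cache line boundary on multiprocessor (SMP) builds, again to reduce false sharing and speed up access.

False sharing on multiprocessor systems can cost a lot of performance, and struct zone is accessed by many CPUs, so the kernel applies this attribute to keep each zone from sharing a cache line with its neighbours. The "internode" in the name refers to the inter-node cache line size, which on some platforms is larger than the ordinary L1 cache line.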
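The effect of both mechanisms can be reproduced in portable C11 with alignas, as in the sketch below. This is an analogy rather than the kernel's implementation: struct toy_zone, its fields, and the 64-byte TOY_CACHELINE are assumptions, and the kernel uses compiler attributes and a zero-sized padding member instead of alignas.

```c
#include <assert.h>
#include <stdalign.h>
#include <stddef.h>

#define TOY_CACHELINE 64  /* assumed cache line size in bytes */

struct toy_zone {
    /* Read-mostly field. */
    unsigned long watermark;

    /* Write-intensive field used by the allocator: starts a new line. */
    alignas(TOY_CACHELINE) unsigned long nr_free;

    /* Write-intensive statistics: starts yet another line. */
    alignas(TOY_CACHELINE) unsigned long vm_stat;
};

/* Index of the cache line, relative to the struct start, holding 'offset'. */
static size_t toy_line_of(size_t offset)
{
    return offset / TOY_CACHELINE;
}
```

The price is size: three unsigned long fields now occupy three full cache lines. That trade is worthwhile only for a small number of hot, heavily shared structures, which is the situation struct zone is in.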
