Linear Regression

Parts of this post draw on other blogs found online.

Exercise 1: Single Variable

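First, import the required libraries:

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
```

Read the data and show the first five rows:

```python
# path: location of the single-variable data file (not given in the original)
data = pd.read_csv(path, header=None, names=["Population", "Profit"])
data.head()
```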

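Next, draw a scatter plot to see how the data are distributed, using Population for the x axis and Profit for the y axis:

```python
data.plot(kind="scatter", x="Population", y="Profit", figsize=(12, 8))
plt.show()
```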

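Add a column of ones so the design matrix can be built:

```python
# add an x0 column of ones (the intercept term)
data.insert(0, "x0", 1)
data.head()
```

Check how many columns the data has, then slice the corresponding columns to build the X and Y matrices, and create a zero vector for theta: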

```python
cols = data.shape[1]
X = data.iloc[:, 0:cols-1]     # x0 and Population
Y = data.iloc[:, cols-1:cols]  # Profit
X = np.matrix(X.values)
Y = np.matrix(Y.values)
```

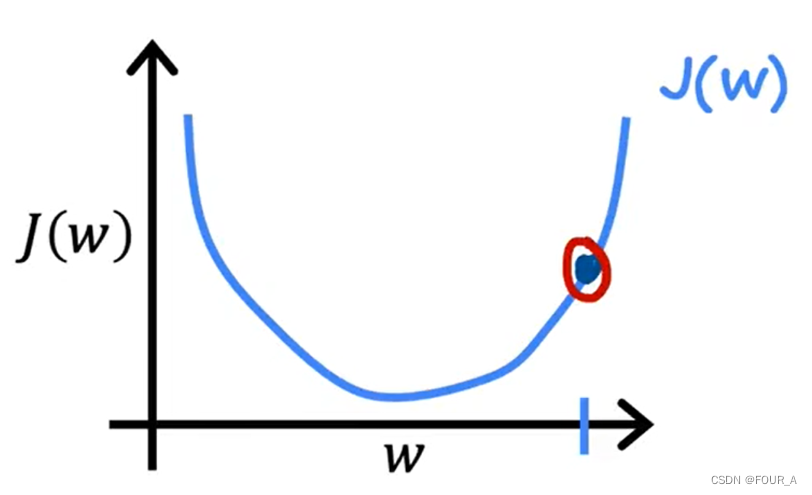
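To see why the x0 column of ones is needed, here is a small sketch on made-up numbers (the values of `x`, `theta0` and `theta1` are hypothetical). With x0 = 1 prepended, the single matrix product Xθ yields θ0 + θ1·x for every row, so the intercept is handled by the same multiplication as the slope.

```python
import numpy as np

# hypothetical populations (one feature) and parameters, for illustration only
x = np.array([6.1, 5.5, 8.5])
theta0, theta1 = -3.0, 1.2

# prepend the x0 = 1 column, as data.insert(0, "x0", 1) does
X = np.column_stack([np.ones_like(x), x])
theta = np.array([[theta0], [theta1]])

pred_matrix = X @ theta            # shape (3, 1)
pred_direct = theta0 + theta1 * x  # shape (3,)

print(X.shape)                                        # (3, 2)
print(np.allclose(pred_matrix.ravel(), pred_direct))  # True
```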

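```python
theta = np.zeros((2, 1))
```

Next, build the cost function. The cost function is

$$
J(\theta) = \frac{1}{2m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2, \qquad h_\theta(x) = \theta_0 + \theta_1 x
$$

where $m$ is the number of training examples.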
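As a hand-worked instance of the cost function $J(\theta)=\frac{1}{2m}\sum_{i=1}^{m}(\theta_0+\theta_1x^{(i)}-y^{(i)})^2$, take two made-up training examples, $x^{(1)}=1,\ y^{(1)}=1$ and $x^{(2)}=2,\ y^{(2)}=3$, with $\theta=(0,1)^T$. The predictions are 1 and 2, so the errors are 0 and $-1$:

$$
J(\theta)=\frac{1}{2\cdot 2}\left[(0+1\cdot 1-1)^2+(0+1\cdot 2-3)^2\right]=\frac{1}{4}(0+1)=0.25
$$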

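```python
def cost_func(X, Y, theta):
    # squared residual for every training example (X is an np.matrix, so * is a matrix product)
    cost_matrix = np.power(X * theta - Y, 2)
    # J(theta) = sum of squared residuals / (2m)
    cost_finite = np.sum(cost_matrix) / (2 * len(X))
    return cost_finite
```

Next, build the gradient-descent function: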

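```python
def gradient_descent(X, Y, theta, alpha, times):
    m = len(X)
    for i in range(times):
        error = X * theta - Y  # residuals h(x) - y, shape (m, 1)
        # compute both new values first so that theta0 and theta1 are updated simultaneously
        temp0 = theta[0, 0] - alpha / m * np.sum(error)
        temp1 = theta[1, 0] - alpha / m * np.sum(np.multiply(error, X[:, 1]))  # X[:, 1] is the Population column
        theta[0, 0] = temp0
        theta[1, 0] = temp1
    return theta  # theta is also updated in place
```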
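As a sanity check of the update rule, the sketch below runs the element-wise simultaneous update (the same scheme as `gradient_descent` above, but on plain NumPy arrays instead of `np.matrix`) next to the vectorized form θ := θ − (α/m)Xᵀ(Xθ − y). The data are synthetic, generated from y = 1 + 2x without noise, and `alpha` and the iteration count are assumptions chosen for illustration. Both forms should give the same θ, and the cost should fall.

```python
import numpy as np

def cost(X, y, theta):
    # J(theta) = 1/(2m) * sum of squared residuals
    return float(np.sum((X @ theta - y) ** 2) / (2 * len(X)))

# synthetic single-feature data from y = 1 + 2x (no noise), for illustration only
x = np.linspace(0.0, 5.0, 20)
X = np.column_stack([np.ones_like(x), x])
y = (1.0 + 2.0 * x).reshape(-1, 1)

alpha, iters, m = 0.02, 3000, len(X)
theta_loop = np.zeros((2, 1))
theta_vec = np.zeros((2, 1))
costs = []

for _ in range(iters):
    # element-wise form: compute both new values before assigning (simultaneous update)
    error = X @ theta_loop - y
    temp0 = theta_loop[0, 0] - alpha / m * np.sum(error)
    temp1 = theta_loop[1, 0] - alpha / m * np.sum(error * X[:, 1:2])
    theta_loop[0, 0], theta_loop[1, 0] = temp0, temp1
    # vectorized form of the same update
    theta_vec = theta_vec - alpha / m * (X.T @ (X @ theta_vec - y))
    costs.append(cost(X, y, theta_loop))

print(np.allclose(theta_loop, theta_vec))  # True
print(theta_loop.ravel())                  # close to [1, 2]
```

Updating `theta[0]` first and then reusing it for `theta[1]` would mix old and new values; the temporaries avoid that.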

Call the function and plot the fitted regression line over the data:

```python
theta_last = gradient_descent(X, Y, theta, 0.02, 1500)

ax = data.plot(kind="scatter", x="Population", y="Profit", figsize=(12, 8), label="Training data")
x = np.linspace(data.Population.min(), data.Population.max(), 100)
y = theta_last[0, 0] + theta_last[1, 0] * x  # fitted line h(x) = theta0 + theta1 * x
ax.plot(x, y, "r", label="Prediction")
ax.legend()
plt.show()
```

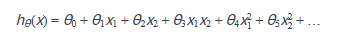
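One way to check whether a fixed number of iterations (1500 here) was enough is to compare gradient descent with the closed-form least-squares solution, the normal equation θ = (XᵀX)⁻¹Xᵀy. This is a different technique from the gradient descent used in this exercise, shown only as a cross-check. A minimal sketch, assuming plain NumPy arrays; the data, `alpha = 0.1` and the helper names `normal_equation` and `batch_gd` are hypothetical, and `np.linalg.lstsq` is used instead of an explicit matrix inverse for numerical stability.

```python
import numpy as np

def normal_equation(X, y):
    # least-squares solution of X @ theta ≈ y, i.e. theta = (X^T X)^(-1) X^T y
    theta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return theta

def batch_gd(X, y, alpha, iters):
    # vectorized batch gradient descent starting from theta = 0
    theta = np.zeros((X.shape[1], 1))
    for _ in range(iters):
        theta = theta - alpha / len(X) * (X.T @ (X @ theta - y))
    return theta

# hypothetical noisy data around y = -4 + 1.2x, for illustration only
rng = np.random.default_rng(0)
x = rng.uniform(0.0, 4.0, size=97)
X = np.column_stack([np.ones_like(x), x])
y = (-4.0 + 1.2 * x + rng.normal(0.0, 1.0, size=97)).reshape(-1, 1)

theta_ne = normal_equation(X, y)
for iters in (50, 1500):
    theta_gd = batch_gd(X, y, alpha=0.1, iters=iters)
    gap = np.abs(theta_gd - theta_ne).max()
    print(iters, theta_gd.ravel(), "max gap to normal equation:", gap)
```

A large gap after the chosen number of iterations suggests raising the iteration count or the learning rate (within the range where the cost still decreases).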

Exercise 2: Multiple Variables

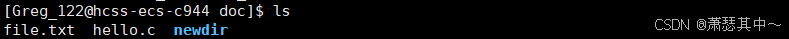

Import the libraries and read the data file:

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

path = "./ex1data2.txt"
data = pd.read_csv(path, header=None, names=["size", "bedrooms", "price"])
data.head()
```

```python
# show two scatter plots: price against each feature
data.plot(kind="scatter", x="size", y="price")
data.plot(kind="scatter", x="bedrooms", y="price")
plt.show()
```

Because the features differ greatly in magnitude, apply feature scaling, then build the X and Y arrays:

```python
# feature scaling: z-score standardization of every column (price included)
data = (data - data.mean()) / data.std()
data.head()
```
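A small sketch of what `(data - data.mean())/data.std()` does, on made-up house data (the numbers below are hypothetical): each column is shifted to mean 0 and divided by its standard deviation. Note that pandas' `DataFrame.std()` uses the sample standard deviation (`ddof=1`) by default, whereas NumPy's `np.std` defaults to `ddof=0`. Keeping `mu` and `sigma` makes it possible to scale new inputs the same way and to undo the scaling.

```python
import numpy as np
import pandas as pd

# hypothetical rows in the same layout as the data file: size, bedrooms, price
data = pd.DataFrame({
    "size": [1000, 1500, 2000, 2500, 3000],
    "bedrooms": [2, 3, 3, 4, 5],
    "price": [200000, 280000, 330000, 410000, 500000],
})

mu = data.mean()      # per-column mean
sigma = data.std()    # per-column sample std (pandas default ddof=1)
scaled = (data - mu) / sigma

print(scaled.round(3))
# every column of `scaled` now has mean ~0 and sample std ~1
```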

```python
data.insert(0, "x0", 1)  # intercept column
data.head()

cols = data.shape[1]
X = data.iloc[:, 0:cols-1].values     # x0, size, bedrooms
Y = data.iloc[:, cols-1:cols].values  # price
theta = np.zeros((3, 1))
```

Cost function:

#代价函数

def cost_func(x,y,theta):

cost_matrix = np.power(x@theta-y,2)

cost_finite = np.sum(cost_matrix)/(2*len(x))

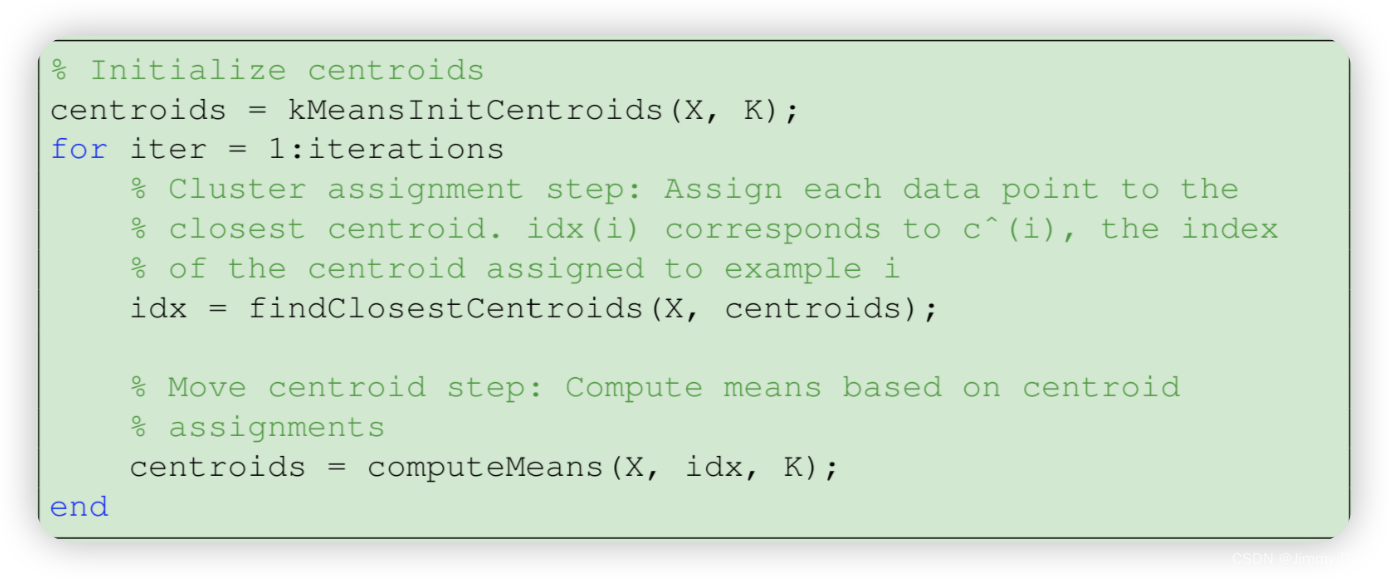

return cost_finite梯度下降函数

```python
# gradient descent
def gradient_descent(X, Y, theta, alpha, times):
    costs = []
    for i in range(times):
        theta = theta - (X.T @ (X @ theta - Y)) * alpha / len(X)  # vectorized gradient-descent step
        cost = cost_func(X, Y, theta)
        costs.append(cost)  # record J(theta) after every iteration
    return theta, costs
```

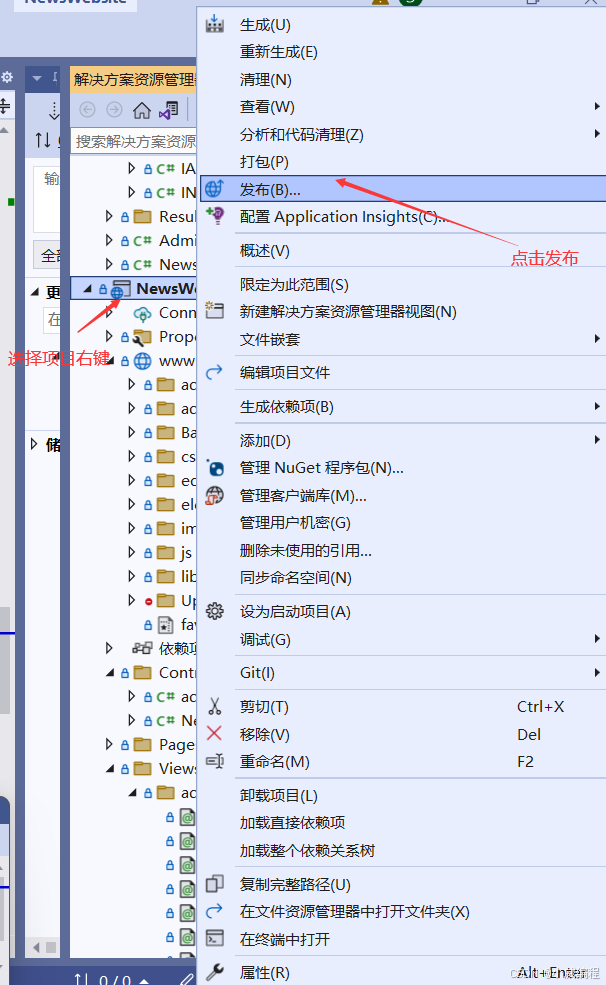
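The single line `theta = theta - (X.T @ (X@theta - Y)) * alpha / len(X)` updates all parameters at once: row j of Xᵀ(Xθ − y) is Σᵢ (h(x⁽ⁱ⁾) − y⁽ⁱ⁾)·xⱼ⁽ⁱ⁾, the same sum written out separately for θ0 and θ1 in Exercise 1. The sketch below, on random data (hypothetical; three columns standing in for x0, size and bedrooms), checks that the vectorized gradient matches an explicit per-parameter loop and a central finite-difference estimate of ∂J/∂θⱼ. The helper names are illustrative.

```python
import numpy as np

def cost(X, y, theta):
    # J(theta) = 1/(2m) * ||X theta - y||^2
    r = X @ theta - y
    return (r.T @ r).item() / (2 * len(X))

def grad_vectorized(X, y, theta):
    # (1/m) * X^T (X theta - y), one entry per parameter
    return X.T @ (X @ theta - y) / len(X)

def grad_loop(X, y, theta):
    # the same gradient written out one parameter at a time
    m, n = X.shape
    error = (X @ theta - y).ravel()
    g = np.zeros((n, 1))
    for j in range(n):
        g[j, 0] = np.sum(error * X[:, j]) / m
    return g

rng = np.random.default_rng(1)
X = np.column_stack([np.ones(50), rng.normal(size=(50, 2))])  # x0 column plus two features
y = rng.normal(size=(50, 1))
theta = rng.normal(size=(3, 1))

# central finite differences as an independent check of dJ/dtheta_j
eps = 1e-6
g_fd = np.zeros((3, 1))
for j in range(3):
    step = np.zeros((3, 1))
    step[j, 0] = eps
    g_fd[j, 0] = (cost(X, y, theta + step) - cost(X, y, theta - step)) / (2 * eps)

print(np.allclose(grad_vectorized(X, y, theta), grad_loop(X, y, theta)))  # True
print(np.allclose(grad_vectorized(X, y, theta), g_fd, atol=1e-6))         # True
```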

Plot the cost against the number of iterations for several learning rates:

```python
alpha_iters = [0.003, 0.03, 0.0001, 0.001, 0.01]  # learning rates to compare
counts = 200  # number of iterations

fig, ax = plt.subplots()
for alpha in alpha_iters:  # run gradient descent once per learning rate
    _, costs = gradient_descent(X, Y, theta, alpha, counts)  # collect the cost history
    ax.plot(np.arange(counts), costs, label=alpha)  # x axis: iteration, y axis: cost
ax.legend()  # needed to display the labels
ax.set(xlabel="counts",  # axis labels
       ylabel="cost",
       title="cost vs counts")  # title
plt.show()  # display the figure
```
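Because the model in this exercise is trained on standardized data (the price column included), predicting the price of a new house means scaling the inputs with the training mean and standard deviation and then undoing the scaling on the output. A minimal sketch, assuming noise-free hypothetical data generated from price = 50000 + 150·size + 10000·bedrooms; the helper `predict_price`, `alpha = 0.1` and the iteration count are illustrative assumptions, and the gradient-descent step is the same vectorized update as above.

```python
import numpy as np
import pandas as pd

def gradient_descent(X, Y, theta, alpha, times):
    # vectorized batch gradient descent (same update as in this exercise)
    for _ in range(times):
        theta = theta - (X.T @ (X @ theta - Y)) * alpha / len(X)
    return theta

# hypothetical training data (noise-free, for illustration only)
rng = np.random.default_rng(2)
size = rng.uniform(800, 4000, size=40)
bedrooms = rng.integers(1, 6, size=40).astype(float)
price = 50000 + 150 * size + 10000 * bedrooms
raw = pd.DataFrame({"size": size, "bedrooms": bedrooms, "price": price})

mu, sigma = raw.mean(), raw.std()  # keep these: new inputs need the same scaling
data = (raw - mu) / sigma
data.insert(0, "x0", 1)
X = data.iloc[:, :-1].values
Y = data.iloc[:, -1:].values
theta = gradient_descent(X, Y, np.zeros((3, 1)), alpha=0.1, times=2000)

def predict_price(size_ft2, n_bedrooms):
    # scale the features with the training statistics, predict, then unscale the price
    x = np.array([1.0,
                  (size_ft2 - mu["size"]) / sigma["size"],
                  (n_bedrooms - mu["bedrooms"]) / sigma["bedrooms"]])
    return (x @ theta).item() * sigma["price"] + mu["price"]

print(predict_price(2000, 3))  # close to 50000 + 150*2000 + 10000*3 = 380000
```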