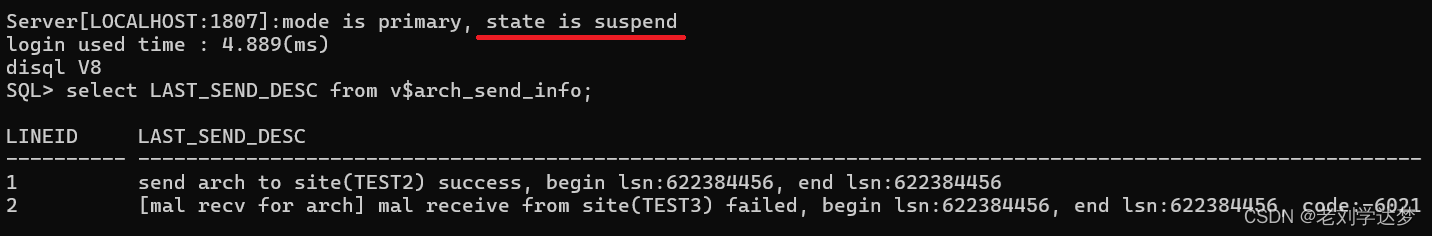

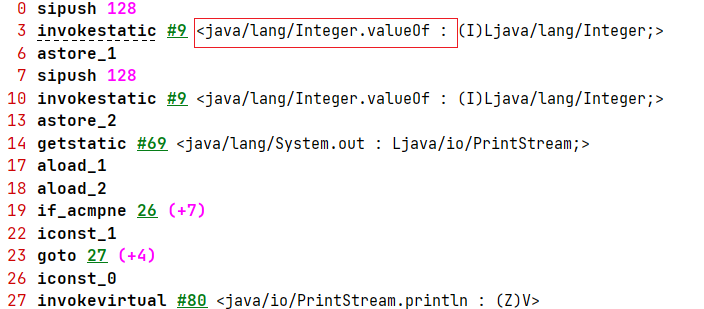

笔者在阅读知乎上一个关于CUDA编程的专栏时,发现作者写的很多文章中都会附带计时的模块用于评估程序的运行效率,然而笔者发现,在运行这篇文章中的代码时时,得到的结果和作者的结果有较大差异,主要体现在:使用第三种thrust库的方法得到的结果比第二种使用共享内存的结果要差。

观察每次的运行时间,发现第三种方法首次运行的时间大约是后续运行时间的7倍,而第二种方法的每次运行时间基本一致,因此造成了结果的不一致,笔者将文中的代码进行修改,展示两个版本,其中version1是前20次的结果(即原文结果),version2是第1次到第21次的运行结果(即后20次的结果,不包括第一次),可以看到,此时第三种方法的结果比第二种方法的结果快,且三种方法的结果差距不大,那么可以说在计算层面,对该程序的优化提升不大,而在访存方面,thrust使用的方法效率要比共享内存低。

#include <stdio.h>

#include "error.cuh"

#include <thrust/scan.h>

#include <thrust/execution_policy.h>

#ifdef USE_DP

typedef double real;

#else

typedef float real;

#endif

const int N = 1000000;

const int M = sizeof(int) * N;

const int NUM_REAPEATS = 20;

const int BLOCK_SIZE = 1024;

const int GRID_SIZE = (N - 1) / BLOCK_SIZE + 1;

__global__ void globalMemScan(real *d_x, real *d_y) {

real *x = d_x + blockDim.x * blockIdx.x;

const int n = blockDim.x * blockIdx.x + threadIdx.x;

real y = 0.0;

if (n < N) {

for (int offset = 1; offset < blockDim.x; offset <<= 1) {

if (threadIdx.x >= offset) y = x[threadIdx.x] + x[threadIdx.x - offset];

__syncthreads();

if (threadIdx.x >= offset) x[threadIdx.x] = y;

}

if (threadIdx.x == blockDim.x - 1) d_y[blockIdx.x] = x[threadIdx.x];

}

}

__global__ void addBaseValue(real *d_x, real *d_y) {

const int n = blockDim.x * blockIdx.x + threadIdx.x;

real y = blockIdx.x > 0 ? d_y[blockIdx.x - 1] : 0.0;

if (n < N) {

d_x[n] += y;

}

}

__global__ void sharedMemScan(real *d_x, real *d_y) {

extern __shared__ real s_x[];

const int n = blockDim.x * blockIdx.x + threadIdx.x;

s_x[threadIdx.x] = n < N ? d_x[n] : 0.0;

__syncthreads();

real y = 0.0;

if (n < N) {

for (int offset = 1; offset < blockDim.x; offset <<= 1) {

if (threadIdx.x >= offset) y = s_x[threadIdx.x] + s_x[threadIdx.x - offset];

__syncthreads();

if (threadIdx.x >= offset) s_x[threadIdx.x] = y;

}

d_x[n] = s_x[threadIdx.x];

if (threadIdx.x == blockDim.x - 1) d_y[blockIdx.x] = s_x[threadIdx.x];

}

}

void scan(real *d_x, real *d_y, real *d_z, const int method) {

switch (method)

{

case 0:

globalMemScan<<<GRID_SIZE, BLOCK_SIZE>>>(d_x, d_y);

globalMemScan<<<1, GRID_SIZE>>>(d_y, d_z);

addBaseValue<<<GRID_SIZE, BLOCK_SIZE>>>(d_x, d_y);

break;

case 1:

sharedMemScan<<<GRID_SIZE, BLOCK_SIZE, sizeof(real) * BLOCK_SIZE>>>(d_x, d_y);

sharedMemScan<<<1, GRID_SIZE, sizeof(real) * GRID_SIZE>>>(d_y, d_z);

addBaseValue<<<GRID_SIZE, BLOCK_SIZE>>>(d_x, d_y);

break;

case 2:

thrust::inclusive_scan(thrust::device, d_x, d_x + N, d_x);

break;

default:

break;

}

}

void timing(const real *h_x, real *d_x, real *d_y, real *d_z, real *h_ret, const int method) {

float tSum = 0.0;

float t2Sum = 0.0;

float tSumVersion2 = 0.0;

float t2SumVersion2 = 0.0;

for (int i=0; i<=NUM_REAPEATS; ++i) {

CHECK(cudaMemcpy(d_x, h_x, M, cudaMemcpyHostToDevice));

cudaEvent_t start, stop;

CHECK(cudaEventCreate(&start));

CHECK(cudaEventCreate(&stop));

CHECK(cudaEventRecord(start));

cudaEventQuery(start);

scan(d_x, d_y, d_z, method);

CHECK(cudaEventRecord(stop));

CHECK(cudaEventSynchronize(stop));

float elapsedTime;

CHECK(cudaEventElapsedTime(&elapsedTime, start, stop));

if(i == 0) {

// do nothing

} else {

tSumVersion2 += elapsedTime;

t2SumVersion2 += elapsedTime * elapsedTime;

}

if(i == NUM_REAPEATS) {

// do nothing

} else {

tSum += elapsedTime;

t2Sum += elapsedTime * elapsedTime;

}

printf("%g\t", elapsedTime);

CHECK(cudaEventDestroy(start));

CHECK(cudaEventDestroy(stop));

}

printf("\n======version1======\n");

printf("\n%g\t", tSum);

float tAVG = tSum / NUM_REAPEATS;

float tERR = sqrt(t2Sum / NUM_REAPEATS - tAVG * tAVG);

printf("Time = %g +- %g ms.\n", tAVG, tERR);

printf("\n======version2======\n");

printf("\n%g\t", tSumVersion2);

float tAVGVersion2 = tSumVersion2 / NUM_REAPEATS;

float tERRVersion2 = sqrt(t2SumVersion2 / NUM_REAPEATS - tAVGVersion2 * tAVGVersion2);

printf("Time = %g +- %g ms.\n", tAVGVersion2, tERRVersion2);

CHECK(cudaMemcpy(h_ret, d_x, M, cudaMemcpyDeviceToHost));

}

int main() {

real *h_x = new real[N];

real *h_y = new real[N];

real *h_ret = new real[N];

for (int i=0; i<N; i++) h_x[i] = 1.23;

real *d_x, *d_y, *d_z;

CHECK(cudaMalloc((void **)&d_x, M));

CHECK(cudaMalloc((void **)&d_y, sizeof(real) * GRID_SIZE));

CHECK(cudaMalloc((void **)&d_z, sizeof(real)));

printf("using global mem:\n");

timing(h_x, d_x, d_y, d_z, h_ret, 0);

for (int i = N - 10; i < N; i++) printf("%f ", h_ret[i]);

printf("\n");

printf("using shared mem:\n");

timing(h_x, d_x, d_y, d_z, h_ret, 1);

for (int i = N - 10; i < N; i++) printf("%f ", h_ret[i]);

printf("\n");

printf("using thrust lib:\n");

timing(h_x, d_x, d_y, d_z, h_ret, 2);

for (int i = N - 10; i < N; i++) printf("%f ", h_ret[i]);

printf("\n");

CHECK(cudaFree(d_x));

CHECK(cudaFree(d_y));

CHECK(cudaFree(d_z));

delete[] h_x;

delete[] h_y;

delete[] h_ret;

}

贴一张运行结果:

局部放大图:

可以看到,三种方法在计算层面基本上都稳定到0.03-0.04ms这个数量级。

![[<span style='color:red;'>机器</span>学习]-人工智能<span style='color:red;'>对</span>程序员<span style='color:red;'>的</span>深远<span style='color:red;'>影响</span>——案例分析](https://i-blog.csdnimg.cn/direct/11b0af27d89742ec9515da8140f68eb2.gif#pic_centerpng)