Here is the GitHub address first; criticism and corrections are welcome: https://github.com/taifyang/yolo-inference

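Before I knew it, I have been working for almost two years. My work so far has mostly been about model deployment, so I wanted to use my spare time to write up some of my inference experience, and this project is the result. It started as something I wrote for fun, but it has now been iterated on for more than half a year. Along the way I learned a lot from excellent open-source code, so I decided to summarize what I have learned.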

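- 1.0 Initial version
- 1.1 Support for models of multiple precisions
- 1.2 CUDA pre- and post-processing for TensorRT
- 1.3 INT8 inference with ONNX Runtime
- 1.4 ONNX Runtime inference code switched to the C++-style API
- 1.5 Code refactored with the abstract factory and singleton patterns
- 1.6 CMake build support
- 1.7 Linux build support
- 2.0 YOLOv8 detector support
- 2.1 CMake conditional compilation options and automated test scripts
- 3.0 Classification and segmentation support
- 3.1 Code restructuring and bug fixes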

The interface header of the very first version was essentially the following:

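```cpp
#pragma once
#include <iostream>
#include <fstream>
#include <opencv2/opencv.hpp>
...

enum Device_Type
{
    CPU,
    GPU,
};

class YOLOv5
{
public:
    virtual ~YOLOv5() = default; // allows safe deletion through a YOLOv5 pointer

    // Fixed pipeline; the three steps are implemented by each backend subclass
    void infer(const std::string& image_path)
    {
        m_image = cv::imread(image_path);
        if (m_image.empty())
        {
            std::cerr << "failed to read image: " << image_path << std::endl;
            return;
        }
        m_result = m_image.clone();
        pre_process();
        process();
        post_process();
        cv::imwrite("result.jpg", m_result);
        cv::imshow("result", m_result);
        cv::waitKey(0);
    }

    cv::Mat m_image;
    cv::Mat m_result;

private:
    virtual void pre_process() = 0;
    virtual void process() = 0;
    virtual void post_process() = 0;
};
```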

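The idea behind this interface is simple: a base class named YOLOv5 declares the abstract steps, pre-processing pre_process(), model inference process() and post-processing post_process(), which derived classes must implement. From this base class, one subclass is derived per inference backend, five in total: YOLOv5_Libtorch, YOLOv5_ONNXRuntime, YOLOv5_OpenCV, YOLOv5_OpenVINO and YOLOv5_TensorRT.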

```cpp
#pragma once
#include "yolov5.h"
#include <torch/script.h>
#include <torch/torch.h>

class YOLOv5_Libtorch : public YOLOv5
{
public:
    YOLOv5_Libtorch(std::string model_path, Device_Type device_type);
    ~YOLOv5_Libtorch();

private:
    // Backend-specific implementations of the pipeline steps
    void pre_process() override;
    void process() override;
    void post_process() override;

    torch::DeviceType m_device;
    torch::jit::script::Module module;
    std::vector<torch::jit::IValue> m_inputs;
    torch::jit::IValue m_outputs;
};
```

To use it, construct a backend object with the model path and a Device_Type, then pass an image path to infer(). A demo:

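```cpp
#include <memory>
#include "yolov5_libtorch.h"

int main(int argc, char* argv[])
{
    // Owning the concrete type releases the object correctly when main returns
    auto yolov5 = std::make_unique<YOLOv5_Libtorch>("yolov5n_cpu.torchscript", CPU);
    yolov5->infer("bus.jpg");
    return 0;
}
```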
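The infer() method above fixes the order of the pipeline in the base class and leaves the individual steps to backend subclasses, which is the template method pattern. Below is a minimal self-contained sketch of that pattern; Pipeline and FakeBackend are hypothetical stand-ins for YOLOv5 and a real backend, and no model is loaded (each step only records that it ran).

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-in for the YOLOv5 base class.
class Pipeline {
public:
    virtual ~Pipeline() = default;  // safe deletion through a base pointer

    // Template method: the skeleton is defined once in the base class.
    void infer(const std::string& input) {
        m_input = input;
        pre_process();
        process();
        post_process();
    }

    const std::vector<std::string>& trace() const { return m_trace; }

protected:
    std::string m_input;
    std::vector<std::string> m_trace;  // which steps ran, in order

private:
    // Private pure virtuals can still be overridden by derived classes.
    virtual void pre_process() = 0;
    virtual void process() = 0;
    virtual void post_process() = 0;
};

// Hypothetical backend that logs its steps instead of running a model.
class FakeBackend : public Pipeline {
private:
    void pre_process() override { m_trace.push_back("pre:" + m_input); }
    void process() override { m_trace.push_back("process"); }
    void post_process() override { m_trace.push_back("post"); }
};

std::unique_ptr<Pipeline> make_fake_backend() {
    return std::make_unique<FakeBackend>();
}
```

Calling `make_fake_backend()->infer("bus.jpg")` runs the three overridden steps in the order the base class dictates; adding a backend means overriding the steps, never touching infer().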

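Later versions added support for different model precisions (Model_Type), and the code was refactored with the abstract factory and singleton patterns so that the public interface is called in a more uniform way: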

```cpp
#pragma once
#include <iostream>
#include <fstream>
#include <map>
#include <memory>
#include <opencv2/opencv.hpp>
...

// Inference backend
enum Algo_Type
{
    Libtorch,
    ONNXRuntime,
    OpenCV,
    OpenVINO,
    TensorRT,
};

enum Device_Type
{
    CPU,
    GPU,
};

// Model precision
enum Model_Type
{
    FP32,
    FP16,
    INT8,
};

class YOLOv5
{
public:
    // Needed because instances are owned and destroyed via std::unique_ptr<YOLOv5>
    virtual ~YOLOv5() = default;

    virtual void init(const std::string model_path, const Device_Type device_type, Model_Type model_type) = 0;

    void infer(const std::string image_path);

    virtual void release() {};

protected:
    virtual void pre_process() = 0;
    virtual void process() = 0;
    virtual void post_process();

    cv::Mat m_image;
    cv::Mat m_result;
    float* m_outputs_host = nullptr;
};

class AlgoFactory
{
public:
    typedef std::unique_ptr<YOLOv5>(*CreateFunction)();

    // Singleton accessor
    static AlgoFactory& instance();

    void register_algo(const Algo_Type& algo_type, CreateFunction create_function);

    std::unique_ptr<YOLOv5> create(const Algo_Type& algo_type);

private:
    AlgoFactory();

    // Global registry: backend -> creator function
    std::map<Algo_Type, CreateFunction> m_algo_registry;
};
```

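In the AlgoFactory class, m_algo_registry is the single global registry of algorithms, register_algo() registers an algorithm, and create() returns an algorithm instance created by the factory. The implementation is as follows: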

```cpp
// Meyers singleton: constructed on first call, thread-safe since C++11
AlgoFactory& AlgoFactory::instance()
{
    static AlgoFactory algo_factory;
    return algo_factory;
}

void AlgoFactory::register_algo(const Algo_Type& algo_type, CreateFunction create_function)
{
    m_algo_registry[algo_type] = create_function;
}

std::unique_ptr<YOLOv5> AlgoFactory::create(const Algo_Type& algo_type)
{
    // find() avoids inserting a null entry for an unknown key, and unlike
    // assert() this check still runs in release builds
    auto it = m_algo_registry.find(algo_type);
    if (it == m_algo_registry.end())
    {
        std::cerr << "algo type not exists!" << std::endl;
        return nullptr;
    }
    return it->second();
}

// Register one creator per backend
AlgoFactory::AlgoFactory()
{
    register_algo(Algo_Type::Libtorch, []() -> std::unique_ptr<YOLOv5> { return std::make_unique<YOLOv5_Libtorch>(); });
    register_algo(Algo_Type::ONNXRuntime, []() -> std::unique_ptr<YOLOv5> { return std::make_unique<YOLOv5_ONNXRuntime>(); });
    register_algo(Algo_Type::OpenCV, []() -> std::unique_ptr<YOLOv5> { return std::make_unique<YOLOv5_OpenCV>(); });
    register_algo(Algo_Type::OpenVINO, []() -> std::unique_ptr<YOLOv5> { return std::make_unique<YOLOv5_OpenVINO>(); });
    register_algo(Algo_Type::TensorRT, []() -> std::unique_ptr<YOLOv5> { return std::make_unique<YOLOv5_TensorRT>(); });
}
```
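Structurally, the registry plus function-local static amounts to a registry-based factory combined with a Meyers singleton. A minimal self-contained sketch of the same idea follows; the names Factory, Model, LibtorchModel and Backend are hypothetical, the creators are stored as std::function, and an unknown key is reported as nullptr instead of through assert.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>

// Hypothetical keys and products for illustration only.
enum class Backend { Libtorch, ONNXRuntime };

struct Model {
    virtual ~Model() = default;
    virtual std::string name() const = 0;
};

struct LibtorchModel : Model {
    std::string name() const override { return "libtorch"; }
};

class Factory {
public:
    using Creator = std::function<std::unique_ptr<Model>()>;

    // Meyers singleton: created on first use; thread-safe initialization since C++11.
    static Factory& instance() {
        static Factory factory;
        return factory;
    }

    void register_creator(Backend backend, Creator creator) {
        m_registry[backend] = std::move(creator);
    }

    // find() instead of operator[]: an unknown key is neither inserted nor called.
    std::unique_ptr<Model> create(Backend backend) const {
        auto it = m_registry.find(backend);
        if (it == m_registry.end()) return nullptr;
        return it->second();
    }

private:
    Factory() {
        // Only Libtorch is registered here; ONNXRuntime is deliberately left out.
        register_creator(Backend::Libtorch, [] { return std::make_unique<LibtorchModel>(); });
    }
    Factory(const Factory&) = delete;
    Factory& operator=(const Factory&) = delete;

    std::map<Backend, Creator> m_registry;
};
```

Callers can then test `Factory::instance().create(...)` for nullptr instead of relying on a debug-only assert.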

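With this design, the caller first creates an algorithm instance through the factory and then calls init(), infer() and release() in order. The demo now looks like this: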

```cpp
#include "yolov5.h"

int main(int argc, char* argv[])
{
    std::unique_ptr<YOLOv5> yolov5 = AlgoFactory::instance().create(Algo_Type::Libtorch);
    yolov5->init("yolov5n_cpu_fp32.torchscript", CPU, FP32);
    yolov5->infer("test.mp4");
    yolov5->release();
    return 0;
}
```

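Version 2.x mainly added support for the YOLOv8 detector. The main change to the interface header is a new Algo_Type enum that identifies the algorithm (the backend enum therefore needs a different name; in the 3.x header below it is Backend_Type):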

```cpp
enum Algo_Type
{
    YOLOv5,
    YOLOv8,
};
```
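YOLOv5 and YOLOv8 differ in their raw detection output, which is presumably why the post-processing needs to know the algorithm. For the official Ultralytics exports at 640×640 with 80 classes, YOLOv5 typically outputs [1, 25200, 85] (box, objectness and 80 class scores per row, 3 anchors per grid cell), while YOLOv8 outputs [1, 84, 8400] (box and 80 class scores, no objectness, stored channel-first). The sketch below, with hypothetical function names, computes the candidate count and the per-class confidence for each; it assumes strides 8/16/32 and is not the repository's own decoding code.

```cpp
#include <cassert>
#include <initializer_list>

enum class Algo { YOLOv5, YOLOv8 };

// Candidate boxes for a square input: one grid per stride (8, 16, 32),
// 3 anchors per cell for YOLOv5, one prediction per cell for anchor-free YOLOv8.
int num_candidates(Algo algo, int input_size) {
    assert(input_size > 0 && input_size % 32 == 0);
    int cells = 0;
    for (int stride : {8, 16, 32}) {
        const int side = input_size / stride;
        cells += side * side;
    }
    return algo == Algo::YOLOv5 ? 3 * cells : cells;
}

// YOLOv5 scales the class score by objectness; YOLOv8 has no objectness output.
float class_confidence(Algo algo, float objectness, float class_score) {
    return algo == Algo::YOLOv5 ? objectness * class_score : class_score;
}
```

For a 640 input this gives 3 × (80² + 40² + 20²) = 25200 candidates for YOLOv5 and 8400 for YOLOv8, matching the shapes above.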

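Version 3.x mainly added support for classification and segmentation. The header gains a Task_Type enum, and because the registry is now indexed along two dimensions (backend and task), it is stored as a two-dimensional vector instead of a map: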

```cpp
#pragma once
#include <cstdlib>
#include <iostream>
#include <fstream>
#include <memory>
#include <vector>
#include <opencv2/opencv.hpp>

// Inference backend
enum Backend_Type
{
    Libtorch,
    ONNXRuntime,
    OpenCV,
    OpenVINO,
    TensorRT,
};

enum Task_Type
{
    Classification,
    Detection,
    Segmentation,
};

// Model algorithm
enum Algo_Type
{
    YOLOv5,
    YOLOv8,
};

enum Device_Type
{
    CPU,
    GPU,
};

// Model precision
enum Model_Type
{
    FP32,
    FP16,
    INT8,
};

class YOLO
{
public:
    // Without a virtual destructor, destroying a derived object through a YOLO
    // pointer (as std::unique_ptr<YOLO> does) is undefined behavior; with
    // virtual inheritance it showed up as a memory leak
    virtual ~YOLO() {};

    virtual void init(const Algo_Type algo_type, const Device_Type device_type, const Model_Type model_type, const std::string model_path) = 0;

    void infer(const std::string file_path, char* argv[], bool save_result = true, bool show_result = true);

    virtual void release() {};

protected:
    virtual void pre_process() = 0;
    virtual void process() = 0;
    virtual void post_process() = 0;

    cv::Mat m_image;
    cv::Mat m_result;

    int m_input_width = 640;
    int m_input_height = 640;
    int m_input_numel = 1 * 3 * m_input_width * m_input_height; // batch x channels x width x height
};

class CreateFactory
{
public:
    typedef std::unique_ptr<YOLO>(*CreateFunction)();

    static CreateFactory& instance();

    void register_class(const Backend_Type& backend_type, const Task_Type& task_type, CreateFunction create_function);

    std::unique_ptr<YOLO> create(const Backend_Type& backend_type, const Task_Type& task_type);

private:
    CreateFactory();

    // Indexed as [backend_type][task_type]
    std::vector<std::vector<CreateFunction>> m_create_registry;
};
```

```cpp
CreateFactory& CreateFactory::instance()
{
    static CreateFactory create_factory;
    return create_factory;
}

void CreateFactory::register_class(const Backend_Type& backend_type, const Task_Type& task_type, CreateFunction create_function)
{
    m_create_registry[backend_type][task_type] = create_function;
}

std::unique_ptr<YOLO> CreateFactory::create(const Backend_Type& backend_type, const Task_Type& task_type)
{
    if (backend_type >= m_create_registry.size())
    {
        std::cout << "unsupported backend type!" << std::endl;
        std::exit(-1);
    }

    // The task index must be checked against the row of the requested backend
    if (task_type >= m_create_registry[backend_type].size())
    {
        std::cout << "unsupported task type!" << std::endl;
        std::exit(-1);
    }

    // Slots of backends disabled by the #ifdef switches stay nullptr
    if (m_create_registry[backend_type][task_type] == nullptr)
    {
        std::cout << "backend/task combination not compiled in!" << std::endl;
        std::exit(-1);
    }

    return m_create_registry[backend_type][task_type]();
}
```

```cpp
CreateFactory::CreateFactory()
{
    // 5 backends x 3 tasks; unregistered slots are value-initialized to nullptr
    m_create_registry.resize(5, std::vector<CreateFunction>(3));

#ifdef _YOLO_LIBTORCH
    register_class(Backend_Type::Libtorch, Task_Type::Classification, []() -> std::unique_ptr<YOLO> { return std::make_unique<YOLO_Libtorch_Classification>(); });
    register_class(Backend_Type::Libtorch, Task_Type::Detection, []() -> std::unique_ptr<YOLO> { return std::make_unique<YOLO_Libtorch_Detection>(); });
    register_class(Backend_Type::Libtorch, Task_Type::Segmentation, []() -> std::unique_ptr<YOLO> { return std::make_unique<YOLO_Libtorch_Segmentation>(); });
#endif // _YOLO_LIBTORCH

#ifdef _YOLO_ONNXRUNTIME
    register_class(Backend_Type::ONNXRuntime, Task_Type::Classification, []() -> std::unique_ptr<YOLO> { return std::make_unique<YOLO_ONNXRuntime_Classification>(); });
    register_class(Backend_Type::ONNXRuntime, Task_Type::Detection, []() -> std::unique_ptr<YOLO> { return std::make_unique<YOLO_ONNXRuntime_Detection>(); });
    register_class(Backend_Type::ONNXRuntime, Task_Type::Segmentation, []() -> std::unique_ptr<YOLO> { return std::make_unique<YOLO_ONNXRuntime_Segmentation>(); });
#endif // _YOLO_ONNXRUNTIME

#ifdef _YOLO_OPENCV
    register_class(Backend_Type::OpenCV, Task_Type::Classification, []() -> std::unique_ptr<YOLO> { return std::make_unique<YOLO_OpenCV_Classification>(); });
    register_class(Backend_Type::OpenCV, Task_Type::Detection, []() -> std::unique_ptr<YOLO> { return std::make_unique<YOLO_OpenCV_Detection>(); });
    register_class(Backend_Type::OpenCV, Task_Type::Segmentation, []() -> std::unique_ptr<YOLO> { return std::make_unique<YOLO_OpenCV_Segmentation>(); });
#endif // _YOLO_OPENCV

#ifdef _YOLO_OPENVINO
    register_class(Backend_Type::OpenVINO, Task_Type::Classification, []() -> std::unique_ptr<YOLO> { return std::make_unique<YOLO_OpenVINO_Classification>(); });
    register_class(Backend_Type::OpenVINO, Task_Type::Detection, []() -> std::unique_ptr<YOLO> { return std::make_unique<YOLO_OpenVINO_Detection>(); });
    register_class(Backend_Type::OpenVINO, Task_Type::Segmentation, []() -> std::unique_ptr<YOLO> { return std::make_unique<YOLO_OpenVINO_Segmentation>(); });
#endif // _YOLO_OPENVINO

#ifdef _YOLO_TENSORRT
    register_class(Backend_Type::TensorRT, Task_Type::Classification, []() -> std::unique_ptr<YOLO> { return std::make_unique<YOLO_TensorRT_Classification>(); });
    register_class(Backend_Type::TensorRT, Task_Type::Detection, []() -> std::unique_ptr<YOLO> { return std::make_unique<YOLO_TensorRT_Detection>(); });
    register_class(Backend_Type::TensorRT, Task_Type::Segmentation, []() -> std::unique_ptr<YOLO> { return std::make_unique<YOLO_TensorRT_Segmentation>(); });
#endif // _YOLO_TENSORRT
}
```
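Because every backend block is wrapped in an #ifdef, some cells of the two-dimensional table may legitimately stay empty, so the lookup has to check both the indices and the slot itself. A minimal sketch of such a table using a fixed-size std::array instead of nested vectors; the enums Backend_Kind and Task_Kind, with their trailing *Count entries, and the classes Task, OpenCVDetection and Registry are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <memory>
#include <string>

// Hypothetical enums; the trailing *Count entries size the table.
enum Backend_Kind { kLibtorch, kOpenCV, kBackendCount };
enum Task_Kind { kClassification, kDetection, kTaskCount };

struct Task {
    virtual ~Task() = default;
    virtual std::string describe() const = 0;
};

struct OpenCVDetection : Task {
    std::string describe() const override { return "opencv/detection"; }
};

class Registry {
public:
    using CreateFunction = std::unique_ptr<Task> (*)();

    void register_class(Backend_Kind backend, Task_Kind task, CreateFunction creator) {
        assert(in_range(backend, kBackendCount) && in_range(task, kTaskCount));
        m_table[backend][task] = creator;
    }

    // nullptr for out-of-range indices and for empty slots, e.g. a backend
    // whose registration was compiled out by an #ifdef switch.
    std::unique_ptr<Task> create(Backend_Kind backend, Task_Kind task) const {
        if (!in_range(backend, kBackendCount) || !in_range(task, kTaskCount)) return nullptr;
        CreateFunction create_fn = m_table[backend][task];
        return create_fn ? create_fn() : nullptr;
    }

private:
    static bool in_range(int value, int count) { return value >= 0 && value < count; }

    // Value-initialization ({}) sets every slot to nullptr.
    std::array<std::array<CreateFunction, kTaskCount>, kBackendCount> m_table{};
};
```

Sizing the table from the enums keeps it in sync when a backend or task is added, instead of hard-coding 5 and 3.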

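From the base class YOLO, two task classes, YOLO_Classification and YOLO_Detection, are derived by task type, and YOLO_Segmentation in turn derives from YOLO_Detection, giving three task classes in total: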

```cpp
#pragma once
#include "yolo.h"
#include "utils.h"

class YOLO_Classification : virtual public YOLO
{
protected:
    void draw_result(std::string label);

    int class_num = 1000;
};
```
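The classification post-processing itself is not shown; the class_num = 1000 default suggests ImageNet-style classifiers. A minimal top-1 sketch, assuming the model outputs raw logits (if the exported model already applies softmax, only the argmax step is needed); TopOne and classify are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct TopOne {
    int class_id;
    float probability;
};

// Numerically stable softmax followed by argmax over raw logits.
TopOne classify(const std::vector<float>& logits) {
    assert(!logits.empty());
    const float max_logit = *std::max_element(logits.begin(), logits.end());
    std::vector<float> probs(logits.size());
    float sum = 0.f;
    for (std::size_t i = 0; i < logits.size(); ++i) {
        probs[i] = std::exp(logits[i] - max_logit);  // subtracting the max avoids overflow
        sum += probs[i];
    }
    auto best = std::max_element(probs.begin(), probs.end());
    return {static_cast<int>(best - probs.begin()), *best / sum};
}
```

The resulting class id would then be mapped to a label string before calling something like draw_result().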

```cpp
#pragma once
#include "yolo.h"
#include "utils.h"

class YOLO_Detection : virtual public YOLO
{
protected:
    void LetterBox(cv::Mat& input_image, cv::Mat& output_image, cv::Vec4d& params, cv::Size shape = cv::Size(640, 640), cv::Scalar color = cv::Scalar(114, 114, 114));

    void nms(std::vector<cv::Rect> & boxes, std::vector<float> & scores, float score_threshold, float nms_threshold, std::vector<int> & indices);

    void scale_box(cv::Rect& box, cv::Size size);

    void draw_result(std::string label, cv::Rect box);

    int class_num = 80;
    float score_threshold = 0.2;
    float nms_threshold = 0.5;
    float confidence_threshold = 0.2;

    cv::Vec4d m_params;
    int m_output_numprob;
    int m_output_numbox;
    int m_output_numdet;
};
```
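The bodies of LetterBox(), scale_box() and nms() are not shown. A minimal OpenCV-free sketch of the usual logic: a letterbox scales the image uniformly to fit the network input and pads the rest, boxes are mapped back by removing the padding and dividing by the scale, and greedy NMS keeps the best box and drops overlapping ones. The LetterboxParams and Box structs are hypothetical and do not claim to match the layout of the repository's cv::Vec4d params; OpenCV's own cv::dnn::NMSBoxes takes a similar argument list to the nms() declared above.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <numeric>
#include <vector>

// Hypothetical letterbox parameters: uniform scale plus symmetric padding.
struct LetterboxParams {
    float ratio;  // scale applied to the source image
    float pad_x;  // left padding, in network-input pixels
    float pad_y;  // top padding, in network-input pixels
};

LetterboxParams letterbox_params(int src_w, int src_h, int dst_w, int dst_h) {
    const float r = std::min(static_cast<float>(dst_w) / src_w, static_cast<float>(dst_h) / src_h);
    const int new_w = static_cast<int>(std::round(src_w * r));
    const int new_h = static_cast<int>(std::round(src_h * r));
    return {r, (dst_w - new_w) / 2.0f, (dst_h - new_h) / 2.0f};
}

struct Box { float x, y, w, h; };  // top-left corner and size

// Undo the letterbox: remove the padding, then divide by the scale.
Box to_source(const Box& b, const LetterboxParams& p) {
    return {(b.x - p.pad_x) / p.ratio, (b.y - p.pad_y) / p.ratio, b.w / p.ratio, b.h / p.ratio};
}

float iou(const Box& a, const Box& b) {
    const float x1 = std::max(a.x, b.x), y1 = std::max(a.y, b.y);
    const float x2 = std::min(a.x + a.w, b.x + b.w), y2 = std::min(a.y + a.h, b.y + b.h);
    const float inter = std::max(0.f, x2 - x1) * std::max(0.f, y2 - y1);
    const float uni = a.w * a.h + b.w * b.h - inter;
    return uni > 0.f ? inter / uni : 0.f;
}

// Greedy NMS: visit boxes by descending score, keep one unless it overlaps an
// already-kept box by more than nms_threshold. Scores below score_threshold are dropped.
std::vector<int> nms(const std::vector<Box>& boxes, const std::vector<float>& scores,
                     float score_threshold, float nms_threshold) {
    assert(boxes.size() == scores.size());
    std::vector<int> order(boxes.size());
    std::iota(order.begin(), order.end(), 0);
    std::sort(order.begin(), order.end(), [&](int a, int b) { return scores[a] > scores[b]; });
    std::vector<int> keep;
    for (int i : order) {
        if (scores[i] < score_threshold) break;  // sorted, so all remaining scores are lower
        bool suppressed = false;
        for (int k : keep) {
            if (iou(boxes[i], boxes[k]) > nms_threshold) { suppressed = true; break; }
        }
        if (!suppressed) keep.push_back(i);
    }
    return keep;
}
```

For a 1280×720 frame and a 640×640 input, the scale is 0.5 and the image is padded by 140 pixels at the top and bottom.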

```cpp
#pragma once
#include "yolo_detection.h"

// Per-detection output of the network
struct OutputSeg
{
    int id;             // class id
    float confidence;   // confidence score
    cv::Rect box;       // bounding box
    cv::Mat boxMask;    // mask restricted to the box, which saves memory and speeds things up
};

// Mask-related parameters
struct MaskParams
{
    int segChannels = 32;
    int segWidth = 160;
    int segHeight = 160;
    int netWidth = 640;
    int netHeight = 640;
    float maskThreshold = 0.5;
    cv::Size srcImgShape;
    cv::Vec4d params;
};

class YOLO_Segmentation : public YOLO_Detection
{
protected:
    void GetMask(const cv::Mat& maskProposals, const cv::Mat& mask_protos, OutputSeg& output, const MaskParams& maskParams);

    void draw_result(std::vector<OutputSeg> result);

    MaskParams m_mask_params;
    int m_output_numseg;
};
```
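GetMask() is not shown. In YOLOv5/YOLOv8 segmentation models, each detection carries segChannels (32) mask coefficients, and the network also outputs segChannels prototype masks of segHeight × segWidth (160 × 160 for a 640 × 640 input). The instance mask is the sigmoid of the coefficient-weighted sum of the prototypes, thresholded at maskThreshold. A minimal sketch of that core step follows; decode_mask is a hypothetical name, and it omits the cropping to the box and the resizing back to the source image that a full implementation also needs.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// coeffs: seg_channels mask coefficients for one detection.
// protos: seg_channels planes of seg_h * seg_w values, stored plane after plane.
// Returns a binary mask of seg_h * seg_w pixels (1 = foreground).
std::vector<unsigned char> decode_mask(const std::vector<float>& coeffs,
                                       const std::vector<float>& protos,
                                       int seg_h, int seg_w, float mask_threshold) {
    const std::size_t channels = coeffs.size();
    const std::size_t pixels = static_cast<std::size_t>(seg_h) * seg_w;
    assert(protos.size() == channels * pixels);
    std::vector<unsigned char> mask(pixels);
    for (std::size_t p = 0; p < pixels; ++p) {
        float logit = 0.f;
        for (std::size_t c = 0; c < channels; ++c) logit += coeffs[c] * protos[c * pixels + p];
        const float prob = 1.f / (1.f + std::exp(-logit));  // sigmoid
        mask[p] = prob > mask_threshold ? 1 : 0;
    }
    return mask;
}
```

With OpenCV the inner loop is a single matrix product (coefficients as a 1 × 32 row times prototypes reshaped to 32 × 25600), which is presumably why GetMask() takes cv::Mat arguments.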

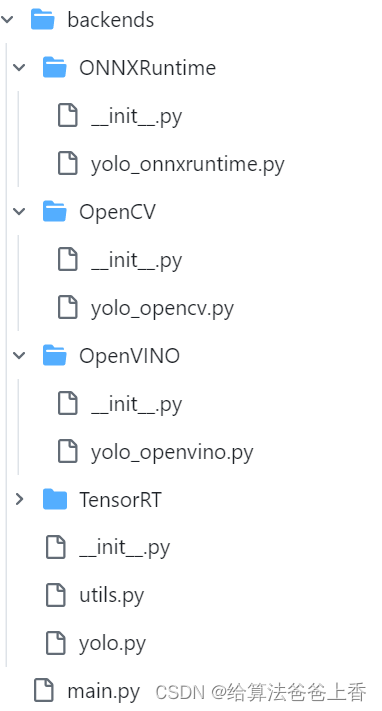

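Along the other axis, as in earlier versions, the base class YOLO has one subclass per inference backend: YOLO_Libtorch, YOLO_ONNXRuntime, YOLO_OpenCV, YOLO_OpenVINO and YOLO_TensorRT. Each final concrete class derives from both a task parent and a backend parent, for example: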

```cpp
// Backend parent: holds the Libtorch-specific state
class YOLO_Libtorch : virtual public YOLO
{
public:
    void init(const Algo_Type algo_type, const Device_Type device_type, const Model_Type model_type, const std::string model_path) override;

protected:
    Algo_Type m_algo;
    torch::DeviceType m_device;
    Model_Type m_model;
    torch::jit::script::Module module;
    std::vector<torch::jit::IValue> m_input;
    torch::jit::IValue m_output;
};

// Final class: backend parent + task parent, both inheriting YOLO virtually
class YOLO_Libtorch_Classification : public YOLO_Libtorch, public YOLO_Classification
{
public:
    void init(const Algo_Type algo_type, const Device_Type device_type, const Model_Type model_type, const std::string model_path) override;

private:
    void pre_process() override;
    void process() override;
    void post_process() override;

    float* m_output_host;
};
```
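Because a class such as YOLO_Libtorch_Classification reaches YOLO through both YOLO_Libtorch and YOLO_Classification, both paths use virtual inheritance so that only one YOLO subobject (and thus one m_image, m_result, and so on) exists. YOLO also needs a virtual destructor, since objects are destroyed through std::unique_ptr<YOLO>. Below is a self-contained miniature with hypothetical class names (Base, Backend, Task, BackendTask) that checks both properties.

```cpp
#include <cassert>
#include <memory>

// Counters to observe construction and destruction.
static int g_base_ctor = 0;
static int g_final_dtor = 0;

struct Base {
    Base() { ++g_base_ctor; }
    virtual ~Base() = default;  // required for correct deletion through Base*
    virtual int run() = 0;
    int shared_state = 0;       // exists once in the final object thanks to virtual inheritance
};

// Backend branch, like YOLO_Libtorch.
struct Backend : virtual Base {
    void load() { shared_state += 1; }
};

// Task branch, like YOLO_Classification.
struct Task : virtual Base {
    void post() { shared_state += 10; }
};

// Final class, like YOLO_Libtorch_Classification.
struct BackendTask : Backend, Task {
    ~BackendTask() override { ++g_final_dtor; }
    int run() override { load(); post(); return shared_state; }
};
```

Without `virtual` on the two bases, the final class would contain two Base subobjects and an unqualified reference to shared_state inside BackendTask would not even compile because it is ambiguous.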