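Weighted CE loss and BCE loss differ in two ways:

1. CE takes labels of type long (class indices), whereas BCE takes labels of type float.

2. With reduction='mean', both average the per-pixel losses, but BCE divides by the number of pixels, whereas weighted CE divides by the sum of the weights assigned to the pixels (the weight of each pixel's target class).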
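The two differences above can be checked numerically. The following is a minimal sketch, assuming a toy batch of 6 "pixels" and 3 classes; the tensors and weight values are made up for illustration.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

# --- Weighted CE: long class-index labels ---
logits = torch.randn(6, 3)                   # 6 "pixels", 3 classes
target = torch.tensor([0, 1, 2, 2, 1, 0])    # dtype is torch.long
weight = torch.tensor([1.0, 2.0, 3.0])       # illustrative class weights

# With reduction="none" each element is already scaled by its class weight
per_elem = F.cross_entropy(logits, target, weight=weight, reduction="none")

# reduction="mean" divides by the summed weights of the targets, not by N
manual_mean = per_elem.sum() / weight[target].sum()
builtin_mean = F.cross_entropy(logits, target, weight=weight, reduction="mean")

# --- Weighted BCE: float labels ---
bce_logits = torch.randn(6)
bce_target = torch.tensor([0.0, 1.0, 1.0, 0.0, 1.0, 0.0])
bce_weight = torch.tensor([1.0, 2.0, 2.0, 1.0, 2.0, 1.0])

bce_none = F.binary_cross_entropy_with_logits(
    bce_logits, bce_target, weight=bce_weight, reduction="none")
# reduction="mean" divides by the number of elements
bce_mean = F.binary_cross_entropy_with_logits(
    bce_logits, bce_target, weight=bce_weight, reduction="mean")

print("CE  manual vs built-in:", manual_mean.item(), builtin_mean.item())
print("BCE manual vs built-in:", bce_none.mean().item(), bce_mean.item())
```

In both cases the manual and built-in values should agree, which confirms that only the denominator differs between the two losses.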

Parameters: torch.nn.CrossEntropyLoss(weight=None, size_average=None, ignore_index=-100, reduce=None, reduction='mean', label_smoothing=0.0)

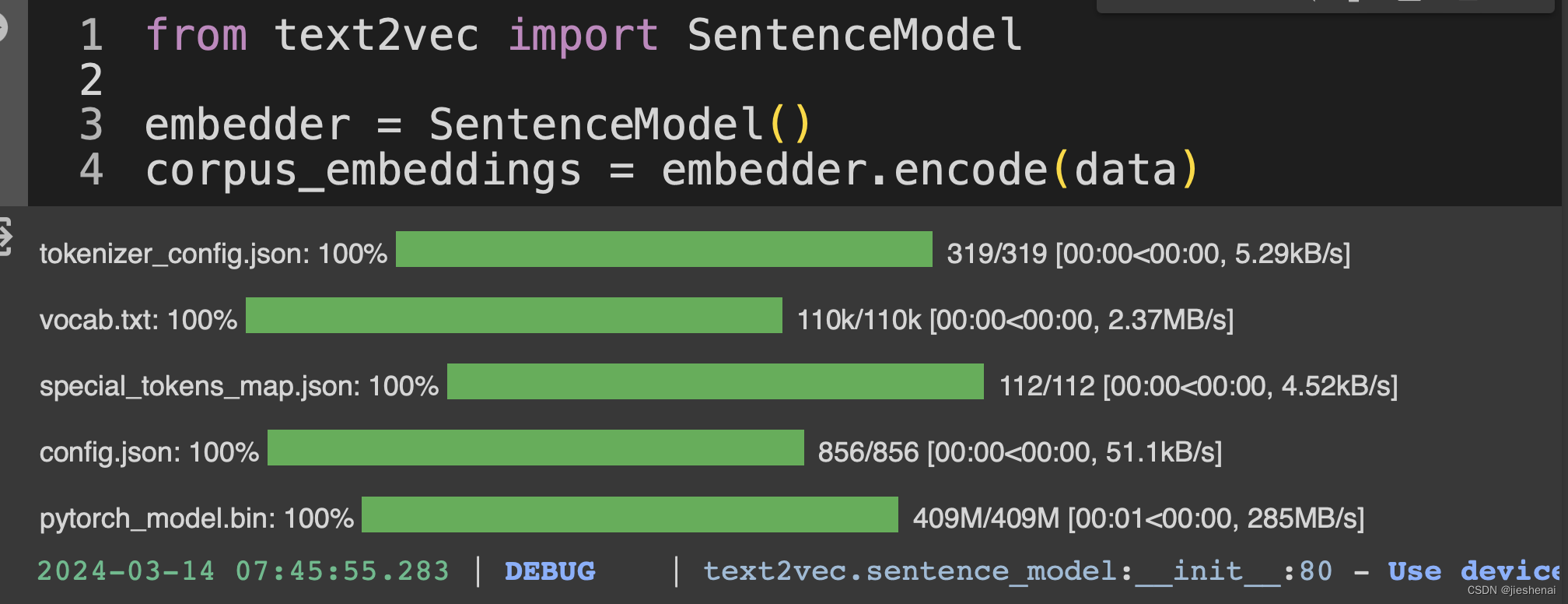
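As a small illustration of one of these parameters, the sketch below shows the effect of ignore_index: targets equal to that value contribute nothing to the loss and are left out of the mean. The value 255 and the tensor shapes are assumptions chosen for the example.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

logits = torch.randn(5, 4)                     # 5 samples, 4 classes
target = torch.tensor([0, 3, 255, 1, 255])     # 255 marks samples to skip

# Loss with the ignored samples handled by the criterion
loss_ignored = nn.CrossEntropyLoss(ignore_index=255)(logits, target)

# Same loss computed after filtering the ignored samples by hand
keep = target != 255
loss_filtered = nn.CrossEntropyLoss()(logits[keep], target[keep])

print(loss_ignored.item(), loss_filtered.item())
```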

Code implementation of the weighted CE loss

# In short, CrossEntropyLoss() = softmax + log + NLLLoss() = log_softmax + NLLLoss(). An equivalent usage is shown below:

import torch
import torch.nn as nn
import torch.nn.functional as F
import random
import numpy as np

class CrossEntropyLoss2d(nn.Module):
    def __init__(self, weight=None):
        super(CrossEntropyLoss2d, self).__init__()
        self.nll_loss = nn.CrossEntropyLoss(weight, reduction='mean')

    def forward(self, preds, targets):
        return self.nll_loss(preds, targets)
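The identity CrossEntropyLoss() = log_softmax + NLLLoss() can be verified directly. This is a minimal sketch on random segmentation-shaped tensors; the shapes and weight values are illustrative assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

logits = torch.randn(2, 5, 4, 4)                 # (batch, classes, H, W)
target = torch.randint(0, 5, (2, 4, 4))          # long class indices
weight = torch.tensor([1.0, 2.0, 3.0, 4.0, 5.0])

# Route 1: CrossEntropyLoss applied to raw logits
ce = nn.CrossEntropyLoss(weight=weight)(logits, target)

# Route 2: log_softmax over the class dimension, then NLLLoss
nll = nn.NLLLoss(weight=weight)(F.log_softmax(logits, dim=1), target)

# Route 3: softmax, then log, then NLLLoss (less stable numerically)
nll_slow = nn.NLLLoss(weight=weight)(torch.log(F.softmax(logits, dim=1)), target)

print(ce.item(), nll.item(), nll_slow.item())
```

All three routes should print the same value up to floating-point error; log_softmax is generally preferred over softmax followed by log because it avoids taking the log of very small probabilities.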

Computing class weights for semantic segmentation

class CE_w_loss(nn.Module):
    def __init__(self, ignore_index=255):
        super(CE_w_loss, self).__init__()
        self.ignore_index = ignore_index

    def forward(self, outputs, targets):
        class_num = outputs.shape[1]
        # Drop ignored pixels first; otherwise a label such as 255 would make
        # bincount return more than class_num entries
        valid = targets[targets != self.ignore_index]
        # Share of the valid pixels in the whole batch that belongs to each class
        class_pixel_counts = torch.bincount(valid.flatten(), minlength=class_num)
        class_pixel_proportions = class_pixel_counts.float() / max(valid.numel(), 1)
        # Derive weights from the class shares; the log transform keeps them balanced.
        # Cast to the dtype of the logits so the weight and input dtypes match.
        class_weights = (1.0 / torch.log(1.02 + class_pixel_proportions)).to(outputs.dtype)
        # Cross-entropy loss using the dynamically computed class weights
        criterion = nn.CrossEntropyLoss(ignore_index=self.ignore_index, weight=class_weights)
        # Compute the loss
        loss = criterion(outputs, targets)
        print(loss.item())  # print the loss value
        return loss

np.random.seed(666)
pred = np.ones((2, 5, 256, 256))
seg = np.ones((2, 5, 256, 256))  # grayscale
label = np.ones((2, 256, 256))  # grayscale

pred = torch.from_numpy(pred)  # float64, because np.ones defaults to float64
seg = torch.from_numpy(seg).int()  # grayscale
label = torch.from_numpy(label).long()

ce = CE_w_loss()
loss = ce(pred, label)
print("loss:", loss.item())
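To see what the log transform in the weight formula does, the sketch below evaluates 1 / log(1.02 + p) for a few class proportions p and compares it with a plain inverse-frequency weight. The proportion values and the 1e-6 epsilon are assumptions picked for illustration.

```python
import torch

# Illustrative class proportions, from an absent class (0.0) to a class
# that covers every pixel (1.0)
proportions = torch.tensor([0.0, 0.01, 0.1, 0.5, 1.0])

# Log-scaled weights as used in the loss above
log_weights = 1.0 / torch.log(1.02 + proportions)

# Plain inverse-frequency weights for comparison (epsilon avoids division by zero)
inverse_weights = 1.0 / (proportions + 1e-6)

for p, lw, iw in zip(proportions.tolist(), log_weights.tolist(), inverse_weights.tolist()):
    print(f"p={p:<5} log-weight={lw:8.3f} inverse-weight={iw:12.1f}")
```

The log-scaled weights stay in a bounded range, from roughly 1.42 for a class that fills the whole batch to roughly 50.5 for a class that is absent, whereas the plain inverse frequency grows without bound as a class becomes rare.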

Calling the library (weights set manually)

import torch
import torch.nn as nn

# Assume some model outputs and target labels
model_output = torch.randn(3, 5)  # assume 5 classes
target = torch.empty(3, dtype=torch.long).random_(5)

# Define the weights
weights = torch.tensor([1.0, 2.0, 3.0, 4.0, 5.0])

# Define the cross-entropy loss and set the weights
criterion = nn.CrossEntropyLoss(weight=weights)

# Compute the loss
loss = criterion(model_output, target)
print(loss)

Computing the weights adaptively

import torch
import torch.nn as nn
import numpy as np

# Assume a batch of 10 samples, each belonging to one of 4 classes
batch_size = 10
num_classes = 4

# Randomly generate logits that have not passed through softmax (the output of the network's last layer)
logits = torch.randn(batch_size, num_classes, requires_grad=True)

# Ground-truth labels (class index of each sample), e.g. [0, 2, 1, 3, 0, 0, 1, 2, 3, 3]
labels = torch.tensor([0, 2, 1, 3, 0, 0, 1, 2, 3, 3])

# Count how often each class occurs
class_counts = torch.bincount(labels, minlength=num_classes).float()

# Compute the weight of each class; here the weight is the reciprocal of the class frequency
# A small constant epsilon is added to keep the denominator from being zero
epsilon = 1e-6
class_weights = 1.0 / (class_counts + epsilon)

# Normalize the weights so that they sum to 1
class_weights /= class_weights.sum()

print('Class Counts:', class_counts)
print('Class Weights:', class_weights)

# Create the weighted cross-entropy loss
criterion = nn.CrossEntropyLoss(weight=class_weights)

# Compute the loss value
loss = criterion(logits, labels)

print('Logits:\n', logits)
print('Labels:\n', labels)
print('Weighted Cross-Entropy Loss:', loss.item())

# Backpropagate the gradients
loss.backward()
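One consequence of the weighted mean is worth noting for the normalization step above: with reduction='mean' the loss is divided by the sum of the target weights, so rescaling all weights by a constant leaves the result unchanged. The sketch below checks this on the same labels; the random logits are an assumption for illustration.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

logits = torch.randn(10, 4)
labels = torch.tensor([0, 2, 1, 3, 0, 0, 1, 2, 3, 3])

counts = torch.bincount(labels, minlength=4).float()
raw = 1.0 / (counts + 1e-6)        # inverse-frequency weights
normalized = raw / raw.sum()       # same weights, rescaled to sum to 1

# Mean reduction: the scale of the weights cancels out
mean_raw = F.cross_entropy(logits, labels, weight=raw)
mean_norm = F.cross_entropy(logits, labels, weight=normalized)

# Sum reduction: the scale of the weights carries through
sum_raw = F.cross_entropy(logits, labels, weight=raw, reduction="sum")
sum_norm = F.cross_entropy(logits, labels, weight=normalized, reduction="sum")

print("mean:", mean_raw.item(), mean_norm.item())
print("sum: ", sum_raw.item(), sum_norm.item())
```

Normalization therefore only matters for reduction='sum' or reduction='none', or when the loss is combined with other terms on a fixed scale.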

Error

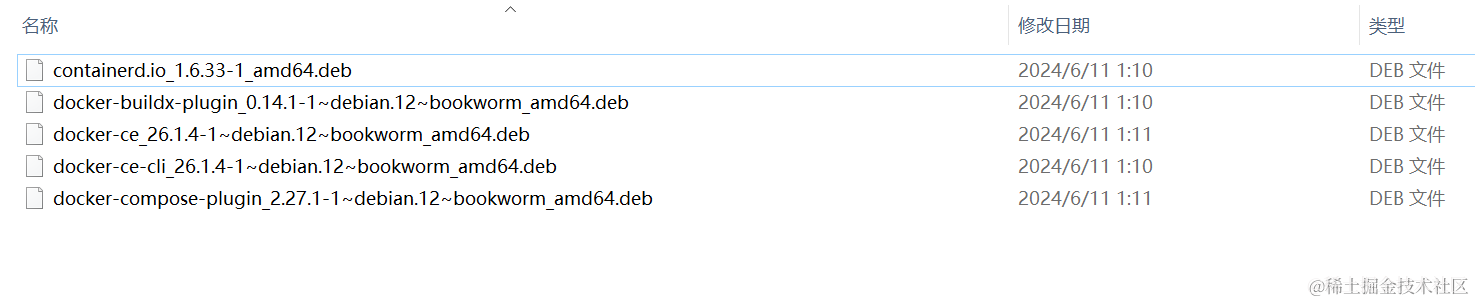

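Weight=torch.from_numpy(np.array([0.1, 0.8, 1.0, 1.0])).float() raises an error.

Weight=torch.from_numpy(np.array([0.1, 0.8, 1.0, 1.0])).double() works.

The likely cause is a dtype mismatch: the weight tensor must have the same dtype as the logits. A tensor created with torch.from_numpy from a default NumPy array, such as pred in the segmentation example above, is float64, so the weights have to be double as well. With float32 logits, the .float() weights are the correct choice.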
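Rather than hard-coding .float() or .double(), the weights can be cast to whatever dtype the logits have. The following is a minimal sketch under the assumption that the error comes from a float64/float32 mismatch; the exact error message depends on the PyTorch version, so it is captured rather than asserted.

```python
import numpy as np
import torch
import torch.nn.functional as F

np.random.seed(0)

# Logits built from a NumPy array are float64 by default
logits64 = torch.from_numpy(np.random.randn(3, 4))
target = torch.tensor([0, 2, 3])
w32 = torch.from_numpy(np.array([0.1, 0.8, 1.0, 1.0])).float()

# float64 logits with float32 weights: record the error if one is raised
try:
    F.cross_entropy(logits64, target, weight=w32)
    mismatch_error = None
except RuntimeError as exc:
    mismatch_error = str(exc)
print("mismatch error:", mismatch_error)

# Option 1: cast the weights to the dtype of the logits
loss64 = F.cross_entropy(logits64, target, weight=w32.to(logits64.dtype))

# Option 2: cast the logits to float32, the usual dtype for training
loss32 = F.cross_entropy(logits64.float(), target, weight=w32)

print(loss64.dtype, loss32.dtype)
```

Casting the weights with .to(outputs.dtype) inside the loss module keeps it usable with both float32 and float64 inputs.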

References:

[1] https://blog.csdn.net/CSDN_of_ding/article/details/111515226

[2] https://blog.csdn.net/qq_40306845/article/details/137651442

[3] https://www.zhihu.com/question/400443029/answer/2477658229
