Reinforcement Learning

An agent performs actions in an environment and receives rewards.

Goal: learn how to take actions that maximize reward

Stochasticity: Rewards and state transitions may be random

Credit assignment: Reward $r_t$ may not directly depend on action $a_t$

Nondifferentiable: Can’t backprop through the world

Nonstationary: What the agent experiences depends on how it acts

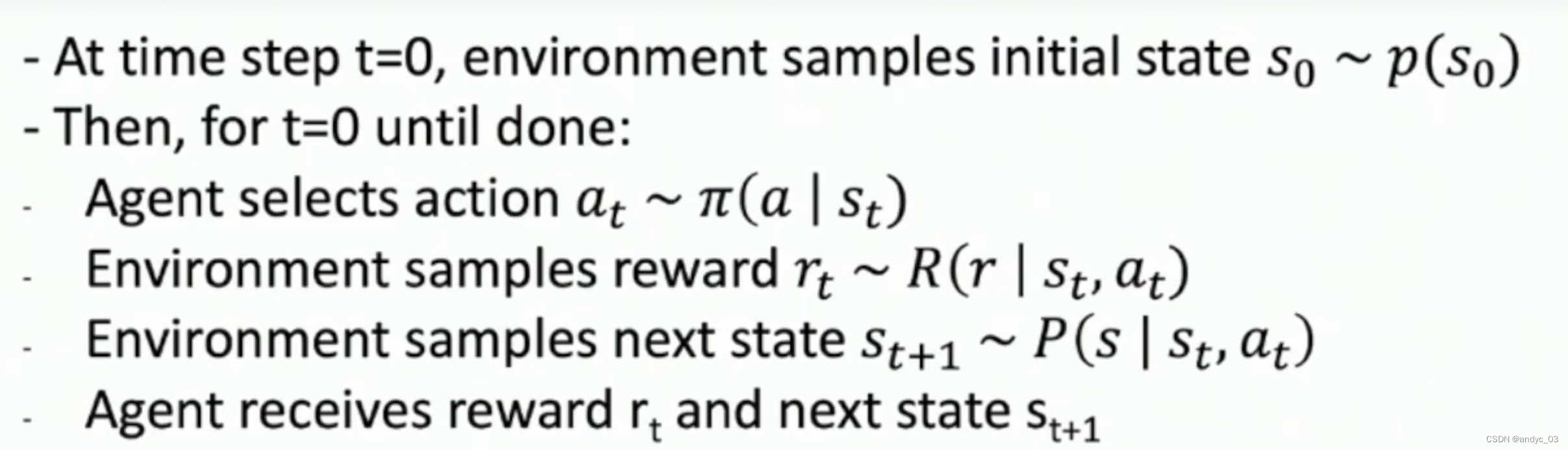

Markov Decision Process (MDP)

Mathematical formalization of the RL problem: A tuple $(S, A, R, P, \gamma)$

$S$: Set of possible states

$A$: Set of possible actions

$R$: Distribution of reward given (state, action) pair

$P$: Transition probability: distribution over next state given (state, action) pair

$\gamma$: Discount factor (trade-off between future and present rewards)

Markov Property: The current state completely characterizes the state of the world. Rewards and next states depend only on current state, not history.

The agent executes a policy $\pi$ giving a distribution of actions conditioned on states.

Goal: Find the best policy, the one that maximizes the cumulative discounted reward $\sum_t \gamma^t r_t$

Because rewards and state transitions are random, this sum is itself a random variable, so we maximize its expected value.
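As a small illustration of the discounted sum, here is a minimal sketch that computes it for one finite reward sequence. The function name `discounted_return` and the example rewards are made up for illustration; the expectation over many such sequences is what the agent actually tries to maximize.

```python
def discounted_return(rewards, gamma):
    """Return sum_t gamma**t * rewards[t] for a finite reward sequence."""
    total = 0.0
    # Work backwards so each step applies G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        total = r + gamma * total
    return total


# Hypothetical rewards observed at t = 0, 1, 2.
rewards = [1.0, 0.0, 2.0]
print(discounted_return(rewards, gamma=0.5))  # 1 + 0.5*0 + 0.25*2 = 1.5
```

With $\gamma$ close to 0 the agent mostly cares about immediate reward; with $\gamma$ close to 1, later rewards count almost as much as early ones.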

Value function $V^{\pi}(s)$: expected cumulative discounted reward from following policy $\pi$ starting in state $s$

Q function $Q^{\pi}(s, a)$: expected cumulative discounted reward from taking action $a$ in state $s$ and then following policy $\pi$
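Written out, the two definitions look as follows. This is a sketch under the assumption that time is indexed from $t = 0$ and that $s_0$, $a_0$ denote the starting state and first action; this indexing is not fixed by the notes themselves.

```latex
V^{\pi}(s) = \mathbb{E}\left[ \sum_{t \ge 0} \gamma^t r_t \,\middle|\, s_0 = s,\ \pi \right]
\qquad
Q^{\pi}(s, a) = \mathbb{E}\left[ \sum_{t \ge 0} \gamma^t r_t \,\middle|\, s_0 = s,\ a_0 = a,\ \pi \right]
```

The two are linked by averaging over the policy's first action: $V^{\pi}(s) = \mathbb{E}_{a \sim \pi(\cdot \mid s)}\left[ Q^{\pi}(s, a) \right]$.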

Bellman Equation

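After taking action $a$ in state $s$, we get reward $r$ and move to a new state $s'$. After that, the maximum expected reward we can get is $\max_{a'} Q^*(s', a')$, where $Q^*$ is the Q function of the optimal policy. This gives the Bellman equation: $Q^*(s, a) = \mathbb{E}_{r, s'}\left[ r + \gamma \max_{a'} Q^*(s', a') \right]$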

Idea: if we find a function $Q(s, a)$ that satisfies the Bellman equation, then it must be the optimal $Q^*$.

Start with a random $Q$, and use the Bellman equation as an update rule.
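The update rule above can be sketched on a toy problem. Everything concrete here is an assumption for illustration: the two-state, two-action MDP in `TRANSITIONS`, the discount `GAMMA = 0.5`, and the function names. The sketch also assumes the transition and reward model is known, so the expectation in the Bellman equation can be computed exactly.

```python
import random

GAMMA = 0.5
STATES = [0, 1]
ACTIONS = [0, 1]  # 0 = "stay", 1 = "move" (hypothetical labels)

# Hypothetical toy MDP:
# TRANSITIONS[(state, action)] = list of (probability, next_state, reward).
TRANSITIONS = {
    (0, 0): [(1.0, 0, 0.0)],
    (0, 1): [(1.0, 1, 1.0)],
    (1, 0): [(1.0, 1, 2.0)],
    (1, 1): [(1.0, 0, 0.0)],
}


def bellman_update(q):
    """Apply Q(s,a) <- E[r + gamma * max_a' Q(s',a')] to every (s, a)."""
    new_q = {}
    for (s, a), outcomes in TRANSITIONS.items():
        new_q[(s, a)] = sum(
            p * (r + GAMMA * max(q[(s2, a2)] for a2 in ACTIONS))
            for p, s2, r in outcomes
        )
    return new_q


def q_iteration(num_iters=100, seed=0):
    """Start from a random Q table and repeatedly apply the Bellman update."""
    rng = random.Random(seed)
    q = {(s, a): rng.uniform(-1.0, 1.0) for s in STATES for a in ACTIONS}
    for _ in range(num_iters):
        q = bellman_update(q)
    return q


q_star = q_iteration()
for key in sorted(q_star):
    print(key, round(q_star[key], 4))
```

For this toy MDP the fixed point can be checked by hand: staying in state 1 earns 2 per step, so $Q^*(1, 0) = 2 / (1 - 0.5) = 4$, and moving from state 0 gives $Q^*(0, 1) = 1 + 0.5 \cdot 4 = 3$.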

But if the state space is large or infinite, we can't iterate over every (state, action) pair.

Approximate $Q(s, a)$ with a neural network, and use the Bellman equation to define the loss function.

-> Deep Q-learning

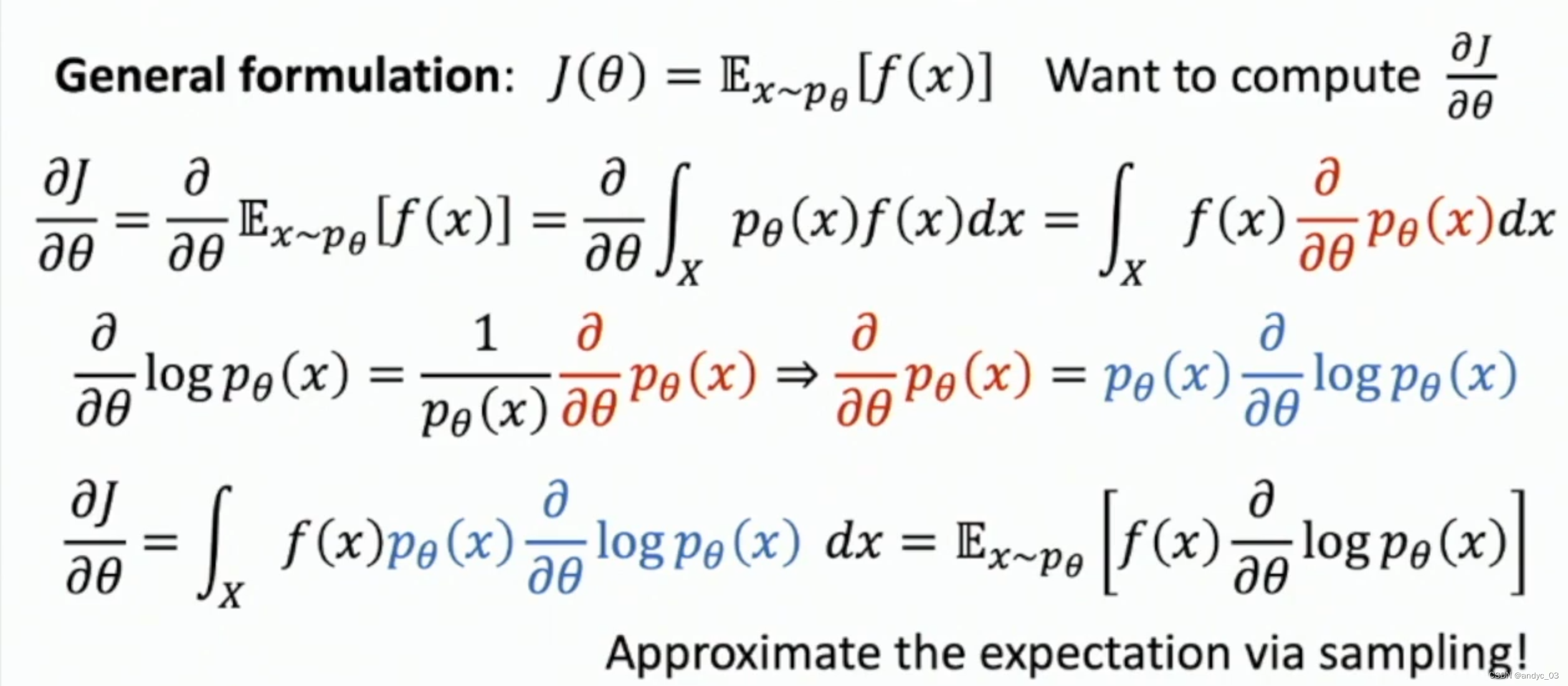
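One way to turn the Bellman equation into a loss is to penalize the squared gap between the predicted $Q(s, a)$ and the target $r + \gamma \max_{a'} Q(s', a')$. The sketch below shows only that computation, in plain Python. The names `td_target`, `bellman_loss`, and `toy_q_fn`, the `done` flag for terminal transitions, and the batch layout are all assumptions for illustration; `toy_q_fn` stands in for a real neural network, and no gradient step is shown.

```python
def td_target(reward, next_q_values, gamma, done):
    """Bellman target: r + gamma * max_a' Q(s', a'), or just r at episode end."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)


def bellman_loss(q_fn, batch, gamma):
    """Mean squared Bellman error over (state, action, reward, next_state, done)."""
    total = 0.0
    for state, action, reward, next_state, done in batch:
        target = td_target(reward, q_fn(next_state), gamma, done)
        prediction = q_fn(state)[action]
        total += (prediction - target) ** 2
    return total / len(batch)


def toy_q_fn(state):
    """Hypothetical stand-in for a Q network: maps a scalar state to 2 action values."""
    return [0.5 * state, 1.0 - state]


# Two made-up transitions: one ordinary step and one terminal step.
batch = [
    (0.0, 1, 1.0, 1.0, False),
    (1.0, 0, 2.0, 1.0, True),
]
print(bellman_loss(toy_q_fn, batch, gamma=0.9))
```

In a real training loop the target is usually treated as a constant when differentiating, so that only the prediction term pulls on the network weights.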

Policy Gradients

Train a network $\pi_{\theta}(a \mid s)$ that takes the state as input and gives a distribution over which action to take

Objective function: Expected future rewards when following policy $\pi_{\theta}$

Use gradient ascent on $\theta$. The environment is not differentiable, so the gradient has to be estimated with tricks that avoid backpropagating through it.
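The notes do not say which trick is meant. One common choice is the score-function (REINFORCE) estimator, which uses $\nabla_{\theta} J = \mathbb{E}\left[ R \, \nabla_{\theta} \log \pi_{\theta}(a \mid s) \right]$ and so only needs the gradient of the policy, not of the environment. The sketch below applies it to a hypothetical single-state problem (a multi-armed bandit) with a softmax policy over raw logits instead of a network. The function names, learning rate, and reward values are all assumptions for illustration.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def grad_log_prob(logits, action):
    """Gradient of log softmax(logits)[action] with respect to the logits."""
    probs = softmax(logits)
    return [(1.0 if k == action else 0.0) - p for k, p in enumerate(probs)]


def reinforce_bandit(arm_rewards, steps=2000, lr=0.1, seed=0):
    """Gradient ascent on expected reward using the score-function estimator."""
    rng = random.Random(seed)
    logits = [0.0] * len(arm_rewards)
    for _ in range(steps):
        probs = softmax(logits)
        # Sample an action from the current policy.
        action = rng.choices(range(len(probs)), weights=probs)[0]
        reward = arm_rewards[action]  # deterministic reward, for simplicity
        grad = grad_log_prob(logits, action)
        # Ascent step: theta <- theta + lr * reward * grad log pi(action).
        logits = [t + lr * reward * g for t, g in zip(logits, grad)]
    return softmax(logits)


# Hypothetical bandit where arm 1 pays 1.0 and arm 0 pays nothing.
print(reinforce_bandit([0.0, 1.0]))
```

The learned distribution should shift most of its probability onto the rewarding arm. Practical implementations usually also subtract a baseline from the reward to reduce the variance of the estimate.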

Other approaches:

Actor-Critic

Model-Based

Imitation Learning

Inverse Reinforcement Learning

Adversarial Learning

…

Stochastic computation graphs