I. References

II. Preparation

0. System environment

Environment

Operating System + Version: Ubuntu + 16.04

CPU Type: AMD Ryzen 5 5600X 6-Core Processor@3.7GHz

RAM: 32GB

Python Version (if applicable): 3.9.5

PyTorch Version (if applicable): 2.0.1+cu11

torchvision: 0.10.0+cu11

onnx: 1.16.0

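1. Model preparation

The model converter tool provided by MindSpore Lite converts mainstream model formats to the MindIR format. You need to export the corresponding model file first; exporting to ONNX is recommended.

1.1 PyTorch to ONNX

Exporting to ONNX from PyTorch

Chapter 3: PyTorch to ONNX in detail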

import torch
import torchvision


def export_onnx():
    model = torchvision.models.mobilenet_v2()
    model_name = "mobilenet_v2"
    # If the model contains BatchNorm or Dropout layers, always call eval() before exporting
    model.eval()

    BATCH_SIZE = 1
    dummy_input = torch.randn(BATCH_SIZE, 3, 224, 224)

    # Save the traced PyTorch (TorchScript) model
    traced_script_module = torch.jit.trace(model, dummy_input)
    traced_script_module.save("{}.pt".format(model_name))

    model_onnx = torch.jit.load("{}.pt".format(model_name))
    model_onnx.eval()

    # Save the ONNX model
    torch.onnx.export(
        model_onnx,
        dummy_input,
        "{}.onnx".format(model_name),
        opset_version=13,
        do_constant_folding=True,
        input_names=["input_0"],
        output_names=["output_0"],
        # Keys must match input_names/output_names.
        # dynamic_axes makes the batch dimension dynamic; omit it to export a static-shape model.
        dynamic_axes={"input_0": {0: "batch_size"},
                      "output_0": {0: "batch_size"}},
    )


if __name__ == "__main__":
    export_onnx()

1.2 TensorFlow to ONNX

Exporting to ONNX from TensorFlow

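2. Parameter preparation

MSLite involves a compile-and-optimize step and does not support a fully dynamic weight mode, so the inputShape must be fixed at conversion time; it is used when compiling and converting the model format. You can look up the input shape on the Netron website, or print the shape of the inputs in the model structure, and make the input batch size explicit.

In general, the inputShape specified for inference is closely tied to your business and inference scenario; it can be determined from the original model's inference script or from the network model itself.

If Netron does not show an inputShape, the model may be using dynamic shapes. Make sure you use a static-shape model; for how to export one, see the previous section, "Model preparation".

3. Download MindSpore Lite

4. (Optional) Build MindSpore Lite

If the downloaded MindSpore Lite package does not meet your needs, you can build the package yourself.

For the detailed build steps, see: Building MindSpore Lite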

# Build for the x86_64 architecture
bash build.sh -I x86_64 -j12

# Build the debug version
bash build.sh -I x86_64 -j12 -d

# Incremental build
bash build.sh -I x86_64 -j12 -i

Output:

...

...

...

[ 99%] Built target benchmark

[100%] Built target cropper

Run CPack packaging tool...

CPack: Create package using TGZ

CPack: Install projects

CPack: - Run preinstall target for: Lite

CPack: - Install project: Lite []

CPack: - Install component: linux-x64

CPack: Create package

CPack: - package: /home/yoyo/MyDocuments/C++Projects/mindspore/output/tmp/mindspore-lite-1.8.2-linux-x64.tar.gz generated.

CPack: - checksum file: /home/yoyo/MyDocuments/C++Projects/mindspore/output/tmp/mindspore-lite-1.8.2-linux-x64.tar.gz.sha256 generated.

Python3 not found, so Python API will not be compiled.

JAVA_HOME is not set, so jni and jar packages will not be compiled

If you want to compile the JAR package, please set . For example: export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-amd64

---------------- mindspore lite: build success ----------------

---------------- MindSpore: build end ----------------

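After a successful build, the extracted MindSpore Lite package has the following directory layout:

mindspore-lite-{version}-linux-x64
└── tools
    └── converter
        ├── include
        │   └── registry                     # Headers for registering custom operators, model parsers, node parsers, and conversion optimizations
        ├── converter                        # Model conversion tool
        │   └── converter_lite               # Executable
        └── lib                              # Dynamic libraries the conversion tool depends on
            ├── libmindspore_glog.so.0       # Glog dynamic library
            ├── libmslite_converter_plugin.so  # Dynamic library for registering plugins
            ├── libopencv_core.so.4.5        # OpenCV dynamic library
            ├── libopencv_imgcodecs.so.4.5   # OpenCV dynamic library
            └── libopencv_imgproc.so.4.5     # OpenCV dynamic library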

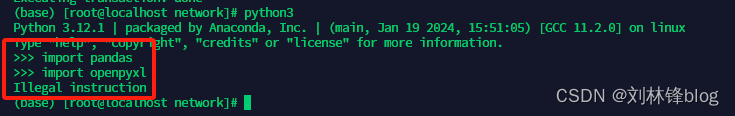

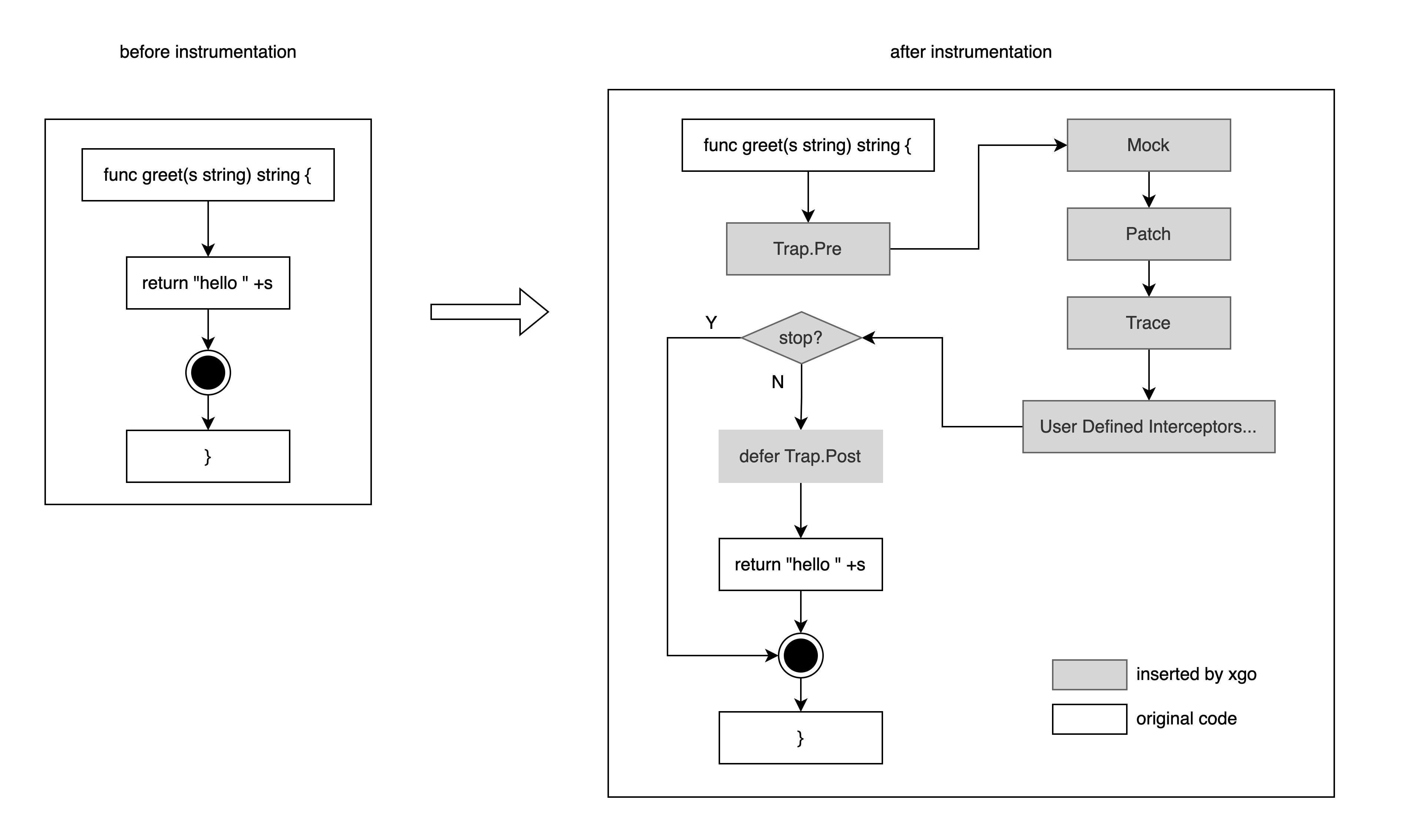

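Building the PyTorch-enabled version

MindSpore r2.0 and later support converting PyTorch models. However, the build option that enables PyTorch model conversion is off by default, so the downloadable packages cannot convert PyTorch models; you need to enable the option and build locally.

Download the CPU version of libtorch and extract it to /home/user/libtorch. Set the environment variables:

export MSLITE_ENABLE_CONVERT_PYTORCH_MODEL=on
export LD_LIBRARY_PATH="/home/user/libtorch/lib:${LD_LIBRARY_PATH}"
export LIB_TORCH_PATH="/home/user/libtorch"

Build:

# Build
bash build.sh -I x86_64 -j12

As the figure above shows, the MindSpore Lite package built with PyTorch support is over 800 MB, while the official Release package (without PyTorch support) is only a little over 60 MB. This is an interesting difference that I would like to look into when time permits.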

5. Set the log level

Log levels: 0 is DEBUG, 1 is INFO, 2 is WARNING, and 3 is ERROR.

| Log level | GLOG_v |
|---|---|
| DEBUG | 0 |
| INFO | 1 |
| WARNING | 2 |
| ERROR | 3 |

# Set the log level to INFO (on Linux, use export so child processes inherit the variable)
export GLOG_v=1
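When the tools are launched from a script, the same mapping can be applied per process instead of in the shell. The following is a minimal sketch under that assumption; `glog_env` and `GLOG_LEVELS` are hypothetical names, and only the `GLOG_v` variable and its four values come from the table above.

```python
import os

# Log-level names and their GLOG_v values, as listed in the table above.
GLOG_LEVELS = {"DEBUG": 0, "INFO": 1, "WARNING": 2, "ERROR": 3}


def glog_env(level, base_env=None):
    """Return a copy of the environment with GLOG_v set for the given level name."""
    if level not in GLOG_LEVELS:
        raise ValueError("unknown log level: {}".format(level))
    env = dict(os.environ if base_env is None else base_env)
    env["GLOG_v"] = str(GLOG_LEVELS[level])
    return env


if __name__ == "__main__":
    print(glog_env("INFO", base_env={})["GLOG_v"])  # 1
```

The returned dictionary can be passed as the `env` argument of `subprocess.run` when starting `converter_lite` or `benchmark`.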

6. MindSpore Lite API

MindSpore Lite API

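Model conversion based on MindSpore Lite

MindSpore Lite provides Java, C++, and Python APIs for adapting inference workloads. When a model is built, the runtime configuration, such as the backend to run on, is determined through the context parameters.

III. Model conversion with converter_lite

# Set the environment variable
export LD_LIBRARY_PATH=${PACKAGE_ROOT_PATH}/tools/converter/lib:${LD_LIBRARY_PATH}

# Change to the directory containing the conversion tool
cd ${PACKAGE_ROOT_PATH}/tools/converter/converter

# Convert an ONNX model
./converter_lite --fmk=ONNX --modelFile=mobilenetv2-12.onnx --outputFile=mobilenetv2-12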

(mslite) yoyo@yoyo:~/MyDocuments/C++Projects/mindspore/output/mindspore-lite-1.9.0-linux-x64/tools/converter/converter$ ./converter_lite --fmk=ONNX --modelFile=mobilenetv2-12.onnx --outputFile=mobilenetv2-12

[WARNING] LITE(24326,7fdabd607f40,converter_lite):2024-04-02-20:19:26.855.514 [mindspore/lite/tools/optimizer/graph/infershape_pass.cc:302] InferProcess] node infer shape failed, node is Conv_0

[WARNING] LITE(24326,7fdabd607f40,converter_lite):2024-04-02-20:19:26.855.529 [mindspore/lite/tools/optimizer/graph/infershape_pass.cc:184] Run] infer shape failed.

CONVERT RESULT SUCCESS:0

1. Set the input data format

--inputDataFormat=<INPUTDATAFORMAT>

- Set the input data format to NCHW:

(mslite) yoyo@yoyo:~/MyDocuments/C++Projects/mindspore/output/mindspore-lite-1.9.0-linux-x64/tools/converter/converter$ ./converter_lite --fmk=ONNX --modelFile=mobilenetv2-12.onnx --outputFile=mobilenetv2-12NCHW --inputDataFormat=NCHW

[WARNING] LITE(24786,7feadf39df40,converter_lite):2024-04-02-20:42:39.913.710 [mindspore/lite/tools/optimizer/graph/infershape_pass.cc:302] InferProcess] node infer shape failed, node is Conv_0

[WARNING] LITE(24786,7feadf39df40,converter_lite):2024-04-02-20:42:39.913.726 [mindspore/lite/tools/optimizer/graph/infershape_pass.cc:184] Run] infer shape failed.

CONVERT RESULT SUCCESS:0

- Set the input data format to NHWC:

(mslite) yoyo@yoyo:~/MyDocuments/C++Projects/mindspore/output/mindspore-lite-1.9.0-linux-x64/tools/converter/converter$ ./converter_lite --fmk=ONNX --modelFile=mobilenetv2-12.onnx --outputFile=mobilenetv2-12NHWC --inputDataFormat=NHWC

[WARNING] LITE(25344,7fa070c77f40,converter_lite):2024-04-02-21:10:43.436.991 [mindspore/lite/tools/optimizer/graph/infershape_pass.cc:302] InferProcess] node infer shape failed, node is Conv_0

[WARNING] LITE(25344,7fa070c77f40,converter_lite):2024-04-02-21:10:43.437.007 [mindspore/lite/tools/optimizer/graph/infershape_pass.cc:184] Run] infer shape failed.

CONVERT RESULT SUCCESS:0

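2. Set the input dimensions

--inputShape=<INPUTSHAPE>

Sets the dimensions of the model input; the dimension order must match the original model. For some models this enables further optimization of the model structure, but the converted model may lose its dynamic-shape capability.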

(mslite) yoyo@yoyo:~/MyDocuments/C++Projects/mindspore/output/mindspore-lite-1.9.0-linux-x64/tools/converter/converter$ ./converter_lite --fmk=ONNX --modelFile=mobilenetv2-12.onnx --outputFile=mobilenetv2-12NCHW --inputDataFormat=NCHW --inputShape="input:1,3,224,224"

CONVERT RESULT SUCCESS:0
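The converter invocations above differ only in a few flags, so they can be assembled programmatically. The sketch below is one way to do that; `build_converter_cmd` and `format_input_shape` are hypothetical helper names, the flags are the ones used in the commands above, and joining several inputs with `;` is an assumption about the `--inputShape` syntax that should be checked against the converter documentation.

```python
import shlex


def format_input_shape(shapes):
    """Render {"input": (1, 3, 224, 224)} as "input:1,3,224,224".

    Assumption: multiple inputs are separated by ';'.
    """
    return ";".join(
        "{}:{}".format(name, ",".join(str(d) for d in dims))
        for name, dims in shapes.items()
    )


def build_converter_cmd(fmk, model_file, output_file,
                        input_data_format=None, input_shapes=None):
    """Assemble the converter_lite argument list from the flags shown above."""
    cmd = [
        "./converter_lite",
        "--fmk={}".format(fmk),
        "--modelFile={}".format(model_file),
        "--outputFile={}".format(output_file),
    ]
    if input_data_format is not None:
        cmd.append("--inputDataFormat={}".format(input_data_format))
    if input_shapes:
        cmd.append("--inputShape={}".format(format_input_shape(input_shapes)))
    return cmd


if __name__ == "__main__":
    cmd = build_converter_cmd(
        "ONNX", "mobilenetv2-12.onnx", "mobilenetv2-12NCHW",
        input_data_format="NCHW",
        input_shapes={"input": (1, 3, 224, 224)},
    )
    # Print a shell-safe version of the command; run it with subprocess.run(cmd).
    print(" ".join(shlex.quote(part) for part in cmd))
```

Returning a list rather than a single string keeps the arguments safe to pass to `subprocess.run` without shell quoting.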

3. Convert a PyTorch model

# Set the libtorch environment variables

export LD_LIBRARY_PATH="/home/user/libtorch/lib:${LD_LIBRARY_PATH}"

export LIB_TORCH_PATH="/home/user/libtorch"

./converter_lite --fmk=PYTORCH --modelFile=model.pt --outputFile=model

IV. Model inference with benchmark

# Set the environment variable

export LD_LIBRARY_PATH=${PACKAGE_ROOT_PATH}/runtime/lib:${LD_LIBRARY_PATH}

./benchmark --modelFile=/path/to/model.ms

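1. Set the input dimensions

--inputShapes=<INPUTSHAPES>

The input data format used for benchmark inference must match the one used during converter_lite conversion; otherwise benchmark inference fails.

- With the input data format set to NHWC: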

(mslite) yoyo@yoyo:~/MyDocuments/C++Projects/mindspore/output/mindspore-lite-1.9.0-linux-x64/tools/benchmark$ ./benchmark --modelFile=mobilenetv2-12.ms --inputShapes="1,224,224,3"

ModelPath = mobilenetv2-12.ms

ModelType = MindIR

InDataPath =

ConfigFilePath =

InDataType = bin

LoopCount = 10

DeviceType = CPU

AccuracyThreshold = 0.5

CosineDistanceThreshold = -1.1

WarmUpLoopCount = 3

NumThreads = 2

InterOpParallelNum = 1

Fp16Priority = 0

EnableParallel = 0

calibDataPath =

EnableGLTexture = 0

cpuBindMode = HIGHER_CPU

CalibDataType = FLOAT

Resize Dims: 1 224 224 3

start unified benchmark run

PrepareTime = 18.894 ms

Running warm up loops...

Running benchmark loops...

Model = mobilenetv2-12.ms, NumThreads = 2, MinRunTime = 3.344000 ms, MaxRuntime = 4.421000 ms, AvgRunTime = 3.532000 ms

Run Benchmark mobilenetv2-12.ms Success.

- With the input data format set to NCHW:

(mslite) yoyo@yoyo:~/MyDocuments/C++Projects/mindspore/output/mindspore-lite-1.9.0-linux-x64/tools/benchmark$ ./benchmark --modelFile=mobilenetv2-12NCHW.ms --inputShapes="1,3,224,224"

ModelPath = mobilenetv2-12NCHW.ms

ModelType = MindIR

InDataPath =

ConfigFilePath =

InDataType = bin

LoopCount = 10

DeviceType = CPU

AccuracyThreshold = 0.5

CosineDistanceThreshold = -1.1

WarmUpLoopCount = 3

NumThreads = 2

InterOpParallelNum = 1

Fp16Priority = 0

EnableParallel = 0

calibDataPath =

EnableGLTexture = 0

cpuBindMode = HIGHER_CPU

CalibDataType = FLOAT

Resize Dims: 1 3 224 224

start unified benchmark run

PrepareTime = 16.325 ms

Running warm up loops...

Running benchmark loops...

Model = mobilenetv2-12NCHW.ms, NumThreads = 2, MinRunTime = 3.459000 ms, MaxRuntime = 4.462000 ms, AvgRunTime = 3.779000 ms

Run Benchmark mobilenetv2-12NCHW.ms Success.
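The two runs above pass the same tensor shape in two layouts, "1,224,224,3" for NHWC and "1,3,224,224" for NCHW. A small sketch of that reordering follows; `nchw_to_nhwc` and `to_input_shapes_arg` are hypothetical helper names, not part of MindSpore Lite.

```python
def nchw_to_nhwc(shape):
    """Reorder a 4-D (N, C, H, W) shape to (N, H, W, C)."""
    if len(shape) != 4:
        raise ValueError("expected a 4-D shape, got {}".format(shape))
    n, c, h, w = shape
    return (n, h, w, c)


def to_input_shapes_arg(shape):
    """Format a shape tuple as the value passed to --inputShapes."""
    return ",".join(str(d) for d in shape)


if __name__ == "__main__":
    nchw = (1, 3, 224, 224)
    print(to_input_shapes_arg(nchw))                # 1,3,224,224
    print(to_input_shapes_arg(nchw_to_nhwc(nchw)))  # 1,224,224,3
```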

2. Set the number of inference runs

--loopCount=<LOOPCOUNT>

(mslite) yoyo@yoyo:~/MyDocuments/C++Projects/mindspore/output/mindspore-lite-1.9.0-linux-x64/tools/benchmark$ ./benchmark --modelFile=mobilenetv2-12NCHW.ms --inputShapes="1,3,224,224" --loopCount=1000

ModelPath = mobilenetv2-12NCHW.ms

ModelType = MindIR

InDataPath =

ConfigFilePath =

InDataType = bin

LoopCount = 1000

DeviceType = CPU

AccuracyThreshold = 0.5

CosineDistanceThreshold = -1.1

WarmUpLoopCount = 3

NumThreads = 2

InterOpParallelNum = 1

Fp16Priority = 0

EnableParallel = 0

calibDataPath =

EnableGLTexture = 0

cpuBindMode = HIGHER_CPU

CalibDataType = FLOAT

Resize Dims: 1 3 224 224

start unified benchmark run

PrepareTime = 19.141 ms

Running warm up loops...

Running benchmark loops...

Model = mobilenetv2-12NCHW.ms, NumThreads = 2, MinRunTime = 3.409000 ms, MaxRuntime = 5.176000 ms, AvgRunTime = 3.501000 ms

Run Benchmark mobilenetv2-12NCHW.ms Success.

3. Set the number of threads

--numThreads=<NUMTHREADS>

(mslite) yoyo@yoyo:~/MyDocuments/C++Projects/mindspore/output/mindspore-lite-1.9.0-linux-x64/tools/benchmark$ ./benchmark --modelFile=mobilenetv2-12NCHW.ms --inputShapes="1,3,224,224" --loopCount=1000 --numThreads=12

ModelPath = mobilenetv2-12NCHW.ms

ModelType = MindIR

InDataPath =

ConfigFilePath =

InDataType = bin

LoopCount = 1000

DeviceType = CPU

AccuracyThreshold = 0.5

CosineDistanceThreshold = -1.1

WarmUpLoopCount = 3

NumThreads = 12

InterOpParallelNum = 1

Fp16Priority = 0

EnableParallel = 0

calibDataPath =

EnableGLTexture = 0

cpuBindMode = HIGHER_CPU

CalibDataType = FLOAT

Resize Dims: 1 3 224 224

start unified benchmark run

PrepareTime = 20.17 ms

Running warm up loops...

Running benchmark loops...

Model = mobilenetv2-12NCHW.ms, NumThreads = 12, MinRunTime = 1.675000 ms, MaxRuntime = 45.550999 ms, AvgRunTime = 2.028000 ms

Run Benchmark mobilenetv2-12NCHW.ms Success.
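To compare runs, the final summary line of each benchmark log can be parsed and the average times divided. The sketch below does this for the two 1000-loop runs above (2 threads and 12 threads); `parse_summary` is a hypothetical helper, and the regular expression only assumes the summary format printed in these logs.

```python
import re

SUMMARY_RE = re.compile(
    r"NumThreads = (\d+), MinRunTime = ([\d.]+) ms, "
    r"MaxRuntime = ([\d.]+) ms, AvgRunTime = ([\d.]+) ms"
)


def parse_summary(line):
    """Extract thread count and min/max/avg run times (ms) from a summary line."""
    match = SUMMARY_RE.search(line)
    if match is None:
        raise ValueError("not a benchmark summary line")
    threads, t_min, t_max, t_avg = match.groups()
    return {"threads": int(threads), "min": float(t_min),
            "max": float(t_max), "avg": float(t_avg)}


if __name__ == "__main__":
    # Summary lines copied from the two 1000-loop runs above.
    two = parse_summary(
        "Model = mobilenetv2-12NCHW.ms, NumThreads = 2, MinRunTime = 3.409000 ms, "
        "MaxRuntime = 5.176000 ms, AvgRunTime = 3.501000 ms")
    twelve = parse_summary(
        "Model = mobilenetv2-12NCHW.ms, NumThreads = 12, MinRunTime = 1.675000 ms, "
        "MaxRuntime = 45.550999 ms, AvgRunTime = 2.028000 ms")
    speedup = two["avg"] / twelve["avg"]
    print("speedup: {:.2f}x with {}x the threads".format(
        speedup, twelve["threads"] // two["threads"]))
```

In these two runs, six times as many threads lowered the average time by a factor of roughly 1.7, while MaxRuntime rose from 5.176 ms to 45.551 ms, so the thread count is worth tuning rather than simply maximizing.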

4. Measure the time of each operator

--timeProfiling=<TIMEPROFILING>

(mslite) yoyo@yoyo:~/MyDocuments/C++Projects/mindspore/output/mindspore-lite-1.9.0-linux-x64/tools/benchmark$ ./benchmark --modelFile=mobilenetv2-12NCHW.ms --inputShapes="1,3,224,224" --timeProfiling=true

ModelPath = mobilenetv2-12NCHW.ms

ModelType = MindIR

InDataPath =

ConfigFilePath =

InDataType = bin

LoopCount = 10

DeviceType = CPU

AccuracyThreshold = 0.5

CosineDistanceThreshold = -1.1

WarmUpLoopCount = 3

NumThreads = 2

InterOpParallelNum = 1

Fp16Priority = 0

EnableParallel = 0

calibDataPath =

EnableGLTexture = 0

cpuBindMode = HIGHER_CPU

CalibDataType = FLOAT

Resize Dims: 1 3 224 224

start unified benchmark run

PrepareTime = 17.445 ms

Running warm up loops...

Running benchmark loops...

-------------------------------------------------------------------------

opName avg(ms) percent calledTimes opTotalTime

Add_15 0.013500 0.003393 10 0.135000

Add_26 0.004700 0.001181 10 0.047000

Add_32 0.004200 0.001056 10 0.042000

Add_43 0.003700 0.000930 10 0.037000

Add_49 0.003600 0.000905 10 0.036000

Add_55 0.003600 0.000905 10 0.036000

Add_66 0.004200 0.001056 10 0.042000

Add_72 0.004300 0.001081 10 0.043000

Add_83 0.003200 0.000804 10 0.032000

Add_89 0.003000 0.000754 10 0.030000

Clip_96_post 0.017200 0.004324 10 0.172000

Concat_102 0.001800 0.000452 10 0.018000

Conv_0 0.113000 0.028405 10 1.130000

Conv_10 0.146100 0.036725 10 1.461000

Conv_12 0.170600 0.042884 10 1.706000

Conv_14 0.094200 0.023679 10 0.942000

Conv_16 0.144800 0.036398 10 1.448000

Conv_18 0.050000 0.012569 10 0.500000

Conv_2 0.130800 0.032879 10 1.308000

Conv_20 0.032200 0.008094 10 0.322000

Conv_21 0.047300 0.011890 10 0.473000

Conv_23 0.054200 0.013624 10 0.542000

Conv_25 0.041600 0.010457 10 0.416000

Conv_27 0.044900 0.011287 10 0.449000

Conv_29 0.052000 0.013071 10 0.520000

Conv_31 0.039500 0.009929 10 0.395000

Conv_33 0.045300 0.011387 10 0.453000

Conv_35 0.015400 0.003871 10 0.154000

Conv_37 0.023200 0.005832 10 0.232000

Conv_38 0.041000 0.010306 10 0.410000

Conv_4 0.059700 0.015007 10 0.597000

Conv_40 0.027000 0.006787 10 0.270000

Conv_42 0.042600 0.010708 10 0.426000

Conv_44 0.040900 0.010281 10 0.409000

Conv_46 0.025400 0.006385 10 0.254000

Conv_48 0.042700 0.010734 10 0.427000

Conv_5 0.280900 0.070610 10 2.809000

Conv_50 0.040900 0.010281 10 0.409000

Conv_52 0.026200 0.006586 10 0.262000

Conv_54 0.042700 0.010734 10 0.427000

Conv_56 0.040900 0.010281 10 0.409000

Conv_58 0.026700 0.006712 10 0.267000

Conv_60 0.079700 0.020034 10 0.797000

Conv_61 0.088100 0.022146 10 0.881000

Conv_63 0.040800 0.010256 10 0.408000

Conv_65 0.116800 0.029360 10 1.168000

Conv_67 0.087300 0.021945 10 0.873000

Conv_69 0.037600 0.009452 10 0.376000

Conv_7 0.141100 0.035468 10 1.411000

Conv_71 0.116200 0.029209 10 1.162000

Conv_73 0.088200 0.022171 10 0.882000

Conv_75 0.014100 0.003544 10 0.141000

Conv_77 0.053500 0.013448 10 0.535000

Conv_78 0.072400 0.018199 10 0.724000

Conv_80 0.020800 0.005228 10 0.208000

Conv_82 0.088000 0.022121 10 0.880000

Conv_84 0.072800 0.018300 10 0.728000

Conv_86 0.019600 0.004927 10 0.196000

Conv_88 0.087400 0.021970 10 0.874000

Conv_9 0.061100 0.015359 10 0.611000

Conv_90 0.073400 0.018451 10 0.734000

Conv_92 0.021300 0.005354 10 0.213000

Conv_94 0.145300 0.036524 10 1.453000

Conv_95 0.187200 0.047056 10 1.872000

Gather_100 0.002000 0.000503 10 0.020000

Gemm_104 0.122400 0.030768 10 1.224000

GlobalAveragePool_97 0.009100 0.002287 10 0.091000

GlobalAveragePool_97_post 0.001400 0.000352 10 0.014000

Reshape_103 0.001100 0.000277 10 0.011000

Shape_98 0.001600 0.000402 10 0.016000

Unsqueeze_101 0.001300 0.000327 10 0.013000

input_nc2nh 0.076900 0.019330 10 0.769000

-------------------------------------------------------------------------

opType avg(ms) percent calledTimes opTotalTime

AddFusion 0.048000 0.012066 100 0.480000

AvgPoolFusion 0.009100 0.002287 10 0.091000

Concat 0.001800 0.000452 10 0.018000

Conv2DFusion 3.695398 0.928913 520 36.953976

Gather 0.002000 0.000503 10 0.020000

MatMulFusion 0.122400 0.030768 10 1.224000

Reshape 0.001100 0.000277 10 0.011000

Shape 0.001600 0.000402 10 0.016000

Transpose 0.095500 0.024006 30 0.955000

Unsqueeze 0.001300 0.000327 10 0.013000

Model = mobilenetv2-12NCHW.ms, NumThreads = 2, MinRunTime = 3.677000 ms, MaxRuntime = 5.417000 ms, AvgRunTime = 4.200000 ms

Run Benchmark mobilenetv2-12NCHW.ms Success.
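The profiling tables above are easier to read once sorted by cost. The following sketch parses rows of the form `name avg percent calledTimes opTotalTime` and lists the most expensive operators; `parse_profile_rows` and `top_ops` are hypothetical helpers, and the parser assumes the five-column layout printed above.

```python
def parse_profile_rows(text):
    """Parse 'name avg percent calledTimes opTotalTime' rows into dicts."""
    rows = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 5:
            continue  # skip separators, blank lines and other log output
        name, avg, percent, calls, total = parts
        try:
            rows.append({"name": name, "avg_ms": float(avg),
                         "percent": float(percent), "calls": int(calls),
                         "total_ms": float(total)})
        except ValueError:
            continue  # header row such as "opName avg(ms) percent ..."
    return rows


def top_ops(rows, n=3):
    """Return the n rows with the largest average time."""
    return sorted(rows, key=lambda r: r["avg_ms"], reverse=True)[:n]


if __name__ == "__main__":
    # A few rows copied from the per-operator table above.
    sample = """
    opName avg(ms) percent calledTimes opTotalTime
    Conv_0 0.113000 0.028405 10 1.130000
    Conv_5 0.280900 0.070610 10 2.809000
    Conv_95 0.187200 0.047056 10 1.872000
    Gemm_104 0.122400 0.030768 10 1.224000
    """
    for row in top_ops(parse_profile_rows(sample), n=2):
        print("{name}: {avg_ms:.4f} ms ({percent:.1%})".format(**row))
```

Note that the `percent` column is a fraction, so 0.928913 for Conv2DFusion means about 92.9% of the total time. When a full log is fed in, the per-operator and per-type tables are parsed together, so split the text at the separator line if they need to be ranked separately.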

V. Example: GHost-DepthNet

1. Model conversion with converter_lite

1.1 Converting the ONNX model

yoyo@yoyo:~/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/converter$ ./converter_lite --fmk=ONNX --modelFile=GHost-DepthNet.onnx --outputFile=GHost-DepthNetNCHW --inputDataFormat=NCHW --inputShape="input:1,3,224,224"

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.599 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /Resize

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.626 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /Resize_1

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.645 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /Resize_2

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.672 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ghostV2block/ghost1/Resize

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.678 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ghostV2block/ghost1/Resize

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.713 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.719 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.733 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize_1

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.739 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize_1

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.753 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize_2

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.759 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize_2

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.773 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize_3

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.779 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize_3

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.796 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /Resize_3

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.808 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /Resize_4

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.822 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /Resize_5

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.263.835 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /Resize_6

[WARNING] LITE(17444,7ffa346e1b40,converter_lite):2024-04-05-23:27:59.265.896 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3518] ConvertGraphInputs] Can not find name in map. name is input_0

CONVERT RESULT SUCCESS:0

1.2 Converting the onnx-sim model

# Install onnx-simplifier
pip install onnx-simplifier

# Run the simplification
python -m onnxsim GHost-DepthNet.onnx GHost-DepthNet_sim.onnx

/tools/converter/converter$ ./converter_lite --fmk=ONNX --modelFile=GHost-DepthNet_sim.onnx --outputFile=GHost-DepthNet_simNCHW --inputDataFormat=NCHW --inputShape="input:1,3,224,224"

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.388 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /Resize

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.411 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /Resize_1

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.425 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /Resize_2

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.446 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ghostV2block/ghost1/Resize

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.452 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ghostV2block/ghost1/Resize

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.468 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.474 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.483 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize_1

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.489 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize_1

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.498 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize_2

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.503 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize_2

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.512 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize_3

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.518 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /ppma/Resize_3

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.532 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /Resize_3

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.544 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /Resize_4

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.555 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /Resize_5

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.119.567 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3768] CheckOnnxModel] Can not find node input: of /Resize_6

[WARNING] LITE(17870,7f9732ea5b40,converter_lite):2024-04-05-23:36:37.121.865 [mindspore/lite/build/tools/converter/parser/onnx/onnx_op_parser.cc:3518] ConvertGraphInputs] Can not find name in map. name is input_0

CONVERT RESULT SUCCESS:0

1.3 Converting the PyTorch model

(mslite) yoyo@yoyo:~/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/converter$ ./converter_lite --fmk=PYTORCH --modelFile=GHost-DepthNet.pt --outputFile=GHost-DepthNetNCHW --inputDataFormat=NCHW --inputShape="input:1,3,224,224"

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.434.036 [mindspore/lite/tools/converter/registry/model_parser_registry.cc:47] GetModelParser] ILLEGAL FMK: fmk must be in FmkType.1

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.434.064 [mindspore/lite/tools/converter/registry/model_parser_registry.cc:49] GetModelParser] ILLEGAL FMK: fmk must be in FmkType.2

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.434.070 [mindspore/lite/tools/converter/registry/model_parser_registry.cc:51] GetModelParser] ILLEGAL FMK: fmk must be in FmkType.3

[WARNING] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.575.566 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:730] ConvertGraphInputs] The input shape is empty.

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.635.700 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1035] ConvertNodes] not support pytorch op type hardtanh

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.635.911 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:950] BuildOpInputs] could not find input node: 77

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.635.922 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1055] ConvertNodes] BuildOpInputs failed.

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.635.952 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:692] ConvertTorchGraph] convert nodes failed.

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.635.960 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:626] Parse] convert pytorch graph failed.

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.636.954 [mindspore/lite/tools/converter/converter_funcgraph.cc:92] Load3rdModelToFuncgraph] Get funcGraph failed for fmk: 5

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.636.975 [mindspore/lite/tools/converter/converter_funcgraph.cc:162] Build] Load model file failed

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.636.982 [mindspore/lite/tools/converter/converter.cc:979] HandleGraphCommon] Build func graph failed

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.637.014 [mindspore/lite/tools/converter/converter.cc:947] Convert] Handle graph failed: -1 Common error code.

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.637.022 [mindspore/lite/tools/converter/converter.cc:1108] RunConverter] Convert model failed

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.637.028 [mindspore/lite/tools/converter/converter_context.h:60] PrintOps] ===========================================

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.637.033 [mindspore/lite/tools/converter/converter_context.h:61] PrintOps] UNSUPPORTED OP LIST:

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.637.041 [mindspore/lite/tools/converter/converter_context.h:63] PrintOps] FMKTYPE: PYTORCH, OP TYPE: hardtanh

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.637.047 [mindspore/lite/tools/converter/converter_context.h:65] PrintOps] ===========================================

[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.637.058 [mindspore/lite/tools/converter/cxx_api/converter.cc:314] Convert] Convert model failed, ret=Common error code.

ERROR [mindspore/lite/tools/converter/converter_lite/main.cc:102] main] Convert failed. Ret: Common error code.

Convert failed. Ret: Common error code.
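In a long failure log like the one above, the actionable part is the "UNSUPPORTED OP LIST" entries. A minimal sketch for pulling them out follows; `unsupported_ops` is a hypothetical helper, and the pattern only assumes the `FMKTYPE: ..., OP TYPE: ...` wording seen in this log.

```python
import re

UNSUPPORTED_RE = re.compile(r"FMKTYPE: (\w+), OP TYPE: (\w+)")


def unsupported_ops(log_text):
    """Return sorted unique (framework, op type) pairs reported as unsupported."""
    return sorted(set(UNSUPPORTED_RE.findall(log_text)))


if __name__ == "__main__":
    # One line copied from the converter log above.
    log = ("[ERROR] LITE(17915,7fd1d75c5b40,converter_lite):2024-04-05-23:38:27.637.041 "
           "[mindspore/lite/tools/converter/converter_context.h:63] PrintOps] "
           "FMKTYPE: PYTORCH, OP TYPE: hardtanh")
    print(unsupported_ops(log))  # [('PYTORCH', 'hardtanh')]
```

Here the only unsupported operator is `hardtanh`. In PyTorch, `nn.ReLU6` is implemented on top of `hardtanh`, so a ReLU6 layer is one plausible source, although that is an assumption about this model rather than something the log states.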

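2. Model inference with benchmark

2.1 GHost-DepthNet model

The self-built MindSpore Lite (2.0.1) performs far worse than the official Release package (2.0.0): for example, /conv1/0/Conv averages 17.99 ms in the self-built run versus 0.096 ms in the Release run. The Release package is strongly recommended.

The cause of this large performance gap is not yet clear; I will look into it when time permits.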
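To see where the gap comes from, the per-operator averages of the two builds can be divided. The sketch below uses three avg(ms) values taken from the profiling outputs in 2.1.1 and 2.1.2; `slowdown` is a hypothetical helper and the operator selection is only illustrative.

```python
def slowdown(self_built_ms, release_ms):
    """Per-operator ratio of self-built time to Release time."""
    return {
        name: self_built_ms[name] / release_ms[name]
        for name in self_built_ms
        if name in release_ms and release_ms[name] > 0
    }


if __name__ == "__main__":
    # avg(ms) values copied from the two profiling outputs in this section.
    self_built = {"/conv1/0/Conv": 17.991699, "/decoder1/3/Conv": 35.245499,
                  "/Resize_6": 3.578200}
    release = {"/conv1/0/Conv": 0.095700, "/decoder1/3/Conv": 0.198800,
               "/Resize_6": 0.233300}
    for name, ratio in sorted(slowdown(self_built, release).items(),
                              key=lambda item: item[1], reverse=True):
        print("{:<20} {:8.1f}x slower".format(name, ratio))
```

For these three operators the convolutions are well over 100 times slower in the self-built run, while the Resize operator is around 15 times slower, so the gap is not uniform across operator types.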

2.1.1 Self-built version

(mslite) yoyo@yoyo:~/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/benchmark$ ./benchmark --modelFile=GHost-DepthNetNCHW.ms --inputShapes="1,3,224,224" --timeProfiling=true

ModelPath = GHost-DepthNetNCHW.ms

ModelType = MindIR

InDataPath =

ConfigFilePath =

InDataType = bin

LoopCount = 10

DeviceType = CPU

AccuracyThreshold = 0.5

CosineDistanceThreshold = -1.1

WarmUpLoopCount = 3

NumThreads = 2

InterOpParallelNum = 1

Fp16Priority = 0

EnableParallel = 0

calibDataPath =

EnableGLTexture = 0

cpuBindMode = HIGHER_CPU

CalibDataType = FLOAT

Resize Dims: 1 3 224 224

start unified benchmark run

PrepareTime = 36.034 ms

Running warm up loops...

Running benchmark loops...

-------------------------------------------------------------------------

opName avg(ms) percent calledTimes opTotalTime

/Add 0.051300 0.000092 10 0.513000

/Add_1 0.138300 0.000247 10 1.383000

/Add_2 0.256200 0.000458 10 2.562000

/Add_3 0.502200 0.000898 10 5.022000

/Resize 0.926700 0.001656 10 9.266999

/Resize_1 0.464000 0.000829 10 4.640000

/Resize_2 0.243000 0.000434 10 2.430000

/Resize_3 0.485800 0.000868 10 4.858000

/Resize_4 0.932300 0.001666 10 9.323000

/Resize_5 1.871400 0.003345 10 18.714001

/Resize_6 3.578200 0.006396 10 35.782001

/conv1/0/Conv 17.991699 0.032158 10 179.916992

/conv1/0/Conv_pre_0 0.211200 0.000377 10 2.112000

/decoder1/0/Conv 1.526100 0.002728 10 15.261002

/decoder1/3/Conv 35.245499 0.062997 10 352.454987

/decoder2/0/Conv 3.163500 0.005654 10 31.634998

/decoder2/3/Conv 35.615898 0.063659 10 356.158997

/decoder3/0/Conv 6.894100 0.012322 10 68.941002

/decoder3/3/Conv 38.015301 0.067948 10 380.153015

/decoder4/0/Conv 12.572200 0.022471 10 125.722000

/decoder4/3/Conv 4.220300 0.007543 10 42.202999

/decoder4/5/Clip_post 0.015800 0.000028 10 0.158000

/dsc_block1/0/Conv 3.105700 0.005551 10 31.057001

/dsc_block1/3/Conv 43.002701 0.076862 10 430.027008

/dsc_block12/0/Conv 0.384500 0.000687 10 3.845000

/dsc_block12/3/Conv 17.429699 0.031153 10 174.296997

/dsc_block3/0/Conv 3.038500 0.005431 10 30.385000

/dsc_block3/3/Conv 71.039200 0.126974 10 710.391968

/dsc_block5/0/Conv 1.470400 0.002628 10 14.704000

/dsc_block5/3/Conv 69.926102 0.124984 10 699.260986

/dsc_block6/0/Conv 0.381800 0.000682 10 3.818000

/dsc_block6/3/Conv 17.436499 0.031166 10 174.364990

/ghostV2block/Add 0.049100 0.000088 10 0.491000

/ghostV2block/ghost1/AveragePool 0.154500 0.000276 10 1.545000

/ghostV2block/ghost1/Concat 0.051300 0.000092 10 0.513000

/ghostV2block/ghost1/Mul 0.145700 0.000260 10 1.457000

/ghostV2block/ghost1/Resize 0.034700 0.000062 10 0.347000

/ghostV2block/ghost1/Slice 0.021400 0.000038 10 0.214000

/ghostV2block/ghost1/cheap_operation/0/Conv 0.704400 0.001259 10 7.044000

/ghostV2block/ghost1/gate_fn/Sigmoid 0.362100 0.000647 10 3.621000

/ghostV2block/ghost1/primary_conv/0/Conv 34.603901 0.061850 10 346.039001

/ghostV2block/ghost1/short_conv/0/Conv 17.199600 0.030742 10 171.996002

/ghostV2block/ghost1/short_conv/2/Conv 0.172700 0.000309 10 1.727000

/ghostV2block/ghost1/short_conv/4/Conv 0.173600 0.000310 10 1.736000

/ghostV2block/ghost2/Concat 0.029700 0.000053 10 0.297000

/ghostV2block/ghost2/Slice 0.019900 0.000036 10 0.199000

/ghostV2block/ghost2/cheap_operation/0/Conv 0.153300 0.000274 10 1.533000

/ghostV2block/ghost2/primary_conv/0/Conv 38.044197 0.067999 10 380.441986

/ppma/Concat_8 0.034400 0.000061 10 0.344000

/ppma/Mul 0.052000 0.000093 10 0.520000

/ppma/Resize 0.039800 0.000071 10 0.398000

/ppma/Resize_1 0.043300 0.000077 10 0.433000

/ppma/Resize_2 0.043600 0.000078 10 0.436000

/ppma/Resize_3 0.049500 0.000088 10 0.495000

/ppma/stages.0/0/AveragePool 0.148300 0.000265 10 1.483000

/ppma/stages.0/1/Conv 0.046800 0.000084 10 0.468000

/ppma/stages.1/0/AveragePool 0.159100 0.000284 10 1.591000

/ppma/stages.1/1/Conv 0.112100 0.000200 10 1.121000

/ppma/stages.2/0/AveragePool 0.241200 0.000431 10 2.412000

/ppma/stages.2/1/Conv 0.222800 0.000398 10 2.228000

/ppma/stages.3/0/AveragePool 0.299600 0.000535 10 2.996000

/ppma/stages.3/1/Conv 0.843400 0.001507 10 8.434000

/pwise_block2/0/Conv 37.366402 0.066788 10 373.664001

/pwise_block4/0/Conv 35.722004 0.063849 10 357.220032

-------------------------------------------------------------------------

opType avg(ms) percent calledTimes opTotalTime

Activation 0.362100 0.000647 10 3.621000

AddFusion 0.997100 0.001782 50 9.970998

AvgPoolFusion 1.002700 0.001792 50 10.027001

Concat 0.115400 0.000206 30 1.154000

Conv2DFusion 547.825073 0.979167 320 5478.250488

MulFusion 0.197700 0.000353 20 1.977000

Resize 8.712299 0.015572 120 87.122993

StridedSlice 0.041300 0.000074 20 0.413000

Transpose 0.227000 0.000406 20 2.270000

Model = GHost-DepthNetNCHW.ms, NumThreads = 2, MinRunTime = 559.104004 ms, MaxRuntime = 562.068970 ms, AvgRunTime = 560.484009 ms

Run Benchmark GHost-DepthNetNCHW.ms Success.

2.1.2 Release version

yoyo@yoyo:~/MyDocuments/mindspore/output/mindspore-lite-2.0.0-linux-x64/tools/benchmark$ ./benchmark --modelFile=GHost-DepthNetNCHW.ms --inputShapes="1,3,224,224" --timeProfiling=true

ModelPath = GHost-DepthNetNCHW.ms

ModelType = MindIR

InDataPath =

ConfigFilePath =

InDataType = bin

LoopCount = 10

DeviceType = CPU

AccuracyThreshold = 0.5

CosineDistanceThreshold = -1.1

WarmUpLoopCount = 3

NumThreads = 2

InterOpParallelNum = 1

Fp16Priority = 0

EnableParallel = 0

calibDataPath =

EnableGLTexture = 0

cpuBindMode = HIGHER_CPU

CalibDataType = FLOAT

Resize Dims: 1 3 224 224

start unified benchmark run

PrepareTime = 23.123 ms

Running warm up loops...

Running benchmark loops...

-------------------------------------------------------------------------

opName avg(ms) percent calledTimes opTotalTime

/Add 0.005700 0.001144 10 0.057000

/Add_1 0.031500 0.006321 10 0.315000

/Add_2 0.062900 0.012623 10 0.629000

/Add_3 0.164100 0.032932 10 1.641000

/Resize 0.060900 0.012222 10 0.609000

/Resize_1 0.032200 0.006462 10 0.322000

/Resize_2 0.017400 0.003492 10 0.174000

/Resize_3 0.022000 0.004415 10 0.220000

/Resize_4 0.052000 0.010435 10 0.520000

/Resize_5 0.125300 0.025146 10 1.253000

/Resize_6 0.233300 0.046819 10 2.333000

/conv1/0/Conv 0.095700 0.019205 10 0.957000

/conv1/0/Conv_pre_0 0.064000 0.012844 10 0.640000

/decoder1/0/Conv 0.042600 0.008549 10 0.426000

/decoder1/3/Conv 0.198800 0.039896 10 1.988000

/decoder2/0/Conv 0.071800 0.014409 10 0.718000

/decoder2/3/Conv 0.200200 0.040177 10 2.002000

/decoder3/0/Conv 0.163200 0.032751 10 1.632000

/decoder3/3/Conv 0.196300 0.039394 10 1.963000

/decoder4/0/Conv 0.294000 0.059001 10 2.940000

/decoder4/3/Conv 0.118700 0.023821 10 1.187000

/decoder4/5/Clip_post 0.001500 0.000301 10 0.015000

/dsc_block1/0/Conv 0.069100 0.013867 10 0.691000

/dsc_block1/3/Conv 0.225500 0.045254 10 2.255000

/dsc_block12/0/Conv 0.013400 0.002689 10 0.134000

/dsc_block12/3/Conv 0.101500 0.020369 10 1.015000

/dsc_block3/0/Conv 0.076600 0.015372 10 0.766000

/dsc_block3/3/Conv 0.420100 0.084307 10 4.201000

/dsc_block5/0/Conv 0.059100 0.011860 10 0.591000

/dsc_block5/3/Conv 0.418400 0.083966 10 4.184000

/dsc_block6/0/Conv 0.014200 0.002850 10 0.142000

/dsc_block6/3/Conv 0.105800 0.021232 10 1.058000

/ghostV2block/Add 0.006200 0.001244 10 0.062000

/ghostV2block/ghost1/AveragePool 0.006300 0.001264 10 0.063000

/ghostV2block/ghost1/Concat 0.026100 0.005238 10 0.261000

/ghostV2block/ghost1/Mul 0.039800 0.007987 10 0.398000

/ghostV2block/ghost1/Resize 0.016200 0.003251 10 0.162000

/ghostV2block/ghost1/Slice 0.001900 0.000381 10 0.019000

/ghostV2block/ghost1/cheap_operation/0/Conv 0.027900 0.005599 10 0.279000

/ghostV2block/ghost1/gate_fn/Sigmoid 0.010200 0.002047 10 0.102000

/ghostV2block/ghost1/primary_conv/0/Conv 0.202800 0.040698 10 2.028000

/ghostV2block/ghost1/short_conv/0/Conv 0.123900 0.024865 10 1.239000

/ghostV2block/ghost1/short_conv/2/Conv 0.016500 0.003311 10 0.165000

/ghostV2block/ghost1/short_conv/4/Conv 0.020000 0.004014 10 0.200000

/ghostV2block/ghost2/Concat 0.004100 0.000823 10 0.041000

/ghostV2block/ghost2/Slice 0.001300 0.000261 10 0.013000

/ghostV2block/ghost2/cheap_operation/0/Conv 0.007900 0.001585 10 0.079000

/ghostV2block/ghost2/primary_conv/0/Conv 0.204500 0.041040 10 2.045000

/ppma/Concat_8 0.005900 0.001184 10 0.059000

/ppma/Mul 0.006500 0.001304 10 0.065000

/ppma/Resize 0.002500 0.000502 10 0.025000

/ppma/Resize_1 0.003500 0.000702 10 0.035000

/ppma/Resize_2 0.002900 0.000582 10 0.029000

/ppma/Resize_3 0.003600 0.000722 10 0.036000

/ppma/stages.0/0/AveragePool 0.007400 0.001485 10 0.074000

/ppma/stages.0/1/Conv 0.003600 0.000722 10 0.036000

/ppma/stages.1/0/AveragePool 0.007700 0.001545 10 0.077000

/ppma/stages.1/1/Conv 0.004100 0.000823 10 0.041000

/ppma/stages.2/0/AveragePool 0.008700 0.001746 10 0.087000

/ppma/stages.2/1/Conv 0.004400 0.000883 10 0.044000

/ppma/stages.3/0/AveragePool 0.009800 0.001967 10 0.098000

/ppma/stages.3/1/Conv 0.008600 0.001726 10 0.086000

/pwise_block2/0/Conv 0.216100 0.043367 10 2.161000

/pwise_block4/0/Conv 0.214300 0.043006 10 2.143000

-------------------------------------------------------------------------

opType avg(ms) percent calledTimes opTotalTime

Activation 0.010200 0.002047 10 0.102000

AddFusion 0.270400 0.054265 50 2.704000

AvgPoolFusion 0.039900 0.008007 50 0.399000

Concat 0.036100 0.007245 30 0.361000

Conv2DFusion 3.939600 0.790609 320 39.396004

MulFusion 0.046300 0.009292 20 0.463000

Resize 0.571800 0.114750 120 5.717998

StridedSlice 0.003200 0.000642 20 0.032000

Transpose 0.065500 0.013145 20 0.655000

Model = GHost-DepthNetNCHW.ms, NumThreads = 2, MinRunTime = 4.795000 ms, MaxRuntime = 6.984000 ms, AvgRunTime = 5.114000 ms

Run Benchmark GHost-DepthNetNCHW.ms Success.
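The `percent` column in the opType table appears to be each operator type's `opTotalTime` divided by the sum of `opTotalTime` over all operator types. For the run above, Conv2DFusion gives 39.396004 / 49.830002 ≈ 0.7906. The following is a minimal Python sketch that recomputes the column from the printed rows. `op_type_shares` is a hypothetical helper name, not part of the benchmark tool, and the rows are copied from the log above.

```python
def op_type_shares(rows):
    """Return {opType: share of the summed opTotalTime} from (opType, opTotalTime) pairs."""
    total = sum(total_ms for _, total_ms in rows)
    return {name: total_ms / total for name, total_ms in rows}


# (opType, opTotalTime in ms), copied from the Release run of GHost-DepthNetNCHW.ms
release_rows = [
    ("Activation", 0.102000),
    ("AddFusion", 2.704000),
    ("AvgPoolFusion", 0.399000),
    ("Concat", 0.361000),
    ("Conv2DFusion", 39.396004),
    ("MulFusion", 0.463000),
    ("Resize", 5.717998),
    ("StridedSlice", 0.032000),
    ("Transpose", 0.655000),
]

shares = op_type_shares(release_rows)

# Print operator types from most to least expensive
for name, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<14} {share:.6f}")
```

Sorting by share makes it easy to see that convolution dominates the run time in both builds, which is where any optimization effort would probably pay off first.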

2.2 mobilenet_v2 model

2.2.1 Self-compiled version

yoyo@yoyo:~/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/benchmark$ ./benchmark --modelFile=mobilenet_v2NCHW.ms --inputShapes="1,3,224,224" --timeProfiling=true

ModelPath = mobilenet_v2NCHW.ms

ModelType = MindIR

InDataPath =

ConfigFilePath =

InDataType = bin

LoopCount = 10

DeviceType = CPU

AccuracyThreshold = 0.5

CosineDistanceThreshold = -1.1

WarmUpLoopCount = 3

NumThreads = 2

InterOpParallelNum = 1

Fp16Priority = 0

EnableParallel = 0

calibDataPath =

EnableGLTexture = 0

cpuBindMode = HIGHER_CPU

CalibDataType = FLOAT

Resize Dims: 1 3 224 224

start unified benchmark run

PrepareTime = 38.092 ms

Running warm up loops...

Running benchmark loops...

-------------------------------------------------------------------------

opName avg(ms) percent calledTimes opTotalTime

/Flatten 0.011700 0.000028 10 0.117000

/GlobalAveragePool 0.197900 0.000466 10 1.979000

/GlobalAveragePool_post 0.014500 0.000034 10 0.145000

/classifier/1/Gemm 1.268400 0.002987 10 12.684000

/features/0/0/Conv 18.322699 0.043146 10 183.226990

/features/0/0/Conv_pre_0 0.206400 0.000486 10 2.064000

/features/0/2/Clip 0.618800 0.001457 10 6.188000

/features/1/conv/0/0/Conv 2.496400 0.005878 10 24.963999

/features/1/conv/0/2/Clip 0.766300 0.001804 10 7.663000

/features/1/conv/1/Conv 9.646601 0.022716 10 96.466003

/features/10/Add 0.030000 0.000071 10 0.300000

/features/10/conv/0/0/Conv 6.759100 0.015916 10 67.591003

/features/10/conv/0/2/Clip 0.187600 0.000442 10 1.876000

/features/10/conv/1/0/Conv 0.404200 0.000952 10 4.042000

/features/10/conv/1/2/Clip 0.204300 0.000481 10 2.043000

/features/10/conv/2/Conv 7.108600 0.016739 10 71.085999

/features/11/conv/0/0/Conv 6.847900 0.016125 10 68.479004

/features/11/conv/0/2/Clip 0.182800 0.000430 10 1.828000

/features/11/conv/1/0/Conv 0.403000 0.000949 10 4.030000

/features/11/conv/1/2/Clip 0.201100 0.000474 10 2.011000

/features/11/conv/2/Conv 10.545300 0.024832 10 105.452995

/features/12/Add 0.033600 0.000079 10 0.336000

/features/12/conv/0/0/Conv 14.952499 0.035210 10 149.524994

/features/12/conv/0/2/Clip 0.292900 0.000690 10 2.929000

/features/12/conv/1/0/Conv 0.630500 0.001485 10 6.305000

/features/12/conv/1/2/Clip 0.300700 0.000708 10 3.007000

/features/12/conv/2/Conv 15.833600 0.037285 10 158.335999

/features/13/Add 0.032700 0.000077 10 0.327000

/features/13/conv/0/0/Conv 14.743700 0.034718 10 147.436996

/features/13/conv/0/2/Clip 0.295100 0.000695 10 2.951000

/features/13/conv/1/0/Conv 0.595700 0.001403 10 5.957000

/features/13/conv/1/2/Clip 0.303700 0.000715 10 3.037000

/features/13/conv/2/Conv 15.780400 0.037159 10 157.804001

/features/14/conv/0/0/Conv 14.601801 0.034384 10 146.018005

/features/14/conv/0/2/Clip 0.283900 0.000669 10 2.839000

/features/14/conv/1/0/Conv 0.184300 0.000434 10 1.843000

/features/14/conv/1/2/Clip 0.088500 0.000208 10 0.885000

/features/14/conv/2/Conv 6.054800 0.014258 10 60.548000

/features/15/Add 0.025900 0.000061 10 0.259000

/features/15/conv/0/0/Conv 9.959600 0.023453 10 99.596001

/features/15/conv/0/2/Clip 0.143800 0.000339 10 1.438000

/features/15/conv/1/0/Conv 0.269000 0.000633 10 2.690000

/features/15/conv/1/2/Clip 0.138900 0.000327 10 1.389000

/features/15/conv/2/Conv 10.102400 0.023789 10 101.023994

/features/16/Add 0.026000 0.000061 10 0.260000

/features/16/conv/0/0/Conv 9.984099 0.023510 10 99.840996

/features/16/conv/0/2/Clip 0.144400 0.000340 10 1.444000

/features/16/conv/1/0/Conv 0.268500 0.000632 10 2.685000

/features/16/conv/1/2/Clip 0.145100 0.000342 10 1.451000

/features/16/conv/2/Conv 10.009699 0.023571 10 100.096985

/features/17/conv/0/0/Conv 9.952100 0.023435 10 99.520996

/features/17/conv/0/2/Clip 0.141700 0.000334 10 1.417000

/features/17/conv/1/0/Conv 0.268300 0.000632 10 2.683000

/features/17/conv/1/2/Clip 0.142400 0.000335 10 1.424000

/features/17/conv/2/Conv 19.981201 0.047051 10 199.812012

/features/18/0/Conv 26.795401 0.063097 10 267.954010

/features/18/2/Clip 0.189100 0.000445 10 1.891000

/features/2/conv/0/0/Conv 30.828897 0.072595 10 308.288971

/features/2/conv/0/2/Clip 1.743800 0.004106 10 17.438002

/features/2/conv/1/0/Conv 1.833000 0.004316 10 18.330000

/features/2/conv/1/2/Clip 0.708300 0.001668 10 7.083000

/features/2/conv/2/Conv 10.147400 0.023895 10 101.473999

/features/3/Add 0.067800 0.000160 10 0.678000

/features/3/conv/0/0/Conv 16.270899 0.038314 10 162.708984

/features/3/conv/0/2/Clip 0.799900 0.001884 10 7.999000

/features/3/conv/1/0/Conv 2.530600 0.005959 10 25.306002

/features/3/conv/1/2/Clip 1.034900 0.002437 10 10.349000

/features/3/conv/2/Conv 14.719400 0.034661 10 147.194000

/features/4/conv/0/0/Conv 16.253298 0.038273 10 162.532974

/features/4/conv/0/2/Clip 0.810700 0.001909 10 8.107000

/features/4/conv/1/0/Conv 0.661400 0.001557 10 6.613999

/features/4/conv/1/2/Clip 0.289400 0.000681 10 2.894000

/features/4/conv/2/Conv 4.927901 0.011604 10 49.279007

/features/5/Add 0.036600 0.000086 10 0.366000

/features/5/conv/0/0/Conv 7.075700 0.016662 10 70.756996

/features/5/conv/0/2/Clip 0.286500 0.000675 10 2.865000

/features/5/conv/1/0/Conv 0.870400 0.002050 10 8.704000

/features/5/conv/1/2/Clip 0.377600 0.000889 10 3.776000

/features/5/conv/2/Conv 6.583500 0.015503 10 65.834999

/features/6/Add 0.038200 0.000090 10 0.382000

/features/6/conv/0/0/Conv 7.074800 0.016660 10 70.748001

/features/6/conv/0/2/Clip 0.292400 0.000689 10 2.924000

/features/6/conv/1/0/Conv 0.834100 0.001964 10 8.341001

/features/6/conv/1/2/Clip 0.357800 0.000843 10 3.578000

/features/6/conv/2/Conv 6.619501 0.015587 10 66.195007

/features/7/conv/0/0/Conv 7.052300 0.016607 10 70.523003

/features/7/conv/0/2/Clip 0.303600 0.000715 10 3.036000

/features/7/conv/1/0/Conv 0.231900 0.000546 10 2.319000

/features/7/conv/1/2/Clip 0.112900 0.000266 10 1.129000

/features/7/conv/2/Conv 3.556400 0.008375 10 35.563999

/features/8/Add 0.030100 0.000071 10 0.301000

/features/8/conv/0/0/Conv 6.689300 0.015752 10 66.892998

/features/8/conv/0/2/Clip 0.190400 0.000448 10 1.904000

/features/8/conv/1/0/Conv 0.423200 0.000997 10 4.232000

/features/8/conv/1/2/Clip 0.204400 0.000481 10 2.044000

/features/8/conv/2/Conv 7.074500 0.016659 10 70.745003

/features/9/Add 0.028800 0.000068 10 0.288000

/features/9/conv/0/0/Conv 6.731101 0.015850 10 67.311005

/features/9/conv/0/2/Clip 0.182200 0.000429 10 1.822000

/features/9/conv/1/0/Conv 0.404200 0.000952 10 4.042000

/features/9/conv/1/2/Clip 0.202300 0.000476 10 2.023000

/features/9/conv/2/Conv 7.055600 0.016614 10 70.556000

-------------------------------------------------------------------------

opType avg(ms) percent calledTimes opTotalTime

Activation 12.668195 0.029831 350 126.681946

AddFusion 0.349700 0.000823 100 3.497001

AvgPoolFusion 0.197900 0.000466 10 1.979000

Conv2DFusion 409.950836 0.965345 520 4099.508301

Flatten 0.011700 0.000028 10 0.117000

MatMulFusion 1.268400 0.002987 10 12.684000

Transpose 0.220900 0.000520 20 2.209000

Model = mobilenet_v2NCHW.ms, NumThreads = 2, MinRunTime = 424.196014 ms, MaxRuntime = 429.618988 ms, AvgRunTime = 426.023987 ms

Run Benchmark mobilenet_v2NCHW.ms Success.

2.2.2 Release version

yoyo@yoyo:~/MyDocuments/mindspore/output/mindspore-lite-2.0.0-linux-x64/tools/benchmark$ ./benchmark --modelFile=mobilenet_v2NCHW.ms --inputShapes="1,3,224,224" --timeProfiling=true

ModelPath = mobilenet_v2NCHW.ms

ModelType = MindIR

InDataPath =

ConfigFilePath =

InDataType = bin

LoopCount = 10

DeviceType = CPU

AccuracyThreshold = 0.5

CosineDistanceThreshold = -1.1

WarmUpLoopCount = 3

NumThreads = 2

InterOpParallelNum = 1

Fp16Priority = 0

EnableParallel = 0

calibDataPath =

EnableGLTexture = 0

cpuBindMode = HIGHER_CPU

CalibDataType = FLOAT

Resize Dims: 1 3 224 224

start unified benchmark run

PrepareTime = 28.97 ms

Running warm up loops...

Running benchmark loops...

-------------------------------------------------------------------------

opName avg(ms) percent calledTimes opTotalTime

/Flatten 0.001500 0.000299 10 0.015000

/GlobalAveragePool 0.010500 0.002092 10 0.105000

/GlobalAveragePool_post 0.001500 0.000299 10 0.015000

/classifier/1/Gemm 0.100600 0.020041 10 1.006000

/features/0/0/Conv 0.158500 0.031575 10 1.585000

/features/0/0/Conv_pre_0 0.068700 0.013686 10 0.687000

/features/1/conv/0/0/Conv 0.108900 0.021694 10 1.089000

/features/1/conv/1/Conv 0.088800 0.017690 10 0.888000

/features/10/Add 0.003700 0.000737 10 0.037000

/features/10/conv/0/0/Conv 0.063400 0.012630 10 0.634000

/features/10/conv/1/0/Conv 0.026800 0.005339 10 0.268000

/features/10/conv/2/Conv 0.063100 0.012570 10 0.631000

/features/11/conv/0/0/Conv 0.063500 0.012650 10 0.635000

/features/11/conv/1/0/Conv 0.026300 0.005239 10 0.263000

/features/11/conv/2/Conv 0.111100 0.022132 10 1.111000

/features/12/Add 0.004300 0.000857 10 0.043000

/features/12/conv/0/0/Conv 0.139500 0.027790 10 1.395000

/features/12/conv/1/0/Conv 0.040400 0.008048 10 0.404000

/features/12/conv/2/Conv 0.165500 0.032969 10 1.655000

/features/13/Add 0.004800 0.000956 10 0.048000

/features/13/conv/0/0/Conv 0.138500 0.027591 10 1.385000

/features/13/conv/1/0/Conv 0.039200 0.007809 10 0.392000

/features/13/conv/2/Conv 0.164000 0.032671 10 1.640000

/features/14/conv/0/0/Conv 0.138600 0.027611 10 1.386000

/features/14/conv/1/0/Conv 0.014800 0.002948 10 0.148000

/features/14/conv/2/Conv 0.068800 0.013706 10 0.688000

/features/15/Add 0.003000 0.000598 10 0.030000

/features/15/conv/0/0/Conv 0.103800 0.020678 10 1.038000

/features/15/conv/1/0/Conv 0.022400 0.004462 10 0.224000

/features/15/conv/2/Conv 0.112800 0.022471 10 1.128000

/features/16/Add 0.003200 0.000637 10 0.032000

/features/16/conv/0/0/Conv 0.102700 0.020459 10 1.027000

/features/16/conv/1/0/Conv 0.021800 0.004343 10 0.218000

/features/16/conv/2/Conv 0.112700 0.022451 10 1.127000

/features/17/conv/0/0/Conv 0.103700 0.020658 10 1.037000

/features/17/conv/1/0/Conv 0.021500 0.004283 10 0.215000

/features/17/conv/2/Conv 0.200500 0.039942 10 2.005000

/features/18/0/Conv 0.272800 0.054345 10 2.728000

/features/2/conv/0/0/Conv 0.374300 0.074565 10 3.743000

/features/2/conv/1/0/Conv 0.120600 0.024025 10 1.206000

/features/2/conv/2/Conv 0.091600 0.018248 10 0.916000

/features/3/Add 0.013900 0.002769 10 0.139000

/features/3/conv/0/0/Conv 0.188400 0.037531 10 1.884000

/features/3/conv/1/0/Conv 0.128600 0.025619 10 1.286000

/features/3/conv/2/Conv 0.137500 0.027392 10 1.375000

/features/4/conv/0/0/Conv 0.186800 0.037213 10 1.868000

/features/4/conv/1/0/Conv 0.043400 0.008646 10 0.434000

/features/4/conv/2/Conv 0.046700 0.009303 10 0.467000

/features/5/Add 0.005000 0.000996 10 0.050000

/features/5/conv/0/0/Conv 0.066600 0.013267 10 0.666000

/features/5/conv/1/0/Conv 0.047800 0.009522 10 0.478000

/features/5/conv/2/Conv 0.061600 0.012271 10 0.616000

/features/6/Add 0.004900 0.000976 10 0.049000

/features/6/conv/0/0/Conv 0.066800 0.013307 10 0.668000

/features/6/conv/1/0/Conv 0.049400 0.009841 10 0.494000

/features/6/conv/2/Conv 0.062500 0.012451 10 0.625000

/features/7/conv/0/0/Conv 0.066400 0.013228 10 0.664000

/features/7/conv/1/0/Conv 0.016800 0.003347 10 0.168000

/features/7/conv/2/Conv 0.033100 0.006594 10 0.331000

/features/8/Add 0.003100 0.000618 10 0.031000

/features/8/conv/0/0/Conv 0.063100 0.012570 10 0.631000

/features/8/conv/1/0/Conv 0.026500 0.005279 10 0.265000

/features/8/conv/2/Conv 0.063300 0.012610 10 0.633000

/features/9/Add 0.003200 0.000637 10 0.032000

/features/9/conv/0/0/Conv 0.063200 0.012590 10 0.632000

/features/9/conv/1/0/Conv 0.025900 0.005160 10 0.259000

/features/9/conv/2/Conv 0.062600 0.012471 10 0.626000

-------------------------------------------------------------------------

opType avg(ms) percent calledTimes opTotalTime

AddFusion 0.049100 0.009781 100 0.491000

AvgPoolFusion 0.010500 0.002092 10 0.105000

Conv2DFusion 4.787901 0.953803 520 47.879009

Flatten 0.001500 0.000299 10 0.015000

MatMulFusion 0.100600 0.020041 10 1.006000

Transpose 0.070200 0.013985 20 0.702000

Model = mobilenet_v2NCHW.ms, NumThreads = 2, MinRunTime = 3.337000 ms, MaxRuntime = 6.158000 ms, AvgRunTime = 5.150000 ms

Run Benchmark mobilenet_v2NCHW.ms Success.
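The two runs can be compared directly from the summary line that benchmark prints at the end. The sketch below parses that line and computes the ratio of average run times. With the two mobilenet_v2 logs above, the self-compiled build comes out about 83 times slower on average than the Release build. `parse_summary` and `SUMMARY_RE` are hypothetical names introduced for illustration, and the regular expression assumes the summary format shown in these logs.

```python
import re

# Matches the final summary line printed by the benchmark tool in the logs above
SUMMARY_RE = re.compile(
    r"Model = (?P<model>[^,\s]+), NumThreads = (?P<threads>\d+), "
    r"MinRunTime = (?P<min>[\d.]+) ms, MaxRuntime = (?P<max>[\d.]+) ms, "
    r"AvgRunTime = (?P<avg>[\d.]+) ms"
)


def parse_summary(line):
    """Extract the model name, thread count and run times (ms) from a summary line."""
    match = SUMMARY_RE.search(line)
    if match is None:
        raise ValueError("not a benchmark summary line")
    return {
        "model": match["model"],
        "threads": int(match["threads"]),
        "min_ms": float(match["min"]),
        "max_ms": float(match["max"]),
        "avg_ms": float(match["avg"]),
    }


# Summary lines copied from the two mobilenet_v2 runs above
self_compiled = parse_summary(
    "Model = mobilenet_v2NCHW.ms, NumThreads = 2, MinRunTime = 424.196014 ms, "
    "MaxRuntime = 429.618988 ms, AvgRunTime = 426.023987 ms"
)
release = parse_summary(
    "Model = mobilenet_v2NCHW.ms, NumThreads = 2, MinRunTime = 3.337000 ms, "
    "MaxRuntime = 6.158000 ms, AvgRunTime = 5.150000 ms"
)

ratio = self_compiled["avg_ms"] / release["avg_ms"]
print(f"{self_compiled['model']}: self-compiled / release average = {ratio:.1f}x")
```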

V. FAQ

Troubleshooting guide

Q: virtual memory exhausted: Cannot allocate memory (无法分配内存)

Related fix: c++: internal compiler error: Killed (已杀死) (program cc1plus)

virtual memory exhausted: 无法分配内存

virtual memory exhausted: 无法分配内存

virtual memory exhausted: 无法分配内存

make[2]: *** [src/litert/kernel/cpu/CMakeFiles/train_cpu_kernel_mid.dir/fp32_grad/adam_weight_decay.cc.o] Error 1

make[2]: *** [tools/converter/micro/coder/CMakeFiles/coder_mid.dir/session.cc.o] Error 1

make[2]: *** 正在等待未完成的任务....

make[1]: *** [src/litert/kernel/cpu/CMakeFiles/train_cpu_kernel_mid.dir/all] Error 2

make[1]: *** 正在等待未完成的任务....

make[2]: *** [tools/converter/config_parser/CMakeFiles/config_parser_mid.dir/preprocess_parser.cc.o] Error 1

make[2]: *** 正在等待未完成的任务....

make[1]: *** [tools/converter/micro/coder/CMakeFiles/coder_mid.dir/all] Error 2

make[1]: *** [tools/converter/config_parser/CMakeFiles/config_parser_mid.dir/all] Error 2

virtual memory exhausted: 无法分配内存

virtual memory exhausted: 无法分配内存

make[2]: *** [minddata/CMakeFiles/minddata-lite-obj.dir/home/yoyo/MyDocuments/mindspore/mindspore/ccsrc/minddata/dataset/engine/ir/datasetops/epoch_ctrl_node.cc.o] Error 1

make[2]: *** [tools/converter/CMakeFiles/converter_src_mid.dir/__/optimizer/fusion/affine_fusion.cc.o] Error 1

make[2]: *** 正在等待未完成的任务....

make[1]: *** [minddata/CMakeFiles/minddata-lite-obj.dir/all] Error 2

make[1]: *** [tools/converter/CMakeFiles/converter_src_mid.dir/all] Error 2

make: *** [all] Error 2

^Cmake[2]: *** [tools/converter/parser/tf/CMakeFiles/tf_parser_mid.dir/tf_op_parser.cc.o] 中断

make[1]: *** [tools/converter/parser/tf/CMakeFiles/tf_parser_mid.dir/all] 中断

make: *** [all] 中断

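# Cause

The compiler ran out of memory during the build. The localized messages 无法分配内存 and 已杀死 in the log correspond to "Cannot allocate memory" and "Killed".

# Solution

Add swap space. Lowering the number of parallel build jobs (the -j option of build.sh) also reduces peak memory use.

# bs is the block size and count is the number of blocks, so the swap file size is bs*count = 64M*32 = 2048MB

sudo dd if=/dev/zero of=/swapfile bs=64M count=32

# Restrict access to the swap file before enabling it

sudo chmod 0600 /swapfile

# Format the file as swap

sudo mkswap /swapfile

# Enable the new swap space

sudo swapon /swapfile

# Check that the swap space is active

free -m

# Later, to disable this swap file

sudo swapoff /swapfile

# Or, to disable all swap spaces

sudo swapoff -a

# Delete the swap file once it is disabled

sudo rm /swapfile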
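As a scripted alternative to reading the `free -m` output by eye, the swap size can be read from `/proc/meminfo`. The following is a minimal Python sketch under that assumption. `parse_meminfo` and `swap_total_mb` are hypothetical helper names, and the sample text is illustrative, not taken from the author's machine.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field: number} (kB for most fields)."""
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts and parts[0].isdigit():
            info[key.strip()] = int(parts[0])
    return info


def swap_total_mb(text):
    """Return the configured swap size in MB, or 0 if the field is missing."""
    return parse_meminfo(text).get("SwapTotal", 0) // 1024


# Illustrative sample: a 2048 MB swap file shows up as 2097152 kB
SAMPLE = """MemTotal:       32800000 kB
MemFree:         1200000 kB
SwapTotal:       2097152 kB
SwapFree:        2097152 kB
"""

print("SwapTotal (MB):", swap_total_mb(SAMPLE))
```

On a real system, pass the contents of `/proc/meminfo` instead of `SAMPLE`, for example `swap_total_mb(open("/proc/meminfo").read())`.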

Q: ./converter_lite: error while loading shared libraries: libtorch_cpu.so: cannot open shared object file: No such file or directory

/tools/converter/converter$ ./converter_lite --fmk=PYTORCH --modelFile=model.pt --outputFile=model

./converter_lite: error while loading shared libraries: libtorch_cpu.so: cannot open shared object file: No such file or directory

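# Cause

The libtorch environment variables are not set, so the dynamic loader cannot find libtorch_cpu.so.

# Solution

Set the libtorch environment variables (replace /home/user/libtorch with the actual libtorch directory):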

export LD_LIBRARY_PATH="/home/user/libtorch/lib:${LD_LIBRARY_PATH}"

export LIB_TORCH_PATH="/home/user/libtorch"
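Before rerunning converter_lite, it can help to confirm that some directory on `LD_LIBRARY_PATH` actually contains `libtorch_cpu.so`. The following is a minimal Python sketch of that check. `find_shared_lib` is a hypothetical helper name. The dynamic loader also consults other locations (for example the ld.so cache and rpath entries), so a miss here is a hint and not proof that loading will fail.

```python
import os


def find_shared_lib(lib_name, search_path):
    """Return the first directory in a path-separated list that contains lib_name, else None."""
    for directory in search_path.split(os.pathsep):
        if directory and os.path.isfile(os.path.join(directory, lib_name)):
            return directory
    return None


if __name__ == "__main__":
    # Check the current environment for the library named in the error message
    found = find_shared_lib("libtorch_cpu.so", os.environ.get("LD_LIBRARY_PATH", ""))
    print(found or "libtorch_cpu.so not found on LD_LIBRARY_PATH")
```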

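Q: Unsupported to converter models with fmk: 5

converter_lite fails when converting a .pth file with: Unsupported to converter models with fmk: 5

# Cause

The libtorch environment variables were not set before MindSpore Lite was compiled, so the converter was presumably built without PyTorch support.

# Solution

Set the libtorch environment variables, then recompile MindSpore Lite: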

export LD_LIBRARY_PATH="/home/user/libtorch/lib:${LD_LIBRARY_PATH}"

export LIB_TORCH_PATH="/home/user/libtorch"

Q: PytorchStreamReader failed locating file constants.pkl: file not found Exception raised from valid at

Cannot load yolov5 .pt files: for example, yolov5s.pt fails to load, while yolov5s.torchscript.pt loads successfully #2627

PytorchStreamReader failed locating file constants.pkl: file not found #1509

PytorchStreamReader failed locating file constants.pkl: file not found

(mslite) yoyo@yoyo:~/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/converter$ ./converter_lite --fmk=PYTORCH --modelFile=mobilenet.pth --outputFile=mobilenet

terminate called after throwing an instance of 'c10::Error'

what(): PytorchStreamReader failed locating file constants.pkl: file not found

Exception raised from valid at ../caffe2/serialize/inline_container.cc:178 (most recent call first):

frame #0: c10::Error::Error(c10::SourceLocation, std::string) + 0x57 (0x7f80042b9d77 in /home/yoyo/libtorch/lib/libc10.so)

frame #1: c10::detail::torchCheckFail(char const*, char const*, unsigned int, std::string const&) + 0x64 (0x7f8004283abb in /home/yoyo/libtorch/lib/libc10.so)

frame #2: caffe2::serialize::PyTorchStreamReader::valid(char const*, char const*) + 0x8e (0x7f7fe71cf1de in /home/yoyo/libtorch/lib/libtorch_cpu.so)

frame #3: caffe2::serialize::PyTorchStreamReader::getRecordID(std::string const&) + 0x46 (0x7f7fe71cf3a6 in /home/yoyo/libtorch/lib/libtorch_cpu.so)

frame #4: caffe2::serialize::PyTorchStreamReader::getRecord(std::string const&) + 0x5c (0x7f7fe71cf46c in /home/yoyo/libtorch/lib/libtorch_cpu.so)

frame #5: torch::jit::readArchiveAndTensors(std::string const&, std::string const&, std::string const&, c10::optional<std::function<c10::StrongTypePtr (c10::QualifiedName const&)> >, c10::optional<std::function<c10::intrusive_ptr<c10::ivalue::Object, c10::detail::intrusive_target_default_null_type<c10::ivalue::Object> > (c10::StrongTypePtr, c10::IValue)> >, c10::optional<c10::Device>, caffe2::serialize::PyTorchStreamReader&, c10::Type::SingletonOrSharedTypePtr<c10::Type> (*)(std::string const&), std::shared_ptr<torch::jit::DeserializationStorageContext>) + 0xa5 (0x7f7fe8322325 in /home/yoyo/libtorch/lib/libtorch_cpu.so)

frame #6: <unknown function> + 0x4fd0fe7 (0x7f7fe8306fe7 in /home/yoyo/libtorch/lib/libtorch_cpu.so)

frame #7: <unknown function> + 0x4fd4043 (0x7f7fe830a043 in /home/yoyo/libtorch/lib/libtorch_cpu.so)

frame #8: torch::jit::import_ir_module(std::shared_ptr<torch::jit::CompilationUnit>, std::string const&, c10::optional<c10::Device>, std::unordered_map<std::string, std::string, std::hash<std::string>, std::equal_to<std::string>, std::allocator<std::pair<std::string const, std::string> > >&, bool, bool) + 0x3d6 (0x7f7fe830ded6 in /home/yoyo/libtorch/lib/libtorch_cpu.so)

frame #9: torch::jit::import_ir_module(std::shared_ptr<torch::jit::CompilationUnit>, std::string const&, c10::optional<c10::Device>, bool) + 0x7f (0x7f7fe830e13f in /home/yoyo/libtorch/lib/libtorch_cpu.so)

frame #10: torch::jit::load(std::string const&, c10::optional<c10::Device>, bool) + 0xac (0x7f7fe830e21c in /home/yoyo/libtorch/lib/libtorch_cpu.so)

frame #11: mindspore::lite::PytorchModelParser::InitOriginModel(std::string const&) + 0x4bb (0x7f8001f3fb83 in /home/yoyo/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/lib/libmindspore_converter.so)

frame #12: mindspore::lite::PytorchModelParser::Parse(mindspore::converter::ConverterParameters const&) + 0x2f5 (0x7f8001f3ec7d in /home/yoyo/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/lib/libmindspore_converter.so)

frame #13: mindspore::lite::ConverterFuncGraph::Load3rdModelToFuncgraph(std::shared_ptr<mindspore::ConverterPara> const&) + 0x329 (0x7f8000d3be37 in /home/yoyo/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/lib/libmindspore_converter.so)

frame #14: mindspore::lite::ConverterFuncGraph::Build(std::shared_ptr<mindspore::ConverterPara> const&) + 0xc9 (0x7f8000d3cd31 in /home/yoyo/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/lib/libmindspore_converter.so)

frame #15: mindspore::lite::ConverterImpl::HandleGraphCommon(std::shared_ptr<mindspore::ConverterPara> const&, void**, unsigned long*, bool, bool) + 0x68 (0x7f8000d0b298 in /home/yoyo/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/lib/libmindspore_converter.so)

frame #16: mindspore::lite::ConverterImpl::Convert(std::shared_ptr<mindspore::ConverterPara> const&, void**, unsigned long*, bool) + 0x6ed (0x7f8000d0aabf in /home/yoyo/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/lib/libmindspore_converter.so)

frame #17: mindspore::lite::RunConverter(std::shared_ptr<mindspore::ConverterPara> const&, void**, unsigned long*, bool) + 0x92 (0x7f8000d0cd7e in /home/yoyo/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/lib/libmindspore_converter.so)

frame #18: mindspore::Converter::Convert() + 0x85 (0x7f8000da11df in /home/yoyo/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/lib/libmindspore_converter.so)

frame #19: <unknown function> + 0x7b841 (0x5641c1e10841 in ./converter_lite)

frame #20: __libc_start_main + 0xf0 (0x7f7fe2773840 in /lib/x86_64-linux-gnu/libc.so.6)

frame #21: <unknown function> + 0x7ae99 (0x5641c1e0fe99 in ./converter_lite)

已放弃 (核心已转储)

# Cause

The PyTorch model file is in the wrong format. Only TorchScript models are supported.

# Solution

Convert the PyTorch model to TorchScript format.

Only torchscript model is supported. You must trace your model first.

See: https://docs.djl.ai/docs/pytorch/how_to_convert_your_model_to_torchscript.html#how-to-convert-your-pytorch-model-to-torchscript

You need use jit.trace() to export your model. See: https://docs.djl.ai/docs/pytorch/how_to_convert_your_model_to_torchscript.html

How did you save the model. DJL use PyTorch C++ API, it can only load traced jit model. If you use torch.save(), it saved as python pickle format, you won’t be able to load it. See: https://docs.djl.ai/docs/pytorch/how_to_convert_your_model_to_torchscript.html
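The error message itself suggests a quick pre-check: the loader looks for an entry named `constants.pkl` inside the model archive. The following is a minimal Python sketch that inspects a file with the standard `zipfile` module before it is handed to converter_lite. `looks_like_torchscript` is a hypothetical helper name, and the check is only a heuristic, based on the assumption that TorchScript archives are zip files containing a `constants.pkl` entry while `torch.save()` output is not.

```python
import zipfile


def looks_like_torchscript(path):
    """Heuristic: True if path is a zip archive that contains a constants.pkl entry."""
    if not zipfile.is_zipfile(path):
        return False
    with zipfile.ZipFile(path) as archive:
        # Entries are stored under a top-level folder, so compare the base name only
        return any(name.split("/")[-1] == "constants.pkl" for name in archive.namelist())
```

For example, `looks_like_torchscript("mobilenet.pth")` returning `False` would match the failure shown above, in which case the model should be re-exported with `torch.jit.trace` and saved with its `save` method.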

Q: Unsupported PyTorch models

alexnet

(mslite) yoyo@yoyo:~/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/converter$ ./converter_lite --fmk=PYTORCH --modelFile=traced_alexnet_model.pt --outputFile=traced_alexnet_model

[WARNING] LITE(8941,7fe3e236cb40,converter_lite):2024-04-04-14:13:16.559.930 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:730] ConvertGraphInputs] The input shape is empty.

[ERROR] LITE(8941,7fe3e236cb40,converter_lite):2024-04-04-14:13:17.490.156 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1293] SetAttrsForPool] Unsupported adaptive average pool with output kernels: [const vector][6, 6]

[ERROR] LITE(8941,7fe3e236cb40,converter_lite):2024-04-04-14:13:17.490.178 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1338] Parse] Set attributes for pooling failed.

[ERROR] LITE(8941,7fe3e236cb40,converter_lite):2024-04-04-14:13:17.490.199 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1041] ConvertNodes] parse node adaptive_avg_pool2d failed.

[ERROR] LITE(8941,7fe3e236cb40,converter_lite):2024-04-04-14:13:17.490.229 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:950] BuildOpInputs] could not find input node: x.1

[ERROR] LITE(8941,7fe3e236cb40,converter_lite):2024-04-04-14:13:17.490.237 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1055] ConvertNodes] BuildOpInputs failed.

[ERROR] LITE(8941,7fe3e236cb40,converter_lite):2024-04-04-14:13:17.490.246 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:692] ConvertTorchGraph] convert nodes failed.

[ERROR] LITE(8941,7fe3e236cb40,converter_lite):2024-04-04-14:13:17.490.252 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:626] Parse] convert pytorch graph failed.

[ERROR] LITE(8941,7fe3e236cb40,converter_lite):2024-04-04-14:13:17.495.600 [mindspore/lite/tools/converter/converter_funcgraph.cc:92] Load3rdModelToFuncgraph] Get funcGraph failed for fmk: 5

[ERROR] LITE(8941,7fe3e236cb40,converter_lite):2024-04-04-14:13:17.495.614 [mindspore/lite/tools/converter/converter_funcgraph.cc:162] Build] Load model file failed

[ERROR] LITE(8941,7fe3e236cb40,converter_lite):2024-04-04-14:13:17.495.620 [mindspore/lite/tools/converter/converter.cc:979] HandleGraphCommon] Build func graph failed

[ERROR] LITE(8941,7fe3e236cb40,converter_lite):2024-04-04-14:13:17.495.650 [mindspore/lite/tools/converter/converter.cc:947] Convert] Handle graph failed: -1 Common error code.

[ERROR] LITE(8941,7fe3e236cb40,converter_lite):2024-04-04-14:13:17.495.657 [mindspore/lite/tools/converter/converter.cc:1108] RunConverter] Convert model failed

[ERROR] LITE(8941,7fe3e236cb40,converter_lite):2024-04-04-14:13:17.495.670 [mindspore/lite/tools/converter/cxx_api/converter.cc:314] Convert] Convert model failed, ret=Common error code.

ERROR [mindspore/lite/tools/converter/converter_lite/main.cc:102] main] Convert failed. Ret: Common error code.

Convert failed. Ret: Common error code.

densenet121

(mslite) yoyo@yoyo:~/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/converter$ ./converter_lite --fmk=PYTORCH --modelFile=traced_densenet121_model.pt --outputFile=traced_densenet121_model

[WARNING] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.443.369 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:730] ConvertGraphInputs] The input shape is empty.

[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.826.552 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1035] ConvertNodes] not support pytorch op type cat

[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.826.678 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:950] BuildOpInputs] could not find input node: input.14

[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.826.689 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1055] ConvertNodes] BuildOpInputs failed.

[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.826.712 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:692] ConvertTorchGraph] convert nodes failed.

[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.826.720 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:626] Parse] convert pytorch graph failed.

[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.831.448 [mindspore/lite/tools/converter/converter_funcgraph.cc:92] Load3rdModelToFuncgraph] Get funcGraph failed for fmk: 5

[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.831.467 [mindspore/lite/tools/converter/converter_funcgraph.cc:162] Build] Load model file failed

[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.831.473 [mindspore/lite/tools/converter/converter.cc:979] HandleGraphCommon] Build func graph failed

[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.831.504 [mindspore/lite/tools/converter/converter.cc:947] Convert] Handle graph failed: -1 Common error code.

[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.831.512 [mindspore/lite/tools/converter/converter.cc:1108] RunConverter] Convert model failed

[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.831.518 [mindspore/lite/tools/converter/converter_context.h:60] PrintOps] ===========================================

[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.831.523 [mindspore/lite/tools/converter/converter_context.h:61] PrintOps] UNSUPPORTED OP LIST:

[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.831.530 [mindspore/lite/tools/converter/converter_context.h:63] PrintOps] FMKTYPE: PYTORCH, OP TYPE: cat

[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.831.536 [mindspore/lite/tools/converter/converter_context.h:65] PrintOps] ===========================================

[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.831.548 [mindspore/lite/tools/converter/cxx_api/converter.cc:314] Convert] Convert model failed, ret=Common error code.

ERROR [mindspore/lite/tools/converter/converter_lite/main.cc:102] main] Convert failed. Ret: Common error code.

Convert failed. Ret: Common error code.
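The useful part of each failed run is the `UNSUPPORTED OP LIST` block printed by `PrintOps` near the end of the log. The following is a minimal sketch, assuming the log format shown above (summary lines of the form `FMKTYPE: <framework>, OP TYPE: <op>`), that extracts those operators from saved log text. The function name `unsupported_ops` is hypothetical and is not part of MindSpore Lite; the sample lines are copied from the log above.

```python
import re

# Matches the summary lines printed by PrintOps, e.g.
# "... PrintOps] FMKTYPE: PYTORCH, OP TYPE: cat"
_OP_LINE = re.compile(r"FMKTYPE:\s*(\w+),\s*OP TYPE:\s*(\S+)")


def unsupported_ops(log_text):
    """Return the sorted, de-duplicated (framework, op) pairs found in a log."""
    return sorted(set(_OP_LINE.findall(log_text)))


# Three lines copied verbatim from the converter_lite output above.
sample = "\n".join([
    "[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.831.523 "
    "[mindspore/lite/tools/converter/converter_context.h:61] PrintOps] UNSUPPORTED OP LIST:",
    "[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.831.530 "
    "[mindspore/lite/tools/converter/converter_context.h:63] PrintOps] FMKTYPE: PYTORCH, OP TYPE: cat",
    "[ERROR] LITE(9078,7f70baf16b40,converter_lite):2024-04-04-14:15:27.831.536 "
    "[mindspore/lite/tools/converter/converter_context.h:65] PrintOps] "
    "===========================================",
])

print(unsupported_ops(sample))
```

To run it on a full log, redirect the converter output to a file and pass the file contents to `unsupported_ops`.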

googlenet

(mslite) yoyo@yoyo:~/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/converter$ ./converter_lite --fmk=PYTORCH --modelFile=traced_googlenet_model.pt --outputFile=traced_googlenet_model

[WARNING] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.336.200 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:730] ConvertGraphInputs] The input shape is empty.

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.582.654 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1035] ConvertNodes] not support pytorch op type slice

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.582.685 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1035] ConvertNodes] not support pytorch op type select

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.582.697 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1035] ConvertNodes] not support pytorch op type unsqueeze

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.582.912 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:950] BuildOpInputs] could not find input node: 34

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.582.925 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1055] ConvertNodes] BuildOpInputs failed.

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.582.946 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:692] ConvertTorchGraph] convert nodes failed.

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.582.956 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:626] Parse] convert pytorch graph failed.

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.585.486 [mindspore/lite/tools/converter/converter_funcgraph.cc:92] Load3rdModelToFuncgraph] Get funcGraph failed for fmk: 5

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.585.503 [mindspore/lite/tools/converter/converter_funcgraph.cc:162] Build] Load model file failed

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.585.512 [mindspore/lite/tools/converter/converter.cc:979] HandleGraphCommon] Build func graph failed

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.585.547 [mindspore/lite/tools/converter/converter.cc:947] Convert] Handle graph failed: -1 Common error code.

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.585.555 [mindspore/lite/tools/converter/converter.cc:1108] RunConverter] Convert model failed

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.585.562 [mindspore/lite/tools/converter/converter_context.h:60] PrintOps] ===========================================

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.585.568 [mindspore/lite/tools/converter/converter_context.h:61] PrintOps] UNSUPPORTED OP LIST:

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.585.575 [mindspore/lite/tools/converter/converter_context.h:63] PrintOps] FMKTYPE: PYTORCH, OP TYPE: select

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.585.580 [mindspore/lite/tools/converter/converter_context.h:63] PrintOps] FMKTYPE: PYTORCH, OP TYPE: slice

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.585.587 [mindspore/lite/tools/converter/converter_context.h:63] PrintOps] FMKTYPE: PYTORCH, OP TYPE: unsqueeze

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.585.594 [mindspore/lite/tools/converter/converter_context.h:65] PrintOps] ===========================================

[ERROR] LITE(8385,7f97728d6b40,converter_lite):2024-04-04-12:55:20.585.607 [mindspore/lite/tools/converter/cxx_api/converter.cc:314] Convert] Convert model failed, ret=Common error code.

ERROR [mindspore/lite/tools/converter/converter_lite/main.cc:102] main] Convert failed. Ret: Common error code.

Convert failed. Ret: Common error code.
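The run above also warns that the input shape is empty, and the model preparation section recommends ONNX as the export format. The following is a minimal sketch, under stated assumptions, of assembling a `converter_lite` command that goes through ONNX and passes a static shape via `--inputShape`. The helper `build_convert_cmd` and the file name `googlenet.onnx` are hypothetical; the input name `input_0` and the shape `1,3,224,224` follow the export script in section 1.1. Whether the ONNX parser accepts every operator in this model is not verified here.

```python
import shlex


def build_convert_cmd(model_file, output_file, input_name, shape, fmk="ONNX"):
    """Assemble a converter_lite argument list with a static input shape."""
    dims = ",".join(str(d) for d in shape)
    return [
        "./converter_lite",
        f"--fmk={fmk}",
        f"--modelFile={model_file}",
        f"--outputFile={output_file}",
        # Format is "<input name>:<dim0>,<dim1>,..."
        f"--inputShape={input_name}:{dims}",
    ]


# Hypothetical file names; adjust to the actual exported model.
cmd = build_convert_cmd("googlenet.onnx", "googlenet", "input_0", (1, 3, 224, 224))

# Print the command for inspection instead of executing it.
print(shlex.join(cmd))
```

The list form can be handed to `subprocess.run(cmd, check=True)` from the converter directory once the paths are confirmed.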

inception_v3

(mslite) yoyo@yoyo:~/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/converter$ ./converter_lite --fmk=PYTORCH --modelFile=traced_inception_v3_model.pt --outputFile=traced_inception_v3_model

[WARNING] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:23.755.698 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:730] ConvertGraphInputs] The input shape is empty.

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.675.543 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1035] ConvertNodes] not support pytorch op type slice

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.675.572 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1035] ConvertNodes] not support pytorch op type select

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.675.584 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1035] ConvertNodes] not support pytorch op type unsqueeze

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.675.810 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:950] BuildOpInputs] could not find input node: 36

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.675.820 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1055] ConvertNodes] BuildOpInputs failed.

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.675.838 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:692] ConvertTorchGraph] convert nodes failed.

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.675.845 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:626] Parse] convert pytorch graph failed.

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.680.816 [mindspore/lite/tools/converter/converter_funcgraph.cc:92] Load3rdModelToFuncgraph] Get funcGraph failed for fmk: 5

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.680.833 [mindspore/lite/tools/converter/converter_funcgraph.cc:162] Build] Load model file failed

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.680.839 [mindspore/lite/tools/converter/converter.cc:979] HandleGraphCommon] Build func graph failed

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.680.872 [mindspore/lite/tools/converter/converter.cc:947] Convert] Handle graph failed: -1 Common error code.

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.680.880 [mindspore/lite/tools/converter/converter.cc:1108] RunConverter] Convert model failed

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.680.886 [mindspore/lite/tools/converter/converter_context.h:60] PrintOps] ===========================================

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.680.892 [mindspore/lite/tools/converter/converter_context.h:61] PrintOps] UNSUPPORTED OP LIST:

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.680.899 [mindspore/lite/tools/converter/converter_context.h:63] PrintOps] FMKTYPE: PYTORCH, OP TYPE: select

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.680.904 [mindspore/lite/tools/converter/converter_context.h:63] PrintOps] FMKTYPE: PYTORCH, OP TYPE: slice

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.680.909 [mindspore/lite/tools/converter/converter_context.h:63] PrintOps] FMKTYPE: PYTORCH, OP TYPE: unsqueeze

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.680.915 [mindspore/lite/tools/converter/converter_context.h:65] PrintOps] ===========================================

[ERROR] LITE(9220,7fdb71a55b40,converter_lite):2024-04-04-14:19:24.680.927 [mindspore/lite/tools/converter/cxx_api/converter.cc:314] Convert] Convert model failed, ret=Common error code.

ERROR [mindspore/lite/tools/converter/converter_lite/main.cc:102] main] Convert failed. Ret: Common error code.

Convert failed. Ret: Common error code.

mnasnet1_0

(mslite) yoyo@yoyo:~/MyDocuments/mindspore/output/mindspore-lite-2.0.1-linux-x64/tools/converter/converter$ ./converter_lite --fmk=PYTORCH --modelFile=traced_mnasnet1_0_model.pt --outputFile=traced_mnasnet1_0_model

[WARNING] LITE(9347,7fa0c1b56b40,converter_lite):2024-04-04-14:21:25.507.820 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:730] ConvertGraphInputs] The input shape is empty.

[ERROR] LITE(9347,7fa0c1b56b40,converter_lite):2024-04-04-14:21:25.741.850 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1035] ConvertNodes] not support pytorch op type mean

[ERROR] LITE(9347,7fa0c1b56b40,converter_lite):2024-04-04-14:21:25.741.876 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1035] ConvertNodes] not support pytorch op type dropout

[ERROR] LITE(9347,7fa0c1b56b40,converter_lite):2024-04-04-14:21:25.741.886 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:1035] ConvertNodes] not support pytorch op type linear

[ERROR] LITE(9347,7fa0c1b56b40,converter_lite):2024-04-04-14:21:25.741.898 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:692] ConvertTorchGraph] convert nodes failed.

[ERROR] LITE(9347,7fa0c1b56b40,converter_lite):2024-04-04-14:21:25.741.908 [mindspore/lite/build/tools/converter/parser/pytorch/pytorch_op_parser.cc:626] Parse] convert pytorch graph failed.