一、前期准备

🍨 本文为[🔗365天深度学习训练营学习记录博客 🍦 参考文章:365天深度学习训练营 🍖 原作者:[K同学啊 | 接辅导、项目定制]\n🚀 文章来源:[K同学的学习圈子](https://www.yuque.com/mingtian-fkmxf/zxwb45)from __future__ import unicode_literals, print_function, division

from io import open

import unicodedata

import string

import re

import random

import torch

import torch.nn as nn

from torch import optim

import torch.nn.functional as F

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(device)

1.1 搭建语言类

定义了两个常量 SOS_token 和 EOS_token,其分别代表序列的开始和结束。

SOS_token = 0

EOS_token = 1

class Lang:

def __init__(self, name):

self.name = name

self.word2index = {}

self.word2count = {}

self.index2word = {0: "SOS", 1: "EOS"}

self.n_words = 2 # Count SOS and EOS

def addSentence(self, sentence):

for word in sentence.split(' '):

self.addWord(word)

def addWord(self, word):

if word not in self.word2index:

self.word2index[word] = self.n_words

self.word2count[word] = 1

self.index2word[self.n_words] = word

self.n_words += 1

else:

self.word2count[word] += 1

1.2 文本处理函数

def unicodeToAscii(s):

return ''.join(

c for c in unicodedata.normalize('NFD', s)

if unicodedata.category(c) != 'Mn'

)

def normalizeString(s):

s = unicodeToAscii(s.lower().strip())

s = re.sub(r"([.!?])", r" \1", s)

s = re.sub(r"[^a-zA-Z.!?]+", r" ", s)

return s

1.3 文件读取函数

def readLangs(lang1, lang2, reverse=False):

print("Reading lines...")

# 以行为单位读取文件

lines = open('./data/%s-%s.txt'%(lang1,lang2), encoding='utf-8').\

read().strip().split('\n')

pairs = [[normalizeString(s) for s in l.split('\t')] for l in lines]

# 创建Lang实例,并确认是否反转语言顺序

if reverse:

pairs = [list(reversed(p)) for p in pairs]

input_lang = Lang(lang2)

output_lang = Lang(lang1)

else:

input_lang = Lang(lang1)

output_lang = Lang(lang2)

return input_lang, output_lang, pairs

MAX_LENGTH = 10 # 定义语料最长长度

eng_prefixes = (

"i am ", "i m ",

"he is", "he s ",

"she is", "she s ",

"you are", "you re ",

"we are", "we re ",

"they are", "they re "

)

def filterPair(p):

return len(p[0].split(' ')) < MAX_LENGTH and \

len(p[1].split(' ')) < MAX_LENGTH and p[1].startswith(eng_prefixes)

def filterPairs(pairs):

# 选取仅仅包含 eng_prefixes 开头的语料

return [pair for pair in pairs if filterPair(pair)]

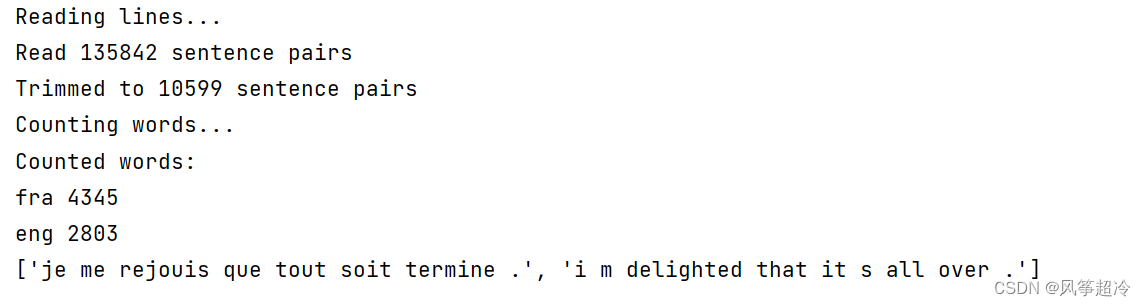

def prepareData(lang1, lang2, reverse=False):

# 读取文件中的数据

input_lang, output_lang, pairs = readLangs(lang1, lang2, reverse)

print("Read %s sentence pairs" % len(pairs))

# 按条件选取语料

pairs = filterPairs(pairs[:])

print("Trimmed to %s sentence pairs" % len(pairs))

print("Counting words...")

# 将语料保存至相应的语言类

for pair in pairs:

input_lang.addSentence(pair[0])

output_lang.addSentence(pair[1])

# 打印语言类的信息

print("Counted words:")

print(input_lang.name, input_lang.n_words)

print(output_lang.name, output_lang.n_words)

return input_lang, output_lang, pairs

input_lang, output_lang, pairs = prepareData('eng', 'fra', True)

print(random.choice(pairs))

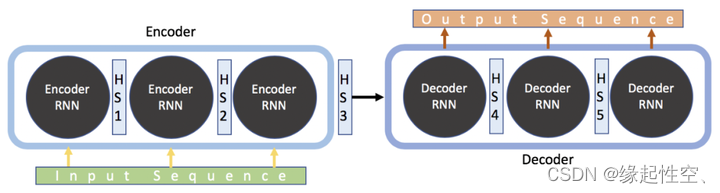

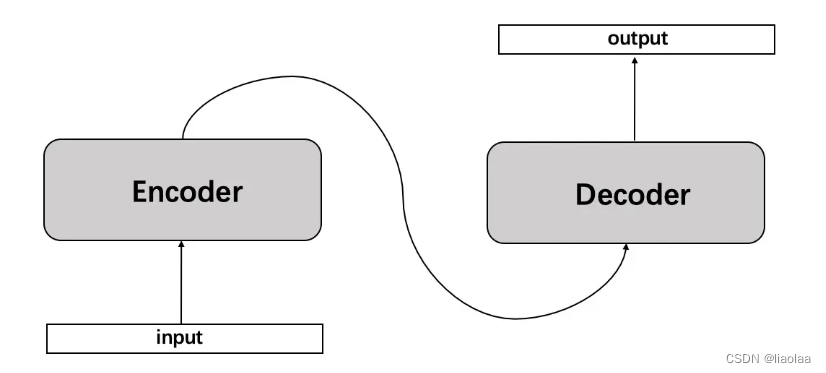

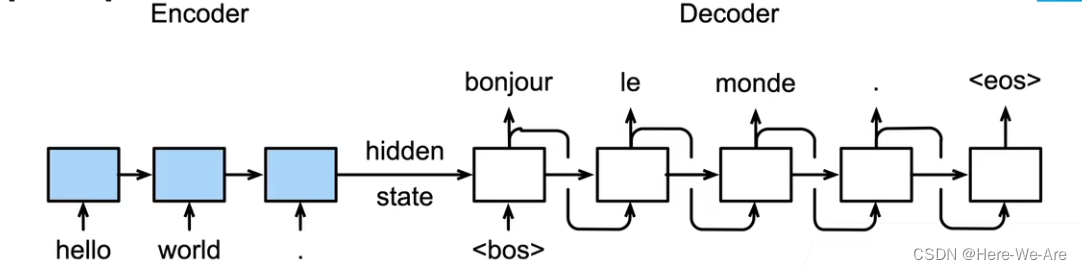

二、Seq2Seq 模型

2.1 编码器(Encoder)

class EncoderRNN(nn.Module):

def __init__(self, input_size, hidden_size):

super(EncoderRNN, self).__init__()

self.hidden_size = hidden_size

self.embedding = nn.Embedding(input_size, hidden_size)

self.gru = nn.GRU(hidden_size, hidden_size)

def forward(self, input, hidden):

embedded = self.embedding(input).view(1, 1, -1)

output = embedded

output, hidden = self.gru(output, hidden)

return output, hidden

def initHidden(self):

return torch.zeros(1, 1, self.hidden_size, device=device)

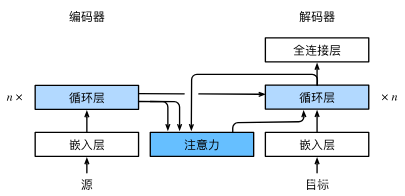

2.2 解码器(Decoder)

这里的代码与N6的不同,加入了注意力机制。

class AttnDecoderRNN(nn.Module):

def __init__(self, hidden_size, output_size, dropout_p=0.1, max_length=MAX_LENGTH):

super(AttnDecoderRNN, self).__init__()

self.hidden_size = hidden_size

self.output_size = output_size

self.dropout_p = dropout_p

self.max_length = max_length

self.embedding = nn.Embedding(self.output_size, self.hidden_size)

self.attn = nn.Linear(self.hidden_size * 2, self.max_length)

self.attn_combine = nn.Linear(self.hidden_size * 2, self.hidden_size)

self.dropout = nn.Dropout(self.dropout_p)

self.gru = nn.GRU(self.hidden_size, self.hidden_size)

self.out = nn.Linear(self.hidden_size, self.output_size)

def forward(self, input, hidden, encoder_outputs):

embedded = self.embedding(input).view(1, 1, -1)

embedded = self.dropout(embedded)

attn_weights = F.softmax(

self.attn(torch.cat((embedded[0], hidden[0]), 1)), dim=1)

attn_applied = torch.bmm(attn_weights.unsqueeze(0),

encoder_outputs.unsqueeze(0))

output = torch.cat((embedded[0], attn_applied[0]), 1)

output = self.attn_combine(output).unsqueeze(0)

output = F.relu(output)

output, hidden = self.gru(output, hidden)

output = F.log_softmax(self.out(output[0]), dim=1)

return output, hidden, attn_weights

def initHidden(self):

return torch.zeros(1, 1, self.hidden_size, device=device)

三、训练

3.1 数据预处理

def indexesFromSentence(lang, sentence):

return [lang.word2index[word] for word in sentence.split(' ')]

# 将数字化的文本,转化为tensor数据

def tensorFromSentence(lang, sentence):

indexes = indexesFromSentence(lang, sentence)

indexes.append(EOS_token)

return torch.tensor(indexes, dtype=torch.long, device=device).view(-1, 1)

# 输入pair文本,输出预处理好的数据

def tensorsFromPair(pair):

input_tensor = tensorFromSentence(input_lang, pair[0])

target_tensor = tensorFromSentence(output_lang, pair[1])

return (input_tensor, target_tensor)

3.2 训练函数

teacher_forcing_ratio = 0.5

def train(input_tensor, target_tensor,

encoder, decoder,

encoder_optimizer, decoder_optimizer,

criterion, max_length=MAX_LENGTH):

# 编码器初始化

encoder_hidden = encoder.initHidden()

# grad属性归零

encoder_optimizer.zero_grad()

decoder_optimizer.zero_grad()

input_length = input_tensor.size(0)

target_length = target_tensor.size(0)

# 用于创建一个指定大小的全零张量(tensor),用作默认编码器输出

encoder_outputs = torch.zeros(max_length, encoder.hidden_size, device=device)

loss = 0

# 将处理好的语料送入编码器

for ei in range(input_length):

encoder_output, encoder_hidden = encoder(input_tensor[ei], encoder_hidden)

encoder_outputs[ei] = encoder_output[0, 0]

# 解码器默认输出

decoder_input = torch.tensor([[SOS_token]], device=device)

decoder_hidden = encoder_hidden

use_teacher_forcing = True if random.random() < teacher_forcing_ratio else False

# 将编码器处理好的输出送入解码器

if use_teacher_forcing:

# Teacher forcing: Feed the target as the next input

for di in range(target_length):

decoder_output, decoder_hidden, decoder_attention = decoder(

decoder_input, decoder_hidden, encoder_outputs)

loss += criterion(decoder_output, target_tensor[di])

decoder_input = target_tensor[di] # Teacher forcing

else:

# Without teacher forcing: use its own predictions as the next input

for di in range(target_length):

decoder_output, decoder_hidden, decoder_attention = decoder(

decoder_input, decoder_hidden, encoder_outputs)

topv, topi = decoder_output.topk(1)

decoder_input = topi.squeeze().detach() # detach from history as input

loss += criterion(decoder_output, target_tensor[di])

if decoder_input.item() == EOS_token:

break

loss.backward()

encoder_optimizer.step()

decoder_optimizer.step()

return loss.item() / target_length

import time

import math

def asMinutes(s):

m = math.floor(s / 60)

s -= m * 60

return '%dm %ds' % (m, s)

def timeSince(since, percent):

now = time.time()

s = now - since

es = s / (percent)

rs = es - s

return '%s (- %s)' % (asMinutes(s), asMinutes(rs))

def trainIters(encoder,decoder,n_iters,print_every=1000,

plot_every=100,learning_rate=0.01):

start = time.time()

plot_losses = []

print_loss_total = 0 # Reset every print_every

plot_loss_total = 0 # Reset every plot_every

encoder_optimizer = optim.SGD(encoder.parameters(), lr=learning_rate)

decoder_optimizer = optim.SGD(decoder.parameters(), lr=learning_rate)

# 在 pairs 中随机选取 n_iters 条数据用作训练集

training_pairs = [tensorsFromPair(random.choice(pairs)) for i in range(n_iters)]

criterion = nn.NLLLoss()

for iter in range(1, n_iters + 1):

training_pair = training_pairs[iter - 1]

input_tensor = training_pair[0]

target_tensor = training_pair[1]

loss = train(input_tensor, target_tensor, encoder,

decoder, encoder_optimizer, decoder_optimizer, criterion)

print_loss_total += loss

plot_loss_total += loss

if iter % print_every == 0:

print_loss_avg = print_loss_total / print_every

print_loss_total = 0

print('%s (%d %d%%) %.4f' % (timeSince(start, iter / n_iters),

iter, iter / n_iters * 100, print_loss_avg))

if iter % plot_every == 0:

plot_loss_avg = plot_loss_total / plot_every

plot_losses.append(plot_loss_avg)

plot_loss_total = 0

return plot_losses

3.3 评估

def evaluate(encoder, decoder, sentence, max_length=MAX_LENGTH):

with torch.no_grad():

input_tensor = tensorFromSentence(input_lang, sentence)

input_length = input_tensor.size()[0]

encoder_hidden = encoder.initHidden()

encoder_outputs = torch.zeros(max_length, encoder.hidden_size, device=device)

for ei in range(input_length):

encoder_output, encoder_hidden = encoder(input_tensor[ei],encoder_hidden)

encoder_outputs[ei] += encoder_output[0, 0]

decoder_input = torch.tensor([[SOS_token]], device=device) # SOS

decoder_hidden = encoder_hidden

decoded_words = []

decoder_attentions = torch.zeros(max_length, max_length)

for di in range(max_length):

decoder_output, decoder_hidden, decoder_attention = decoder(

decoder_input, decoder_hidden, encoder_outputs)

decoder_attentions[di] = decoder_attention.data

topv, topi = decoder_output.data.topk(1)

if topi.item() == EOS_token:

decoded_words.append('<EOS>')

break

else:

decoded_words.append(output_lang.index2word[topi.item()])

decoder_input = topi.squeeze().detach()

return decoded_words, decoder_attentions[:di + 1]

def evaluateRandomly(encoder, decoder, n=5):

for i in range(n):

pair = random.choice(pairs)

print('>', pair[0])

print('=', pair[1])

output_words, attentions = evaluate(encoder, decoder, pair[0])

output_sentence = ' '.join(output_words)

print('<', output_sentence)

print('')

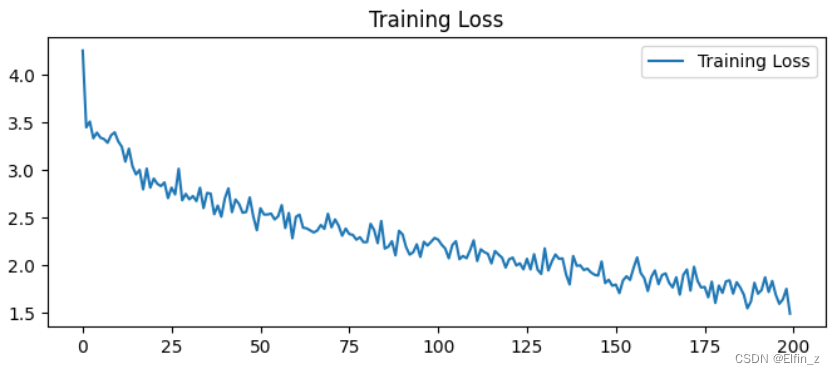

四、训练与评估

hidden_size = 256

encoder1 = EncoderRNN(input_lang.n_words, hidden_size).to(device)

attn_decoder1 = AttnDecoderRNN(hidden_size, output_lang.n_words, dropout_p=0.1).to(device)

plot_losses = trainIters(encoder1, attn_decoder1, 20000, print_every=5000)

4.1 loss

import matplotlib.pyplot as plt

plt.rcParams['axes.unicode_minus'] = False # 用来正常显示负号

plt.rcParams['figure.dpi'] = 100 # 分辨率

epochs_range = range(len(plot_losses))

plt.figure(figsize=(8, 3))

plt.subplot(1, 1, 1)

plt.plot(epochs_range, plot_losses, label='Training Loss')

plt.legend(loc='upper right')

plt.title('Training Loss')

plt.show()

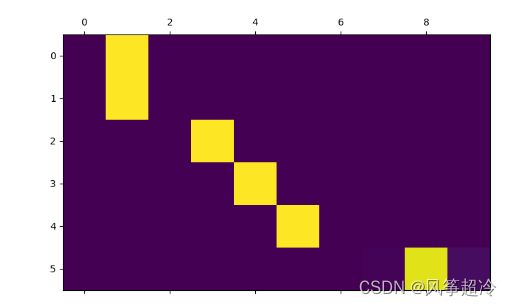

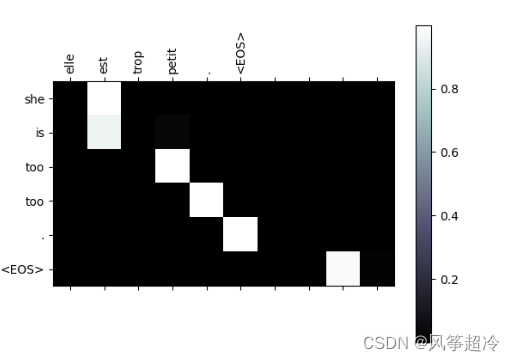

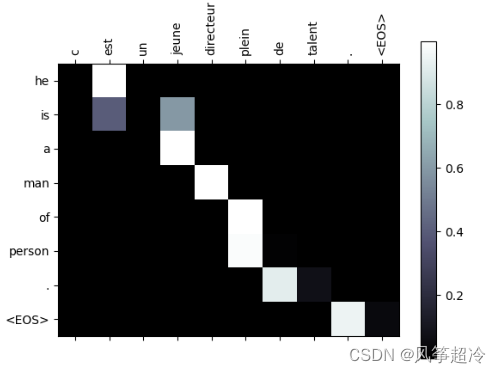

4.2 可视化注意力

output_words, attentions = evaluate(encoder1, attn_decoder1, "je suis trop froid .")

plt.matshow(attentions.numpy())

def showAttention(input_sentence, output_words, attentions):

# Set up figure with colorbar

fig = plt.figure()

ax = fig.add_subplot(111)

cax = ax.matshow(attentions.numpy(), cmap='bone')

fig.colorbar(cax)

# Set up axes

ax.set_xticklabels([''] + input_sentence.split(' ') +

['<EOS>'], rotation=90)

ax.set_yticklabels([''] + output_words)

# Show label at every tick

ax.xaxis.set_major_locator(ticker.MultipleLocator(1))

ax.yaxis.set_major_locator(ticker.MultipleLocator(1))

plt.show()

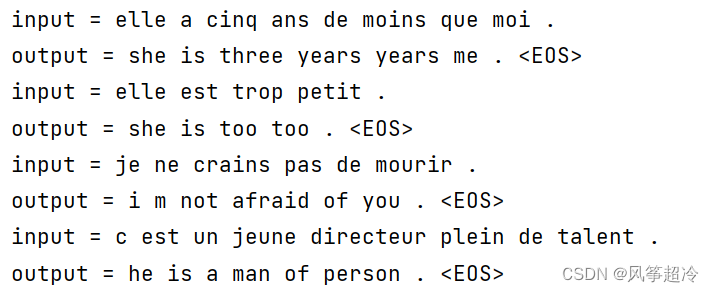

def evaluateAndShowAttention(input_sentence):

output_words, attentions = evaluate(

encoder1, attn_decoder1, input_sentence)

print('input =', input_sentence)

print('output =', ' '.join(output_words))

showAttention(input_sentence, output_words, attentions)

evaluateAndShowAttention("elle a cinq ans de moins que moi .")

evaluateAndShowAttention("elle est trop petit .")

evaluateAndShowAttention("je ne crains pas de mourir .")

evaluateAndShowAttention("c est un jeune directeur plein de talent .")