Introduction

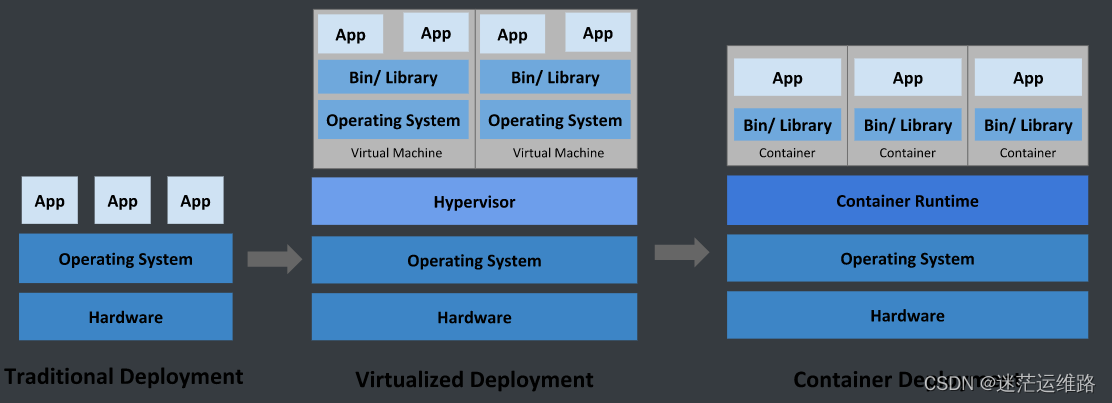

Kubernetes is an open source orchestrator for deploying containerized applications. It was originally developed by Google, inspired by a decade of experience deploying scalable, reliable systems in containers via application-oriented APIs.

Kubernetes 是一种用于部署容器化应用程序的开源协调器。它最初由谷歌开发,灵感来自于十年来通过面向应用的 API 在容器中部署可扩展的可靠系统的经验。

Since its introduction in 2014, Kubernetes has grown to be one of the largest and most popular open source projects in the world. It has become the standard API for building cloud-native applications, present in nearly every public cloud. Kubernetes is a proven infrastructure for distributed systems that is suitable for cloud-native developers of all scales, from a cluster of Raspberry Pi computers to a warehouse full of the latest machines.

自 2014 年推出以来,Kubernetes 已发展成为全球最大、最受欢迎的开源项目之一。它已成为构建云原生应用程序的标准 API,几乎存在于每一个公共云中。Kubernetes 是一种成熟的分布式系统基础架构,适用于各种规模的云原生开发人员,从 Raspberry Pi 计算机集群到堆满最新机器的仓库。

It provides the software necessary to successfully build and deploy reliable, scalable distributed systems.

它提供了成功构建和部署可靠、可扩展的分布式系统所需的软件。

You may be wondering what we mean when we say “reliable, scalable distributed systems.” More and more services are delivered over the network via APIs. These APIs are often delivered by a distributed system, the various pieces that implement the API running on different machines, connected via the network and coordinating their actions via network communication.

您可能想知道我们所说的 "可靠、可扩展的分布式系统 "是什么意思。越来越多的服务通过 API 在网络上提供。这些应用程序接口通常由分布式系统提供,实现应用程序接口的各个部分运行在不同的机器上,通过网络连接,并通过网络通信协调它们的行动。

Because we rely on these APIs increasingly for all aspects of our daily lives (e.g., finding directions to the nearest hospital), these systems must be highly reliable. They cannot fail, even if a part of the system crashes or otherwise stops working. Likewise, they must maintain availability even during software rollouts or other maintenance events.

由于我们在日常生活的各个方面越来越依赖于这些应用程序接口(例如,查找到最近医院的路线),因此这些系统必须高度可靠。即使系统的某个部分崩溃或停止工作,它们也不能失效。同样,即使在软件推出或其他维护活动期间,它们也必须保持可用性。

Finally, because more and more of the world is coming online and using such services, they must be highly scalable so that they can grow their capacity to keep up with ever-increasing usage without radical redesign of the distributed system that implements the services.

最后,由于世界上越来越多的人开始上网并使用这些服务,因此它们必须具有高度的可扩展性,这样才能在不对实现服务的分布式系统进行彻底重新设计的情况下,提高服务能力,跟上日益增长的使用量。

Depending on when and why you have come to hold this book in your hands, you may have varying degrees of experience with containers, distributed systems, and Kubernetes. You may be planning on building your application on top of public cloud infrastructure, in private data centers, or in some hybrid environment.

根据您何时以及为何将本书捧在手中,您可能在容器、分布式系统和 Kubernetes 方面拥有不同程度的经验。您可能计划在公共云基础设施、私有数据中心或某些混合环境中构建应用程序。

Regardless of what your experience is, we believe this book will enable you to make the most of your use of Kubernetes.

无论您的经验如何,我们相信本书都能让您充分利用 Kubernetes。

There are many reasons why people come to use containers and container APIs like Kubernetes, but we believe they can all be traced back to one of these benefits:

- Velocity

- Scaling (of both software and teams)

- Abstracting your infrastructure

- Efficiency

人们使用容器和容器 API(如 Kubernetes)的原因有很多,但我们相信,这些原因都可以归结为其中的一个好处:

- 速度

- 扩展(软件和团队)

- 抽象你的基础设施

- 效率

In the following sections, we describe how Kubernetes can help provide each of these features.

在下面的章节中,我们将介绍 Kubernetes 如何帮助提供这些功能。

Velocity

Velocity is the key component in nearly all software development today. The software industry has evolved from shipping products as boxed CDs or DVDs to software that is delivered over the network via web-based services that are updated hourly. This changing landscape means that the difference between you and your competitors is often the speed with which you can develop and deploy new components and features, or the speed with which you can respond to innovations developed by others.

速度是当今几乎所有软件开发的关键要素。软件行业已经从以盒装 CD 或 DVD 的形式提供产品,发展到通过网络提供软件,并每小时更新一次。这种不断变化的格局意味着,您与竞争对手之间的差距往往在于您开发和部署新组件和功能的速度,或者您响应他人开发的创新的速度。

It is important to note, however, that velocity is not defined in terms of simply raw speed. While your users are always looking for iterative improvement, they are more interested in a highly reliable service. Once upon a time, it was OK for a service to be down for maintenance at midnight every night. But today, all users expect constant uptime, even if the software they are running is changing constantly.

不过,需要注意的是,速度并不是简单的原始速度。虽然用户一直在寻求迭代改进,但他们更感兴趣的是高度可靠的服务。曾几何时,服务在每晚午夜因维护而停机是没有问题的。但如今,所有用户都希望有稳定的正常运行时间,即使他们运行的软件在不断变化。

Consequently, velocity is measured not in terms of the raw number of features you can ship per hour or day, but rather in terms of the number of things you can ship while maintaining a highly available service.

因此,衡量速度的标准不是每小时或每天可交付的原始功能数量,而是在维持高可用性服务的同时可交付的功能数量。

In this way, containers and Kubernetes can provide the tools that you need to move quickly, while staying available. The core concepts that enable this are:

- Immutability

- Declarative configuration

- Online self-healing systems

这样,容器和 Kubernetes 就能提供快速移动所需的工具,同时保持可用性。实现这一点的核心概念是:

- 不变性

- 声明式配置

- 在线自愈系统

These ideas all interrelate to radically improve the speed with which you can reliably deploy software.

这些理念相互关联,从根本上提高了可靠部署软件的速度。

Immutability

Containers and Kubernetes encourage developers to build distributed systems that adhere to the principles of immutable infrastructure.

容器和 Kubernetes 鼓励开发人员构建遵循不可变基础设施原则的分布式系统。

With immutable infrastructure, once an artifact is created in the system it does not change via user modifications.

有了不可变基础架构,工件一旦在系统中创建,就不会因为用户的修改而改变。

Traditionally, computers and software systems have been treated as mutable infrastructure. With mutable infrastructure, changes are applied as incremental updates to an existing system. These updates can occur all at once, or spread out across a long period of time. A system upgrade via the apt-get tool (for example, apt-get update followed by apt-get upgrade) is a good example of an update to a mutable system.

传统上,计算机和软件系统被视为可变基础设施。在可变基础设施中,变化是作为增量更新应用于现有系统的。这些更新可以一次性完成,也可以在很长一段时间内分散进行。通过 apt-get 工具进行的系统升级(例如先运行 apt-get update,再运行 apt-get upgrade)就是对可变系统进行更新的一个很好的例子。

Running apt sequentially downloads any updated binaries, copies them on top of older binaries, and makes incremental updates to configuration files. With a mutable system, the current state of the infrastructure is not represented as a single artifact, but rather an accumulation of incremental updates and changes over time. On many systems these incremental updates come from not just system upgrades, but operator modifications as well.

运行 apt 会按顺序下载任何更新的二进制文件,将其复制到旧的二进制文件之上,并对配置文件进行增量更新。在可变系统中,基础架构的当前状态并不是以单一工件的形式呈现,而是随着时间的推移不断累积的增量更新和变化。在许多系统中,这些增量更新不仅来自系统升级,也来自操作员的修改。

Furthermore, in any system run by a large team, it is highly likely that these changes will have been performed by many different people, and in many cases will not have been recorded anywhere.

此外,在任何由大型团队运行的系统中,这些更改极有可能是由许多不同的人执行的,而且在许多情况下不会记录在任何地方。

In contrast, in an immutable system, rather than a series of incremental updates and changes, an entirely new, complete image is built, where the update simply replaces the entire image with the newer image in a single operation. There are no incremental changes.

与此相反,在不可变系统中,建立的不是一系列增量更新和更改,而是一个全新的、完整的映像,更新只需一次操作就能用较新的映像替换整个映像。没有增量变化。

As you can imagine, this is a significant shift from the more traditional world of configuration management.

可以想象,与传统的配置管理相比,这是一个重大转变。

To make this more concrete in the world of containers, consider two different ways to upgrade your software:

- You can log in to a container, run a command to download your new software, kill the old server, and start the new one.

- You can build a new container image, push it to a container registry, kill the existing container, and start a new one.

为了在容器世界中更具体地说明这一点,可以考虑两种不同的软件升级方式:

- 你可以登录容器,运行命令下载新软件,杀死旧服务器,然后启动新服务器。

- 你可以构建一个新的容器映像,将其推送到容器注册表,杀死现有容器,然后启动一个新容器。

At first blush, these two approaches might seem largely indistinguishable. So what is it about the act of building a new container that improves reliability?

乍一看,这两种方法似乎没什么区别。那么,构建新容器这一做法究竟是如何提高可靠性的呢?

The key differentiation is the artifact that you create, and the record of how you created it. These records make it easy to understand exactly the differences in some new version and, if something goes wrong, to determine what has changed and how to fix it.

关键的区别在于你创建的人工制品,以及如何创建的记录。有了这些记录,就很容易准确理解新版本的不同之处,如果出了问题,也很容易确定哪些地方发生了变化以及如何修复。

Additionally, building a new image rather than modifying an existing one means the old image is still around, and can quickly be used for a rollback if an error occurs. In contrast, once you copy your new binary over an existing binary, such a rollback is nearly impossible.

此外,构建新镜像而不是修改现有镜像意味着旧镜像仍然存在,如果出现错误,可以迅速用于回滚。相比之下,一旦将新的二进制文件复制到现有的二进制文件上,这种回退几乎是不可能的。
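The rollback argument above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not a real registry client: `ImageRegistry`, `push`, `pull`, and the tags `v1` and `v2` are hypothetical names chosen for illustration, and the sketch enforces write-once tags to make the idea explicit (real registries may allow a tag to be re-pointed).

```python
class ImageRegistry:
    """Toy registry: each tag is written once and never modified."""

    def __init__(self):
        self._images = {}

    def push(self, tag, content):
        # Immutability: refuse to overwrite an existing tag.
        if tag in self._images:
            raise ValueError(f"tag {tag!r} already exists and is immutable")
        self._images[tag] = content

    def pull(self, tag):
        return self._images[tag]


registry = ImageRegistry()
registry.push("v1", "server build 1")
registry.push("v2", "server build 2")

running = registry.pull("v2")  # upgrade: start a container from the new image
running = registry.pull("v1")  # rollback: the old image is still available

# Mutable alternative: the new binary is copied over the old one.
mutable_server = {"binary": "server build 1"}
mutable_server["binary"] = "server build 2"  # build 1 is gone, nothing to roll back to
```

In the immutable case both versions remain addressable, so a rollback is just another pull. In the mutable case the previous state exists only if someone kept a copy elsewhere.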

Immutable container images are at the core of everything that you will build in Kubernetes. It is possible to imperatively change running containers, but this is an anti-pattern to be used only in extreme cases where there are no other options (e.g., if it is the only way to temporarily repair a mission-critical production system). And even then, the changes must also be recorded through a declarative configuration update at some later time, after the fire is out.

不可变的容器映像是在 Kubernetes 中构建一切的核心。强制更改运行中的容器是可能的,但这是一种反模式,只有在没有其他选择的极端情况下才使用(例如,如果这是临时修复关键任务生产系统的唯一方法)。即便如此,也必须在熄火后的某个时间通过声明式配置更新来记录更改。

Declarative Configuration

Immutability extends beyond containers running in your cluster to the way you describe your application to Kubernetes. Everything in Kubernetes is a declarative configuration object that represents the desired state of the system. It is the job of Kubernetes to ensure that the actual state of the world matches this desired state.

不变性不仅包括在集群中运行的容器,还包括向 Kubernetes 描述应用程序的方式。Kubernetes 中的一切都是一个声明性的配置对象,代表着系统的理想状态。Kubernetes 的工作就是确保世界的实际状态与这一理想状态相匹配。

Much like mutable versus immutable infrastructure, declarative configuration is an alternative to imperative configuration, where the state of the world is defined by the execution of a series of instructions rather than a declaration of the desired state of the world.

就像可变基础设施与不可变基础设施一样,声明式配置是命令式配置的另一种选择,在命令式配置中,世界的状态是通过执行一系列指令来定义的,而不是通过声明所期望的世界状态。

While imperative commands define actions, declarative configurations define state.

命令式命令定义动作,而声明式配置定义状态。

To understand these two approaches, consider the task of producing three replicas of a piece of software. With an imperative approach, the configuration would say “run A, run B, and run C.” The corresponding declarative configuration would be “replicas equals three.”

要理解这两种方法,请考虑制作一个软件的三个副本的任务。如果采用命令式方法,配置会是 “运行 A、运行 B 和运行 C”。相应的声明式配置则是 “副本等于三个”。
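The contrast between the two styles can be illustrated with a small Python sketch. The names here (`imperative_steps`, `execute`, `declarative_config`, and the app name `my-server`) are hypothetical and exist only for this example; the dictionary is a simplified stand-in for a real configuration object, not the Kubernetes schema.

```python
# Imperative: a list of actions. You must execute them to know the result.
imperative_steps = ["run A", "run B", "run C"]


def execute(steps):
    """Run each step in order and return the replicas that ended up running."""
    running = []
    for step in steps:
        action, name = step.split()
        if action == "run":
            running.append(name)
    return running


# Declarative: a description of the desired state. It can be read, diffed,
# and reviewed without executing anything.
declarative_config = {"app": "my-server", "replicas": 3}

print(execute(imperative_steps))       # the outcome is known only after running
print(declarative_config["replicas"])  # the outcome is stated up front
```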

Because it describes the state of the world, declarative configuration does not have to be executed to be understood. Its impact is concretely declared.

由于声明式配置描述的是世界的状态,因此无需执行即可理解。它的影响是具体声明的。

Since the effects of declarative configuration can be understood before they are executed, declarative configuration is far less error-prone. Further, the traditional tools of software development, such as source control, code review, and unit testing, can be used in declarative configuration in ways that are impossible for imperative instructions. The idea of storing declarative configuration in source control is often referred to as “infrastructure as code.”

由于声明式配置的效果可以在执行之前就被理解,因此声明式配置的错误率要低得多。此外,软件开发的传统工具,如源代码控制、代码审查和单元测试,都可以用于声明式配置,这是命令式指令所无法做到的。在源代码控制中存储声明式配置的理念通常被称为 “基础架构即代码”。

The combination of declarative state stored in a version control system and the ability of Kubernetes to make reality match this declarative state makes rollback of a change trivially easy. It is simply restating the previous declarative state of the system. This is usually impossible with imperative systems, because although the imperative instructions describe how to get you from point A to point B, they rarely include the reverse instructions that can get you back.

版本控制系统中存储的声明状态与 Kubernetes 使现实与声明状态相匹配的能力相结合,使得回滚变更变得轻而易举。只需重述系统之前的声明状态即可。这在命令式系统中通常是不可能的,因为尽管命令式指令描述了如何从 A 点到达 B 点,但它们很少包含能让你返回的反向指令。
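A minimal sketch of why rollback becomes trivial, assuming each committed configuration is a complete description of the desired state. The `history` list stands in for source control and `apply_state` for the system that makes reality match; both are hypothetical names for illustration.

```python
# Each entry is a full desired state, as it would be committed to source control.
history = [
    {"image": "my-server:v1", "replicas": 3},
    {"image": "my-server:v2", "replicas": 3},
]


def apply_state(desired_state, cluster):
    """Make the (toy) cluster match the desired state, whatever it was before."""
    cluster.clear()
    cluster.update(desired_state)


cluster = {}
apply_state(history[-1], cluster)  # roll out v2
apply_state(history[-2], cluster)  # roll back: restate the previous desired state
```

No reverse instructions are needed: the rollback uses the same operation as the rollout, pointed at an earlier state.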

Online Self-Healing Systems

Kubernetes is an online, self-healing system. When it receives a desired state configuration, it does not simply take a set of actions to make the current state match the desired state a single time. It continuously takes actions to ensure that the current state matches the desired state. This means that not only will Kubernetes initialize your system, but it will guard it against any failures or perturbations that might destabilize the system and affect reliability.

Kubernetes 是一个在线自愈系统。当它收到所需的状态配置时,它不会简单地采取一系列行动,使当前状态与所需状态一次性匹配。它会持续采取措施,确保当前状态与所需状态相匹配。这意味着,Kubernetes 不仅会初始化你的系统,还会防范任何可能破坏系统稳定和影响可靠性的故障或扰动。

A more traditional operator repair involves a manual series of mitigation steps, or human intervention performed in response to some sort of alert.

更传统的操作员修复涉及一系列手动缓解步骤,或根据某种警报进行人工干预。

Imperative repair like this is more expensive (since it generally requires an on-call operator to be available to enact the repair). It is also generally slower, since a human must often wake up and log in to respond. Furthermore, it is less reliable because the imperative series of repair operations suffers from all of the problems of imperative management described in the previous section.

像这样的命令式修复成本较高(因为通常需要值班操作员来实施修复)。一般来说,它的速度也较慢,因为往往需要有人醒来并登录才能做出响应。此外,它的可靠性也较低,因为命令式修复操作存在上一节所述的命令式管理的所有问题。

Self-healing systems like Kubernetes both reduce the burden on operators and improve the overall reliability of the system by performing reliable repairs more quickly.

像 Kubernetes 这样的自愈系统既能减轻操作员的负担,又能更快地进行可靠的修复,从而提高系统的整体可靠性。

As a concrete example of this self-healing behavior, if you assert a desired state of three replicas to Kubernetes, it does not just create three replicas—it continuously ensures that there are exactly three replicas. If you manually create a fourth replica, Kubernetes will destroy one to bring the number back to three. If you manually destroy a replica, Kubernetes will create one to again return you to the desired state.

这种自愈行为的一个具体例子是,如果你向 Kubernetes 声明三个副本的理想状态,它不只是创建三个副本,而是会持续确保恰好有三个副本。如果你手动创建第四个副本,Kubernetes 会销毁一个副本,使数量恢复到三个。如果你手动销毁一个副本,Kubernetes 会创建一个副本,再次让你回到所需的状态。
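The three-replica example can be sketched as a reconciliation function in Python. This is an illustrative model only: `reconcile` and the replica names are hypothetical, and a real controller would run this comparison continuously rather than being called by hand.

```python
import itertools

_ids = itertools.count(1)


def reconcile(desired_replicas, running):
    """Return a new list of replicas that matches the desired count."""
    running = list(running)
    while len(running) < desired_replicas:
        running.append(f"replica-{next(_ids)}")  # too few: create one
    while len(running) > desired_replicas:
        running.pop()                            # too many: destroy one
    return running


replicas = reconcile(3, [])        # initial rollout: three replicas
replicas.append("manual-extra")    # someone manually adds a fourth
replicas = reconcile(3, replicas)  # the extra replica is removed
replicas.remove(replicas[0])       # someone manually destroys one
replicas = reconcile(3, replicas)  # a replacement is created
```

The function never asks how the current state came about. It only compares the observed count with the desired count and closes the gap, which is why the same logic handles both initial rollout and repair.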

Online self-healing systems improve developer velocity because the time and energy you might otherwise have spent on operations and maintenance can instead be spent on developing and testing new features.

在线自修复系统可提高开发人员的开发速度,因为您原本可能要花费在运行和维护上的时间和精力,可以转而用于开发和测试新功能。

In a more advanced form of self-healing, there has been significant recent work in the operator paradigm for Kubernetes. With operators, more advanced logic needed to maintain, scale, and heal a specific piece of software (MySQL, for example) is encoded into an operator application that runs as a container in the cluster. The code in the operator is responsible for more targeted and advanced health detection and healing than can be achieved via Kubernetes’s generic self-healing. Often this is packaged up as “operators,” which are discussed in a later section.

在更高级的自愈形式中,Kubernetes 的操作员范例最近取得了重大进展。通过操作员,维护、扩展和修复特定软件(例如 MySQL)所需的更高级逻辑被编码到操作员应用程序中,作为集群中的一个容器运行。与 Kubernetes 的通用自愈功能相比,操作员中的代码负责更有针对性的高级健康检测和修复。这通常被打包成 “操作员”,将在后面的章节中讨论。

Scaling

As your product grows, it’s inevitable that you will need to scale both your software and the teams that develop it. Fortunately, Kubernetes can help with both of these goals. Kubernetes achieves scalability by favoring decoupled architectures.

随着产品的发展,您不可避免地需要扩展软件和开发团队。幸运的是,Kubernetes 可以帮助实现这两个目标。Kubernetes 通过支持解耦架构来实现可扩展性。

Decoupling

In a decoupled architecture, each component is separated from other components by defined APIs and service load balancers.

在解耦架构中,每个组件都通过定义的应用程序接口和服务负载平衡器与其他组件分离。

APIs and load balancers isolate each piece of the system from the others. APIs provide a buffer between implementer and consumer, and load balancers provide a buffer between running instances of each service.

应用程序接口和负载平衡器将系统的每一部分与其他部分隔离开来。应用程序接口在实现者和消费者之间提供缓冲,而负载平衡器则在每个服务的运行实例之间提供缓冲。

Decoupling components via load balancers makes it easy to scale the programs that make up your service, because increasing the size (and therefore the capacity) of the program can be done without adjusting or reconfiguring any of the other layers of your service.

通过负载平衡器解除组件之间的耦合,可以轻松扩展构成服务的程序,因为增加程序的大小(从而增加容量)无需调整或重新配置服务的任何其他层。
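As a rough illustration of how a load balancer hides the number of running instances from callers, consider this toy round-robin balancer in Python. `RoundRobinBalancer`, `scale_to`, `route`, and the backend names are hypothetical; this is a sketch of the idea, not how Kubernetes services are implemented.

```python
import itertools


class RoundRobinBalancer:
    """Toy load balancer: callers know one name, not the individual instances."""

    def __init__(self, instances):
        self._instances = list(instances)
        self._cycle = itertools.cycle(self._instances)

    def scale_to(self, instances):
        # Changing the set of backends does not change how callers use route().
        self._instances = list(instances)
        self._cycle = itertools.cycle(self._instances)

    def route(self, request):
        return f"{next(self._cycle)} handled {request}"


frontend = RoundRobinBalancer(["backend-1"])
before = [frontend.route(f"req-{i}") for i in range(2)]

frontend.scale_to(["backend-1", "backend-2", "backend-3"])
after = [frontend.route(f"req-{i}") for i in range(3)]
```

The calling code is identical before and after scaling. Only the balancer's list of backends changed, which is the sense in which capacity can grow without reconfiguring the other layers.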

Decoupling servers via APIs makes it easier to scale the development teams because each team can focus on a single, smaller microservice with a comprehensible surface area. Crisp APIs between microservices limit the amount of cross-team communication overhead required to build and deploy software. This communication overhead is often the major restricting factor when scaling teams.

通过应用程序接口对服务器进行解耦,可以更容易地扩展开发团队,因为每个团队都可以专注于一个具有可理解表面积的、更小的微服务。微服务之间简洁的 API 限制了构建和部署软件所需的跨团队通信开销。在扩展团队时,这种通信开销往往是主要的限制因素。

Easy Scaling for Applications and Clusters

Concretely, when you need to scale your service, the immutable, declarative nature of Kubernetes makes this scaling trivial to implement. Because your containers are immutable, and the number of replicas is merely a number in a declarative config, scaling your service upward is simply a matter of changing a number in a configuration file, asserting this new declarative state to Kubernetes, and letting it take care of the rest. Alternatively, you can set up autoscaling and let Kubernetes take care of it for you.

具体来说,当你需要扩展你的服务时,Kubernetes 的不可变性和声明性使得扩展变得轻而易举。因为你的容器是不可变的,副本的数量也只是声明式配置中的一个数字,所以向上扩展你的服务只需改变配置文件中的一个数字,向 Kubernetes 声明这个新的声明状态,剩下的就交给它处理吧。或者,你也可以设置自动扩展,让 Kubernetes 帮你处理。
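Both options can be sketched in Python. Manual scaling is a one-line change to the configuration; for autoscaling, the sketch below uses a simple proportional rule (replicas scale with the ratio of observed load to target load). This is a simplification under stated assumptions: `desired_replicas` and `config` are hypothetical names, and a production autoscaler would also apply bounds, tolerances, and smoothing that are omitted here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric):
    """Proportional scaling rule: grow or shrink replicas with observed load."""
    return math.ceil(current_replicas * current_metric / target_metric)


# Manual scaling: change one number in the declarative config.
config = {"replicas": 3}
config["replicas"] = 5

# Autoscaling: derive the number from a metric instead of editing it by hand.
# Example: 3 replicas at 90% average CPU with a 60% target.
config["replicas"] = desired_replicas(3, current_metric=90, target_metric=60)
```

Either way, the output is just a new value for the replica count in the declared state; making the running system match that number is left to the reconciliation machinery.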

Of course, that sort of scaling assumes that there are resources available in your cluster to consume. Sometimes you actually need to scale up the cluster itself. Again, Kubernetes makes this task easier.

当然,这种扩展的前提是集群中有可用的资源可供使用。有时,你实际上需要扩展集群本身。同样,Kubernetes 让这项任务变得更容易。

Because many machines in a cluster are entirely identical to other machines in that set and the applications themselves are decoupled from the details of the machine by containers, adding additional resources to the cluster is simply a matter of imaging a new machine of the same class and joining it into the cluster. This can be accomplished via a few simple commands or via a prebaked machine image.

由于集群中的许多机器与该集群中的其他机器完全相同,而且应用程序本身与机器的细节已通过容器解耦,因此向集群中添加额外资源只需将同类的新机器镜像化并将其加入集群即可。这可以通过几个简单的命令或预制的机器镜像来实现。

One of the challenges of scaling machine resources is predicting their use. If you are running on physical infrastructure, the time to obtain a new machine is measured in days or weeks. On both physical and cloud infrastructure, predicting future costs is difficult because it is hard to predict the growth and scaling needs of specific applications.

扩展机器资源的挑战之一是预测其使用情况。如果在物理基础设施上运行,获得新机器的时间以天或周为单位。在物理和云基础设施上,预测未来成本都很困难,因为很难预测特定应用程序的增长和扩展需求。

Kubernetes can simplify forecasting future compute costs. To understand why this is true, consider scaling up three teams, A, B, and C. Historically you have seen that each team’s growth is highly variable and thus hard to predict. If you are provisioning individual machines for each service, you have no choice but to forecast based on the maximum expected growth for each service, since machines dedicated to one team cannot be used for another team. If instead you use Kubernetes to decouple the teams from the specific machines they are using, you can forecast growth based on the aggregate growth of all three services.

Kubernetes 可以简化对未来计算成本的预测。要理解为什么会这样,请考虑扩大 A、B 和 C 三个团队的规模。从历史经验来看,每个团队的增长变化很大,因此很难预测。如果您为每个服务配置单个机器,您别无选择,只能根据每个服务的最大预期增长进行预测,因为专用于一个团队的机器不能用于另一个团队。如果使用 Kubernetes 将团队与他们使用的特定机器分离,就可以根据所有三个服务的总体增长情况进行预测。

Combining three variable growth rates into a single growth rate reduces statistical noise and produces a more reliable forecast of expected growth.

将三个可变增长率合并为一个单一增长率,可减少统计噪音,并得出更可靠的预期增长预测。
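A small worked example shows why pooling helps. The numbers are invented for illustration, and the calculation assumes the three teams' demands are statistically independent, so that their variances add. Under that assumption the pooled standard deviation grows with the square root of the number of teams, while per-team safety margins grow linearly.

```python
import math

# Hypothetical forecast for each team: expected demand and its standard
# deviation, both in machines. Independence between teams is assumed.
teams = {"A": (100, 30), "B": (100, 30), "C": (100, 30)}
SAFETY = 2  # provision for mean + 2 standard deviations

# Dedicated machines: each team needs its own safety margin.
dedicated = sum(mean + SAFETY * std for mean, std in teams.values())

# Shared cluster: means add, and for independent demands variances add,
# so the pooled standard deviation grows only with the square root.
pooled_mean = sum(mean for mean, _ in teams.values())
pooled_std = math.sqrt(sum(std ** 2 for _, std in teams.values()))
shared = pooled_mean + SAFETY * pooled_std

print(f"dedicated: {dedicated} machines, shared: {shared:.0f} machines")
```

With these made-up figures, dedicated provisioning needs 480 machines while the shared forecast needs roughly 404, for the same two-standard-deviation margin. If the teams' demands were strongly correlated, the saving would shrink.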

Furthermore, decoupling the teams from specific machines means that teams can share fractional parts of one another’s machines, reducing even further the overheads associated with forecasting growth of computing resources.

此外,将团队与特定机器脱钩意味着团队可以共享彼此机器的部分功能,从而进一步减少与预测计算资源增长相关的开销。

Scaling Development Teams with Microservices

As noted in a variety of research, the ideal team size is the “two-pizza team,” or roughly six to eight people. This group size often results in good knowledge sharing, fast decision making, and a common sense of purpose.

正如各种研究指出的那样,理想的团队规模是 “两个比萨饼团队”,即大约六到八个人。这种规模的团队通常能实现良好的知识共享、快速决策和共同目标感。

Larger teams tend to suffer from issues of hierarchy, poor visibility, and infighting, which hinder agility and success.

规模较大的团队往往存在等级制度、能见度低和内讧等问题,这些问题阻碍了团队的灵活性和成功。

However, many projects require significantly more resources to be successful and achieve their goals. Consequently, there is a tension between the ideal team size for agility and the necessary team size for the product’s end goals.

然而,许多项目需要更多的资源才能取得成功并实现目标。因此,实现敏捷性的理想团队规模与实现产品最终目标所需的团队规模之间存在着矛盾。

The common solution to this tension has been the development of decoupled, service-oriented teams that each build a single microservice. Each small team is responsible for the design and delivery of a service that is consumed by other small teams. The aggregation of all of these services ultimately provides the implementation of the overall product’s surface area.

解决这种紧张关系的常见方法是开发解耦的、面向服务的团队,每个团队构建一个微服务。每个小团队负责设计和交付一个服务,由其他小团队使用。所有这些服务的聚合最终提供了整个产品表面区域的实现。

Kubernetes provides numerous abstractions and APIs that make it easier to build these decoupled microservice architectures:

- Pods, or groups of containers, can group together container images developed by different teams into a single deployable unit.

- Kubernetes services provide load balancing, naming, and discovery to isolate one microservice from another.

- Namespaces provide isolation and access control, so that each microservice can control the degree to which other services interact with it.

- Ingress objects provide an easy-to-use frontend that can combine multiple microservices into a single externalized API surface area.

Kubernetes 提供了大量抽象和 API,使构建这些解耦的微服务架构变得更加容易:

- Pod 或容器组可以将不同团队开发的容器映像组合成一个可部署的单元。

- Kubernetes 服务提供负载平衡、命名和发现功能,将一个微服务与另一个微服务隔离开来。

- 命名空间提供隔离和访问控制,因此每个微服务都能控制其他服务与之交互的程度。

- Ingress 对象提供了一个易于使用的前端,可将多个微服务组合成一个外部化的 API 表面区域。

Finally, decoupling the application container image and machine means that different microservices can colocate on the same machine without interfering with one another, reducing the overhead and cost of microservice architectures. The health-checking and rollout features of Kubernetes guarantee a consistent approach to application rollout and reliability that ensures that a proliferation of microservice teams does not also result in a proliferation of different approaches to service production lifecycle and operations.

最后,解耦应用容器映像和机器意味着不同的微服务可以集中在同一台机器上,而不会相互干扰,从而降低了微服务架构的开销和成本。Kubernetes 的健康检查和推出功能保证了应用推出和可靠性的一致性,从而确保微服务团队的扩散不会导致服务生产生命周期和运营的不同方法的扩散。

Separation of Concerns for Consistency and Scaling

In addition to the consistency that Kubernetes brings to operations, the decoupling and separation of concerns produced by the Kubernetes stack lead to significantly greater consistency for the lower levels of your infrastructure. This enables you to scale infrastructure operations to manage many machines with a single small, focused team. We have talked at length about the decoupling of application container and machine/operating system (OS), but an important aspect of this decoupling is that the container orchestration API becomes a crisp contract that separates the responsibilities of the application operator from the cluster orchestration operator. We call this the “not my monkey, not my circus” line.

除了 Kubernetes 为运营带来的一致性之外,Kubernetes 协议栈产生的解耦和关注点分离也大大提高了基础架构底层的一致性。这使您能够扩展基础架构运营,只需一个专注的小团队就能管理众多机器。我们已经详细讨论了应用容器与机器/操作系统(OS)的解耦,但这种解耦的一个重要方面是容器编排 API 成为一种清晰的合约,将应用操作员的责任与集群编排操作员的责任分开。我们称之为 “不是我的猴子,不是我的马戏团”。

The application developer relies on the service-level agreement (SLA) delivered by the container orchestration API, without worrying about the details of how this SLA is achieved. Likewise, the container orchestration API reliability engineer focuses on delivering the orchestration API’s SLA without worrying about the applications that are running on top of it.

应用程序开发人员依赖容器协调 API 提供的服务级别协议 (SLA),而不用担心如何实现 SLA 的细节。同样,容器编排 API 的可靠性工程师也只关注提供编排 API 的 SLA,而不关心在其上运行的应用程序。

This decoupling of concerns means that a small team running a Kubernetes cluster can be responsible for supporting hundreds or even thousands of teams running applications within that cluster (Figure 1-1). Likewise, a small team can be responsible for dozens (or more) of clusters running around the world. It’s important to note that the same decoupling of containers and OS enables the OS reliability engineers to focus on the SLA of the individual machine’s OS. This becomes another line of separate responsibility, with the Kubernetes operators relying on the OS SLA, and the OS operators worrying solely about delivering that SLA. Again, this enables you to scale a small team of OS experts to a fleet of thousands of machines.

这种关注点的解耦意味着,一个运行 Kubernetes 集群的小型团队可以负责支持数百甚至数千个在该集群中运行应用程序的团队(图 1-1)。同样,一个小型团队也可以负责支持世界各地运行的数十个(或更多)集群。值得注意的是,容器与操作系统的解耦使得操作系统可靠性工程师能够专注于单个机器操作系统的 SLA。这将成为另一条独立的责任线,Kubernetes 操作员依赖于操作系统的 SLA,而操作系统操作员则只负责提供该 SLA。同样,这也使您能够将一个小规模的操作系统专家团队扩展到数千台机器的机群。

Of course, devoting even a small team to managing an OS is beyond the scale of many organizations. In these environments, a managed Kubernetes-as-a-Service (KaaS) provided by a public cloud provider is a great option. As Kubernetes has become increasingly ubiquitous, KaaS has become increasingly available as well, to the point where it is now offered on nearly every public cloud. Of course, using a KaaS has some limitations, since the operator makes decisions for you about how the Kubernetes clusters are built and configured. For example, many KaaS platforms disable alpha features because they can destabilize the managed cluster.

当然,即使投入一个小团队来管理操作系统,也超出了许多组织的规模。在这些环境中,公共云提供商提供的托管 Kubernetes 即服务(KaaS)是一个不错的选择。随着 Kubernetes 的普及,KaaS 的可用性也越来越高,现在几乎所有的公共云都提供这种服务。当然,使用 KaaS 有一些限制,因为运营商会替你决定如何构建和配置 Kubernetes 集群。例如,许多 KaaS 平台会禁用 alpha 功能,因为它们会破坏托管集群的稳定性。

In addition to a fully managed Kubernetes service, there is a thriving ecosystem of companies and projects that help to install and manage Kubernetes.

除了完全托管的 Kubernetes 服务外,还有一个由帮助安装和管理 Kubernetes 的公司和项目组成的繁荣生态系统。

There is a full spectrum of solutions between doing it “the hard way” and a fully managed service.

在 "硬碰硬 "和完全托管服务之间,有一整套解决方案。

Consequently, the decision of whether to use KaaS or manage it yourself (or something in between) is one each user needs to make based on the skills and demands of their situation. Often for small organizations, KaaS provides an easy-to-use solution that enables them to focus their time and energy on building the software to support their work rather than managing a cluster. For a larger organization that can afford a dedicated team for managing its Kubernetes cluster, it may make sense to manage it yourself since it enables greater flexibility in terms of cluster capabilities and operations.

因此,是使用 KaaS 还是自己管理(或介于两者之间),每个用户都需要根据自己的技能和需求来决定。通常情况下,对于小型企业来说,KaaS 提供了一个易于使用的解决方案,使他们能够将时间和精力集中在构建软件以支持他们的工作上,而不是管理集群。对于有能力聘请专门团队管理 Kubernetes 集群的大型企业来说,自己管理可能更有意义,因为这样可以在集群功能和操作方面实现更大的灵活性。

Abstracting Your Infrastructure

The goal of the public cloud is to provide easy-to-use, self-service infrastructure for developers to consume. However, too often cloud APIs are oriented around mirroring the infrastructure that IT expects, not the concepts (e.g., “virtual machines” instead of “applications”) that developers want to consume.

公共云的目标是为开发人员提供易于使用的自助式基础设施。然而,云应用程序接口往往是以 IT 期望的基础设施为导向,而不是以开发人员希望使用的概念(如 "虚拟机 "而非 “应用程序”)为导向。

Additionally, in many cases the cloud comes with particular details in implementation or services that are specific to the cloud provider. Consuming these APIs directly makes it difficult to run your application in multiple environments, or spread between cloud and physical environments.

此外,在许多情况下,云在实施或服务方面都有特定于云提供商的细节。直接使用这些应用程序接口会导致应用程序难以在多个环境中运行,或在云环境和物理环境之间传播。

The move to application-oriented container APIs like Kubernetes has two concrete benefits. First, as we described previously, it separates developers from specific machines. This makes the machine-oriented IT role easier, since machines can simply be added in aggregate to scale the cluster, and in the context of the cloud it also enables a high degree of portability since developers are consuming a higher-level API that is implemented in terms of the specific cloud infrastructure APIs.

转向 Kubernetes 等面向应用的容器 API 有两个具体好处。首先,正如我们之前所述,它将开发人员与特定的机器分离开来。这使得面向机器的 IT 角色变得更容易,因为只需添加机器总量就能扩展集群,而且在云环境下,它还能实现高度的可移植性,因为开发人员使用的是以特定云基础设施 API 实现的更高级 API。

When your developers build their applications in terms of container images and deploy them in terms of portable Kubernetes APIs, transferring your application between environments, or even running in hybrid environments, is simply a matter of sending the declarative config to a new cluster.

当您的开发人员以容器映像的方式构建应用程序,并以可移植的 Kubernetes API 的方式进行部署时,在不同环境之间传输应用程序,甚至在混合环境中运行,只需将声明式配置发送到新的集群即可。

Kubernetes has a number of plug-ins that can abstract you from a particular cloud. For example, Kubernetes services know how to create load balancers on all major public clouds as well as several different private and physical infrastructures.

Kubernetes 有许多插件,可以将你从特定云中抽象出来。例如,Kubernetes 服务知道如何在所有主要公共云以及几种不同的私有和物理基础设施上创建负载平衡器。

Likewise, Kubernetes PersistentVolumes and PersistentVolumeClaims can be used to abstract your applications away from specific storage implementations. Of course, to achieve this portability you need to avoid cloud-managed services (e.g., Amazon’s DynamoDB, Azure’s CosmosDB, or Google’s Cloud Spanner), which means that you will be forced to deploy and manage open source storage solutions like Cassandra, MySQL, or MongoDB.

同样,Kubernetes PersistentVolumes 和 PersistentVolumeClaims 也可用于将你的应用从特定的存储实现中抽象出来。当然,要实现这种可移植性,您需要避免使用云管理服务(如亚马逊的 DynamoDB、Azure 的 CosmosDB 或 Google 的 Cloud Spanner),这意味着您将被迫部署和管理 Cassandra、MySQL 或 MongoDB 等开源存储解决方案。

Putting it all together, building on top of Kubernetes’s application-oriented abstractions ensures that the effort you put into building, deploying, and managing your application is truly portable across a wide variety of environments.

总而言之,在 Kubernetes 面向应用的抽象之上进行构建,可确保您在构建、部署和管理应用程序方面所付出的努力在各种环境中真正实现可移植性。

Efficiency

In addition to the developer and IT management benefits that containers and Kubernetes provide, there is also a concrete economic benefit to the abstraction. Because developers no longer think in terms of machines, their applications can be colocated on the same machines without impacting the applications themselves. This means that tasks from multiple users can be packed tightly onto fewer machines.

除了容器和 Kubernetes 为开发人员和 IT 管理带来的好处外,这种抽象还能带来具体的经济效益。由于开发人员不再以机器为单位进行思考,他们的应用程序可以在不影响应用程序本身的情况下在同一台机器上进行共享。这意味着,来自多个用户的任务可以紧凑地打包到更少的机器上。

Efficiency can be measured by the ratio of the useful work performed by a machine or process to the total amount of energy spent doing so.

效率可以用一台机器或一道工序完成的有用功与完成有用功所消耗的总能量之比来衡量。

When it comes to deploying and managing applications, many of the available tools and processes (e.g., bash scripts, apt updates, or imperative configuration management) are somewhat inefficient.

在部署和管理应用程序时,许多可用的工具和流程(如 bash 脚本、apt 更新或命令式配置管理)都有些效率低下。

When discussing efficiency it’s often helpful to think of both the cost of running a server and the human cost required to manage it.

在讨论效率问题时,最好同时考虑服务器的运行成本和管理服务器所需的人力成本。

Running a server incurs a cost based on power usage, cooling requirements, data-center space, and raw compute power. Once a server is racked and powered on (or clicked and spun up), the meter literally starts running. Any idle CPU time is money wasted. Thus, it becomes part of the system administrator’s responsibilities to keep utilization at acceptable levels, which requires ongoing management.

服务器的运行成本取决于用电量、冷却要求、数据中心空间和原始计算能力。一旦服务器上架并接通电源(或点击并启动),电表就开始运行。任何闲置的 CPU 时间都是在浪费金钱。因此,系统管理员有责任将利用率保持在可接受的水平,这就需要持续的管理。

This is where containers and the Kubernetes workflow come in.

这就是容器和 Kubernetes 工作流程的作用所在。

Kubernetes provides tools that automate the distribution of applications across a cluster of machines, ensuring higher levels of utilization than are possible with traditional tooling.

Kubernetes 提供的工具可在机器集群中自动分发应用程序,确保比传统工具更高的利用率。
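To make the utilization argument concrete, here is a sketch of packing workloads onto machines with a first-fit-decreasing heuristic. This is a textbook bin-packing heuristic chosen for illustration, not the algorithm the Kubernetes scheduler uses; `first_fit_decreasing`, the CPU request values, and the 4-core machine size are all assumptions.

```python
def first_fit_decreasing(cpu_requests, machine_capacity):
    """Pack workloads onto as few machines as this simple heuristic finds."""
    machines = []  # each machine is a list of the CPU requests placed on it
    for request in sorted(cpu_requests, reverse=True):
        for machine in machines:
            if sum(machine) + request <= machine_capacity:
                machine.append(request)
                break
        else:
            machines.append([request])  # nothing fits: start a new machine
    return machines


# Hypothetical CPU requests (in cores) from several teams' applications.
requests = [2, 1, 3, 1, 2, 1, 2]
one_app_per_machine = len(requests)
packed = first_fit_decreasing(requests, machine_capacity=4)
```

With one application per machine, these seven workloads would occupy seven machines, most of them largely idle. Packed together they fit on three fully used 4-core machines.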

A further increase in efficiency comes from the fact that a developer’s test environment can be quickly and cheaply created as a set of containers running in a personal view of a shared Kubernetes cluster (using a feature called namespaces). In the past, turning up a test cluster for a developer might have meant turning up three machines.

开发人员的测试环境可以快速、廉价地创建为一组在共享 Kubernetes 集群的个人视图中运行的容器(使用名为命名空间的功能),从而进一步提高了效率。在过去,为开发人员创建一个测试集群可能意味着要启动三台机器。

With Kubernetes it is simple to have all developers share a single test cluster, aggregating their usage onto a much smaller set of machines.

有了 Kubernetes,让所有开发人员共享一个测试集群就变得非常简单,只需将他们的使用量聚合到一组更小的机器上。

Reducing the overall number of machines used in turn drives up the efficiency of each system: since more of the resources (CPU, RAM, etc.) on each individual machine are used, the overall cost of each container becomes much lower.

减少使用的机器总数反过来又会提高每个系统的效率:因为每台机器上的更多资源(CPU、内存等)被使用,每个容器的总体成本就会大大降低。

Reducing the cost of development instances in your stack enables development practices that might previously have been cost-prohibitive.

降低堆栈中开发实例的成本,可以实现以前可能成本高昂的开发实践。

For example, with your application deployed via Kubernetes it becomes conceivable to deploy and test every single commit contributed by every developer throughout your entire stack.

例如,通过 Kubernetes 部署应用程序后,就可以部署和测试整个堆栈中每个开发人员提交的每个提交。

When the cost of each deployment is measured in terms of a small number of containers, rather than multiple complete virtual machines (VMs), the cost you incur for such testing is dramatically lower.

如果每次部署的成本是以少量容器而不是多个完整的虚拟机(VM)来衡量,那么这种测试的成本就会大大降低。

Returning to the original value of Kubernetes, this increased testing also increases velocity, since you have strong signals as to the reliability of your code as well as the granularity of detail required to quickly identify where a problem may have been introduced.

回到 Kubernetes 的最初价值,测试的增加也提高了速度,因为您可以获得代码可靠性的强烈信号,以及快速识别问题所在所需的细节粒度。

Summary

Kubernetes was built to radically change the way that applications are built and deployed in the cloud. Fundamentally, it was designed to give developers more velocity, efficiency, and agility. We hope the preceding sections have given you an idea of why you should deploy your applications using Kubernetes. Now that you are convinced of that, the following chapters will teach you how to deploy your application.

Kubernetes 的出现从根本上改变了在云中构建和部署应用程序的方式。从根本上说,它旨在为开发人员提供更高的速度、效率和灵活性。我们希望前面的章节能让您了解为什么要使用 Kubernetes 部署应用程序。现在,您已对此深信不疑,接下来的章节将教您如何部署应用程序。