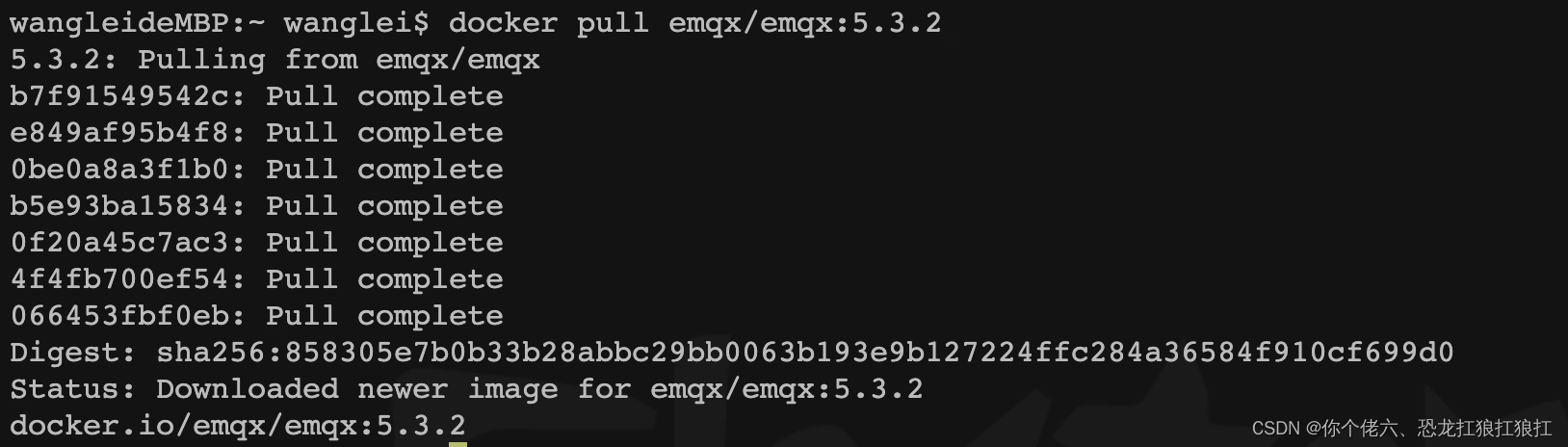

Test environment:

```
torch.__version__
Out[3]: '1.12.1+cu113'
```

First, a quick test:

```python
import torch
from torchvision.transforms import functional as F
from torch.autograd import Function

img = torch.randn(1, 3, 224, 224)
startpoints = torch.FloatTensor([[0., 0.], [0., 224.], [224., 0.], [224., 224.]])
endpoints = torch.FloatTensor([[0., 0.], [0., 200.], [200., 0.], [200., 200.]])

t = F.perspective(img, startpoints, endpoints)
print(t.requires_grad)
```

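The output carries no gradient: `t.requires_grad` prints `False`. (In this first test neither `img` nor the point tensors have `requires_grad=True`, so `False` is expected either way; the second test below sets `requires_grad=True` on the points.)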

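Looking at the source of `torchvision.transforms.functional.perspective`: it is the public entry point of the transform and takes care of input types (PIL image vs. tensor) and similar housekeeping.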

```python
def perspective(
    img: Tensor,
    startpoints: List[List[int]],
    endpoints: List[List[int]],
    interpolation: InterpolationMode = InterpolationMode.BILINEAR,
    fill: Optional[List[float]] = None,
) -> Tensor:
    """Perform perspective transform of the given image.
    If the image is torch Tensor, it is expected
    to have [..., H, W] shape, where ... means an arbitrary number of leading dimensions.

    Args:
        img (PIL Image or Tensor): Image to be transformed.
        startpoints (list of list of ints): List containing four lists of two integers corresponding to four corners
            ``[top-left, top-right, bottom-right, bottom-left]`` of the original image.
        endpoints (list of list of ints): List containing four lists of two integers corresponding to four corners
            ``[top-left, top-right, bottom-right, bottom-left]`` of the transformed image.
        interpolation (InterpolationMode): Desired interpolation enum defined by
            :class:`torchvision.transforms.InterpolationMode`. Default is ``InterpolationMode.BILINEAR``.
            If input is Tensor, only ``InterpolationMode.NEAREST``, ``InterpolationMode.BILINEAR`` are supported.
            For backward compatibility integer values (e.g. ``PIL.Image[.Resampling].NEAREST``) are still accepted,
            but deprecated since 0.13 and will be removed in 0.15. Please use InterpolationMode enum.
        fill (sequence or number, optional): Pixel fill value for the area outside the transformed
            image. If given a number, the value is used for all bands respectively.

            .. note::
                In torchscript mode single int/float value is not supported, please use a sequence
                of length 1: ``[value, ]``.

    Returns:
        PIL Image or Tensor: transformed Image.
    """
    if not torch.jit.is_scripting() and not torch.jit.is_tracing():
        _log_api_usage_once(perspective)

    coeffs = _get_perspective_coeffs(startpoints, endpoints)

    # Backward compatibility with integer value
    if isinstance(interpolation, int):
        warnings.warn(
            "Argument 'interpolation' of type int is deprecated since 0.13 and will be removed in 0.15. "
            "Please use InterpolationMode enum."
        )
        interpolation = _interpolation_modes_from_int(interpolation)

    if not isinstance(interpolation, InterpolationMode):
        raise TypeError("Argument interpolation should be a InterpolationMode")

    if not isinstance(img, torch.Tensor):
        pil_interpolation = pil_modes_mapping[interpolation]
        return F_pil.perspective(img, coeffs, interpolation=pil_interpolation, fill=fill)

    return F_t.perspective(img, coeffs, interpolation=interpolation.value, fill=fill)
```
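`perspective` turns the four point pairs into eight coefficients via `_get_perspective_coeffs` before anything touches the image, and the coefficients arrive downstream as `List[float]`. A minimal sketch of a differentiable alternative, assuming the mapping direction implied by `_perspective_grid` (each output pixel is mapped back to an input pixel, so the coefficients send endpoints to startpoints). `diff_perspective_coeffs` is a hypothetical helper, not a torchvision API; it solves the exactly determined 8×8 system with `torch.linalg.solve` so the result stays a tensor attached to the points.

```python
import torch


def diff_perspective_coeffs(startpoints: torch.Tensor, endpoints: torch.Tensor) -> torch.Tensor:
    """Hypothetical differentiable coefficient solve (not torchvision's function).

    Finds (a, b, c, d, e, f, g, h) such that each endpoint (x, y) maps to its
    startpoint (u, v):
        u = (a*x + b*y + c) / (g*x + h*y + 1)
        v = (d*x + e*y + f) / (g*x + h*y + 1)
    """
    # Solve in float64: the matrix mixes entries of order 1 and order 1e4-1e5.
    start = startpoints.to(torch.float64)
    end = endpoints.to(torch.float64)
    x, y = end[:, 0], end[:, 1]
    u, v = start[:, 0], start[:, 1]
    one, zero = torch.ones_like(x), torch.zeros_like(x)
    # Multiplying out the denominator gives two linear equations per point pair.
    rows_u = torch.stack([x, y, one, zero, zero, zero, -u * x, -u * y], dim=1)
    rows_v = torch.stack([zero, zero, zero, x, y, one, -v * x, -v * y], dim=1)
    a_matrix = torch.stack([rows_u, rows_v], dim=1).reshape(8, 8)  # interleave u/v rows
    b_vector = torch.stack([u, v], dim=1).reshape(8)               # u0, v0, u1, v1, ...
    coeffs = torch.linalg.solve(a_matrix, b_vector)
    return coeffs.to(startpoints.dtype)


startpoints = torch.tensor([[0., 0.], [0., 224.], [224., 0.], [224., 224.]], requires_grad=True)
endpoints = torch.tensor([[0., 0.], [0., 200.], [200., 0.], [200., 200.]])
coeffs = diff_perspective_coeffs(startpoints, endpoints)
print(coeffs)  # approximately [1.12, 0, 0, 0, 1.12, 0, 0, 0]
coeffs.sum().backward()
print(startpoints.grad is not None)  # True: the points are part of the graph
```

With four point pairs the system has a unique solution (as long as no three points are collinear), so a plain solve is enough; the values agree with the `coeffs` printout further down.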

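Next, `torchvision.transforms.functional_tensor.perspective`, the tensor implementation behind the functional interface. It:

- calls `_perspective_grid` to generate the sampling grid for the perspective transform;
- calls `_apply_grid_transform` to apply that grid to the original image, which performs the warp.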

```python
def perspective(
    img: Tensor, perspective_coeffs: List[float], interpolation: str = "bilinear", fill: Optional[List[float]] = None
) -> Tensor:

    if not (isinstance(img, torch.Tensor)):
        raise TypeError("Input img should be Tensor.")

    _assert_image_tensor(img)

    _assert_grid_transform_inputs(
        img,
        matrix=None,
        interpolation=interpolation,
        fill=fill,
        supported_interpolation_modes=["nearest", "bilinear"],
        coeffs=perspective_coeffs,
    )

    ow, oh = img.shape[-1], img.shape[-2]
    dtype = img.dtype if torch.is_floating_point(img) else torch.float32
    grid = _perspective_grid(perspective_coeffs, ow=ow, oh=oh, dtype=dtype, device=img.device)
    return _apply_grid_transform(img, grid, interpolation, fill=fill)
```

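Here `_apply_grid_transform` warps the image using the given grid: it casts the input image to the grid's dtype, samples it with `grid_sample`, and, when `fill` is given, paints the area outside the source image with the fill color.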

```python
def _apply_grid_transform(img: Tensor, grid: Tensor, mode: str, fill: Optional[List[float]]) -> Tensor:

    img, need_cast, need_squeeze, out_dtype = _cast_squeeze_in(img, [grid.dtype])

    if img.shape[0] > 1:
        # Apply same grid to a batch of images
        grid = grid.expand(img.shape[0], grid.shape[1], grid.shape[2], grid.shape[3])

    # Append a dummy mask for customized fill colors, should be faster than grid_sample() twice
    if fill is not None:
        dummy = torch.ones((img.shape[0], 1, img.shape[2], img.shape[3]), dtype=img.dtype, device=img.device)
        img = torch.cat((img, dummy), dim=1)

    img = grid_sample(img, grid, mode=mode, padding_mode="zeros", align_corners=False)

    # Fill with required color
    if fill is not None:
        mask = img[:, -1:, :, :]  # N * 1 * H * W
        img = img[:, :-1, :, :]  # N * C * H * W
        mask = mask.expand_as(img)
        len_fill = len(fill) if isinstance(fill, (tuple, list)) else 1
        fill_img = torch.tensor(fill, dtype=img.dtype, device=img.device).view(1, len_fill, 1, 1).expand_as(img)
        if mode == "nearest":
            mask = mask < 0.5
            img[mask] = fill_img[mask]
        else:  # 'bilinear'
            img = img * mask + (1.0 - mask) * fill_img

    img = _cast_squeeze_out(img, need_cast, need_squeeze, out_dtype)
    return img
```
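The fill handling above appends an all-ones channel before `grid_sample`, so a single sampling pass yields both the warped image and a coverage mask. A minimal sketch of the bilinear branch only, using the public `torch.nn.functional.grid_sample`; `fill_with_mask` is a hypothetical name, and the dtype casting and the nearest-mode branch are left out. It also checks that gradients reach the sampling grid, which is what a differentiable perspective transform relies on.

```python
import torch
import torch.nn.functional as F


def fill_with_mask(img: torch.Tensor, grid: torch.Tensor, fill: list) -> torch.Tensor:
    """Hypothetical sketch of the dummy-mask fill trick (bilinear branch only)."""
    n, _, h, w = img.shape
    # Extra all-ones channel: after sampling it tells how much of each output pixel
    # came from inside the source image (1 = fully inside, 0 = fully outside).
    dummy = torch.ones((n, 1, h, w), dtype=img.dtype, device=img.device)
    out = F.grid_sample(torch.cat((img, dummy), dim=1), grid, mode="bilinear",
                        padding_mode="zeros", align_corners=False)
    mask = out[:, -1:].expand_as(out[:, :-1])
    out = out[:, :-1]
    fill_img = torch.tensor(fill, dtype=img.dtype, device=img.device).view(1, -1, 1, 1)
    # Blend: source pixels where the mask is 1, fill colour where it is 0.
    return out * mask + (1.0 - mask) * fill_img


img = torch.arange(16.0).view(1, 1, 4, 4)
inside = torch.zeros(1, 2, 2, 2, requires_grad=True)  # every output pixel samples the image centre
outside = torch.full((1, 2, 2, 2), 2.0)               # normalized coordinates beyond [-1, 1]
out_inside = fill_with_mask(img, inside, [9.0])    # all 7.5: mean of the four centre pixels
out_outside = fill_with_mask(img, outside, [9.0])  # all 9.0: nothing sampled, so mask = 0
out_inside.sum().backward()
print(inside.grad is not None)  # True: gradients reach the grid
```

Because the bilinear blend is built from ordinary tensor ops, it stays differentiable; the nearest branch in torchvision uses a boolean mask and in-place assignment instead.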

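About grid generation:

`_perspective_grid` builds the perspective sampling grid from the transform coefficients (`coeffs`) and the output width (`ow`) and height (`oh`). `theta1` holds the numerators of the transformed x and y coordinates and `theta2` their shared denominator. A base grid (`base_grid`) holds the homogeneous coordinates (x, y, 1) of every output pixel center; multiplying it by `theta1` and `theta2` with `bmm` and dividing the two results gives each pixel's source position, rescaled to the [-1, 1] range that `grid_sample` expects.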
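Written out, the code below computes the following for the output pixel in column $x$ and row $y$, with $c_0,\dots,c_7$ the entries of `coeffs`, $W$ = `ow` and $H$ = `oh`. The inner fraction is the Pillow formula quoted in the code comments, evaluated at pixel centers; the outer $2/W$ (or $2/H$) and $-1$ map pixel coordinates to the normalized range used by `grid_sample` with `align_corners=False`:

$$
\begin{aligned}
\tilde{x} &= x + 0.5, \qquad \tilde{y} = y + 0.5,\\
g_x &= \frac{2}{W}\cdot\frac{c_0\tilde{x} + c_1\tilde{y} + c_2}{c_6\tilde{x} + c_7\tilde{y} + 1} - 1,\\
g_y &= \frac{2}{H}\cdot\frac{c_3\tilde{x} + c_4\tilde{y} + c_5}{c_6\tilde{x} + c_7\tilde{y} + 1} - 1.
\end{aligned}
$$

Every step is a tensor operation, so $(g_x, g_y)$ would be differentiable in $c_0,\dots,c_7$ if the coefficients entered as a tensor rather than being copied into a fresh `torch.tensor([...])`.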

```python
def _perspective_grid(coeffs: List[float], ow: int, oh: int, dtype: torch.dtype, device: torch.device) -> Tensor:
    # https://github.com/python-pillow/Pillow/blob/4634eafe3c695a014267eefdce830b4a825beed7/src/libImaging/Geometry.c#L394
    #
    # x_out = (coeffs[0] * x + coeffs[1] * y + coeffs[2]) / (coeffs[6] * x + coeffs[7] * y + 1)
    # y_out = (coeffs[3] * x + coeffs[4] * y + coeffs[5]) / (coeffs[6] * x + coeffs[7] * y + 1)
    #
    theta1 = torch.tensor(
        [[[coeffs[0], coeffs[1], coeffs[2]], [coeffs[3], coeffs[4], coeffs[5]]]], dtype=dtype, device=device
    )
    theta2 = torch.tensor([[[coeffs[6], coeffs[7], 1.0], [coeffs[6], coeffs[7], 1.0]]], dtype=dtype, device=device)

    d = 0.5
    base_grid = torch.empty(1, oh, ow, 3, dtype=dtype, device=device)
    x_grid = torch.linspace(d, ow * 1.0 + d - 1.0, steps=ow, device=device)
    base_grid[..., 0].copy_(x_grid)
    y_grid = torch.linspace(d, oh * 1.0 + d - 1.0, steps=oh, device=device).unsqueeze_(-1)
    base_grid[..., 1].copy_(y_grid)
    base_grid[..., 2].fill_(1)

    rescaled_theta1 = theta1.transpose(1, 2) / torch.tensor([0.5 * ow, 0.5 * oh], dtype=dtype, device=device)
    output_grid1 = base_grid.view(1, oh * ow, 3).bmm(rescaled_theta1)
    output_grid2 = base_grid.view(1, oh * ow, 3).bmm(theta2.transpose(1, 2))

    output_grid = output_grid1 / output_grid2 - 1.0
    return output_grid.view(1, oh, ow, 2)
```
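A sketch of the same grid computation with `coeffs` taken as a tensor, which is what the two-statement change further down is aiming for. `diff_perspective_grid` is a hypothetical name; the body mirrors the torchvision code above, except that `theta1`/`theta2` are built by slicing and concatenating the coefficient tensor instead of `torch.tensor([...])`, so autograd can follow them. It assumes `coeffs` is a 1-D tensor of 8 floats.

```python
import torch


def diff_perspective_grid(coeffs: torch.Tensor, ow: int, oh: int) -> torch.Tensor:
    """Hypothetical differentiable variant of _perspective_grid (coeffs: 1-D tensor of 8)."""
    dtype, device = coeffs.dtype, coeffs.device
    # Slicing/cat keep the autograd history of coeffs (torch.tensor([...]) would not).
    theta1 = coeffs[:6].reshape(1, 2, 3)
    theta2 = torch.cat([coeffs[6:], coeffs.new_ones(1)]).reshape(1, 1, 3).expand(1, 2, 3)

    # Homogeneous pixel-centre coordinates (x + 0.5, y + 0.5, 1) of the output image.
    d = 0.5
    base_grid = torch.empty(1, oh, ow, 3, dtype=dtype, device=device)
    base_grid[..., 0].copy_(torch.linspace(d, ow + d - 1.0, steps=ow, dtype=dtype, device=device))
    base_grid[..., 1].copy_(torch.linspace(d, oh + d - 1.0, steps=oh, dtype=dtype, device=device).unsqueeze(-1))
    base_grid[..., 2].fill_(1)

    scale = torch.tensor([0.5 * ow, 0.5 * oh], dtype=dtype, device=device)
    flat = base_grid.view(1, oh * ow, 3)
    numerators = flat.bmm(theta1.transpose(1, 2) / scale)
    denominators = flat.bmm(theta2.transpose(1, 2))
    return (numerators / denominators - 1.0).view(1, oh, ow, 2)


c = torch.tensor([1., 0., 0., 0., 1., 0., 0., 0.], dtype=torch.float64, requires_grad=True)
grid = diff_perspective_grid(c, ow=5, oh=4)  # identity coefficients
grid.sum().backward()
print(c.grad is not None)  # True
```

With identity coefficients the result should match `torch.nn.functional.affine_grid` for an identity affine matrix with `align_corners=False`, which is a convenient sanity check.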

Exploration: the Pillow source referenced in the comment above:

https://github.com/python-pillow/Pillow/blob/4634eafe3c695a014267eefdce830b4a825beed7/src/libImaging/Geometry.c#L394

A differentiable approach

https://github.com/pytorch/vision/pull/7925

Modify `_perspective_grid` in `torchvision.transforms.functional_tensor`:

```python
# Original two statements in _perspective_grid:
theta1 = torch.tensor(
    [[[coeffs[0], coeffs[1], coeffs[2]], [coeffs[3], coeffs[4], coeffs[5]]]], dtype=dtype, device=device
)
theta2 = torch.tensor([[[coeffs[6], coeffs[7], 1.0], [coeffs[6], coeffs[7], 1.0]]], dtype=dtype, device=device)

# Replaced with the following, in an attempt to make them differentiable:
theta1 = torch.reshape(coeffs[:6], (1, 2, 3)).to(dtype=dtype, device=device)
theta2 = torch.reshape(torch.cat(
    (coeffs[6:], torch.ones(1, device=coeffs.device))), (1, 1, 3)).expand(-1, 2, -1).to(dtype=dtype, device=device)
```

After the change, it fails with:

```
C:\conda\envs\CUDA110_torch\lib\site-packages\torchvision\transforms\functional.py:629: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).
  b_matrix = torch.tensor(startpoints, dtype=torch.float).view(8)
Traceback (most recent call last):
  File "C:\conda\envs\CUDA110_torch\lib\site-packages\IPython\core\interactiveshell.py", line 3505, in run_code
    exec(code_obj, self.user_global_ns, self.user_ns)
  File "<ipython-input-3-087b1cddce7b>", line 1, in <module>
    F.perspective(img, startpoints, endpoints)
  File "C:\conda\envs\CUDA110_torch\lib\site-packages\torchvision\transforms\functional.py", line 688, in perspective
    return F_t.perspective(img, coeffs, interpolation=interpolation.value, fill=fill)
  File "C:\conda\envs\CUDA110_torch\lib\site-packages\torchvision\transforms\functional_tensor.py", line 748, in perspective
    grid = _perspective_grid(perspective_coeffs, ow=ow, oh=oh, dtype=dtype, device=img.device)
  File "C:\conda\envs\CUDA110_torch\lib\site-packages\torchvision\transforms\functional_tensor.py", line 709, in _perspective_grid
    theta1 = torch.reshape(coeffs[:6], (1, 2, 3)).to(dtype=dtype, device=device)
TypeError: reshape(): argument 'input' (position 1) must be Tensor, not list
```

Changed it to:

```python
theta1 = torch.reshape(torch.tensor(coeffs[:6]), (1, 2, 3)).to(dtype=dtype, device=device)
```

This fails with:

```
C:\conda\envs\CUDA110_torch\python.exe D:\myGit\ipad_attack\mydata\test_torch自带的transform丢失梯度问题.py
C:\conda\envs\CUDA110_torch\lib\site-packages\torchvision\transforms\functional.py:629: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).
  b_matrix = torch.tensor(startpoints, dtype=torch.float).view(8)
Traceback (most recent call last):
  File "D:\myGit\ipad_attack\mydata\test_torch自带的transform丢失梯度问题.py", line 30, in <module>
    t = F.perspective(img, startpoints, endpoints)
  File "C:\conda\envs\CUDA110_torch\lib\site-packages\torchvision\transforms\functional.py", line 688, in perspective
    return F_t.perspective(img, coeffs, interpolation=interpolation.value, fill=fill)
  File "C:\conda\envs\CUDA110_torch\lib\site-packages\torchvision\transforms\functional_tensor.py", line 748, in perspective
    grid = _perspective_grid(perspective_coeffs, ow=ow, oh=oh, dtype=dtype, device=img.device)
  File "C:\conda\envs\CUDA110_torch\lib\site-packages\torchvision\transforms\functional_tensor.py", line 711, in _perspective_grid
    (coeffs[6:], torch.ones(1, device=coeffs.device))), (1, 1, 3)).expand(-1, 2, -1).to(dtype=dtype, device=device)
AttributeError: 'list' object has no attribute 'device'

Process finished with exit code 1
```

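Printing `coeffs` shows that by the time it reaches `_perspective_grid` it is a plain Python list of floats, not a tensor:

```
[1.1200001239776611,
 2.2111888142717362e-07,
 -2.181137097068131e-05,
 -2.3566984452827455e-07,
 1.1200006008148193,
 9.300353667640593e-06,
 -5.271598157996493e-10,
 1.9876917889405377e-09]
```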
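The list printout and the `UserWarning` in both logs (raised at `b_matrix = torch.tensor(startpoints, ...)` in `functional.py`) point at two places where autograd loses track of the points: `torch.tensor(...)` copy-constructs a new leaf tensor, and turning a tensor into a `List[float]` (the annotated type of the coefficients in the code above) yields plain Python floats. A minimal illustration; `res` is a hypothetical stand-in for the solved coefficients, not torchvision's variable.

```python
import torch

startpoints = torch.tensor([[0., 0.], [0., 224.], [224., 0.], [224., 224.]], requires_grad=True)

# Cut point 1: copy-constructing with torch.tensor() (the line behind the
# UserWarning in the logs) returns a new leaf tensor that does not require grad.
b_matrix = torch.tensor(startpoints, dtype=torch.float).view(8)

# Cut point 2: .tolist() converts a tensor into plain Python floats.
res = startpoints.view(8) * 1.0  # hypothetical stand-in that still tracks gradients
coeffs = res.tolist()

print(b_matrix.requires_grad, res.requires_grad, type(coeffs[0]).__name__)  # False True float
```

Either step alone is enough to detach the output from `startpoints`, so patching only `_perspective_grid` cannot restore the gradient.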

Changed it to:

Running the test code:

```python
import torch
from torchvision.transforms import functional as F
from torch.autograd import Function

img = torch.randn(1, 3, 224, 224)
startpoints = torch.tensor([[0., 0.], [0., 224.], [224., 0.], [224., 224.]], requires_grad=True)
endpoints = torch.tensor([[0., 0.], [0., 200.], [200., 0.], [200., 200.]], requires_grad=True)
print(startpoints.requires_grad)

t = F.perspective(img, startpoints, endpoints)
y = t**3
# y is not a scalar: once t has a grad_fn, this call needs an explicit gradient
# argument or a reduction first, e.g. y.sum().backward()
y.backward()
print(t.requires_grad, y)
```

Result:

```
C:\conda\envs\CUDA110_torch\lib\site-packages\torchvision\transforms\functional.py:629: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).
  b_matrix = torch.tensor(startpoints, dtype=torch.float).view(8)
Traceback (most recent call last):
  File "D:\myGit\ipad_attack\mydata\test_torch自带的transform丢失梯度问题.py", line 33, in <module>
    t.backward()
  File "C:\conda\envs\CUDA110_torch\lib\site-packages\torch\_tensor.py", line 396, in backward
    torch.autograd.backward(self, gradient, retain_graph, create_graph, inputs=inputs)
  File "C:\conda\envs\CUDA110_torch\lib\site-packages\torch\autograd\__init__.py", line 173, in backward
    Variable._execution_engine.run_backward(  # Calls into the C++ engine to run the backward pass
RuntimeError: element 0 of tensors does not require grad and does not have a grad_fn
```

The same error in an IPython/Jupyter session:

```
---------------------------------------------------------------------------
RuntimeError                              Traceback (most recent call last)
Cell In[12], line 11
      9 t = F.perspective(img, startpoints, endpoints)
     10 y = t**3
---> 11 y.backward()
     12 print(t.requires_grad,y)

File C:\conda\envs\CUDA110_torch\lib\site-packages\torch\_tensor.py:396, in Tensor.backward(self, gradient, retain_graph, create_graph, inputs)
    387 if has_torch_function_unary(self):
    388     return handle_torch_function(
    389         Tensor.backward,
    390         (self,),
   (...)
    394         create_graph=create_graph,
    395         inputs=inputs)
--> 396 torch.autograd.backward(self, gradient, retain_graph, create_graph, inputs=inputs)

File C:\conda\envs\CUDA110_torch\lib\site-packages\torch\autograd\__init__.py:173, in backward(tensors, grad_tensors, retain_graph, create_graph, grad_variables, inputs)
    168     retain_graph = create_graph
    170 # The reason we repeat same the comment below is that
    171 # some Python versions print out the first line of a multi-line function
    172 # calls in the traceback and some print out the last line
--> 173 Variable._execution_engine.run_backward(  # Calls into the C++ engine to run the backward pass
    174     tensors, grad_tensors_, retain_graph, create_graph, inputs,
    175     allow_unreachable=True, accumulate_grad=True)

RuntimeError: element 0 of tensors does not require grad and does not have a grad_fn
```
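For comparison with the failing test above, a self-contained sketch of a perspective warp that stays differentiable with respect to the corner points. It is not torchvision's implementation and not necessarily what the linked PR does; it assumes a float NCHW image and point tensors on the same device, bilinear sampling, zero padding (no `fill`), and the endpoints → startpoints mapping used by `_perspective_grid`. `diff_perspective` is a hypothetical name.

```python
import torch
import torch.nn.functional as F


def diff_perspective(img: torch.Tensor, startpoints: torch.Tensor, endpoints: torch.Tensor) -> torch.Tensor:
    """Hypothetical differentiable perspective warp (bilinear, zero padding)."""
    n, _, h, w = img.shape
    dt, dev = torch.float64, img.device
    x, y = endpoints[:, 0].to(dt), endpoints[:, 1].to(dt)
    u, v = startpoints[:, 0].to(dt), startpoints[:, 1].to(dt)
    one, zero = torch.ones_like(x), torch.zeros_like(x)
    # 8x8 linear system for the coefficients mapping endpoints -> startpoints.
    a = torch.cat([torch.stack([x, y, one, zero, zero, zero, -u * x, -u * y], 1),
                   torch.stack([zero, zero, zero, x, y, one, -v * x, -v * y], 1)])
    c = torch.linalg.solve(a, torch.cat([u, v]))
    homography = torch.cat([c, c.new_ones(1)]).view(3, 3)  # output pixel -> input pixel

    # Homogeneous pixel-centre coordinates of the output image, shape (h*w, 3).
    ys, xs = torch.meshgrid(torch.arange(h, dtype=dt, device=dev) + 0.5,
                            torch.arange(w, dtype=dt, device=dev) + 0.5, indexing="ij")
    pts = torch.stack([xs, ys, torch.ones_like(xs)], dim=-1).view(-1, 3)
    src = pts @ homography.T
    src = src[:, :2] / src[:, 2:]  # perspective divide

    # Normalize to [-1, 1] for grid_sample with align_corners=False.
    scale = torch.tensor([w, h], dtype=dt, device=dev)
    grid = (2.0 * src / scale - 1.0).view(1, h, w, 2).expand(n, h, w, 2)
    return F.grid_sample(img, grid.to(img.dtype), mode="bilinear", padding_mode="zeros", align_corners=False)


startpoints = torch.tensor([[0., 0.], [0., 224.], [224., 0.], [224., 224.]], requires_grad=True)
endpoints = torch.tensor([[0., 0.], [0., 200.], [200., 0.], [200., 200.]])
t = diff_perspective(torch.randn(1, 3, 224, 224), startpoints, endpoints)
(t ** 3).sum().backward()  # reduce to a scalar before backward()
print(t.requires_grad, startpoints.grad.shape)  # True torch.Size([4, 2])
```

The `.sum()` matters: `t ** 3` is not a scalar, so calling `backward()` on it directly would fail even once the graph is intact.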
