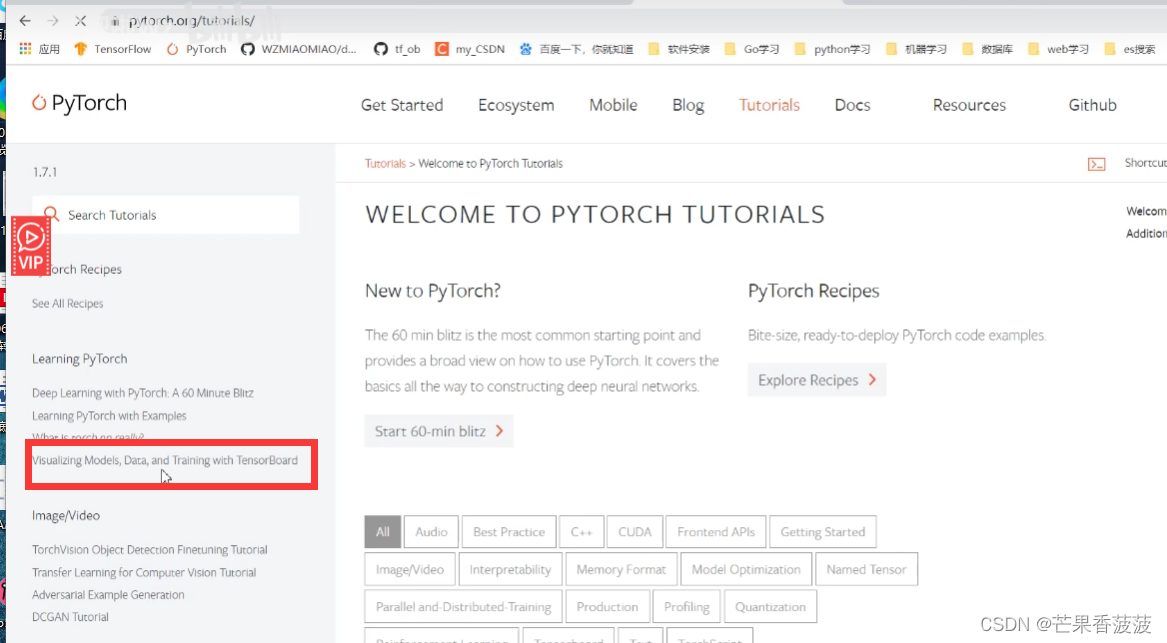

1. 创建环境

首先创建一个环境:

conda create -n pytorch

conda activate pytorch然后安装tensorboard

pip install tensorboard安装opencv

pip install opencv-python2. 简单的案例

标量和图像的显示:

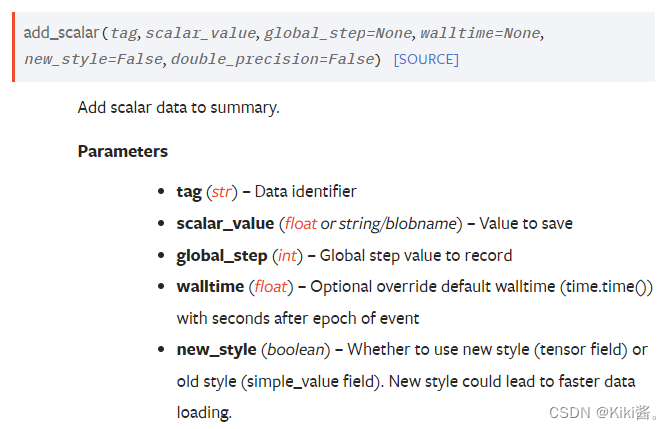

2.1标量实现的方法是add_scalar,第一个参数是给显示框起个名称,第二个参数是一个标量(也就是一个数值),第三个参数是第几次。

2.2图像实现的方法是add_image,第一个仍然是给显示框起个名字,第二个是图像,可以按住ctrl, 把光标放在add_image上,可以看到显示类型,有HWC,CHW等,如果是HWC,则需要使用dataformats指明格式。0,1,2就表示的是第0,1,2次。意思就是第0次显示img1,第1次显示img,第二次显示img1。

新建test_tensorboard.py文件,文件内容写:

from torch.utils.tensorboard import SummaryWriter

import cv2

writer = SummaryWriter("logs")

#writer = SummaryWriter() 默认可以不加参数

# writer = SummaryWriter(comment="lr_0.01_epoch_100")

for i in range(10):

writer.add_scalar("loss",2*i,i)

img = cv2.imread("E:/TOOLE/slam_evo/pythonProject/2.jpg",-1)

img1 = cv2.imread("E:/TOOLE/slam_evo/pythonProject/1.jpg",-1)

print(type(img))

print(img.shape)

writer.add_image("image1",img1,0,dataformats='HWC')

writer.add_image("image1",img,1,dataformats='HWC')

writer.add_image("image1",img1,2,dataformats='HWC')

writer.close()在终端运行就可以执行程序。

python test_tensorboard.py然后在中断执行

(pytorch) E:\TOOLE\slam_evo\pythonProject>tensorboard --logdir=logs

如果想换个端口,则:

(pytorch) E:\TOOLE\slam_evo\pythonProject>tensorboard --logdir=logs --port=6067得到一个网址:

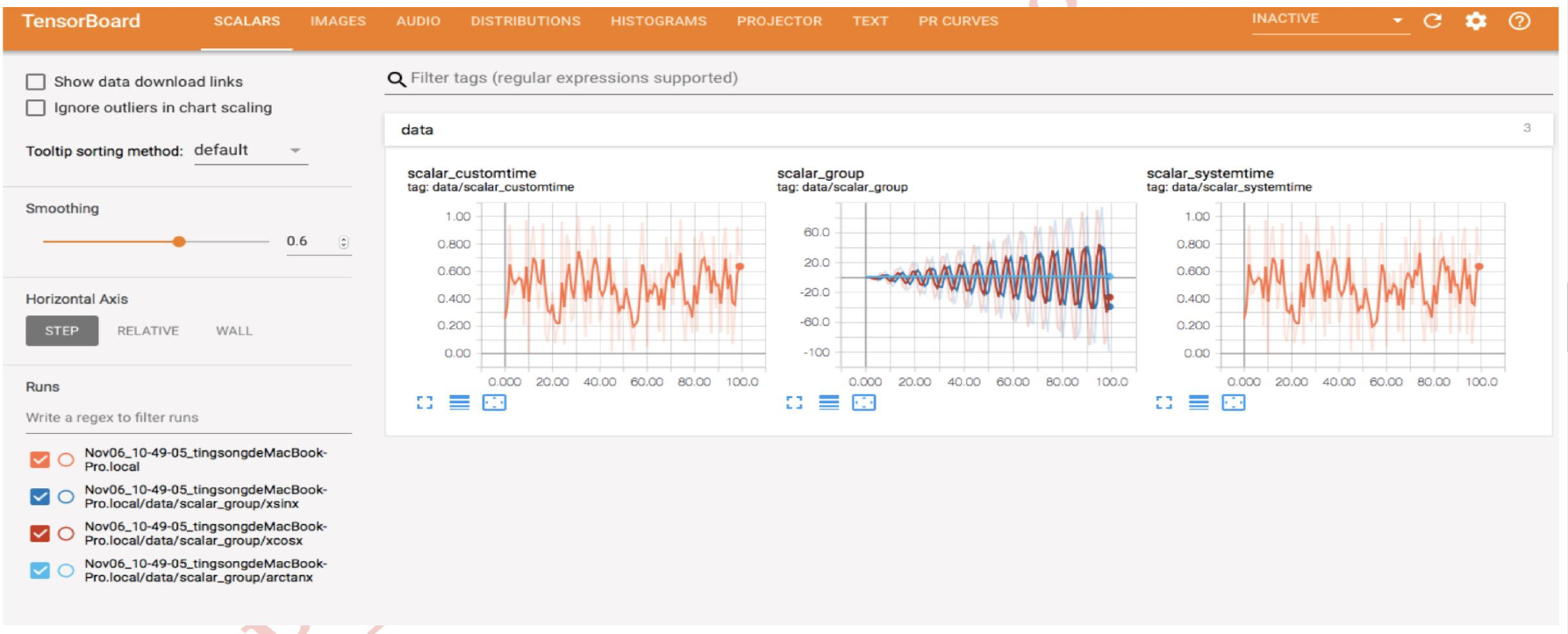

点击该网址,显示结果如下:

2.3 图形重复的处理

我们看到图中有重复的,这是因为name,也就是add_image或者add_scalar在多次重复运行的时候,第一个参数name没有改变,图像显示的是多次叠加的图像,如果想要每次都不叠加显示,则可以这样处理:

1)删除event文件

2)改变add_image或者add_scalar的第一个参数的名子

writer.add_image("image1",img1,0,dataformats='HWC')改成image2:

writer.add_image("image2",img1,0,dataformats='HWC')3. 在程序中使用tensorboard,显示loss,训练图像等

import torch

from torch import nn

from torch.utils.data import DataLoader

from torchvision import datasets

from torchvision.transforms import ToTensor

import matplotlib.pyplot as plt

import numpy as np

import torchvision

from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter("logs")

tensor = torch.randn(3,3)

bTensor = type(tensor) == torch.Tensor

print(bTensor)

print("tensor is on ", tensor.device)

#数据转到GPU

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

print(device)

if torch.cuda.is_available():

tensor = tensor.to(device)

print("tensor is on ",tensor.device)

#数据转到CPU

if tensor.device == 'cuda:0':

tensor = tensor.to(torch.device("cpu"))

print("tensor is on", tensor.device)

if tensor.device == "cpu":

tensor = tensor.to(torch.device("cuda:0"))

print("tensor is on", tensor.device)

trainning_data = datasets.MNIST(root="data",train=True,transform=ToTensor(),download=True)

print(len(trainning_data))

test_data = datasets.MNIST(root="data",train=True,transform=ToTensor(),download=False)

train_loader = DataLoader(trainning_data, batch_size=64,shuffle=True)

test_loader = DataLoader(test_data, batch_size=64,shuffle=True)

print(len(train_loader)) #分成了多少个batch

print(len(trainning_data)) #总共多少个图像

# for x, y in train_loader:

# print(x.shape)

# print(y.shape)

class MinistNet(nn.Module):

def __init__(self):

super().__init__()

# self.flat = nn.Flatten()

self.conv1 = nn.Conv2d(1,1,3,1,1)

self.hideLayer1 = nn.Linear(28*28,256)

self.hideLayer2 = nn.Linear(256,10)

def forward(self,x):

x= self.conv1(x)

x = x.view(-1,28*28)

x = self.hideLayer1(x)

x = torch.sigmoid(x)

x = self.hideLayer2(x)

# x = nn.Sigmoid(x)

return x

model = MinistNet()

writer.add_graph(model, torch.rand([1,1,28,28]))

model = model.to(device)

cuda = next(model.parameters()).device

print(model)

criterion = nn.CrossEntropyLoss()

optimer = torch.optim.RMSprop(model.parameters(),lr= 0.001)

def train():

train_losses = []

train_acces = []

eval_losses = []

eval_acces = []

#训练

model.train()

tensorboard_ind =0;

for epoch in range(10):

batchsizeNum = 0

train_loss = 0

train_acc = 0

train_correct = 0

for x,y in train_loader:

# print(epoch)

# print(x.shape)

# print(y.shape)

writer.add_images("minist_img",x,tensorboard_ind)

x = x.to('cuda')

y = y.to('cuda')

bte = type(x)==torch.Tensor

bte1 = type(y)==torch.Tensor

A = x.device

B = y.device

pred_y = model(x)

loss = criterion(pred_y,y)

optimer.zero_grad()

loss.backward()

optimer.step()

loss_val = loss.item()

batchsizeNum = batchsizeNum +1

train_acc += (pred_y.argmax(1) == y).type(torch.float).sum().item()

train_loss += loss.item()

tensorboard_ind += 1

writer.add_scalar("loss1",loss.item(),tensorboard_ind)

# print("loss: ",loss_val," ",epoch, " ", batchsizeNum)

train_losses.append(train_loss / len(trainning_data))

train_acces.append(train_acc / len(trainning_data))

for i, (name, param) in enumerate(model.named_parameters()):

if 'bn' not in name:

writer.add_histogram(name, param, epoch)

# if name == 'conv1':

# print(param.weight.shape)

# in_channels = param.weight.shape[1]

# out_channels = param.weight.shape[0]

# k_w, k_h = param.weight.shape[3], param.weight.shape[2]

# kernel_all = param.weight.view(-1, 1, k_w, k_h) # 每个通道的卷积核

# kernel_grid = torchvision.utils.make_grid(kernel_all, nrow=in_channels)

# writer.add_image(f'{name}_kernel', kernel_grid, global_step=epoch)

#测试

model.eval()

with torch.no_grad():

num_batch = len(test_data)

numSize = len(test_data)

test_loss, test_correct = 0,0

for x,y in test_loader:

x = x.to(device)

y = y.to(device)

pred_y = model(x)

test_loss += criterion(pred_y, y).item()

test_correct += (pred_y.argmax(1) == y).type(torch.float).sum().item()

test_loss /= num_batch

test_correct /= numSize

eval_losses.append(test_loss)

eval_acces.append(test_correct)

print("test result:",100 * test_correct,"% avg loss:",test_loss)

PATH = "dict_model_%d_dict.pth"%(epoch)

torch.save({"epoch": epoch,

"model_state_dict": model.state_dict(), }, PATH)

writer.close()

plt.plot(np.arange(len(train_losses)), train_losses, label="train loss")

plt.plot(np.arange(len(train_acces)), train_acces, label="train acc")

plt.plot(np.arange(len(eval_losses)), eval_losses, label="valid loss")

plt.plot(np.arange(len(eval_acces)), eval_acces, label="valid acc")

plt.legend() # 显示图例

plt.xlabel('epoches')

# plt.ylabel("epoch")

plt.title('Model accuracy&loss')

plt.show()

torch.save(model,"mode_con_line2.pth")#保存网络模型结构

# torch.save(model,) #保存模型中的参数

torch.save(model.state_dict(),"model_dict.pth")

# Press the green button in the gutter to run the script.

if __name__ == '__main__':

train()

python xx.py执行该文件,然后使用tensorboard --logdir=logs 得到一个网址,点击该网址即可得到显示信息。

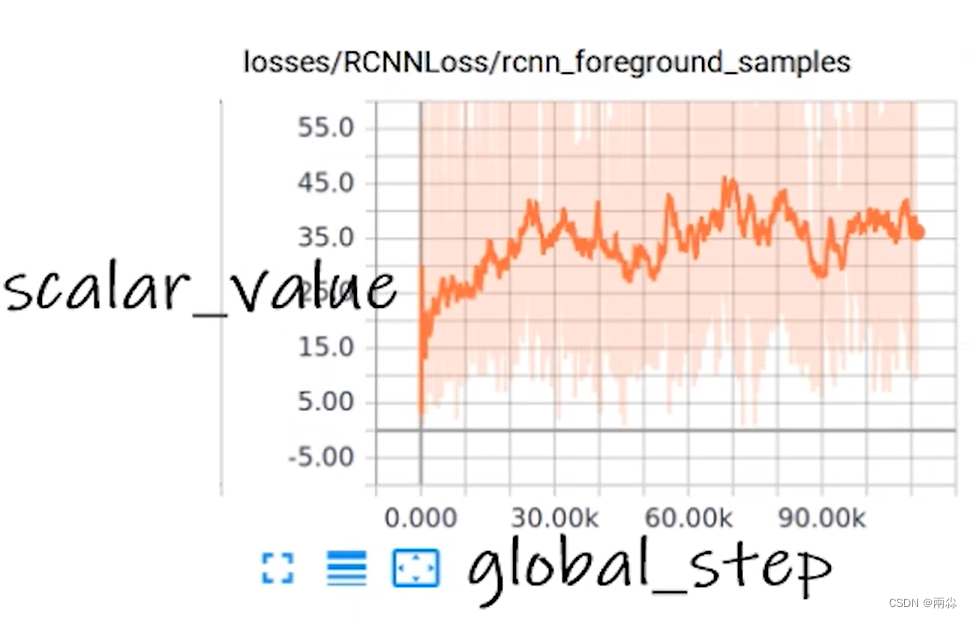

显示loss:

显示训练图像:

参数直方图显示:

![[Linux]Linux编译器gcc/g++](https://i-blog.csdnimg.cn/direct/6039c464a0054394bf8c9bbc1e20c438.png)