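MobileNetV2 is a lightweight deep neural network designed for mobile and embedded devices. Its core idea is to reduce computational cost and parameter count by combining depthwise separable convolutions with inverted residual blocks. Its main features are:

- Depthwise separable convolution: a standard convolution is factorized into a depthwise convolution, which filters each input channel separately, and a pointwise (1x1) convolution, which mixes channels. This greatly reduces the amount of computation.

- Inverted residual structure: each block first expands the channel dimension, filters in the expanded space, and then projects back down. The shortcut connects the narrow (low-dimensional) ends of the blocks. This keeps the network easy to train and optimize while preserving accuracy.

- Linear bottleneck: the final 1x1 projection of each inverted residual block uses a linear activation (no ReLU6). Applying a nonlinearity in the low-dimensional bottleneck would discard information.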
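The following sketch makes the saving from depthwise separable convolutions concrete. It counts multiply-accumulate operations (MACs) for a standard k×k convolution and for its depthwise + pointwise factorization. It assumes stride 1, "same" padding and no bias. The layer size in the usage lines is an illustrative choice, not a value taken from this tutorial.

```python
def standard_conv_macs(h, w, c_in, c_out, k):
    """MACs of a standard k x k convolution (stride 1, same padding)."""
    return h * w * c_in * c_out * k * k


def separable_conv_macs(h, w, c_in, c_out, k):
    """MACs of a depthwise k x k conv followed by a 1x1 pointwise conv."""
    depthwise = h * w * c_in * k * k   # one k x k filter per input channel
    pointwise = h * w * c_in * c_out   # 1x1 conv that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Illustrative layer: 14x14 feature map, 96 -> 96 channels, 3x3 kernel.
    std = standard_conv_macs(14, 14, 96, 96, 3)
    sep = separable_conv_macs(14, 14, 96, 96, 3)
    print(std, sep, round(std / sep, 2))
```

The ratio of the two costs is 1/c_out + 1/k². With a 3×3 kernel, the factorized form is therefore roughly 8 to 9 times cheaper.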

MobileNetV2 architecture in detail

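MobileNetV2 is built mainly from stacked inverted residual blocks. Each block contains:

- Pointwise convolution: a 1x1 convolution that expands the channel dimension. This step is skipped when the expansion factor is 1.

- Depthwise convolution: a 3x3 convolution applied to each channel independently.

- Pointwise convolution: another 1x1 convolution, with a linear activation, that compresses the channel dimension again.

- Skip connection: when the block has stride 1 and equal input and output channel counts, the input is added to the output, forming a residual connection.

The blocks sit between a 3x3 stem convolution and a final 1x1 convolution. Stacked together, they form a deep network that extracts image features efficiently.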
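The stacking can be traced without any framework. The sketch below walks the default `[t, c, n, s]` table used by `MobileNetV2Backbone` in the model code of this tutorial. The four columns are: expansion factor, output channels, repeat count, and stride of the first repeat. The sketch assumes a width multiplier of 1.0, so no channel rounding is needed, and a 224×224 input. For each inverted residual block it reports the channels, the output resolution, and whether the skip connection applies.

```python
# Default inverted-residual settings: t (expansion), c (out channels),
# n (repeats), s (stride of the first repeat).
CFGS = [
    [1, 16, 1, 1],
    [6, 24, 2, 2],
    [6, 32, 3, 2],
    [6, 64, 4, 2],
    [6, 96, 3, 1],
    [6, 160, 3, 2],
    [6, 320, 1, 1],
]


def conv_out_size(size, stride, kernel=3):
    """Output size of a k x k convolution with padding (k - 1) // 2."""
    pad = (kernel - 1) // 2
    return (size + 2 * pad - kernel) // stride + 1


def trace_blocks(cfgs, input_size=224, stem_channels=32):
    """Return one (in_c, out_c, hidden_c, stride, size_out, residual) tuple per block."""
    size = conv_out_size(input_size, stride=2)  # 3x3 stem convolution, stride 2
    in_c = stem_channels
    blocks = []
    for t, c, n, s in cfgs:
        for i in range(n):
            stride = s if i == 0 else 1
            size = conv_out_size(size, stride)  # the depthwise conv carries the stride
            hidden = in_c * t                   # channels after the 1x1 expansion
            residual = stride == 1 and in_c == c
            blocks.append((in_c, c, hidden, stride, size, residual))
            in_c = c
    return blocks


if __name__ == "__main__":
    for block in trace_blocks(CFGS):
        print(block)
```

With these settings there are 17 inverted residual blocks, 10 of which use the skip connection. The final feature map is 7×7 with 320 channels before the last 1x1 convolution.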

Workflow of the garbage classification system

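Once the loss function is defined, its gradient with respect to the weights can be computed. The gradient tells the optimizer in which direction to adjust the weights. Stepping against the gradient reduces the loss and thereby improves the model.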
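The sketch below is a minimal illustration of "the gradient points the way". It runs plain gradient descent on a one-parameter squared-error loss. The learning rate, step count and toy data are arbitrary assumptions. Practical optimizers such as Momentum or Adam build on this same basic update.

```python
def loss(w, xs, ys):
    """Mean squared error of the model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grad(w, xs, ys):
    """d(loss)/dw, derived analytically: mean of 2 * (w*x - y) * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(w, xs, ys, lr=0.05, steps=100):
    for _ in range(steps):
        w -= lr * grad(w, xs, ys)  # step against the gradient to reduce the loss
    return w


if __name__ == "__main__":
    xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]  # generated by y = 2x
    w = gradient_descent(0.0, xs, ys)
    print(round(w, 4), loss(w, xs, ys))
```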

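Before training MobileNetV2, the parameters of the MobileNetV2Backbone module are frozen, so its weights are not updated during training. Only the parameters of the MobileNetV2Head module are updated.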
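A framework-free toy shows the effect of freezing. Each parameter carries a `requires_grad` flag, named after the flag on MindSpore `Parameter` objects. The dictionary layout and the `freeze`/`sgd_step` helpers here are hypothetical. The update step skips frozen entries, so only the head parameters change.

```python
def freeze(params, prefix):
    """Mark every parameter whose name starts with `prefix` as frozen."""
    for name, p in params.items():
        if name.startswith(prefix):
            p["requires_grad"] = False


def sgd_step(params, grads, lr=0.1):
    """Apply one SGD update, skipping parameters whose requires_grad is False."""
    for name, p in params.items():
        if p["requires_grad"]:
            p["value"] -= lr * grads[name]


if __name__ == "__main__":
    params = {
        "backbone.conv.weight": {"value": 0.5, "requires_grad": True},
        "head.dense.weight": {"value": 0.5, "requires_grad": True},
    }
    grads = {"backbone.conv.weight": 1.0, "head.dense.weight": 1.0}
    freeze(params, "backbone.")
    sgd_step(params, grads)
    print({k: v["value"] for k, v in params.items()})  # backbone unchanged, head updated
```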

MindSpore provides loss functions such as SoftmaxCrossEntropyWithLogits, L1Loss and MSELoss. SoftmaxCrossEntropyWithLogits is used here.
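The computation behind SoftmaxCrossEntropyWithLogits fits in a few lines. It applies a softmax over the raw logits and then takes the negative log-probability of the true class. The gradient of this loss with respect to the logits is simply `softmax - one_hot`. The pure-Python version below sketches that math for a single sample with an integer label. It is not MindSpore's implementation.

```python
import math


def softmax(logits):
    m = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_cross_entropy(logits, label):
    """Loss and d(loss)/d(logits) for one sample with integer class `label`."""
    probs = softmax(logits)
    loss = -math.log(probs[label])
    grad = [p - (1.0 if i == label else 0.0) for i, p in enumerate(probs)]
    return loss, grad


if __name__ == "__main__":
    loss, grad = softmax_cross_entropy([2.0, 1.0, 0.1], label=0)
    print(round(loss, 4), [round(g, 4) for g in grad])
```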

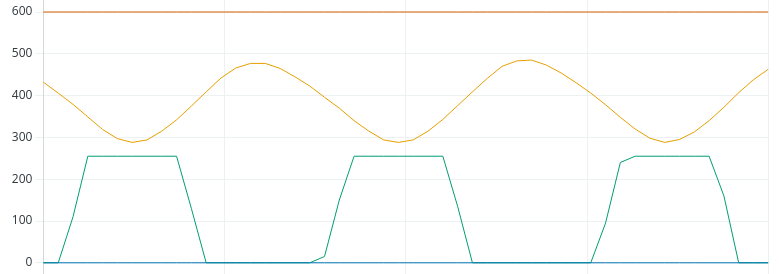

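The loss is printed during training and testing. It fluctuates from step to step but should decrease overall, while accuracy rises. Training involves randomness, so the exact loss values will differ from run to run.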

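After each epoch, the model's accuracy on the test set is computed and printed. A rising accuracy indicates that the predictive ability of the MobileNetV2 model is improving.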
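The per-epoch test accuracy is the fraction of test samples whose highest-scoring class matches the label. The framework-free sketch below computes it, assuming the logits arrive as a list of per-sample score lists. The example values are made up.

```python
def argmax(scores):
    return max(range(len(scores)), key=lambda i: scores[i])


def top1_accuracy(batch_logits, labels):
    """Fraction of samples whose arg-max prediction equals the true label."""
    if not labels:
        raise ValueError("empty evaluation set")
    correct = sum(1 for s, y in zip(batch_logits, labels) if argmax(s) == y)
    return correct / len(labels)


if __name__ == "__main__":
    logits = [[0.1, 2.0, -1.0], [1.5, 0.2, 0.3], [0.0, 0.1, 0.9], [2.0, 0.0, 0.0]]
    labels = [1, 0, 2, 1]
    print(top1_accuracy(logits, labels))  # 3 of 4 correct -> 0.75
```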

Data preprocessing:

- Load the images and resize them to a uniform size (e.g., 224x224).

- Normalize pixel values to the range 0 to 1.

- Convert the labels to one-hot encoding.
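The last two bullets amount to simple arithmetic. The sketch below scales 8-bit pixel values into [0, 1] and builds one-hot vectors. It is illustrative only. The inference code later in this tutorial standardizes with per-channel mean and standard deviation rather than plain scaling. SoftmaxCrossEntropyWithLogits can also take integer labels directly when its `sparse` option is enabled.

```python
def scale_pixels(pixels):
    """Map 8-bit pixel values (0-255) into the range [0, 1]."""
    return [p / 255.0 for p in pixels]


def one_hot(label, num_classes):
    """Return a one-hot list with 1.0 at position `label`."""
    if not 0 <= label < num_classes:
        raise ValueError(f"label {label} out of range for {num_classes} classes")
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


if __name__ == "__main__":
    print(scale_pixels([0, 128, 255]))
    print(one_hot(2, 4))  # [0.0, 0.0, 1.0, 0.0]
```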

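The garbage classification dataset is read with ImageFolderDataset and processed as a whole, with the training and test sets specified at load time. Both splits are normalized, and their channel order is changed (HWC to CHW). To enrich the training data, the training set also goes through RandomCropDecodeResize, RandomHorizontalFlip, RandomColorAdjust and shuffle. The test set goes through Decode, Resize and CenterCrop. Finally, the processed datasets are returned.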
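Two of these operations are easy to show on a toy image stored as a nested list of rows. A center crop cuts out a centered window, and a horizontal flip reverses every row. This framework-free sketch shows what the `CenterCrop` and `RandomHorizontalFlip` transforms do conceptually. It makes no claim to match MindSpore's exact rounding, and the random version flips only with probability `prob`. The 4×4 image is made up.

```python
import random


def center_crop(image, size):
    """Crop a size x size window from the middle of a 2-D list `image`."""
    h, w = len(image), len(image[0])
    if size > h or size > w:
        raise ValueError("crop size larger than image")
    top = (h - size) // 2
    left = (w - size) // 2
    return [row[left:left + size] for row in image[top:top + size]]


def horizontal_flip(image):
    """Mirror the image left-to-right by reversing each row."""
    return [row[::-1] for row in image]


def random_horizontal_flip(image, prob=0.5, rng=random):
    """Flip with probability `prob`; used only for training-time augmentation."""
    return horizontal_flip(image) if rng.random() < prob else image


if __name__ == "__main__":
    img = [[r * 4 + c for c in range(4)] for r in range(4)]
    print(center_crop(img, 2))      # [[5, 6], [9, 10]]
    print(horizontal_flip(img)[0])  # [3, 2, 1, 0]
```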

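Model construction:

- Use a pretrained MobileNetV2 as the feature extractor, removing its top classification layers.

- Add a global average pooling layer that turns the feature maps into a fixed-length feature vector.

- Add fully connected and output layers whose output size matches the number of classes in the task.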
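The head described above reduces each channel of the backbone's C×H×W feature map to its mean (global average pooling). The resulting C-length vector then feeds a fully connected layer. The framework-free sketch below handles a single sample. The tiny sizes and weights are made up for illustration.

```python
def global_avg_pool(feature_map):
    """feature_map: list of C channels, each an H x W list -> list of C means."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]


def dense(vector, weights, bias):
    """Fully connected layer: one weight row of len(vector) per output class."""
    return [sum(w * x for w, x in zip(row, vector)) + b for row, b in zip(weights, bias)]


if __name__ == "__main__":
    fmap = [[[1.0, 3.0], [5.0, 7.0]],   # channel 0, mean 4.0
            [[0.0, 0.0], [2.0, 2.0]]]   # channel 1, mean 1.0
    pooled = global_avg_pool(fmap)
    logits = dense(pooled, weights=[[1.0, 0.0], [0.5, 2.0]], bias=[0.0, 1.0])
    print(pooled, logits)  # [4.0, 1.0] [4.0, 5.0]
```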

```python
import numpy as np
import mindspore.nn as nn
import mindspore.ops as P
from mindspore import Tensor

__all__ = ['MobileNetV2', 'MobileNetV2Backbone', 'MobileNetV2Head', 'mobilenet_v2']


def _make_divisible(v, divisor, min_value=None):
    """Round channel count v to a multiple of divisor (at least min_value)."""
    if min_value is None:
        min_value = divisor
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    # Make sure that rounding down does not remove more than 10% of the channels.
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v


class GlobalAvgPooling(nn.Cell):
    """
    Global avg pooling definition.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> GlobalAvgPooling()
    """

    def __init__(self):
        super(GlobalAvgPooling, self).__init__()

    def construct(self, x):
        # Average over the spatial axes (H, W) of an NCHW tensor.
        x = P.mean(x, (2, 3))
        return x


class ConvBNReLU(nn.Cell):
    """
    Convolution/Depthwise fused with Batchnorm and ReLU block definition.

    Args:
        in_planes (int): Input channel.
        out_planes (int): Output channel.
        kernel_size (int): Input kernel size.
        stride (int): Stride size for the first convolutional layer. Default: 1.
        groups (int): channel group. Convolution is 1 while Depthwise is input channel. Default: 1.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> ConvBNReLU(16, 256, kernel_size=1, stride=1, groups=1)
    """

    def __init__(self, in_planes, out_planes, kernel_size=3, stride=1, groups=1):
        super(ConvBNReLU, self).__init__()
        padding = (kernel_size - 1) // 2
        in_channels = in_planes
        out_channels = out_planes
        if groups == 1:
            conv = nn.Conv2d(in_channels, out_channels, kernel_size, stride, pad_mode='pad', padding=padding)
        else:
            # Depthwise convolution: one filter per input channel.
            out_channels = in_planes
            conv = nn.Conv2d(in_channels, out_channels, kernel_size, stride, pad_mode='pad',
                             padding=padding, group=in_channels)
        # BatchNorm must match the conv's actual output channels.
        layers = [conv, nn.BatchNorm2d(out_channels), nn.ReLU6()]
        self.features = nn.SequentialCell(layers)

    def construct(self, x):
        output = self.features(x)
        return output


class InvertedResidual(nn.Cell):
    """
    Mobilenetv2 residual block definition.

    Args:
        inp (int): Input channel.
        oup (int): Output channel.
        stride (int): Stride size for the first convolutional layer. Default: 1.
        expand_ratio (int): expand ratio of input channel.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> InvertedResidual(3, 256, 1, 1)
    """

    def __init__(self, inp, oup, stride, expand_ratio):
        super(InvertedResidual, self).__init__()
        assert stride in [1, 2]

        hidden_dim = int(round(inp * expand_ratio))
        # The shortcut is only valid when input and output shapes match.
        self.use_res_connect = stride == 1 and inp == oup

        layers = []
        if expand_ratio != 1:
            # 1x1 pointwise expansion
            layers.append(ConvBNReLU(inp, hidden_dim, kernel_size=1))
        layers.extend([
            # 3x3 depthwise convolution
            ConvBNReLU(hidden_dim, hidden_dim, stride=stride, groups=hidden_dim),
            # 1x1 linear projection (no ReLU6: the linear bottleneck)
            nn.Conv2d(hidden_dim, oup, kernel_size=1, stride=1, has_bias=False),
            nn.BatchNorm2d(oup),
        ])
        self.conv = nn.SequentialCell(layers)
        self.cast = P.Cast()

    def construct(self, x):
        identity = x
        x = self.conv(x)
        if self.use_res_connect:
            return P.add(identity, x)
        return x


class MobileNetV2Backbone(nn.Cell):
    """
    MobileNetV2 architecture.

    Args:
        width_mult (float): Channels multiplier for round to 8/16 and others. Default is 1.
        inverted_residual_setting (list): Inverted residual settings. Default is None.
        round_nearest (int): Round channel counts to a multiple of this value. Default is 8.
        input_channel (int): Output channels of the stem convolution. Default is 32.
        last_channel (int): Output channels of the last 1x1 convolution. Default is 1280.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> MobileNetV2Backbone()
    """

    def __init__(self, width_mult=1., inverted_residual_setting=None, round_nearest=8,
                 input_channel=32, last_channel=1280):
        super(MobileNetV2Backbone, self).__init__()
        block = InvertedResidual
        # setting of inverted residual blocks
        self.cfgs = inverted_residual_setting
        if inverted_residual_setting is None:
            self.cfgs = [
                # t, c, n, s
                [1, 16, 1, 1],
                [6, 24, 2, 2],
                [6, 32, 3, 2],
                [6, 64, 4, 2],
                [6, 96, 3, 1],
                [6, 160, 3, 2],
                [6, 320, 1, 1],
            ]

        # building first layer
        input_channel = _make_divisible(input_channel * width_mult, round_nearest)
        self.out_channels = _make_divisible(last_channel * max(1.0, width_mult), round_nearest)
        features = [ConvBNReLU(3, input_channel, stride=2)]
        # building inverted residual blocks
        for t, c, n, s in self.cfgs:
            output_channel = _make_divisible(c * width_mult, round_nearest)
            for i in range(n):
                stride = s if i == 0 else 1
                features.append(block(input_channel, output_channel, stride, expand_ratio=t))
                input_channel = output_channel
        # building last 1x1 convolution
        features.append(ConvBNReLU(input_channel, self.out_channels, kernel_size=1))
        self.features = nn.SequentialCell(features)
        self._initialize_weights()

    def construct(self, x):
        x = self.features(x)
        return x

    def _initialize_weights(self):
        """
        Initialize weights.

        Returns:
            None.

        Examples:
            >>> _initialize_weights()
        """
        self.init_parameters_data()
        for _, m in self.cells_and_names():
            if isinstance(m, nn.Conv2d):
                # He (Kaiming) normal initialization using fan-out.
                n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                m.weight.set_data(Tensor(np.random.normal(0, np.sqrt(2. / n),
                                                          m.weight.data.shape).astype("float32")))
                if m.bias is not None:
                    m.bias.set_data(
                        Tensor(np.zeros(m.bias.data.shape, dtype="float32")))
            elif isinstance(m, nn.BatchNorm2d):
                m.gamma.set_data(
                    Tensor(np.ones(m.gamma.data.shape, dtype="float32")))
                m.beta.set_data(
                    Tensor(np.zeros(m.beta.data.shape, dtype="float32")))

    @property
    def get_features(self):
        return self.features


class MobileNetV2Head(nn.Cell):
    """
    MobileNetV2 architecture.

    Args:
        input_channel (int): Number of input channels. Default is 1280.
        num_classes (int): Number of classes. Default is 1000.
        has_dropout (bool): Is dropout used. Default is False.
        activation (str): "Sigmoid", "Softmax", or "None" for no activation. Default is "None".

    Returns:
        Tensor, output tensor.

    Examples:
        >>> MobileNetV2Head(num_classes=1000)
    """

    def __init__(self, input_channel=1280, num_classes=1000, has_dropout=False, activation="None"):
        super(MobileNetV2Head, self).__init__()
        # mobilenet head
        head = ([GlobalAvgPooling(), nn.Dense(input_channel, num_classes, has_bias=True)] if not has_dropout else
                [GlobalAvgPooling(), nn.Dropout(0.2), nn.Dense(input_channel, num_classes, has_bias=True)])
        self.head = nn.SequentialCell(head)
        self.need_activation = True
        if activation == "Sigmoid":
            self.activation = nn.Sigmoid()
        elif activation == "Softmax":
            self.activation = nn.Softmax()
        else:
            self.need_activation = False
        self._initialize_weights()

    def construct(self, x):
        x = self.head(x)
        if self.need_activation:
            x = self.activation(x)
        return x

    def _initialize_weights(self):
        """
        Initialize weights.

        Returns:
            None.

        Examples:
            >>> _initialize_weights()
        """
        self.init_parameters_data()
        for _, m in self.cells_and_names():
            if isinstance(m, nn.Dense):
                m.weight.set_data(Tensor(np.random.normal(
                    0, 0.01, m.weight.data.shape).astype("float32")))
                if m.bias is not None:
                    m.bias.set_data(
                        Tensor(np.zeros(m.bias.data.shape, dtype="float32")))

    @property
    def get_head(self):
        return self.head


class MobileNetV2(nn.Cell):
    """
    MobileNetV2 architecture.

    Args:
        num_classes (int): number of classes. Default is 1000.
        width_mult (float): Channels multiplier for round to 8/16 and others. Default is 1.
        has_dropout (bool): Is dropout used. Default is False.
        inverted_residual_setting (list): Inverted residual settings. Default is None.
        round_nearest (int): Round channel counts to a multiple of this value. Default is 8.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> MobileNetV2(num_classes=1000)
    """

    def __init__(self, num_classes=1000, width_mult=1., has_dropout=False, inverted_residual_setting=None,
                 round_nearest=8, input_channel=32, last_channel=1280):
        super(MobileNetV2, self).__init__()
        backbone = MobileNetV2Backbone(width_mult=width_mult,
                                       inverted_residual_setting=inverted_residual_setting,
                                       round_nearest=round_nearest, input_channel=input_channel,
                                       last_channel=last_channel)
        self.backbone = backbone.get_features
        # Read out_channels from the backbone Cell itself; the SequentialCell
        # returned by get_features has no such attribute.
        self.head = MobileNetV2Head(input_channel=backbone.out_channels, num_classes=num_classes,
                                    has_dropout=has_dropout).get_head

    def construct(self, x):
        x = self.backbone(x)
        x = self.head(x)
        return x


class MobileNetV2Combine(nn.Cell):
    """
    MobileNetV2Combine architecture.

    Args:
        backbone (Cell): the features extract layers.
        head (Cell): the fully connected layers.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> MobileNetV2Combine(backbone, head)
    """

    def __init__(self, backbone, head):
        super(MobileNetV2Combine, self).__init__(auto_prefix=False)
        self.backbone = backbone
        self.head = head

    def construct(self, x):
        x = self.backbone(x)
        x = self.head(x)
        return x


def mobilenet_v2(backbone, head):
    return MobileNetV2Combine(backbone, head)
```

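Model training:

- Freeze the convolutional layers of MobileNetV2 and train only the newly added fully connected layer.

- Train the model with a cross-entropy loss and the Adam optimizer.

- Monitor performance on the validation set, and keep the best model using early stopping and model checkpoints.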
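Early stopping with a best-model checkpoint comes down to tracking the best validation score and a patience counter. The sketch below is framework-agnostic. The `patience` value and the accuracy list are illustrative assumptions. In a real run, the checkpoint would be saved at the point where the best score is updated.

```python
def early_stopping(val_scores, patience=3):
    """Return (best_epoch, stop_epoch) for a higher-is-better metric.

    Training stops once `patience` consecutive epochs fail to beat the best
    score; stop_epoch is None if that never happens. Epochs are 0-indexed.
    """
    best_score, best_epoch, bad_epochs = float("-inf"), None, 0
    for epoch, score in enumerate(val_scores):
        if score > best_score:
            best_score, best_epoch, bad_epochs = score, epoch, 0  # save a checkpoint here
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return best_epoch, epoch
    return best_epoch, None


if __name__ == "__main__":
    accs = [0.61, 0.72, 0.78, 0.77, 0.79, 0.78, 0.78, 0.76]
    print(early_stopping(accs, patience=3))  # (4, 7)
```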

```python
import math


def cosine_decay(total_steps, lr_init=0.0, lr_end=0.0, lr_max=0.1, warmup_steps=0):
    """
    Applies cosine decay to generate learning rate array.

    Args:
        total_steps(int): all steps in training.
        lr_init(float): init learning rate.
        lr_end(float): end learning rate.
        lr_max(float): max learning rate.
        warmup_steps(int): all steps in warmup epochs.

    Returns:
        list, learning rate array.
    """
    lr_init, lr_end, lr_max = float(lr_init), float(lr_end), float(lr_max)
    decay_steps = total_steps - warmup_steps
    lr_all_steps = []
    # Linear increase per step during warmup.
    inc_per_step = (lr_max - lr_init) / warmup_steps if warmup_steps else 0
    for i in range(total_steps):
        if i < warmup_steps:
            lr = lr_init + inc_per_step * (i + 1)
        else:
            cosine_factor = 0.5 * (1 + math.cos(math.pi * (i - warmup_steps) / decay_steps))
            lr = (lr_max - lr_end) * cosine_factor + lr_end
        lr_all_steps.append(lr)

    return lr_all_steps
```
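Written as a formula, `cosine_decay` produces a linear warmup from `lr_init` to `lr_max`, followed by a half-cosine decay from `lr_max` toward `lr_end`. Here $T$ = `total_steps`, $W$ = `warmup_steps`, and the step index is $i = 0, \dots, T-1$:

```latex
\eta_i =
\begin{cases}
\eta_{\text{init}} + \dfrac{(i+1)\,(\eta_{\max} - \eta_{\text{init}})}{W}, & 0 \le i < W,\\[6pt]
\eta_{\text{end}} + \dfrac{1}{2}\,(\eta_{\max} - \eta_{\text{end}})\left(1 + \cos\dfrac{\pi\,(i - W)}{T - W}\right), & W \le i < T.
\end{cases}
```

At $i = W$ the cosine term equals 1, so the rate is exactly $\eta_{\max}$. The last value, at $i = T-1$, is still slightly above $\eta_{\text{end}}$.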

Model training and testing

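Before the actual training, define the training function, load the data, instantiate the model, and define the optimizer and loss function.

First, a brief introduction to loss functions and optimizers:

Loss function: also called the objective function, it measures how far the predictions are from the true values. Deep learning reduces the value of the loss function through repeated iterations. A well-chosen loss function can noticeably improve model performance.

Optimizer: it minimizes the loss function, thereby improving the model during training.

Model inference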

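To run inference, load the model checkpoint. When loading parameters with the load_checkpoint interface, load them into the original network. Do not load them into the training network that wraps the optimizer and loss function.

```python
import numpy as np
from PIL import Image
from mindspore import Tensor, load_checkpoint

# MobileNetV2Backbone, MobileNetV2Head and mobilenet_v2 come from the model code above.
# `config` (image size, backbone_out_channels, num_classes) and `class_en`
# (English class names) are assumed to be defined elsewhere in this tutorial.
CKPT = "save_mobilenetV2_model.ckpt"


def image_process(image):
    """Process one image per time.

    Args:
        image: shape (H, W, C)
    """
    mean = [0.485 * 255, 0.456 * 255, 0.406 * 255]
    std = [0.229 * 255, 0.224 * 255, 0.225 * 255]
    image = (np.array(image) - mean) / std
    image = image.transpose((2, 0, 1))                  # HWC -> CHW
    img_tensor = Tensor(np.array([image], np.float32))  # add the batch dimension
    return img_tensor


def infer_one(network, image_path):
    # PIL's resize takes (width, height); convert to RGB so there are exactly 3 channels.
    image = Image.open(image_path).convert('RGB').resize((config.image_width, config.image_height))
    logits = network(image_process(image))
    pred = np.argmax(logits.asnumpy(), axis=1)[0]
    print(image_path, class_en[pred])


def infer():
    backbone = MobileNetV2Backbone(last_channel=config.backbone_out_channels)
    head = MobileNetV2Head(input_channel=backbone.out_channels, num_classes=config.num_classes)
    network = mobilenet_v2(backbone, head)
    load_checkpoint(CKPT, network)
    network.set_train(False)  # inference mode: BatchNorm uses its moving statistics
    for i in range(91, 100):
        infer_one(network, f'data_en/test/Cardboard/000{i}.jpg')


infer()
```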
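The arithmetic inside `image_process` has three parts. It standardizes each channel, changes the layout from HWC (height, width, channel) to the CHW layout the network expects, and adds a leading batch dimension. The framework-free sketch below reproduces those steps on nested lists, reusing the mean/std constants from the inference code. Its only purpose is to make the data layout explicit.

```python
MEAN = [0.485 * 255, 0.456 * 255, 0.406 * 255]
STD = [0.229 * 255, 0.224 * 255, 0.225 * 255]


def standardize_hwc_to_chw(image):
    """image: H x W x 3 nested list of 0-255 values -> 1 x 3 x H x W nested list."""
    h, w = len(image), len(image[0])
    chw = [[[(image[y][x][c] - MEAN[c]) / STD[c] for x in range(w)] for y in range(h)]
           for c in range(3)]
    return [chw]  # add the batch dimension, like np.array([image]) in image_process


if __name__ == "__main__":
    img = [[[124, 116, 104], [255, 255, 255]]]  # a 1 x 2 RGB image
    batch = standardize_hwc_to_chw(img)
    print(len(batch), len(batch[0]), len(batch[0][0]), len(batch[0][0][0]))  # 1 3 1 2
```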