1. VAE (Variational Autoencoder)

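Sources and recommended reading:

1. Variational Autoencoders (1): So That's What It Is

2. Variational Autoencoders (2): From a Bayesian Perspective

3. Variational Autoencoders (3): Why Does This Work?

4. Variational Autoencoders (4): A One-Step Clustering Scheme

5. Variational Autoencoder = Minimizing the Prior Distribution + Maximizing Mutual Information

6. Variational Autoencoders (6): An Attempt to Understand VAE from a Geometric Perspective

7. GitHub: pytorch-stable-diffusion

8. Generate Images Using Variational Autoencoder (VAE)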

1.1 Introduction

A VAE learns the distribution of the data rather than just a compressed image. By sampling from that distribution and decoding the samples, we can generate new data.

A VAE consists of two parts: the VAE Encoder and the VAE Decoder.

VAE Encoder: maps the input data to a latent space.

The encoder learns parameters $\phi$ that compress the input $x$ into a latent vector $z$. The encoding $z$ is sampled from a Gaussian density whose mean and variance are produced by the encoder with parameters $\phi$.

VAE Decoder: reconstructs the data from the latent space.

The decoder takes the encoding $z$ produced by the encoder as its input. It parameterizes the reconstruction $x'$ with parameters $\theta$, and the output $x'$ is drawn from the learned data distribution.
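The encoder/decoder description above depends on sampling $z$ in a way that stays differentiable. The following is a minimal PyTorch sketch of the reparameterization trick. The toy sizes and the linear layers `enc` and `dec` are hypothetical stand-ins, not Stable Diffusion's architecture. The encoder predicts a mean and a log-variance, the sample is formed as $z = \mu + \sigma \cdot \epsilon$ with $\epsilon \sim \mathcal{N}(0, I)$, and gradients still reach the encoder's parameters.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical toy sizes: 16-dim inputs, 2-dim latent.
x_dim, z_dim = 16, 2
enc = nn.Linear(x_dim, 2 * z_dim)  # toy encoder (parameters phi): predicts mean and log-variance
dec = nn.Linear(z_dim, x_dim)      # toy decoder (parameters theta): maps z back to x'

x = torch.randn(4, x_dim)                  # a batch of 4 inputs
mean, log_var = enc(x).chunk(2, dim=-1)    # each (4, z_dim)
std = torch.exp(0.5 * log_var)             # sigma = exp(log_var / 2)
eps = torch.randn_like(std)                # eps ~ N(0, I): the only random part
z = mean + std * eps                       # reparameterization trick
x_rec = dec(z)                             # reconstruction x'

# Stand-in reconstruction loss (the KL term of a full VAE objective is omitted here).
loss = ((x_rec - x) ** 2).mean()
loss.backward()
print(z.shape, x_rec.shape, enc.weight.grad is not None)
```

Because the randomness is isolated in `eps`, `z` is a deterministic function of `mean` and `std`, and backpropagation can reach `enc`.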

Why use a VAE to convert an image into latent space and then back?

Dimensionality Reduction and Representation Learning

Compact Representation: VAEs map high-dimensional data (like images) to a lower-dimensional latent space. This compact representation captures the essential features and structures of the data.

Feature Extraction: The latent space often reveals meaningful features and patterns, which can be useful for various downstream tasks such as clustering, classification, or visualization.
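Here is what "compact representation" means in numbers. The encoder in section 1.5 maps a (3, H, W) image to a (4, H/8, W/8) latent. This sketch assumes a 512×512 RGB input, chosen only for illustration, and counts the values on each side.

```python
# Number of values in an image vs. its VAE latent.
# Assumption: a 512x512 RGB image; the latent shape (4, H/8, W/8) follows the encoder in section 1.5.
height, width = 512, 512
image_values = 3 * height * width                  # (3, H, W)
latent_values = 4 * (height // 8) * (width // 8)   # (4, H/8, W/8)
ratio = image_values / latent_values

print(image_values, latent_values, ratio)  # 786432 16384 48.0
```

For any H and W divisible by 8, the ratio is 3 · 64 / 4 = 48. Working in latent space therefore means processing 48× fewer values per image.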


Position of the VAE in Stable Diffusion (yellow box on the left):

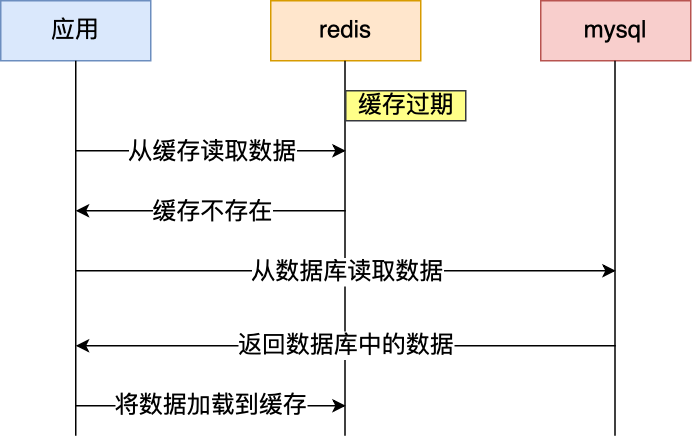

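Role of the VAE in Stable Diffusion:

Forward Diffusion Process: add noise to the image latents

Before adding noise to a clean image, we first convert the clean image into latents with the VAE Encoder. In latent space, we randomly sample noise $\epsilon$ from a standard normal distribution and add this $\epsilon$ to the image latents. This completes the forward diffusion process for the clean image.

Note: because we need to control how much noise is added, we introduce a timestep $t$. Each timestep corresponds to one noise level. $t$ determines the value of $\alpha_t$, the coefficient in the noising formula, and that is how the noise level is controlled.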
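The noising step can be written in closed form, as in DDPM. With $\alpha_t = 1 - \beta_t$ and $\bar\alpha_t = \prod_{s \le t} \alpha_s$, the noised latent is $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$.

The sketch below uses plain Python lists as stand-ins for latents. It assumes a "scaled linear" $\beta$ schedule over 1000 steps, with betas evenly spaced in square-root space between 0.00085 and 0.012. This schedule is commonly used with Stable Diffusion, but treat the numbers as illustrative.

```python
import math
import random

# Assumed "scaled linear" beta schedule: betas evenly spaced in sqrt-space, then squared.
num_steps, beta_start, beta_end = 1000, 0.00085, 0.012
step = (math.sqrt(beta_end) - math.sqrt(beta_start)) / (num_steps - 1)
betas = [(math.sqrt(beta_start) + i * step) ** 2 for i in range(num_steps)]

# alpha_t = 1 - beta_t; alpha_bar_t is the running product of the alphas.
alpha_bars = []
prod = 1.0
for beta in betas:
    prod *= 1.0 - beta
    alpha_bars.append(prod)


def add_noise(x0, t, rng):
    """Closed-form forward diffusion: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]  # eps ~ N(0, I)
    a = alpha_bars[t]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps


rng = random.Random(0)
latent = [0.5, -1.0, 2.0, 0.0]          # hypothetical toy "image latent"
x_early, _ = add_noise(latent, 10, rng)  # small t: mostly signal
x_late, _ = add_noise(latent, 999, rng)  # large t: almost pure noise
print(alpha_bars[10], alpha_bars[999])
```

As $t$ grows, $\bar\alpha_t$ shrinks toward 0, so the weight on the clean latent falls and the weight on the noise rises.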

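Reverse Diffusion Process: reconstruct the denoised image latents into an image

After the U-Net predicts the noise in the noisy image latent, that predicted noise (scaled by the scheduler's coefficients) is removed from the latent to obtain a less noisy image latent. After many rounds of denoising, the VAE Decoder reconstructs the fully denoised image latents into a clean image.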
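Removing the predicted noise uses the same coefficients that were used to add it. Rearranging the DDPM noising formula $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$ gives an estimate of the clean latent:

$$\hat{x}_0 = \frac{x_t - \sqrt{1-\bar\alpha_t}\,\hat\epsilon}{\sqrt{\bar\alpha_t}}$$

The sketch below checks this with a hypothetical perfect noise predictor (it reuses the true $\epsilon$) and an assumed $\bar\alpha_t$. A real sampler uses the U-Net's noise prediction and moves only partway toward $\hat{x}_0$ at each step.

```python
import math
import random


def predict_x0(xt, eps_hat, alpha_bar):
    """Invert x_t = sqrt(a) * x0 + sqrt(1 - a) * eps for x0, given a noise estimate eps_hat."""
    return [(x - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar) for x, e in zip(xt, eps_hat)]


rng = random.Random(42)
alpha_bar = 0.3                  # assumed alpha_bar_t at some intermediate timestep
x0 = [0.5, -1.0, 2.0, 0.0]       # hypothetical clean latent
eps = [rng.gauss(0.0, 1.0) for _ in x0]
xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e for x, e in zip(x0, eps)]

# With a perfect noise prediction, the clean latent is recovered up to float rounding.
x0_hat = predict_x0(xt, eps, alpha_bar)
print(x0_hat)
```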

1.2 Attention

For details, see SelfAttention in U-Net for text-to-image.

import math

import torch
from torch import nn
from torch.nn import functional as F


class SelfAttention(nn.Module):
    def __init__(self, n_heads, d_embed, in_proj_bias=True, out_proj_bias=True):
        super().__init__()
        # This combines the Wq, Wk and Wv matrices into one matrix
        self.in_proj = nn.Linear(d_embed, 3 * d_embed, bias=in_proj_bias)
        # This one represents the Wo matrix
        self.out_proj = nn.Linear(d_embed, d_embed, bias=out_proj_bias)
        self.n_heads = n_heads
        self.d_head = d_embed // n_heads

    def forward(self, x, causal_mask=False):
        # x: (Batch_Size, Seq_Len, Dim)
        input_shape = x.shape
        batch_size, sequence_length, d_embed = input_shape
        # (Batch_Size, Seq_Len, H, Dim / H)
        interim_shape = (batch_size, sequence_length, self.n_heads, self.d_head)

        # (Batch_Size, Seq_Len, Dim) -> (Batch_Size, Seq_Len, Dim * 3) -> 3 tensors of shape (Batch_Size, Seq_Len, Dim)
        q, k, v = self.in_proj(x).chunk(3, dim=-1)

        # (Batch_Size, Seq_Len, Dim) -> (Batch_Size, Seq_Len, H, Dim / H) -> (Batch_Size, H, Seq_Len, Dim / H)
        q = q.view(interim_shape).transpose(1, 2)
        k = k.view(interim_shape).transpose(1, 2)
        v = v.view(interim_shape).transpose(1, 2)

        # (Batch_Size, H, Seq_Len, Dim / H) @ (Batch_Size, H, Dim / H, Seq_Len) -> (Batch_Size, H, Seq_Len, Seq_Len)
        weight = q @ k.transpose(-1, -2)

        if causal_mask:
            # Mask where the upper triangle (above the principal diagonal) is True
            mask = torch.ones_like(weight, dtype=torch.bool).triu(1)
            # Fill the upper triangle with -inf so softmax gives those positions zero weight
            weight.masked_fill_(mask, -torch.inf)

        # Divide by sqrt(d_k), where d_k = Dim / H
        # (Batch_Size, H, Seq_Len, Seq_Len) -> (Batch_Size, H, Seq_Len, Seq_Len)
        weight /= math.sqrt(self.d_head)

        # Normalize over the key dimension: (Batch_Size, H, Seq_Len, Seq_Len)
        weight = F.softmax(weight, dim=-1)

        # (Batch_Size, H, Seq_Len, Seq_Len) @ (Batch_Size, H, Seq_Len, Dim / H) -> (Batch_Size, H, Seq_Len, Dim / H)
        output = weight @ v

        # (Batch_Size, H, Seq_Len, Dim / H) -> (Batch_Size, Seq_Len, H, Dim / H)
        output = output.transpose(1, 2)

        # (Batch_Size, Seq_Len, H, Dim / H) -> (Batch_Size, Seq_Len, Dim)
        output = output.reshape(input_shape)

        # (Batch_Size, Seq_Len, Dim) -> (Batch_Size, Seq_Len, Dim)
        output = self.out_proj(output)

        # (Batch_Size, Seq_Len, Dim)
        return output
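The core of the class above computes $\mathrm{softmax}(QK^\top/\sqrt{d_k})\,V$, with an optional causal mask. As a sanity check, the single-head sketch below compares a hand-written version of that core against PyTorch's built-in `torch.nn.functional.scaled_dot_product_attention` (available in PyTorch 2.0 and later). It leaves out the `in_proj`/`out_proj` projections and uses toy tensor sizes.

```python
import math

import torch
from torch.nn import functional as F

torch.manual_seed(0)
# (Batch_Size, H, Seq_Len, Dim / H) with toy sizes
q, k, v = (torch.randn(2, 1, 5, 8) for _ in range(3))


def manual_attention(q, k, v, causal):
    weight = q @ k.transpose(-1, -2)
    if causal:
        # True above the main diagonal: position i must not attend to positions j > i
        mask = torch.ones_like(weight, dtype=torch.bool).triu(1)
        weight = weight.masked_fill(mask, float("-inf"))
    weight = weight / math.sqrt(q.shape[-1])
    return F.softmax(weight, dim=-1) @ v


for causal in (False, True):
    expected = F.scaled_dot_product_attention(q, k, v, is_causal=causal)
    assert torch.allclose(manual_attention(q, k, v, causal), expected, atol=1e-5)
print("manual attention matches scaled_dot_product_attention")
```

Applying the mask before the scaling, as the class does, is harmless: $-\infty$ divided by a positive constant stays $-\infty$.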

1.3 VAE_AttentionBlock (GN+SelfAttention)

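In image-based VAEs, self-attention lets every spatial position attend to every other position. This captures long-range dependencies that small convolution kernels cannot see directly. It can help the model focus on critical regions and improve the quality of generated images, especially in complex scenes.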

from torch import nn

from attention import SelfAttention  # SelfAttention from section 1.2 (attention.py)


class VAE_AttentionBlock(nn.Module):
    def __init__(self, channels):
        super().__init__()
        self.groupnorm = nn.GroupNorm(32, channels)
        # A single attention head whose embedding size equals the channel count
        self.attention = SelfAttention(1, channels)

    def forward(self, x):
        # x: (Batch_Size, Features, Height, Width)
        residue = x

        # (Batch_Size, Features, Height, Width) -> (Batch_Size, Features, Height, Width)
        x = self.groupnorm(x)

        n, c, h, w = x.shape

        # (Batch_Size, Features, Height, Width) -> (Batch_Size, Features, Height * Width)
        x = x.view((n, c, h * w))

        # (Batch_Size, Features, Height * Width) -> (Batch_Size, Height * Width, Features).
        # Each pixel becomes a token of size "Features"; the sequence length is "Height * Width".
        x = x.transpose(-1, -2)

        # Perform self-attention WITHOUT a causal mask: every pixel may attend to every other pixel
        # (Batch_Size, Height * Width, Features) -> (Batch_Size, Height * Width, Features)
        x = self.attention(x)

        # (Batch_Size, Height * Width, Features) -> (Batch_Size, Features, Height * Width)
        x = x.transpose(-1, -2)

        # (Batch_Size, Features, Height * Width) -> (Batch_Size, Features, Height, Width)
        x = x.view((n, c, h, w))

        # Residual connection:
        # (Batch_Size, Features, Height, Width) + (Batch_Size, Features, Height, Width) -> (Batch_Size, Features, Height, Width)
        x += residue

        # (Batch_Size, Features, Height, Width)
        return x
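The key step in `VAE_AttentionBlock` is treating each pixel as one token. The tensor goes from (N, C, H, W) to (N, H·W, C) for attention, then back again. The small sketch below uses toy sizes and applies no attention. It checks that this round trip is lossless, and that token `i` holds the channel vector of pixel `(i // W, i % W)`.

```python
import torch

n, c, h, w = 1, 3, 2, 4
x = torch.arange(n * c * h * w, dtype=torch.float32).view(n, c, h, w)

tokens = x.view(n, c, h * w).transpose(-1, -2)    # (N, H*W, C): one token per pixel
back = tokens.transpose(-1, -2).view(n, c, h, w)  # (N, C, H, W) again

row, col = 1, 2
token_index = row * w + col                        # row-major pixel index
print(tokens.shape, torch.equal(back, x), torch.equal(tokens[0, token_index], x[0, :, row, col]))
# torch.Size([1, 8, 3]) True True
```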

1.4 VAE_ResidualBlock (GN+Conv+Residual_Layer)

Residual blocks mitigate the vanishing gradient problem, because the skip connection passes gradients straight back to earlier layers. They also improve representational power, speed up convergence, and make it possible to build deeper and more complex architectures.

from torch import nn
from torch.nn import functional as F


class VAE_ResidualBlock(nn.Module):
    def __init__(self, in_channels, out_channels):
        super().__init__()
        self.groupnorm_1 = nn.GroupNorm(32, in_channels)
        self.conv_1 = nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1)
        self.groupnorm_2 = nn.GroupNorm(32, out_channels)
        self.conv_2 = nn.Conv2d(out_channels, out_channels, kernel_size=3, padding=1)

        # The skip path must match the output channel count:
        # identity if the channels already agree, otherwise a 1x1 convolution projection
        if in_channels == out_channels:
            self.residual_layer = nn.Identity()
        else:
            self.residual_layer = nn.Conv2d(in_channels, out_channels, kernel_size=1, padding=0)

    def forward(self, x):
        # x: (Batch_Size, In_Channels, Height, Width)
        residue = x

        # (Batch_Size, In_Channels, Height, Width) -> (Batch_Size, In_Channels, Height, Width)
        x = self.groupnorm_1(x)
        x = F.silu(x)

        # (Batch_Size, In_Channels, Height, Width) -> (Batch_Size, Out_Channels, Height, Width)
        x = self.conv_1(x)

        # (Batch_Size, Out_Channels, Height, Width) -> (Batch_Size, Out_Channels, Height, Width)
        x = self.groupnorm_2(x)
        x = F.silu(x)
        x = self.conv_2(x)

        # Add the (possibly projected) input: (Batch_Size, Out_Channels, Height, Width)
        return x + self.residual_layer(residue)
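One intuition for why residual blocks ease optimization comes from the skip path `x + F(x)`. If the residual branch outputs zeros, the block is an exact identity, so adding blocks cannot make the starting mapping harder to represent. The sketch below uses a hypothetical `TinyResidual` module (not from the repo) whose convolution is zero-initialized to show this. It also checks that the input still receives gradient, through the skip connection alone.

```python
import torch
from torch import nn


class TinyResidual(nn.Module):
    """Hypothetical minimal residual block: x + conv(silu(x))."""

    def __init__(self, channels):
        super().__init__()
        self.conv = nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        nn.init.zeros_(self.conv.weight)  # the residual branch starts as all zeros
        nn.init.zeros_(self.conv.bias)

    def forward(self, x):
        return x + self.conv(nn.functional.silu(x))


torch.manual_seed(0)
block = TinyResidual(4)
x = torch.randn(2, 4, 8, 8, requires_grad=True)
y = block(x)
y.sum().backward()
print(torch.equal(y, x), torch.allclose(x.grad, torch.ones_like(x)))  # True True
```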

1.5 VAE_Encoder (Conv+ResidualBlock+AttentionBlock+GN)

import torch
from torch import nn
from torch.nn import functional as F

from decoder import VAE_AttentionBlock, VAE_ResidualBlock


class VAE_Encoder(nn.Sequential):
    def __init__(self):
        super().__init__(
            # Initial convolutional layer: (Batch_Size, 3, Height, Width) -> (Batch_Size, 128, Height, Width)
            nn.Conv2d(3, 128, kernel_size=3, padding=1),
            # Two residual blocks to enhance the feature representation without changing spatial dimensions
            VAE_ResidualBlock(128, 128),
            VAE_ResidualBlock(128, 128),
            # Downsample the spatial dimensions by half: (Batch_Size, 128, Height, Width) -> (Batch_Size, 128, Height / 2, Width / 2)
            nn.Conv2d(128, 128, kernel_size=3, stride=2, padding=0),
            # Residual block to increase the feature dimension: (Batch_Size, 128, Height / 2, Width / 2) -> (Batch_Size, 256, Height / 2, Width / 2)
            VAE_ResidualBlock(128, 256),
            # Another residual block to enhance features
            VAE_ResidualBlock(256, 256),
            # Downsample by half again: (Batch_Size, 256, Height / 2, Width / 2) -> (Batch_Size, 256, Height / 4, Width / 4)
            nn.Conv2d(256, 256, kernel_size=3, stride=2, padding=0),
            # Residual block to increase the feature dimension: (Batch_Size, 256, Height / 4, Width / 4) -> (Batch_Size, 512, Height / 4, Width / 4)
            VAE_ResidualBlock(256, 512),
            # Additional residual block to enhance features
            VAE_ResidualBlock(512, 512),
            # Downsample by half again: (Batch_Size, 512, Height / 4, Width / 4) -> (Batch_Size, 512, Height / 8, Width / 8)
            nn.Conv2d(512, 512, kernel_size=3, stride=2, padding=0),
            # Series of residual blocks to refine features at the lowest spatial resolution
            VAE_ResidualBlock(512, 512),
            VAE_ResidualBlock(512, 512),
            VAE_ResidualBlock(512, 512),
            # Attention block to capture long-range dependencies in the feature map
            VAE_AttentionBlock(512),
            # Final residual block
            VAE_ResidualBlock(512, 512),
            # Normalization layer to standardize the feature maps
            nn.GroupNorm(32, 512),
            # Activation function for non-linearity
            nn.SiLU(),
            # Final convolutional layers to reduce the feature dimension to (Batch_Size, 8, Height / 8, Width / 8)
            nn.Conv2d(512, 8, kernel_size=3, padding=1),
            nn.Conv2d(8, 8, kernel_size=1, padding=0),
        )

    def forward(self, x, noise):
        # x: (Batch_Size, Channel, Height, Width)
        # noise: (Batch_Size, 4, Height / 8, Width / 8), sampled from a standard normal distribution
        for module in self:
            if getattr(module, "stride", None) == (2, 2):  # Check whether the module is a downsampling layer
                # Asymmetric padding: (Padding_Left, Padding_Right, Padding_Top, Padding_Bottom)
                x = F.pad(x, (0, 1, 0, 1))
            x = module(x)  # Apply the module to the input tensor

        # Split the output into mean and log_variance: (Batch_Size, 8, Height / 8, Width / 8) -> two tensors of (Batch_Size, 4, Height / 8, Width / 8)
        mean, log_variance = torch.chunk(x, 2, dim=1)

        # Clamp the log-variance so that exp() stays numerically stable: (Batch_Size, 4, Height / 8, Width / 8)
        log_variance = torch.clamp(log_variance, -30, 20)

        # Variance and standard deviation from the log-variance: (Batch_Size, 4, Height / 8, Width / 8)
        variance = log_variance.exp()
        stdev = variance.sqrt()

        # Reparameterization trick: z = mean + stdev * noise
        x = mean + stdev * noise

        # Scale the latents by Stable Diffusion's constant factor 0.18215 (VAE_Decoder divides it back out)
        x *= 0.18215

        return x
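The `F.pad(x, (0, 1, 0, 1))` before each stride-2 convolution (kernel 3, `padding=0`) is what makes each downsampling step exactly halve an even side length. The standard convolution output-size formula is $\lfloor (n + p - k)/s \rfloor + 1$, where $p$ is the total padding along an axis. The stdlib sketch below uses it to trace one side length through the encoder's three downsampling convolutions. The 512-pixel input is an illustrative assumption.

```python
def conv_out(size, kernel=3, stride=2, total_padding=1):
    """Output length of a convolution: floor((size + total_padding - kernel) / stride) + 1."""
    return (size + total_padding - kernel) // stride + 1


side = 512  # assumed input height/width
sizes = [side]
for _ in range(3):  # the encoder's three stride-2 convolutions, each preceded by padding (0, 1)
    sizes.append(conv_out(sizes[-1]))
print(sizes)  # [512, 256, 128, 64]

# Without the extra padding, the first stride-2 convolution would give 255 instead of 256.
print(conv_out(512, total_padding=0))  # 255
```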

1.6 VAE_Decoder (Conv+ResidualBlock+AttentionBlock+UpSample+GN)

import torch
from torch import nn
from torch.nn import functional as F

from attention import SelfAttention


class VAE_Decoder(nn.Sequential):
    def __init__(self):
        super().__init__(
            # (Batch_Size, 4, Height / 8, Width / 8) -> (Batch_Size, 4, Height / 8, Width / 8)
            nn.Conv2d(4, 4, kernel_size=1, padding=0),
            # (Batch_Size, 4, Height / 8, Width / 8) -> (Batch_Size, 512, Height / 8, Width / 8)
            nn.Conv2d(4, 512, kernel_size=3, padding=1),
            # (Batch_Size, 512, Height / 8, Width / 8) -> (Batch_Size, 512, Height / 8, Width / 8)
            VAE_ResidualBlock(512, 512),
            # (Batch_Size, 512, Height / 8, Width / 8) -> (Batch_Size, 512, Height / 8, Width / 8)
            VAE_AttentionBlock(512),
            # (Batch_Size, 512, Height / 8, Width / 8) -> (Batch_Size, 512, Height / 8, Width / 8)
            VAE_ResidualBlock(512, 512),
            VAE_ResidualBlock(512, 512),
            VAE_ResidualBlock(512, 512),
            VAE_ResidualBlock(512, 512),
            # Repeats the rows and columns of the data by scale_factor (like when you resize an image by doubling its size).
            # (Batch_Size, 512, Height / 8, Width / 8) -> (Batch_Size, 512, Height / 4, Width / 4)
            nn.Upsample(scale_factor=2),
            # (Batch_Size, 512, Height / 4, Width / 4) -> (Batch_Size, 512, Height / 4, Width / 4)
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            # (Batch_Size, 512, Height / 4, Width / 4) -> (Batch_Size, 512, Height / 4, Width / 4)
            VAE_ResidualBlock(512, 512),
            VAE_ResidualBlock(512, 512),
            VAE_ResidualBlock(512, 512),
            # (Batch_Size, 512, Height / 4, Width / 4) -> (Batch_Size, 512, Height / 2, Width / 2)
            nn.Upsample(scale_factor=2),
            # (Batch_Size, 512, Height / 2, Width / 2) -> (Batch_Size, 512, Height / 2, Width / 2)
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            # (Batch_Size, 512, Height / 2, Width / 2) -> (Batch_Size, 256, Height / 2, Width / 2)
            VAE_ResidualBlock(512, 256),
            # (Batch_Size, 256, Height / 2, Width / 2) -> (Batch_Size, 256, Height / 2, Width / 2)
            VAE_ResidualBlock(256, 256),
            VAE_ResidualBlock(256, 256),
            # (Batch_Size, 256, Height / 2, Width / 2) -> (Batch_Size, 256, Height, Width)
            nn.Upsample(scale_factor=2),
            # (Batch_Size, 256, Height, Width) -> (Batch_Size, 256, Height, Width)
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            # (Batch_Size, 256, Height, Width) -> (Batch_Size, 128, Height, Width)
            VAE_ResidualBlock(256, 128),
            # (Batch_Size, 128, Height, Width) -> (Batch_Size, 128, Height, Width)
            VAE_ResidualBlock(128, 128),
            VAE_ResidualBlock(128, 128),
            # (Batch_Size, 128, Height, Width) -> (Batch_Size, 128, Height, Width)
            nn.GroupNorm(32, 128),
            # (Batch_Size, 128, Height, Width) -> (Batch_Size, 128, Height, Width)
            nn.SiLU(),
            # (Batch_Size, 128, Height, Width) -> (Batch_Size, 3, Height, Width)
            nn.Conv2d(128, 3, kernel_size=3, padding=1),
        )

    def forward(self, x):
        # x: (Batch_Size, 4, Height / 8, Width / 8)

        # Remove the scaling added by the Encoder.
        x /= 0.18215

        for module in self:
            x = module(x)

        # (Batch_Size, 3, Height, Width)
        return x
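`nn.Upsample(scale_factor=2)` uses nearest-neighbour interpolation by default, which is the "repeat the rows and columns" behaviour described in the decoder comments. The small sketch below checks this on a 2×2 example against `repeat_interleave` along the height and width axes.

```python
import torch
from torch import nn

x = torch.tensor([[[[1.0, 2.0],
                    [3.0, 4.0]]]])                 # (Batch_Size=1, Channels=1, 2, 2)
up = nn.Upsample(scale_factor=2)(x)                # default mode="nearest" -> (1, 1, 4, 4)
repeated = x.repeat_interleave(2, dim=2).repeat_interleave(2, dim=3)

print(up[0, 0])
print(torch.equal(up, repeated))  # True
```

Each upsampling therefore adds no new information by itself. The 3×3 convolution that follows it in the decoder is what learns to refine the enlarged feature map.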
