Project scenario:

Background: periodically scrape a page on a foreign website, translate the parts I need into Chinese, and save them to an Excel file.

If you haven't learned the following yet, it's worth reading first:

Basic web scraping:

[Web scraping] Scraping web page data with requests and BeautifulSoup (CSDN blog)

Problem 1:


I took the example from the basic scraping tutorial and swapped in my own URL, and the script no longer worked.

Cause 1:

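After the program has been running for a while, it starts failing with HTTP Error 403: Forbidden. This means the server is refusing the request. There are two common reasons. First, by default requests identifies itself with a `python-requests/<version>` User-Agent, which many sites block outright. Second, sending a large number of GET requests in a short time makes the server treat the traffic as an attack and reject it, and it may block your IP.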
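To see how a bare request identifies itself, you can ask requests for its default User-Agent. The sketch below prints it and then builds a browser-like header dict to send instead. The Chrome UA string is only an illustrative example, and `browser_headers` is a hypothetical helper.

```python
import requests

# The User-Agent requests sends when you don't set one, e.g. "python-requests/2.31.0"
default_ua = requests.utils.default_user_agent()
print("Default User-Agent:", default_ua)


def browser_headers(user_agent: str) -> dict:
    """Build a minimal header dict that overrides the default User-Agent."""
    return {"User-Agent": user_agent}


# Illustrative browser UA string (an example, not a recommendation)
headers = browser_headers(
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"
)
print(headers)
```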

Solution 1:

I won't go into detail here. This article explains it well:

Fixing 403 Forbidden errors in Python scrapers (still 403 after adding request headers) (CSDN blog)

Here is my version:

```python
import random
import time
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup

url = "https://pubmed.ncbi.nlm.nih.gov/"

# List of User-Agent strings to rotate through
my_headers = [
    "Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/35.0.1916.153 Safari/537.36",
    "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:30.0) Gecko/20100101 Firefox/30.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.75.14 (KHTML, like Gecko) Version/7.0.3 Safari/537.75.14",
    "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.2; Win64; x64; Trident/6.0)",
    'Mozilla/5.0 (Windows; U; Windows NT 5.1; it; rv:1.8.1.11) Gecko/20071127 Firefox/2.0.0.11',
    'Opera/9.25 (Windows NT 5.1; U; en)',
    'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)',
    'Mozilla/5.0 (compatible; Konqueror/3.5; Linux) KHTML/3.5.5 (like Gecko) (Kubuntu)',
    'Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.8.0.12) Gecko/20070731 Ubuntu/dapper-security Firefox/1.5.0.12',
    'Lynx/2.8.5rel.1 libwww-FM/2.14 SSL-MM/1.4.1 GNUTLS/1.2.9',
    "Mozilla/5.0 (X11; Linux i686) AppleWebKit/535.7 (KHTML, like Gecko) Ubuntu/11.04 Chromium/16.0.912.77 Chrome/16.0.912.77 Safari/535.7",
    "Mozilla/5.0 (X11; Ubuntu; Linux i686; rv:10.0) Gecko/20100101 Firefox/10.0"
]

try:
    with requests.Session() as session:
        # Short pause before the request (make it longer if you send many requests)
        t = 0.1
        time.sleep(t)

        # Randomly pick an IP (proxy) and a header from the lists
        # proxy = random.choice(proxy_list)  # proxy_list is not defined in this example
        header = random.choice(my_headers)
        headers = {"User-Agent": header}

        response = session.get(url, headers=headers, timeout=10)
        response.raise_for_status()  # Raises HTTPError for 4xx/5xx responses
        print(f"Response status using {header}: {response.status_code}")
        # print(response.content)  # print the raw page content

        # Parse the HTML content with BeautifulSoup
        soup = BeautifulSoup(response.content, "html.parser")

        # Extract information from each 'li' element within 'items-list'
        paper_items = soup.select("ul.items-list li.full-docsum")
        for item in paper_items:
            link_tag = item.find("a", href=True)
            if link_tag is None:  # skip items that have no link
                continue
            paper_link = link_tag["href"]  # href attribute of the <a> tag
            paper_title = link_tag.text.strip()  # text of the <a> tag
            print(f"Paper title: {paper_title}")
            # urljoin avoids the double slash that f"{url}{paper_link}" produces for "/12345/"
            print(f"Link: {urljoin(url, paper_link)}")
            print()

        # Alternative: try every User-Agent in turn
        # for header in my_headers:
        #     headers = {"User-Agent": header}
        #     response = session.get(url, headers=headers, timeout=10)
        #     response.raise_for_status()  # Raises HTTPError for bad responses
        #     print(f"Response status using {header}: {response.status_code}")

except requests.exceptions.HTTPError as errh:
    print(f"HTTP error occurred: {errh}")
except requests.exceptions.RequestException as err:
    print(f"Request error occurred: {err}")
```
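The script above sends a single request. If you scale it up to many pages, a session that retries transient errors and pauses longer between attempts is gentler on the server than firing requests back to back. The sketch below uses `HTTPAdapter` from requests and `Retry` from urllib3, and it assumes urllib3 1.26 or newer, where the parameter is called `allowed_methods`. The retry count and the status codes are my own choices, and `make_polite_session` is a hypothetical helper. It deliberately does not retry 403, because repeating a forbidden request rarely helps.

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry


def make_polite_session(total_retries: int = 3, backoff: float = 1.0) -> requests.Session:
    """Return a Session that retries transient failures with exponential backoff."""
    retry = Retry(
        total=total_retries,
        backoff_factor=backoff,  # the pause grows exponentially between retries
        status_forcelist=[429, 500, 502, 503, 504],  # 403 is intentionally not retried
        allowed_methods=["GET"],  # only retry idempotent GETs
    )
    adapter = HTTPAdapter(max_retries=retry)
    session = requests.Session()
    session.mount("https://", adapter)
    session.mount("http://", adapter)
    return session
```

Use it in place of `requests.Session()`, and keep passing `headers=` and a `timeout=` to `session.get(...)`.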

Problem 2:

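The third-party `translate` package (it is not part of Python's standard library) caused lots of problems. It was slow, it translated multi-word phrases inaccurately, and it crashed within fewer than 10 calls.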

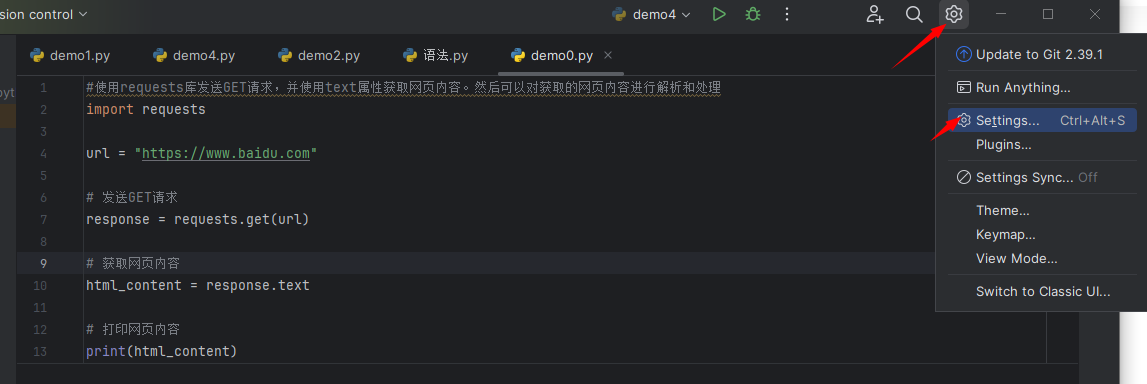

Solution 2:

Folks, I've already found the material for you, so just work through it:

[Notes] Python3 | (1) Translating text with Python: tutorial, code and test results (third-party `translate` library and Tencent API) (CSDN blog)

Calling the Tencent Cloud translation API from Python (how to get a Tencent translation API key) (CSDN blog)
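Whichever translation backend you choose, wrapping each call in a small retry loop stops one failed request from killing the whole run. Below is a backend-agnostic sketch. `translate` can be any function that takes a string and raises an exception on failure, for example one that calls `client.TextTranslate(req)` and returns `resp.TargetText`. `translate_with_retry` is a hypothetical helper. The retry count and delay are guesses, not values from Tencent's documentation.

```python
import time
from typing import Callable, Optional


def translate_with_retry(
    translate: Callable[[str], str],
    text: str,
    retries: int = 3,
    delay: float = 1.0,
) -> Optional[str]:
    """Call a translation function, retrying on any exception.

    Returns the translation, or None if every attempt failed.
    """
    for attempt in range(1, retries + 1):
        try:
            return translate(text)
        except Exception as err:  # broad on purpose: the sketch doesn't know the backend
            print(f"Attempt {attempt}/{retries} failed: {err}")
            if attempt < retries:
                time.sleep(delay * attempt)  # wait a little longer after each failure
    return None
```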

As for saving the results to Excel, you can have an AI (GPT) write that part for you.
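If you would rather not depend on pandas and openpyxl, one alternative is a CSV file written with the `utf-8-sig` encoding. The byte-order mark it writes helps Excel recognize the file as UTF-8, so Chinese titles display correctly. The sketch appends rows on every run and writes the header only when the file is new, which suits a scheduled job. `append_rows_csv`, the file name, and the column names are all hypothetical.

```python
import csv
import os


def append_rows_csv(path: str, rows: list[dict], fieldnames: list[str]) -> None:
    """Append dict rows to a CSV that Excel can open; write the header only once."""
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    # Only a new file gets the BOM; a second BOM in the middle of the file would show up as junk
    encoding = "utf-8-sig" if is_new else "utf-8"
    with open(path, "a", newline="", encoding=encoding) as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        if is_new:
            writer.writeheader()
        writer.writerows(rows)
```

For example: `append_rows_csv("pubmed.csv", data, ["Paper Title", "Chinese Title", "Link"])`, where `data` is the list of dicts built while scraping.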

Full code for everything above:

Fill in your own Tencent Cloud SecretId and SecretKey, and your own URL.
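Pasting SecretId and SecretKey directly into the script makes them easy to leak if you share it. You can read them from environment variables instead. The variable names `TENCENTCLOUD_SECRET_ID` and `TENCENTCLOUD_SECRET_KEY` are my own choice. I believe they match the names the Tencent Cloud SDK's environment-variable credential provider looks for, but check the SDK docs. `load_tencent_keys` is a hypothetical helper.

```python
import os


def load_tencent_keys() -> tuple[str, str]:
    """Read Tencent Cloud API keys from environment variables (variable names assumed)."""
    secret_id = os.environ.get("TENCENTCLOUD_SECRET_ID")
    secret_key = os.environ.get("TENCENTCLOUD_SECRET_KEY")
    if not secret_id or not secret_key:
        raise RuntimeError(
            "Set TENCENTCLOUD_SECRET_ID and TENCENTCLOUD_SECRET_KEY before running."
        )
    return secret_id, secret_key
```

Then `SecretId, SecretKey = load_tencent_keys()` replaces the two hard-coded lines. If the script runs from Task Scheduler, the variables must be set for the account the task runs under.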

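For the part that extracts data from the page, paste that page's HTML source into an AI (GPT) and let it adapt the code for you. Don't just copy mine as-is.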

```python
import random
import time

import pandas as pd  # df.to_excel(..., engine="openpyxl") also needs openpyxl installed
import requests
from bs4 import BeautifulSoup
from requests.exceptions import RequestException
from tencentcloud.common import credential
from tencentcloud.common.exception.tencent_cloud_sdk_exception import TencentCloudSDKException
from tencentcloud.common.profile.client_profile import ClientProfile
from tencentcloud.common.profile.http_profile import HttpProfile
from tencentcloud.tmt.v20180321 import tmt_client, models

# Replace with your own Tencent Cloud API keys (never publish them)
SecretId = "xxxxxxxxxxxxxxxxxxxxxxxxxx"
SecretKey = "xxxxxxxxxxxxxxxxxxxxxxxxx"

url = "https://pubmed.ncbi.nlm.nih.gov/"

my_headers = [
    "Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/35.0.1916.153 Safari/537.36",
    "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:30.0) Gecko/20100101 Firefox/30.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.75.14 (KHTML, like Gecko) Version/7.0.3 Safari/537.75.14",
    "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.2; Win64; x64; Trident/6.0)",
    'Mozilla/5.0 (Windows; U; Windows NT 5.1; it; rv:1.8.1.11) Gecko/20071127 Firefox/2.0.0.11',
    'Opera/9.25 (Windows NT 5.1; U; en)',
    'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)',
    'Mozilla/5.0 (compatible; Konqueror/3.5; Linux) KHTML/3.5.5 (like Gecko) (Kubuntu)',
    'Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.8.0.12) Gecko/20070731 Ubuntu/dapper-security Firefox/1.5.0.12',
    'Lynx/2.8.5rel.1 libwww-FM/2.14 SSL-MM/1.4.1 GNUTLS/1.2.9',
    "Mozilla/5.0 (X11; Linux i686) AppleWebKit/535.7 (KHTML, like Gecko) Ubuntu/11.04 Chromium/16.0.912.77 Chrome/16.0.912.77 Safari/535.7",
    "Mozilla/5.0 (X11; Ubuntu; Linux i686; rv:10.0) Gecko/20100101 Firefox/10.0"
]
```

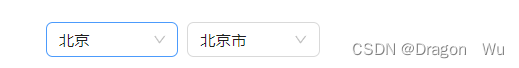

```python
from urllib.parse import urljoin  # joins relative hrefs onto the base URL


class Translator:
    """Thin wrapper around Tencent Cloud Machine Translation (TextTranslate)."""

    def __init__(self, from_lang, to_lang):
        self.from_lang = from_lang
        self.to_lang = to_lang
        # Build the client once instead of on every translate() call
        cred = credential.Credential(SecretId, SecretKey)
        http_profile = HttpProfile()
        http_profile.endpoint = "tmt.tencentcloudapi.com"
        client_profile = ClientProfile()
        client_profile.httpProfile = http_profile
        self.client = tmt_client.TmtClient(cred, "ap-beijing", client_profile)

    def translate(self, text):
        try:
            req = models.TextTranslateRequest()
            req.SourceText = text
            req.Source = self.from_lang
            req.Target = self.to_lang
            req.ProjectId = 0
            resp = self.client.TextTranslate(req)
            return resp.TargetText
        except TencentCloudSDKException as err:
            # Return readable text instead of the exception object,
            # so the Excel cell shows why the translation is missing
            print(f"Translation failed for {text!r}: {err}")
            return f"[translation failed: {err}]"


def fetch_and_translate_papers(url, user_agents):
    try:
        with requests.Session() as session:
            t = 0.1
            time.sleep(t)
            translator = Translator(from_lang="en", to_lang="zh")
            header = random.choice(user_agents)
            headers = {"User-Agent": header}
            response = session.get(url, headers=headers, timeout=10)
            response.raise_for_status()
            print(f"Response status using {header}: {response.status_code}")

            soup = BeautifulSoup(response.content, "html.parser")

            # Rows to store in the DataFrame
            data = []
            paper_items = soup.select("ul.items-list li.full-docsum")
            for item in paper_items:
                link_tag = item.find("a", href=True)
                if link_tag is None:  # skip items that have no link
                    continue
                paper_link = urljoin(url, link_tag["href"])  # absolute link, no double slash
                paper_title = link_tag.text.strip()  # text of the <a> tag
                chinese_paper_title = translator.translate(paper_title)
                time.sleep(0.2)  # pause between API calls; the API enforces a per-second limit
                print(f"Paper Title: {paper_title}")
                print(f"Chinese title: {chinese_paper_title}")
                print(f"Link: {paper_link}")
                print()
                data.append({
                    "Paper Title": paper_title,
                    "Chinese Title": chinese_paper_title,
                    "Link": paper_link,
                })

            # Create a DataFrame and save it to Excel (overwrites the file on each run)
            df = pd.DataFrame(data)
            file_name = "pubmed.xlsx"
            df.to_excel(file_name, index=False, engine="openpyxl")
            print(f"Data saved to {file_name}")
    except RequestException as e:
        print(f"Error fetching {url}: {e}")


# Call the function
fetch_and_translate_papers(url, my_headers)
```
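Once this runs on a schedule, two things add up. `df.to_excel("pubmed.xlsx", ...)` rewrites the whole file every run, and the page may list the same papers again. Below is a minimal sketch that keeps only papers you haven't saved yet, keyed by the link column. `filter_new_rows` is a hypothetical helper. `seen_links` would come from your previous output, for example `set(pd.read_excel(file_name)["Link"])` when the file already exists. Pass `key=` if your link column has a different name.

```python
def filter_new_rows(rows: list[dict], seen_links: set[str], key: str = "Link") -> list[dict]:
    """Return rows whose link hasn't been seen yet, also de-duplicating within `rows`."""
    fresh = []
    for row in rows:
        link = row.get(key)
        if link and link not in seen_links:
            seen_links.add(link)  # updates the caller's set so later rows/runs skip it
            fresh.append(row)
    return fresh
```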

This is the part to have the AI modify:

```python
# Excerpt from fetch_and_translate_papers(): the page-specific parsing to adapt
paper_items = soup.select("ul.items-list li.full-docsum")
for item in paper_items:
    link_tag = item.find("a", href=True)
    if link_tag is None:  # skip items that have no link
        continue
    paper_link = urljoin(url, link_tag["href"])  # absolute link
    paper_title = link_tag.text.strip()  # text of the <a> tag
    chinese_paper_title = translator.translate(paper_title)
    print(f"Paper Title: {paper_title}")
    print(f"Chinese title: {chinese_paper_title}")
    print(f"Link: {paper_link}")
    print()
    data.append({
        "Paper Title": paper_title,
        "Chinese Title": chinese_paper_title,
        "Link": paper_link,
    })
```

Do I really need to explain how to get the page's HTML source too? I'm feeling lazy.

It's simple: open the page you want to scrape, right-click, and choose View Page Source.

Paste the HTML you want to scrape into the AI and let it modify the code for you.
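Whatever code the AI gives you, the pattern is the same. Find the container that repeats for each item, then pull the link and the text out of it. The sketch below runs against a made-up HTML snippet shaped like the selector used above (`ul.items-list li.full-docsum`). The sample markup is hypothetical, not copied from PubMed, and `extract_papers` is a hypothetical helper. It uses `urljoin` so that a relative `href` such as `/11111111/` joins onto the base URL without a double slash.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

# Hypothetical markup for illustration; replace it with the real page source.
SAMPLE_HTML = """
<ul class="items-list">
  <li class="full-docsum"><a href="/11111111/"> First paper title </a></li>
  <li class="full-docsum"><a href="/22222222/">Second paper title</a></li>
  <li class="full-docsum">No link in this item</li>
</ul>
"""


def extract_papers(html: str, base_url: str) -> list[dict]:
    """Return [{'title': ..., 'link': ...}] for each list item that contains a link."""
    soup = BeautifulSoup(html, "html.parser")
    papers = []
    for item in soup.select("ul.items-list li.full-docsum"):
        a = item.find("a", href=True)
        if a is None:  # skip items without a link instead of crashing
            continue
        papers.append({"title": a.get_text(strip=True), "link": urljoin(base_url, a["href"])})
    return papers


for paper in extract_papers(SAMPLE_HTML, "https://pubmed.ncbi.nlm.nih.gov/"):
    print(paper)
```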

Problem 3:

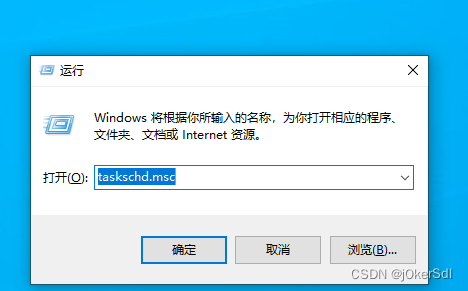

How do I run this code on a schedule on my own computer?

Solution 3:

Folks, I've found the material for this one too. Go study it; I'm too lazy to write much of a summary.

[Python] Running a Python script on a schedule with Windows Task Scheduler (CSDN blog)

If you want to change the schedule afterwards, see this one:

Setting up a scheduled task that repeats every N minutes on Windows 10 - Acezhang - cnblogs (cnblogs.com)
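If Task Scheduler keeps fighting you, a simpler but less robust alternative is to keep one Python process running and call the job in a loop. The loop stops whenever the process or the computer does, which is why Task Scheduler is still the better choice for unattended runs. `run_every` is a hypothetical helper, not part of any library.

```python
import time
from typing import Callable, Optional


def run_every(interval_s: float, job: Callable[[], None], max_runs: Optional[int] = None) -> int:
    """Call `job` every `interval_s` seconds; stop after `max_runs` runs if given.

    Exceptions raised by `job` are printed and the loop keeps going.
    Returns the number of runs performed.
    """
    runs = 0
    while max_runs is None or runs < max_runs:
        started = time.monotonic()
        try:
            job()
        except Exception as err:
            print(f"Job failed: {err}")
        runs += 1
        if max_runs is not None and runs >= max_runs:
            break
        # Sleep for whatever is left of the interval (never a negative amount)
        time.sleep(max(0.0, interval_s - (time.monotonic() - started)))
    return runs
```

For example, `run_every(3600, lambda: fetch_and_translate_papers(url, my_headers))` would scrape once an hour for as long as the window stays open.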

There was one small problem at the end:

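The cause was probably the field in the screenshot above that I left blank. It's not a big deal. See: Fixing the Windows Task Scheduler error "One or more of the specified arguments are not valid" (CSDN blog)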

When that article has you pick a user or group, choose Administrators.

Honestly, I was worn out. Getting the scheduling to work involves quite a few pitfalls.

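Your .bat script has to run successfully on its own first. Double-click the .bat file; if the window flashes open and immediately closes, it didn't work.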
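When the .bat window flashes and closes, the error message disappears with it. One way to see what went wrong is to have the script write its result to a log file. Relatedly, a task started by Task Scheduler may not run in your script's folder, so a relative path like `pubmed.xlsx` can end up somewhere unexpected. Building paths from `Path(__file__).resolve().parent` avoids that. Below is a sketch; `run_with_log` and the log path are hypothetical.

```python
import logging
from typing import Callable


def run_with_log(job: Callable[[], None], log_path: str) -> bool:
    """Run `job`, appending a timestamped result (and any traceback) to `log_path`.

    Returns True on success, False if `job` raised.
    """
    logger = logging.getLogger("scheduled_job")
    logger.setLevel(logging.INFO)
    handler = logging.FileHandler(log_path, encoding="utf-8")  # appends by default
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    logger.addHandler(handler)
    try:
        job()
        logger.info("Job finished OK")
        return True
    except Exception:
        logger.exception("Job failed")  # writes the full traceback to the log
        return False
    finally:
        logger.removeHandler(handler)
        handler.close()
```

For example: `run_with_log(lambda: fetch_and_translate_papers(url, my_headers), "scraper.log")`.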

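Folks, this is a lot of material, and most of it means reading other people's articles. It took me a day and a half to get everything working, so there's no rush; take it slowly.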

Surprisingly, there's no tipping feature here. Pity me, I spent ages writing this.