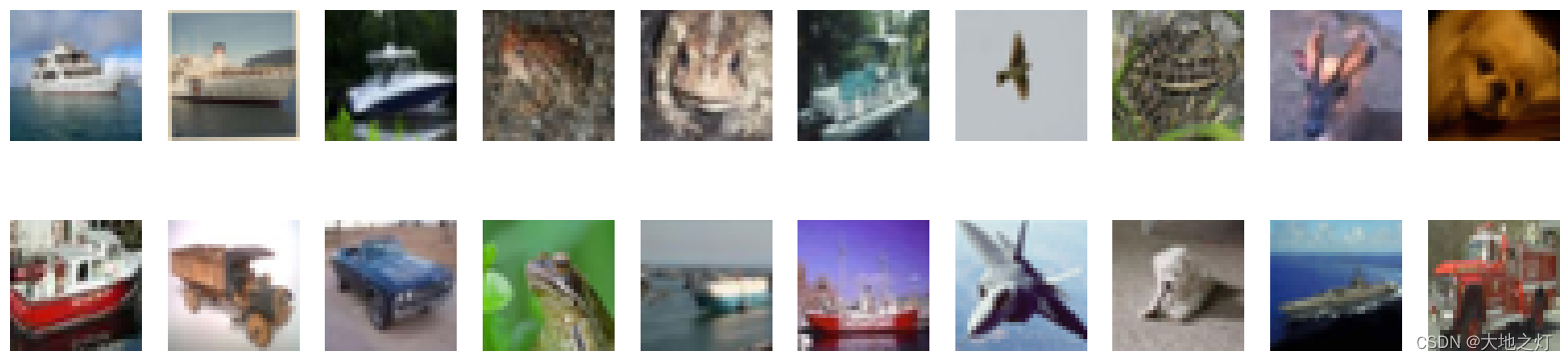

CIFAR10

Goal:

Classify the images in the dataset into 10 categories.

Steps:

1. Imports:

import collections
import math
import os
import shutil

import pandas as pd
import torch
import torchvision
from torch import nn
from d2l import torch as d2l

2. Download the dataset

# Download the dataset
d2l.DATA_HUB['cifar10_tiny'] = (d2l.DATA_URL + 'kaggle_cifar10_tiny.zip',
                                '2068874e4b9a9f0fb07ebe0ad2b29754449ccacd')

# Set demo to False to use the full Kaggle competition dataset
demo = True

if demo:
    data_dir = d2l.download_extract('cifar10_tiny')
else:
    data_dir = '../data/kaggle/cifar-10/'

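3. Organize the dataset

def read_csv_labels(fname):
    """Read the label CSV and return a dict mapping image id to label."""
    with open(fname, 'r') as f:
        # [1:] starts reading at the second line, because the first line is the header
        lines = f.readlines()[1:]
    # rstrip() removes the trailing newline of each line, then split(',') splits on commas
    # e.g. ["apple,orange,banana\n"] => [['apple', 'orange', 'banana']]
    tokens = [l.rstrip().split(',') for l in lines]
    return dict((name, label) for name, label in tokens)

labels = read_csv_labels(os.path.join(data_dir, 'trainLabels.csv'))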
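To make the return value concrete, here is a minimal sketch that runs the same parsing logic on a small temporary CSV. The file name `sampleLabels.csv` and its rows are made up for illustration; the real trainLabels.csv is assumed to have a header line followed by one `id,label` row per image.

```python
import os
import tempfile

def read_csv_labels(fname):
    """Same parsing logic as above: skip the header, split each row on commas."""
    with open(fname, 'r') as f:
        lines = f.readlines()[1:]
    tokens = [l.rstrip().split(',') for l in lines]
    return dict((name, label) for name, label in tokens)

# Hypothetical sample data, not taken from the real dataset
sample = "id,label\n1,frog\n2,truck\n3,frog\n"

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'sampleLabels.csv')
    with open(path, 'w') as f:
        f.write(sample)
    sample_labels = read_csv_labels(path)

print(sample_labels)
```

Both keys and values are strings, which is why the later lookup uses the file name without its extension as the key.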

4. Split a validation set off from the original training set

def copyfile(filename, target_dir):
    """Copy a file into target_dir, creating the directory if it does not exist."""
    os.makedirs(target_dir, exist_ok=True)
    shutil.copy(filename, target_dir)

# print("training examples:", len(labels))
# print("classes:", len(set(labels.values())))

def reorg_train_valid(data_dir, labels, valid_ratio):
    # Counter counts how many times each class appears.
    # most_common() returns a list of (class, count) tuples sorted by count in descending order.
    # [-1] takes the last tuple in the list, i.e. the rarest class.
    # [1] takes the count from that tuple.
    n = collections.Counter(labels.values()).most_common()[-1][1]
    # math.floor() rounds down; math.ceil() rounds up.
    # Number of examples per class assigned to the validation set (at least 1)
    n_valid_per_label = max(1, math.floor(n * valid_ratio))
    label_count = {}
    for photo in os.listdir(os.path.join(data_dir, 'train')):
        label = labels[photo.split('.')[0]]  # look up the class for this image id
        fname = os.path.join(data_dir, 'train', photo)
        copyfile(fname, os.path.join(data_dir, 'train_valid_test', 'train_valid', label))
        # If this class is not in label_count yet, or it still has fewer than
        # n_valid_per_label validation images, copy the image to the validation set
        if label not in label_count or label_count[label] < n_valid_per_label:
            copyfile(fname, os.path.join(data_dir, 'train_valid_test', 'valid', label))
            label_count[label] = label_count.get(label, 0) + 1
        else:
            copyfile(fname, os.path.join(data_dir, 'train_valid_test', 'train', label))
    return n_valid_per_label

def reorg_test(data_dir):
    """Copy the test images into a single 'unknown' class folder."""
    for test_file in os.listdir(os.path.join(data_dir, 'test')):
        copyfile(os.path.join(data_dir, 'test', test_file),
                 os.path.join(data_dir, 'train_valid_test', 'test', 'unknown'))

def reorg_cifar10_data(data_dir, valid_ratio):
    labels = read_csv_labels(os.path.join(data_dir, 'trainLabels.csv'))
    reorg_train_valid(data_dir, labels, valid_ratio)
    reorg_test(data_dir)

batch_size = 32 if demo else 128
valid_ratio = 0.1
reorg_cifar10_data(data_dir, valid_ratio)

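"""
Running this creates a train_valid_test folder that contains:
- test/unknown: the 5 unlabeled test images
- train_valid/: one folder per class (10 in total), each holding all images of that class
- train/: one folder per class (10 in total), each holding roughly 90% of the images, used for training
- valid/: one folder per class (10 in total), each holding roughly 10% of the images, used for validation
"""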
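The split rule in reorg_train_valid can be checked without touching the file system. The sketch below applies the same counting logic to an in-memory label dict; `split_ids` and `toy_labels` are hypothetical names introduced only for this illustration.

```python
import collections
import math

def split_ids(labels, valid_ratio):
    """Apply the reorg_train_valid rule to ids only (no files are copied)."""
    # Size of the rarest class
    n = collections.Counter(labels.values()).most_common()[-1][1]
    n_valid_per_label = max(1, math.floor(n * valid_ratio))
    label_count = {}
    train_ids, valid_ids = [], []
    for image_id, label in labels.items():
        # The first n_valid_per_label images of each class go to validation
        if label_count.get(label, 0) < n_valid_per_label:
            valid_ids.append(image_id)
            label_count[label] = label_count.get(label, 0) + 1
        else:
            train_ids.append(image_id)
    return n_valid_per_label, train_ids, valid_ids

# Hypothetical toy data: 30 'cat' images and 20 'dog' images
toy_labels = {str(i): ('cat' if i < 30 else 'dog') for i in range(50)}
n_valid, train_ids, valid_ids = split_ids(toy_labels, valid_ratio=0.1)
print(n_valid, len(train_ids), len(valid_ids))
```

Because the validation quota is derived from the rarest class, every class contributes the same number of validation images, so the 10% figure is exact only when the classes are balanced.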

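5. Data augmentation

transform_train = torchvision.transforms.Compose([
    # The images are originally 32x32: upscale them to 40x40, then randomly
    # crop a square covering 64% to 100% of that area and resize it to 32x32
    # to augment the training data
    torchvision.transforms.Resize(40),
    torchvision.transforms.RandomResizedCrop(32, scale=(0.64, 1.0), ratio=(1.0, 1.0)),
    torchvision.transforms.RandomHorizontalFlip(),
    torchvision.transforms.ToTensor(),
    # Normalize each channel with its mean and standard deviation
    torchvision.transforms.Normalize(
        [0.4914, 0.4822, 0.4465], [0.2023, 0.1994, 0.2010]
    )
])

transform_test = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor(),
    # At test time only normalize each channel; leaving out the random
    # transforms removes randomness from the evaluation results
    torchvision.transforms.Normalize(
        [0.4914, 0.4822, 0.4465], [0.2023, 0.1994, 0.2010]
    )
])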
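The numbers in these transforms can be worked out by hand. The following sketch uses only the standard library and assumes that `scale` is a fraction of the image area and that Normalize computes `(x - mean) / std` per channel; `normalize_pixel` is a hypothetical helper written for this illustration, not part of torchvision.

```python
import math

# Crop size range implied by scale=(0.64, 1.0) on a 40x40 image with ratio 1.0
resized_side = 40
scale = (0.64, 1.0)
min_side = math.sqrt(scale[0] * resized_side ** 2)
max_side = math.sqrt(scale[1] * resized_side ** 2)
print(f'crop side between {min_side:.1f} and {max_side:.1f} pixels')

# Per-channel normalization as done by Normalize(mean, std)
mean = [0.4914, 0.4822, 0.4465]
std = [0.2023, 0.1994, 0.2010]

def normalize_pixel(rgb):
    """Normalize one RGB pixel whose values are already scaled to [0, 1]."""
    return [(v - m) / s for v, m, s in zip(rgb, mean, std)]

# A pixel equal to the channel means maps to (approximately) zero
print(normalize_pixel(mean))
```

So the random crop is a square with a side of about 32 to 40 pixels, which is then resized to 32x32.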

6. Load the dataset

# Load the datasets: training data uses the augmenting transform
train_ds, train_valid_ds = [
    torchvision.datasets.ImageFolder(
        os.path.join(data_dir, 'train_valid_test', folder), transform=transform_train
    ) for folder in ['train', 'train_valid']
]

# Validation and test data use the deterministic transform
valid_ds, test_ds = [
    torchvision.datasets.ImageFolder(
        os.path.join(data_dir, 'train_valid_test', folder), transform=transform_test
    ) for folder in ['valid', 'test']
]

# Define the data iterators
train_iter, train_valid_iter = [
    torch.utils.data.DataLoader(
        dataset, batch_size, shuffle=True, drop_last=True
    ) for dataset in (train_ds, train_valid_ds)
]

valid_iter = torch.utils.data.DataLoader(
    valid_ds, batch_size, shuffle=False, drop_last=True
)

test_iter = torch.utils.data.DataLoader(
    test_ds, batch_size, shuffle=False, drop_last=False
)
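The drop_last flag changes how many batches each iterator yields. The sketch below reproduces that arithmetic in plain Python; `num_batches` is a hypothetical helper, and the example count of 100 is an assumption chosen for illustration, not a measured size of the validation set.

```python
import math

def num_batches(num_examples, batch_size, drop_last):
    """Number of batches an iterator yields for a dataset of num_examples."""
    if drop_last:
        # The final incomplete batch is discarded
        return num_examples // batch_size
    # The final incomplete batch is kept
    return math.ceil(num_examples / batch_size)

# Assumed sizes for illustration only
examples, batch = 100, 32
kept = num_batches(examples, batch, drop_last=True) * batch
print(num_batches(examples, batch, drop_last=True), 'batches,', kept, 'examples used')
print(num_batches(examples, batch, drop_last=False), 'batches, all examples used')
```

With drop_last=True on the validation iterator, any examples in the last incomplete batch do not contribute to the reported validation accuracy.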

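7. Define the model:

def get_net():
    num_classes = 10  # number of output classes
    net = d2l.resnet18(num_classes, in_channels=3)
    return net

Loss function:

# Loss function; reduction='none' returns one loss value per example
loss = nn.CrossEntropyLoss(reduction='none')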
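With reduction='none' the loss is a vector with one entry per example rather than a single averaged number. As a rough illustration of what each entry is, here is a plain-Python sketch of per-example cross-entropy on raw logits; `cross_entropy_per_example` and the toy values are hypothetical and stand in for the PyTorch implementation.

```python
import math

def cross_entropy_per_example(logits, targets):
    """Return one cross-entropy value per example: logsumexp(row) - row[target]."""
    losses = []
    for row, target in zip(logits, targets):
        m = max(row)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        losses.append(log_sum_exp - row[target])
    return losses

# Two toy examples with three classes each (made-up numbers)
toy_logits = [[2.0, 0.5, 0.1], [0.2, 0.2, 0.2]]
toy_targets = [0, 2]

per_example = cross_entropy_per_example(toy_logits, toy_targets)
mean_loss = sum(per_example) / len(per_example)
print(per_example, mean_loss)
```

Keeping the losses separate lets the training loop sum them per batch and divide by the total number of examples seen, which gives a correct average even when batches differ in size.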

8. Define the training function:

# Define the training function
def train(net, train_iter, valid_iter, num_epochs, lr, wd, devices, lr_period, lr_decay):
    trainer = torch.optim.SGD(net.parameters(), lr=lr, momentum=0.9, weight_decay=wd)
    # Learning rate scheduler: every lr_period epochs, multiply the learning rate by lr_decay
    scheduler = torch.optim.lr_scheduler.StepLR(trainer, lr_period, lr_decay)
    num_batches, timer = len(train_iter), d2l.Timer()
    legend = ['train_loss', 'train_acc']
    if valid_iter is not None:
        legend.append('valid_acc')
    animator = d2l.Animator(xlabel='epoch', xlim=[1, num_epochs], legend=legend)
    net = nn.DataParallel(net, device_ids=devices).to(devices[0])
    for epoch in range(num_epochs):
        # Switch to training mode
        net.train()
        # Accumulates: summed loss, number of correct predictions, number of examples
        metric = d2l.Accumulator(3)
        for i, (X, y) in enumerate(train_iter):
            timer.start()
            l, acc = d2l.train_batch_ch13(net, X, y, loss, trainer, devices)
            metric.add(l, acc, y.shape[0])
            timer.stop()
            # Plot about five times per epoch; max(1, ...) avoids a modulo by
            # zero when there are fewer than 5 batches
            if (i + 1) % max(1, num_batches // 5) == 0 or i == num_batches - 1:
                animator.add(epoch + (i + 1) / num_batches,
                             (metric[0] / metric[2], metric[1] / metric[2], None))
        if valid_iter is not None:
            valid_acc = d2l.evaluate_accuracy_gpu(net, valid_iter)
            animator.add(epoch + 1, (None, None, valid_acc))
        scheduler.step()  # update the learning rate
    measures = (f'train loss {metric[0] / metric[2]:.3f}, '
                f'train acc {metric[1] / metric[2]:.3f}')
    if valid_iter is not None:
        measures += f', valid acc {valid_acc:.3f}'
    print(measures + f'\n{metric[2] * num_epochs / timer.sum():.1f}'
          f' examples/sec on {str(devices)}')

9. Set the hyperparameters and start training:

import time

# Record the start time
start = time.perf_counter()  # time.clock() was the older option, but it was removed in Python 3.8

# Train and validate the model
devices, num_epochs, lr, wd = d2l.try_all_gpus(), 100, 2e-4, 5e-4
lr_period, lr_decay, net = 4, 0.9, get_net()
train(net, train_iter, valid_iter, num_epochs, lr, wd, devices, lr_period, lr_decay)

# Record the end time once the run has finished
end = time.perf_counter()

# Print the difference to show the total running time in seconds
print(f'Elapsed time: {(end - start):.4f} s')
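To get a feel for how far the learning rate decays under these settings, the step schedule can be written in closed form. This is a sketch assuming the scheduler multiplies the rate by lr_decay once every lr_period epochs, as described above; `step_lr` is a hypothetical helper, not a PyTorch function.

```python
def step_lr(base_lr, epoch, lr_period, lr_decay):
    """Learning rate used during a given 0-indexed epoch under a step schedule."""
    return base_lr * lr_decay ** (epoch // lr_period)

# Same values as in the training call above
lr, lr_period, lr_decay, num_epochs = 2e-4, 4, 0.9, 100

schedule = [step_lr(lr, e, lr_period, lr_decay) for e in range(num_epochs)]
print(f'epoch 1: {schedule[0]:.2e}, epoch 5: {schedule[4]:.2e}, '
      f'epoch {num_epochs}: {schedule[-1]:.2e}')
```

The rate stays constant for the first four epochs and drops by 10% at each multiple of lr_period, so over 100 epochs it is decayed 24 times before the final epoch.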
