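A saying circulates widely in industry: data and features determine the upper limit of machine learning, while models and algorithms merely approximate that limit. Feature engineering therefore plays a central role in machine learning, and in practice it is often the key to a successful project.

Feature engineering is the most time-consuming and labor-intensive part of data analysis. Unlike algorithms and models, it does not follow a fixed procedure; it relies on engineering experience and trade-offs, so there is no single unified method. This article only summarizes some commonly used techniques.

Feature engineering covers several sub-problems: data preprocessing, feature extraction, feature selection, and feature construction.

Import the required packages: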

import numpy as np
import pandas as pd
import re
import sys
from sklearn.base import BaseEstimator, TransformerMixin
from sklearn.utils.validation import check_X_y, check_is_fitted
from sklearn.preprocessing import FunctionTransformer
from sklearn.compose import ColumnTransformer, make_column_transformer, make_column_selector
from sklearn.pipeline import FeatureUnion, make_union, Pipeline, make_pipeline
from sklearn.model_selection import cross_val_score
import lightgbm as lgb
import matplotlib.pyplot as plt
import seaborn as sns
import warnings
import gc

# Setting configuration.
warnings.filterwarnings('ignore')
sns.set_style('whitegrid')

SEED = 42

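Jupyter Notebook script: feature_engineering_demo_p3_feature_construction

Feature construction

Feature construction is the process of creating new features from existing data. The goal is to build useful features that help the model learn the relationship between the information in the dataset and the given target.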

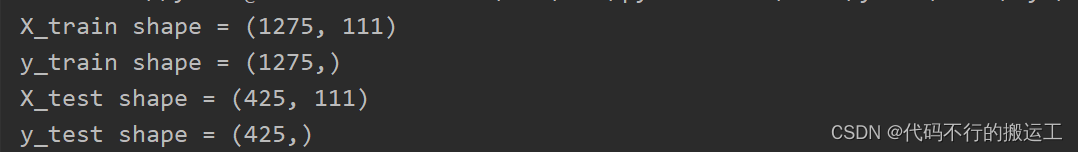

df = pd.read_csv('../datasets/Home-Credit-Default-Risk/prepared_data.csv', index_col='SK_ID_CURR')

Define a helper function that reduces memory usage:

def convert_dtypes(df, verbose=True):
    original_memory = df.memory_usage().sum()
    df = df.apply(pd.to_numeric, errors='ignore')

    # Convert booleans to integers
    boolean_features = df.select_dtypes(bool).columns.tolist()
    df[boolean_features] = df[boolean_features].astype(np.int32)
    # Convert objects to category
    object_features = df.select_dtypes(object).columns.tolist()
    df[object_features] = df[object_features].astype('category')
    # Float64 to float32
    float_features = df.select_dtypes(float).columns.tolist()
    df[float_features] = df[float_features].astype(np.float32)
    # Int64 to int32
    int_features = df.select_dtypes(int).columns.tolist()
    df[int_features] = df[int_features].astype(np.int32)

    new_memory = df.memory_usage().sum()
    if verbose:
        print(f'Original Memory Usage: {round(original_memory / 1e9, 2)} gb.')
        print(f'New Memory Usage: {round(new_memory / 1e9, 2)} gb.')

    return df

df = convert_dtypes(df)
X = df.drop('TARGET', axis=1)
y = df['TARGET']

del df
gc.collect()

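Simple mathematical transformations

Based on business meaning, we can create supplementary features with a clear practical interpretation, for example:

# `df` was deleted above, so the new features are built from X and y
math_features = y.to_frame()
# Ratio of the credit amount to income
math_features['CREDIT_INCOME_PERCENT'] = X['AMT_CREDIT'] / X['AMT_INCOME_TOTAL']
# Ratio of the loan annuity to total income
math_features['ANNUITY_INCOME_PERCENT'] = X['AMT_ANNUITY'] / X['AMT_INCOME_TOTAL']
# Annuity relative to the credit amount (roughly the inverse of the repayment term)
math_features['CREDIT_TERM'] = X['AMT_ANNUITY'] / X['AMT_CREDIT']
# Ratio of days employed to age in days
math_features['DAYS_EMPLOYED_PERCENT'] = X['DAYS_EMPLOYED'] / X['DAYS_BIRTH']
# Income per family member
math_features['INCOME_PER_PERSON'] = X['AMT_INCOME_TOTAL'] / X['CNT_FAM_MEMBERS']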

We can explore these new variables visually:

plt.figure(figsize=(10, 6))
# Iterate through the new features
for i, feature in enumerate(['CREDIT_INCOME_PERCENT', 'ANNUITY_INCOME_PERCENT', 'CREDIT_TERM', 'DAYS_EMPLOYED_PERCENT']):
    # Create a new subplot for each feature
    plt.subplot(2, 2, i + 1)
    sns.kdeplot(data=math_features, x=feature, hue='TARGET', common_norm=False)

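Of course, we cannot compute every meaningful mathematical feature by hand. The featuretools package can generate as many feature combinations as possible using addition, subtraction, multiplication, and division. In addition, there are unary transforms such as the logarithm, exponential, reciprocal, square root, and trigonometric functions.

Note: binary operations are simple, but the number of generated features grows combinatorially with the number of input features (and with the synthesis depth), which quickly leads to a dimensionality explosion. We therefore pick only a few numeric features for these simple operations.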
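To get a feel for this growth, here is a rough count of the features produced by one round of pairwise operations. The helper `count_pairwise_features` is hypothetical, and the counting rule (one feature per unordered pair for commutative operators, one per ordered pair for the others) is a simplifying assumption; featuretools may generate a different number depending on the primitives and their settings.

```python
from math import comb

def count_pairwise_features(n_features, n_commutative_ops=2, n_ordered_ops=2):
    """Count features from applying binary operators once to every pair of inputs."""
    unordered_pairs = comb(n_features, 2)            # e.g. a + b equals b + a
    ordered_pairs = n_features * (n_features - 1)    # e.g. a / b differs from b / a
    return n_commutative_ops * unordered_pairs + n_ordered_ops * ordered_pairs

for n in (5, 15, 50):
    print(n, count_pairwise_features(n))
```

Even with only 15 inputs, a single round already yields several hundred candidate features under these assumptions, which is why the input list is kept short.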

numeric_to_trans = [
    'AMT_CREDIT', 'REGION_POPULATION_RELATIVE', 'DAYS_BIRTH', 'DAYS_EMPLOYED',
    'DAYS_REGISTRATION', 'AMT_INCOME_TOTAL', 'REGION_RATING_CLIENT', 'REGION_RATING_CLIENT_W_CITY',
    'CNT_CHILDREN', 'CNT_FAM_MEMBERS', 'AMT_ANNUITY', 'AMT_GOODS_PRICE',
    'EXT_SOURCE_1', 'EXT_SOURCE_2', 'EXT_SOURCE_3'
]

import featuretools as ft

def math_transform(
    X, y=None,
    variables=None,
    func=None,
    max_depth=2,
    drop_original=True,
    verbose=False
):
    """
    Apply math operators to create new features.

    Parameters
    ----------
    variables: list, default=None
        The list of input variables.
    func: List[string], default=['add_numeric', 'subtract_numeric', 'multiply_numeric', 'divide_numeric']
        List of Transform Feature functions to apply.
    drop_original: bool, default=True
        If True, the original variables to transform will be dropped from the dataframe.
    """
    if variables is None:
        variables = X.select_dtypes('number').columns.tolist()
    df = X[variables].copy()

    if func is None:
        func = ['add_numeric', 'subtract_numeric', 'multiply_numeric', 'divide_numeric']

    # Make an entityset and add the entity
    es = ft.EntitySet(id='single_table')
    es.add_dataframe(dataframe_name='df', dataframe=df, make_index=True, index='id')

    # Run deep feature synthesis with transformation primitives
    feature_matrix, features = ft.dfs(
        entityset=es,
        target_dataframe_name='df',
        trans_primitives=func,
        max_depth=max_depth,
        verbose=verbose
    )
    new_features = feature_matrix.drop(variables, axis=1)
    new_features.index = X.index

    if drop_original:
        return new_features
    else:
        return pd.concat([X, new_features], axis=1)

# trans_primitives = [
#     'add_numeric', 'subtract_numeric', 'multiply_numeric', 'divide_numeric', 'modulo_numeric',
#     'sine', 'cosine', 'tangent', 'square_root', 'natural_logarithm'
# ]

math_features = math_transform(
    X=X[numeric_to_trans].abs(),
    func=[
        'add_numeric', 'subtract_numeric', 'divide_numeric',
        'sine', 'square_root', 'natural_logarithm'
    ]
)

print('Math features shape', math_features.shape)

math_features = convert_dtypes(math_features)

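Grouped statistical features

Grouped statistical feature derivation, as the name suggests, computes statistics of continuous features within the groups defined by categorical features, which can yield additional meaningful features. For example:

# Group AMT_INCOME_TOTAL by NAME_INCOME_TYPE and calculate the mean, max, and min income
# (`df` was deleted above, so the feature matrix X is used here)
X.groupby('NAME_INCOME_TYPE')['AMT_INCOME_TOTAL'].agg(['mean', 'max', 'min']).head()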

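Common statistics

| Statistic | Meaning |
| --- | --- |
| var/std | variance, standard deviation |
| mean/median | mean, median |
| max/min | maximum, minimum |
| skew | skewness |
| mode | mode |
| nunique | number of distinct categories |
| frequency | frequency of each value |
| count | number of observations |
| quantile | quantile |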

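Note: the grouping feature must be discrete, preferably a discrete variable with many distinct values, so that the new features do not contain large numbers of repeated values. A continuous feature should generally be discretized before it is used for grouping.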
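A small sketch on synthetic data illustrates why discretization matters. The `toy` dataframe and its column names are made up for illustration; quantile binning with `pd.qcut` is one possible discretization, chosen here because it yields bins of roughly equal size.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
toy = pd.DataFrame({
    "age": rng.uniform(20, 70, size=200),
    "income": rng.normal(50_000, 10_000, size=200),
})

# Grouping directly on a continuous column gives (almost) one group per row,
# so the "group mean" is just each row's own value and carries no new information.
raw_mean = toy.groupby("age")["income"].transform("mean")

# Discretize first (quantile bins), then aggregate within each bin.
toy["age_bin"] = pd.qcut(toy["age"], q=5, duplicates="drop")
binned_mean = toy.groupby("age_bin", observed=True)["income"].transform("mean")

print(raw_mean.nunique(), binned_mean.nunique())
```

After binning, every row receives the mean income of its age bracket, so the derived feature takes only a handful of distinct values.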

Next, we define a custom transformer that derives grouped features for both numeric and categorical variables.

from itertools import product, permutations, combinations
from sklearn.preprocessing import KBinsDiscretizer
from sklearn.impute import SimpleImputer
from sklearn.compose import make_column_selector

class AggFeatures(BaseEstimator, TransformerMixin):
    """
    Transformer to aggregate features in a dataframe. This can
    be used to create features for each instance of the grouping variable.

    Parameters
    ----------
    variables: list, default=None
        The list of input variables. At least one of `variables`,
    groupby: list, default=None
        The variables to group by.
    func: function, string, list
        List of Aggregation Feature types to apply.
        Same functionality as parameter `func` in `pandas.agg()`.
        Built-in func: ['mode', 'kurt', 'frequency', 'num_unique']
        Default:
        - Numeric: ['median', 'max', 'min', 'skew', 'std']
        - Category: ['mode', 'num_unique', 'frequency']
    n_bins: int, default=10
        The number of bins to produce.
    drop_original: bool, default=True
        If True, the original variables to transform will be dropped from the dataframe.
    """

    def __init__(self,
                 variables=None,
                 groupby=None,
                 func=None,
                 n_bins=10,
                 drop_original=True):
        self.variables = variables
        self.groupby = groupby
        self.func = func
        self.n_bins = n_bins
        self.drop_original = drop_original

    def fit(self, X, y=None):
        """
        Parameters
        ----------
        X : pandas dataframe of shape = [n_samples, n_features]
            The training input samples.
        y : pandas Series, default=None
            y is not needed in this transformer. You can pass y or None.
        """
        # check input dataframe
        # X, y = check_X_y(X, y)

        # Get the names and number of features in the train set.
        self.feature_names_in_ = X.columns.to_list()
        self.n_features_in_ = X.shape[1]

        build_in_funcs = {'mode': self.mode,
                          'kurt': self.kurt,
                          'frequency': self.frequency,
                          'num_unique': pd.Series.nunique}
        assert self.func is not None, "Your selected funcs is None."
        self.func = [build_in_funcs.get(f, f) for f in self.func]

        if self.variables is None:
            self.variables = X.columns.tolist()
        if self.groupby is None:
            self.groupby = X.columns.tolist()
        return self

    def transform(self, X, y=None):
        """
        Parameters
        ----------
        X: pandas dataframe of shape = [n_samples, n_features]
            The data to be transformed.

        Returns
        -------
        X_new: pandas dataframe of shape = [n_samples, n_features]
            A dataframe with the statistics aggregated the selected variables.
            The columns are also renamed to keep track of features created.
        """
        X = X.copy()
        # check if class was fitted
        check_is_fitted(self)

        group_df = self.discretize(X[self.groupby], self.n_bins)

        # Group by the specified variable and calculate the statistics
        n = 0
        for group_var in self.groupby:
            # Skip the grouping variable
            other_vars = [var for var in self.variables if var != group_var]
            for f in self.func:
                # Need to create new column names
                colnames = [f"{f.__name__ if callable(f) else f}({var})_by({group_var})"
                            for var in other_vars]
                X[colnames] = X[other_vars].groupby(group_df[group_var]).transform(f)
                n += len(colnames)
        print(f'Created {n} new features.')

        if self.drop_original:
            X = X.drop(self.feature_names_in_, axis=1)
        return X

    def mode(self, series):
        return series.mode(dropna=False)[0]

    def kurt(self, series):
        return series.kurt()

    def frequency(self, series):
        freq = series.value_counts(normalize=True, dropna=False)
        return series.map(freq)

    def discretize(self, X, bins=10):
        X = X.copy()
        features_num = X.select_dtypes('number').columns
        X[features_num] = X[features_num].apply(pd.qcut, q=bins, duplicates="drop")
        X = X.astype('category')
        return X

    def get_feature_names_out(self, input_features=None):
        check_is_fitted(self)

        if input_features is None:
            feature_names_in = self.feature_names_in_
        elif len(input_features) == self.n_features_in_:
            # If the input was an array, we let the user enter the variable names.
            feature_names_in = list(input_features)
        else:
            raise ValueError(
                "The number of input_features does not match the number of "
                "features seen in the dataframe used in fit."
            )

        if self.drop_original:
            feature_names_out = []
        else:
            # Copy the list so that extending it does not modify feature_names_in_
            feature_names_out = list(feature_names_in)

        func_names = [f.__name__ if callable(f) else f for f in self.func]

        # Iterate over the grouping variables, mirroring the loop in transform()
        for group_var in self.groupby:
            # Skip the grouping variable
            other_vars = [var for var in self.variables if var != group_var]
            # Make new column names for the variable and stat
            colnames = [f"{f}({var})_by({group_var})"
                        for f, var in product(func_names, other_vars)]
            feature_names_out.extend(colnames)
        return feature_names_out

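In addition to the numeric features selected earlier, we add a few categorical features:

categorical_to_trans = [
    'NAME_CONTRACT_TYPE', 'NAME_TYPE_SUITE', 'NAME_INCOME_TYPE',
    'NAME_EDUCATION_TYPE', 'NAME_FAMILY_STATUS', 'NAME_HOUSING_TYPE'
]

For numeric features we compute the statistics directly. For categorical features we compute the mode, the number of categories, and the share of the category each sample belongs to (the call below uses only the mode and the frequency).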

agg_numeric_transformer = AggFeatures(
    variables=numeric_to_trans,
    func=['median', 'max', 'kurt'],
    groupby=numeric_to_trans + categorical_to_trans,
    drop_original=True
)
agg_numeric_features = agg_numeric_transformer.fit_transform(X, y)

agg_categorical_transformer = AggFeatures(
    variables=categorical_to_trans,
    func=['mode', 'frequency'],
    groupby=numeric_to_trans + categorical_to_trans,
    drop_original=True
)
agg_categorical_features = agg_categorical_transformer.fit_transform(X, y)

agg_features = pd.concat([agg_numeric_features, agg_categorical_features], axis=1)
print('Aggregation features shape', agg_features.shape)

agg_features = convert_dtypes(agg_features)

del agg_numeric_features, agg_categorical_features
gc.collect()

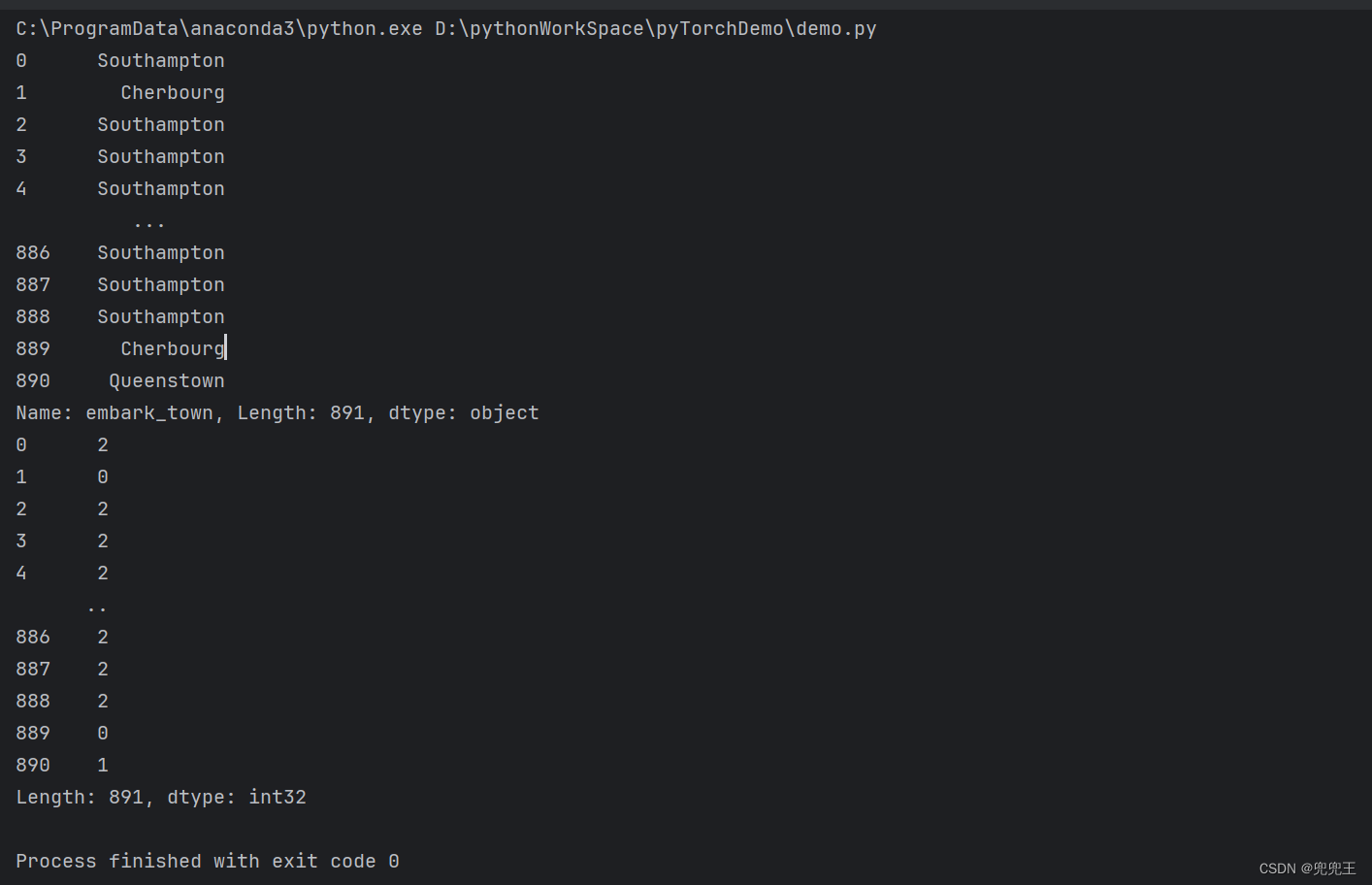

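Feature interaction

Combined features are built by taking the Cartesian product of two or more individual features. The resulting predictive power may far exceed that of any single feature on its own. Cartesian-product combinations are generally applied between discrete features.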
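As a minimal illustration of the idea, the sketch below crosses two categorical columns of a made-up dataframe (`toy`, `contract`, and `housing` are hypothetical names) by concatenating their string values. It is a toy version under these assumptions, not a general-purpose implementation.

```python
import pandas as pd

toy = pd.DataFrame({
    "contract": ["cash", "cash", "revolving"],
    "housing": ["rent", "own", "rent"],
})

# Each distinct pair of values becomes one category of the crossed feature.
toy["contract&housing"] = toy["contract"].astype(str) + "&" + toy["housing"].astype(str)

# Upper bound on the cardinality of the crossed feature: product of the cardinalities.
n_possible = toy["contract"].nunique() * toy["housing"].nunique()
print(toy["contract&housing"].tolist(), n_possible)
```

Because the cardinality of a crossed feature can reach the product of the input cardinalities, crossing high-cardinality columns can produce very sparse categories.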

def feature_interaction(X, y=None, left=None, right=None, drop_original=True):
    """
    Parameters
    ----------
    X: pandas dataframe.
    left, right: The list of interact variables. default=None
    drop_original: bool, default=True
        If True, the original variables to transform will be dropped from the dataframe.
    """
    left = X.columns if left is None else left
    right = X.columns if right is None else right

    # Make a new dataframe to hold interaction features
    X_new = pd.DataFrame(index=X.index)

    for rvar in right:
        other_vars = [lvar for lvar in left if lvar != rvar]
        rseries = X[rvar].astype(str)
        colnames = [f"{lvar}&{rvar}" for lvar in other_vars]
        X_new[colnames] = X[other_vars].transform(lambda s: s.astype(str) + "&" + rseries)

    if not drop_original:
        X_new = pd.concat([X, X_new], axis=1)
    return X_new

interaction_features = feature_interaction(X[categorical_to_trans])

print(interaction_features.shape)
print(interaction_features.iloc[:5, :2])

del interaction_features
gc.collect()

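Polynomial features

Polynomial features are the simplest feature construction method available in sklearn. When creating polynomial features, avoid high degrees: the number of features grows rapidly with the degree ($\binom{n+d}{d}$ terms for $n$ inputs and degree $d$, including the bias term), and the model may overfit.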
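The growth can be checked numerically. The sketch below compares the closed-form count with what `PolynomialFeatures` reports for four input features; the helper name `n_poly_features` is hypothetical, and the formula assumes the default settings (`include_bias=True`, `interaction_only=False`).

```python
from math import comb

import numpy as np
from sklearn.preprocessing import PolynomialFeatures

def n_poly_features(n_features, degree):
    """Number of monomials of total degree <= `degree`, including the bias term."""
    return comb(n_features + degree, degree)

X_toy = np.zeros((1, 4))  # 4 input features; the values are irrelevant for counting
for degree in (2, 3, 5):
    n_out = PolynomialFeatures(degree=degree).fit(X_toy).n_output_features_
    assert n_out == n_poly_features(4, degree)
    print(degree, n_out)
```

With four inputs the count is still manageable, but applying the same degree to a few dozen inputs would produce thousands of columns.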

Now let us look at the result of a degree-3 polynomial:

from sklearn.preprocessing import PolynomialFeatures

# Make a new dataframe for polynomial features
X_poly = X[['EXT_SOURCE_1', 'EXT_SOURCE_2', 'EXT_SOURCE_3', 'DAYS_BIRTH']]

# Create the polynomial object with specified degree
poly_transformer = PolynomialFeatures(degree=3)
# Train and transform the polynomial features
poly_features = poly_transformer.fit_transform(X_poly)
print('Polynomial features shape: ', poly_features.shape)
print('Polynomial features:', poly_transformer.get_feature_names_out()[-5:])

del poly_features
gc.collect()

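Cluster analysis

Clustering algorithms have many uses in feature construction. For example: cluster text and use the resulting cluster labels as input features; cluster a single numeric feature, which is equivalent to binning; or cluster R, F, and M data (as in the RFM model) and use the resulting cluster labels, which represent customer value, as input features.

When one or more features have a multimodal distribution (two or more clearly separated peaks), a clustering algorithm can assign each sample to a peak and output the cluster label.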
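The idea can be sketched on synthetic data. The bimodal `age` array below is generated for illustration only (it is not the Home Credit data), and `KMeans` with two clusters is one possible choice of algorithm under that assumption.

```python
import numpy as np
from sklearn.cluster import KMeans

rng = np.random.default_rng(42)
# Synthetic bimodal "age" feature: two groups centred near 40 and 55.
age = np.concatenate([rng.normal(40, 3, 500), rng.normal(55, 3, 500)]).reshape(-1, 1)

km = KMeans(n_clusters=2, n_init=10, random_state=42).fit(age)
centers = np.sort(km.cluster_centers_.ravel())
labels = km.labels_  # one cluster label per sample, usable as a new feature

print(centers)
```

The recovered centers land close to the two modes, and `labels` records which peak each sample was assigned to.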

age_simi = X[['DAYS_BIRTH']] / -365
age_simi.columns = ['age']

plt.figure(figsize=(5, 4))
sns.histplot(x=age_simi['age'], bins=30)

We can see two peaks, at roughly 40 and 55.

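A cluster label is usually a number, but that number has no ordinal meaning. It therefore generally needs to be one-hot encoded, or replaced by new features that measure the similarity (distance) between each sample and every cluster center. The similarity is usually computed with a radial basis function (RBF).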
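For the one-hot option, a minimal sketch with `pd.get_dummies` might look as follows; the `labels` series is a made-up example of cluster assignments.

```python
import pandas as pd

# Hypothetical cluster labels for four samples.
labels = pd.Series([0, 2, 1, 2], name="cluster")

# One indicator column per cluster, so no artificial ordering is implied.
one_hot = pd.get_dummies(labels, prefix="cluster", dtype=int)
print(one_hot)
```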

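A radial basis function (RBF) is a type of function commonly used in machine learning and statistical modeling. It takes the distance from some center point as input and outputs a value, typically a decay function of that distance. The most common choice is the Gaussian RBF, whose output decays exponentially as the input moves away from the fixed point. It can be computed with Scikit-Learn's rbf_kernel() function as $k(x,y)=\exp(-\gamma\|x-y\|^2)$, where the hyperparameter gamma determines how quickly the similarity decays as x moves away from y.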
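As a quick check of the formula, the sketch below evaluates the Gaussian RBF by hand and compares it with `rbf_kernel`. The sample ages and the value of `gamma` are arbitrary illustrative choices.

```python
import numpy as np
from sklearn.metrics.pairwise import rbf_kernel

ages = np.array([[30.0], [40.0], [50.0]])
center = np.array([[40.0]])
gamma = 0.01

# Manual evaluation of k(x, y) = exp(-gamma * ||x - y||^2)
manual = np.exp(-gamma * (ages - center) ** 2)
library = rbf_kernel(ages, center, gamma=gamma)

# Similarity is 1 at the center and exp(-1), about 0.37, ten years away from it.
print(np.round(library.ravel(), 4))
```

A larger `gamma` makes the similarity fall off faster, so only samples very close to the center receive a high score.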

The plot below shows two radial basis functions over age:

from sklearn.metrics.pairwise import rbf_kernel

age_simi[['simi_40', 'simi_55']] = rbf_kernel(age_simi[['age']], [[40], [55]], gamma=0.01)

fig = plt.figure(figsize=(5, 4))
ax1 = fig.add_subplot(111)
sns.histplot(data=age_simi, x='age', bins=30, ax=ax1)

ax2 = ax1.twinx()
# `errorbar=None` replaces the deprecated `ci=None` (seaborn >= 0.12)
sns.lineplot(data=age_simi, x='age', y='simi_40', errorbar=None, ax=ax2, color='green')
sns.lineplot(data=age_simi, x='age', y='simi_55', errorbar=None, ax=ax2, color='orange')

If you pass rbf_kernel() an array with two features, it measures similarity using the two-dimensional (Euclidean) distance.
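A two-feature case can be verified in the same way. The points and `gamma` below are arbitrary values picked so that the distance is easy to compute by hand.

```python
import numpy as np
from sklearn.metrics.pairwise import rbf_kernel

points = np.array([[0.0, 0.0], [3.0, 4.0]])  # two samples with two features each
center = np.array([[0.0, 0.0]])

sim = rbf_kernel(points, center, gamma=0.1)

# The Euclidean distance from (3, 4) to the origin is 5, so the squared distance is 25.
expected = np.exp(-0.1 * 25)
print(sim.ravel(), expected)
```

Since the distance mixes all features, features on very different scales usually need to be scaled first; otherwise the feature with the largest range dominates the similarity.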

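If such a feature correlates well with the target variable, the new feature has a good chance of being useful.

Next, we define a custom transformer that uses a KMeans clusterer in fit() to identify the main clusters in the training data, and then uses rbf_kernel() in transform() to measure how similar each sample is to each cluster center: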

from sklearn.cluster import KMeans
from sklearn.preprocessing import RobustScaler
from sklearn.metrics.pairwise import rbf_kernel

class ClusterSimilarity(BaseEstimator, TransformerMixin):
    def __init__(self, n_clusters=5, gamma=1.0, standardize=True, random_state=None):
        self.n_clusters = n_clusters
        self.gamma = gamma
        self.standardize = standardize
        self.random_state = random_state

    def fit(self, X, y=None):
        # Standardize
        if self.standardize:
            self.scaler = RobustScaler()
            X = self.scaler.fit_transform(X)

        self.kmeans = KMeans(self.n_clusters, random_state=self.random_state)
        self.kmeans.fit(X)
        # Coordinates of cluster centers.
        self.cluster_centers_ = self.kmeans.cluster_centers_
        return self

    def transform(self, X):
        # Scale only if a scaler was fitted; otherwise self.scaler does not exist
        if self.standardize:
            X = self.scaler.transform(X)
        return rbf_kernel(X, self.cluster_centers_, gamma=self.gamma)

    def get_feature_names_out(self, input_features=None):
        return [f"centroid_{i}_similarity" for i in range(self.n_clusters)]

Now let us use this transformer:

cluster = ClusterSimilarity(n_clusters=5, gamma=0.01, random_state=SEED)
X_dummies = pd.get_dummies(X)
cluster_similarities = cluster.fit_transform(X_dummies)
# KMeans was fitted on scaled data, so scale the input before predicting labels
cluster_labels = cluster.kmeans.predict(cluster.scaler.transform(X_dummies))

cluster_similarities = pd.DataFrame(
    cluster_similarities,
    columns=cluster.get_feature_names_out(),
    index=X.index
)
print(cluster_similarities.shape)

cluster_similarities = convert_dtypes(cluster_similarities)

del age_simi, cluster_labels, X_dummies
gc.collect()

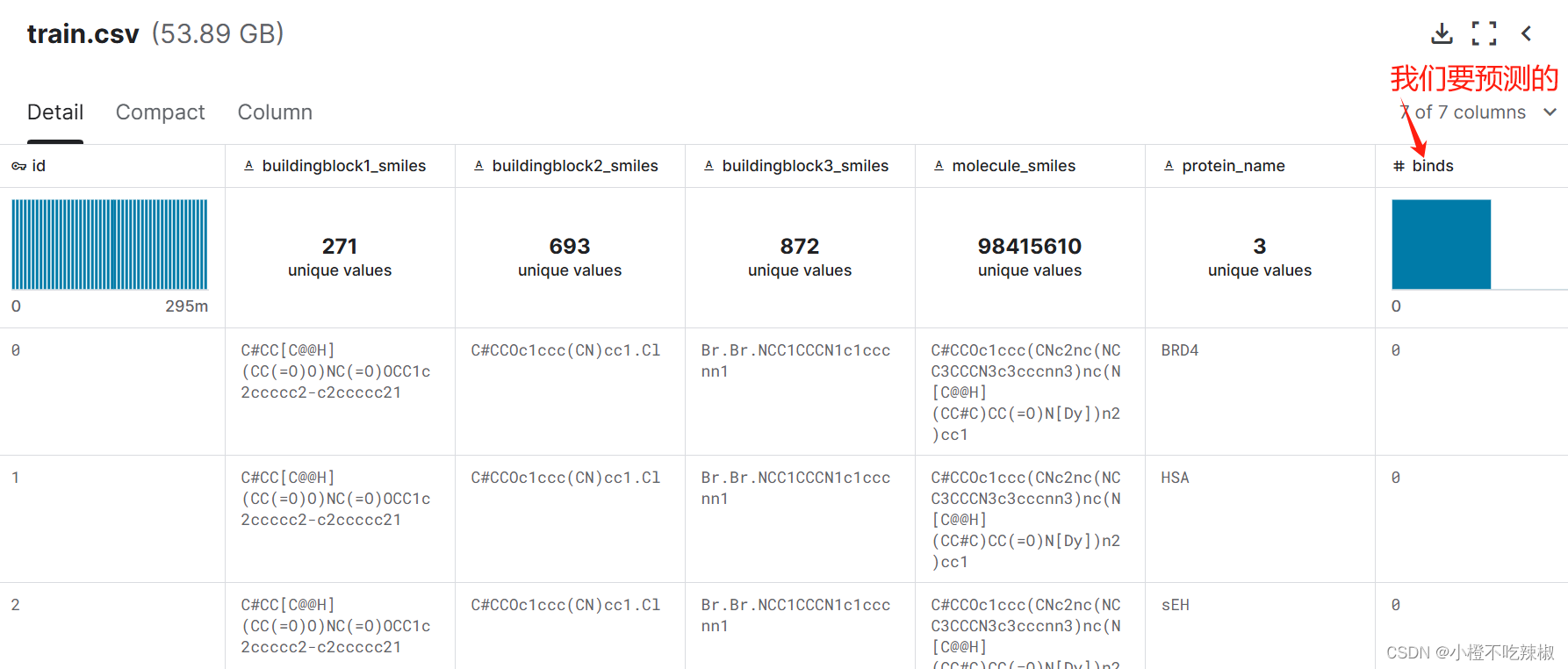

featuretools

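In many cases the data is spread across several tables, while a machine learning model must be trained on a single table, so feature engineering requires us to consolidate all the data into one table. These datasets are usually joined on id values, and we merge them by computing statistics of the numeric features. The featuretools package automates these tasks conveniently.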
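To make the consolidation step concrete, here is a hand-written pandas version of a single aggregation over a one-to-many relationship. The `clients` and `loans` tables and all of their columns are hypothetical miniature data, and the column naming only imitates the style of automatically generated feature names.

```python
import pandas as pd

# Hypothetical miniature tables: one row per client, several loans per client.
clients = pd.DataFrame({"client_id": [1, 2, 3]})
loans = pd.DataFrame({
    "loan_id": [10, 11, 12, 13],
    "client_id": [1, 1, 2, 2],
    "amount": [100.0, 300.0, 50.0, 150.0],
})

# Aggregate the child table per parent key.
agg = loans.groupby("client_id")["amount"].agg(["sum", "max", "mean", "count"])
agg.columns = [f"{stat.upper()}(loans.amount)" for stat in agg.columns]

# Left-join so that clients without loans are kept (with missing values).
features = clients.merge(agg, left_on="client_id", right_index=True, how="left")
print(features)
```

Writing such aggregations by hand for every table, column, and statistic quickly becomes tedious, which is the part that automated feature synthesis takes over.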

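featuretools involves three concepts: entities, relationships, and primitives.

- An entity is a single table (a dataframe); a collection of tables is called an entity set (EntitySet).

- A relationship defines the join key between tables.

- A primitive is a feature engineering function. There are two kinds: aggregation primitives, which are applied across related tables in the entity set, and transform primitives, which are applied to a single entity.

The featuretools.list_primitives() method lists the supported primitives.

This project focuses on feature engineering for the application table; the other datasets receive only simple processing.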

import featuretools as ft

# Load other datasets
print("Loading...")
app = X.reset_index()
bureau = pd.read_csv('../datasets/Home-Credit-Default-Risk/bureau.csv')
bureau_balance = pd.read_csv('../datasets/Home-Credit-Default-Risk/bureau_balance.csv')
cash = pd.read_csv('../datasets/Home-Credit-Default-Risk/POS_CASH_balance.csv')
credit = pd.read_csv('../datasets/Home-Credit-Default-Risk/credit_card_balance.csv')
previous = pd.read_csv('../datasets/Home-Credit-Default-Risk/previous_application.csv')
installments = pd.read_csv('../datasets/Home-Credit-Default-Risk/installments_payments.csv')

# Empty entity set with id clients
es = ft.EntitySet(id='clients')

# Entities with a unique index
es = es.add_dataframe(dataframe_name='app', dataframe=app,
                      index='SK_ID_CURR')
es = es.add_dataframe(dataframe_name='bureau', dataframe=bureau,
                      index='SK_ID_BUREAU')
es = es.add_dataframe(dataframe_name='previous', dataframe=previous,
                      index='SK_ID_PREV')

# Entities that do not have a unique index
es = es.add_dataframe(dataframe_name='bureau_balance', dataframe=bureau_balance,
                      make_index=True, index='bureau_balance_index')
es = es.add_dataframe(dataframe_name='cash', dataframe=cash,
                      make_index=True, index='cash_index')
es = es.add_dataframe(dataframe_name='installments', dataframe=installments,
                      make_index=True, index='installments_index')
es = es.add_dataframe(dataframe_name='credit', dataframe=credit,
                      make_index=True, index='credit_index')

# Add relationships (parent dataframe, parent column, child dataframe, child column)
es = es.add_relationship('app', 'SK_ID_CURR', 'bureau', 'SK_ID_CURR')
es = es.add_relationship('bureau', 'SK_ID_BUREAU', 'bureau_balance', 'SK_ID_BUREAU')
es = es.add_relationship('app', 'SK_ID_CURR', 'previous', 'SK_ID_CURR')
es = es.add_relationship('previous', 'SK_ID_PREV', 'cash', 'SK_ID_PREV')
es = es.add_relationship('previous', 'SK_ID_PREV', 'installments', 'SK_ID_PREV')
es = es.add_relationship('previous', 'SK_ID_PREV', 'credit', 'SK_ID_PREV')

# Default primitives from featuretools (listed for reference; not passed to dfs below)
agg_primitives = ["sum", "std", "max", "skew", "min", "mean", "count", "percent_true", "num_unique", "mode"]
trans_primitives = ["day", "year", "month", "weekday", "haversine", "num_words", "num_characters"]

# DFS with specified primitives
feature_matrix, feature_defs = ft.dfs(
    entityset=es,
    target_dataframe_name='app',
    agg_primitives=['sum', 'max', 'mean', 'count'],
    max_depth=2,
    verbose=1
)

print('After DFS, features shape: ', feature_matrix.shape)
# View the last 10 features
print(feature_defs[-10:])

feature_matrix = convert_dtypes(feature_matrix)

del app, bureau, bureau_balance, cash, credit, previous, installments
gc.collect()

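Principal component analysis

Because the newly added features are highly correlated with the original features, we can use the principal components from PCA as new features to remove the correlation.

Since PCA is mainly used for dimensionality reduction, we cover it in detail in the later feature selection section.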
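As a brief preview, the sketch below shows the decorrelating effect on synthetic data. The three correlated columns are generated for illustration only, and standardizing before PCA is an assumption made here so that no column dominates because of its scale.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
base = rng.normal(size=(500, 1))
# Three strongly correlated columns derived from the same underlying signal.
X_corr = np.hstack([base + 0.1 * rng.normal(size=(500, 1)) for _ in range(3)])

components = PCA(n_components=3).fit_transform(StandardScaler().fit_transform(X_corr))

# Correlations between different principal components are numerically zero.
corr = np.corrcoef(components, rowvar=False)
off_diag = corr[~np.eye(3, dtype=bool)]
print(np.abs(off_diag).max())
```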

Summary

Combine the features created above:

# Combine datasets
X_created = pd.concat(
    [feature_matrix, math_features, agg_features, cluster_similarities],
    axis=1
)

X_created = convert_dtypes(X_created)
X_created.dtypes.value_counts()

del X, feature_matrix, math_features, agg_features, cluster_similarities
gc.collect()

def drop_missing_data(X, threshold=0.8):
    X = X.copy()
    # Remove variables whose share of missing values exceeds 1 - threshold (20% by default)
    thresh = int(X.shape[0] * threshold)
    X_new = X.dropna(axis=1, thresh=thresh)
    print(f"Removed {X.shape[1]-X_new.shape[1]} variables with missing more than {1 - threshold:.1%}")
    return X_new

# Simple imputer
def impute_simply(X, threshold=0.8):
    """
    Univariate imputer for completing missing values with simple strategies.
    """
    print("Simple imputer:")
    X = X.copy()
    variables = X.columns[X.isna().mean().between(0, 1-threshold, "right")].tolist()
    features_num = X[variables].select_dtypes('number').columns.to_list()
    features_cat = X[variables].select_dtypes(exclude='number').columns.to_list()
    # Replaces missing values by the median or mode
    medians = X[features_num].median().to_dict()
    modes = X[features_cat].apply(lambda x: x.mode()[0]).to_dict()
    impute_dict = {**medians, **modes}
    X[variables] = X[variables].fillna(impute_dict)
    print(f"Transformed {len(variables)} variables with missing (threshold={threshold:.1%}).")
    print(f"And then, there are {X.isna().sum().gt(0).sum()} variables with missing.")
    return X

# Handle missing values
X_created = drop_missing_data(X_created, threshold=0.8)
X_created = impute_simply(X_created, threshold=0.8)

X_created.shape

created_data = pd.concat([X_created, y], axis=1)
created_data.to_csv('../datasets/Home-Credit-Default-Risk/created_data.csv', index=True)
print(f'Memory Usage: {sys.getsizeof(created_data) / 1e9:.2f} gb')

del created_data
gc.collect()

We again use a LightGBM model to evaluate the newly created features:

from IPython.display import Latex

clf = lgb.LGBMClassifier(
    objective='binary',
    class_weight='balanced',
    metric='auc',
    verbose=0
)
categorical_cols = X_created.select_dtypes('category').columns.tolist()

# Pass the categorical column names as a list, which the scikit-learn API of LightGBM accepts
scores = cross_val_score(
    clf, X_created, y, cv=5, scoring="roc_auc",
    params=dict(categorical_feature=categorical_cols)
)

# Raw f-string so that "\p" in "$\pm$" is not treated as an escape sequence
Latex(rf"LightGBM classifier auc: {scores.mean():.5f} $\pm$ {scores.std():.5f}")

Feature importance

clf.fit(X_created, y, categorical_feature=categorical_cols)

pd.Series(
    clf.feature_importances_,
    index=X_created.columns
).sort_values(ascending=False).head(10)
