1 Title

HyperFormer: Learning Expressive Sparse Feature Representations via Hypergraph Transformer (Kaize Ding, Albert Jiongqian Liang, Bryan Perozzi, Ting Chen, Ruoxi Wang, Lichan Hong, Ed H. Chi, Huan Liu, Derek Zhiyuan Cheng)【SIGIR 2023】

2 Conclusion

This paper tackles the problem of representation learning on feature-sparse data from a graph learning perspective. Specifically, it models the sparse features of different instances with hypergraphs, where each node represents a data instance and each hyperedge denotes a distinct feature value. By passing messages on the constructed hypergraphs with the proposed Hypergraph Transformer (HyperFormer), the learned feature representations capture not only the correlations among different instances but also the correlations among features.
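The hypergraph view in this summary can be made concrete with a small sketch. It is a hypothetical illustration, not the authors' code: each instance is assumed to be a dict of categorical fields, and the helper name `build_feature_hypergraph` and the toy data are made up for this example. Every distinct (field, value) pair becomes one hyperedge that joins all instances (nodes) containing it.

```python
from collections import defaultdict


def build_feature_hypergraph(instances):
    """Map every distinct (field, value) pair to a hyperedge id and collect
    the ids of all instances (nodes) that contain that feature value."""
    edge_index = {}               # (field, value) -> hyperedge id
    members = defaultdict(list)   # hyperedge id -> list of node ids
    for node_id, inst in enumerate(instances):
        for key in inst.items():  # key is a (field, value) tuple
            if key not in edge_index:
                edge_index[key] = len(edge_index)
            members[edge_index[key]].append(node_id)
    return edge_index, dict(members)


# Hypothetical toy data: three instances with three categorical fields each.
instances = [
    {"user": "u1", "item": "i9", "city": "NYC"},
    {"user": "u2", "item": "i9", "city": "SF"},
    {"user": "u1", "item": "i3", "city": "SF"},
]
edges, members = build_feature_hypergraph(instances)
for key, eid in edges.items():
    print(key, "->", members[eid])
```

Here the hyperedge for `("item", "i9")` joins instances 0 and 1, and the one for `("city", "SF")` joins instances 1 and 2, so instances become related through the feature values they share.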

3 Good Sentences

1、Compared to conventional approaches, deep learning models have shown their superiority in handling high-dimensional sparse features. Methods such as Wide&Deep, Deep Crossing, PNN, DCN, AutoInt, Fi-GNN, and DCN-v2 have been proposed to automatically model cross features. However, existing methods are not able to explicitly capture the correlations between instances and are also ineffective at handling tail features that rarely appear in the data. (The shortcomings of related work that remain to be addressed.)

2、Considering the fact that the scale of training data could be extremely large in practice, it is almost impossible to build a single feature hypergraph to handle all the data instances. To counter this issue, we propose to construct in-batch hypergraphs based on the data instances in each batch to further support mini-batch training. (The problem this study faces and its solution; this is also a further contribution of the study, since it improves the efficiency of training on large-scale data.)

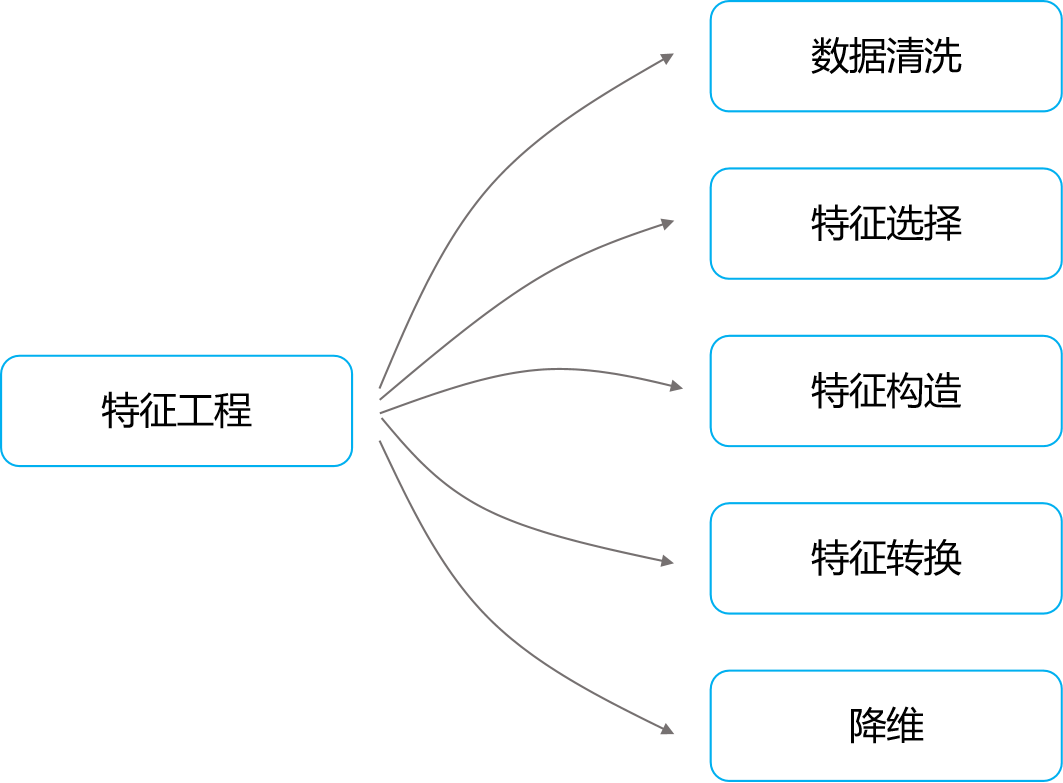

This paper proposes a Hypergraph Transformer for learning sparse feature representations; let's see how it works.

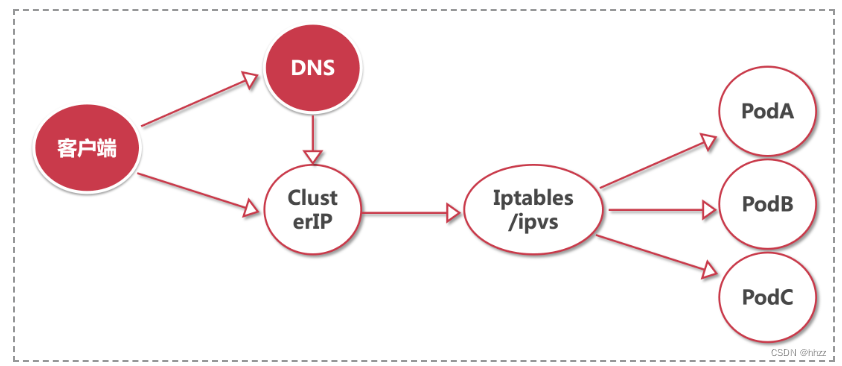

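In this paper, the authors propose to alleviate the feature-sparsity problem through relational representation learning. Since each specific feature value can appear in multiple data instances, it can be used to capture both instance correlations and feature correlations.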
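To see why shared feature values carry both kinds of correlation, the sketch below builds a binary incidence matrix H (rows are instances, columns are feature values) for hypothetical toy data. The products H·Hᵀ and Hᵀ·H only illustrate the intuition and are not a step of HyperFormer: entry (i, j) of H·Hᵀ counts the feature values that instances i and j share, and entry (a, b) of Hᵀ·H counts the instances in which feature values a and b co-occur. All function names are made up for this example.

```python
def incidence_matrix(instances):
    """Binary node-by-hyperedge incidence matrix H.

    Rows are instances, columns are distinct (field, value) pairs."""
    edge_index = {}
    for inst in instances:
        for key in inst.items():
            edge_index.setdefault(key, len(edge_index))
    H = [[0] * len(edge_index) for _ in instances]
    for i, inst in enumerate(instances):
        for key in inst.items():
            H[i][edge_index[key]] = 1
    return H, edge_index


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    # Plain-Python matrix product: rows of A times columns of B.
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


instances = [
    {"user": "u1", "item": "i9", "city": "NYC"},
    {"user": "u2", "item": "i9", "city": "SF"},
    {"user": "u1", "item": "i3", "city": "SF"},
]
H, edge_index = incidence_matrix(instances)
instance_corr = matmul(H, transpose(H))   # shared feature values per instance pair
feature_corr = matmul(transpose(H), H)    # co-occurrence counts per feature pair
print(instance_corr)
```

In this toy data every pair of instances shares exactly one feature value, so the off-diagonal entries of `instance_corr` are all 1, while the diagonal holds the number of fields per instance.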

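In addition, because the data volume is so large, building a single hypergraph that covers all features is practically impossible, so the authors propose constructing in-batch hypergraphs from the data instances in each mini-batch.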
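A minimal sketch of in-batch hypergraph construction, assuming each instance is already encoded as a list of global feature ids (a hypothetical encoding). Only feature values that occur in the current mini-batch become hyperedges. Each one gets a local id, and `edge_feature_ids` keeps its global id so that its embedding can still be looked up in the shared table. The function names are made up for illustration.

```python
def in_batch_hypergraph(batch):
    """Build a hypergraph over one mini-batch only.

    batch: list of instances, each a list of global feature ids.
    Returns (edge_feature_ids, edges): edge_feature_ids[k] is the global
    feature id of local hyperedge k, and edges[k] lists the batch-local
    node indices that hyperedge k joins."""
    local = {}   # global feature id -> local hyperedge id
    edges = []   # local hyperedge id -> batch-local node ids
    for node, feature_ids in enumerate(batch):
        for fid in feature_ids:
            if fid not in local:
                local[fid] = len(edges)
                edges.append([])
            edges[local[fid]].append(node)
    edge_feature_ids = sorted(local, key=local.get)  # ordered by local id
    return edge_feature_ids, edges


def mini_batches(data, batch_size):
    for start in range(0, len(data), batch_size):
        yield data[start:start + batch_size]


# Hypothetical data: global feature ids for four instances.
data = [[0, 5, 7], [1, 5, 8], [0, 6, 8], [2, 6, 9]]
graphs = [in_batch_hypergraph(b) for b in mini_batches(data, 2)]
for edge_feature_ids, edges in graphs:
    print(edge_feature_ids, edges)
```

One consequence of this design is that correlations are only visible within a batch: in the toy data, instances 1 and 2 share feature 8, but they fall into different batches of size 2, so no hyperedge connects them.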

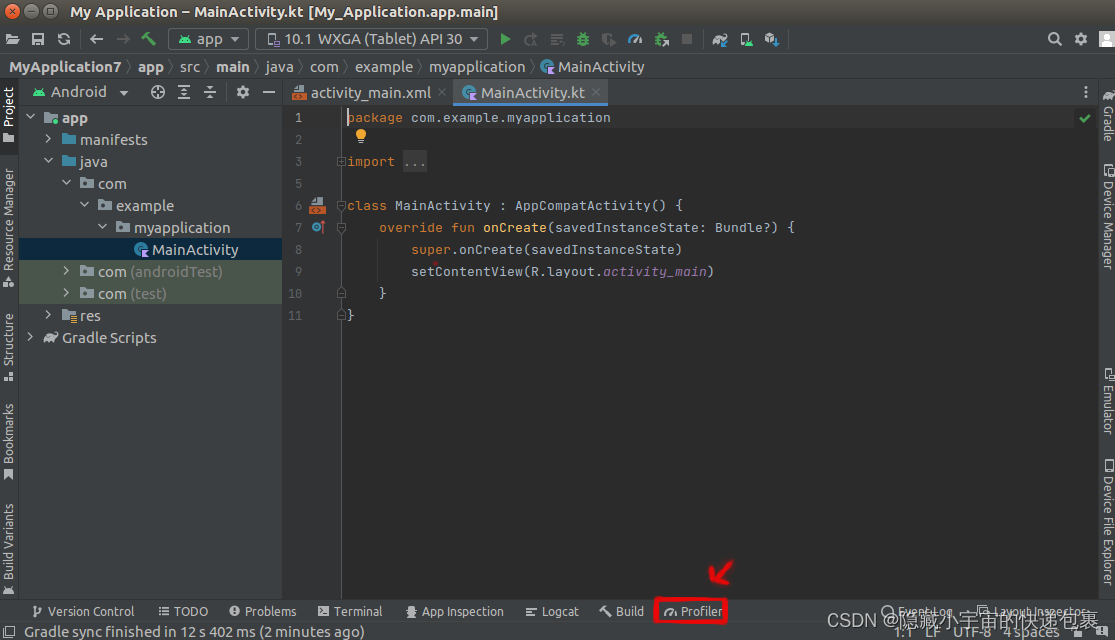

Hypergraph Transformer:

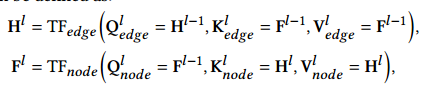

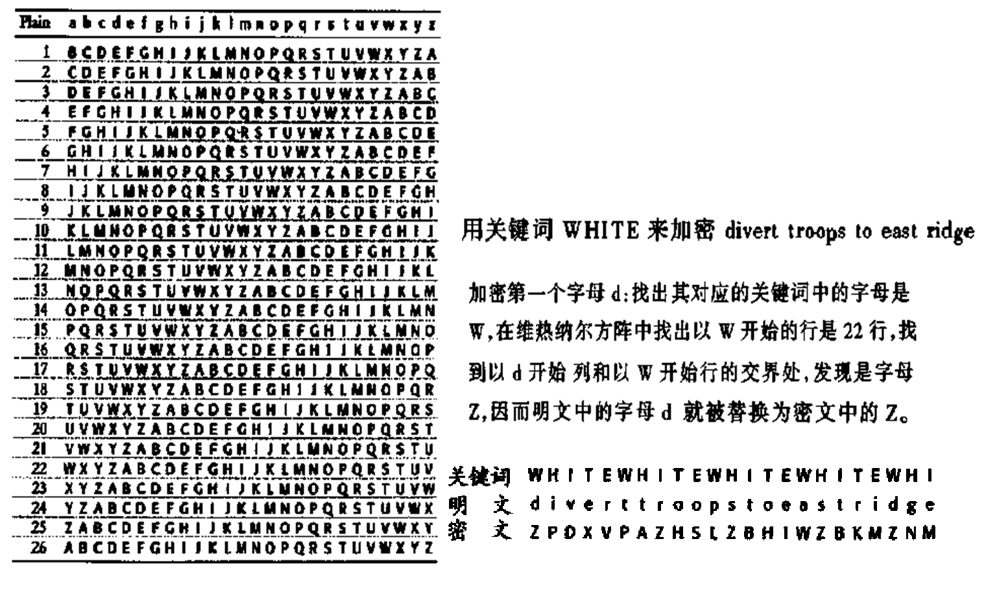

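The model adopts a Transformer-like architecture and uses the hypergraph structure to encode instance correlations and feature correlations. Unlike conventional GNN models, each HyperFormer layer uses two distinct hypergraph-guided message-passing functions to learn representations, capturing high-order instance correlations and feature correlations simultaneously. Formally, a HyperFormer layer can be defined as: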

where TF denotes the Transformer-like attention mechanism, TF𝑒𝑑𝑔𝑒 is the message-passing function that aggregates hyperedge information into nodes, and TF𝑛𝑜𝑑𝑒 is the message-passing function that aggregates node information into hyperedges.
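One way to write a single layer in terms of these two functions, as a sketch rather than the paper's exact formula. The notation is introduced here for illustration: $\mathbf{x}_v^{l}$ and $\mathbf{e}_u^{l}$ are the representations of node $v$ and hyperedge $u$ after layer $l$, $\mathcal{E}_v$ is the set of hyperedges incident to $v$, and $\mathcal{N}_u$ is the set of nodes in hyperedge $u$. The node-to-edge step is assumed to use the freshly updated node representations.

```latex
\begin{aligned}
\mathbf{x}_v^{l} &= \mathrm{TF}_{edge}\big(\mathbf{x}_v^{l-1},\ \{\mathbf{e}_u^{l-1} : u \in \mathcal{E}_v\}\big), \\
\mathbf{e}_u^{l} &= \mathrm{TF}_{node}\big(\mathbf{e}_u^{l-1},\ \{\mathbf{x}_v^{l} : v \in \mathcal{N}_u\}\big).
\end{aligned}
```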

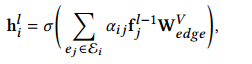

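Specifically, the initial hyperedge representations are first looked up from the feature embedding table F, and the initial node representation of each instance is computed by concatenating the representations of all its features. Without loss of generality, the message-passing functions of a single HyperFormer layer 𝑙 are defined as follows: given all hyperedge representations, feature-to-instance (edge-to-node) message passing is first applied to learn the next-layer representation of node 𝑣.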
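The initialization step can be sketched as follows, assuming a randomly initialized embedding table `F` indexed by global feature id and an embedding size `dim`; both names, the Gaussian initialization, and the function `init_representations` are assumptions for illustration. Hyperedge u starts from the embedding of its feature value, and node v starts from the concatenation of the embeddings of all its features.

```python
import random


def init_representations(batch, num_features, dim, seed=0):
    """Initial hyperedge and node representations for one mini-batch.

    batch: list of instances, each a list of global feature ids."""
    rng = random.Random(seed)
    # Hypothetical feature embedding table F: one dim-sized vector per feature id.
    F = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(num_features)]
    edge_ids = sorted({fid for inst in batch for fid in inst})
    # Initial hyperedge representation: the embedding of its feature value.
    E0 = {fid: F[fid] for fid in edge_ids}
    # Initial node representation: concatenation of the instance's feature embeddings.
    X0 = [[x for fid in inst for x in F[fid]] for inst in batch]
    return F, E0, X0


batch = [[0, 5, 7], [1, 5, 8]]
F, E0, X0 = init_representations(batch, num_features=10, dim=4)
print(len(X0[0]), len(E0[5]))
```

Because of the concatenation, a node vector has `num_fields * dim` entries while a hyperedge vector has `dim`, so the projection matrices applied in the attention step are presumably what brings the two into a common space.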

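In this step, the node representation from the previous HyperFormer layer 𝑙−1 serves as the Query, and the representations of the connected hyperedges are projected into Keys and Values. The similarity between the Query and each Key is computed as: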

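where $\mathbf{W}_K$ is the key projection matrix of the feature-to-instance Transformer. The next-layer node representation can then be computed as: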
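A plausible form of the similarity and update formulas, assuming standard single-head scaled dot-product attention restricted to the hyperedges incident to $v$ (written $\mathcal{E}_v$). The query and value projections $\mathbf{W}_Q$, $\mathbf{W}_V$ and the $1/\sqrt{d}$ scaling are assumptions; the paper's exact normalization may differ.

```latex
\begin{aligned}
\alpha_{v,u} &= \frac{\exp\!\big((\mathbf{W}_Q\mathbf{x}_v^{l-1})^{\top}(\mathbf{W}_K\mathbf{e}_u^{l-1})/\sqrt{d}\big)}
{\sum_{u' \in \mathcal{E}_v}\exp\!\big((\mathbf{W}_Q\mathbf{x}_v^{l-1})^{\top}(\mathbf{W}_K\mathbf{e}_{u'}^{l-1})/\sqrt{d}\big)}, \\
\mathbf{x}_v^{l} &= \sigma\Big(\mathbf{W}\sum_{u \in \mathcal{E}_v}\alpha_{v,u}\,\mathbf{W}_V\,\mathbf{e}_u^{l-1}\Big).
\end{aligned}
```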

Here σ is a nonlinear activation function such as ReLU, and W is a trainable projection matrix.
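The edge-to-node step can be sketched in plain Python for a single node, under the assumed formulas: a single head, scaled dot-product scores, a softmax over the incident hyperedges, and ReLU as σ. The function `edge_to_node` and the identity-matrix toy weights are hypothetical; a real implementation would batch this with tensors.

```python
import math


def matvec(M, x):
    # Multiply matrix M (a list of rows) by vector x.
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def relu(v):
    return [max(0.0, x) for x in v]


def edge_to_node(x_v, incident_edges, W_Q, W_K, W_V, W):
    """One feature-to-instance (edge-to-node) attention step for node v."""
    q = matvec(W_Q, x_v)                                # Query from node v (layer l-1)
    keys = [matvec(W_K, e) for e in incident_edges]     # Keys from incident hyperedges
    values = [matvec(W_V, e) for e in incident_edges]   # Values from incident hyperedges
    scale = math.sqrt(len(q))
    scores = [dot(q, k) / scale for k in keys]
    m = max(scores)                                     # subtract max for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    alpha = [w / total for w in weights]                # softmax over incident hyperedges
    agg = [sum(a * val[i] for a, val in zip(alpha, values))
           for i in range(len(values[0]))]              # attention-weighted sum of Values
    return relu(matvec(W, agg)), alpha                  # sigma(W * aggregate)


eye = [[1.0, 0.0], [0.0, 1.0]]
x_v = [1.0, 0.0]
incident = [[1.0, 0.0], [0.0, 1.0]]
out, alpha = edge_to_node(x_v, incident, eye, eye, eye, eye)
print(out, alpha)
```

With identity projections, the node attends more strongly to the hyperedge whose representation matches its own query ([1, 0]), so `alpha[0]` is larger than `alpha[1]`.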

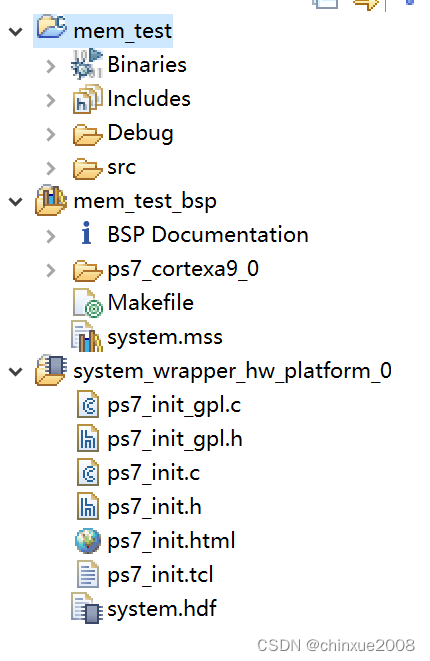
