Deploying OpenStack Bobcat on Ubuntu 22.04.3 LTS, Following the Official OpenStack Guide (Neutron with OVN)

Notes

Every password in this guide is set to 123456.

Machine details

| Hostname | NICs | CPU | Memory |
|---|---|---|---|
| controller | NIC 1: ens33 192.168.46.172/24; NIC 2: ens34, no IP address assigned | 2C | 8G |
| compute1 | NIC 1: ens33 192.168.46.173/24; NIC 2: ens34, no IP address assigned | 2C | 8G |

Basic configuration

Controller node

Set the hostname

hostnamectl set-hostname controller

Configure a static IP

vim /etc/netplan/00-installer-config.yaml

# This is the network config written by 'subiquity'
network:
  ethernets:
    ens33:
      dhcp4: no
      dhcp6: no
      addresses:
        - 192.168.46.172/24
      routes:
        - to: default
          via: 192.168.46.2
      nameservers:
        addresses:
          - 114.114.114.114
          - 8.8.8.8
    ens34:
      dhcp4: no
      dhcp6: no
      addresses: []
  version: 2

netplan apply

Configure hosts

vim /etc/hosts

192.168.46.172 controller

192.168.46.173 compute1
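If you would rather script this step than edit the file by hand, here is a minimal sketch of an idempotent append. The function name add_host_entry and its optional third argument (a file path that defaults to /etc/hosts) are my own illustration, not part of any existing tool.

```shell
# Append "IP NAME" to a hosts file only when NAME is not already mapped.
# $1 = IP address, $2 = hostname, $3 = hosts file (default: /etc/hosts).
add_host_entry() (
    ip="$1"
    name="$2"
    hosts_file="${3:-/etc/hosts}"
    # Look for NAME as a whole field on any non-comment line.
    if ! awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }
    ' "$hosts_file"; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
)
```

Run as root on both nodes, for example `add_host_entry 192.168.46.172 controller` followed by `add_host_entry 192.168.46.173 compute1`. Running it twice leaves the file unchanged.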

Compute node

Set the hostname

hostnamectl set-hostname compute1

Configure a static IP

vim /etc/netplan/00-installer-config.yaml

# This is the network config written by 'subiquity'
network:
  ethernets:
    ens33:
      dhcp4: no
      dhcp6: no
      addresses:
        - 192.168.46.173/24
      routes:
        - to: default
          via: 192.168.46.2
      nameservers:
        addresses:
          - 114.114.114.114
          - 8.8.8.8
    ens34:
      dhcp4: no
      dhcp6: no
      addresses: []
  version: 2

netplan apply

Configure hosts

vim /etc/hosts

192.168.46.172 controller

192.168.46.173 compute1

Verify the configuration

Controller node and Compute node

ping -c 4 www.baidu.com

# Compute node

ping -c 4 controller

# Controller node

ping -c 4 compute1

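Configure NTP

Controller node and Compute node

Set the time zone

timedatectl set-timezone Asia/Shanghai

Install the package

apt install chrony -y

Edit the configuration file

vim /etc/chrony/chrony.conf

# Comment out every line that starts with pool

# Add the Alibaba Cloud time server below

server ntp.aliyun.com iburst

Restart the service

systemctl restart chrony && systemctl enable chrony

Verify the configuration

chronyc sources

# Output like the following means the configuration works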

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* 203.107.6.88 2 6 17 13 -2690us[-3632us] +/- 34ms
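The line that matters in that output is the one beginning with `^*`, which marks the source chrony is currently synchronised to. As a rough sketch, the check can be scripted like this; the function name has_selected_source is hypothetical.

```shell
# Succeeds when the text on stdin (as printed by `chronyc sources`)
# contains a line starting with "^*", i.e. a currently selected server.
has_selected_source() {
    grep -q '^\^\*'
}
```

Usage on either node: `chronyc sources | has_selected_source && echo "time is synchronised"`.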

Install the OpenStack packages

Controller node and Compute node

add-apt-repository cloud-archive:bobcat

apt update

# Controller node

apt install python3-openstackclient -y

Install the SQL database

Controller node

Install the packages

apt install mariadb-server python3-pymysql -y

Edit the configuration file

vim /etc/mysql/mariadb.conf.d/99-openstack.cnf

[mysqld]

bind-address = 192.168.46.172 # IP address of the Controller node

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

Restart the service

systemctl restart mysql && systemctl enable mysql

Secure the database installation

mysql_secure_installation

Install the message queue

Controller node

Install the package

apt install rabbitmq-server -y

Add the user

rabbitmqctl add_user openstack RABBIT_PASS

Replace RABBIT_PASS with the password you choose.

Set the permissions

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

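Install Memcached

Controller node

Install the packages

apt install memcached python3-memcache -y

Edit the configuration file

vim /etc/memcached.conf

-l 127.0.0.1

# Change it to the Controller node's IP address:

-l 192.168.46.172

Restart the service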

systemctl restart memcached
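The same `-l 127.0.0.1` change can be made non-interactively. This is only a sketch: the function name set_memcached_listen and the optional file argument are assumptions of mine, and it relies on GNU sed's `-i` as shipped with Ubuntu.

```shell
# Rewrite the "-l 127.0.0.1" listen line in a memcached.conf-style file.
# $1 = new listen address, $2 = config file (default: /etc/memcached.conf).
set_memcached_listen() (
    ip="$1"
    conf="${2:-/etc/memcached.conf}"
    # Only an exact "-l 127.0.0.1" line is replaced; other lines stay untouched.
    sed -i "s/^-l 127\.0\.0\.1\$/-l ${ip}/" "$conf"
)
```

For example, `set_memcached_listen 192.168.46.172` followed by `systemctl restart memcached`.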

Install Etcd

Controller node

Install the package

apt install etcd -y

Edit the configuration file

vim /etc/default/etcd

# Every IP address below is the Controller node's IP address

ETCD_NAME="controller"

ETCD_DATA_DIR="/var/lib/etcd"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER="controller=http://192.168.46.172:2380"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.46.172:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.46.172:2379"

ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.46.172:2379"

Restart the service

systemctl restart etcd && systemctl enable etcd

Install Keystone

Controller node

Create the database

mysql

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' \

IDENTIFIED BY 'KEYSTONE_DBPASS';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' \

IDENTIFIED BY 'KEYSTONE_DBPASS';

Replace KEYSTONE_DBPASS with the password you choose.

exit
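The same CREATE DATABASE plus two GRANT statements recur for Glance, Placement, Nova, and Neutron, so it may help to generate them. Below is a minimal sketch; the function name service_db_sql is hypothetical, and it assumes the password contains no single quote.

```shell
# Print the CREATE DATABASE / GRANT statements for one service database.
# $1 = database name, $2 = database user, $3 = password (no single quotes).
service_db_sql() {
    printf "CREATE DATABASE %s;\n" "$1"
    # Grant access from the local socket and from any remote host.
    for host in localhost %; do
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
            "$1" "$2" "$host" "$3"
    done
}
```

For instance, `service_db_sql keystone keystone KEYSTONE_DBPASS | mysql` does the same work as the statements typed above.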

Install the component

apt install keystone -y

Configure the component

vim /etc/keystone/keystone.conf

[database]

connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

KEYSTONE_DBPASS is the database password set for the keystone user above.

[token]

provider = fernet

Populate the database

su -s /bin/sh -c "keystone-manage db_sync" keystone

# The following error can be ignored; it has no effect

Exception ignored in: <function _removeHandlerRef at 0x7f285a6a83a0>

Traceback (most recent call last):

File "/usr/lib/python3.10/logging/__init__.py", line 846, in _removeHandlerRef

File "/usr/lib/python3.10/logging/__init__.py", line 226, in _acquireLock

File "/usr/lib/python3.10/threading.py", line 164, in acquire

File "/usr/lib/python3/dist-packages/eventlet/green/thread.py", line 34, in get_ident

AttributeError: 'NoneType' object has no attribute 'getcurrent'

Initialize the Fernet and credential key repositories

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

Bootstrap the Identity service

keystone-manage bootstrap --bootstrap-password ADMIN_PASS \

--bootstrap-admin-url http://controller:5000/v3/ \

--bootstrap-internal-url http://controller:5000/v3/ \

--bootstrap-public-url http://controller:5000/v3/ \

--bootstrap-region-id RegionOne

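Replace ADMIN_PASS with the password you want for the admin user.

Configure Apache

vim /etc/apache2/apache2.conf

# Add this line if it is not already present

ServerName controller

Restart the Apache service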

systemctl restart apache2

Create the environment script

vim admin-openrc.sh

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

ADMIN_PASS is the admin password set during bootstrap.

chmod +x admin-openrc.sh

source admin-openrc.sh

Create a domain

openstack domain create --description "An Example Domain" example

Create a project

openstack project create --domain default \

--description "Demo Project" myproject

Create a user

openstack user create --domain default \

--password-prompt myuser

Create a role

openstack role create myrole

Add the role to the project and user

openstack role add --project myproject --user myuser myrole

Verify the configuration

source admin-openrc.sh

openstack --os-auth-url http://controller:5000/v3 \

--os-project-domain-name Default --os-user-domain-name Default \

--os-project-name admin --os-username admin token issue

# The output should look similar to the following

root@controller:~# openstack --os-auth-url http://controller:5000/v3 \

> --os-project-domain-name Default --os-user-domain-name Default \

> --os-project-name admin --os-username admin token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2024-03-31T08:23:06+0000 |

| id | gAAAAABmCQ9aC7KVhtUt0bIyFuTU-lMkFo0hoZ0Aijv319VmVLKuO-zqx_K2Gp_E7ctscBU8Vx4ESY3eI4WLXYMeyHhcZW6V9Ki2XehhCMSgx8cRBtZNOKu6at9P_W1SF75Z6qYjcZhHMMhA7FqM5Audwu4HLM1IWpkbX0vFeqaGhEqVJnKfjaY |

| project_id | 1c9ca3eb987f477c9abdbcff2f605a60 |

| user_id | 33d24fdc018a46aa830bfd43fd7e9a6f |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

Install Glance

Controller node

Create the database

mysql

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' \

IDENTIFIED BY 'GLANCE_DBPASS';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' \

IDENTIFIED BY 'GLANCE_DBPASS';

Replace GLANCE_DBPASS with the password you choose.

exit

Create the glance user

source admin-openrc.sh

openstack user create --domain default --password-prompt glance

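Grant the admin role to the glance user in the service project

openstack role add --project service --user glance admin

# If you see the following error

No project with a name or ID of 'service' exists.

# first create a project named service

openstack project create --domain default service

# then run the role add command again

Create the glance service entity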

openstack service create --name glance \

--description "OpenStack Image" image

Create the Image service API endpoints

openstack endpoint create --region RegionOne \

image public http://controller:9292

openstack endpoint create --region RegionOne \

image internal http://controller:9292

openstack endpoint create --region RegionOne \

image admin http://controller:9292
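Every service in this guide registers the same three interfaces (public, internal, admin) against one URL, so the three commands can be produced by a loop. The sketch below only prints the commands; the function name endpoint_create_cmds is hypothetical.

```shell
# Print the three `openstack endpoint create` commands for one service type.
# $1 = service type (e.g. image), $2 = endpoint URL.
# Printing keeps this a dry run; pipe the output to `sh` to execute it.
endpoint_create_cmds() {
    for iface in public internal admin; do
        printf 'openstack endpoint create --region RegionOne %s %s %s\n' \
            "$1" "$iface" "$2"
    done
}
```

For example, `endpoint_create_cmds image http://controller:9292 | sh` after sourcing admin-openrc.sh.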

Install the component

apt install glance -y

Configure the component

vim /etc/glance/glance-api.conf

[database]

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

GLANCE_DBPASS is the database password set for the glance user above.

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = GLANCE_PASS

GLANCE_PASS is the password set when the glance user was created with openstack user create.

[paste_deploy]

flavor = keystone

[DEFAULT]

enabled_backends=fs:file

[glance_store]

default_backend = fs

[fs]

filesystem_store_datadir = /var/lib/glance/images/

[oslo_limit]

auth_url = http://controller:5000

auth_type = password

user_domain_id = default

username = glance

system_scope = all

password = GLANCE_PASS

endpoint_id = 3622d855ba414f15a4becbef8479d5bf

region_name = RegionOne

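GLANCE_PASS is the password set when the glance user was created with openstack user create.

endpoint_id is the ID of the public Image service API endpoint created above.

You can look it up with the following command: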

openstack endpoint list
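To avoid picking the ID out of the full table by eye, the list can be filtered down to a single value. A small sketch, with a hypothetical wrapper name; it assumes admin credentials are already loaded from admin-openrc.sh.

```shell
# Print only the ID of the public Image service endpoint.
# --service and --interface filter the list; -f value -c ID strips the table.
image_public_endpoint_id() {
    openstack endpoint list --service image --interface public -f value -c ID
}
```

The printed value is what goes into endpoint_id under [oslo_limit].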

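Grant the glance user the reader role on the system scope

openstack role add --user glance --user-domain Default --system all reader

Populate the database

su -s /bin/sh -c "glance-manage db_sync" glance

Restart the Image service

systemctl restart glance-api

Verify the configuration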

source admin-openrc.sh

wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

glance image-create --name "cirros" \

--file /root/cirros-0.4.0-x86_64-disk.img \

--disk-format qcow2 --container-format bare \

--visibility=public

glance image-list

# Output like the following means the component is running

root@controller:~# glance image-list

+--------------------------------------+--------+

| ID | Name |

+--------------------------------------+--------+

| 8244fbc0-f798-44c5-b0f3-9e5566e7e345 | cirros |

+--------------------------------------+--------+

Install Placement

Controller node

Create the database

mysql

CREATE DATABASE placement;

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' \

IDENTIFIED BY 'PLACEMENT_DBPASS';

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' \

IDENTIFIED BY 'PLACEMENT_DBPASS';

Replace PLACEMENT_DBPASS with the password you choose.

exit

Create the placement user

source admin-openrc.sh

openstack user create --domain default --password-prompt placement

Grant the admin role to the placement user

openstack role add --project service --user placement admin

Create the placement service entity

openstack service create --name placement \

--description "Placement API" placement

Create the API endpoints

openstack endpoint create --region RegionOne \

placement public http://controller:8778

openstack endpoint create --region RegionOne \

placement internal http://controller:8778

openstack endpoint create --region RegionOne \

placement admin http://controller:8778

Install the component

apt install placement-api -y

Configure the component

vim /etc/placement/placement.conf

[placement_database]

connection = mysql+pymysql://placement:PLACEMENT_DBPASS@controller/placement

PLACEMENT_DBPASS is the database password set for the placement user above.

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = PLACEMENT_PASS

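PLACEMENT_PASS is the password set when the placement user was created with openstack user create.

Populate the database

su -s /bin/sh -c "placement-manage db sync" placement

Restart the apache2 service

systemctl restart apache2

Verify the configuration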

source admin-openrc.sh

placement-status upgrade check

# Output like the following means success

root@controller:~# placement-status upgrade check

+-------------------------------------------+

| Upgrade Check Results |

+-------------------------------------------+

| Check: Missing Root Provider IDs |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: Incomplete Consumers |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: Policy File JSON to YAML Migration |

| Result: Success |

| Details: None |

+-------------------------------------------+

openstack --os-placement-api-version 1.2 resource class list --sort-column name

# Output like the following means success

root@controller:~# openstack --os-placement-api-version 1.2 resource class list --sort-column name

+----------------------------------------+

| name |

+----------------------------------------+

| DISK_GB |

| FPGA |

| IPV4_ADDRESS |

| MEMORY_MB |

| MEM_ENCRYPTION_CONTEXT |

| NET_BW_EGR_KILOBIT_PER_SEC |

| NET_BW_IGR_KILOBIT_PER_SEC |

| NET_PACKET_RATE_EGR_KILOPACKET_PER_SEC |

| NET_PACKET_RATE_IGR_KILOPACKET_PER_SEC |

| NET_PACKET_RATE_KILOPACKET_PER_SEC |

| NUMA_CORE |

| NUMA_MEMORY_MB |

| NUMA_SOCKET |

| NUMA_THREAD |

| PCI_DEVICE |

| PCPU |

| PGPU |

| SRIOV_NET_VF |

| VCPU |

| VGPU |

| VGPU_DISPLAY_HEAD |

+----------------------------------------+

openstack --os-placement-api-version 1.6 trait list --sort-column name

Install Nova

Controller node

Create the databases

mysql

CREATE DATABASE nova_api;

CREATE DATABASE nova;

CREATE DATABASE nova_cell0;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';

Replace NOVA_DBPASS with the password you choose.

exit

Create the nova user

source admin-openrc.sh

openstack user create --domain default --password-prompt nova

Grant the admin role to the nova user

openstack role add --project service --user nova admin

Create the nova service entity

openstack service create --name nova \

--description "OpenStack Compute" compute

Create the API endpoints

openstack endpoint create --region RegionOne \

compute public http://controller:8774/v2.1

openstack endpoint create --region RegionOne \

compute internal http://controller:8774/v2.1

openstack endpoint create --region RegionOne \

compute admin http://controller:8774/v2.1

Install the components

apt install nova-api nova-conductor nova-novncproxy nova-scheduler -y

Configure the components

vim /etc/nova/nova.conf

[api_database]

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

NOVA_DBPASS is the database password set for the nova user above.

[database]

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

NOVA_DBPASS is the database password set for the nova user above.

[DEFAULT]

transport_url = rabbit://openstack:RABBIT_PASS@controller:5672/

my_ip = 192.168.46.172

RABBIT_PASS is the password set for the openstack user with rabbitmqctl.

my_ip is the Controller node's IP address.

[api]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

NOVA_PASS is the password set when the nova user was created with openstack user create.

[service_user]

send_service_user_token = true

auth_url = http://controller:5000/v3

auth_strategy = keystone

auth_type = password

project_domain_name = Default

project_name = service

user_domain_name = Default

username = nova

password = NOVA_PASS

NOVA_PASS is the password set when the nova user was created with openstack user create.

[vnc]

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = PLACEMENT_PASS

PLACEMENT_PASS is the password set when the placement user was created with openstack user create.

Populate the databases

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

su -s /bin/sh -c "nova-manage db sync" nova

Verify that cell0 and cell1 are registered

su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

# The output should look similar to the following

root@controller:~# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+------------------------------------------+-------------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+------------------------------------------+-------------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@controller/nova_cell0 | False |

| cell1 | e52327df-10e2-41e1-a1d2-5d7724a85688 | rabbit://openstack:****@controller:5672/ | mysql+pymysql://nova:****@controller/nova | False |

+-------+--------------------------------------+------------------------------------------+-------------------------------------------------+----------+

Restart the services

systemctl restart nova-api nova-scheduler nova-conductor nova-novncproxy

Compute node

Install the component

apt install nova-compute -y

Configure the component

vim /etc/nova/nova.conf

[DEFAULT]

transport_url = rabbit://openstack:RABBIT_PASS@controller

my_ip = 192.168.46.173

RABBIT_PASS is the password set for the openstack user in RabbitMQ.

my_ip is the Compute node's IP address.

[api]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

NOVA_PASS is the password set when the nova user was created with openstack user create.

[service_user]

send_service_user_token = true

auth_url = http://controller:5000/v3

auth_strategy = keystone

auth_type = password

project_domain_name = Default

project_name = service

user_domain_name = Default

username = nova

password = NOVA_PASS

NOVA_PASS is the password set when the nova user was created with openstack user create.

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

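Replace controller in novncproxy_base_url with the Controller node's IP address, so that a browser on a machine that cannot resolve the hostname controller can still open the console.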

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = PLACEMENT_PASS

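PLACEMENT_PASS is the password set when the placement user was created with openstack user create.

Set the virtualization type

egrep -c '(vmx|svm)' /proc/cpuinfo

If the command returns 0, the node has no hardware virtualization support, so libvirt must use QEMU instead of KVM: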

vim /etc/nova/nova-compute.conf

[libvirt]

virt_type = qemu

Restart the service

systemctl restart nova-compute
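The decision behind the virt_type setting above can be sketched as a small function. The name pick_virt_type and the optional file argument are my own illustration; the logic is just the same vmx/svm search used in the egrep check.

```shell
# Print "kvm" when the CPU flags include vmx (Intel) or svm (AMD), else "qemu".
# $1 = cpuinfo file (default: /proc/cpuinfo); another path is handy for testing.
pick_virt_type() (
    cpuinfo="${1:-/proc/cpuinfo}"
    if grep -Eq '(vmx|svm)' "$cpuinfo"; then
        echo kvm
    else
        echo qemu
    fi
)
```

Running `pick_virt_type` on the Compute node prints the value to put after `virt_type =` in the [libvirt] section.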

Add the compute node to the cell database (on the Controller)

source admin-openrc.sh

openstack compute service list --service nova-compute

# The output looks like this

root@controller:~# openstack compute service list --service nova-compute

+--------------------------------------+--------------+----------+------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+--------------------------------------+--------------+----------+------+---------+-------+----------------------------+

| c9dd606c-0f31-4a9e-9c6f-bb40e33cf054 | nova-compute | compute1 | nova | enabled | up | 2024-03-31T08:01:53.000000 |

+--------------------------------------+--------------+----------+------+---------+-------+----------------------------+

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

# The output looks like this

root@controller:~# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': e52327df-10e2-41e1-a1d2-5d7724a85688

Checking host mapping for compute host 'compute1': 844b86c1-7310-4ddf-82cd-0b8f3350ef0d

Creating host mapping for compute host 'compute1': 844b86c1-7310-4ddf-82cd-0b8f3350ef0d

Found 1 unmapped computes in cell: e52327df-10e2-41e1-a1d2-5d7724a85688

Verify the configuration (on the Controller)

openstack compute service list

# The output looks like this

root@controller:~# openstack compute service list

+--------------------------------------+----------------+------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+--------------------------------------+----------------+------------+----------+---------+-------+----------------------------+

| 2779af9c-cde5-41a6-87b2-5b6840d9b88b | nova-conductor | controller | internal | enabled | up | 2024-03-31T08:03:07.000000 |

| b24be4f1-ea05-4ee3-a7eb-8402ff53f172 | nova-scheduler | controller | internal | enabled | up | 2024-03-31T08:03:15.000000 |

| c9dd606c-0f31-4a9e-9c6f-bb40e33cf054 | nova-compute | compute1 | nova | enabled | up | 2024-03-31T08:03:13.000000 |

+--------------------------------------+----------------+------------+----------+---------+-------+----------------------------+

openstack catalog list

openstack image list

nova-status upgrade check

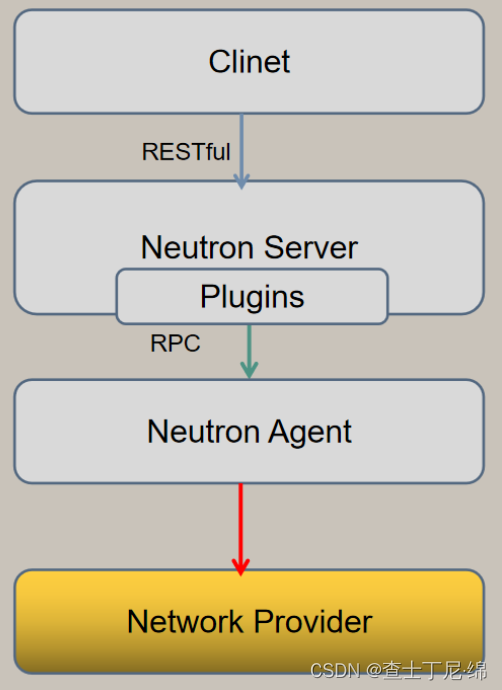

Install Neutron (with OVN)

Controller node

Create the database

mysql

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' \

IDENTIFIED BY 'NEUTRON_DBPASS';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' \

IDENTIFIED BY 'NEUTRON_DBPASS';

Replace NEUTRON_DBPASS with the password you choose.

exit

Create the neutron user

source admin-openrc.sh

openstack user create --domain default --password-prompt neutron

Grant the admin role to the neutron user

openstack role add --project service --user neutron admin

Create the neutron service entity

openstack service create --name neutron \

--description "OpenStack Networking" network

Create the API endpoints

openstack endpoint create --region RegionOne \

network public http://controller:9696

openstack endpoint create --region RegionOne \

network internal http://controller:9696

openstack endpoint create --region RegionOne \

network admin http://controller:9696

Install the components

apt -y install neutron-server neutron-plugin-ml2 python3-neutronclient ovn-central openvswitch-switch

Configure the components

vim /etc/neutron/neutron.conf

[DEFAULT]

bind_host = controller

bind_port = 9696

core_plugin = ml2

service_plugins = ovn-router

auth_strategy = keystone

state_path = /var/lib/neutron

allow_overlapping_ips = True

notify_nova_on_port_status_changes = True

notify_nova_on_port_data_changes = True

transport_url = rabbit://openstack:RABBIT_PASS@controller

RABBIT_PASS is the password set for the openstack user in RabbitMQ.

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = neutron

password = NEUTRON_PASS

NEUTRON_PASS is the password set when the neutron user was created with openstack user create.

[database]

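connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

NEUTRON_DBPASS is the database password set for the neutron user in the GRANT statements above (not the Keystone password NEUTRON_PASS).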

[nova]

auth_url = http://controller:5000

auth_type = password

project_domain_name = Default

user_domain_name = Default

region_name = RegionOne

project_name = service

username = nova

password = NOVA_PASS

NOVA_PASS is the password set when the nova user was created with openstack user create.

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[DEFAULT]

debug = false

[ml2]

type_drivers = flat,geneve

tenant_network_types = geneve

mechanism_drivers = ovn

extension_drivers = port_security

overlay_ip_version = 4

[ml2_type_geneve]

vni_ranges = 1:65536

max_header_size = 38

[ml2_type_flat]

flat_networks = *

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

[ovn]

ovn_nb_connection = tcp:ControllerIP:6641

ovn_sb_connection = tcp:ControllerIP:6642

ovn_l3_scheduler = leastloaded

ovn_metadata_enabled = True

Replace ControllerIP with the Controller node's IP address.

vim /etc/default/openvswitch-switch

OVS_CTL_OPTS="--ovsdb-server-options='--remote=ptcp:6640:127.0.0.1'"

vim /etc/nova/nova.conf

[neutron]

auth_url = http://controller:5000

auth_type = password

project_domain_name = Default

user_domain_name = Default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

service_metadata_proxy = True

metadata_proxy_shared_secret = METADATA_PASS

insecure = false

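NEUTRON_PASS is the password set when the neutron user was created with openstack user create.

METADATA_PASS is a shared secret of your choosing for the metadata proxy.

Populate the database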

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \

--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Restart the services

systemctl restart nova-api

systemctl restart openvswitch-switch

ovs-vsctl add-br br-int

systemctl restart ovn-central ovn-northd

ovn-nbctl set-connection ptcp:6641:ControllerIP -- set connection . inactivity_probe=60000

ovn-sbctl set-connection ptcp:6642:ControllerIP -- set connection . inactivity_probe=60000

Replace ControllerIP with the Controller node's IP address.
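After the two set-connection commands, the OVN northbound and southbound databases should be listening on TCP 6641 and 6642. One way to confirm this, sketched here with a hypothetical function name, is to scan the output of `ss -ltn`, whose fourth column is the local address and port.

```shell
# Succeeds when the `ss -ltn` output on stdin shows listeners on both
# the OVN northbound (6641) and southbound (6642) database ports.
ovn_db_ports_listening() {
    awk '
        $4 ~ /:6641$/ { nb = 1 }
        $4 ~ /:6642$/ { sb = 1 }
        END { exit !(nb && sb) }
    '
}
```

Usage on the Controller: `ss -ltn | ovn_db_ports_listening && echo "OVN databases are reachable over TCP"`.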

systemctl restart neutron-server

Compute node

Install the components

apt -y install neutron-common neutron-plugin-ml2 neutron-ovn-metadata-agent ovn-host openvswitch-switch

Configure the components

vim /etc/neutron/neutron.conf

[DEFAULT]

core_plugin = ml2

service_plugins = ovn-router

auth_strategy = keystone

state_path = /var/lib/neutron

allow_overlapping_ips = True

transport_url = rabbit://openstack:RABBIT_PASS@controller

RABBIT_PASS is the password set for the openstack user in RabbitMQ.

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = neutron

password = NEUTRON_PASS

NEUTRON_PASS is the password set when the neutron user was created with openstack user create.

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[DEFAULT]

debug = false

[ml2]

type_drivers = flat,geneve

tenant_network_types = geneve

mechanism_drivers = ovn

extension_drivers = port_security

overlay_ip_version = 4

[ml2_type_geneve]

vni_ranges = 1:65536

max_header_size = 38

[ml2_type_flat]

flat_networks = *

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

[ovn]

ovn_nb_connection = tcp:ControllerIP:6641

ovn_sb_connection = tcp:ControllerIP:6642

ovn_l3_scheduler = leastloaded

ovn_metadata_enabled = True

Replace ControllerIP with the Controller node's IP address.

vim /etc/neutron/neutron_ovn_metadata_agent.ini

[DEFAULT]

nova_metadata_host = controller

nova_metadata_protocol = http

metadata_proxy_shared_secret = METADATA_PASS

METADATA_PASS must be the same shared secret configured on the Controller.

[ovs]

ovsdb_connection = tcp:127.0.0.1:6640

[agent]

root_helper = sudo neutron-rootwrap /etc/neutron/rootwrap.conf

[ovn]

ovn_sb_connection = tcp:ControllerIP:6642

Replace ControllerIP with the Controller node's IP address.

vim /etc/default/openvswitch-switch

OVS_CTL_OPTS="--ovsdb-server-options='--remote=ptcp:6640:127.0.0.1'"

vim /etc/nova/nova.conf

[neutron]

auth_url = http://controller:5000

auth_type = password

project_domain_name = Default

user_domain_name = Default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

service_metadata_proxy = True

metadata_proxy_shared_secret = METADATA_PASS

insecure = false

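NEUTRON_PASS is the password set when the neutron user was created with openstack user create.

METADATA_PASS is the shared secret for the metadata proxy.

Restart the services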

systemctl restart openvswitch-switch ovn-controller ovn-host

systemctl restart neutron-ovn-metadata-agent

systemctl restart nova-compute

ovs-vsctl set open . external-ids:ovn-remote=tcp:ControllerIP:6642

Replace ControllerIP with the Controller node's IP address.

ovs-vsctl set open . external-ids:ovn-encap-type=geneve

ovs-vsctl set open . external-ids:ovn-encap-ip=ComputeIP

Replace ComputeIP with the Compute node's IP address.
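The three external-ids settings can also be passed to a single ovs-vsctl call, since `set` accepts several column assignments at once. The sketch below only prints that command; the function name ovn_chassis_cmd is hypothetical.

```shell
# Print one ovs-vsctl call that sets all three OVN external-ids.
# $1 = Controller IP (OVN southbound DB), $2 = this node's tunnel endpoint IP.
ovn_chassis_cmd() {
    printf 'ovs-vsctl set open . %s %s %s\n' \
        "external-ids:ovn-remote=tcp:$1:6642" \
        "external-ids:ovn-encap-type=geneve" \
        "external-ids:ovn-encap-ip=$2"
}
```

On compute1 this would be `ovn_chassis_cmd 192.168.46.172 192.168.46.173 | sh`.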

Verify the configuration (on the Controller)

source admin-openrc.sh

openstack network agent list

# Output like the following means success

root@controller:~# openstack network agent list

+--------------------------------------+----------------------+----------+-------------------+-------+-------+----------------------------+

| ID | Agent Type | Host | Availability Zone | Alive | State | Binary |

+--------------------------------------+----------------------+----------+-------------------+-------+-------+----------------------------+

| e42ab135-4870-4bfd-8b90-47ad92732627 | OVN Controller agent | compute1 | | :-) | UP | ovn-controller |

| 952c013f-c53d-54b8-89b4-d55930a40171 | OVN Metadata agent | compute1 | | :-) | UP | neutron-ovn-metadata-agent |

+--------------------------------------+----------------------+----------+-------------------+-------+-------+----------------------------+
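Every agent should show `:-)` in the Alive column. As a rough sketch, the table can be checked mechanically; the function name count_dead_agents is hypothetical, and it assumes the default table layout in which Alive is the fifth column.

```shell
# Read `openstack network agent list` table output on stdin and print
# how many agent rows have an Alive column other than ":-)".
count_dead_agents() {
    awk -F'|' '
        NF > 7 && $2 !~ /^ *ID *$/ {
            gsub(/ /, "", $6)          # strip padding around the Alive cell
            if ($6 != ":-)") dead++
        }
        END { print dead + 0 }
    '
}
```

Usage: `openstack network agent list | count_dead_agents`; a result of 0 means all agents are alive.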

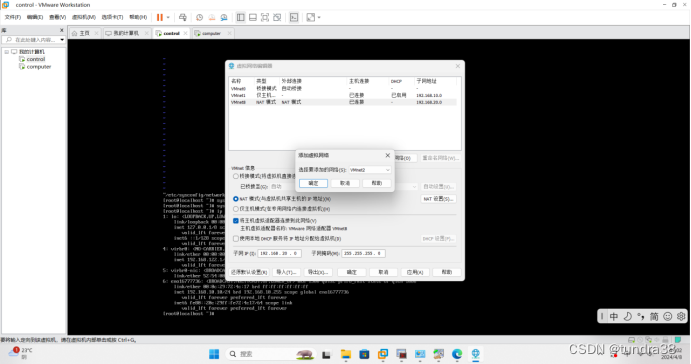

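Create the OVN networks

Controller node and Compute node

# br-ens34 is an arbitrary bridge name

ovs-vsctl add-br br-ens34

# ens34 is the name of the second NIC

ovs-vsctl add-port br-ens34 ens34

# provider is an arbitrary physical network name; it must match the --provider-physical-network value used below

ovs-vsctl set open . external-ids:ovn-bridge-mappings=provider:br-ens34

Controller node

# Create a router

openstack router create router

# Create the selfservice network

openstack network create selfservice --provider-network-type geneve

# Create the selfservice subnet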

openstack subnet create --network selfservice \

--dns-nameserver 8.8.8.8 --gateway 172.16.1.1 \

--subnet-range 172.16.1.0/24 selfservice

# Create the external network

openstack network create --share --external \

--provider-physical-network provider \

--provider-network-type flat provider

# Create the external network's subnet

openstack subnet create --network provider \

--allocation-pool start=192.168.46.240,end=192.168.46.250 \

--dns-nameserver 8.8.8.8 --gateway 192.168.46.2 \

--subnet-range 192.168.46.0/24 provider
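The allocation pool and the gateway both have to fall inside the subnet range, or the subnet is rejected. A quick sanity check can be sketched in plain shell arithmetic; ip_to_int and ip_in_cidr are hypothetical helper names, and the sketch handles IPv4 only.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() (
    IFS=.
    set -- $1                      # split the address on dots
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeed when address $1 lies inside CIDR $2 (e.g. 192.168.46.0/24).
ip_in_cidr() (
    net="${2%/*}"
    bits="${2#*/}"
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
)
```

For the values above: `ip_in_cidr 192.168.46.240 192.168.46.0/24 && ip_in_cidr 192.168.46.250 192.168.46.0/24 && ip_in_cidr 192.168.46.2 192.168.46.0/24 && echo ok`.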

# Set the router's external gateway

openstack router set router --external-gateway provider

# Attach the internal subnet to the router

openstack router add subnet router selfservice

# Check that it worked

openstack port list --router router

# Output like the following means success

root@controller:~# openstack port list --router router

+--------------------------------------+------+-------------------+-------------------------------------------------------------------------------+--------+

| ID | Name | MAC Address | Fixed IP Addresses | Status |

+--------------------------------------+------+-------------------+-------------------------------------------------------------------------------+--------+

| 56a061e8-f5b1-4df8-8dcb-347bb53ecead | | fa:16:3e:28:2c:c3 | ip_address='172.16.1.1', subnet_id='df79d0ea-9548-486c-8c79-b9dfe9da35ff' | ACTIVE |

| 6a5c3ba2-df0f-4552-91f1-8eafcdd65da0 | | fa:16:3e:02:f7:16 | ip_address='192.168.46.249', subnet_id='d608e2b9-d2e2-423c-bfec-2a23a1516760' | ACTIVE |

+--------------------------------------+------+-------------------+-------------------------------------------------------------------------------+--------+

# The same topology can also be inspected with the following command

ovn-nbctl show

# The output looks like this

root@controller:~# ovn-nbctl show

switch dc4e3afc-da27-4e73-b741-a1c8d57434f7 (neutron-43fe943c-fe1a-4dfe-9f3a-91dc7cdf823f) (aka provider)

port 6a5c3ba2-df0f-4552-91f1-8eafcdd65da0

type: router

router-port: lrp-6a5c3ba2-df0f-4552-91f1-8eafcdd65da0

port dcd112c2-dd97-4f40-b7ba-f8d64bd68a5d

addresses: ["fa:16:3e:16:bc:3a 192.168.46.246"]

port provnet-3140ef3f-1dc0-4490-bfdb-134d1c611aec

type: localnet

addresses: ["unknown"]

port 201604e9-1146-4536-a56d-965e82e26d8e

type: localport

addresses: ["fa:16:3e:5e:20:5b 192.168.46.240"]

switch e7a2f47f-0ea4-4587-bd18-d444a8f1b500 (neutron-31f3eb52-667f-4b5a-8ef3-3f1d048b29b3) (aka selfservice)

port d47fb30d-6d47-4d57-bd4d-96f779f6e765

type: localport

addresses: ["fa:16:3e:47:46:d8 172.16.1.2"]

port d2183412-590a-4bc0-b992-7efaf8a8c9f1

addresses: ["fa:16:3e:a0:e5:23 172.16.1.37"]

port 56a061e8-f5b1-4df8-8dcb-347bb53ecead

type: router

router-port: lrp-56a061e8-f5b1-4df8-8dcb-347bb53ecead

router 715fc656-e8f8-4328-aa2d-82b994bcfe2d (neutron-43435b65-0e5e-4bc0-90fa-5a01c1aa89c2) (aka router)

port lrp-56a061e8-f5b1-4df8-8dcb-347bb53ecead

mac: "fa:16:3e:28:2c:c3"

networks: ["172.16.1.1/24"]

port lrp-6a5c3ba2-df0f-4552-91f1-8eafcdd65da0

mac: "fa:16:3e:02:f7:16"

networks: ["192.168.46.249/24"]

gateway chassis: [e42ab135-4870-4bfd-8b90-47ad92732627]

nat d4d704be-2102-437b-bc4a-0be5504824aa

external ip: "192.168.46.249"

logical ip: "172.16.1.0/24"

type: "snat"

root@controller:~#

Install the Dashboard

Controller node

Install the component

apt install openstack-dashboard -y

Configure the component

vim /etc/openstack-dashboard/local_settings.py

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*']

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller:11211',

}

}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 3,

}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

vim /etc/apache2/conf-available/openstack-dashboard.conf

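# Add this line if it is not already present

WSGIApplicationGroup %{GLOBAL}

If the dashboard does not load correctly

python3 /usr/share/openstack-dashboard/manage.py compress

Reload the service

systemctl reload apache2.service

Basic resources (these can also be created from the dashboard)

Create a flavor that defines the vCPU, memory, and disk size of an instance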

openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

Set up a key pair

ssh-keygen -q -N ""

openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

Configure the security group rules

openstack security group rule create --proto icmp default

openstack security group rule create --proto tcp --dst-port 22 default