I. TCP Programming

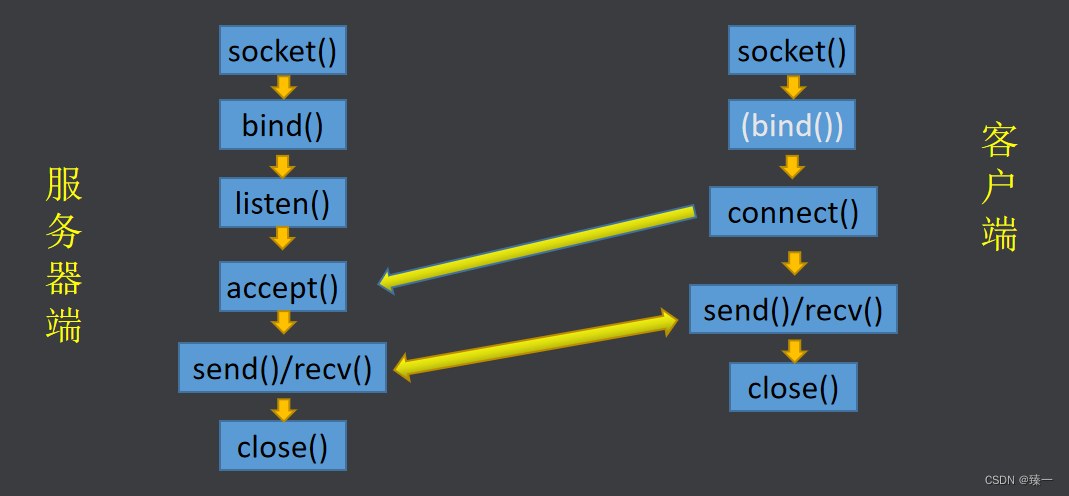

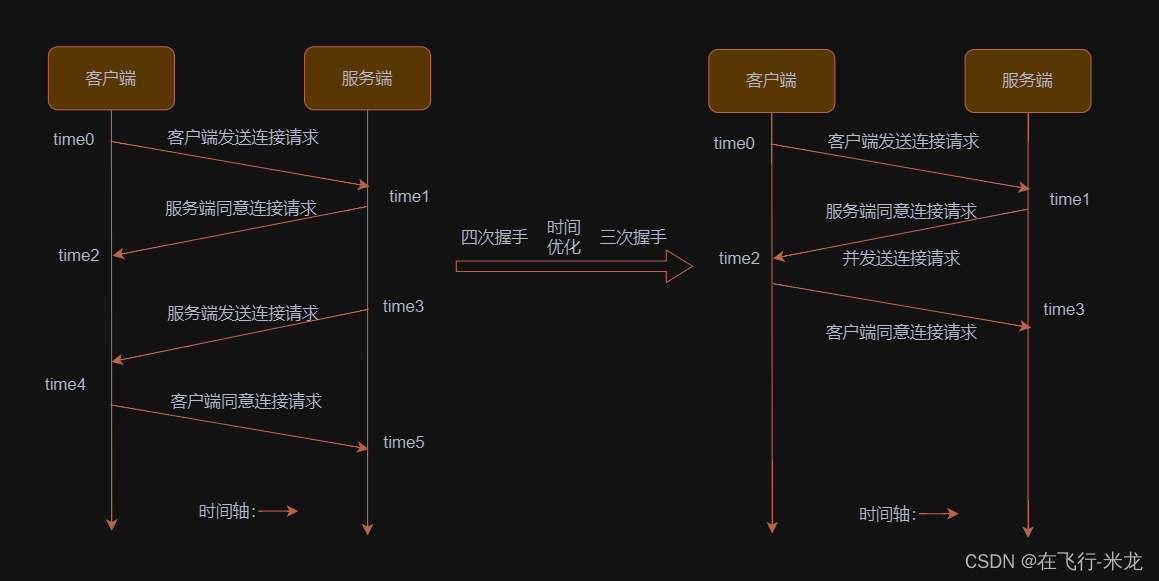

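The previous article covered basic network programming and the general framework of TCP programming. In practice, TCP is used very widely. DPDK could, in principle, go its own way and define its own networking model, but a huge number of existing projects depend on the widely accepted TCP/IP protocols and network model, and those cannot be changed at will. So what DPDK needs is a way to support TCP programming: it does not have to invent a new form of network programming, but a TCP/IP protocol stack can be implemented on top of it, and that is enough to make TCP/IP programming possible.

Google's QUIC and the newer HTTP/3 are examples of exactly this kind of home-grown protocol, so the question is not whether it can be done but whether the result can be promoted and widely accepted. Enough digression; back to the topic.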

II. Source Code Analysis

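One note first: readers need at least a basic understanding of TCP programming and the related protocols, otherwise parts of the code may be misread. The protocols themselves are not introduced here, since material is abundant, from textbooks to online articles to training videos. Anyone who is unsure can review them first.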

The code below comes from an open-source project on GitHub (https://github.com/hjlogzw/DPDK-TCP-UDP_Protocol_Stack).

The key points of TCP programming were analyzed in the previous article; here is an open-source example:

1. Data structures and protocol implementation

    #ifndef __TCP_H__
    #define __TCP_H__

    #include "common.h"

    int tcp_process(struct rte_mbuf* pstTcpMbuf);
    int tcp_out(struct rte_mempool* pstMbufPool);

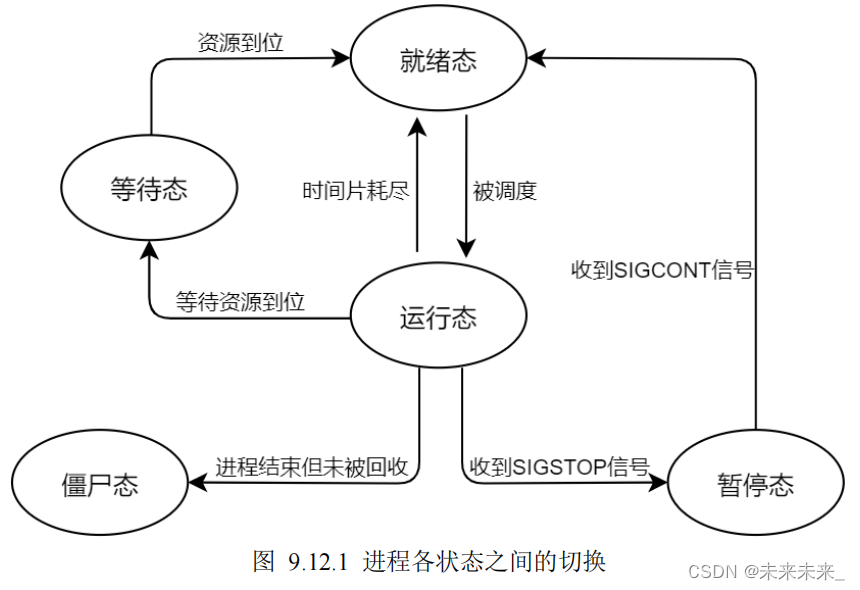

    // The 11 TCP connection states
    typedef enum _ENUM_TCP_STATUS
    {
        TCP_STATUS_CLOSED = 0,
        TCP_STATUS_LISTEN,
        TCP_STATUS_SYN_RCVD,
        TCP_STATUS_SYN_SENT,
        TCP_STATUS_ESTABLISHED,

        TCP_STATUS_FIN_WAIT_1,
        TCP_STATUS_FIN_WAIT_2,
        TCP_STATUS_CLOSING,
        TCP_STATUS_TIME_WAIT,

        TCP_STATUS_CLOSE_WAIT,
        TCP_STATUS_LAST_ACK
    } TCP_STATUS;

    // TCB (transmission control block): per-connection state.
    // Fields are named from the received packet's point of view:
    // sip/sport identify the peer, dip/dport the local side.
    struct tcp_stream
    {
        int fd;

        uint32_t dip;
        uint8_t localmac[RTE_ETHER_ADDR_LEN];
        uint16_t dport;

        uint8_t protocol;

        uint16_t sport;
        uint32_t sip;

        uint32_t snd_nxt; // seqnum
        uint32_t rcv_nxt; // acknum

        TCP_STATUS status;

        struct rte_ring* sndbuf;
        struct rte_ring* rcvbuf;

        struct tcp_stream* prev;
        struct tcp_stream* next;

        pthread_cond_t cond;
        pthread_mutex_t mutex;
    };

    struct tcp_table
    {
        int count;
        //struct tcp_stream *listener_set; //
    #if ENABLE_SINGLE_EPOLL
        struct eventpoll* ep; // single epoll
    #endif
        struct tcp_stream* tcb_set;
    };

    struct tcp_fragment
    {
        uint16_t sport;
        uint16_t dport;
        uint32_t seqnum;
        uint32_t acknum;
        uint8_t hdrlen_off;
        uint8_t tcp_flags;
        uint16_t windows;
        uint16_t cksum;
        uint16_t tcp_urp;

        int optlen;
        uint32_t option[D_TCP_OPTION_LENGTH];

        unsigned char* data;
        uint32_t length;
    };

    #endif
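The enum only names the states; how the server side moves between them is spread across the handler functions. As a reading aid, here is a minimal, self-contained sketch of the passive-open and passive-close path (LISTEN -> SYN_RCVD -> ESTABLISHED -> CLOSE_WAIT -> LAST_ACK -> CLOSED). The names `demo_state`, `demo_next_state`, and the `DEMO_*` constants are hypothetical and not part of the project. The flag values follow the bit layout of the TCP header, and the CLOSE_WAIT -> LAST_ACK step, which a real stack takes when the application closes the socket, is modeled as an explicit `app_close` argument.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical names for illustration; not taken from the project. */
enum demo_state {
    DEMO_CLOSED, DEMO_LISTEN, DEMO_SYN_RCVD, DEMO_ESTABLISHED,
    DEMO_CLOSE_WAIT, DEMO_LAST_ACK
};

/* TCP header flag bits (same bit positions as in the TCP header). */
#define DEMO_FIN 0x01
#define DEMO_SYN 0x02
#define DEMO_ACK 0x10

/*
 * Return the next server-side state for a received segment with the
 * given flags. app_close is non-zero when the local application has
 * closed the socket; it only matters in CLOSE_WAIT.
 * Combinations that are not handled leave the state unchanged.
 */
static enum demo_state demo_next_state(enum demo_state cur, uint8_t flags,
                                       int app_close)
{
    switch (cur) {
    case DEMO_LISTEN:
        return (flags & DEMO_SYN) ? DEMO_SYN_RCVD : cur;
    case DEMO_SYN_RCVD:
        return (flags & DEMO_ACK) ? DEMO_ESTABLISHED : cur;
    case DEMO_ESTABLISHED:
        return (flags & DEMO_FIN) ? DEMO_CLOSE_WAIT : cur;
    case DEMO_CLOSE_WAIT:
        return app_close ? DEMO_LAST_ACK : cur;
    case DEMO_LAST_ACK:
        return (flags & DEMO_ACK) ? DEMO_CLOSED : cur;
    default:
        return cur;
    }
}
```

Keeping the transition logic in one pure function like this makes it easy to test in isolation; the project instead distributes it over one handler per state.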

2. Interface implementation

    #include "tcp.h"

    static struct tcp_stream * tcp_stream_create(uint32_t sip, uint32_t dip, uint16_t sport, uint16_t dport)
    {
        char acBuf[32] = {0};
        unsigned int uiSeed;

        struct tcp_stream *pstStream = rte_malloc("tcp_stream", sizeof(struct tcp_stream), 0);
        if (pstStream == NULL)
            return NULL;

        pstStream->sip = sip;
        pstStream->dip = dip;
        pstStream->sport = sport;
        pstStream->dport = dport;
        pstStream->protocol = IPPROTO_TCP;
        pstStream->fd = -1;
        pstStream->status = TCP_STATUS_LISTEN;

        // snprintf bounds the write to the size of acBuf
        snprintf(acBuf, sizeof(acBuf), "sndbuf%x%d", sip, sport);
        pstStream->sndbuf = rte_ring_create(acBuf, D_RING_SIZE, rte_socket_id(), 0);
        snprintf(acBuf, sizeof(acBuf), "rcvbuf%x%d", sip, sport);
        pstStream->rcvbuf = rte_ring_create(acBuf, D_RING_SIZE, rte_socket_id(), 0);
        if (pstStream->sndbuf == NULL || pstStream->rcvbuf == NULL)
        {
            // ring creation failed: release whatever was allocated
            rte_ring_free(pstStream->sndbuf);
            rte_ring_free(pstStream->rcvbuf);
            rte_free(pstStream);
            return NULL;
        }

        // initial sequence number
        uiSeed = time(NULL);
        pstStream->snd_nxt = rand_r(&uiSeed) % D_TCP_MAX_SEQ;
        rte_memcpy(pstStream->localmac, &g_stCpuMac, RTE_ETHER_ADDR_LEN);

        pthread_cond_t blank_cond = PTHREAD_COND_INITIALIZER;
        rte_memcpy(&pstStream->cond, &blank_cond, sizeof(pthread_cond_t));

        pthread_mutex_t blank_mutex = PTHREAD_MUTEX_INITIALIZER;
        rte_memcpy(&pstStream->mutex, &blank_mutex, sizeof(pthread_mutex_t));

        return pstStream;
    }
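`tcp_stream_create` builds its ring names from the peer address in hexadecimal followed directly by the port in decimal (`%x%d`). Because there is no separator, two different (address, port) pairs can in principle produce the same string (for example address `0x1` with port `23` and address `0x12` with port `3` both end in `123`), and DPDK requires ring names to be unique. One possible alternative, sketched here under the assumption that fixed-width fields are acceptable, is shown below; the helper name `demo_ring_name` and its format are hypothetical, not the project's code.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/*
 * Hypothetical helper: write "<prefix>_<ip as 8 hex digits>_<port as 4 hex digits>"
 * into buf. Returns 0 on success, -1 if the name did not fit.
 * Fixed-width fields make the mapping from (ip, port) to name unambiguous.
 */
static int demo_ring_name(char *buf, size_t len, const char *prefix,
                          uint32_t ip, uint16_t port)
{
    assert(buf != NULL && prefix != NULL);
    int n = snprintf(buf, len, "%s_%08x_%04x", prefix,
                     (unsigned)ip, (unsigned)port);
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}
```

With a 6-character prefix this format yields 20 characters, which still has to stay below DPDK's `RTE_RING_NAMESIZE` limit when passed to `rte_ring_create`.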

    static int tcp_handle_listen(struct tcp_stream *pstStream, struct rte_tcp_hdr *pstTcphdr,
                                 struct rte_ipv4_hdr *pstIphdr)
    {
        if (pstTcphdr->tcp_flags & RTE_TCP_SYN_FLAG)
        {
            if (pstStream->status == TCP_STATUS_LISTEN)
            {
                // create a new stream for the half-open connection
                struct tcp_stream *pstSyn = tcp_stream_create(pstIphdr->src_addr, pstIphdr->dst_addr,
                                                              pstTcphdr->src_port, pstTcphdr->dst_port);
                if (pstSyn == NULL) // allocation can fail; do not link a NULL stream
                    return -1;
                LL_ADD(pstSyn, g_pstTcpTbl->tcb_set);

                struct tcp_fragment *pstFragment = rte_malloc("tcp_fragment", sizeof(struct tcp_fragment), 0);
                if (pstFragment == NULL)
                    return -1;
                memset(pstFragment, 0, sizeof(struct tcp_fragment));

                pstFragment->sport = pstTcphdr->dst_port;
                pstFragment->dport = pstTcphdr->src_port;

                struct in_addr addr;
                addr.s_addr = pstSyn->sip;
                printf("tcp ---> src: %s:%d ", inet_ntoa(addr), ntohs(pstTcphdr->src_port));

                addr.s_addr = pstSyn->dip;
                printf(" ---> dst: %s:%d \n", inet_ntoa(addr), ntohs(pstTcphdr->dst_port));

                // SYN-ACK: our ISN as seq, peer's seq + 1 as ack
                pstFragment->seqnum = pstSyn->snd_nxt;
                pstFragment->acknum = ntohl(pstTcphdr->sent_seq) + 1;
                pstSyn->rcv_nxt = pstFragment->acknum;

                pstFragment->tcp_flags = (RTE_TCP_SYN_FLAG | RTE_TCP_ACK_FLAG);
                pstFragment->windows = D_TCP_INITIAL_WINDOW;
                pstFragment->hdrlen_off = 0x50;

                pstFragment->data = NULL;
                pstFragment->length = 0;

                rte_ring_mp_enqueue(pstSyn->sndbuf, pstFragment);

                pstSyn->status = TCP_STATUS_SYN_RCVD;
            }
        }

        return 0;
    }
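The SYN-ACK built in `tcp_handle_listen` sets `hdrlen_off = 0x50`. In the TCP header the upper four bits of this byte are the data offset, counted in 32-bit words, so `0x50` means 5 words, that is, a 20-byte header without options. A small sketch of the encoding and decoding follows; both helper names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Decode the TCP header length in bytes from the data-offset byte. */
static unsigned demo_tcp_hdr_len(uint8_t data_off)
{
    return (unsigned)(data_off >> 4) * 4u;
}

/*
 * Encode a header length in bytes (20..60, multiple of 4) into the
 * data-offset byte; the low four bits are left as zero.
 */
static uint8_t demo_tcp_data_off(unsigned hdr_len_bytes)
{
    assert(hdr_len_bytes >= 20 && hdr_len_bytes <= 60 &&
           hdr_len_bytes % 4 == 0);
    return (uint8_t)((hdr_len_bytes / 4) << 4);
}
```

A stack that adds TCP options (the project reserves `option[]` and `optlen` in `struct tcp_fragment`) would have to raise this value accordingly.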

    static int tcp_handle_syn_rcvd(struct tcp_stream *pstStream, struct rte_tcp_hdr *pstTcphdr)
    {
        if (pstTcphdr->tcp_flags & RTE_TCP_ACK_FLAG)
        {
            if (pstStream->status == TCP_STATUS_SYN_RCVD)
            {
                uint32_t acknum = ntohl(pstTcphdr->recv_ack);
                if (acknum == pstStream->snd_nxt + 1)
                {
                    printf("ack response success!\n");
                }
                else
                {
                    // note: the mismatch is only logged; the connection
                    // is still moved to ESTABLISHED below
                    printf("ack response error! \n");
                }

                pstStream->status = TCP_STATUS_ESTABLISHED;

                // accept
                struct tcp_stream *pstListener = tcp_stream_search(0, 0, 0, pstStream->dport);
                if (pstListener == NULL)
                {
                    rte_exit(EXIT_FAILURE, "tcp_stream_search failed\n");
                }

                pthread_mutex_lock(&pstListener->mutex);
                pthread_cond_signal(&pstListener->cond); // wake up the thread waiting in accept
                pthread_mutex_unlock(&pstListener->mutex);

    #if ENABLE_SINGLE_EPOLL
                epoll_event_callback(g_pstTcpTbl->ep, pstListener->fd, EPOLLIN);
    #endif
            }
        }

        return 0;
    }
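The comparison `acknum == pstStream->snd_nxt + 1` relies on unsigned 32-bit arithmetic, so it stays correct even when the initial sequence number is `UINT32_MAX` and the addition wraps around to 0. The numbers exchanged in the three-way handshake can be sketched as follows; the struct and function names are hypothetical, and all values are kept in host byte order for clarity (the project converts with `ntohl` when reading the header).

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical container for the numbers a server puts into its SYN-ACK. */
struct demo_synack {
    uint32_t seq; /* server initial sequence number (ISN) */
    uint32_t ack; /* client ISN + 1: the SYN consumes one sequence number */
};

/* Build the SYN-ACK numbers from the client's ISN and the server's ISN. */
static struct demo_synack demo_make_synack(uint32_t client_isn,
                                           uint32_t server_isn)
{
    struct demo_synack r = { server_isn, client_isn + 1u };
    return r;
}

/* The final ACK of the handshake must acknowledge server ISN + 1 (mod 2^32). */
static int demo_final_ack_ok(uint32_t server_isn, uint32_t ack_from_client)
{
    return ack_from_client == (uint32_t)(server_isn + 1u);
}
```

A stricter handler than the one in the project would keep the stream in SYN_RCVD when `demo_final_ack_ok` returns 0 instead of moving it to ESTABLISHED.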

    int tcp_process(struct rte_mbuf *pstTcpMbuf)
    {
        struct rte_ipv4_hdr *pstIpHdr;
        struct rte_tcp_hdr *pstTcpHdr;
        struct tcp_stream *pstTcpStream;
        unsigned short usOldTcpCkSum;
        unsigned short usNewTcpCkSum;

        pstIpHdr = rte_pktmbuf_mtod_offset(pstTcpMbuf, struct rte_ipv4_hdr *, sizeof(struct rte_ether_hdr));
        pstTcpHdr = (struct rte_tcp_hdr *)(pstIpHdr + 1); // assumes an IPv4 header without options

        // checksum: zero the field, recompute, and compare with the received value
        usOldTcpCkSum = pstTcpHdr->cksum;
        pstTcpHdr->cksum = 0;
        usNewTcpCkSum = rte_ipv4_udptcp_cksum(pstIpHdr, pstTcpHdr);
        if (usOldTcpCkSum != usNewTcpCkSum)
        {
            printf("cksum: %x, tcp cksum: %x\n", usOldTcpCkSum, usNewTcpCkSum);
            rte_pktmbuf_free(pstTcpMbuf);
            return -1;
        }

        // the search covers both the half-open (SYN) queue and the established queue;
        // the handler is then chosen by the status of the stream that was found
        pstTcpStream = tcp_stream_search(pstIpHdr->src_addr, pstIpHdr->dst_addr,
                                         pstTcpHdr->src_port, pstTcpHdr->dst_port);
        if (pstTcpStream == NULL)
        {
            rte_pktmbuf_free(pstTcpMbuf);
            return -2;
        }

        switch(pstTcpStream->status)
        {
            case TCP_STATUS_CLOSED: // client
                break;

            case TCP_STATUS_LISTEN: // server
                tcp_handle_listen(pstTcpStream, pstTcpHdr, pstIpHdr);
                break;

            case TCP_STATUS_SYN_RCVD: // server
                tcp_handle_syn_rcvd(pstTcpStream, pstTcpHdr);
                break;

            case TCP_STATUS_SYN_SENT: // client
                break;

            case TCP_STATUS_ESTABLISHED: // server | client
            {
                // TCP segment length = IP total length - IP header length
                int tcplen = ntohs(pstIpHdr->total_length) - sizeof(struct rte_ipv4_hdr);
                tcp_handle_established(pstTcpStream, pstTcpHdr, tcplen);
                // printf("tcplen = %d\n", tcplen);
                break;
            }

            case TCP_STATUS_FIN_WAIT_1: // ~client
                break;

            case TCP_STATUS_FIN_WAIT_2: // ~client
                break;

            case TCP_STATUS_CLOSING: // ~client
                break;

            case TCP_STATUS_TIME_WAIT: // ~client
                break;

            case TCP_STATUS_CLOSE_WAIT: // ~server
                tcp_handle_close_wait(pstTcpStream, pstTcpHdr);
                break;

            case TCP_STATUS_LAST_ACK: // ~server
                tcp_handle_last_ack(pstTcpStream, pstTcpHdr);
                break;
        }

        return 0;
    }
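`tcp_process` verifies a segment by zeroing the checksum field, recomputing it with `rte_ipv4_udptcp_cksum`, and comparing the two values. The underlying algorithm is the 16-bit one's-complement Internet checksum (RFC 1071), which TCP computes over a pseudo-header plus the whole segment. The sketch below is a plain-C illustration of that algorithm, not DPDK's implementation; `demo_cksum_add`, `demo_cksum_fold`, and `demo_tcp_cksum` are hypothetical names, and the addresses are passed as host-order integers for simplicity.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Add len bytes to a running sum as big-endian 16-bit words.
 * An odd len is only valid for the final chunk of the data. */
static uint32_t demo_cksum_add(uint32_t sum, const uint8_t *p, size_t len)
{
    while (len > 1) {
        sum += ((uint32_t)p[0] << 8) | p[1];
        p += 2;
        len -= 2;
    }
    if (len == 1)
        sum += (uint32_t)p[0] << 8; /* pad the odd trailing byte with zero */
    return sum;
}

/* Fold the carries back in and return the one's complement. */
static uint16_t demo_cksum_fold(uint32_t sum)
{
    while (sum >> 16)
        sum = (sum & 0xFFFFu) + (sum >> 16);
    return (uint16_t)~sum;
}

/* TCP checksum over the IPv4 pseudo-header and the TCP segment
 * (header plus payload, seg_len bytes in total). */
static uint16_t demo_tcp_cksum(uint32_t src_ip, uint32_t dst_ip,
                               const uint8_t *seg, uint16_t seg_len)
{
    uint8_t pseudo[12] = {
        (uint8_t)(src_ip >> 24), (uint8_t)(src_ip >> 16),
        (uint8_t)(src_ip >> 8),  (uint8_t)src_ip,
        (uint8_t)(dst_ip >> 24), (uint8_t)(dst_ip >> 16),
        (uint8_t)(dst_ip >> 8),  (uint8_t)dst_ip,
        0, 6,                    /* zero byte, protocol = 6 (TCP) */
        (uint8_t)(seg_len >> 8), (uint8_t)seg_len
    };
    uint32_t sum = demo_cksum_add(0, pseudo, sizeof pseudo);
    sum = demo_cksum_add(sum, seg, seg_len);
    return demo_cksum_fold(sum);
}
```

A sender calls `demo_tcp_cksum` with the checksum field set to zero and stores the result in bytes 16 and 17 of the TCP header. A receiver can then run the same function over the segment with the transmitted checksum left in place; a result of 0 means the segment is intact.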

    int tcp_out(struct rte_mempool *pstMbufPool)
    {
        struct tcp_table *pstTable = tcpInstance();
        struct tcp_stream *pstStream = NULL;

        for(pstStream = pstTable->tcb_set; pstStream != NULL; pstStream = pstStream->next)
        {
            if(pstStream->sndbuf == NULL)
                continue;

            // take at most one pending fragment per stream on each call
            struct tcp_fragment *pstFragment = NULL;
            int iSendCnt = rte_ring_mc_dequeue(pstStream->sndbuf, (void**)&pstFragment);
            if (iSendCnt < 0)
                continue;

            // struct in_addr addr;
            // addr.s_addr = pstStream->sip;
            // printf("tcp_out ---> src: %s:%d \n", inet_ntoa(addr), ntohs(pstFragment->dport));

            uint8_t *dstmac = ng_get_dst_macaddr(pstStream->sip); // "sip" here is the peer's IP
            if (dstmac == NULL) // broadcast an ARP request first to resolve the peer's MAC address
            {
                printf("ng_send_arp\n");
                struct rte_mbuf *pstArpbuf = ng_send_arp(pstMbufPool, RTE_ARP_OP_REQUEST, g_aucDefaultArpMac,
                                                         pstStream->dip, pstStream->sip);
                rte_ring_mp_enqueue_burst(g_pstRingIns->pstOutRing, (void **)&pstArpbuf, 1, NULL);

                rte_ring_mp_enqueue(pstStream->sndbuf, pstFragment); // put the dequeued fragment back into the queue
            }
            else
            {
                printf("ng_send_data\n");
                struct rte_mbuf *pstTcpBuf = ng_tcp_pkt(pstMbufPool, pstStream->dip, pstStream->sip,
                                                        pstStream->localmac, dstmac, pstFragment);
                rte_ring_mp_enqueue_burst(g_pstRingIns->pstOutRing, (void **)&pstTcpBuf, 1, NULL);

                if (pstFragment->data != NULL)
                    rte_free(pstFragment->data);

                rte_free(pstFragment);
            }
        }

        return 0;
    }

3. Application example

    #include <stdio.h>
    #include <math.h>
    #include <arpa/inet.h>

    #include "common.h"
    #include "tcp.h"
    #include "udp.h"

    struct rte_ether_addr g_stCpuMac;

    // todo: initialize the globals below in one place later instead of using getInstance()
    struct rte_kni *g_pstKni;
    struct St_InOut_Ring *g_pstRingIns = NULL;
    struct localhost *g_pstHost = NULL;
    struct arp_table *g_pstArpTbl = NULL;
    struct tcp_table *g_pstTcpTbl = NULL;

    unsigned char g_aucDefaultArpMac[RTE_ETHER_ADDR_LEN] = {0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF};

    unsigned char g_ucFdTable[D_MAX_FD_COUNT] = {0};

    static struct St_InOut_Ring *ringInstance(void)
    {
        if (g_pstRingIns == NULL)
        {
            g_pstRingIns = rte_malloc("in/out ring", sizeof(struct St_InOut_Ring), 0);
            if (g_pstRingIns != NULL) // the caller checks the return value for NULL
                memset(g_pstRingIns, 0, sizeof(struct St_InOut_Ring));
        }

        return g_pstRingIns;
    }

    void ng_init_port(struct rte_mempool *pstMbufPoolPub)
    {
        unsigned int uiPortsNum;
        const int iRxQueueNum = 1;
        const int iTxQueueNum = 1;
        int iRet;
        struct rte_eth_dev_info stDevInfo;
        struct rte_eth_txconf stTxConf;
        struct rte_eth_conf stPortConf = // port configuration
        {
            .rxmode = {.max_rx_pkt_len = 1518 } // RTE_ETHER_MAX_LEN = 1518
        };

        uiPortsNum = rte_eth_dev_count_avail();
        if (uiPortsNum == 0)
            rte_exit(EXIT_FAILURE, "No Supported eth found\n");

        rte_eth_dev_info_get(D_PORT_ID, &stDevInfo);

        // configure the Ethernet device
        rte_eth_dev_configure(D_PORT_ID, iRxQueueNum, iTxQueueNum, &stPortConf);

        iRet = rte_eth_rx_queue_setup(D_PORT_ID, 0 , 1024, rte_eth_dev_socket_id(D_PORT_ID), NULL, pstMbufPoolPub);
        if(iRet < 0)
            rte_exit(EXIT_FAILURE, "Could not setup RX queue!\n");

        stTxConf = stDevInfo.default_txconf;
        stTxConf.offloads = stPortConf.txmode.offloads;
        iRet = rte_eth_tx_queue_setup(D_PORT_ID, 0 , 1024, rte_eth_dev_socket_id(D_PORT_ID), &stTxConf);
        if (iRet < 0)
            rte_exit(EXIT_FAILURE, "Could not setup TX queue\n");

        if (rte_eth_dev_start(D_PORT_ID) < 0 )
            rte_exit(EXIT_FAILURE, "Could not start\n");

        rte_eth_promiscuous_enable(D_PORT_ID);
    }

    static int ng_config_network_if(uint16_t port_id, unsigned char if_up) {

        if (!rte_eth_dev_is_valid_port(port_id)) {
            return -EINVAL;
        }

        int ret = 0;
        if (if_up) {
            rte_eth_dev_stop(port_id);
            ret = rte_eth_dev_start(port_id);
        } else {
            rte_eth_dev_stop(port_id);
        }

        if (ret < 0) {
            printf("Failed to start port : %d\n", port_id);
        }

        return 0;
    }

    static struct rte_kni *ng_alloc_kni(struct rte_mempool *mbuf_pool) {

        struct rte_kni *kni_hanlder = NULL;

        struct rte_kni_conf conf;
        memset(&conf, 0, sizeof(conf));

        snprintf(conf.name, RTE_KNI_NAMESIZE, "vEth%u", D_PORT_ID);
        conf.group_id = D_PORT_ID;
        conf.mbuf_size = D_MAX_PACKET_SIZE;
        rte_eth_macaddr_get(D_PORT_ID, (struct rte_ether_addr *)conf.mac_addr);
        rte_eth_dev_get_mtu(D_PORT_ID, &conf.mtu);

        // print_ethaddr("ng_alloc_kni: ", (struct ether_addr *)conf.mac_addr);

        /*
        struct rte_eth_dev_info dev_info;
        memset(&dev_info, 0, sizeof(dev_info));
        rte_eth_dev_info_get(D_PORT_ID, &dev_info);
        */

        struct rte_kni_ops ops;
        memset(&ops, 0, sizeof(ops));

        ops.port_id = D_PORT_ID;
        ops.config_network_if = ng_config_network_if;

        kni_hanlder = rte_kni_alloc(mbuf_pool, &conf, &ops);
        if (!kni_hanlder) {
            rte_exit(EXIT_FAILURE, "Failed to create kni for port : %d\n", D_PORT_ID);
        }

        return kni_hanlder;
    }

    static int pkt_process(void *arg)
    {
        struct rte_mempool *pstMbufPool;
        int iRxNum;
        int i;
        struct rte_ether_hdr *pstEthHdr;
        struct rte_ipv4_hdr *pstIpHdr;

        pstMbufPool = (struct rte_mempool *)arg;
        while(1)
        {
            struct rte_mbuf *pstMbuf[32];
            iRxNum = rte_ring_mc_dequeue_burst(g_pstRingIns->pstInRing, (void**)pstMbuf, D_BURST_SIZE, NULL);

            if(iRxNum <= 0)
                continue;

            for(i = 0; i < iRxNum; ++i)
            {
                pstEthHdr = rte_pktmbuf_mtod_offset(pstMbuf[i], struct rte_ether_hdr *, 0);
                if (pstEthHdr->ether_type == rte_cpu_to_be_16(RTE_ETHER_TYPE_IPV4)) // IPv4: 0800
                {
                    pstIpHdr = rte_pktmbuf_mtod_offset(pstMbuf[i], struct rte_ipv4_hdr *, sizeof(struct rte_ether_hdr));

                    // maintain an ARP table
                    ng_arp_entry_insert(pstIpHdr->src_addr, pstEthHdr->s_addr.addr_bytes);
                    if(pstIpHdr->next_proto_id == IPPROTO_UDP) // udp
                    {
                        // udp process
                        //udp_process(pstMbuf[i]);
                    }
                    else if(pstIpHdr->next_proto_id == IPPROTO_TCP) // tcp
                    {
                        // printf("tcp_process ---\n");
                        tcp_process(pstMbuf[i]);
                    }
                    else
                    {
                        // hand only the current packet to the kernel; the original
                        // passed the whole burst (pstMbuf, iRxNum) on every iteration
                        rte_kni_tx_burst(g_pstKni, &pstMbuf[i], 1);
                        // printf("tcp/udp --> rte_kni_handle_request\n");
                    }
                }
                else
                {
                    // ifconfig vEth0 192.168.181.169 up
                    // same fix as above: forward only the current packet
                    rte_kni_tx_burst(g_pstKni, &pstMbuf[i], 1);
                    // printf("ip --> rte_kni_handle_request\n");
                }
            }

            rte_kni_handle_request(g_pstKni);

            // to send
            udp_out(pstMbufPool);
            tcp_out(pstMbufPool);
        }
        return 0;
    }
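The dispatch in `pkt_process` comes down to two lookups: the EtherType at byte offset 12 of the Ethernet frame and, for IPv4, the protocol field at byte offset 9 of the IP header. Here is a self-contained sketch on a raw byte buffer, without DPDK types. The enum, the constants, and `demo_classify` are hypothetical names; VLAN tags are deliberately ignored, as in the original.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum demo_class { DEMO_TO_KERNEL, DEMO_UDP, DEMO_TCP };

#define DEMO_ETH_HDR_LEN   14
#define DEMO_ETHERTYPE_IP4 0x0800
#define DEMO_IPPROTO_TCP   6
#define DEMO_IPPROTO_UDP   17

/*
 * Classify an untagged Ethernet frame. Anything that is not IPv4 TCP/UDP,
 * or is too short to inspect, is handed to the kernel path.
 */
static enum demo_class demo_classify(const uint8_t *frame, size_t len)
{
    assert(frame != NULL);
    if (len < DEMO_ETH_HDR_LEN + 20)       /* Ethernet + minimal IPv4 header */
        return DEMO_TO_KERNEL;

    uint16_t ether_type = (uint16_t)((frame[12] << 8) | frame[13]);
    if (ether_type != DEMO_ETHERTYPE_IP4)
        return DEMO_TO_KERNEL;

    switch (frame[DEMO_ETH_HDR_LEN + 9]) { /* IPv4 protocol field */
    case DEMO_IPPROTO_TCP: return DEMO_TCP;
    case DEMO_IPPROTO_UDP: return DEMO_UDP;
    default:               return DEMO_TO_KERNEL;
    }
}
```

In the project, the `DEMO_TO_KERNEL` case corresponds to forwarding the mbuf through the KNI device, so that traffic such as ARP and ICMP is still answered by the kernel stack.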

    #define BUFFER_SIZE 1024

    // #if rather than #ifdef, to match the checks used in tcp.h and tcp.c
    #if ENABLE_SINGLE_EPOLL

    int tcp_server_entry(__attribute__((unused)) void *arg)
    {
        int listenfd = nsocket(AF_INET, SOCK_STREAM, 0);
        if (listenfd == -1)
        {
            return -1;
        }

        struct sockaddr_in servaddr;
        memset(&servaddr, 0, sizeof(servaddr));
        servaddr.sin_family = AF_INET;
        servaddr.sin_addr.s_addr = htonl(INADDR_ANY);
        servaddr.sin_port = htons(9999);
        nbind(listenfd, (struct sockaddr*)&servaddr, sizeof(servaddr));

        nlisten(listenfd, 10);

        int epfd = nepoll_create(1);
        struct epoll_event ev, events[1024];
        ev.data.fd = listenfd;
        ev.events = EPOLLIN; // plain assignment: ev is not initialized before this point
        nepoll_ctl(epfd, EPOLL_CTL_ADD, listenfd, &ev);

        char buff[BUFFER_SIZE] = {'\0'};
        while(1)
        {
            int nready = nepoll_wait(epfd, events, 1024, 5);
            if(nready < 0)
                continue;

            for(int i = 0; i < nready; ++i)
            {
                int fd = events[i].data.fd;
                if(listenfd == fd)
                {
                    struct sockaddr_in client;
                    socklen_t len = sizeof(client);
                    int connfd = naccept(listenfd, (struct sockaddr*)&client, &len);

                    struct epoll_event ev;
                    ev.events = EPOLLIN;
                    ev.data.fd = connfd;
                    nepoll_ctl(epfd, EPOLL_CTL_ADD, connfd, &ev);
                }
                else
                {
                    int n = nrecv(fd, buff, BUFFER_SIZE, 0); //block
                    if (n > 0)
                    {
                        // print exactly n bytes: buff is not guaranteed to be NUL-terminated
                        printf(" arno --> recv: %.*s\n", n, buff);
                        nsend(fd, buff, n, 0);
                    }
                    else
                    {
                        printf("error: %s\n", strerror(errno));
                        nepoll_ctl(epfd, EPOLL_CTL_DEL, fd, NULL);
                        nclose(fd);
                    }
                }
            }
        }

        return 0;
    }

    #else

    int tcp_server_entry(__attribute__((unused)) void *arg)
    {
        int listenfd;
        int iRet = -1;
        struct sockaddr_in servaddr;

        listenfd = nsocket(AF_INET, SOCK_STREAM, 0);
        if (listenfd == -1)
        {
            printf("[%s][%d] nsocket error!\n", __FUNCTION__, __LINE__);
            return -1;
        }

        memset(&servaddr, 0, sizeof(servaddr));
        servaddr.sin_family = AF_INET;
        servaddr.sin_addr.s_addr = htonl(INADDR_ANY);
        servaddr.sin_port = htons(9999);
        iRet = nbind(listenfd, (struct sockaddr*)&servaddr, sizeof(servaddr));
        if(iRet < 0)
        {
            printf("nbind error!\n");
            return -1;
        }

        nlisten(listenfd, 10);

        while (1)
        {
            struct sockaddr_in client;
            socklen_t len = sizeof(client);
            int connfd = naccept(listenfd, (struct sockaddr*)&client, &len);

            char buff[D_TCP_BUFFER_SIZE] = {0};
            while (1)
            {
                int n = nrecv(connfd, buff, D_TCP_BUFFER_SIZE, 0); //block
                if (n > 0)
                {
                    // print exactly n bytes: buff is not guaranteed to be NUL-terminated
                    printf("recv: %.*s\n", n, buff);
                    nsend(connfd, buff, n, 0);
                }
                else if (n == 0)
                {
                    printf("nclose()\n");
                    nclose(connfd);
                    break;
                }
                else
                { //nonblock
                }
            }
        }

        nclose(listenfd);

        return 0;
    }

    #endif
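Both variants of `tcp_server_entry` follow the usual echo-server pattern, with the project's `nsocket`/`nrecv`/`nsend` wrappers in place of the POSIX calls. For comparison, the sketch below writes the per-connection echo step against the standard POSIX socket API. It assumes, without confirmation from the source, that the `n*` wrappers mirror the POSIX return conventions (more than 0 for bytes received, 0 when the peer has closed, less than 0 on error). `demo_echo_once` is a hypothetical name.

```c
#include <assert.h>
#include <errno.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/*
 * Receive once from fd and send the same bytes back.
 * Returns the number of bytes echoed, 0 if the peer closed the
 * connection, or -1 on error.
 */
static ssize_t demo_echo_once(int fd, char *buf, size_t cap)
{
    assert(buf != NULL && cap > 0);
    ssize_t n;
    do {
        n = recv(fd, buf, cap, 0);
    } while (n < 0 && errno == EINTR);   /* retry if interrupted by a signal */
    if (n <= 0)
        return n;                        /* 0: peer closed, -1: error */

    ssize_t sent = 0;
    while (sent < n) {                   /* send() may accept fewer bytes than asked */
        ssize_t m = send(fd, buf + sent, (size_t)(n - sent), 0);
        if (m < 0) {
            if (errno == EINTR)
                continue;
            return -1;
        }
        sent += m;
    }
    return n;
}
```

A caller would loop on `demo_echo_once` and close the descriptor when it returns 0 or -1, which is the same control flow as the inner `while (1)` loop of the blocking variant.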

    int main(int argc, char *argv[])
    {
        struct rte_mempool *pstMbufPoolPub;
        struct St_InOut_Ring *pstRing;
        struct rte_mbuf *pstRecvMbuf[32] = {NULL};
        struct rte_mbuf *pstSendMbuf[32] = {NULL};
        int iRxNum;
        int iTotalNum;
        int iOffset;
        int iTxNum;

        unsigned int uiCoreId;

        if(rte_eal_init(argc, argv) < 0)
            rte_exit(EXIT_FAILURE, "Error with EAL init\n");

        pstMbufPoolPub = rte_pktmbuf_pool_create("MBUF_POOL_PUB", D_NUM_MBUFS, 0, 0,
                                                 RTE_MBUF_DEFAULT_BUF_SIZE, rte_socket_id());
        if(pstMbufPoolPub == NULL)
        {
            printf("rte_errno = %x, errmsg = %s\n", rte_errno, rte_strerror(rte_errno));
            return -1;
        }

        if (-1 == rte_kni_init(D_PORT_ID))
            rte_exit(EXIT_FAILURE, "kni init failed\n");

        ng_init_port(pstMbufPoolPub);
        g_pstKni = ng_alloc_kni(pstMbufPoolPub);

        // ng_init_port(pstMbufPoolPub);

        rte_eth_macaddr_get(D_PORT_ID, &g_stCpuMac);

        pstRing = ringInstance();
        if(pstRing == NULL)
            rte_exit(EXIT_FAILURE, "ring buffer init failed\n");

        pstRing->pstInRing = rte_ring_create("in ring", D_RING_SIZE, rte_socket_id(), RING_F_SP_ENQ | RING_F_SC_DEQ);
        pstRing->pstOutRing = rte_ring_create("out ring", D_RING_SIZE, rte_socket_id(), RING_F_SP_ENQ | RING_F_SC_DEQ);

        uiCoreId = rte_lcore_id();

        uiCoreId = rte_get_next_lcore(uiCoreId, 1, 0);
        rte_eal_remote_launch(pkt_process, pstMbufPoolPub, uiCoreId);

        uiCoreId = rte_get_next_lcore(uiCoreId, 1, 0);
        rte_eal_remote_launch(udp_server_entry, pstMbufPoolPub, uiCoreId);

        uiCoreId = rte_get_next_lcore(uiCoreId, 1, 0);
        rte_eal_remote_launch(tcp_server_entry, pstMbufPoolPub, uiCoreId);

        while (1)
        {
            // rx
            iRxNum = rte_eth_rx_burst(D_PORT_ID, 0, pstRecvMbuf, D_BURST_SIZE);
            if(iRxNum > 0)
                rte_ring_sp_enqueue_burst(pstRing->pstInRing, (void**)pstRecvMbuf, iRxNum, NULL);

            // tx
            iTotalNum = rte_ring_sc_dequeue_burst(pstRing->pstOutRing, (void**)pstSendMbuf, D_BURST_SIZE, NULL);
            if(iTotalNum > 0)
            {
                iOffset = 0;
                while(iOffset < iTotalNum)
                {
                    iTxNum = rte_eth_tx_burst(D_PORT_ID, 0, &pstSendMbuf[iOffset], iTotalNum - iOffset);
                    if(iTxNum > 0)
                        iOffset += iTxNum;
                }
            }
        }
    }
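The transmit loop in `main` retries `rte_eth_tx_burst` until every dequeued packet has been accepted, so it can spin for a long time if the TX queue stays full. One possible alternative is to bound the retries and let the caller dispose of whatever is left. The sketch below abstracts the transmit call behind a function pointer so that it runs without DPDK; `demo_tx_fn`, `demo_send_all`, `demo_mock_tx`, and the retry limit are all assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for rte_eth_tx_burst: returns how many of the
 * n packets starting at pkts were accepted (possibly 0). */
typedef uint16_t (*demo_tx_fn)(void **pkts, uint16_t n, void *ctx);

/*
 * Try to send all n packets, giving up after max_idle consecutive calls
 * that accepted nothing. Returns the number of packets actually sent;
 * the caller still owns pkts[sent..n-1].
 */
static uint16_t demo_send_all(demo_tx_fn tx, void *ctx, void **pkts,
                              uint16_t n, unsigned max_idle)
{
    assert(tx != NULL);
    uint16_t sent = 0;
    unsigned idle = 0;

    while (sent < n && idle < max_idle) {
        uint16_t k = tx(&pkts[sent], (uint16_t)(n - sent), ctx);
        if (k == 0) {
            idle++;
        } else {
            idle = 0;
            sent = (uint16_t)(sent + k);
        }
    }
    return sent;
}

/* Mock transmit function for experiments: accepts at most 2 packets per
 * call until the budget stored in *ctx is used up. */
static uint16_t demo_mock_tx(void **pkts, uint16_t n, void *ctx)
{
    (void)pkts;
    unsigned *budget = ctx;
    uint16_t k = n < 2 ? n : 2;
    if (k > *budget)
        k = (uint16_t)*budget;
    *budget -= k;
    return k;
}
```

In a DPDK program the packets left over after `demo_send_all` returns would typically be released with `rte_pktmbuf_free`, trading a dropped packet for a main loop that keeps polling the RX queue.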

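The strength of this code is its simplicity (simple is best, especially for learning): it implements only the main path and leaves out most of the more complex protocol handling. The implementation techniques are also fairly easy to follow, which helps when studying it. The project's README describes how to start and test it, so that is not repeated here.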

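This open-source code implements the basic functionality, and it can be extended or refactored as needed; many thanks to the author. Note, however, that the code organization is somewhat messy and needs some tidying up.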

III. Summary

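Infrastructure has one important property: once it is standardized, it is very hard to tear down and rebuild unless a fatal problem appears. The cost of rebuilding is simply too high, and for infrastructure that has accumulated over many years, even a fatal problem may not justify a rebuild whose cost is prohibitive. The painful attempts by some large international banks in past years to rewrite their ATM and transaction-processing code are a well-known lesson. This also shows that a new technology does not displace an old one quickly; sometimes the efforts of several generations are not enough.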

So when learning a new technology, do not look only at its advantages; whether it can be adopted quickly and at scale is just as important.

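DPDK has many advantages, but where exactly do they lie? Which application scenarios benefit enough for it to be widely adopted? Looking only at the advantages while ignoring the actual use case is a mistake. Take this as a reminder.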