大家好!今天我来分享一下如何使用Python爬虫来获取小红书上的时尚穿搭灵感。小红书作为国内最大的时尚生活社区之一,拥有众多的时尚达人和潮流穿搭内容,如果你想获取最新的时尚灵感,就不容错过这个简单又有效的爬虫方法。

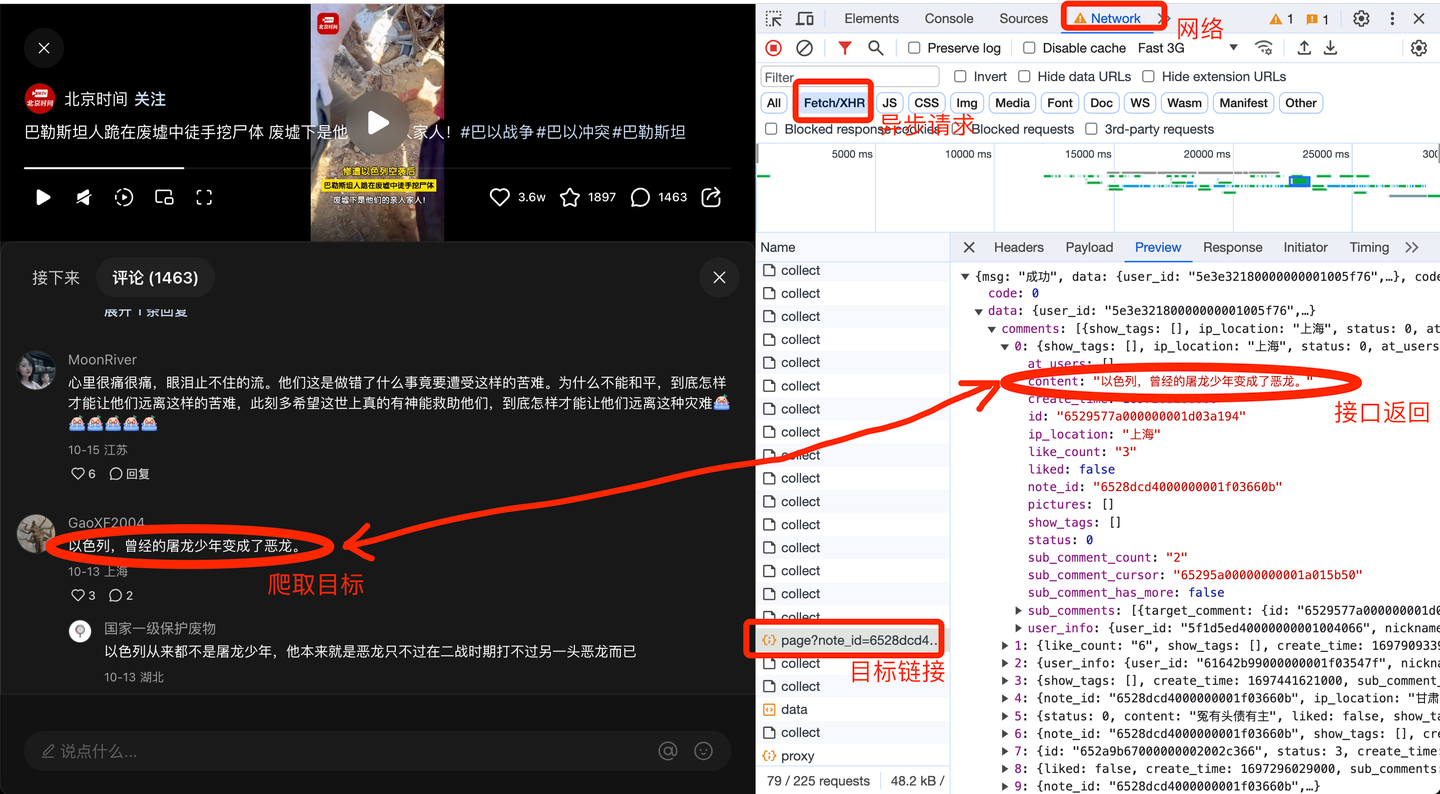

在本文中,我将带领大家使用Python的Selenium和BeautifulSoup库,通过模拟用户操作和解析网页内容,来实现从小红书上获取穿搭灵感的爬虫。废话不多说,让我们开始吧!

准备工作

在开始之前,我们需要准备以下工具和环境:

- Python 3.x

- Selenium库

- BeautifulSoup库

- Chrome浏览器

- Chrome驱动(根据浏览器版本下载)

确保你已经安装了Python和所需的库,并将Chrome驱动放置在合适的位置。

获取小红书文章链接

首先,我们需要获取小红书上的文章链接,以便后续爬取文章内容。为了实现这一步,我们使用了一个名为GetUrl.py的脚本,它使用了Selenium库来模拟用户操作,滚动页面并获取文章链接。

以下是GetUrl.py的主要代码:

#!/usr/bin/env python3

# coding:utf-8

from selenium.webdriver.common.keys import Keys

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from selenium.common.exceptions import StaleElementReferenceException

import bag

import time

import random

def main():

result = []

count = []

while True:

try:

WebDriverWait(web, 30).until(

EC.presence_of_all_elements_located((By.XPATH, r'//*[@id="exploreFeeds"]')))

web.find_element(By.TAG_NAME, r'body').send_keys(Keys.END)

web.implicitly_wait(random.randint(50, 100))

web.implicitly_wait(10)

time.sleep(2)

links = web.find_elements(By.XPATH, r'//*[@id="exploreFeeds"]/section/div/div/a')

for link in links:

url = link.get_attribute('href')

if 'user' in url:

pass

elif url not in result:

result.append(url)

else:

pass

count.append(len(result))

print(len(result))

if count.count(count[-1]) > 30:

break

except StaleElementReferenceException:

time.sleep(5)

web.execute_script(

"window.scrollTo(0, document.body.scrollHeight / {});".format(random.randint(2, 3)))

if len(result) >= 100:

break

# bag.Bag.save_json(result, path)

return result

if __name__ == '__main__':

web = bag.Bag.web_debug()

# path = r'./小红书(穿搭).json'

# if os.path.isfile(path):

# result = bag.Bag.read_json(path)

# else:

# result = []

main()

以上代码通过模拟按下END键滚动页面,并使用XPath定位获取文章链接,最终返回一个包含所有文章链接的列表

# 小红书(穿搭).json

[

"https://www.xiaohongshu.com/explore/647456080000000013001d32",

"https://www.xiaohongshu.com/explore/64756d910000000013008834",

"https://www.xiaohongshu.com/explore/6476d212000000001300223c",

"https://www.xiaohongshu.com/explore/6475ca17000000001303489f",

"https://www.xiaohongshu.com/explore/646724320000000013003afe",

"https://www.xiaohongshu.com/explore/6468ac66000000001300c40c",

"https://www.xiaohongshu.com/explore/646ff3420000000013004c63",

"https://www.xiaohongshu.com/explore/6465f68b0000000013014649",

"https://www.xiaohongshu.com/explore/6477436f000000001203de52",

"https://www.xiaohongshu.com/explore/647427420000000012032c28",

"https://www.xiaohongshu.com/explore/6472ef100000000011010a28",

"https://www.xiaohongshu.com/explore/646ec809000000002701106f",

"https://www.xiaohongshu.com/explore/646f734e000000002702833b",

"https://www.xiaohongshu.com/explore/6470a18c0000000013005e41",

"https://www.xiaohongshu.com/explore/6470920a0000000013035a3c",

"https://www.xiaohongshu.com/explore/64687088000000001300b2e8",

"https://www.xiaohongshu.com/explore/6468b6c60000000014024c64",

"https://www.xiaohongshu.com/explore/646899030000000013035b16",

"https://www.xiaohongshu.com/explore/646ae0a3000000000800d11c",

"https://www.xiaohongshu.com/explore/6468fe10000000001300a508",

"https://www.xiaohongshu.com/explore/6475dafd0000000027010763",

"https://www.xiaohongshu.com/explore/6475da7e0000000013000f19",

"https://www.xiaohongshu.com/explore/6478388f000000001300d5ca",

"https://www.xiaohongshu.com/explore/6477258d000000001303e0c0",

"https://www.xiaohongshu.com/explore/646996de000000000703a943",

"https://www.xiaohongshu.com/explore/646b02bb0000000027012d49",

"https://www.xiaohongshu.com/explore/64709647000000000703ae75",

"https://www.xiaohongshu.com/explore/647087c00000000027011878",

"https://www.xiaohongshu.com/explore/64757cbc0000000013014a00",

"https://www.xiaohongshu.com/explore/647348af0000000013037ab2",

"https://www.xiaohongshu.com/explore/646617240000000027002540",

"https://www.xiaohongshu.com/explore/646ca2ba0000000013037a15",

"https://www.xiaohongshu.com/explore/64685572000000000703836c",

"https://www.xiaohongshu.com/explore/6475e92d000000001300e694",

"https://www.xiaohongshu.com/explore/64773b3f0000000013035447",

"https://www.xiaohongshu.com/explore/646f7fa80000000013030224",

"https://www.xiaohongshu.com/explore/646620300000000007039fcd",

"https://www.xiaohongshu.com/explore/6469b2960000000013032102",

"https://www.xiaohongshu.com/explore/64686d450000000013012004",

"https://www.xiaohongshu.com/explore/6468268a000000001300f9a0",

"https://www.xiaohongshu.com/explore/64683cd9000000002702b38e",

"https://www.xiaohongshu.com/explore/646c80b20000000011011f67",

"https://www.xiaohongshu.com/explore/64775be9000000002702a98f",

"https://www.xiaohongshu.com/explore/6475f76a000000001300f020",

"https://www.xiaohongshu.com/explore/6469a73d0000000027012cde",

"https://www.xiaohongshu.com/explore/646dc5b500000000110113ce",

"https://www.xiaohongshu.com/explore/646e0cb2000000001203d2d5",

"https://www.xiaohongshu.com/explore/6476bf690000000013030d9a",

"https://www.xiaohongshu.com/explore/6477194b000000000800ec38",

"https://www.xiaohongshu.com/explore/64747523000000001300c8c4",

"https://www.xiaohongshu.com/explore/6474b5b8000000001203c201",

"https://www.xiaohongshu.com/explore/6470a79d0000000014026113",

"https://www.xiaohongshu.com/explore/6471a4270000000013005ee3",

"https://www.xiaohongshu.com/explore/646c8bad000000000703a8eb",

"https://www.xiaohongshu.com/explore/646f27110000000007038aba",

"https://www.xiaohongshu.com/explore/646f2a24000000001303e883",

"https://www.xiaohongshu.com/explore/646f467100000000130376ee",

"https://www.xiaohongshu.com/explore/6464ec9f0000000013015f91",

"https://www.xiaohongshu.com/explore/6472225700000000130090e9",

"https://www.xiaohongshu.com/explore/646b6d7a000000000800e6f2",

"https://www.xiaohongshu.com/explore/644a82930000000027010550",

"https://www.xiaohongshu.com/explore/644a4893000000001203eb72",

"https://www.xiaohongshu.com/explore/6462206d0000000013000279",

"https://www.xiaohongshu.com/explore/646175ad00000000270285ea",

"https://www.xiaohongshu.com/explore/645af993000000001402417e",

"https://www.xiaohongshu.com/explore/645b603b0000000027010c66",

"https://www.xiaohongshu.com/explore/644016420000000013035988",

"https://www.xiaohongshu.com/explore/644f54c90000000013008fe8",

"https://www.xiaohongshu.com/explore/644fa915000000001303542f",

"https://www.xiaohongshu.com/explore/6450fd6c000000001303130f",

"https://www.xiaohongshu.com/explore/6450d8ff0000000013014dcb",

"https://www.xiaohongshu.com/explore/6450c126000000001203f238",

"https://www.xiaohongshu.com/explore/6450d23d000000000800d4f5",

"https://www.xiaohongshu.com/explore/64562297000000001303e2db",

"https://www.xiaohongshu.com/explore/645f627c0000000011012c97",

"https://www.xiaohongshu.com/explore/644caf47000000001301486f",

"https://www.xiaohongshu.com/explore/644bca75000000002702aa2c",

"https://www.xiaohongshu.com/explore/645a12b900000000270289dd",

"https://www.xiaohongshu.com/explore/645ccbf3000000001300a1fb",

"https://www.xiaohongshu.com/explore/645c585a0000000013037e4b",

"https://www.xiaohongshu.com/explore/644cdca60000000027003411",

"https://www.xiaohongshu.com/explore/64585b5c0000000014026a6b",

"https://www.xiaohongshu.com/explore/6458c86c0000000013030ee7",

"https://www.xiaohongshu.com/explore/6458c4af0000000013005945",

"https://www.xiaohongshu.com/explore/6458f2a30000000027028641",

"https://www.xiaohongshu.com/explore/6458cb76000000000800d190",

"https://www.xiaohongshu.com/explore/6457a2af00000000130090c3",

"https://www.xiaohongshu.com/explore/64630cc80000000027029630",

"https://www.xiaohongshu.com/explore/645a2db100000000110101e7",

"https://www.xiaohongshu.com/explore/6461f9380000000012032775",

"https://www.xiaohongshu.com/explore/64512005000000001300e298",

"https://www.xiaohongshu.com/explore/64551a9b000000000800dc92",

"https://www.xiaohongshu.com/explore/64534539000000000800d044",

"https://www.xiaohongshu.com/explore/644d3c550000000027000537",

"https://www.xiaohongshu.com/explore/644cd7190000000012030bf9",

"https://www.xiaohongshu.com/explore/64539ffd00000000130017f0",

"https://www.xiaohongshu.com/explore/645454b7000000002700368d",

"https://www.xiaohongshu.com/explore/644f4a06000000001203f270",

"https://www.xiaohongshu.com/explore/644c7f960000000013008576",

"https://www.xiaohongshu.com/explore/6449225d000000001300e292",

"https://www.xiaohongshu.com/explore/64525bfc0000000013012bb4",

"https://www.xiaohongshu.com/explore/645b32fb0000000027003867",

"https://www.xiaohongshu.com/explore/645b29700000000014025eb5",

"https://www.xiaohongshu.com/explore/645234780000000027001908",

"https://www.xiaohongshu.com/explore/6450f8d5000000001203c476",

"https://www.xiaohongshu.com/explore/6451c5fb000000001303fd11",

"https://www.xiaohongshu.com/explore/644a7879000000001303eb12"

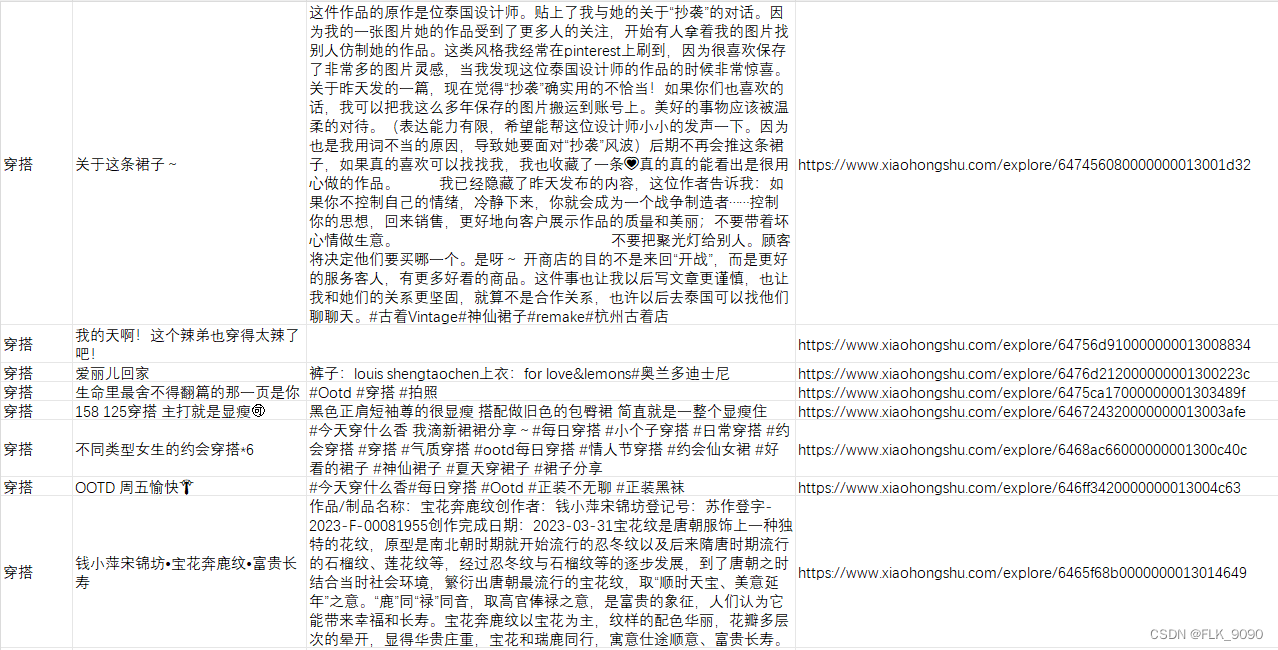

]爬取文章内容

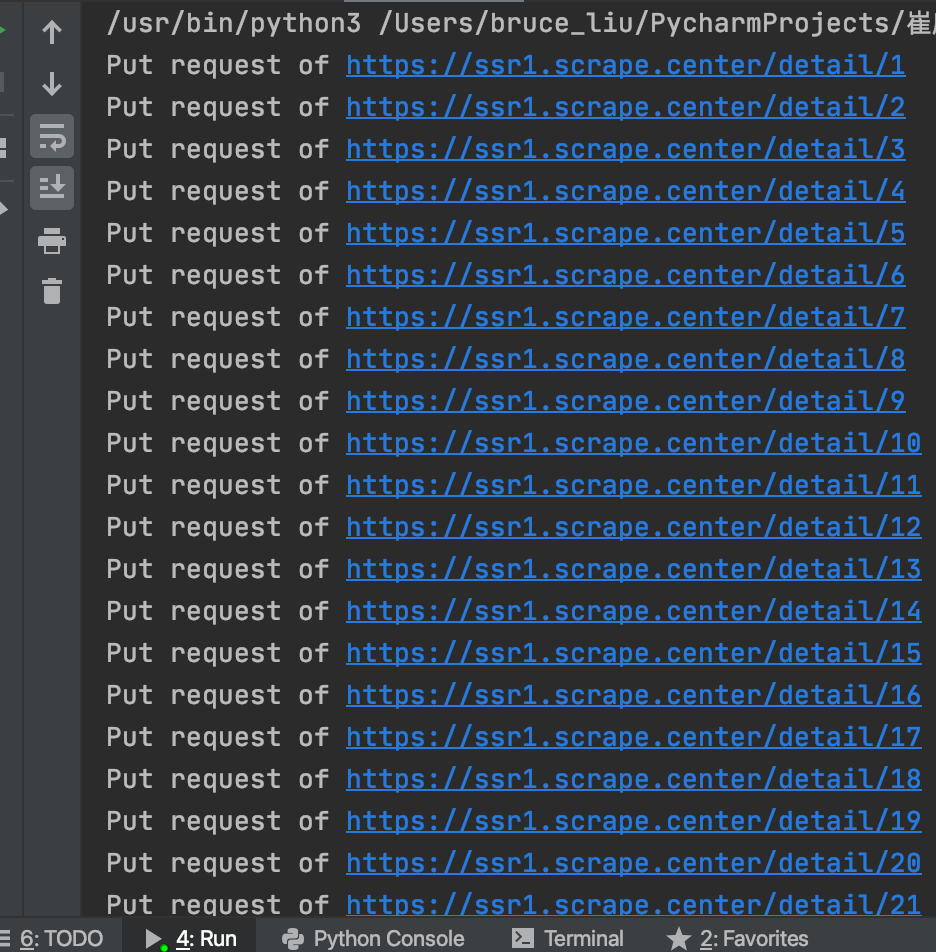

接下来,我们使用获取到的文章链接来爬取文章的标题和内容。为了实现这一步,我们使用了一个名为xiaohongshu.py的脚本,它使用了BeautifulSoup库来解析网页内容,获取文章的标题和正文。

以下是xiaohongshu.py的主要代码:

#!/usr/bin/env python3

# coding:utf-8

from bs4 import BeautifulSoup

from tqdm import tqdm

import bag

import time

from concurrent.futures import ProcessPoolExecutor

import GetUrl

session = bag.session.create_session()

session.cookies[''] = r'你的cookies'

def main():

result = []

with ProcessPoolExecutor(max_workers=20) as pro:

tasks = []

for url in tqdm(urls):

tasks.append(pro.submit(get_data, url))

for task in tqdm(tasks):

result.extend(task.result())

bag.Bag.save_excel(result, r'./小红书(穿搭).xlsx')

def get_data(url):

result = []

resp = session.get(url)

time.sleep(2)

resp.encoding = 'utf8'

resp.close()

html = BeautifulSoup(resp.text, 'lxml')

soup = html.find_all('div', class_="desc")

title = html.find_all('div', class_='title')[0].text

mid = []

for i in soup:

mid.append(i.text.strip())

result.append([

'穿搭',

title,

'\n'.join(mid),

url

])

return result

if __name__ == '__main__':

web = bag.Bag.web_debug()

GetUrl.web = web

urls = GetUrl.main()

main()

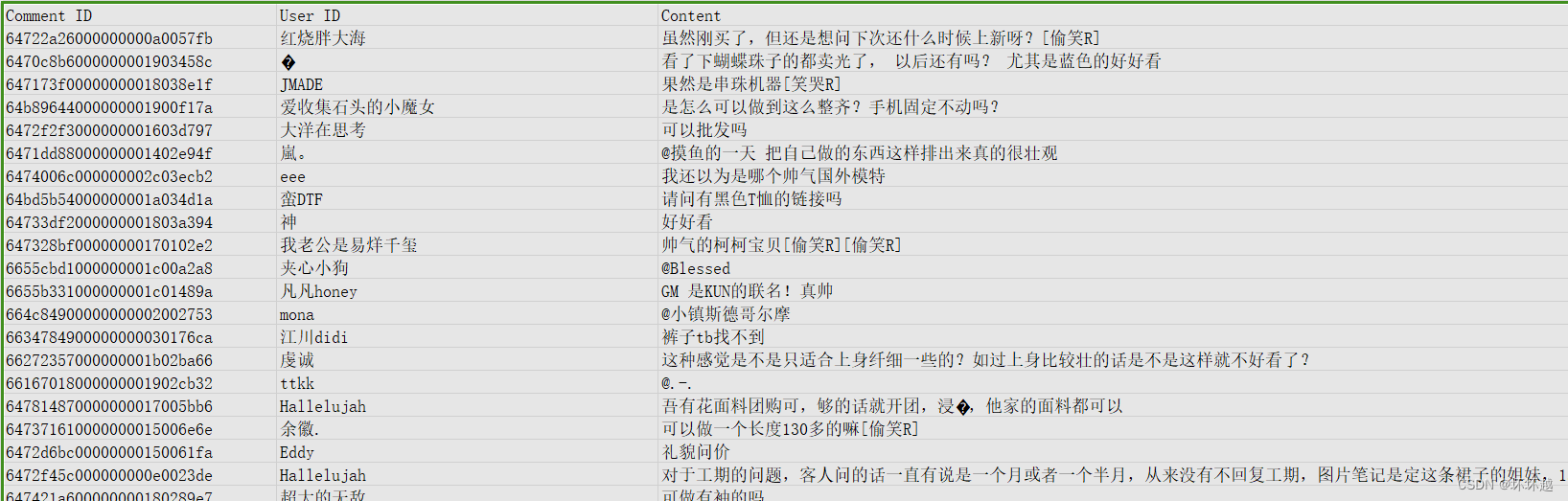

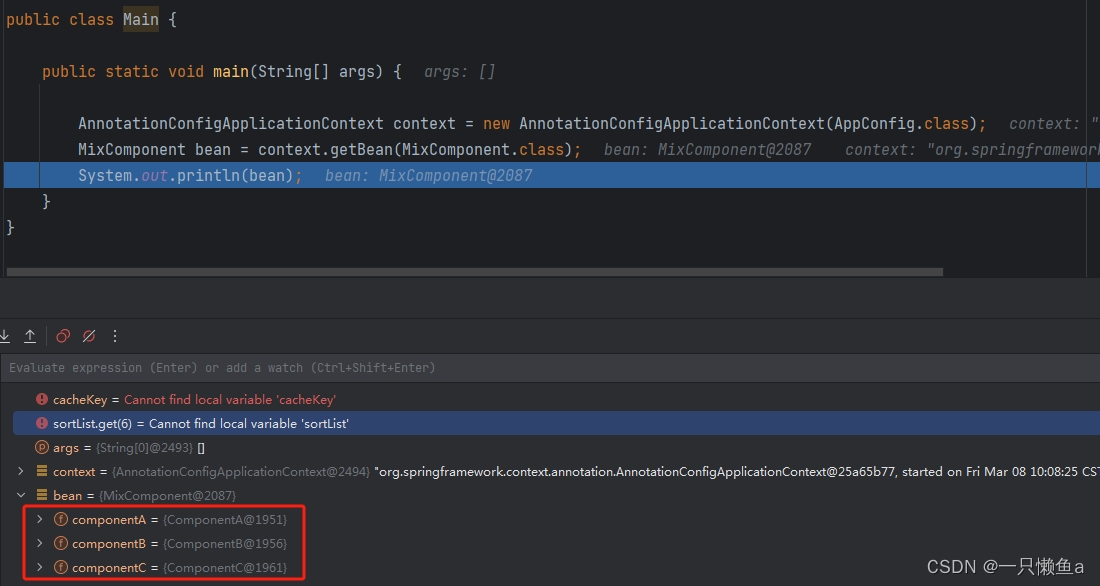

以上代码使用了多线程的方式并发爬取文章内容,通过解析网页获取文章的标题和正文,并将结果保存为Excel文件

运行结果

结语

通过使用Python爬虫,可以轻松地获取小红书上的时尚穿搭灵感。本文中,我们使用了Selenium和BeautifulSoup库,通过模拟用户操作和解析网页内容,实现了从小红书上获取穿搭灵感的爬虫。

希望本文对于想要获取时尚灵感的你有所帮助。如果你有任何疑问或意见,请在评论区留言,我将尽快回复。谢谢阅读!