简介

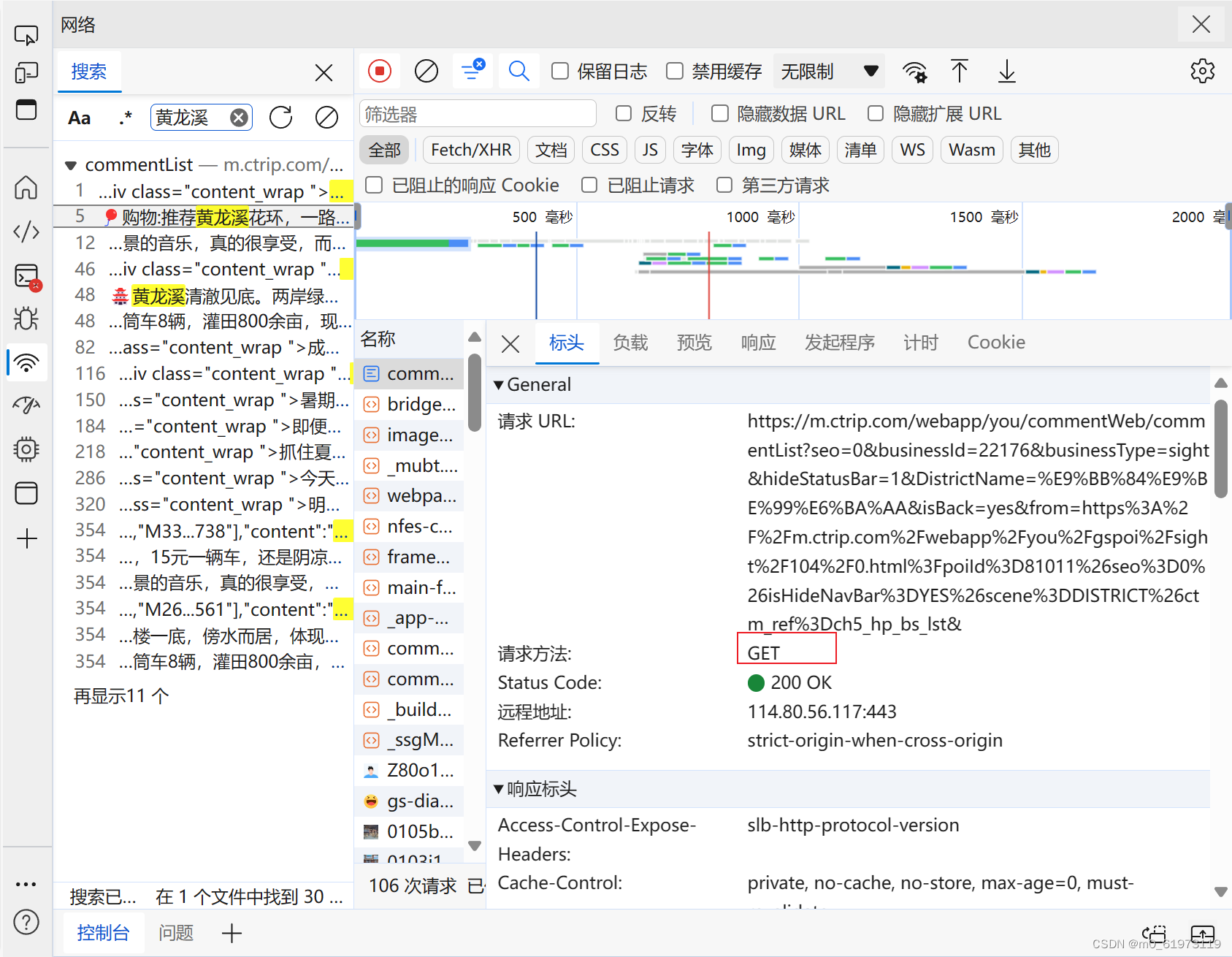

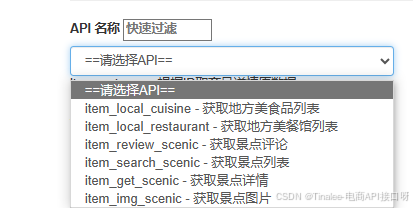

本文介绍了使用Python编写的爬虫代码,用于爬取携程旅游网站上的游记文章。通过该代码,可以获取游记的标题、内容、天数、时间、和谁一起以及玩法等信息

导入所需的库

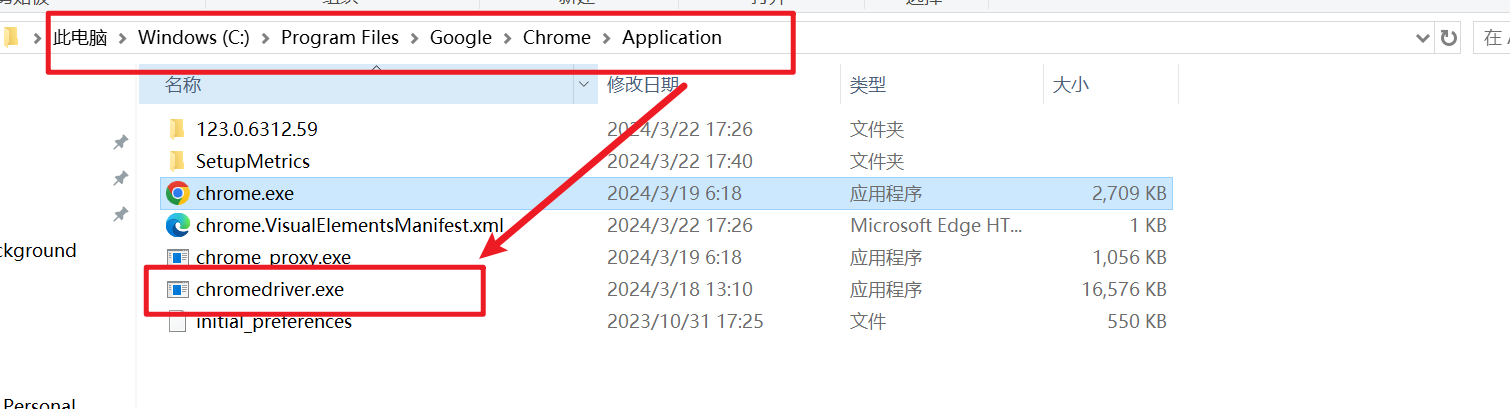

首先,我们需要导入所需的库,包括re、BeautifulSoup、bag、easygui和webbrowser。其中,re库用于正则表达式匹配,BeautifulSoup库用于解析HTML页面,bag库用于创建会话,easygui库用于显示错误信息,webbrowser库用于打开验证码验证页面。

import re

from bs4 import BeautifulSoup

import bag

import easygui

import webbrowser

import time

创建会话并设置请求头和cookies信息

session = bag.session.create_session()

session.headers['Referer'] = r'https://you.ctrip.com/travels/'

session.get(r'https://you.ctrip.com/TravelSite/Home/IndexTravelListHtml?p=2&Idea=0&Type=100&Plate=0')

session.cookies[''] = r"你的cookies"

定义主函数main(),在其中调用catch函数并打印结果

def main():

resp = catch(r'https://you.ctrip.com/travels/qinhuangdao132/4011655.html')

print(resp)定义show_error_message函数,用于显示错误信息,并提供一个验证按钮以打开验证码验证页面

def show_error_message(error):

choices = ["验证"]

button_box_choice = easygui.buttonbox(error, choices=choices)

if button_box_choice == "验证":

webbrowser.open("https://verify.ctrip.com/static/ctripVerify.html?returnUrl=https%3A%2F%2Fyou.ctrip.com%2Ftravelsite%2Ftravels%2Fshanhaiguan120556%2F3700092.html&bgref=l2j65wlGQDYtmBZjKEoy5w%3D%3D")

定义catch函数,用于解析游记文章页面并提取相关信息

def catch(url) -> list:

result_ = []

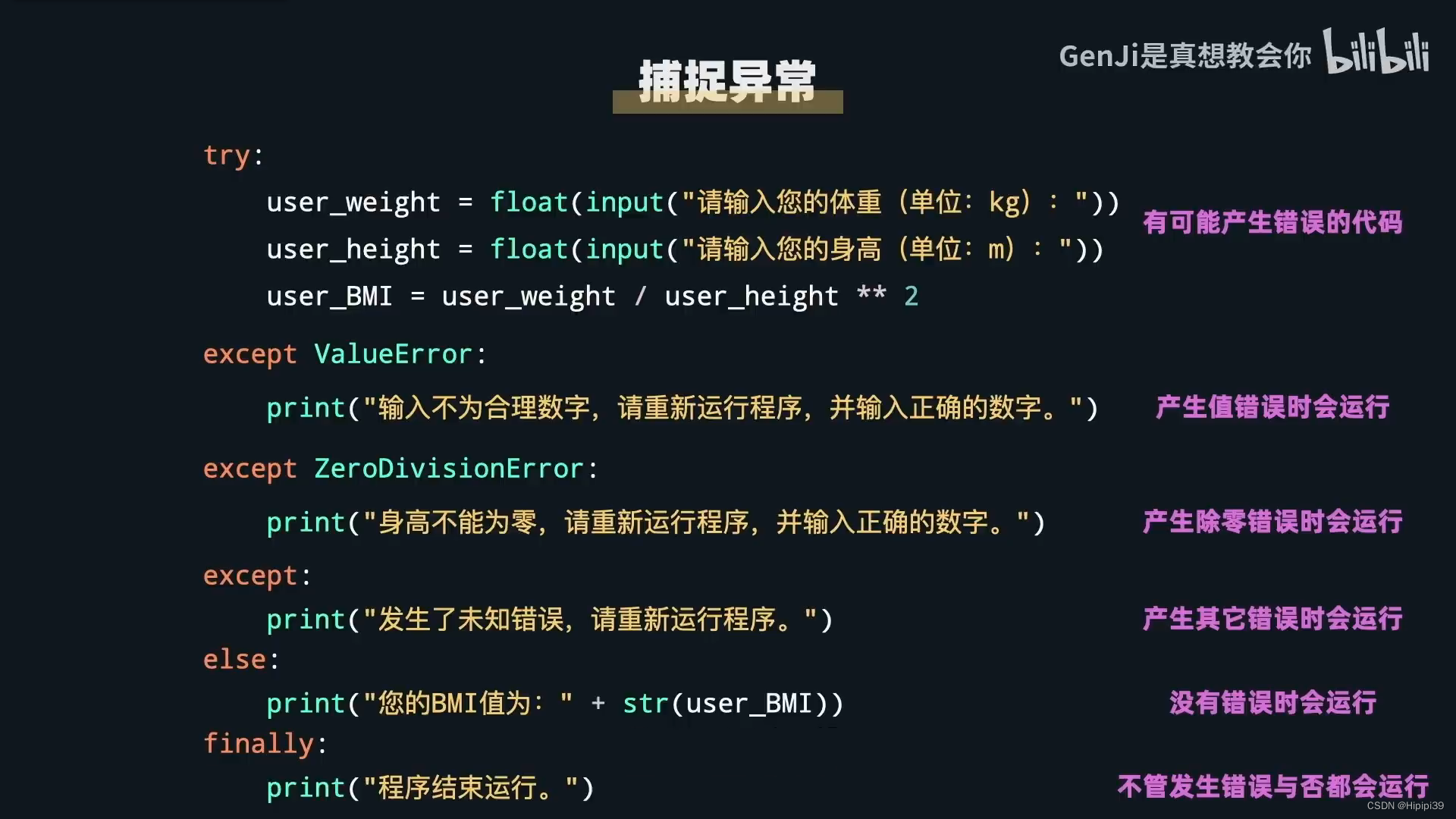

try:

resp = session.get(url)

resp.encoding = 'utf8'

resp.close()

time.sleep(2)

html = BeautifulSoup(resp.text, 'lxml')

# 省略部分代码

return result_

except Exception as e:

show_error_message(e)

time.sleep(10)

catch(url)

完整代码

#!/usr/bin/env python3

# coding:utf-8

import re

from bs4 import BeautifulSoup

import bag

import easygui

import webbrowser

import time

session = bag.session.create_session()

session.headers['Referer'] = r'https://you.ctrip.com/travels/'

session.get(r'https://you.ctrip.com/TravelSite/Home/IndexTravelListHtml?p=2&Idea=0&Type=100&Plate=0')

session.cookies[

''] = r"你的cookies"

# noinspection PyBroadException

def main():

resp = catch(r'https://you.ctrip.com/travels/qinhuangdao132/4011655.html')

print(resp)

def show_error_message(error):

choices = ["验证"]

button_box_choice = easygui.buttonbox(error, choices=choices)

if button_box_choice == "验证":

webbrowser.open(

"https://verify.ctrip.com/static/ctripVerify.html?returnUrl=https%3A%2F%2Fyou.ctrip.com%2Ftravelsite%2Ftravels%2Fshanhaiguan120556%2F3700092.html&bgref=l2j65wlGQDYtmBZjKEoy5w%3D%3D")

def catch(url) -> list:

result_ = []

try:

resp = session.get(url)

resp.encoding = 'utf8'

resp.close()

time.sleep(2)

# print(resp.text)

html = BeautifulSoup(resp.text, 'lxml')

title = re.findall(r'<h1 class="title1">(.*?)</h1>', resp.text)

if len(title) == 0:

title = html.find_all('div', class_="ctd_head_left")[0].h2.text

soup = html.find_all('div', class_="ctd_content")

days = re.compile(r'<span><i class="days"></i>天数:(.*?)</span>', re.S)

times = re.compile(r'<span><i class="times"></i>时间:(.*?)</span>', re.S)

whos = re.compile(r'<span><i class="whos"></i>和谁:(.*?)</span>', re.S)

plays = re.compile(r'<span><i class="plays"></i>玩法:(.*?)</span>', re.S)

content = re.compile(r'<h3>.*?发表于.*?</h3>(.*?)</div>]', re.S)

mid = []

for info in re.findall(r'<p>(.*?)</p>', str(re.findall(content, str(soup)))):

if info == '':

pass

else:

mid.append(re.sub(r'<.*?>', '', info))

if len(mid) == 0:

for info in re.findall(r'<p>(.*?)</p>', str(soup)):

if info == '':

pass

else:

mid.append(re.sub(r'<.*?>', '', info))

result_.append([

''.join(title).strip(),

'\n'.join(mid),

''.join(re.findall(days, str(soup))).replace(' ', ''),

''.join(re.findall(times, str(soup))).replace(' ', ''),

''.join(re.findall(whos, str(soup))),

''.join(re.findall(plays, str(soup))),

url

])

return result_

except Exception as e:

show_error_message(e)

time.sleep(10)

catch(url)

if __name__ == '__main__':

main()

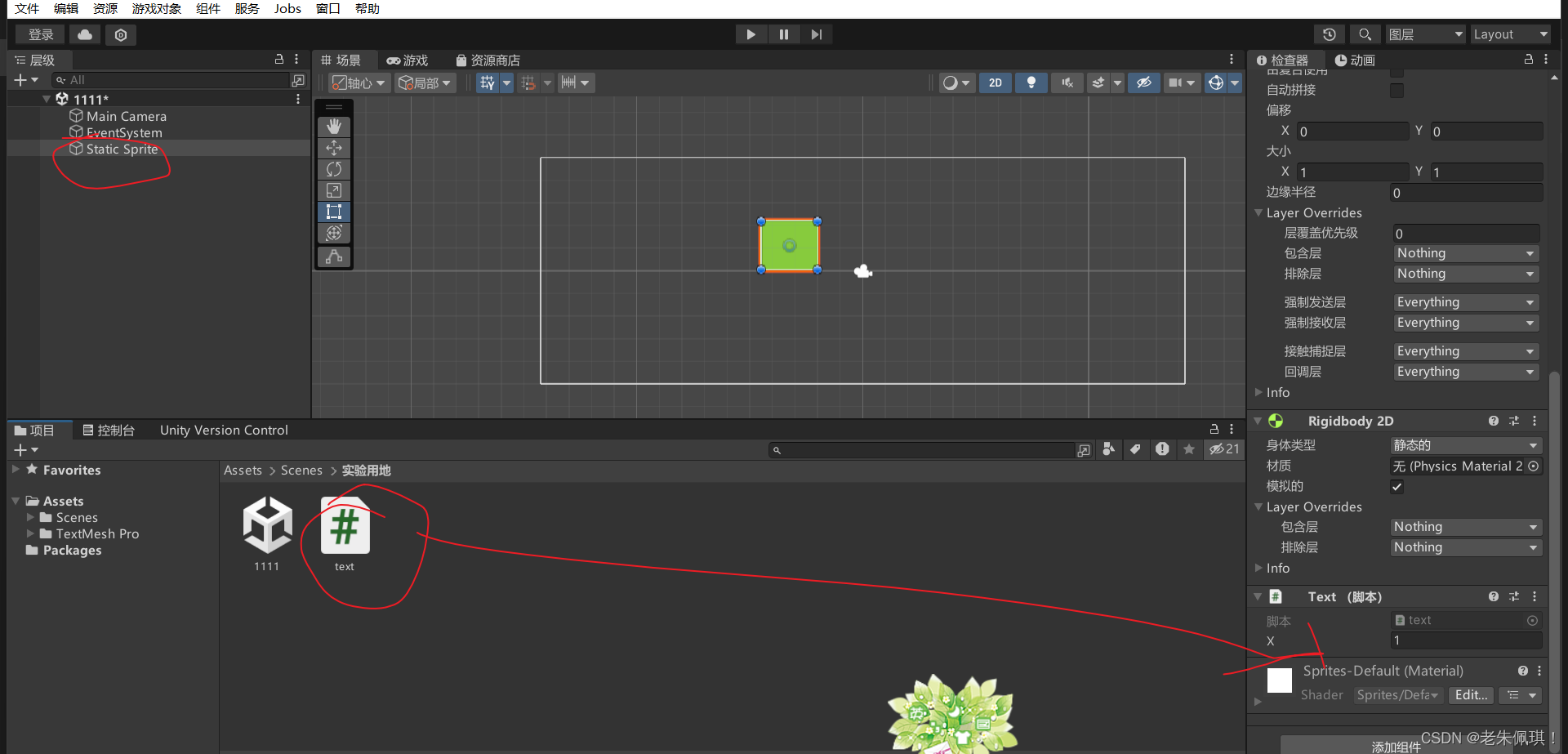

运行结果

结语

如果你觉得本教程对你有所帮助,不妨点赞并关注我的CSDN账号。我会持续为大家带来更多有趣且实用的教程和资源。谢谢大家的支持!