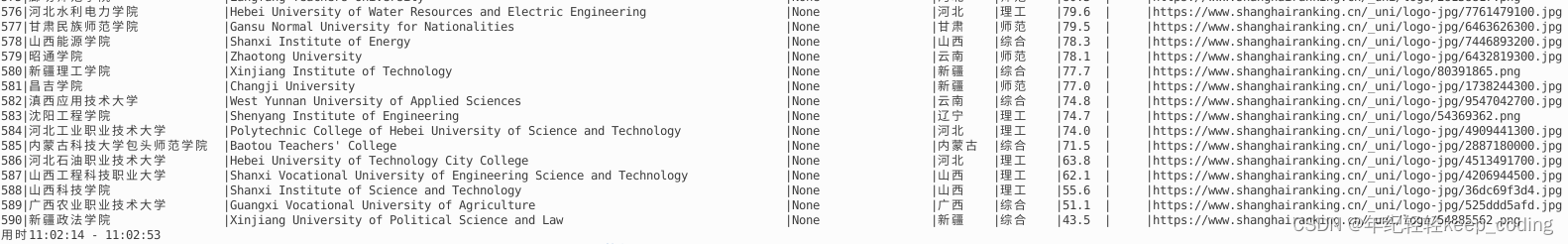

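I previously scraped university ranking data in an earlier post, "Crawler 2: 2019 Ranking of 549 Chinese Universities" (爬虫2_2019年549所中国大学排名).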

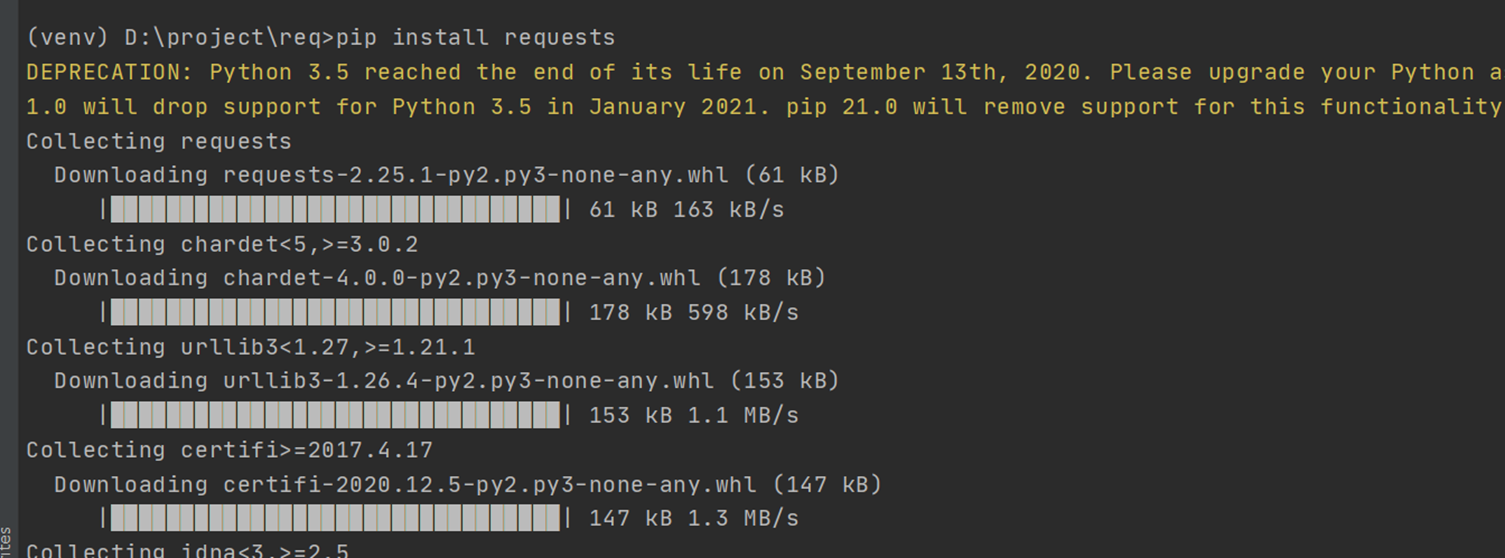

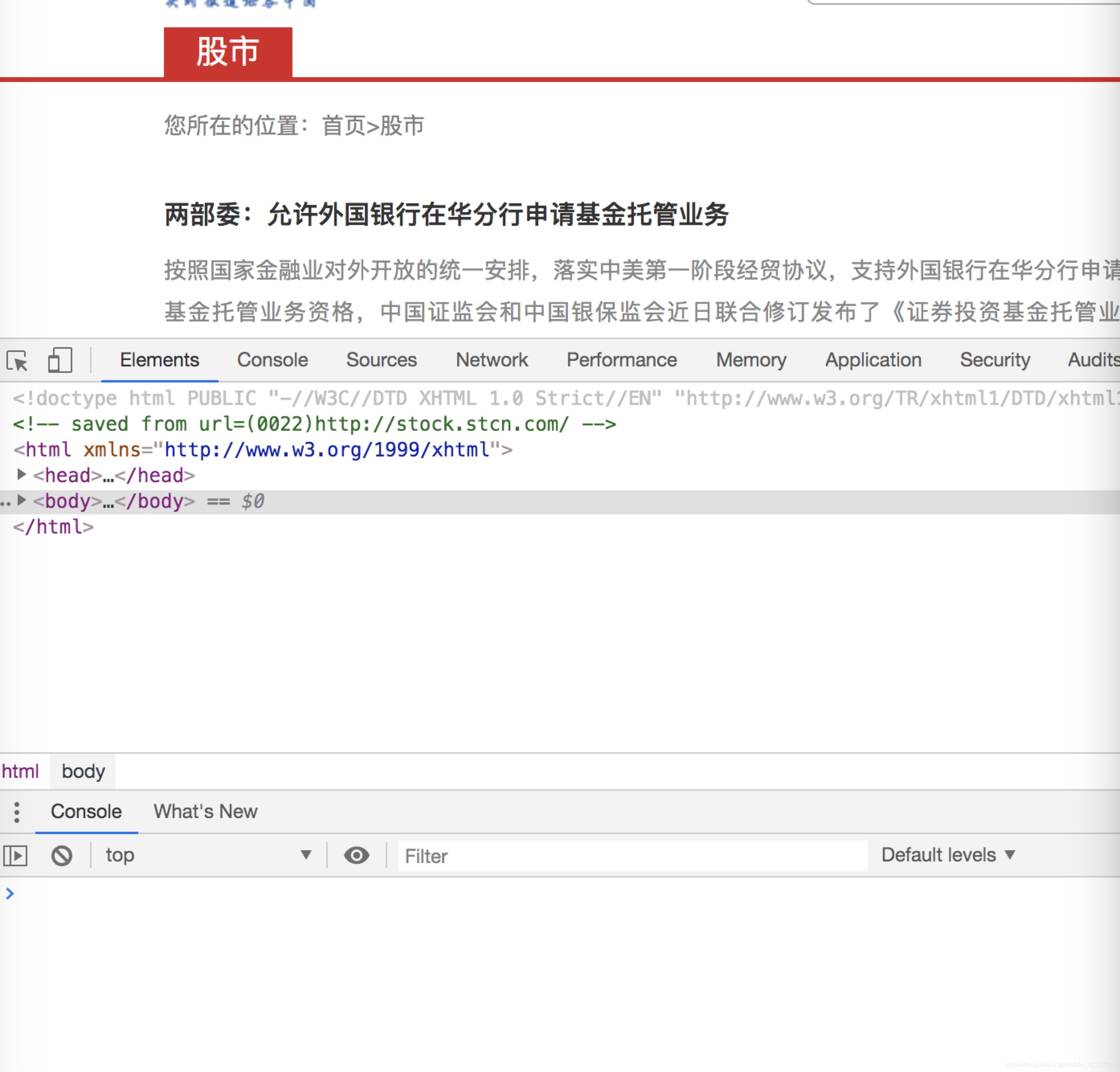

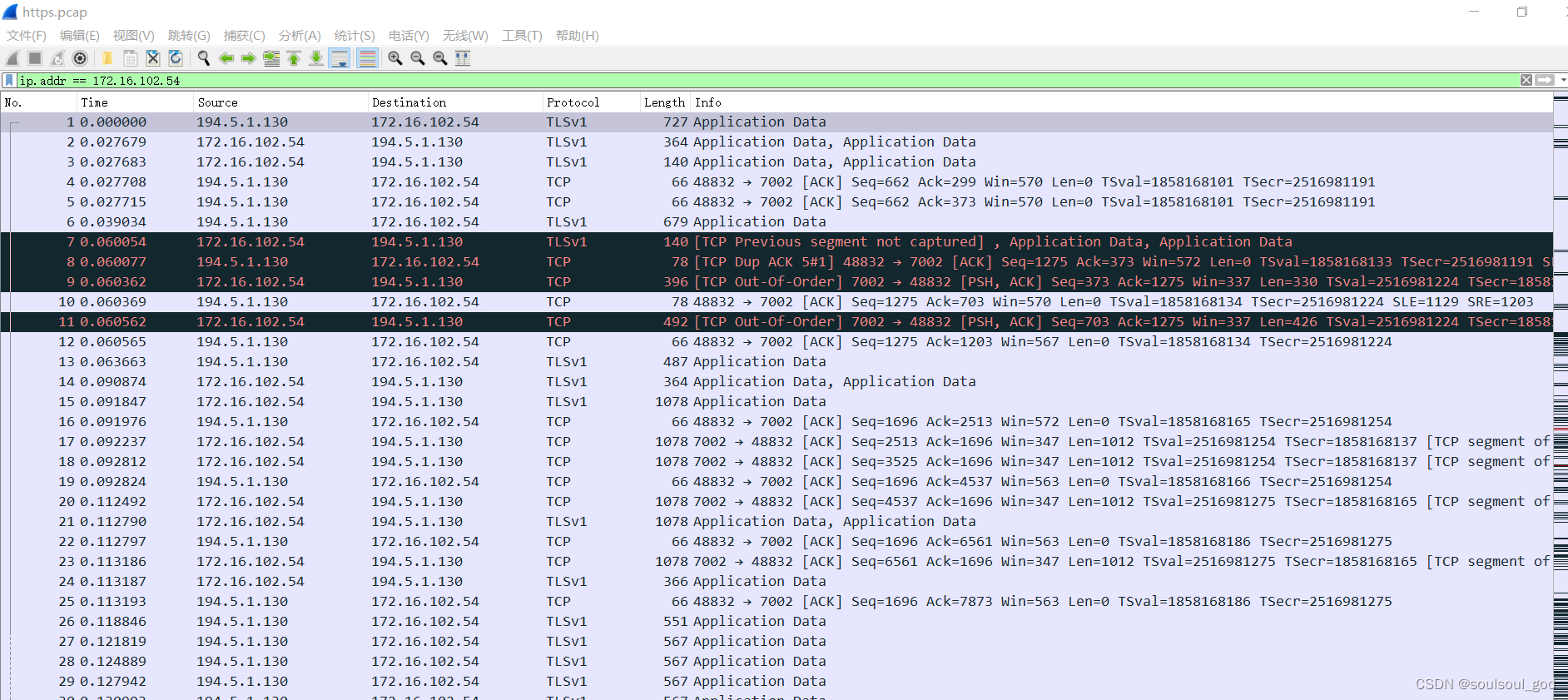

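When I recently re-ran that code, I found the original site had been redesigned. Paging no longer changes the URL, so later pages cannot be reached just by requesting a different address. I rewrote the code to use the browser-automation tool Selenium. It drives a real browser and clicks through the pages, which lets it collect data from this kind of site.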
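Because the URL never changes, the address cannot tell the script when it has reached the last page. The script below simply hard-codes `range(20)` iterations.

The sketch below shows one alternative: keep clicking "next" until the page content stops changing. `scrape_all_pages` and its parameters are hypothetical names, not part of Selenium. The sketch makes three assumptions:

- `click_next` returns only after the new page has rendered. Otherwise a snapshot taken too early would end the loop prematurely.
- On the last page the "next" button is still present but does nothing.
- The whole page source is a good enough comparison. If parts of the page change on their own, compare only the ranking table instead.

```python
from typing import Callable


def scrape_all_pages(
    get_html: Callable[[], str],
    click_next: Callable[[], None],
    parse: Callable[[str], None],
    max_pages: int = 100,
) -> int:
    """Walk a paginated view whose URL never changes (hypothetical helper).

    Parses the current page, clicks "next", and stops when the new snapshot
    equals the previous one (assumed to mean the last page was reached) or
    after max_pages pages. Returns the number of pages parsed.
    """
    previous = None
    pages = 0
    while pages < max_pages:
        html = get_html()
        if html == previous:  # clicking "next" changed nothing: assume last page
            break
        parse(html)
        pages += 1
        previous = html
        click_next()  # assumed to block until the next page has rendered
    return pages
```

With the functions from the script, this could be wired up as `scrape_all_pages(lambda: driver.page_source, lambda: action_run(driver, actions, info="li[title='下一页']", by=By.CSS_SELECTOR), fillUnivList)`. The `max_pages` cap guards against a page that never settles.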

```python
# -*- coding: UTF-8 -*-
import time

import bs4
from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.common.exceptions import NoSuchElementException
from selenium.webdriver.common.action_chains import ActionChains  # mouse actions
from selenium.webdriver.common.by import By


def get_info(soup, _type, element, param=None):
    """Search `soup` for `element`, optionally filtered by a "key=value" string.

    _type="find": return the first match's text with spaces and newlines
    removed, or the string "None" if nothing matches.
    _type="find_all": return the list of all matching tags.
    """
    # e.g. "class_=ranking" -> {"class_": "ranking"}
    params = dict([param.split("=")]) if param is not None else {}
    if _type == "find":
        res = soup.find(element, **params)
        if res is None:
            return "None"
        # get_text() also works when the tag has several children
        # (or none), where .string would be None
        return res.get_text().replace(" ", "").replace("\n", "")
    if _type == "find_all":
        return soup.find_all(element, **params)
    raise ValueError("unsupported _type: %r" % _type)


def fillUnivList(html):
    soup = BeautifulSoup(html, "html.parser")
    for tr in soup.find("tbody").children:
        if not isinstance(tr, bs4.element.Tag):  # skip text nodes between rows
            continue
        td_list = tr.find_all("td")
        # rank
        top = get_info(td_list[0], "find", "div", "class_=ranking")
        # logo
        logo = td_list[1].find("img")["src"]
        # Chinese name / English name
        university_list = get_info(td_list[1], "find_all", "a")
        ch_name = university_list[0].get_text().replace("\n", "").replace("\t", "").strip(" ")
        en_name = university_list[1].get_text().replace("\n", "").strip(" ")
        # university tags
        tags = get_info(td_list[1], "find", "p")
        # location (province / city)
        area = td_list[2].text.replace("\n", "").strip(" ")
        # university type
        main = td_list[3].text.replace("\n", "").strip(" ")
        # overall score
        score = td_list[4].text.replace("\n", "").strip(" ")
        # education level (办学层次)
        layer = td_list[5].text.replace("\n", "").strip(" ")
        # "\u3000" is the full-width ideographic space, used to pad Chinese text
        print("{:<3}|{}|{:<80}|{}|{}|{}|{:<6}|{:<5}|{}".format(
            top, ch_name.ljust(14, "\u3000"), en_name, tags.ljust(12, "\u3000"),
            area.ljust(4, "\u3000"), main.ljust(4, "\u3000"), score, layer, logo))


def action_run(driver, actions, info, by=By.ID, time_num=1):
    """Poll until the element located by (by, info) is visible, then click it."""
    while True:
        try:
            target = driver.find_element(by=by, value=info)
        except NoSuchElementException:  # find_element raises instead of returning None
            target = None
        if target is not None and target.is_displayed():
            actions.move_to_element(target).click().perform()
            time.sleep(time_num)  # give the next page time to render
            break
        print("%s not found, waiting..." % info)
        time.sleep(1)


if __name__ == "__main__":
    url = "https://www.shanghairanking.cn/rankings/bcur/2023"
    start = time.strftime("%H:%M:%S", time.localtime())
    driver = webdriver.Firefox()
    # driver = webdriver.Chrome()
    driver.maximize_window()
    driver.get(url)
    time.sleep(2)
    # mouse actions
    actions = ActionChains(driver)
    for _ in range(20):  # number of pages to walk through (hard-coded)
        html = driver.page_source
        fillUnivList(html)
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")  # scroll to the bottom
        # click the "下一页" (next page) button
        action_run(driver, actions, info="li[title='下一页']", by=By.CSS_SELECTOR)
    end = time.strftime("%H:%M:%S", time.localtime())
    print("Time: %s - %s" % (start, end))
    # close the browser
    driver.quit()
```
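The `print` in `fillUnivList` lines up columns by padding Chinese fields with the full-width space `"\u3000"`. This works only when the padded text is entirely full-width. A field that mixes Latin letters and Chinese characters, such as a name containing both, drifts out of alignment.

The sketch below is one possible, hypothetical alternative that pads by terminal display width instead. It uses the standard-library `unicodedata.east_asian_width` and counts "W" (wide) and "F" (fullwidth) characters as two columns. It is an approximation. Ambiguous-width and combining characters are treated as one column.

```python
import unicodedata


def display_width(text: str) -> int:
    """Approximate terminal columns: 2 for wide/fullwidth chars, 1 otherwise."""
    return sum(2 if unicodedata.east_asian_width(ch) in ("W", "F") else 1 for ch in text)


def pad_to_width(text: str, width: int, fill: str = " ") -> str:
    """Left-align text in a field of `width` display columns.

    `fill` is assumed to be a single one-column character; text that is
    already wider than `width` is returned unchanged.
    """
    return text + fill * max(0, width - display_width(text))
```

For example, `ch_name.ljust(14, "\u3000")` (14 full-width characters) corresponds roughly to `pad_to_width(ch_name, 28)`.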