1) Using lxml

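```python
from lxml import etree

my_page = '''
<!DOCTYPE html>
<html lang="en">
<head>
<meta charset="UTF-8"/>
<title>Title</title>
</head>
<body>
<div>My article</div>
<ul>
<li id="l1" class="c1">Beijing</li>
<li id="l2">Shanghai</li>
<li id="c3">Shenzhen</li>
<li id="c4">Wuhan</li>
</ul>
</body>
</html>
'''

# fromstring() parses the text as XML, which works here because the markup is well-formed;
# for real-world HTML that is not well-formed, etree.HTML() is more forgiving
html = etree.fromstring(my_page)

# '//div' selects every <div> anywhere in the document; xpath() returns a list of elements
html_data = html.xpath('//div')

print(html_data)   # a list of Element objects, e.g. [<Element div at 0x...>]
```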

```python
from lxml import etree

my_page = '''<html>
<title>程序员zhenguo</title>
<body>
<h1>My website</h1>
<div>My article</div>
<div id="photos">
<img src="pic1.png" />
<span id="pic1"> Learn Python from Scratch </span>
<img src="pic2.png" />
<span id="pic2" >Outline</span>
<p>
<a href="http://www.zglg.work">More details</a>
</p>
<a href="http://www.zglg.work/python-packages/">Python</a>
<a href="http://www.zglg.work/python-intro/">Python for beginners</a>
<a href="http://www.zglg.work/python-level/">Advanced Python</a>
</div>
<div id="explain">
<p class="md-nav__item">Total site visits: 159323</p>
</div>
<div class="foot">Copyright © 2019 - 2021 程序员zhenguo</div>
</body>
</html>'''

html = etree.fromstring(my_page)

# 1. Locating elements
divs1 = html.xpath('//div')                    # every <div>
divs2 = html.xpath('//div[@id]')               # <div>s that have an id attribute
divs3 = html.xpath('//div[@class="foot"]')     # <div>s whose class is exactly "foot"

print(len(divs1), len(divs2), len(divs3))      # 4 2 1
```
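The snippet above only locates elements; with lxml you would typically append `/text()` or `/@href` to the XPath to pull out text or attribute values. As a hedged, dependency-free sketch, Python's standard-library `xml.etree.ElementTree` supports a limited XPath subset (no `text()` or `@attr` steps, so values are read with `.text` and `.get()` instead). The trimmed page string below is assumed sample data modeled on the page above.

```python
import xml.etree.ElementTree as ET

# A trimmed, well-formed version of the page above (assumed sample data)
page = """<html><body>
<div id="photos">
  <span id="pic1"> Learn Python from Scratch </span>
  <a href="http://www.zglg.work/python-packages/">Python</a>
  <a href="http://www.zglg.work/python-level/">Advanced Python</a>
</div>
<div class="foot">Copyright 2019 - 2021</div>
</body></html>"""

root = ET.fromstring(page)

# ElementTree paths are relative, so ".//" searches all descendants
divs_with_id = root.findall(".//div[@id]")
foot = root.find(".//div[@class='foot']")

# No text()/@attr steps: read .text and .get() on the matched elements instead
span_text = root.find(".//span[@id='pic1']").text.strip()
hrefs = [a.get("href") for a in root.findall(".//div[@id='photos']/a")]

print(len(divs_with_id))   # 1
print(foot.text)           # Copyright 2019 - 2021
print(span_text)           # Learn Python from Scratch
print(hrefs)
```

For anything beyond this subset (axes, functions, `text()`), lxml's full XPath 1.0 support is the better fit.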

2) Music scraping example

```python
import os
import random
import time

import requests
from lxml import etree

# Listing pages look like https://www.tuke88.com/peiyue/zonghe_0_1.html (the example URL ends in _1)
page_n = int(input('Number of pages to crawl: '))

# Create the output folder once, before the loop
os.makedirs('pymp3', exist_ok=True)

for i in range(1, page_n + 1):
    url = f'https://www.tuke88.com/peiyue/zonghe_0_{i}.html'
    res = requests.get(url, timeout=10)

    # Step 3: use lxml to extract the content we want from the HTML page
    html_parser = etree.HTMLParser()
    html = etree.fromstring(res.text, parser=html_parser)

    titles = html.xpath("//div[@class='lmt']//div[@class='audio-list']//a[@class='title']/text()")
    print(titles)
    mp3_urls = html.xpath("//div[@class='lmt']//div[@class='audio-list']//source/@src")
    print(mp3_urls)

    # zip() pairs each title with its URL (and stops at the shorter list)
    for title, mp3_url in zip(titles, mp3_urls):
        mp3_stream = requests.get(mp3_url, stream=True, timeout=30)
        with open(os.path.join('pymp3', title + ".mp3"), "wb") as f:
            # iter_content() yields decoded chunks without loading the whole file into memory
            for chunk in mp3_stream.iter_content(chunk_size=8192):
                f.write(chunk)
        print(f'[info] {title}.mp3 downloaded')
        # Random pause between downloads to avoid hammering the server
        time.sleep(random.uniform(0.1, 0.4))

# r.content is processed (e.g. gzip/deflate are decoded automatically); r.raw is the raw data (what the socket returned)
# deflate [dɪˈfleɪt]: a compression method
```
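To see why `r.raw` and `r.content` can differ, here is a hedged, stdlib-only sketch that simulates a gzip-encoded response body with the `gzip` module. The compressed bytes stand in for what `r.raw.read()` would hand back (raw is not decoded by default), and the decompressed bytes for what `r.content` or `r.iter_content()` give after requests decodes `Content-Encoding: gzip`. No network request is made; the payload is made-up sample data.

```python
import gzip

# Simulated original payload (assumption: stands in for an MP3 or HTML body)
original = b"ID3 fake mp3 bytes " * 100

# What a server sends with "Content-Encoding: gzip": the compressed bytes,
# roughly what r.raw.read() returns since raw is not decoded by default
wire_bytes = gzip.compress(original)

# What r.content / r.iter_content() give you: the decoded payload
decoded = gzip.decompress(wire_bytes)

print(len(original), len(wire_bytes))
print(decoded == original)   # True
print(wire_bytes[:2])        # b'\x1f\x8b' (the gzip magic number)
```

Writing `r.raw` bytes straight to disk would therefore save the compressed form whenever the server applied gzip or deflate, which is why the decoded stream is usually what you want for saving files.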

// https://developer.mozilla.org/zh-CN/docs/Web : MDN Web Docs, a reference for learning JavaScript

// https://dabblet.com/ : an online playground for trying out HTML/CSS snippets

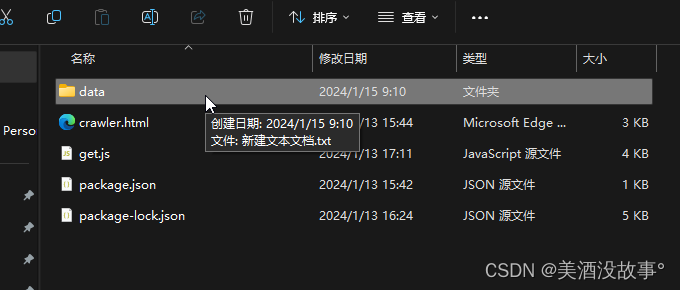

3) Scraping c-log (CSDN) blog posts

```python
import os
import random
import re
import time

import requests
from lxml import etree

author_name = input("Enter the blogger ID: ")
MAX_PAGE = 200

sess = requests.Session()
agent = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:122.0) Gecko/20100101 Firefox/122.0'
sess.headers['User-Agent'] = agent

os.makedirs("c_articles", exist_ok=True)


def crawler_blog_by(author_name, article_id, title, i):
    """Download one article and save its body as an HTML file; i is the article's running number."""
    # https://blog.csdn.net/{author_name}/article/details/{article_id}?spm=1001.2100.3001.7377
    article_request_url = f'https://blog.csdn.net/{author_name}/article/details/{article_id}?spm=1001.2100.3001.7377'
    response = sess.get(article_request_url, timeout=10)
    selector = etree.HTML(response.text)

    # The article body lives in <div id="content_views">
    body_msg = selector.xpath("//div[@id='content_views']")[0]
    body_str = etree.tostring(body_msg, encoding='utf8', method='html').decode()

    # Replace characters that are not allowed in file names (including the full-width colon)
    title = re.sub(r'[\\/:*?"<>|：]', '-', title)
    save_file_name = os.path.join("c_articles", f'{author_name}-{title}-{article_id}.html')
    with open(save_file_name, 'w', encoding='utf8') as f:
        f.write(f'<head> <meta charset="utf-8"> </head>\n{body_str}')
    print(f'[info] {author_name}: article #{i} {title}-{article_id}.html saved')


i = 1
# Crawl the paginated article list
for page_no in range(1, MAX_PAGE + 1):
    try:
        data = {
            "page": page_no,
            "size": 20,
            "businessType": "blog",
            "orderby": "",
            "noMore": "false",
            "year": "",
            "month": "",
            "username": author_name}
        # e.g. https://blog.csdn.net/community/home-api/v1/get-business-list?page=2&size=20&businessType=blog&orderby=&noMore=false&year=&month=&username=weixin_46274168
        pages_dict = sess.get('https://blog.csdn.net/community/home-api/v1/get-business-list',
                              params=data, timeout=10).json()
        articles = pages_dict['data']['list']
        if not articles:   # an empty page means there are no more articles
            break
        for article in articles:
            crawler_blog_by(author_name, article['articleId'], article['title'], i)
            i += 1
            time.sleep(random.uniform(0.4, 1.0))
    except Exception as e:
        print(e)
```
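`requests` builds the query string from a dict passed as `params=`, so the page number and username in `data` end up in the URL instead of a hard-coded string. The hedged sketch below uses the standard library's `urllib.parse.urlencode` (which requests relies on for this) to show the resulting string; `build_list_url` is a hypothetical helper name. Note that a Python `False` is encoded as `False`, while the example URL uses lowercase `false`, so the value is written as a string here.

```python
from urllib.parse import urlencode

BASE = "https://blog.csdn.net/community/home-api/v1/get-business-list"

def build_list_url(username, page, size=20):
    """Hypothetical helper: the list-API URL for one page of a user's articles."""
    params = {
        "page": page,
        "size": size,
        "businessType": "blog",
        "orderby": "",
        "noMore": "false",   # a string, so it is sent as lowercase "false"
        "year": "",
        "month": "",
        "username": username,
    }
    return f"{BASE}?{urlencode(params)}"

url = build_list_url("weixin_46274168", 2)
print(url)
print(urlencode({"noMore": False}))   # noMore=False (capitalized, unlike the example URL)
```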

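4) Learning about keys and encryption

1) `os.path.splitext` returns two values: the path without its extension (the directory part is kept) and the extension itself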

```python
import os

path = '/usr/local/bin/python.exe'
filename, ext = os.path.splitext(path)
print('File name:', filename)
print('Extension:', ext)
# File name: /usr/local/bin/python
# Extension: .exe
```
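A couple of edge cases are worth knowing: `splitext` only splits off the last extension, and a leading dot (a hidden file on Unix) is not treated as an extension. The sketch below also shows `pathlib.Path`, which exposes the same information as attributes; the file names are just sample strings.

```python
import os
from pathlib import Path

# splitext only splits off the LAST extension
print(os.path.splitext("archive.tar.gz"))   # ('archive.tar', '.gz')

# A leading dot marks a hidden file, not an extension
print(os.path.splitext(".bashrc"))          # ('.bashrc', '')

# pathlib offers the same information as attributes
p = Path("/usr/local/bin/python.exe")
print(p.stem, p.suffix)                     # python .exe
print(Path("archive.tar.gz").suffixes)      # ['.tar', '.gz']
```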

https://www.liaoxuefeng.com/ : Liao Xuefeng's tutorial website

// MD5 hashing (a one-way digest, not reversible encryption)

```python
import hashlib

def getMd5(data):
    # Hash the UTF-8 bytes of the string and return the 32-character hex digest
    obj = hashlib.md5()
    obj.update(data.encode('utf-8'))
    return obj.hexdigest()

print(getMd5('zhen guo'))
```
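For files (for example the MP3s downloaded earlier), a common pattern is to feed `update()` in chunks so the whole file never has to sit in memory. The sketch below uses a hypothetical helper `md5_of_file` and a temporary file of made-up bytes, and checks that the chunked digest equals the one-shot digest. MD5 is no longer considered collision-resistant, so it suits checksums rather than security.

```python
import hashlib
import os
import tempfile

def md5_of_file(path, chunk_size=8192):
    """Hypothetical helper: MD5 of a file, read in fixed-size chunks."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        # iter() with a sentinel keeps calling f.read() until it returns b""
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

data = b"zhen guo" * 10_000

# Write sample bytes to a temporary file (assumed test data)
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(data)
    tmp_path = tmp.name

chunked = md5_of_file(tmp_path)
one_shot = hashlib.md5(data).hexdigest()
os.remove(tmp_path)

print(chunked == one_shot)            # True
print(len(chunked))                   # 32 hex characters
print(hashlib.md5(b"").hexdigest())   # d41d8cd98f00b204e9800998ecf8427e
```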

// Strip whitespace or specified characters from both ends of a string

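```python
s = "00000003210Runoob01230000000"   # named s to avoid shadowing the built-in str

# Strip the character '0' from both ends
s1 = s.strip('0')
print(s1)   # 3210Runoob0123

# Strip leading and trailing whitespace
s2 = " Runoob "
s3 = s2.strip()
print(s3)   # Runoob
```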
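One common surprise: the argument to `strip()` is a set of characters, not a substring, so any of them are removed from the ends in any order. The sketch below contrasts it with `lstrip()`/`rstrip()` and with `removeprefix()`, which removes an exact prefix once (the latter needs Python 3.9+). The strings are sample data.

```python
s = "xyzHELLOzyx"

# strip() removes ANY of the given characters from both ends, in any order
print(s.strip("xyz"))          # HELLO

# lstrip()/rstrip() work on one side only
print("0012300".lstrip("0"))   # 12300
print("0012300".rstrip("0"))   # 00123

# To drop an exact prefix string once, use removeprefix (Python 3.9+)
print("abcabcX".strip("abc"))         # X
print("abcabcX".removeprefix("abc"))  # abcX
```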

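Install pycryptodome first (it provides the `Crypto` package used below):

```shell
pip install pycryptodome
```

RSA: generating a key pair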

```python
from Crypto import Random
from Crypto.PublicKey import RSA

# Random.new().read is a source of cryptographically secure random bytes
random_generator = Random.new().read
rsa = RSA.generate(2048, random_generator)

# Generate the public key (PEM bytes) and save it
public_key = rsa.publickey().exportKey()
with open('public_a.rsa', 'wb') as f:
    f.write(public_key)
print(public_key)

# Generate the private key (PEM bytes) and save it; keep this file secret
private_key = rsa.exportKey()
with open('private_a.rsa', 'wb') as f:
    f.write(private_key)
print(private_key)
```
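To make the public/private key idea concrete, here is the well-known textbook RSA example with tiny primes (p = 61, q = 53), using only built-in integer arithmetic. It is an illustration only: real keys like the 2048-bit one above use huge primes plus a padding scheme such as PKCS#1, and unpadded toy RSA like this is insecure.

```python
from math import gcd

# Textbook RSA with toy primes (illustration only, NOT secure)
p, q = 61, 53
n = p * q                  # modulus, part of both keys: 3233
phi = (p - 1) * (q - 1)    # Euler's totient: 3120
e = 17                     # public exponent, must be coprime with phi
assert gcd(e, phi) == 1
d = pow(e, -1, phi)        # private exponent = modular inverse of e (Python 3.8+)

public_key = (n, e)
private_key = (n, d)

m = 65                     # message, encoded as an integer smaller than n
c = pow(m, e, n)           # encrypt with the public key
m2 = pow(c, d, n)          # decrypt with the private key

print(public_key, private_key)   # (3233, 17) (3233, 2753)
print(c, m2)                     # 2790 65
```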

5) RSA encryption and decryption example

cipher [ˈsaɪfə]: an algorithm for encryption and decryption

```python
from Crypto import Random
from Crypto.PublicKey import RSA
from Crypto.Cipher import PKCS1_v1_5 as PKCS1_cipher

random_generator = Random.new().read
rsa = RSA.generate(2048, random_generator)

# Generate and save the public key
public_key = rsa.publickey().exportKey()
with open('public_a.rsa', 'wb') as f:
    f.write(public_key)
print(public_key)

# Generate and save the private key
private_key = rsa.exportKey()
with open('private_a.rsa', 'wb') as f:
    f.write(private_key)
print(private_key)

data = input('Enter the text to encrypt: ')

# Encrypt with the public key
with open('public_a.rsa', 'r') as f:
    key = f.read()
pub_key = RSA.importKey(key)
cipher = PKCS1_cipher.new(pub_key)
# The input is text, so convert it to bytes first
rsa_text = cipher.encrypt(data.encode('utf8'))

# The ciphertext is sent to the receiver, who decrypts it with the private key
with open('private_a.rsa', 'r') as f:
    key = f.read()
pri_key = RSA.importKey(key)
cipher = PKCS1_cipher.new(pri_key)
# The second argument is a sentinel returned (instead of raising) if decryption fails
raw_data = cipher.decrypt(rsa_text, None)
if raw_data is None:
    raise ValueError('decryption failed')
print(f"Encrypted data: {rsa_text}, decrypted: {raw_data.decode('utf8')}")
```
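A practical consequence of the PKCS#1 v1.5 padding used in this example: RSA can only encrypt a short message per call. Per PKCS#1 (RFC 8017), v1.5 padding needs at least 11 bytes of the modulus, and OAEP needs 2·hLen + 2 bytes (hLen is the hash length, 20 for SHA-1). The hedged sketch below computes these limits from the key size with hypothetical helper names. The limit counts bytes, not characters, and many non-ASCII characters (Chinese text, or "€" below) take 3 bytes each in UTF-8. Longer data is usually handled with hybrid encryption (RSA protects a random symmetric key), and for new code OAEP (`Crypto.Cipher.PKCS1_OAEP` in pycryptodome) is generally preferred over v1.5.

```python
def max_plaintext_pkcs1_v15(key_bits):
    """Hypothetical helper: max message bytes for RSAES-PKCS1-v1_5 (k - 11)."""
    k = (key_bits + 7) // 8    # modulus length in bytes
    return k - 11

def max_plaintext_oaep(key_bits, hash_len=20):
    """Hypothetical helper: max message bytes for RSAES-OAEP (k - 2*hLen - 2); SHA-1 gives hLen = 20."""
    k = (key_bits + 7) // 8
    return k - 2 * hash_len - 2

text = "€" * 100                       # 100 characters
size = len(text.encode("utf8"))        # 300 bytes: "€" is 3 bytes in UTF-8

print(max_plaintext_pkcs1_v15(2048))   # 245
print(max_plaintext_oaep(2048))        # 214
print(size, size <= max_plaintext_pkcs1_v15(2048))   # 300 False
```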

6) The built-in functions enumerate and zip

Using enumerate:

```python
s = [1, 2, 3, 4, 5]
e = enumerate(s)
print(e)
# <enumerate object at 0x000001E8F043D188>  (the address varies)

# Each item is an (index, value) pair; indexes start at 0
for index, value in e:
    print('%s, %s' % (index, value))
```
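Two details of `enumerate` that the example hints at: the `start=` argument changes the first index, and an enumerate object is a one-shot iterator, so once it has been looped over it yields nothing more. The list below is sample data.

```python
fruits = ["apple", "banana", "cherry"]

# enumerate() yields (index, value) pairs; start= changes the first index
print(list(enumerate(fruits)))            # [(0, 'apple'), (1, 'banana'), (2, 'cherry')]
print(list(enumerate(fruits, start=1)))   # [(1, 'apple'), (2, 'banana'), (3, 'cherry')]

# The enumerate object is a one-shot iterator: once consumed, it is empty
e = enumerate(fruits)
first_pass = list(e)
second_pass = list(e)
print(len(first_pass), len(second_pass))  # 3 0
```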

String slicing:

```python
title = 'adfefnfnnf;nfnnfnefn'
title1 = title[:4]    # first 4 characters: adfe
print(title1)
title2 = title[:-5]   # everything except the last 5 characters: adfefnfnnf;nfnn
print(title2)
```
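Slices take the general form `[start:stop:step]`, where `stop` is exclusive and negative indices count from the end. A few more forms on the same string:

```python
title = 'adfefnfnnf;nfnnfnefn'

print(title[-4:])     # last 4 characters: nefn
print(title[2:6])     # indices 2 to 5: fefn
print(title[::2])     # every second character
print(title[::-1])    # reversed copy
print(title[100:])    # out-of-range slices give '' instead of raising
```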

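What is an object id?

An object's id is a value that uniquely identifies the object (its identity, like a label) for as long as the object exists; `id(obj)` returns it.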
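Identity is what the `is` operator compares, while `==` compares values. Two names bound to the same object share one id, so a change made through one name is visible through the other:

```python
a = [1, 2, 3]
b = a          # b refers to the SAME list object
c = [1, 2, 3]  # c is a different object with equal contents

print(id(a) == id(b), a is b)   # True True
print(a == c, a is c)           # True False

b.append(4)    # mutating through b is visible through a
print(a)       # [1, 2, 3, 4]
```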

`range` produces a sequence of integers.
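A `range` object is lazy: it stores only `start`, `stop` (exclusive) and `step` and computes items on demand, yet it still supports `len()`, indexing and membership tests.

```python
r = range(2, 11, 3)           # start=2, stop=11 (exclusive), step=3

print(list(r))                # [2, 5, 8]
print(len(r), r[1], 8 in r)   # 3 5 True
print(list(range(5, 0, -2)))  # [5, 3, 1]
```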

Using zip:

```python
a = [3, 2, 1, 5, 67]
b = [1, 6, 3, 90]
# zip pairs items by position and stops at the shorter list
for i, j in zip(a, b):
    print(f'i={i}, j={j}')
```
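Because `zip` stops at the shortest input, the trailing `67` above is silently dropped; this also matters in the music crawler, where `zip(titles, mp3_urls)` would quietly skip items if the two lists had different lengths. The sketch below shows `itertools.zip_longest`, which pads instead, and how `zip(*pairs)` "unzips" a list of pairs.

```python
from itertools import zip_longest

a = [3, 2, 1, 5, 67]
b = [1, 6, 3, 90]

# zip stops at the shortest input, so 67 is dropped
print(list(zip(a, b)))   # [(3, 1), (2, 6), (1, 3), (5, 90)]

# zip_longest pads the shorter input with fillvalue instead
print(list(zip_longest(a, b, fillvalue=None)))

# zip(*pairs) "unzips" a list of pairs back into tuples
pairs = list(zip(a, b))
xs, ys = zip(*pairs)
print(xs, ys)            # (3, 2, 1, 5) (1, 6, 3, 90)
```

On Python 3.10 and later, `zip(a, b, strict=True)` raises `ValueError` when the lengths differ instead of truncating.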
