Abstract

We propose a new framework for estimating generative models via an adversarial process, in which we simultaneously train two models: a generative model G that captures the data distribution, and a discriminative model D that estimates the probability that a sample came from the training data rather than G. The training procedure for G is to maximize the probability of D making a mistake. This framework corresponds to a minimax two-player game. In the space of arbitrary functions G and D, a unique solution exists, with G recovering the training data distribution and D equal to 1/2 everywhere. In the case where G and D are defined by multilayer perceptrons, the entire system can be trained with backpropagation.

There is no need for any Markov chains or unrolled approximate inference networks during either training or generation of samples. Experiments demonstrate the potential of the framework through qualitative and quantitative evaluation of the generated samples.

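Summary:

From a statistical point of view, the whole world is obtained by sampling from various distributions. Capturing the data distribution is therefore enough to solve the generation task.

One model (G) tries to make the other make the wrong call. The other model (D) judges whether a sample belongs to the data distribution.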

Introduction

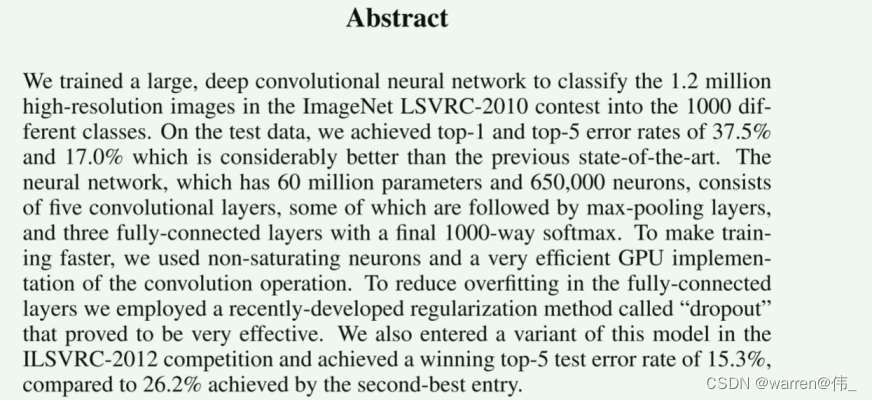

The promise of deep learning is to discover rich, hierarchical models that represent probability distributions over the kinds of data encountered in artificial intelligence applications, such as natural images, audio waveforms containing speech, and symbols in natural language corpora. So far, the most striking successes in deep learning have involved discriminative models, usually those that map a high-dimensional, rich sensory input to a class label. These striking successes have primarily been based on the backpropagation and dropout algorithms, using piecewise linear units which have a particularly well-behaved gradient. Deep generative models have had less of an impact, due to the difficulty of approximating many intractable probabilistic computations that arise in maximum likelihood estimation and related strategies, and due to the difficulty of leveraging the benefits of piecewise linear units in the generative context. We propose a new generative model estimation procedure that sidesteps these difficulties.

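Summary:

Deep learning is not only about deep neural networks. It is more about learning a feature representation of the whole data distribution.

Earlier generative models had great difficulty approximating the intractable computations that arise in maximum likelihood estimation, which motivates the new framework.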

In the proposed adversarial nets framework, the generative model is pitted against an adversary: a discriminative model that learns to determine whether a sample is from the model distribution or the data distribution. The generative model can be thought of as analogous to a team of counterfeiters, trying to produce fake currency and use it without detection, while the discriminative model is analogous to the police, trying to detect the counterfeit currency. Competition in this game drives both teams to improve their methods until the counterfeits are indistinguishable from the genuine articles.

This framework can yield specific training algorithms for many kinds of model and optimization algorithm. In this article, we explore the special case when the generative model generates samples by passing random noise through a multilayer perceptron, and the discriminative model is also a multilayer perceptron. We refer to this special case as adversarial nets. In this case, we can train both models using only the highly successful backpropagation and dropout algorithms and sample from the generative model using only forward propagation. No approximate inference or Markov chains are necessary.
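To make "sampling using only forward propagation" concrete, here is a minimal pure-Python sketch of an MLP generator. The layer sizes, the ReLU hidden units, the standard-normal noise prior, and the helper names (`make_mlp`, `forward`, `sample`) are illustrative assumptions. The weights are random, not trained.

```python
import math
import random

def make_mlp(sizes, rng):
    """Random (untrained) weights for a fully connected net with the given layer sizes."""
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        weights = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
                   for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers

def forward(layers, x):
    """Forward propagation: ReLU (piecewise linear) hidden layers, linear output layer."""
    for i, (weights, biases) in enumerate(layers):
        x = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
        if i < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x

def sample(generator, noise_dim, n, rng):
    """Draw n samples: z ~ N(0, I) (an assumed prior p_z), then x = G(z)."""
    return [forward(generator, [rng.gauss(0.0, 1.0) for _ in range(noise_dim)])
            for _ in range(n)]

rng = random.Random(0)
G = make_mlp([8, 32, 32, 2], rng)   # hypothetical sizes: 8-d noise -> 2-d data
xs = sample(G, noise_dim=8, n=5, rng=rng)
```

Each sample costs a single forward pass, and no Markov chain is run. Training would adjust the weights, which this sketch does not attempt.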

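Summary:

The generator is an MLP whose input is random noise. Given enough capacity, this MLP can map the distribution that produces the noise onto whatever distribution we want to fit.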

Related work

Until recently, most work on deep generative models focused on models that provided a parametric specification of a probability distribution function. The model can then be trained by maximizing the log likelihood. In this family of model, perhaps the most successful is the deep Boltzmann machine. Such models generally have intractable likelihood functions and therefore require numerous approximations to the likelihood gradient. These difficulties motivated the development of “generative machines”–models that do not explicitly represent the likelihood, yet are able to generate samples from the desired distribution. Generative stochastic networks are an example of a generative machine that can be trained with exact backpropagation rather than the numerous approximations required for Boltzmann machines. This work extends the idea of a generative machine by eliminating the Markov chains used in generative stochastic networks.

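Summary:

Earlier work tried to compute the distribution explicitly. The new generative model, by contrast, only needs to approximate the distribution and sample from it, not solve for it.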

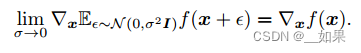

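Our work backpropagates derivatives through generative processes by using the observation that

$$\lim_{\sigma \to 0} \nabla_x \mathbb{E}_{\epsilon \sim \mathcal{N}(0, \sigma^2 I)} f(x + \epsilon) = \nabla_x f(x).$$

Summary:

The observation is that, as the noise vanishes, the gradient of the expectation of $f$ equals the gradient of $f$ itself. This is why a GAN can be trained with backpropagation.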
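A quick numerical check of this observation, assuming a toy $f(x) = x^3$. This $f$ is chosen because $\mathbb{E}[f(x+\epsilon)] = x^3 + 3x\sigma^2$ has a closed form, whose derivative $3x^2 + 3\sigma^2$ tends to $f'(x) = 3x^2$ as $\sigma \to 0$. `smoothed_grad_mc` differentiates through the noisy sample (a pathwise estimate) by averaging $f'(x + \epsilon)$. All helper names are hypothetical.

```python
import random

def f(x):
    return x ** 3

def df(x):
    return 3 * x ** 2

def smoothed_grad_exact(x, sigma):
    # E[(x + eps)^3] = x^3 + 3 x sigma^2, so d/dx of it is 3 x^2 + 3 sigma^2
    return 3 * x ** 2 + 3 * sigma ** 2

def smoothed_grad_mc(x, sigma, n, rng):
    """Pathwise Monte Carlo estimate of d/dx E[f(x + eps)], eps ~ N(0, sigma^2):
    differentiate through each sample, i.e. average f'(x + eps)."""
    return sum(df(x + rng.gauss(0.0, sigma)) for _ in range(n)) / n

x = 1.5
for sigma in (1.0, 0.1, 0.01):
    # the smoothed gradient approaches df(x) as sigma shrinks
    print(sigma, smoothed_grad_exact(x, sigma), df(x))
```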

We were unaware at the time we developed this work that Kingma and Welling and Rezende et al had developed more general stochastic backpropagation rules, allowing one to backpropagate through Gaussian distributions with finite variance, and to backpropagate to the covariance parameter as well as the mean. These backpropagation rules could allow one to learn the conditional variance of the generator, which we treated as a hyperparameter in this work. Kingma and Welling and Rezende et al use stochastic backpropagation to train variational autoencoders (VAEs). Like generative adversarial networks, variational autoencoders pair a differentiable generator network with a second neural network. Unlike generative adversarial networks, the second network in a VAE is a recognition model that performs approximate inference. GANs require differentiation through the visible units, and thus cannot model discrete data, while VAEs require differentiation through the hidden units, and thus cannot have discrete latent variables. Other VAE-like approaches exist but are less closely related to our method.

Previous work has also taken the approach of using a discriminative criterion to train a generative model. These approaches use criteria that are intractable for deep generative models. These methods are difficult even to approximate for deep models because they involve ratios of probabilities which cannot be approximated using variational approximations that lower bound the probability. Noise-contrastive estimation (NCE) involves training a generative model by learning the weights that make the model useful for discriminating data from a fixed noise distribution. Using a previously trained model as the noise distribution allows training a sequence of models of increasing quality. This can be seen as an informal competition mechanism similar in spirit to the formal competition used in the adversarial networks game. The key limitation of NCE is that its “discriminator” is defined by the ratio of the probability densities of the noise distribution and the model distribution, and thus requires the ability to evaluate and backpropagate through both densities.
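To illustrate the limitation described above, here is a simplified sketch of an NCE-style "discriminator". It assumes one noise sample per data sample and uses Gaussian densities for both the model and the noise, purely as an example. The classifier is not a separate network. It is the logistic function of $\log p_{\text{model}}(x) - \log p_{\text{noise}}(x)$, which equals $p_{\text{model}} / (p_{\text{model}} + p_{\text{noise}})$, so both densities must be evaluable at $x$.

```python
import math

def gaussian_logpdf(x, mu, sigma):
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)

def nce_discriminator(x, model_logpdf, noise_logpdf):
    """Probability that x is data rather than noise (one noise sample per data sample):
    sigmoid(log p_model(x) - log p_noise(x)). Needs both densities at x."""
    return 1.0 / (1.0 + math.exp(-(model_logpdf(x) - noise_logpdf(x))))

# Hypothetical example densities: model N(0, 1), noise N(0, 2^2)
model = lambda x: gaussian_logpdf(x, 0.0, 1.0)
noise = lambda x: gaussian_logpdf(x, 0.0, 2.0)
print(nce_discriminator(0.0, model, noise))
```

A GAN discriminator, by contrast, is a free-standing network and never needs $p_g(x)$ to be computed.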

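Summary:

Using a discriminative model to help train a generative model had not worked especially well before. NCE is one example.

NCE's loss function is relatively complex, and its performance is not as good as GAN's.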

Some previous work has used the general concept of having two neural networks compete. The most relevant work is predictability minimization. In predictability minimization, each hidden unit in a neural network is trained to be different from the output of a second network, which predicts the value of that hidden unit given the value of all of the other hidden units. This work differs from predictability minimization in three important ways:

1) In this work, the competition between the networks is the sole training criterion, and is sufficient on its own to train the network. Predictability minimization is only a regularizer that encourages the hidden units of a neural network to be statistically independent while they accomplish some other task; it is not a primary training criterion.

2) The nature of the competition is different. In predictability minimization, two networks’ outputs are compared, with one network trying to make the outputs similar and the other trying to make the outputs different. The output in question is a single scalar. In GANs, one network produces a rich, high dimensional vector that is used as the input to another network, and attempts to choose an input that the other network does not know how to process.

3) The specification of the learning process is different. Predictability minimization is described as an optimization problem with an objective function to be minimized, and learning approaches the minimum of the objective function. GANs are based on a minimax game rather than an optimization problem, and have a value function that one agent seeks to maximize and the other seeks to minimize. The game terminates at a saddle point that is a minimum with respect to one player’s strategy and a maximum with respect to the other player’s strategy.

Summary:

This part explains how GANs differ from predictability minimization.

Generative adversarial networks have sometimes been confused with the related concept of “adversarial examples”. Adversarial examples are examples found by using gradient-based optimization directly on the input to a classification network, in order to find examples that are similar to the data yet misclassified. This is different from the present work because adversarial examples are not a mechanism for training a generative model. Instead, adversarial examples are primarily an analysis tool for showing that neural networks behave in intriguing ways, often confidently classifying two images differently even though the difference between them is imperceptible to a human observer. The existence of such adversarial examples does suggest that generative adversarial network training could be inefficient, because they show that it is possible to make modern discriminative networks confidently recognize a class without emulating any of the human-perceptible attributes of that class.
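For contrast with GAN training, here is a sketch of what "gradient-based optimization directly on the input" can look like. It uses the fast gradient sign method, a technique from later work that is not part of this paper, applied to a hypothetical 100-dimensional linear classifier. Only the input moves; the classifier's weights stay fixed, and nothing generative is learned.

```python
import math

def predict(w, b, x):
    """Toy logistic classifier: probability of class 1."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def fgsm(w, b, x, y, eps):
    """One fast-gradient-sign step on the input x (weights fixed).
    For the logistic loss, d loss / d x_i = (p - y) * w_i."""
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * (1 if g > 0 else -1 if g < 0 else 0) for xi, g in zip(x, grad)]

# Hypothetical weights and input: many small per-coordinate changes add up.
w = [1.0 if i % 2 == 0 else -1.0 for i in range(100)]
x = [0.05 if i % 2 == 0 else -0.05 for i in range(100)]
x_adv = fgsm(w, 0.0, x, y=1, eps=0.1)
print(predict(w, 0.0, x), predict(w, 0.0, x_adv))
```

Each coordinate changes by only 0.1, yet the logit moves from about 5 to about -5, which flips a confident prediction.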

Summary:

This part explains how GANs differ from the similarly named concept of adversarial examples.

Adversarial nets

The adversarial modeling framework is most straightforward to apply when the models are both multilayer perceptrons. To learn the generator’s distribution $p_g$ over data $x$, we define a prior on input noise variables $p_z(z)$, then represent a mapping to data space as $G(z; \theta_g)$, where $G$ is a differentiable function represented by a multilayer perceptron with parameters $\theta_g$. We also define a second multilayer perceptron $D(x; \theta_d)$ that outputs a single scalar. $D(x)$ represents the probability that $x$ came from the data rather than $p_g$. We train $D$ to maximize the probability of assigning the correct label to both training examples and samples from $G$. We simultaneously train $G$ to minimize $\log(1 - D(G(z)))$. In other words, $D$ and $G$ play the following two-player minimax game with value function $V(G, D)$:

$$\min_G \max_D V(G, D) = \mathbb{E}_{x \sim p_{\text{data}}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))] \qquad (1)$$

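Summary:

For example, an image on a 4K display has about 8 million pixels (3840 × 2160). If each pixel is treated as a random variable, $x$ is an 8-million-dimensional random vector, and we assume the value of every pixel is governed by the distribution $p_g$. We pick random noise of a roughly suitable size and use an MLP to force-fit the distribution we want.

The downside is that the random noise is unstable: we cannot reliably find a good $z$ every time.

The discriminator outputs a probability in $[0, 1]$. Values near 0 mean the sample came from the generator, and values near 1 mean it came from the real data.

$\log(1 - D(G(z)))$: when the discriminator does well ($D(G(z)) \approx 0$ for generated samples), this term is $\log 1 = 0$. When it does poorly, the term is negative, and it approaches $-\infty$ as $D(G(z)) \to 1$.

In the objective $V(G, D)$, the first term is an expectation over samples from the real distribution. If the discriminator is perfect ($D(x) = 1$), it equals $\log 1 = 0$. The second term is an expectation over samples from the noise distribution.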
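The value function can be estimated by Monte Carlo, which makes the two terms concrete. This is a sketch under assumed toy choices: $p_{\text{data}} = \mathcal{N}(3, 1)$, $p_z = \mathcal{N}(0, 1)$ and $G(z) = z$, so $p_g = \mathcal{N}(0, 1)$. For these two Gaussians the optimal discriminator $p_{\text{data}} / (p_{\text{data}} + p_g)$ works out to $\mathrm{sigmoid}(3x - 4.5)$. A discriminator that always answers 1/2 gives exactly $-\log 4$. The helper name `estimate_value` is hypothetical.

```python
import math
import random

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def estimate_value(D, G, sample_data, sample_noise, m, rng):
    """Monte Carlo estimate of V(G, D) = E_x[log D(x)] + E_z[log(1 - D(G(z)))]."""
    real_term = sum(math.log(D(sample_data(rng))) for _ in range(m)) / m
    fake_term = sum(math.log(1.0 - D(G(sample_noise(rng)))) for _ in range(m)) / m
    return real_term + fake_term

# Toy setup (assumed): p_data = N(3, 1), p_z = N(0, 1), G(z) = z, so p_g = N(0, 1).
sample_data = lambda rng: rng.gauss(3.0, 1.0)
sample_noise = lambda rng: rng.gauss(0.0, 1.0)
G = lambda z: z

d_half = lambda x: 0.5                     # a discriminator that always guesses
d_star = lambda x: sigmoid(3.0 * x - 4.5)  # p_data / (p_data + p_g) for these Gaussians

rng = random.Random(0)
v_half = estimate_value(d_half, G, sample_data, sample_noise, 20_000, rng)
v_star = estimate_value(d_star, G, sample_data, sample_noise, 20_000, rng)
print(v_half, v_star)
```

The better discriminator attains the larger value, as expected when $D$ maximizes $V$.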

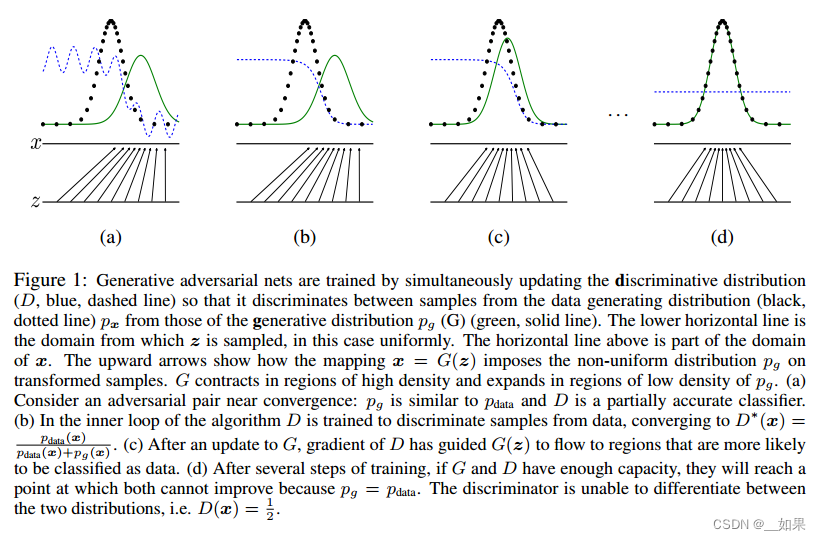

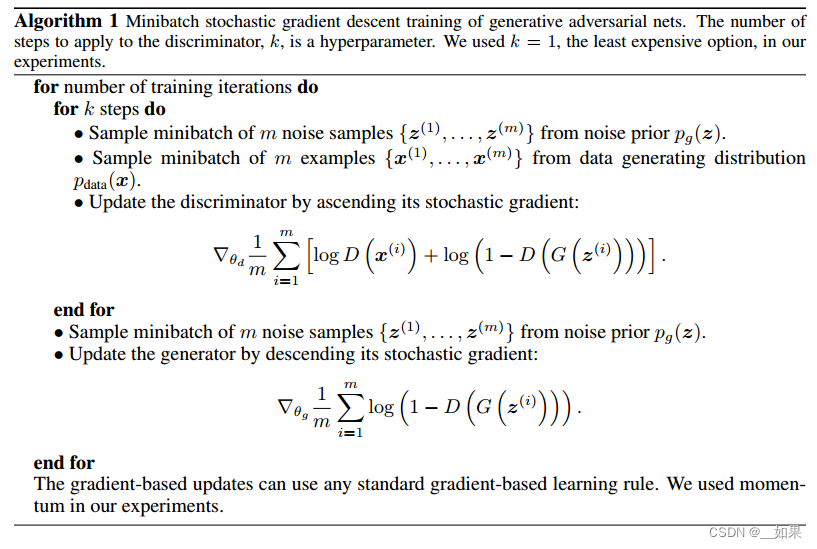

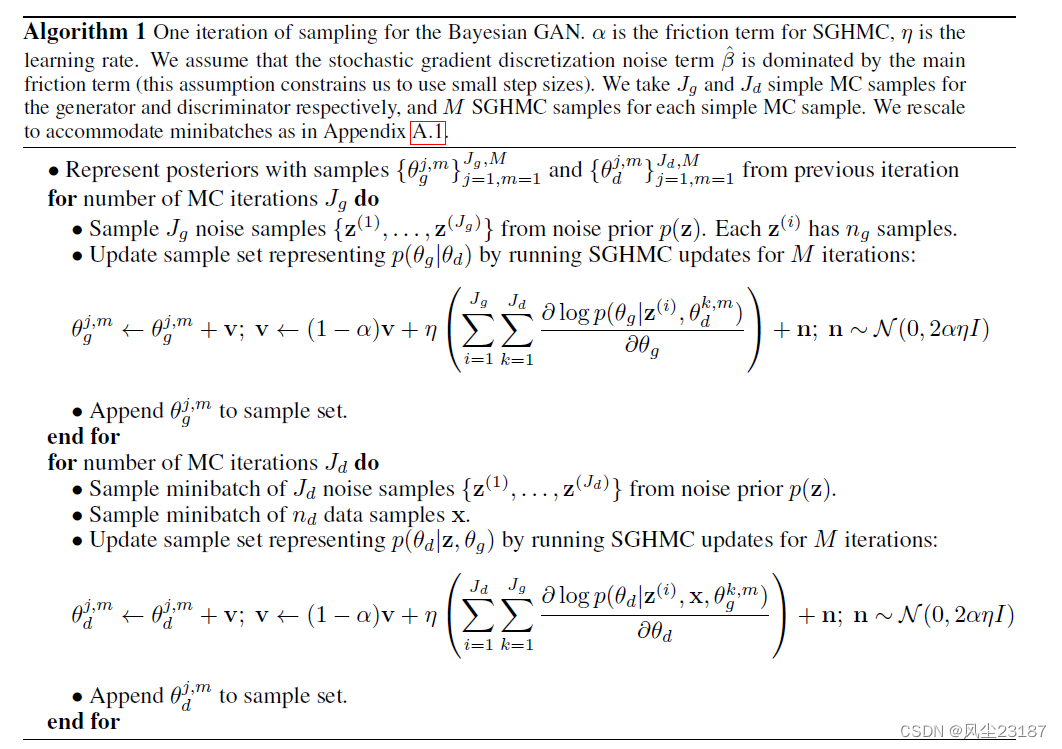

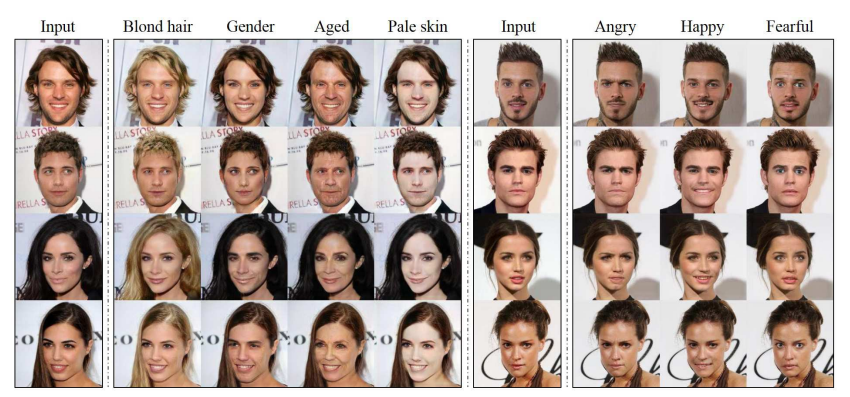

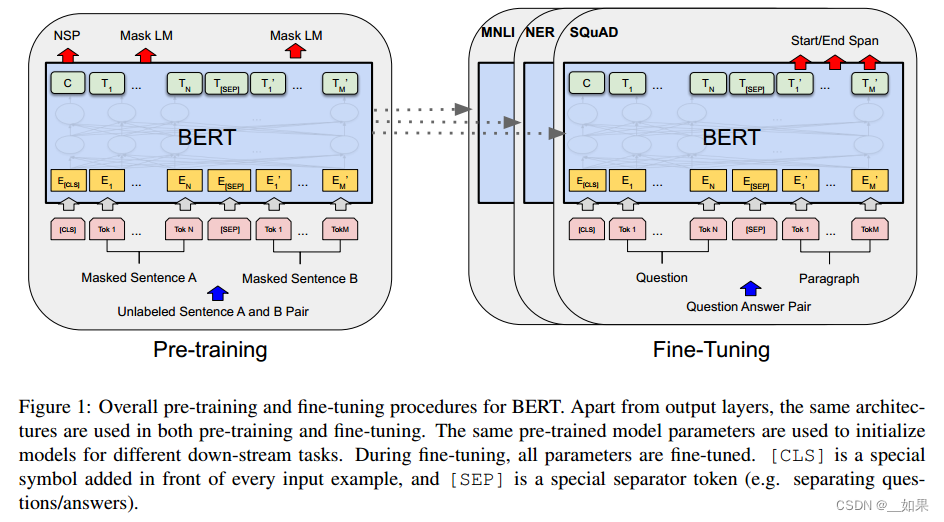

In the next section, we present a theoretical analysis of adversarial nets, essentially showing that the training criterion allows one to recover the data generating distribution as G and D are given enough capacity, i.e., in the non-parametric limit. See Figure 1 for a less formal, more pedagogical explanation of the approach. In practice, we must implement the game using an iterative, numerical approach. Optimizing D to completion in the inner loop of training is computationally prohibitive, and on finite datasets would result in overfitting. Instead, we alternate between k steps of optimizing D and one step of optimizing G. This results in D being maintained near its optimal solution, so long as G changes slowly enough. The procedure is formally presented in Algorithm 1.
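The alternation of $k$ discriminator steps and one generator step can be sketched on a deliberately tiny problem. Everything here is an assumption chosen for illustration: $p_{\text{data}} = \mathcal{N}(3, 1)$, a shift-only generator $G(z) = z + \theta$ with $z \sim \mathcal{N}(0, 1)$, a logistic discriminator $D(x) = \mathrm{sigmoid}(wx + c)$, hand-derived gradients, and plain SGD. The function name `train_toy_gan` is hypothetical, and this is not the paper's experimental setup.

```python
import math
import random

def sigmoid(t):
    # numerically stable logistic function
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(steps=2000, k=1, m=64, lr=0.05, seed=0):
    rng = random.Random(seed)
    w, c = 0.0, 0.0   # discriminator D(x) = sigmoid(w * x + c)
    theta = 0.0       # generator G(z) = z + theta, z ~ N(0, 1)
    history = []
    for _ in range(steps):
        # k steps of gradient ascent on V(G, D) for the discriminator
        for _ in range(k):
            xs = [rng.gauss(3.0, 1.0) for _ in range(m)]          # minibatch from p_data
            gs = [rng.gauss(0.0, 1.0) + theta for _ in range(m)]  # minibatch of G(z)
            gw = gc = 0.0
            for x in xs:              # d/d(w, c) of log D(x)
                d = sigmoid(w * x + c)
                gw += (1.0 - d) * x
                gc += 1.0 - d
            for g in gs:              # d/d(w, c) of log(1 - D(g))
                d = sigmoid(w * g + c)
                gw -= d * g
                gc -= d
            w += lr * gw / m
            c += lr * gc / m
        # one step of gradient descent on E_z[log(1 - D(G(z)))] for the generator;
        # d/dtheta log(1 - D(z + theta)) = -D(z + theta) * w
        zs = [rng.gauss(0.0, 1.0) for _ in range(m)]
        gtheta = sum(-sigmoid(w * (z + theta) + c) * w for z in zs) / m
        theta -= lr * gtheta
        history.append(theta)
    return history
```

Calling `train_toy_gan(steps=2000, k=1)` returns the trajectory of $\theta$, which one would expect to move toward the data mean of 3. Alternating gradient steps on a game like this can also oscillate around the equilibrium instead of settling, which is one face of the balancing problem the paper mentions.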

In practice, equation 1 may not provide sufficient gradient for G to learn well. Early in learning, when G is poor, D can reject samples with high confidence because they are clearly different from the training data. In this case, log(1 − D(G(z))) saturates. Rather than training G to minimize log(1 − D(G(z))) we can train G to maximize log D(G(z)). This objective function results in the same fixed point of the dynamics of G and D but provides much stronger gradients early in learning.
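The saturation argument can be seen directly from the gradients with respect to the discriminator's logit $a$, where $D(G(z)) = \mathrm{sigmoid}(a)$. Early in training $D$ confidently rejects fakes, so $a$ is strongly negative. The sketch below, with a hypothetical helper name, compares the two generator objectives at such a point.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def generator_grads(a):
    """Gradients w.r.t. the discriminator logit a, where D(G(z)) = sigmoid(a)."""
    d = sigmoid(a)
    saturating = -d           # d/da log(1 - sigmoid(a))  (original objective, minimized)
    non_saturating = 1.0 - d  # d/da log(sigmoid(a))      (alternative, maximized)
    return saturating, non_saturating

print(generator_grads(-10.0))  # D is confident the sample is fake
```

At $a = -10$ the original objective passes back a gradient of roughly $-4.5 \times 10^{-5}$, while $\log D(G(z))$ passes back almost 1. Both objectives push $a$ upward, so they share the same fixed point.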

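Summary:

The last term of $V(G, D)$ is problematic. Early in training $G$ is weak, so $D$ is easily trained to be very good. As a result, $\log(1 - D(G(z)))$ saturates and the gradient reaching $G$ nearly vanishes. The paper therefore suggests training $G$ to maximize $\log D(G(z))$ instead. This change has problems of its own, which later work improves on.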

Theoretical Results

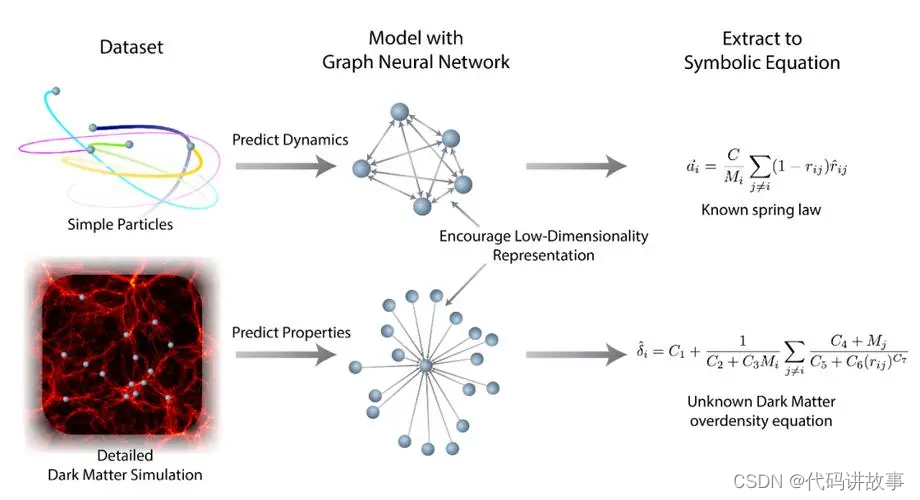

The generator $G$ implicitly defines a probability distribution $p_g$ as the distribution of the samples $G(z)$ obtained when $z \sim p_z$. Therefore, we would like Algorithm 1 to converge to a good estimator of $p_{\text{data}}$, if given enough capacity and training time. The results of this section are done in a non-parametric setting, i.e., we represent a model with infinite capacity by studying convergence in the space of probability density functions.
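"$p_g$ is the distribution of $G(z)$ when $z \sim p_z$" can be checked with a generator whose output distribution is known. In this sketch $G$ is a hand-written inverse CDF rather than a trained MLP. The uniform prior and the Exponential(rate = 2) target are assumptions, chosen because the answer is known: samples of $G(z)$ should have mean $1/\text{rate} = 0.5$.

```python
import math
import random

def G(z, rate=2.0):
    """Hand-written 'generator': maps z ~ Uniform[0, 1) to an Exponential(rate)
    sample via the inverse CDF, x = -ln(1 - z) / rate."""
    return -math.log(1.0 - z) / rate

rng = random.Random(0)
samples = [G(rng.random()) for _ in range(100_000)]  # z ~ p_z, x = G(z) ~ p_g
mean = sum(samples) / len(samples)
print(mean)
```

A GAN learns such a mapping instead of deriving it, and it never writes $p_g$ down explicitly. The distribution exists only through samples.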

We will show in section 4.1 that this minimax game has a global optimum for $p_g = p_{\text{data}}$. We will then show in section 4.2 that Algorithm 1 optimizes Eq. 1, thus obtaining the desired result.

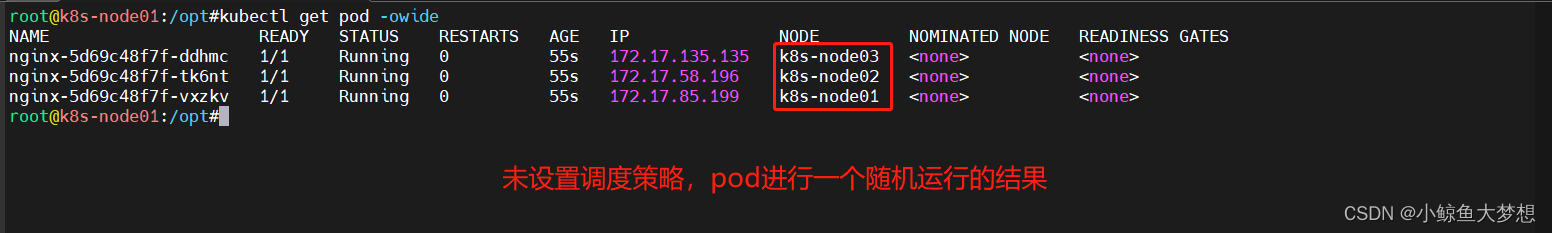

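Figure 1 shows what the GAN does in the first three stages and at the end of training. Here $x$ and $z$ are the simplest case, one-dimensional scalars. The black dotted line is the true distribution of $x$, the green solid line is the distribution $p_g$ that the generator produces from $z$, and the blue dashed line is the discriminator.

(a) The noise $z$ is sampled uniformly and mapped to a distribution similar to the data distribution. At this point the discriminator performs poorly.

(b) The discriminator is updated so that its output approaches 1 where the real data is concentrated.

(c) The generator is updated. The gradient of $D$ moves $G(z)$ toward regions more likely to be classified as data (the green peak shifts left toward the data), so it keeps fooling the discriminator.

(d) Training is complete. The mapping function, i.e. the generator, now produces a distribution that matches the true distribution, and the discriminator is constant at 1/2.

Algorithm 1: sample $m$ examples from the real data distribution and $m$ from the noise distribution. Update the discriminator first (for $k$ steps, where $k$ is a hyperparameter), then update the generator.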

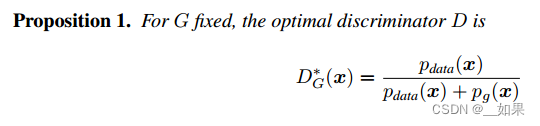

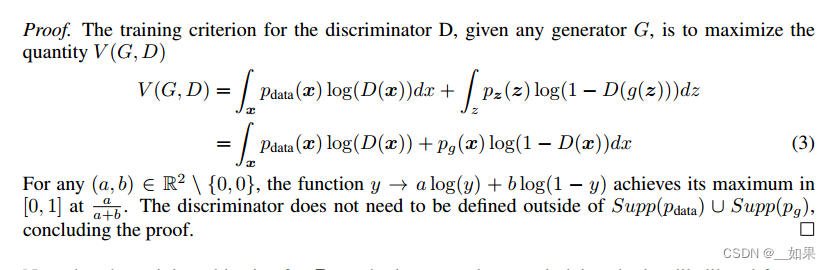

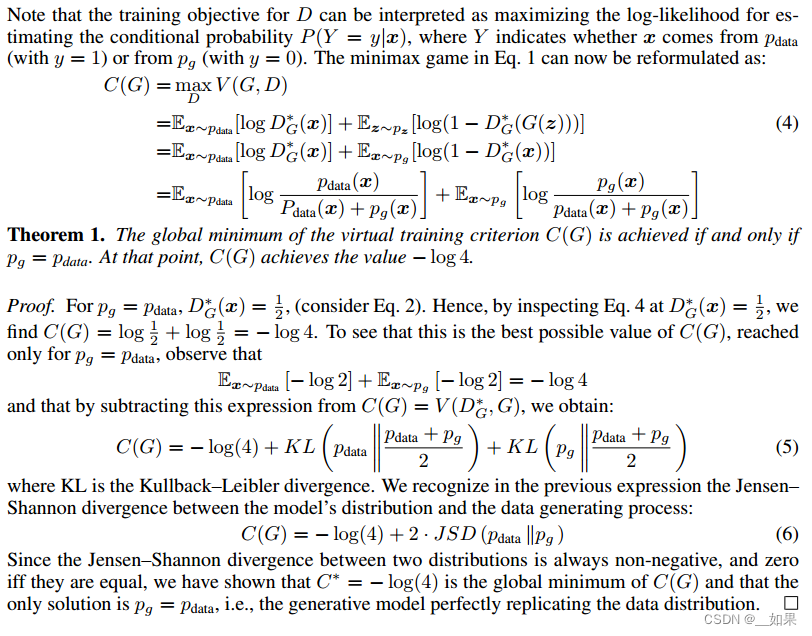

Global Optimality of $p_g = p_{\text{data}}$

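For the discriminator, the optimum reached when $p_g = p_{\text{data}}$ is 1/2. The binary classification here is very useful because it sidesteps the many statistical tests for whether two distributions are the same. If the discriminator outputs 1/2 for every sample, the two distributions cannot be told apart.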

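Proof sketch: write the expectations in the value function as integrals. Substituting $x = G(z)$ turns the second term into an expectation over $x \sim p_g$, so the two terms merge into

$$V(G, D) = \int_x p_{\text{data}}(x) \log D(x) + p_g(x) \log(1 - D(x)) \, dx.$$

Now write $a$ for $p_{\text{data}}(x)$, $b$ for $p_g(x)$, and $y$ for $D(x)$. The function $y \mapsto a \log y + b \log(1 - y)$ is concave on $(0, 1)$, so its maximum is where the derivative $a/y - b/(1-y)$ equals zero, which gives $y = a/(a+b)$. This proves that the optimal discriminator is

$$D^*_G(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}.$$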
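A brute-force check of the pointwise step, assuming example values $a = 0.3$ and $b = 0.1$. A grid search over $y \in (0, 1)$ should land on $a/(a+b) = 0.75$. The helper names are hypothetical.

```python
import math

def pointwise_objective(y, a, b):
    """a*log(y) + b*log(1 - y): the integrand of V at a single x,
    with a = p_data(x), b = p_g(x) and y = D(x)."""
    return a * math.log(y) + b * math.log(1.0 - y)

def best_y_on_grid(a, b, n=100_000):
    """Brute-force maximizer over a grid of y values strictly inside (0, 1)."""
    grid = [(i + 0.5) / n for i in range(n)]
    return max(grid, key=lambda y: pointwise_objective(y, a, b))

a, b = 0.3, 0.1
print(best_y_on_grid(a, b), a / (a + b))
```

With $a = b$ (that is, $p_g = p_{\text{data}}$ at that point) the maximizer is 1/2, which matches the claim that the optimal discriminator is 1/2 everywhere at the global optimum.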

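Substituting the optimal discriminator back into the value function gives $C(G) = \max_D V(G, D)$, which depends only on $G$. $C(G)$ reaches its minimum if and only if the distribution produced by $G$ equals the true distribution.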

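The KL divergence formula:

$$\mathrm{KL}(p \,\|\, q) = \mathbb{E}_{x \sim p}\left[\log \frac{p(x)}{q(x)}\right]$$

Rewriting the value function at the optimal discriminator, $C(G) = \max_D V(G, D)$, in terms of KL divergences:

$$C(G) = -\log 4 + \mathrm{KL}\left(p_{\text{data}} \,\middle\|\, \frac{p_{\text{data}} + p_g}{2}\right) + \mathrm{KL}\left(p_g \,\middle\|\, \frac{p_{\text{data}} + p_g}{2}\right)$$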

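Each denominator $p_{\text{data}} + p_g$ is therefore rewritten as $2 \cdot \frac{p_{\text{data}} + p_g}{2}$, and the factor of 2 is compensated outside the logarithm, contributing $-\log 2$ per term ($-\log 4$ in total). This yields two KL divergences. Since KL divergence is always $\ge 0$, minimizing means driving both KL terms to 0, i.e. $p_{\text{data}} = \frac{p_{\text{data}} + p_g}{2}$, which means $p_{\text{data}} = p_g$.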

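The same quantity can also be written with the Jensen-Shannon divergence: $\max_D V(G, D) = -\log 4 + 2 \cdot \mathrm{JSD}(p_{\text{data}} \,\|\, p_g)$. JS divergence is symmetric, and some people argue that GANs are somewhat easier to train because they use a symmetric divergence.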
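The identity between the value at the optimal discriminator and the JS divergence can be checked numerically on discrete distributions. This is a minimal sketch; the example distributions and helper names are assumptions made for the check.

```python
import math

def kl(p, q):
    """KL(p || q) in nats for discrete distributions given as lists."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def jsd(p, q):
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def value_at_optimal_d(p_data, p_g):
    """V(G, D*) with D*(x) = p_data(x) / (p_data(x) + p_g(x)) on a finite support."""
    total = 0.0
    for a, b in zip(p_data, p_g):
        if a + b == 0:
            continue  # point outside both supports contributes nothing
        d = a / (a + b)
        if a > 0:
            total += a * math.log(d)
        if b > 0:
            total += b * math.log(1.0 - d)
    return total

p = [0.1, 0.4, 0.5]
q = [0.3, 0.3, 0.4]
print(value_at_optimal_d(p, q), -math.log(4) + 2 * jsd(p, q))
```

When the two distributions are equal the JSD is 0 and the value drops to its minimum, $-\log 4$.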

Convergence of Algorithm 1

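Proposition 2: if $G$ and $D$ have enough capacity, $D$ is optimized to its optimum given $G$ at each step, and $p_g$ is updated to improve the criterion, then iterating this process makes $p_g$ converge to $p_{\text{data}}$.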

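Treat the value function as a function of $p_g$, that is, a function of a function (a functional). Instead of iterating over parameters in a high-dimensional space, we now do gradient descent in a space of functions. Because $D$ is solved to optimality at every step, we are descending on $\sup_D V(G, D)$. $V$ is convex in $p_g$, and the supremum of convex functions is still convex, so gradient descent with sufficiently small updates finds the optimum.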

Advantages and disadvantages

This new framework comes with advantages and disadvantages relative to previous modeling frameworks. The disadvantages are primarily that there is no explicit representation of $p_g(x)$, and that $D$ must be synchronized well with $G$ during training (in particular, $G$ must not be trained too much without updating $D$, in order to avoid “the Helvetica scenario” in which $G$ collapses too many values of $z$ to the same value of $x$ to have enough diversity to model $p_{\text{data}}$), much as the negative chains of a Boltzmann machine must be kept up to date between learning steps. The advantages are that Markov chains are never needed, only backprop is used to obtain gradients, no inference is needed during learning, and a wide variety of functions can be incorporated into the model. Table 2 summarizes the comparison of generative adversarial nets with other generative modeling approaches.

The aforementioned advantages are primarily computational. Adversarial models may also gain some statistical advantage from the generator network not being updated directly with data examples, but only with gradients flowing through the discriminator. This means that components of the input are not copied directly into the generator’s parameters. Another advantage of adversarial networks is that they can represent very sharp, even degenerate distributions, while methods based on Markov chains require that the distribution be somewhat blurry in order for the chains to be able to mix between modes.

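Summary:

Training is hard because G and D must be kept in balance. Also, the generator never looks at the real data directly and does not try to copy the features of the real data. It learns only through the gradients passed back by D.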
