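This note builds a three-layer neural network and trains it on the MNIST dataset without using any of the layer APIs.

Both the forward pass and the gradient updates are written with the most basic TensorFlow APIs.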

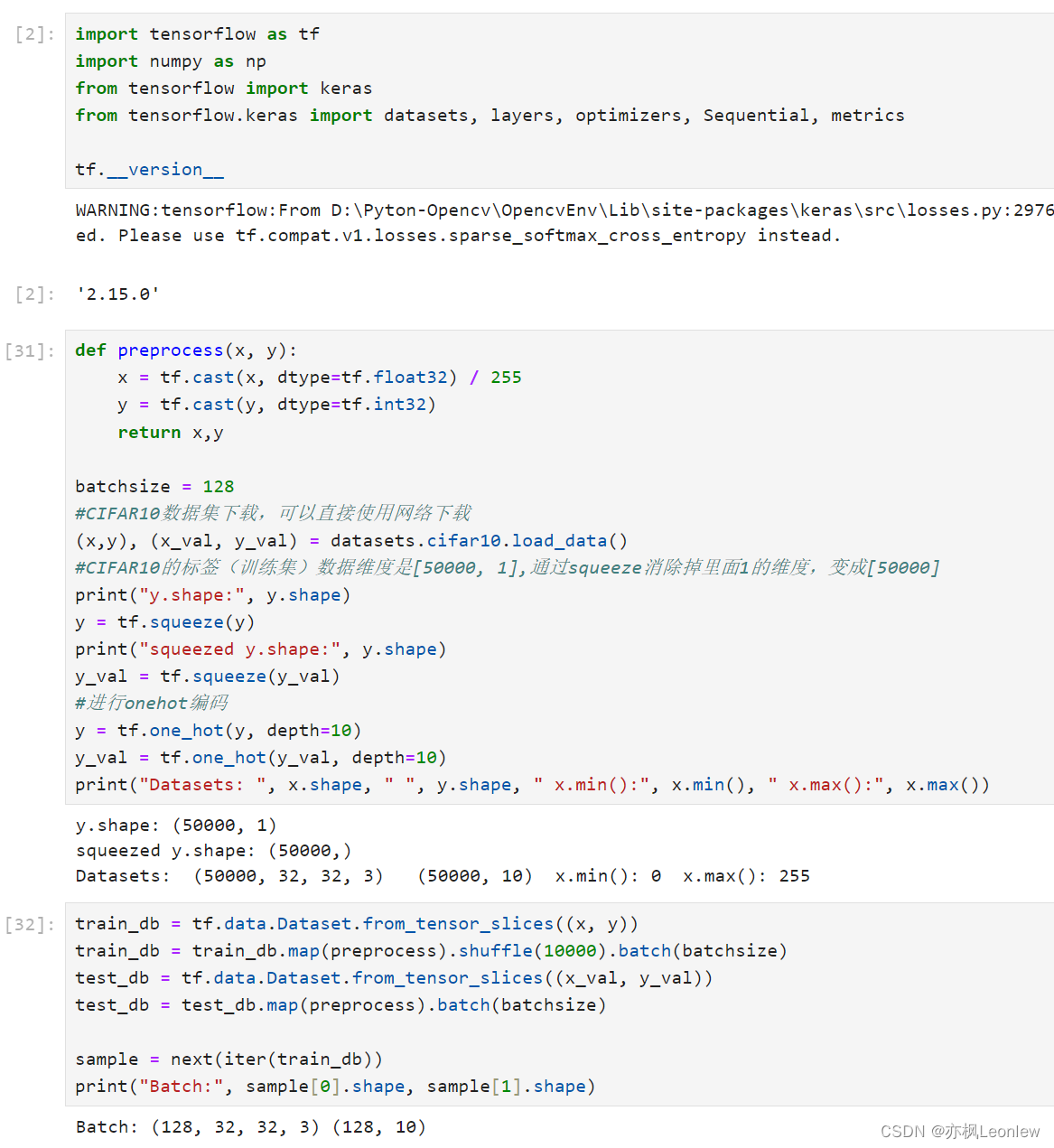

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import datasets
import numpy as np

def load_mnist():
    # Location of the local MNIST archive (mnist.npz); mind the slash direction
    path = r'./mnist.npz'
    f = np.load(path)
    x_train, y_train = f['x_train'], f['y_train']
    x_test, y_test = f['x_test'], f['y_test']
    f.close()
    return (x_train, y_train), (x_test, y_test)
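As a quick sanity check of the loader, the sketch below writes a tiny synthetic archive with the same four keys and reads it back. The function name `load_mnist_from`, its `path` parameter, the `with` block, and the random data are assumptions added for illustration; they are not part of the original note, and the real `mnist.npz` holds the full MNIST arrays.

```python
import os
import tempfile

import numpy as np


def load_mnist_from(path):
    """Hypothetical variant of load_mnist that takes the archive path as an argument."""
    # np.load on an .npz file returns an NpzFile, which works as a context
    # manager, so the file is closed even if one of the keys is missing.
    with np.load(path) as f:
        x_train, y_train = f['x_train'], f['y_train']
        x_test, y_test = f['x_test'], f['y_test']
    return (x_train, y_train), (x_test, y_test)


# Build a small stand-in archive (random data, NOT real MNIST).
rng = np.random.default_rng(0)
demo_path = os.path.join(tempfile.mkdtemp(), 'mnist_demo.npz')
np.savez(
    demo_path,
    x_train=rng.integers(0, 256, size=(6, 28, 28), dtype=np.uint8),
    y_train=rng.integers(0, 10, size=(6,), dtype=np.uint8),
    x_test=rng.integers(0, 256, size=(2, 28, 28), dtype=np.uint8),
    y_test=rng.integers(0, 10, size=(2,), dtype=np.uint8),
)

(demo_x_train, demo_y_train), (demo_x_test, demo_y_test) = load_mnist_from(demo_path)
print(demo_x_train.shape, demo_y_train.shape)
print(demo_x_test.shape, demo_y_test.shape)
```

Running the same check against the real file is a cheap way to catch a wrong path or a damaged download before any training starts.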

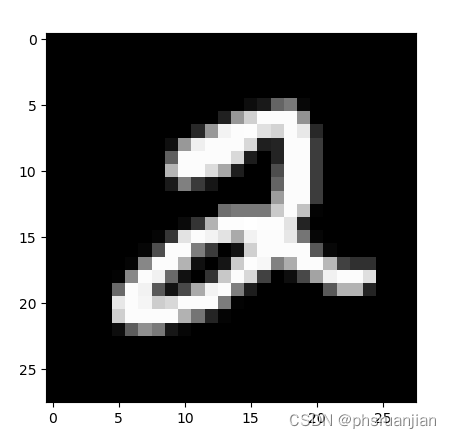

# Load the MNIST dataset
# X_train: [60000, 28, 28] images
# Y_train: [60000] labels
# MNIST dataset download: https://blog.csdn.net/charles_neil/article/details/107851880
# https://www.zhihu.com/question/56773355
(X_train, Y_train), (X_test, Y_test) = load_mnist()

# Convert to tensors
# Scale the pixel values to the range 0-1
x = tf.convert_to_tensor(X_train, dtype=tf.float32) / 255.
y = tf.convert_to_tensor(Y_train, dtype=tf.int32)
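The scaling step can be illustrated on a handful of values. This is a minimal NumPy sketch rather than the TensorFlow code itself; the array `raw` is made-up data standing in for a few MNIST pixels.

```python
import numpy as np

# Stand-in for a few raw MNIST pixels (uint8, 0..255); not real image data.
raw = np.array([[0, 51, 255]], dtype=np.uint8)

# Cast to float32 first, then divide, mirroring
# tf.convert_to_tensor(X_train, dtype=tf.float32) / 255.
scaled = raw.astype(np.float32) / 255.

print(scaled.dtype)                 # float32
print(scaled.min(), scaled.max())   # 0.0 1.0
```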

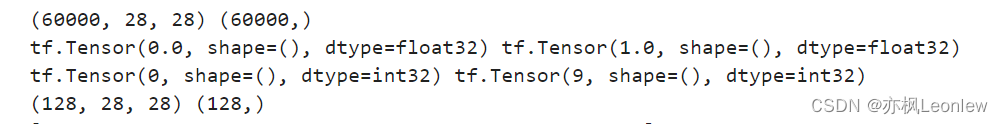

print(x.shape, y.shape)
print(tf.reduce_min(x), tf.reduce_max(x))
print(tf.reduce_min(y), tf.reduce_max(y))

# Split the dataset into batches of 128
train_db = tf.data.Dataset.from_tensor_slices((x, y)).batch(128)
train_iter = iter(train_db)
sample = next(train_iter)
print(sample[0].shape, sample[1].shape)
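The batch arithmetic is worth spelling out, because it determines how many steps one epoch takes. The sketch below is plain Python and assumes the standard 60000-image training split; `batch()` keeps the final, smaller batch unless `drop_remainder=True` is passed.

```python
import math

num_samples = 60000   # size of the MNIST training split
batch_size = 128      # value passed to .batch(128)

num_batches = math.ceil(num_samples / batch_size)
last_batch_size = num_samples - (num_batches - 1) * batch_size

# Steps at which a loop that logs every 100th step would print.
logged_steps = [s for s in range(num_batches) if s % 100 == 0]

print(num_batches)       # 469
print(last_batch_size)   # 96
print(logged_steps)      # [0, 100, 200, 300, 400]
```

Because the last batch holds 96 samples instead of 128, any code that hard-codes the batch dimension would fail on it; reshaping with `-1` avoids that.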

# Learning rate
lr = 0.1

# Three layers: [b, 784] => [b, 256] => [b, 128] => [b, 10]
w1 = tf.Variable(tf.random.truncated_normal([784, 256], stddev=0.1))
b1 = tf.Variable(tf.zeros([256]))
w2 = tf.Variable(tf.random.truncated_normal([256, 128], stddev=0.1))
b2 = tf.Variable(tf.zeros([128]))
w3 = tf.Variable(tf.random.truncated_normal([128, 10], stddev=0.1))
b3 = tf.Variable(tf.zeros([10]))
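To see how these shapes fit together, here is a minimal NumPy sketch of the same three-layer layout. It is an illustration under stated assumptions, not the note's TensorFlow code: plain normal draws replace `tf.random.truncated_normal`, and the helper `forward` and the random input batch are made up for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Layer sizes taken from the variables above: 784 -> 256 -> 128 -> 10.
sizes = [784, 256, 128, 10]

# Plain normal draws stand in for tf.random.truncated_normal here
# (assumption: no truncation is applied in this sketch).
weights = [rng.normal(0.0, 0.1, size=(m, n)).astype(np.float32)
           for m, n in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n, dtype=np.float32) for n in sizes[1:]]


def forward(x):
    """ReLU on the two hidden layers, raw scores on the output layer."""
    h = x
    for i, (w, b) in enumerate(zip(weights, biases)):
        h = h @ w + b                # the bias broadcasts over the batch axis
        if i < len(weights) - 1:
            h = np.maximum(h, 0.0)   # ReLU
    return h


batch = rng.random((128, 784), dtype=np.float32)   # fake flattened images
out = forward(batch)
num_params = sum(w.size for w in weights) + sum(b.size for b in biases)

print(out.shape)    # (128, 10)
print(num_params)   # 235146
```

The parameter count is dominated by the first weight matrix (784 x 256 = 200704 entries), which is typical for fully connected networks on flattened images.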

for epoch in range(10):
    print("[==================Epoch ", epoch, "========================]")
    for step, (x, y) in enumerate(train_db):
        # Flatten each image: [batch, 28, 28] => [batch, 784]
        x = tf.reshape(x, [-1, 28*28])
        # One-hot encode the labels
        y_onehot = tf.one_hot(y, depth=10)

        with tf.GradientTape() as tape:
            # Layer 1, input x: [batch, 784]
            # x@w + b: [batch, 784] @ [784, 256] + [256] => [batch, 256]
            h1 = x@w1 + b1
            h1 = tf.nn.relu(h1)
            # Layer 2: [batch, 256] => [batch, 128]
            h2 = h1@w2 + b2
            h2 = tf.nn.relu(h2)
            # Output layer: [batch, 128] => [batch, 10]
            out = h2@w3 + b3

            # Compute the loss
            # MSE: mean((y - out)^2)
            loss = tf.reduce_mean(tf.square(y_onehot - out))

        # Compute the gradients
        grads = tape.gradient(loss, [w1, b1, w2, b2, w3, b3])

        # Update w and b in place: w = w - lr * w_grad
        w1.assign_sub(lr * grads[0])
        b1.assign_sub(lr * grads[1])
        w2.assign_sub(lr * grads[2])
        b2.assign_sub(lr * grads[3])
        w3.assign_sub(lr * grads[4])
        b3.assign_sub(lr * grads[5])

        if step % 100 == 0:
            print("Batch:", step, "loss:", float(loss))

Output:

![[Weekly Update] (Issue 83): New Go Project - Using Gin Middleware, with Examples (10)](https://img-blog.csdnimg.cn/direct/ba5addccbb5a4aafae0dde5224c9a0e6.png#pic_center)
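The training loop reports only the loss. To make the loss concrete and to show one way the result could be scored, here is a small NumPy sketch of one-hot encoding, the element-wise MSE, and classification accuracy. The helper names `one_hot`, `mse_loss`, and `accuracy` and the three hand-written rows are assumptions for illustration; the original note does not compute accuracy.

```python
import numpy as np


def one_hot(labels, depth=10):
    """NumPy counterpart of tf.one_hot for integer class labels."""
    return np.eye(depth, dtype=np.float32)[labels]


def mse_loss(y_onehot, out):
    """Mean of the squared differences over every element (batch * classes)."""
    return float(np.mean(np.square(y_onehot - out)))


def accuracy(out, labels):
    """Fraction of rows whose largest score sits at the true label's index."""
    return float(np.mean(np.argmax(out, axis=1) == labels))


labels = np.array([3, 0, 7])
out = np.zeros((3, 10), dtype=np.float32)
out[0, 3] = 1.0   # correct class, exact target value
out[1, 0] = 0.5   # correct class, but only half the target value
out[2, 2] = 1.0   # wrong class

print(mse_loss(one_hot(labels), out))   # about 0.075
print(accuracy(out, labels))            # about 0.667
```

Note that the mean runs over all 30 elements (3 samples times 10 classes), not over the 3 samples. The same holds for `tf.reduce_mean(tf.square(y_onehot - out))`, so the gradients are ten times smaller than they would be with a per-sample mean, which is one reason a learning rate as large as 0.1 can still behave well here.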