Overview

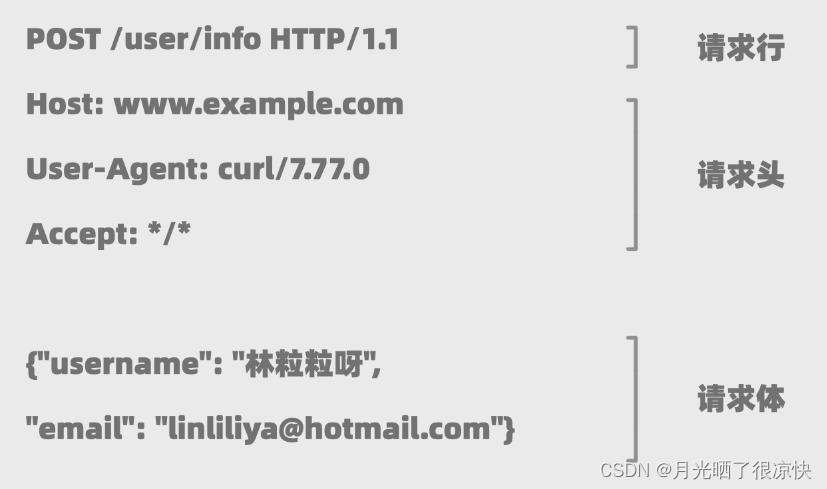

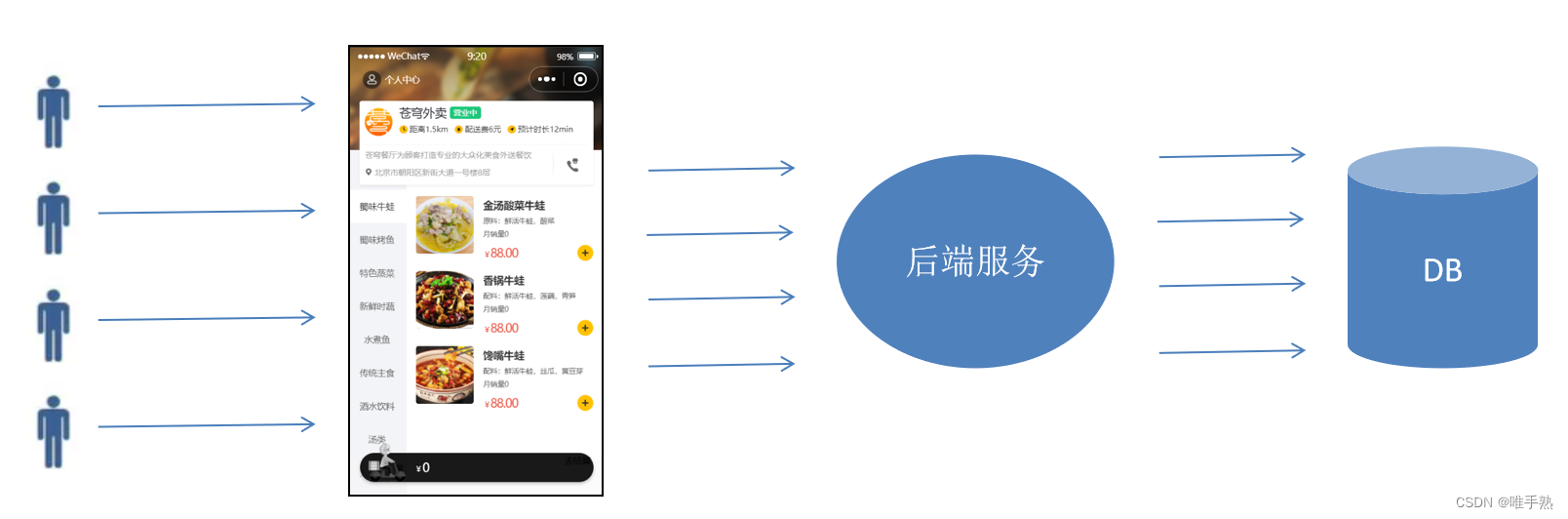

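- An introduction to how request state works

- The characteristics of requests on sites that require login

- The differences between requests before and after login

- How to build a sound login crawler step by step, starting from API analysis

- Shortcuts for handling login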

Case Studies

Case 1

https://login2.scrape.center/

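By default, requests follows the redirect issued after login, so the cookie set on the pre-redirect response cannot be read from the final response. Passing allow_redirects=False keeps the pre-redirect response and its cookie.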
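The effect can be reproduced offline. The sketch below is an illustration only: it starts a throwaway local server (the hypothetical `FakeLoginHandler`, not the real site) whose POST /login answers 302 with a Set-Cookie header, then submits the same form twice. It uses the standard library instead of requests so that it runs without network access: `urllib` follows the redirect automatically, while `http.client` never does. The cookie value `demo123` is made up.

```python
import http.client
import threading
import urllib.parse
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class FakeLoginHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for a login endpoint (not the real site)."""

    def do_POST(self):
        # Drain the form body, then answer like a typical login endpoint:
        # set a session cookie and redirect to the home page.
        self.rfile.read(int(self.headers.get("Content-Length", 0)))
        self.send_response(302)
        self.send_header("Set-Cookie", "sessionid=demo123; Path=/; HttpOnly")
        self.send_header("Location", "/")
        self.send_header("Content-Length", "0")
        self.end_headers()

    def do_GET(self):
        body = b"home"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet


def run_demo():
    server = HTTPServer(("127.0.0.1", 0), FakeLoginHandler)  # port 0: pick a free port
    port = server.server_address[1]
    threading.Thread(target=server.serve_forever, daemon=True).start()
    form = urllib.parse.urlencode({"username": "admin", "password": "admin"}).encode()
    try:
        # 1) Redirect followed automatically: only the final 200 response is visible.
        #    An empty ProxyHandler keeps any system proxy out of the local request.
        opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
        with opener.open(f"http://127.0.0.1:{port}/login", data=form) as final:
            followed = (final.status, final.headers.get("Set-Cookie"))

        # 2) Redirect not followed: the 302 response and its Set-Cookie header are visible.
        conn = http.client.HTTPConnection("127.0.0.1", port)
        conn.request("POST", "/login", body=form,
                     headers={"Content-Type": "application/x-www-form-urlencoded"})
        raw = conn.getresponse()
        raw.read()
        not_followed = (raw.status, raw.getheader("Set-Cookie"))
        conn.close()
    finally:
        server.shutdown()
        server.server_close()
    return followed, not_followed


followed, not_followed = run_demo()
print("redirect followed:    ", followed)
print("redirect not followed:", not_followed)
```

The first tuple carries no Set-Cookie value because that header belongs to the 302 response, which the client has already consumed by the time the final page is returned.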

Case 1 crawler source code

# coding=utf-8

import requests

headers = {

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',

'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8',

'Cache-Control': 'no-cache',

'Connection': 'keep-alive',

'Content-Type': 'application/x-www-form-urlencoded',

'Origin': 'https://login2.scrape.center',

'Pragma': 'no-cache',

'Referer': 'https://login2.scrape.center/login',

'Sec-Fetch-Dest': 'document',

'Sec-Fetch-Mode': 'navigate',

'Sec-Fetch-Site': 'same-origin',

'Sec-Fetch-User': '?1',

'Upgrade-Insecure-Requests': '1',

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',

'sec-ch-ua': '"Not_A Brand";v="8", "Chromium";v="120", "Google Chrome";v="120"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Windows"',

}

data = {

'username': 'admin',

'password': 'admin',

}

response = requests.post('https://login2.scrape.center/login', headers=headers, data=data, allow_redirects=False)

status = response.status_code

################### Read the cookies directly from the response's cookies attribute ################

logined_cookies = response.cookies

print(status)

print(logined_cookies)

# Convert the cookies to a dict

print(dict(logined_cookies)) # {'sessionid': 'h1d41qkv8qzrlhk0ykfzx54g3ph0od6j'}

################# Read the cookie from the response headers ###################

headers_resp = dict(response.headers)

cookies = headers_resp.get('Set-Cookie') # the raw Set-Cookie string

print(cookies) # sessionid=h1d41qkv8qzrlhk0ykfzx54g3ph0od6j; expires=Wed, 24 Jan 2024 23:40:31 GMT; HttpOnly; Max-Age=1209600; Path=/; SameSite=Lax
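The raw Set-Cookie string printed above mixes the cookie itself with its attributes. As a minimal sketch, the standard library's `http.cookies.SimpleCookie` can split it apart; the helper names `parse_set_cookie` and `cookie_attributes` are hypothetical, and the sketch assumes the header holds a single cookie, as in the output above.

```python
from http.cookies import SimpleCookie


def parse_set_cookie(header_value):
    """Return {cookie name: cookie value} parsed from one Set-Cookie string."""
    jar = SimpleCookie()
    jar.load(header_value)
    return {name: morsel.value for name, morsel in jar.items()}


def cookie_attributes(header_value, name):
    """Return the attributes (Path, Max-Age, ...) that are set for the named cookie."""
    jar = SimpleCookie()
    jar.load(header_value)
    return {key: value for key, value in jar[name].items() if value}


raw = ("sessionid=h1d41qkv8qzrlhk0ykfzx54g3ph0od6j; "
       "expires=Wed, 24 Jan 2024 23:40:31 GMT; HttpOnly; "
       "Max-Age=1209600; Path=/; SameSite=Lax")

print(parse_set_cookie(raw))
print(cookie_attributes(raw, "sessionid"))
```

The dict returned by `parse_set_cookie` has the same shape as `dict(response.cookies)`, so it can be passed as the `cookies=` argument of a later request.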

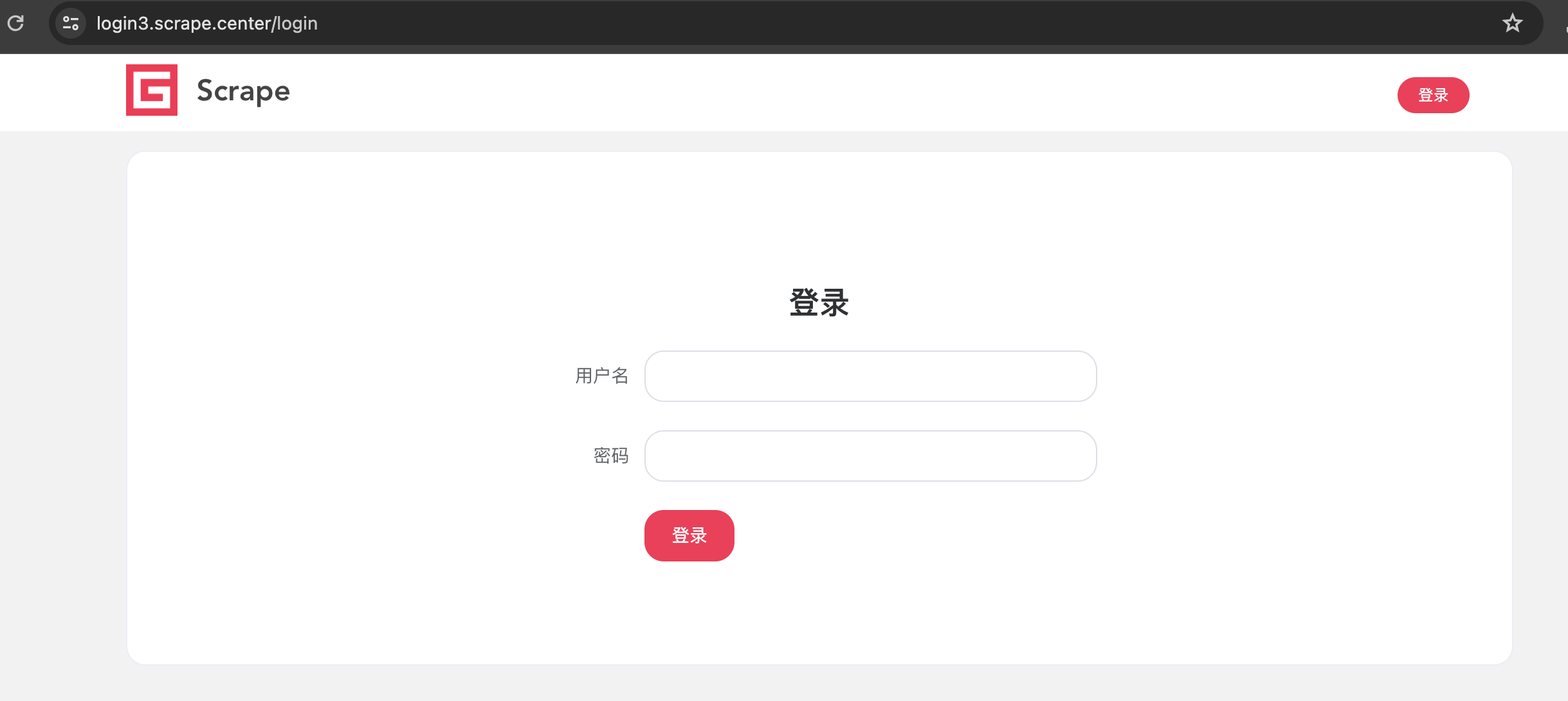
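Once the login cookie is available, later requests have to send it back. One way to sketch this, under assumptions, is to pass a requests.Session-like object around and let it carry the cookie jar. The helper names `login` and `fetch_after_login` are hypothetical, and treating "302 plus at least one stored cookie" as a successful login is an assumption about the site, not something the source confirms.

```python
def login(session, login_url, username, password):
    """POST the credentials through a requests.Session-like object.

    Returns True when the server answers with a redirect and the session
    now holds at least one cookie (assumed success criterion).
    """
    resp = session.post(
        login_url,
        data={"username": username, "password": password},
        allow_redirects=False,  # keep the pre-redirect response visible
    )
    return resp.status_code == 302 and len(session.cookies) > 0


def fetch_after_login(session, page_url):
    """Request a page with whatever cookies the session has collected."""
    resp = session.get(page_url)
    return resp.status_code, resp.text
```

With the real library this might be called as `login(s, 'https://login2.scrape.center/login', 'admin', 'admin')` where `s = requests.Session()`, followed by `fetch_after_login(s, 'https://login2.scrape.center/')`. Taking the session as a parameter keeps both helpers easy to exercise with a stub.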

Case 2

https://login3.scrape.center/login

Case 3

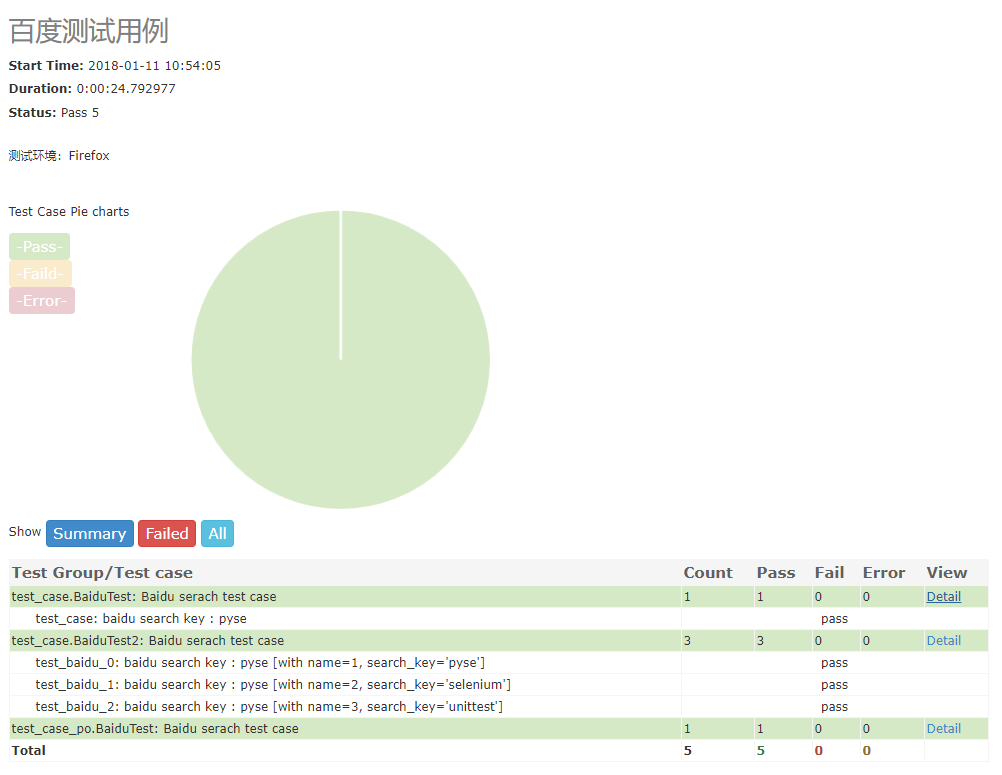

Faxin (faxin.cn) crawler

Login script

# coding=utf-8

import time

import requests

cookies = {

'ASP.NET_SessionId': 'at0wmkahm3o1yf2uzdcyo24z',

'insert_cookie': '89314150',

'showUpdate': '2024-01-05',

'Hm_lvt_a317640b4aeca83b20c90d410335b70f': '1704930439',

'clx': 'n',

'sid': 'at0wmkahm3o1yf2uzdcyo24z',

'isAutoLogin': 'off',

'Hm_lpvt_a317640b4aeca83b20c90d410335b70f': str(int(time.time())),

}

headers = {

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',

'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8',

'Cache-Control': 'no-cache',

'Connection': 'keep-alive',

'Content-Type': 'application/x-www-form-urlencoded',

# 'Cookie': 'ASP.NET_SessionId=at0wmkahm3o1yf2uzdcyo24z; insert_cookie=89314150; showUpdate=2024-01-05; Hm_lvt_a317640b4aeca83b20c90d410335b70f=1704930439; clx=n; sid=at0wmkahm3o1yf2uzdcyo24z; isAutoLogin=off; Hm_lpvt_a317640b4aeca83b20c90d410335b70f=1704930535',

'Origin': 'https://www.faxin.cn',

'Pragma': 'no-cache',

'Referer': 'https://www.faxin.cn/login.aspx?url=/lib/zyfl/zyflcontent.aspx%3Fgid=A341404%26libid=010101',

'Sec-Fetch-Dest': 'document',

'Sec-Fetch-Mode': 'navigate',

'Sec-Fetch-Site': 'same-origin',

'Sec-Fetch-User': '?1',

'Upgrade-Insecure-Requests': '1',

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',

'sec-ch-ua': '"Not_A Brand";v="8", "Chromium";v="120", "Google Chrome";v="120"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Windows"',

}

data = {

'__VIEWSTATE': '/wEPDwUJNzUzMzc2OTcyZBgBBR5fX0NvbnRyb2xzUmVxdWlyZVBvc3RCYWNrS2V5X18WAgUKaXNSZW1lbWJlcgULaXNBdXRvTG9naW7CqpQN/iu5+xaD2O1P5o5lGgo7gA==',

'__VIEWSTATEGENERATOR': 'C2EE9ABB',

'WebUCHead_Special1$hiddIsLogin': '0',

'WebUCSearchNoAdvSearch1$lib': '',

'keyword': '',

'user_name': '', # your username

'user_password': '', # your password

'isLogout': '0',

}

response = requests.post(

'https://www.faxin.cn/login.aspx?url=%2flib%2fzyfl%2fzyflcontent.aspx%3fgid%3dA341404%26libid%3d010101',

cookies=cookies,

headers=headers,

data=data,

allow_redirects=False

)

status = response.status_code

################### Read the cookies directly from the response's cookies attribute ################

logined_cookies = response.cookies

print(status)

print(logined_cookies)

# Convert the cookies to a dict

print(dict(logined_cookies))
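The script above hardcodes `__VIEWSTATE` and `__VIEWSTATEGENERATOR`. These are hidden form fields that ASP.NET Web Forms embeds in the page, so a copied value may stop working once the page changes. A possible alternative, sketched here with the standard library's `html.parser`, is to read every hidden input from the login page HTML before posting. The names `HiddenInputCollector`, `extract_hidden_fields`, and `build_login_form` are hypothetical, and the sample HTML is invented for illustration; the real page markup may differ.

```python
from html.parser import HTMLParser


class HiddenInputCollector(HTMLParser):
    """Collect the name/value pairs of every <input type="hidden"> in a page."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        attr = dict(attrs)
        if (attr.get("type") or "").lower() == "hidden" and attr.get("name"):
            self.fields[attr["name"]] = attr.get("value") or ""


def extract_hidden_fields(html):
    """Return a dict of all hidden form fields found in the HTML text."""
    parser = HiddenInputCollector()
    parser.feed(html)
    parser.close()
    return parser.fields


def build_login_form(html, username, password):
    """Merge the page's hidden fields with the credential fields used in the script."""
    form = extract_hidden_fields(html)
    form.update({"user_name": username, "user_password": password})
    return form


# Invented sample markup; the values are placeholders, not real tokens.
sample_html = """
<form method="post" action="login.aspx">
  <input type="hidden" name="__VIEWSTATE" value="placeholder-state" />
  <input type="hidden" name="__VIEWSTATEGENERATOR" value="C2EE9ABB" />
  <input type="text" name="user_name" />
  <input type="password" name="user_password" />
</form>
"""

print(build_login_form(sample_html, "alice", "secret"))
```

In a real run the HTML would come from a GET request to the login page made with the same cookies, and the resulting dict would replace the hardcoded `data` in the POST.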