A record of my struggle to change some code:

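I read a few papers on deep models that work in the DCT frequency domain, especially FcaNet (2021), a DCT-based attention model. So, of course, I wanted to try feeding my model grouped DCT coefficients instead of its original input, and that is where the saga below began.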
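The post never shows its exact grouping, so this is a minimal sketch of one possible arrangement, offered as an assumption. Each channel of a [B, C, H, W] image is split into non-overlapping k×k blocks. Every block gets an orthonormal 2D DCT, computed as a pair of matrix products. Each frequency (u, v) is then stacked as its own channel. `dct_matrix` and `blockwise_dct_grouped` are hypothetical helpers, and H and W are assumed to be divisible by k.

```python
import math

import torch


def dct_matrix(k: int) -> torch.Tensor:
    """Orthonormal DCT-II matrix: C[u, n] = sqrt(2/k) * a(u) * cos((2n+1) u pi / (2k)), a(0) = 1/sqrt(2)."""
    n = torch.arange(k, dtype=torch.float64)
    c = math.sqrt(2 / k) * torch.cos((2 * n[None, :] + 1) * n[:, None] * math.pi / (2 * k))
    c[0] /= math.sqrt(2)
    return c.float()


def blockwise_dct_grouped(image: torch.Tensor, k: int) -> torch.Tensor:
    """[B, C, H, W] -> [B, C*k*k, H//k, W//k]; channel c*k*k + u*k + v holds frequency (u, v)."""
    b, ch, h, w = image.shape
    assert h % k == 0 and w % k == 0, "H and W must be divisible by k"
    # [B, C, H/k, k, W/k, k] -> [B, C, H/k, W/k, k, k]: one k x k block per (i, j)
    blocks = image.reshape(b, ch, h // k, k, w // k, k).permute(0, 1, 2, 4, 3, 5)
    c = dct_matrix(k).to(image)  # match dtype and device of the input
    coeffs = c @ blocks @ c.T  # 2D DCT of every block: C X C^T
    # Put the two frequency axes next to the channel axis, then merge them into channels
    coeffs = coeffs.permute(0, 1, 4, 5, 2, 3)  # [B, C, k, k, H/k, W/k]
    return coeffs.reshape(b, ch * k * k, h // k, w // k)
```

With k = 8 and a 3-channel image, this gives 192 frequency channels at 1/8 of the spatial resolution. A CNN can consume these like any other feature map.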

First attempt:

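I called the library functions directly and immediately hit a problem. These functions (SciPy's `dct`/`idct`) operate on NumPy arrays, so the data has to be processed on the CPU.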
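SciPy's 2D orthonormal DCT is two 1D `dct` calls with `norm='ortho'`, one along axis 2 and one along axis 3. That is the same as two matrix products, C X Cᵀ, where C is the orthonormal DCT-II matrix. The sketch below checks this in NumPy, assuming SciPy is installed. The point is that matrix products are exactly the kind of operation PyTorch can run on the GPU while tracking gradients. `dct_matrix` is a hypothetical helper.

```python
import numpy as np
from scipy.fftpack import dct, idct


def dct_matrix(k):
    """Orthonormal DCT-II matrix: C[u, n] = sqrt(2/k) * a(u) * cos((2n+1) u pi / (2k))."""
    n = np.arange(k)
    c = np.sqrt(2.0 / k) * np.cos((2 * n[None, :] + 1) * n[:, None] * np.pi / (2 * k))
    c[0] /= np.sqrt(2.0)  # a(0) = 1/sqrt(2)
    return c


rng = np.random.default_rng(0)
block = rng.random((2, 3, 16, 16))  # [B, C, k, k]

# SciPy: 1D orthonormal DCT along axis 2, then along axis 3
ref = dct(dct(block, axis=2, norm='ortho'), axis=3, norm='ortho')

# The same transform written as matrix products on the last two axes
c = dct_matrix(16)
mat = c @ block @ c.T

print(np.allclose(ref, mat))
# C is orthogonal, so the inverse DCT uses the transpose: X = C^T Y C
print(np.allclose(idct(idct(ref, axis=2, norm='ortho'), axis=3, norm='ortho'), c.T @ mat @ c))
```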

```python
from scipy.fftpack import dct, idct

...

# 2D orthonormal DCT over the last two axes of a NumPy array
dct_block = dct(dct(block, axis=2, norm='ortho'), axis=3, norm='ortho')  # [B,C,k,k]

...

# 2D inverse DCT
block = idct(idct(dct_block, axis=2, norm='ortho'), axis=3, norm='ortho')  # [B,C,k,k]
```

Second attempt:

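All right, so I moved the data to the CPU, processed it there, and moved it back to the GPU. Then a new problem appeared. When `block` moves from the GPU to the CPU and the torch tensor is converted to a NumPy array, its gradient cannot be preserved: PyTorch refuses to call `.numpy()` on a tensor that requires grad.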

```python
# Split the image into blocks
...

# Move the block to the CPU
block_cpu = block.cpu()  # [B,C,k,k]

# Apply the DCT to the block on the CPU
dct_block_np = dct(dct(block_cpu.numpy(), axis=2, norm='ortho'), axis=3, norm='ortho')  # [B,C,k,k]

# Move the result back to the GPU
dct_block = torch.from_numpy(dct_block_np).to(image.device)  # [B,C,k,k]

...

# Move the block to the CPU
dct_block_cpu = dct_block.cpu()

# Apply the inverse DCT to the block on the CPU
block_np = idct(idct(dct_block_cpu.numpy(), axis=2, norm='ortho'), axis=3, norm='ortho')

# Move the result back to the GPU
block = torch.from_numpy(block_np).to(dct_block.device)  # [B,C,k,k]
```
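Here is a minimal reproduction of this error. It assumes a block that is part of the autograd graph, represented here by a leaf tensor created with `requires_grad=True`.

```python
import torch

block = torch.rand(2, 3, 8, 8, requires_grad=True)  # stands in for a block that carries gradients

error_message = None
try:
    block.cpu().numpy()  # NumPy arrays cannot carry autograd history, so PyTorch refuses
except RuntimeError as err:
    error_message = str(err)

print(error_message)
```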

Third attempt:

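Following the hint in the error message, I changed `.numpy()` to `.detach().numpy()`, which discards the gradient information the torch tensor carries. Ha, with that change the gradient is simply lost, so the model can no longer backpropagate and update its weights during training.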
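This small sketch shows why the gradient disappears. A hypothetical 1×1 convolution stands in for the learnable layers between the DCT and the IDCT. Anything rebuilt from the NumPy round trip is a fresh tensor with no history, so backpropagation cannot reach `conv.weight`.

```python
import torch
import torch.nn as nn

conv = nn.Conv2d(3, 3, kernel_size=1)  # hypothetical stand-in for the learnable layers
x = torch.rand(2, 3, 8, 8)

feat = conv(x)  # part of the autograd graph
# Round trip through NumPy, as in the third attempt (the IDCT itself is omitted)
roundtrip = torch.from_numpy(feat.detach().cpu().numpy())

print(feat.requires_grad, feat.grad_fn is not None)
print(roundtrip.requires_grad, roundtrip.grad_fn)

loss = roundtrip.mean()
# loss does not require grad: loss.backward() would raise, and conv.weight.grad stays None
print(loss.requires_grad, conv.weight.grad)
```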

```python
# Split the image into blocks
...

# Move the block to the CPU
block_cpu = block.cpu()  # [B,C,k,k]

# Apply the DCT to the block on the CPU
dct_block_np = dct(dct(block_cpu.numpy(), axis=2, norm='ortho'), axis=3, norm='ortho')  # [B,C,k,k]

# Move the result back to the GPU
dct_block = torch.from_numpy(dct_block_np).to(image.device)  # [B,C,k,k]

...

# Move the block to the CPU
dct_block_cpu = dct_block.cpu()

# Apply the inverse DCT on the CPU; detach() drops the autograd history here
block_np = idct(idct(dct_block_cpu.detach().numpy(), axis=2, norm='ortho'), axis=3, norm='ortho')

# Move the result back to the GPU (this tensor no longer has a grad_fn)
block = torch.from_numpy(block_np).to(dct_block.device)  # [B,C,k,k]
```

Fourth attempt:

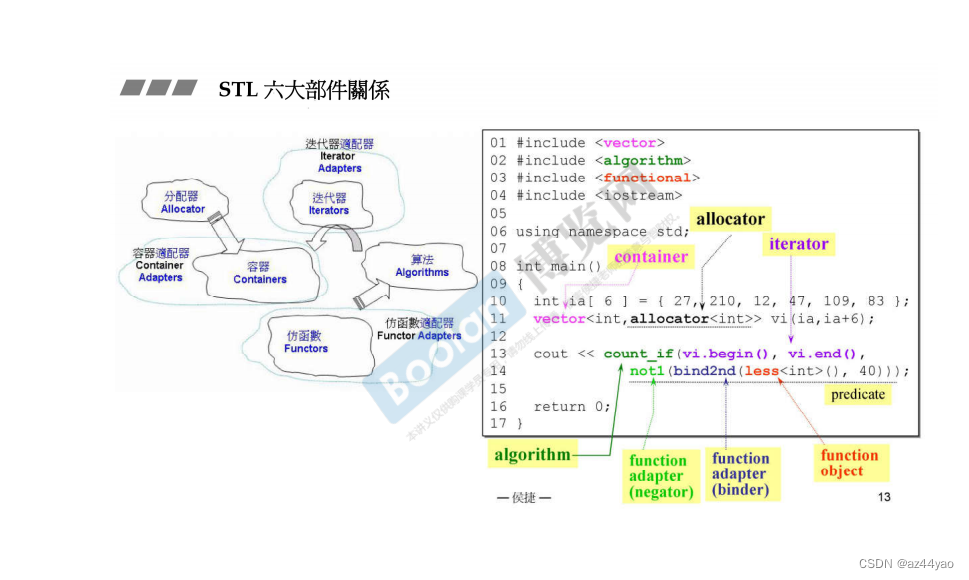

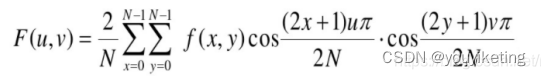

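The CPU library functions don't work here, so I'll write (well, borrow) my own DCT. The 2D DCT just expresses a k×k image block as a weighted sum of k×k cosine basis functions, and the DCT coefficients are the weights: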
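For reference, here is the standard orthonormal 2D DCT-II of a k×k block f(x, y) and its inverse. The coefficients F(u, v) are the weights of the cosine basis images, with α(0) = 1/√2 and α(u) = 1 for u ≥ 1:

$$
F(u,v) = \frac{2}{k}\,\alpha(u)\,\alpha(v)\sum_{x=0}^{k-1}\sum_{y=0}^{k-1} f(x,y)\,\cos\frac{(2x+1)u\pi}{2k}\,\cos\frac{(2y+1)v\pi}{2k}
$$

$$
f(x,y) = \frac{2}{k}\sum_{u=0}^{k-1}\sum_{v=0}^{k-1} \alpha(u)\,\alpha(v)\,F(u,v)\,\cos\frac{(2x+1)u\pi}{2k}\,\cos\frac{(2y+1)v\pi}{2k}
$$

With k = 8, the prefactor 2/k is 1/4 and the cosine denominator 2k is 16. These are the `0.25` and `np.pi / 16` constants in the 8×8 code.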

Here is someone else's implementation of the 8×8 DCT and IDCT:

```python
import itertools

import numpy as np
import torch
import torch.nn as nn


class DCT8X8(nn.Module):
    """Discrete Cosine Transformation

    Input:
        image(tensor): ... x 8 x 8 (the last two dims are one 8x8 block)
    Output:
        dcp(tensor): ... x 8 x 8
    """

    def __init__(self):
        super(DCT8X8, self).__init__()
        # tensor[x, y, u, v] = cos((2x+1)u*pi/16) * cos((2y+1)v*pi/16)
        tensor = np.zeros((8, 8, 8, 8), dtype=np.float32)
        for x, y, u, v in itertools.product(range(8), repeat=4):
            tensor[x, y, u, v] = np.cos((2 * x + 1) * u * np.pi / 16) * np.cos((2 * y + 1) * v * np.pi / 16)
        alpha = np.array([1. / np.sqrt(2)] + [1] * 7)
        # Fixed transform: buffers follow .to(device) but are not updated by the optimizer
        self.register_buffer('tensor', torch.from_numpy(tensor).float())
        self.register_buffer('scale', torch.from_numpy(np.outer(alpha, alpha) * 0.25).float())

    def forward(self, image):
        image = image - 128  # JPEG-style level shift for pixels in [0, 255]
        # Contract the last two dims (x, y) of image with the first two dims of tensor
        return self.scale * torch.tensordot(image, self.tensor, dims=2)


class IDCT8X8(nn.Module):
    """Inverse discrete Cosine Transformation

    Input:
        dcp(tensor): ... x 8 x 8
    Output:
        image(tensor): ... x 8 x 8
    """

    def __init__(self):
        super(IDCT8X8, self).__init__()
        alpha = np.array([1. / np.sqrt(2)] + [1] * 7)
        self.register_buffer('alpha', torch.from_numpy(np.outer(alpha, alpha)).float())
        # Here x, y index frequencies and u, v index pixels
        tensor = np.zeros((8, 8, 8, 8), dtype=np.float32)
        for x, y, u, v in itertools.product(range(8), repeat=4):
            tensor[x, y, u, v] = np.cos((2 * u + 1) * x * np.pi / 16) * np.cos((2 * v + 1) * y * np.pi / 16)
        self.register_buffer('tensor', torch.from_numpy(tensor).float())

    def forward(self, image):
        image = image * self.alpha
        return 0.25 * torch.tensordot(image, self.tensor, dims=2) + 128
```

Based on the code above, I adapted the DCT and IDCT to an arbitrary block_size:

```python
class DCTCustom(nn.Module):
    """Customizable Discrete Cosine Transformation

    Input:
        image(tensor): ... x input_size x input_size
    Output:
        dct(tensor): ... x input_size x input_size
    """

    def __init__(self, input_size=8):
        super(DCTCustom, self).__init__()
        self.input_size = input_size
        tensor = np.zeros((input_size, input_size, input_size, input_size), dtype=np.float32)
        for x, y, u, v in itertools.product(range(input_size), repeat=4):
            tensor[x, y, u, v] = np.cos((2 * x + 1) * u * np.pi / (2 * input_size)) * np.cos((2 * y + 1) * v * np.pi / (2 * input_size))
        alpha = np.array([1. / np.sqrt(2)] + [1] * (input_size - 1))
        self.register_buffer('tensor', torch.from_numpy(tensor).float())
        self.register_buffer('scale', torch.from_numpy(np.outer(alpha, alpha) * 0.25).float())

    def forward(self, image):
        image = image - 128
        return self.scale * torch.tensordot(image, self.tensor, dims=2)


class IDCTCustom(nn.Module):
    """Inverse discrete Cosine Transformation

    Input:
        dcp(tensor): ... x block_size x block_size
    Output:
        image(tensor): ... x block_size x block_size
    """

    def __init__(self, block_size=8):
        super(IDCTCustom, self).__init__()
        self.block_size = block_size
        # Compute alpha coefficients
        alpha = np.array([1. / np.sqrt(2)] + [1] * (block_size - 1))
        self.register_buffer('alpha', torch.from_numpy(np.outer(alpha, alpha)).float())
        # Compute tensor for IDCT
        tensor = np.zeros((block_size, block_size, block_size, block_size), dtype=np.float32)
        for x, y, u, v in itertools.product(range(block_size), repeat=4):
            tensor[x, y, u, v] = np.cos((2 * u + 1) * x * np.pi / (2 * block_size)) * np.cos(
                (2 * v + 1) * y * np.pi / (2 * block_size)
            )
        self.register_buffer('tensor', torch.from_numpy(tensor).float())

    def forward(self, image):
        # tensordot needs the last two dims to be exactly one block, not just divisible by it
        if tuple(image.shape[-2:]) != (self.block_size, self.block_size):
            raise ValueError("The last two input dimensions must equal the block size.")
        # Apply IDCT
        image = image * self.alpha
        return 0.25 * torch.tensordot(image, self.tensor, dims=2) + 128
```

Sure enough, the next problem showed up. I ran a `torch.ones((2, 3, k, k))` tensor through the DCT and then the IDCT. With k = 8 (block_size 8×8) the input came back intact, but with k ≠ 8 (16 or 32) the IDCT output no longer matched the original input. I was baffled.
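Checked against the DCT definition, a likely cause is that both classes hard-code the prefactor 0.25. That value equals the orthonormal 2D factor 2/k only when k = 8. For any other k, each transform is off by a factor of k/8, so the DCT→IDCT round trip multiplies the level-shifted input by (k/8)². For `torch.ones` with k = 16, the output is (1 − 128)·4 + 128 = −380 instead of 1.

Below is a minimal sketch of a fix in matrix form (Y = C X Cᵀ and X = Cᵀ Y C, with C the orthonormal DCT-II matrix), which avoids the k⁴-entry tensor. The names `dct_matrix`, `BlockDCT` and `BlockIDCT` are hypothetical. The JPEG-style −128 shift is deliberately left out, on the assumption that inputs are scaled elsewhere.

```python
import math

import torch
import torch.nn as nn


def dct_matrix(k: int) -> torch.Tensor:
    """Orthonormal DCT-II matrix: C[u, n] = sqrt(2/k) * a(u) * cos((2n+1) u pi / (2k)), a(0) = 1/sqrt(2)."""
    n = torch.arange(k, dtype=torch.float64)
    c = math.sqrt(2 / k) * torch.cos((2 * n[None, :] + 1) * n[:, None] * math.pi / (2 * k))
    c[0] /= math.sqrt(2)
    return c.float()


class BlockDCT(nn.Module):
    """2D orthonormal DCT over the last two dims of a [..., k, k] tensor."""

    def __init__(self, block_size: int = 8):
        super().__init__()
        # A buffer follows .to(device) but is not a trainable parameter
        self.register_buffer("c", dct_matrix(block_size))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.c @ x @ self.c.T


class BlockIDCT(nn.Module):
    """Inverse of BlockDCT: C is orthogonal, so the inverse uses its transpose."""

    def __init__(self, block_size: int = 8):
        super().__init__()
        self.register_buffer("c", dct_matrix(block_size))

    def forward(self, y: torch.Tensor) -> torch.Tensor:
        return self.c.T @ y @ self.c


for k in (8, 16, 32):
    x = torch.ones((2, 3, k, k))
    y = BlockDCT(k)(x)
    x_rec = BlockIDCT(k)(y)
    # Round trip should hold for every k; the DC coefficient of an all-ones block is k
    print(k, torch.allclose(x_rec, x, atol=1e-5), y[0, 0, 0, 0].item())
```

Because both transforms are plain matrix multiplications, they run on the GPU and keep the autograd graph, which also removes the problems from the second and third attempts.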

Fifth attempt (heh):

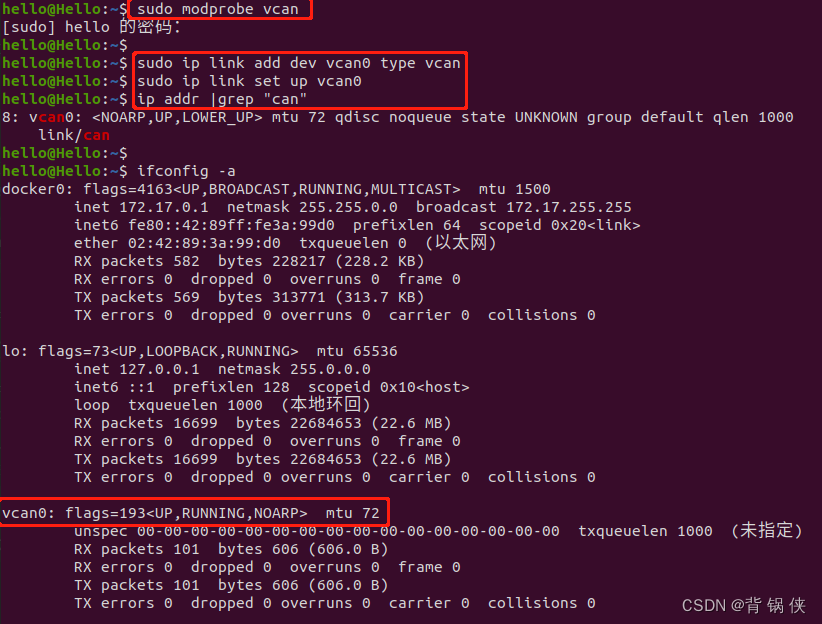

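Then, suddenly, I found `torch_dct`! It is a third-party package (`pip install torch-dct`), not part of PyTorch itself. It computes the DCT with PyTorch operations, so it runs on the GPU.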

```python
import torch_dct as dct

# Split the image into blocks  # [B,C,H,W]
...  # [B,C,k,k]

# DCT over the last two dimensions
block = dct.dct_2d(block)  # [B,C,k,k]

...

# Inverse DCT
block = dct.idct_2d(block)  # [B,C,k,k]
```

Then, of course, another problem:

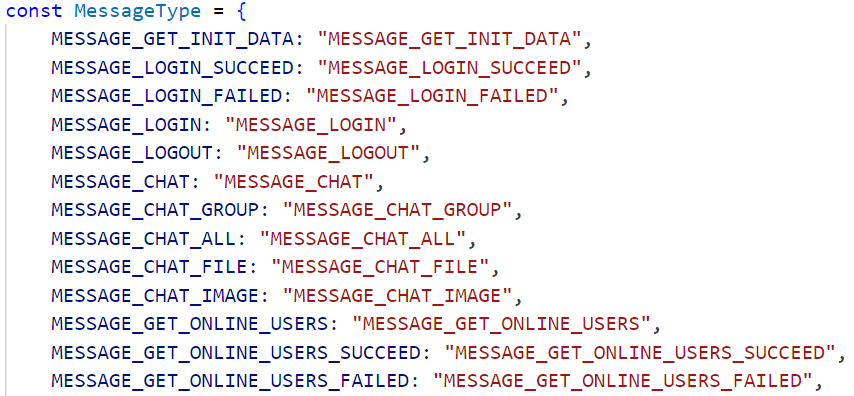

Once my model started training, the loss was NaN in every epoch...

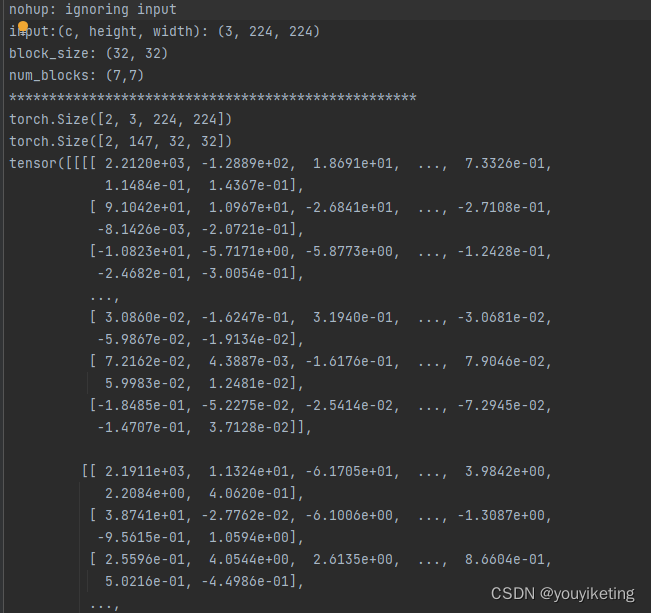

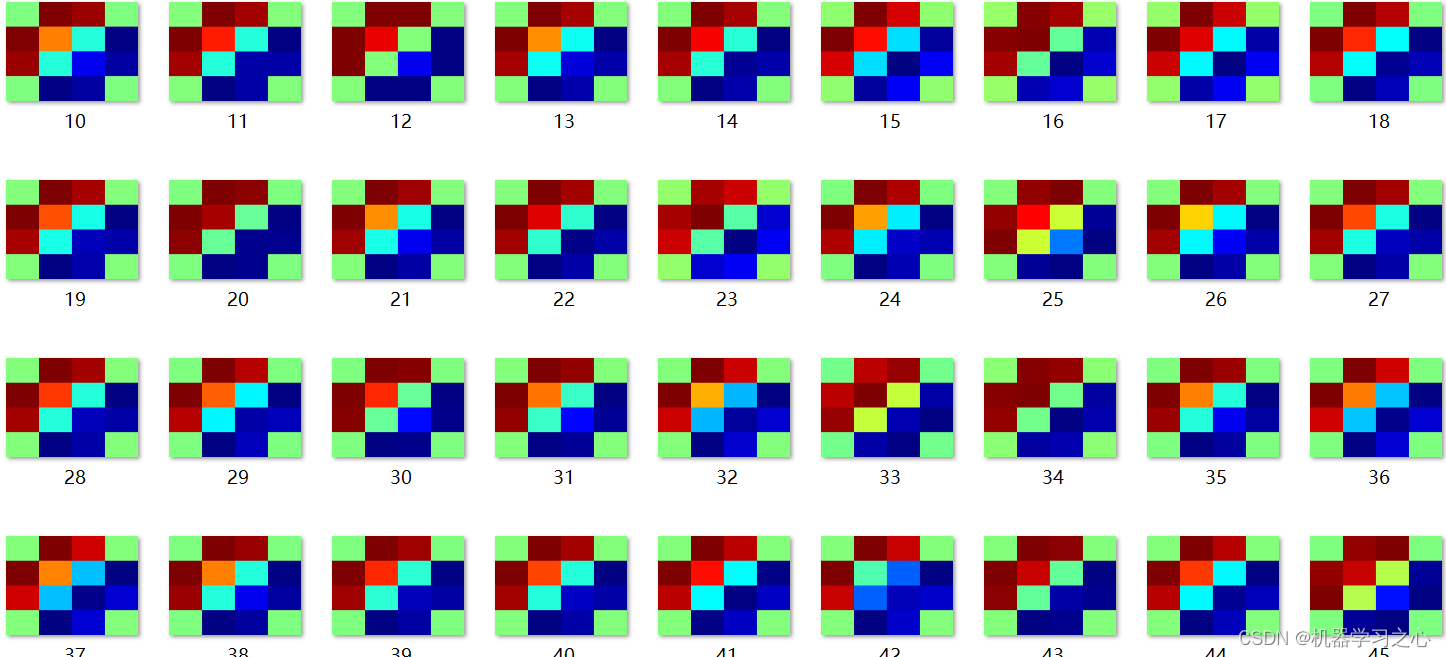

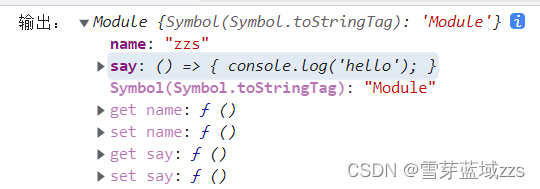

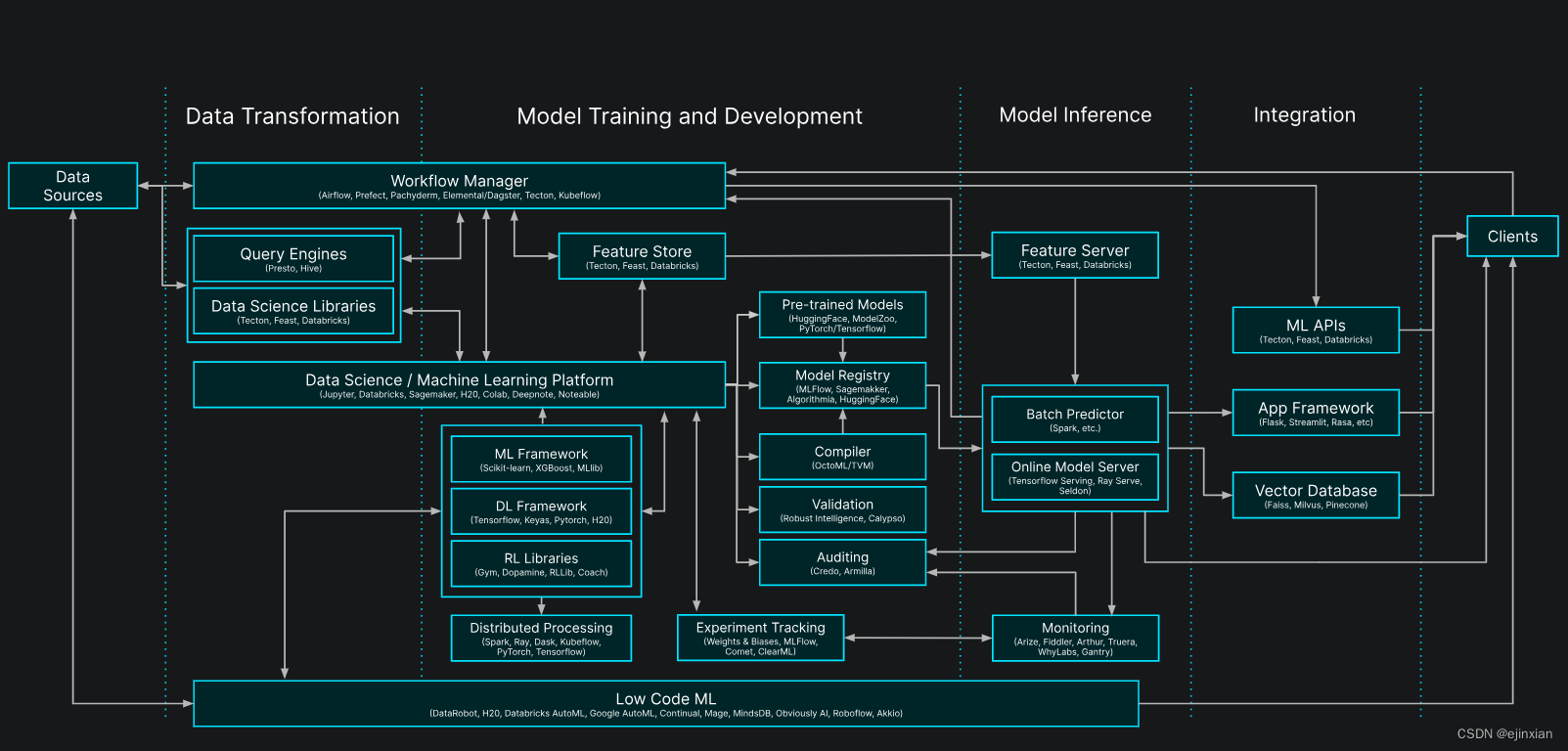

So I printed the DCT output and found coefficients like these. No wonder the CNN refused to train properly.
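To get a feel for the scale, here is a rough check with SciPy. It assumes the blocks hold raw pixel values in [0, 255], and it relies on `torch_dct`'s default `norm=None` following SciPy's unnormalized DCT-II convention (per its documentation). For a flat 32×32 block of value 128, the unnormalized DC coefficient is 4·k²·128, while the orthonormal one is k·128. Coefficients of that size can easily blow up activations or gradients, which is one plausible route to the NaN loss.

```python
import numpy as np
from scipy.fftpack import dct

k = 32
block = np.full((k, k), 128.0)  # a flat k x k block of raw pixel values (assumed 0-255 range)


def dct_2d(x, norm):
    # 1D DCT-II along rows, then along columns
    return dct(dct(x, axis=0, norm=norm), axis=1, norm=norm)


dc_unnormalized = dct_2d(block, None)[0, 0]  # SciPy default: 4 * k * k * 128 = 524288
dc_ortho = dct_2d(block, "ortho")[0, 0]  # orthonormal: k * 128 = 4096

print(dc_unnormalized, dc_ortho)
```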

Next, let's find a way to normalize the input data into [0, 1] or [-1, 1] before feeding it to the CNN.
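Here is one simple option, sketched under the assumption that pixels start in [0, 255] and are first scaled to [0, 1]. In the orthonormal DCT every basis image has unit norm, so by Cauchy–Schwarz each coefficient of a k×k block satisfies |F(u, v)| ≤ ‖f‖₂ ≤ k. Dividing by k therefore maps all coefficients into [-1, 1]. With `torch_dct`, the analogous call on a [0, 1]-scaled block would be `dct.dct_2d(block, norm='ortho') / k`. The helper names below are hypothetical. Standardizing each frequency with a mean and standard deviation measured on the training set is another option, since the low frequencies usually carry far more energy than the high ones.

```python
import math

import torch


def dct_matrix(k: int) -> torch.Tensor:
    """Orthonormal DCT-II matrix (rows are unit-norm cosine basis vectors)."""
    n = torch.arange(k, dtype=torch.float64)
    c = math.sqrt(2 / k) * torch.cos((2 * n[None, :] + 1) * n[:, None] * math.pi / (2 * k))
    c[0] /= math.sqrt(2)
    return c.float()


def normalized_block_dct(pixels_0_255: torch.Tensor) -> torch.Tensor:
    """[..., k, k] raw pixels in [0, 255] -> orthonormal DCT coefficients in [-1, 1]."""
    k = pixels_0_255.shape[-1]
    x = pixels_0_255 / 255.0  # pixels now in [0, 1], so ||x||_2 <= k
    c = dct_matrix(k).to(x)
    return (c @ x @ c.T) / k  # |coefficient| <= ||x||_2 <= k, so this lies in [-1, 1]


def denormalized_block_idct(coeffs: torch.Tensor) -> torch.Tensor:
    """Inverse of normalized_block_dct, back to the [0, 255] pixel range."""
    k = coeffs.shape[-1]
    c = dct_matrix(k).to(coeffs)
    return (c.T @ (coeffs * k) @ c) * 255.0


blocks = torch.randint(0, 256, (2, 3, 32, 32)).float()
coeffs = normalized_block_dct(blocks)
print(coeffs.abs().max().item())
print(torch.allclose(denormalized_block_idct(coeffs), blocks, atol=1e-3))
```

The bound is tight only for the DC term of an all-white block, so most coefficients end up much closer to 0. That skew is part of why per-frequency standardization can still help.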