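The previous post, "Reading the paper: Restormer: Efficient Transformer for High-Resolution Image Restoration", introduced the technical features of the Restormer architecture. This post implements its main building blocks in PyTorch.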

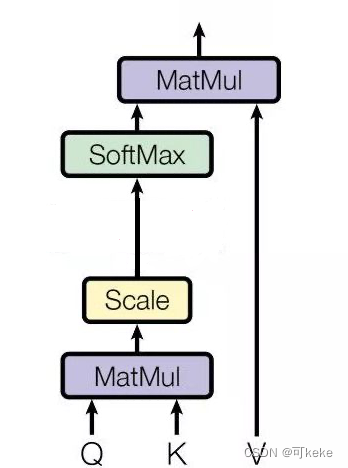

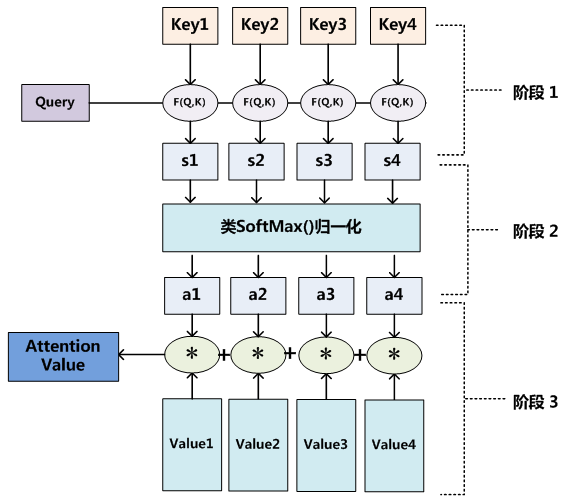

1. MDTA (Multi-Dconv Head Transposed Attention): multi-head attention

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from einops import rearrange


## Multi-DConv Head Transposed Self-Attention (MDTA)
class Attention(nn.Module):
    def __init__(self, dim, num_heads, bias):
        super(Attention, self).__init__()
        self.num_heads = num_heads  # number of attention heads
        self.temperature = nn.Parameter(torch.ones(num_heads, 1, 1))  # learnable per-head scaling factor

        # 1x1 convolution: expand dim -> 3*dim channels to produce q, k, v
        self.qkv = nn.Conv2d(dim, dim * 3, kernel_size=1, bias=bias)
        # 3x3 depthwise convolution (groups = number of channels): adds local spatial context
        self.qkv_dwconv = nn.Conv2d(dim * 3, dim * 3, kernel_size=3, stride=1, padding=1,
                                    groups=dim * 3, bias=bias)
        # 1x1 output projection
        self.project_out = nn.Conv2d(dim, dim, kernel_size=1, bias=bias)

    def forward(self, x):
        b, c, h, w = x.shape  # batch size, channels, height, width

        qkv = self.qkv_dwconv(self.qkv(x))
        q, k, v = qkv.chunk(3, dim=1)  # split into 3 parts along dim 1 (channels)

        # Reshape q, k, v to (b, head, c, h*w): each channel's 2D plane is flattened
        q = rearrange(q, 'b (head c) h w -> b head c (h w)', head=self.num_heads)
        k = rearrange(k, 'b (head c) h w -> b head c (h w)', head=self.num_heads)
        v = rearrange(v, 'b (head c) h w -> b head c (h w)', head=self.num_heads)

        # L2-normalize along the last dimension, i.e. the flattened spatial axis (h*w),
        # so that q @ k^T below is a cosine similarity between channels
        q = F.normalize(q, dim=-1)
        k = F.normalize(k, dim=-1)

        # Transposed attention: (c x hw) @ (hw x c) gives a c x c channel map per head
        attn = (q @ k.transpose(-2, -1)) * self.temperature  # @ is matrix multiplication
        attn = attn.softmax(dim=-1)

        out = attn @ v  # attention-weighted values, shape (b, head, c, h*w)

        # Restore the flattened result to (b, c, h, w)
        out = rearrange(out, 'b head c (h w) -> b (head c) h w', head=self.num_heads, h=h, w=w)

        # 1x1 output projection
        out = self.project_out(out)
        return out
```
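To see why MDTA is called "transposed" attention, here is a stripped-down sketch that only tracks tensor shapes. The helper `transposed_attention` is hypothetical: it mirrors the reshape, normalize and softmax steps of `Attention.forward`, but skips the convolutions and uses plain `reshape` instead of `einops`. The point it illustrates is that the attention map is (C/heads) x (C/heads) per head, so its size does not depend on H x W.

```python
import torch
import torch.nn.functional as F


def transposed_attention(q, k, v, num_heads, temperature):
    """Hypothetical helper: channel-wise ("transposed") attention on (b, c, h, w) tensors."""
    b, c, h, w = q.shape
    # (b, c, h, w) -> (b, head, c/head, h*w); same layout as 'b (head c) h w -> b head c (h w)'
    q = q.reshape(b, num_heads, c // num_heads, h * w)
    k = k.reshape(b, num_heads, c // num_heads, h * w)
    v = v.reshape(b, num_heads, c // num_heads, h * w)
    # L2-normalize over the flattened spatial dimension
    q = F.normalize(q, dim=-1)
    k = F.normalize(k, dim=-1)
    # Channel-to-channel map: (c/head x hw) @ (hw x c/head)
    attn = (q @ k.transpose(-2, -1)) * temperature
    attn = attn.softmax(dim=-1)
    # Weighted sum of value channels, then back to (b, c, h, w)
    out = (attn @ v).reshape(b, c, h, w)
    return out, attn


torch.manual_seed(0)
b, c, h, w, heads = 2, 8, 16, 16, 2
q, k, v = (torch.randn(b, c, h, w) for _ in range(3))
temperature = torch.ones(heads, 1, 1)  # plays the role of the learnable self.temperature

out, attn = transposed_attention(q, k, v, heads, temperature)
print(attn.shape)  # torch.Size([2, 2, 4, 4]): (c/head x c/head), independent of h*w
print(out.shape)   # torch.Size([2, 8, 16, 16])
```

Because the map is C x C rather than HW x HW, computing `q @ k^T` costs time linear in the number of pixels, which is what makes this attention usable on high-resolution images.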

2. GDFN (Gated-Dconv Feed-Forward Network)

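```python
## Gated-Dconv Feed-Forward Network (GDFN)
class FeedForward(nn.Module):
    def __init__(self, dim, ffn_expansion_factor, bias):
        super(FeedForward, self).__init__()
        # Hidden feature width = input width * expansion factor
        hidden_features = int(dim * ffn_expansion_factor)

        # 1x1 convolution: expand to 2*hidden_features (two branches for the gate)
        self.project_in = nn.Conv2d(dim, hidden_features * 2, kernel_size=1, bias=bias)
        # 3x3 depthwise convolution (groups = number of channels)
        self.dwconv = nn.Conv2d(hidden_features * 2, hidden_features * 2, kernel_size=3, stride=1,
                                padding=1, groups=hidden_features * 2, bias=bias)
        # 1x1 convolution: project back down to dim
        self.project_out = nn.Conv2d(hidden_features, dim, kernel_size=1, bias=bias)

    def forward(self, x):
        x = self.project_in(x)
        x1, x2 = self.dwconv(x).chunk(2, dim=1)  # split into 2 branches along dim 1 (channels)
        # Gating: the GELU-activated branch scales the other branch element-wise.
        # GELU is a smooth, deterministic ReLU-like activation, not literally ReLU + dropout.
        x = F.gelu(x1) * x2
        x = self.project_out(x)
        return x
```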
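The gating step in `FeedForward.forward` can be checked in isolation. In this sketch the sizes (`dim = 8`, `ffn_expansion_factor = 2.66`) are example values chosen for illustration, and a random tensor stands in for the output of `project_in` followed by `dwconv`. It shows how the channel count drops from `2 * hidden` to `hidden` at the gate, and that GELU is deterministic and, unlike ReLU, passes small negative values.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

# Example sizes (assumptions for illustration only)
dim, ffn_expansion_factor = 8, 2.66
hidden = int(dim * ffn_expansion_factor)  # int(21.28) == 21

# Stand-in for self.dwconv(self.project_in(x)): a tensor with 2 * hidden channels
y = torch.randn(1, 2 * hidden, 4, 4)
x1, x2 = y.chunk(2, dim=1)  # two branches of `hidden` channels each

# Gate: GELU(x1) scales x2 element-wise, leaving `hidden` channels for project_out
gated = F.gelu(x1) * x2
print(gated.shape)  # torch.Size([1, 21, 4, 4])

# GELU has no random masking (unlike dropout): same input, same output
assert torch.equal(F.gelu(x1), F.gelu(x1))

# Unlike ReLU, GELU lets small negative values through
t = torch.tensor([-1.0, 0.0, 1.0])
print(F.relu(t))  # tensor([0., 0., 1.])
print(F.gelu(t))  # roughly [-0.1587, 0.0, 0.8413]
```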

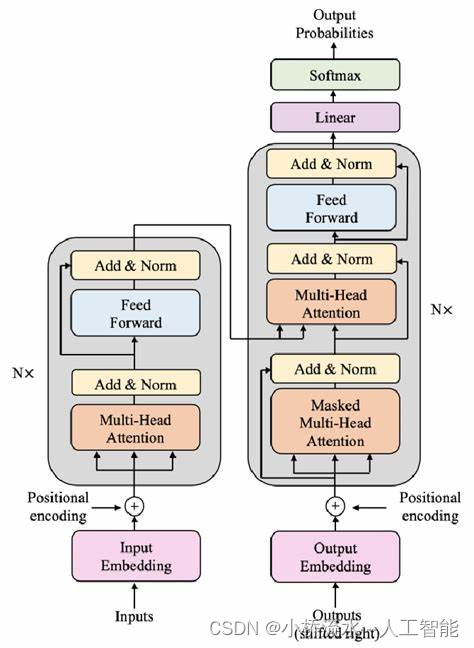

3. TransformerBlock

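```python
## Just the standard Transformer block layout: pre-norm, with residual connections
class TransformerBlock(nn.Module):
    def __init__(self, dim, num_heads, ffn_expansion_factor, bias, LayerNorm_type):
        super(TransformerBlock, self).__init__()
        # LayerNorm here is Restormer's own LayerNorm(dim, LayerNorm_type) class,
        # not torch.nn.LayerNorm; it is not defined in this post
        self.norm1 = LayerNorm(dim, LayerNorm_type)  # layer normalization
        self.attn = Attention(dim, num_heads, bias)  # MDTA self-attention
        self.norm2 = LayerNorm(dim, LayerNorm_type)  # layer normalization
        self.ffn = FeedForward(dim, ffn_expansion_factor, bias)  # GDFN feed-forward network

    def forward(self, x):
        x = x + self.attn(self.norm1(x))  # residual connection
        x = x + self.ffn(self.norm2(x))   # residual connection
        return x
```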
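`TransformerBlock` relies on a `LayerNorm(dim, LayerNorm_type)` class that the post does not show. Below is a minimal sketch, assuming it normalizes each pixel's C-dimensional feature vector of a (b, c, h, w) map, with a `'WithBias'` variant (standard LayerNorm) and a `'BiasFree'` variant (divide by the standard deviation, no mean subtraction, no bias). This is modeled on my understanding of the official Restormer code; the real implementation may differ in details.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class LayerNorm(nn.Module):
    """Sketch of a channel-wise LayerNorm for (b, c, h, w) feature maps."""

    def __init__(self, dim, LayerNorm_type):
        super().__init__()
        self.bias_free = LayerNorm_type == 'BiasFree'
        self.weight = nn.Parameter(torch.ones(dim))
        self.bias = None if self.bias_free else nn.Parameter(torch.zeros(dim))
        self.eps = 1e-5

    def forward(self, x):
        x = x.permute(0, 2, 3, 1)  # (b, c, h, w) -> (b, h, w, c): normalize over c
        var = x.var(-1, keepdim=True, unbiased=False)
        if self.bias_free:
            # BiasFree: scale by 1/std only, no mean subtraction and no bias
            y = x / torch.sqrt(var + self.eps) * self.weight
        else:
            # WithBias: ordinary LayerNorm over the channel dimension
            mu = x.mean(-1, keepdim=True)
            y = (x - mu) / torch.sqrt(var + self.eps) * self.weight + self.bias
        return y.permute(0, 3, 1, 2)  # back to (b, c, h, w)


torch.manual_seed(0)
x = torch.randn(2, 8, 5, 5)
ln = LayerNorm(8, 'WithBias')
# Reference: PyTorch's layer_norm applied over the channel axis
ref = F.layer_norm(x.permute(0, 2, 3, 1), (8,)).permute(0, 3, 1, 2)
print(torch.allclose(ln(x), ref, atol=1e-5))
```

Normalizing over channels per pixel (rather than over C, H and W together) keeps the operation independent of image size, which fits a network meant to run on arbitrary resolutions.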

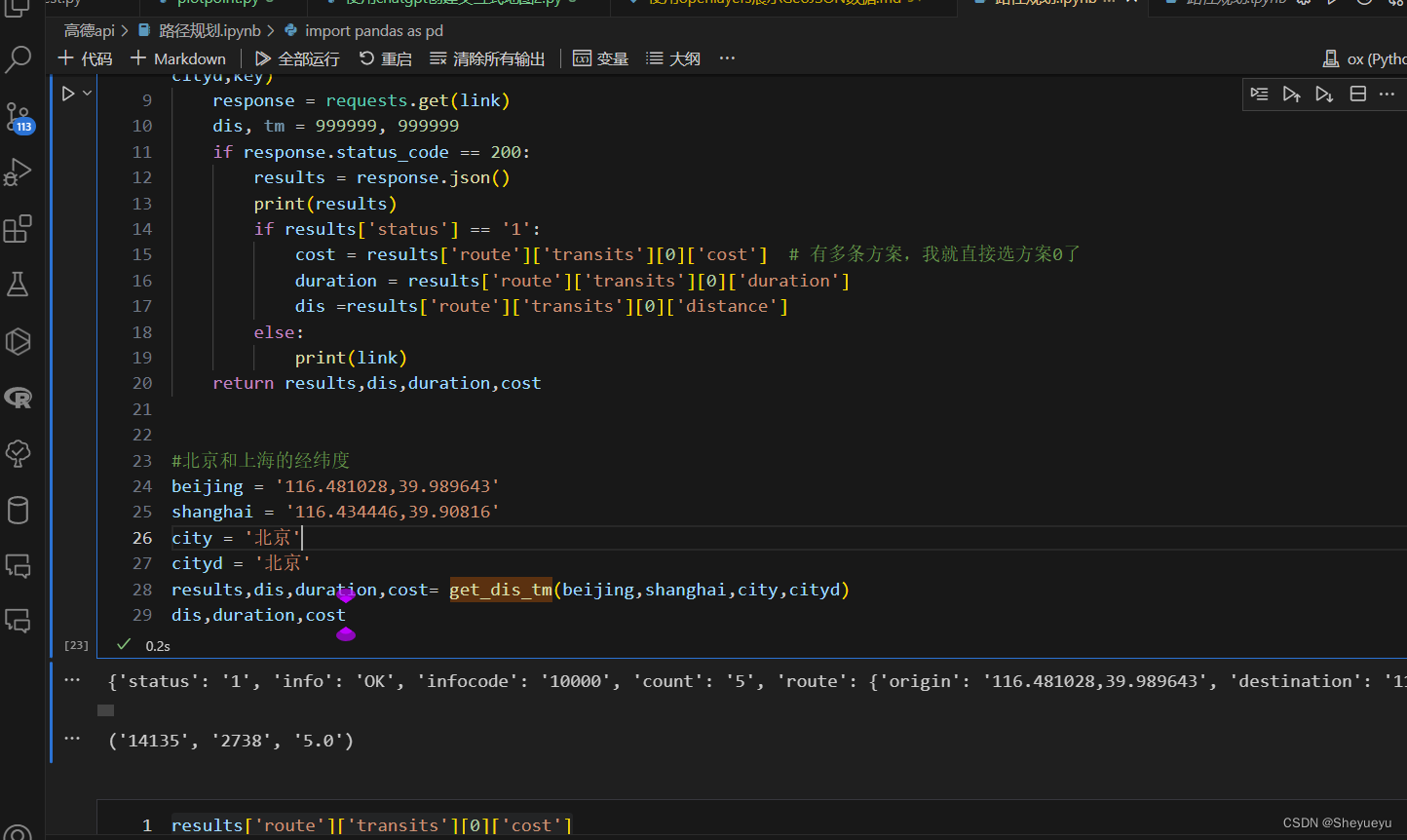

4. Test example

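```python
import torch

# Restormer is the full model class (e.g. from the official implementation);
# it is assumed to be defined or imported, since it is not shown in this post
model = Restormer()
print(model)  # print the network structure

x = torch.randn((1, 3, 512, 512))  # randomly generated input image
with torch.no_grad():  # inference only: no gradients, lower memory use
    out = model(x)  # forward pass
print(out.shape)  # print the output shape (expected to match the input shape)
```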
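A 512 x 512 input is only practical because MDTA's attention map does not grow with image size. A back-of-the-envelope count makes this concrete. The values `dim = 48` and a single head are assumptions for illustration (my understanding of the first level of the default configuration), not figures from this post.

```python
# Number of entries in one head's attention map for a 512x512 feature map.
# dim = 48 channels and heads = 1 are assumed values for illustration.
h = w = 512
dim, heads = 48, 1

spatial_attn_entries = (h * w) ** 2            # standard self-attention: HW x HW
transposed_attn_entries = (dim // heads) ** 2  # MDTA: (C/heads) x (C/heads)

print(f"{spatial_attn_entries:,}")     # 68,719,476,736
print(f"{transposed_attn_entries:,}")  # 2,304
```

In float32 the spatial map alone would need 2^36 x 4 bytes = 256 GiB, while the transposed map is a few kilobytes.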
