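Introduction

Elasticsearch (ES) is a search engine framework written in Java on top of Lucene. It provides distributed full-text search and exposes a unified RESTful web interface. Lucene is the underlying search library, and ES is a wrapper around it.

Distribution is mainly there to give ES horizontal scalability.

Full-text search (inverted index): text is split into terms by an analyzer, and the terms are stored together in a term dictionary. At search time the keyword is looked up in the term dictionary to find the matching content.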
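The inverted-index idea described above can be sketched in a few lines of plain Java. This is only a toy model: the class name InvertedIndexSketch and the whitespace tokenizer are assumptions made for illustration, and a real analyzer such as IK is far more involved.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class InvertedIndexSketch {
    // term -> ids of the documents that contain the term (the "term dictionary")
    private final Map<String, Set<Integer>> postings = new HashMap<>();

    // Hypothetical analyzer: lower-case the text and split it on whitespace.
    static List<String> tokenize(String text) {
        List<String> terms = new ArrayList<>();
        for (String t : text.toLowerCase().split("\\s+")) {
            if (!t.isEmpty()) {
                terms.add(t);
            }
        }
        return terms;
    }

    // Indexing: analyze the text and record the document id under every term.
    void index(int docId, String text) {
        for (String term : tokenize(text)) {
            postings.computeIfAbsent(term, k -> new TreeSet<>()).add(docId);
        }
    }

    // Searching: look the keyword up in the term dictionary.
    Set<Integer> search(String keyword) {
        return postings.getOrDefault(keyword.toLowerCase(), Collections.emptySet());
    }

    public static void main(String[] args) {
        InvertedIndexSketch idx = new InvertedIndexSketch();
        idx.index(1, "Elasticsearch is built on Lucene");
        idx.index(2, "Lucene is a search library");
        System.out.println(idx.search("lucene")); // ids of both documents
    }
}
```

The lookup never scans the documents themselves; it only reads the entry for the term, which is why a search stays fast as the number of documents grows.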

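Differences between ES and Solr

When querying static data (data that does not change), Solr is somewhat faster than ES. When the data changes in real time, however, Solr's query speed drops sharply, while ES's query performance stays roughly the same.

A Solr cluster depends on ZooKeeper for coordination. ES supports clustering natively and needs no third-party component.

ES has good support for cloud computing and big data.

IK analyzer

Because ES handles Chinese word segmentation poorly out of the box, you need to download and install from GitHub an IK analyzer whose version matches your ES version. This article uses ES 8.6. Note that the typed endpoints (/index/type/...) and the 6.5.4 Java client used in the examples below follow the 6.x API; mapping types were removed in ES 8.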

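RESTful syntax of ES

GET requests

http://ip:port/index: get index information

http://ip:port/index/type/doc_id: get the specified document

POST requests

http://ip:port/index/type/_search: search; query conditions can be supplied as JSON in the request body

http://ip:port/index/type/doc_id/_update: update a document; the JSON request body specifies the fields to change

PUT requests

http://ip:port/index: create an index; the index settings go in the request body

http://ip:port/index/type/_mappings: specify the field mappings of the documents stored in the index when creating it

DELETE requests

http://ip:port/index: delete the index

http://ip:port/index/type/doc_id: delete the specified document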

Index operations

Create an index

# Create an index

# number_of_shards: number of primary shards

# number_of_replicas: number of replicas

PUT /person

{

"settings": {

"number_of_shards": 5 ,

"number_of_replicas": 1

}

}

The index name is person.

View index information

GET /person

person is the index name.

Delete an index

DELETE /person

person is the index name.

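Field types in ES

String types

In ES the string type is split into text and keyword. text is generally used for full-text search, and the field value is analyzed into terms. A keyword field is not analyzed.

Numeric types

long: a signed 64-bit integer, with a minimum of -2^63 and a maximum of 2^63-1.

integer: a signed 32-bit integer, with a minimum of -2^31 and a maximum of 2^31-1.

short: a signed 16-bit integer, with a minimum of -32,768 and a maximum of 32,767.

byte: a signed 8-bit integer, with a minimum of -128 and a maximum of 127.

double: a double-precision 64-bit IEEE 754 floating-point number, restricted to finite values.

float: a single-precision 32-bit IEEE 754 floating-point number, restricted to finite values.

half_float: a half-precision 16-bit IEEE 754 floating-point number, restricted to finite values. It has half the precision of float.

scaled_float: a finite floating-point number backed by a long and scaled by a fixed double scaling factor. In other words, a floating-point value is represented by a long together with a scaling factor.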
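The scaled_float description can be made concrete with a small sketch: the value is multiplied by the scaling factor and rounded to a long, and divided by the same factor when read back. The class and method names below are hypothetical and only illustrate the arithmetic.

```java
class ScaledFloatSketch {
    // Encode: multiply by the scaling factor and round to the nearest long.
    static long encode(double value, double scalingFactor) {
        return Math.round(value * scalingFactor);
    }

    // Decode: divide the stored long by the same scaling factor.
    static double decode(long stored, double scalingFactor) {
        return stored / scalingFactor;
    }

    public static void main(String[] args) {
        long stored = encode(12.34, 100);        // two decimal places are kept
        System.out.println(stored);
        System.out.println(decode(stored, 100));
        // Anything finer than 1 / scalingFactor is lost when rounding:
        System.out.println(encode(0.019, 100));
    }
}
```

With a scaling factor of 100, a price such as 12.34 is stored as the long 1234, so a larger factor keeps more decimal places at the cost of a larger stored value.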

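Date type

date: a specific format can be specified for date values.

Boolean type

boolean: represents true and false.

Binary type

binary: accepts a binary value as a Base64-encoded string. By default the field is not stored and is not searchable.

Range types

When assigning a value you do not give a single value, only a range, specified with gt, lt, gte, and lte. The available types are long_range, integer_range, double_range, float_range, date_range, and ip_range.

Geo-point type

geo_point: stores a latitude/longitude pair.

IP type

ip: stores both IPv4 and IPv6 addresses.

Other

For other data types, see the official documentation.

Creating an index with an explicit mapping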

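# Create an index and specify its mapping

PUT /book

{

"settings": {

"number_of_shards": 5, # number of primary shards

"number_of_replicas": 1 # number of replicas

},

"mappings": {

# specify the mapping

"novel": {

# type

"properties": {

# fields stored in the document

"name": {

# field name

"type": "text", # field type

"analyzer":"ik_max_word", # analyzer; the IK analyzer is used here

"index": true, # the field can be used as a query condition

"store": false # whether to store the field separately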

},

"author": {

"type":"keyword"

},

"count": {

"type":"long"

},

"onSale": {

"type":"date",

"format":"yyyy-MM-dd HH:mm:ss||yyyy-MM-dd" # accepted date formats

},

"descr": {

"type":"text",

"analyzer":"ik_max_word"

}

}

}

}

}

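Document operations in ES

A document is uniquely identified in ES by the combination of _index, _type, and _id.

Create a document

ES generates the _id automatically

# Add a document with an auto-generated id

POST /book/novel

{

"name":"僵子压",

"author":"tang",

"count":10000,

"onSale":"2000-01-01",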

"descr":"福建省会计分录睡觉了"

}

ES returns the following result

{

"_index" : "book", # index name

"_type" : "novel", # type

"_id" : "c22vW4wBI8QiIVYXVJqk", # _id generated by ES

"_version" : 1,

"result" : "created",

"_shards" : {

"total" : 2,

"successful" : 1,

"failed" : 0

},

"_seq_no" : 0,

"_primary_term" : 1

}

Specifying the _id manually

# Add a document with a manually specified id; the _id here is 1

PUT /book/novel/1

{

"name":"毛泽东选集",

"author":"毛泽东",

"count":10000,

"onSale":"1951-10-12",

"descr":"毛泽东选集是记录了毛泽东领导红军革命时期的重要文献"

}

Update a document

Overwrite update

PUT /book/novel/1

{

"name":"毛泽东选集",

"author":"毛泽东",

"count":10000,

"onSale":"1951-10-12",

"descr":"毛泽东选集是记录了毛泽东领导红军革命时期的重要文献"

}

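This request can both create a document with a manually specified _id and overwrite an existing one.

doc-based update

# Update a document using doc

POST /book/novel/1/_update

{

"doc":{

"count":"123123" # list only the fields that need to change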

}

}

Delete a document

# Delete a document by _id

DELETE /book/novel/c22vW4wBI8QiIVYXVJqk

c22vW4wBI8QiIVYXVJqk is the document's _id.

Working with ES from Java

Add the dependencies

<dependency>

<groupId>org.elasticsearch.client</groupId>

<artifactId>elasticsearch-rest-high-level-client</artifactId>

<version>6.5.4</version>

</dependency>

<dependency>

<groupId>org.elasticsearch</groupId>

<artifactId>elasticsearch</artifactId>

<version>6.5.4</version>

</dependency>

Besides the core elasticsearch API, you also need the high-level REST client (elasticsearch-rest-high-level-client).

Connect to ES

public class ESClient {

public static RestHighLevelClient getClient() {

// Create the HttpHost object

HttpHost host = new HttpHost("localhost", 9200);

// Create the RestClientBuilder object

RestClientBuilder builder = RestClient.builder(host);

return new RestHighLevelClient(builder);

}

}

Create an index

Configure settings

"settings": {

"number_of_shards": 5,

"number_of_replicas": 1

}

The settings above are equivalent to the following Java code

Settings.Builder settings = Settings.builder()

.put("number_of_shards", 5)

.put("number_of_replicas", 1);

Configure mappings

"mappings": {

"man": {

"properties": {

"name": {

"type": "text"

},

"age": {

"type":"integer"

}

}

}

}

The mappings above are equivalent to the following Java code

/** ES index name */

private String index = "person";

/** ES type name */

private String type = "man";

XContentBuilder mappings = JsonXContent.contentBuilder()

.startObject() // every startObject must be paired with an endObject

.startObject("properties")

.startObject("name")

.field("type", "text")

.endObject()

.startObject("age")

.field("type", "integer")

.endObject()

.endObject()

.endObject();

// Wrap the settings and mappings in the request object

CreateIndexRequest request = new CreateIndexRequest(index)

.settings(settings)

.mapping(type, mappings);

Create the index with the client

/** ES client connection */

private RestHighLevelClient client = ESClient.getClient();

// Connect to ES and create the index

CreateIndexResponse result = client.indices().create(request, RequestOptions.DEFAULT);

At this point the person index has been created in ES.

Check whether an index exists

/** ES client connection */

private RestHighLevelClient client = ESClient.getClient();

/** ES index name */

private String index = "person";

@Test

public void existsIndex() throws IOException {

// Prepare the request object

GetIndexRequest request = new GetIndexRequest();

request.indices(index);

// Check existence through the client

boolean exists = client.indices().exists(request, RequestOptions.DEFAULT);

System.out.println(exists);

}

Delete an index

/** ES client connection */

private RestHighLevelClient client = ESClient.getClient();

/** ES index name */

private String index = "person";

@Test

public void deleteIndex() throws IOException {

DeleteIndexRequest request = new DeleteIndexRequest();

request.indices(index);

AcknowledgedResponse isDelete = client.indices().delete(request, RequestOptions.DEFAULT);

System.out.println("is delete: " + isDelete.isAcknowledged());

}

Deleting an index that does not exist throws an exception.

Add a document

Prepare the entity class

@Data

@NoArgsConstructor

@AllArgsConstructor

public class Person {

@JsonIgnore

private Integer id;

private String name;

private Integer age;

}

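The id in the entity class is not part of the document body (it is passed to ES separately as the document _id), so it is excluded from serialization with the @JsonIgnore annotation. Besides the id, a date field in ES cannot accept a Java value created with new Date() as it is serialized by default, so such fields need @JsonFormat(pattern = "yyyy-MM-dd") to format them.

/** ES client */

private RestHighLevelClient client = ESClient.getClient();

/** ES index name */

private String index = "person";

/** ES type name */

private String type = "man";

/** JSON serializer */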

private ObjectMapper mapper = new ObjectMapper();

@Test

public void createDocumentTest() throws IOException {

// Prepare the JSON data

Person person = new Person(1, "张三", 20);

String json = mapper.writeValueAsString(person);

// Add the data with an IndexRequest, specifying the id manually

IndexRequest request = new IndexRequest(index, type, person.getId().toString());

request.source(json, XContentType.JSON);

IndexResponse indexResponse = client.index(request, RequestOptions.DEFAULT);

// Read the result

System.out.println(indexResponse.getResult());

}

Update a document

@Test

public void updateDocumentTest() throws IOException {

// Build a map containing the fields to change

Map<String, Object> doc = new HashMap<String, Object>() {

{

put("name", "zhangsan");

}};

String docId = "1";

UpdateRequest request = new UpdateRequest(index, type, docId);

request.doc(doc);

UpdateResponse update = client.update(request, RequestOptions.DEFAULT);

System.out.println(update.getResult());

}

Delete a document

@Test

public void deleteDocumentTest() throws IOException {

// Build the request object and delete by id

DeleteRequest request = new DeleteRequest(index, type, "1");

DeleteResponse delete = client.delete(request, RequestOptions.DEFAULT);

System.out.println(delete.getResult());

}

Bulk operations

Bulk add

/** ES client */

private RestHighLevelClient client = ESClient.getClient();

/** ES index name */

private String index = "person";

/** ES type name */

private String type = "man";

/** JSON serializer */

private ObjectMapper mapper = new ObjectMapper();

@Test

public void bulkCreateDocumentTest() throws IOException {

Person p1 = new Person(1, "zhans", 20);

Person p2 = new Person(2, "lisi", 20);

Person p3 = new Person(3, "wangwu", 20);

String json1 = mapper.writeValueAsString(p1);

String json2 = mapper.writeValueAsString(p2);

String json3 = mapper.writeValueAsString(p3);

BulkRequest request = new BulkRequest();

request.add(new IndexRequest(index, type, p1.getId().toString()).source(json1, XContentType.JSON));

request.add(new IndexRequest(index, type, p2.getId().toString()).source(json2, XContentType.JSON));

request.add(new IndexRequest(index, type, p3.getId().toString()).source(json3, XContentType.JSON));

BulkResponse response = client.bulk(request, RequestOptions.DEFAULT);

System.out.println(response.toString());

}

Bulk delete

@Test

public void bulkDeleteDocumentTest() throws IOException {

BulkRequest request = new BulkRequest();

request.add(new DeleteRequest(index, type, "1"));

request.add(new DeleteRequest(index, type, "2"));

request.add(new DeleteRequest(index, type, "3"));

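BulkResponse response = client.bulk(request, RequestOptions.DEFAULT);

System.out.println(response.toString());

}

ES queries

term query

A term query is an exact match: the search keyword is not analyzed before searching and is matched as-is against the terms in the term dictionary.

# term query

POST /sms-logs-index/sms-logs-type/_search

{

"from":0, # first argument of LIMIT

"size":5, # second argument of LIMIT

"query": {

"term": {

"province": {

"value": "北京" # exact match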

}

}

}

}

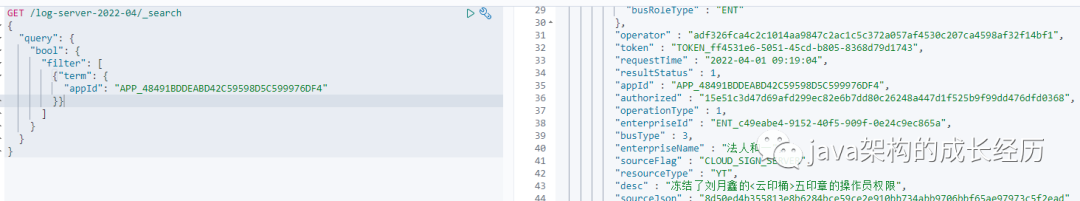

The result is as follows

{

"took" : 25,

"timed_out" : false,

"_shards" : {

"total" : 3,

"successful" : 3,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1,

"max_score" : 1.5404451,

"hits" : [

{

"_index" : "sms-logs-index",

"_type" : "sms-logs-type",

"_id" : "2",

"_score" : 1.5404451,

"_source" : {

// this is the data we actually need

"createDate" : 1702477378258,

"sendDate" : 1702477378258,

"longCode" : "1069886623",

"mobile" : "13800000002",

"corpName" : "公司B",

"smsContent" : "短信内容2",

"state" : 1,

"operatorId" : 2,

"province" : "北京",

"ipAddr" : "192.168.1.2",

"replyTotal" : 6,

"fee" : 20

}

}

]

}

}

Querying from Java

private ObjectMapper mapper = new ObjectMapper();

private String index = "sms-logs-index";

private String type = "sms-logs-type";

private RestHighLevelClient client = ESClient.getClient();

@Test

public void termQueryTest() throws IOException {

// Create the request object

SearchRequest search = new SearchRequest(index);

search.types(type);

// Specify the query conditions

SearchSourceBuilder builder = new SearchSourceBuilder();

builder.from(0);

builder.size(5);

builder.query(QueryBuilders.termQuery("province", "北京"));

search.source(builder);

// Run the query

SearchResponse result = client.search(search, RequestOptions.DEFAULT);

// Read the data in _source

SearchHit[] hits = result.getHits().getHits();

for (SearchHit hit : hits) {

Map<String, Object> map = hit.getSourceAsMap();

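System.out.println(map); // the retrieved data

}

}

terms query

terms works the same way as term: the query keywords are not analyzed and are matched directly against the term dictionary to find the corresponding documents. terms is used when a field may match any of several values, similar to an IN or OR condition in MySQL.

# terms query

POST /sms-logs-index/sms-logs-type/_search

{

"query": {

"terms": {

"province": [

"北京", # several values can be given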

"上海"

]

}

}

}

Querying from Java

@Test

public void termsQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

// Build the query conditions

SearchSourceBuilder builder = new SearchSourceBuilder();

builder.query(QueryBuilders.termsQuery("province", "北京", "上海"));

request.source(builder);

// Run the query

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

for (SearchHit hit : hits) {

Map<String, Object> map = hit.getSourceAsMap();

System.out.println(map);

}

}

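match query

match is a high-level query: it picks a different query strategy depending on the type of the field being queried.

1. If the field is a date or a number, the query string is converted to a date or a number.

2. If the field cannot be analyzed (keyword), match does not analyze the query text.

3. If the field can be analyzed (text), match analyzes the query text and looks up the resulting terms in the term dictionary.

Under the hood a match query is made of term queries: it combines the results of several term queries for you.

# match query

POST /sms-logs-index/sms-logs-type/_search

{

"query": {

"match": {

"smsContent": "短信" # the field to query

}

}

}

In the returned data, the hit with the highest _score is the best match.

Using Java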

@Test

public void matchQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

SearchSourceBuilder builder = new SearchSourceBuilder();

// Returns the first 10 hits by default; builder.size() changes how many are returned

builder.size(20);

builder.query(QueryBuilders.matchQuery("smsContent", "短信"));

request.source(builder);

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

for (SearchHit hit : hits) {

Map<String, Object> map = hit.getSourceAsMap();

System.out.println(map);

}

System.out.println("length:\t" + hits.length);

}

match_all query

Returns all documents, without any query condition.

# match_all query

POST /sms-logs-index/sms-logs-type/_search

{

"query": {

"match_all": {

} # no query condition

}

}

If there is a lot of data, only the first 10 documents are returned.

Using Java

@Test

public void matchAllQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

SearchSourceBuilder builder = new SearchSourceBuilder();

// Returns the first 10 hits by default; builder.size() changes how many are returned

builder.size(20);

builder.query(QueryBuilders.matchAllQuery());

request.source(builder);

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

for (SearchHit hit : hits) {

Map<String, Object> map = hit.getSourceAsMap();

System.out.println(map);

}

System.out.println("length:\t" + hits.length);

}

Boolean match query

Matches content in a single field, combining the analyzed terms with and or or.

# Boolean match query

POST /sms-logs-index/sms-logs-type/_search

{

"query": {

"match": {

"smsContent": {

"query": "短信 3",

"operator": "and" # the content must contain both "短信" and "3"

}

}

}

}

Using Java

@Test

public void booleanMatchQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

SearchSourceBuilder builder = new SearchSourceBuilder();

// Returns the first 10 hits by default; builder.size() changes how many are returned

builder.size(20);

builder.query(QueryBuilders.matchQuery("smsContent", "短信 3")

.operator(Operator.AND)); // either AND or OR can be used

request.source(builder);

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

for (SearchHit hit : hits) {

Map<String, Object> map = hit.getSourceAsMap();

System.out.println(map);

}

System.out.println("length:\t" + hits.length);

}

multi_match query

multi_match searches several fields for the same text.

# multi_match query

POST /sms-logs-index/sms-logs-type/_search

{

"query": {

"multi_match": {

"query": "北京", # the text to search for

"fields": ["province", "smsContent"] # the fields to search

}

}

}

Using Java

@Test

public void multiMatchQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

SearchSourceBuilder builder = new SearchSourceBuilder();

// Returns the first 10 hits by default; builder.size() changes how many are returned

builder.size(20);

builder.query(QueryBuilders.multiMatchQuery("北京", "province", "smsContent"));

request.source(builder);

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

for (SearchHit hit : hits) {

Map<String, Object> map = hit.getSourceAsMap();

System.out.println(map);

}

System.out.println("length:\t" + hits.length);

}

id query

# Query by id

GET /sms-logs-index/sms-logs-type/1 # 1 is the document id

Using Java

@Test

public void idQueryTest() throws IOException {

GetRequest request = new GetRequest(index, type, "1");

GetResponse response = client.get(request, RequestOptions.DEFAULT);

Map<String, Object> map = response.getSourceAsMap();

System.out.println(map);

}

ids query

# ids query

POST /sms-logs-index/sms-logs-type/_search

{

"query": {

"ids": {

"values": ["1", "3" , "2"]

}

}

}

Using Java

@Test

public void idsQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

SearchSourceBuilder builder = new SearchSourceBuilder();

builder.query(QueryBuilders.idsQuery().addIds("1", "3" ,"2"));

request.source(builder);

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

for (SearchHit hit : search.getHits().getHits()) {

Map<String, Object> map = hit.getSourceAsMap();

System.out.println(map);

}

System.out.println(search.getHits().getHits().length);

}

prefix query

Prefix query: finds documents in which the given field starts with the given keyword.

# prefix query

POST /sms-logs-index/sms-logs-type/_search

{

"query": {

"prefix": {

"corpName": {

"value": "公司"

}

}

}

}

@Test

public void prefixQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

SearchSourceBuilder builder = new SearchSourceBuilder();

builder.query(QueryBuilders.prefixQuery("corpName", "公司"));

request.source(builder);

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

for (SearchHit hit : search.getHits().getHits()) {

Map<String, Object> map = hit.getSourceAsMap();

System.out.println(map);

}

System.out.println(search.getHits().getHits().length);

}

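fuzzy query

Fuzzy query: you enter an approximation of the text, and ES finds documents that roughly match what was entered, even if the input contains typos.

# fuzzy query

POST /sms-logs-index/sms-logs-type/_search

{

"query": {

"fuzzy": {

"corpName": {

"value": "盒马先生", # the stored value is 盒马先生; searching for 河马先生 would find nothing

"prefix_length": 2 # the first two characters of the input must match exactly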

}

}

}

}

@Test

public void fuzzyQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

SearchSourceBuilder builder = new SearchSourceBuilder();

builder.query(QueryBuilders.fuzzyQuery("corpName", "盒马先生")

.prefixLength(2));

request.source(builder);

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

for (SearchHit hit : hits) {

Map<String, Object> map = hit.getSourceAsMap();

System.out.println(map);

}

}
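To make the effect of prefix_length more tangible, here is a minimal sketch of fuzzy matching on a single term: the first prefixLength characters must be identical, and the whole term must be within a given edit distance. It uses plain Levenshtein distance as a simplification, and the names FuzzyMatchSketch, fuzzyMatches, and maxEdits are hypothetical; it is not the Lucene implementation.

```java
class FuzzyMatchSketch {
    // Classic Levenshtein distance: insertions, deletions and substitutions cost 1 each.
    static int editDistance(String a, String b) {
        int[] prev = new int[b.length() + 1];
        for (int j = 0; j <= b.length(); j++) {
            prev[j] = j;
        }
        for (int i = 1; i <= a.length(); i++) {
            int[] cur = new int[b.length() + 1];
            cur[0] = i;
            for (int j = 1; j <= b.length(); j++) {
                int cost = a.charAt(i - 1) == b.charAt(j - 1) ? 0 : 1;
                cur[j] = Math.min(Math.min(cur[j - 1] + 1, prev[j] + 1), prev[j - 1] + cost);
            }
            prev = cur;
        }
        return prev[b.length()];
    }

    // The first prefixLength characters must match exactly; the rest may differ by maxEdits.
    static boolean fuzzyMatches(String term, String query, int prefixLength, int maxEdits) {
        if (term.length() < prefixLength || query.length() < prefixLength) {
            return false; // simplification: terms shorter than the prefix never match
        }
        if (!term.regionMatches(0, query, 0, prefixLength)) {
            return false;
        }
        return editDistance(term, query) <= maxEdits;
    }

    public static void main(String[] args) {
        // One wrong character after the fixed prefix is tolerated.
        System.out.println(fuzzyMatches("盒马鲜生", "盒马先生", 2, 1));
        // A wrong character inside the fixed prefix is not.
        System.out.println(fuzzyMatches("盒马先生", "河马先生", 2, 1));
    }
}
```

Setting prefixLength to 0 in the second call would let the match succeed, which is the trade-off prefix_length controls: a longer fixed prefix prunes candidates earlier but tolerates fewer typos.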

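wildcard query

Wildcard query: similar to LIKE in MySQL. The search string can contain the wildcard * (any number of characters) and the placeholder ?, which matches exactly one character.

# wildcard query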

POST /sms-logs-index/sms-logs-type/_search

{

"query": {

"wildcard": {

"corpName": {

"value": "中国*"

}

}

}

}

@Test

public void wildcardQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

SearchSourceBuilder builder = new SearchSourceBuilder();

builder.query(QueryBuilders.wildcardQuery("corpName", "中国*"));

request.source(builder);

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

for (SearchHit hit : hits) {

Map<String, Object> map = hit.getSourceAsMap();

System.out.println(map);

}

}
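The semantics of * and ? can be sketched with a small matcher in plain Java. This only illustrates how the two symbols behave on a single term; the class name WildcardSketch is an assumption, and ES evaluates wildcard queries against the indexed terms in a different way internally.

```java
class WildcardSketch {
    // Returns true if text matches pattern, where * matches any run of characters
    // (including none) and ? matches exactly one character.
    static boolean matches(String pattern, String text) {
        int p = 0;      // position in the pattern
        int t = 0;      // position in the text
        int star = -1;  // position of the last * seen in the pattern
        int mark = 0;   // text position where that * started matching
        while (t < text.length()) {
            if (p < pattern.length() && pattern.charAt(p) == '*') {
                star = p++;   // remember the star and first try matching zero characters
                mark = t;
            } else if (p < pattern.length()
                    && (pattern.charAt(p) == '?' || pattern.charAt(p) == text.charAt(t))) {
                p++;
                t++;
            } else if (star != -1) {
                p = star + 1; // backtrack: let the last * swallow one more character
                t = ++mark;
            } else {
                return false;
            }
        }
        while (p < pattern.length() && pattern.charAt(p) == '*') {
            p++;              // trailing stars may match the empty string
        }
        return p == pattern.length();
    }

    public static void main(String[] args) {
        System.out.println(matches("中国*", "中国移动"));
        System.out.println(matches("公司?", "公司D"));
        System.out.println(matches("公司?", "公司AB"));
    }
}
```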

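range query

Range query: restricts a field to values greater than or less than the given bounds. It is typically used on numeric fields; date and ip fields support it as well.

# range query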

POST /sms-logs-index/sms-logs-type/_search

{

"query": {

"range": {

"fee": {

"gte": 10, # >=

"lte": 20 # <=

}

}

}

}

@Test

public void rangeQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

SearchSourceBuilder builder = new SearchSourceBuilder();

builder.query(QueryBuilders.rangeQuery("fee").gte(10).lte(20));

request.source(builder);

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

for (SearchHit hit : hits) {

Map<String, Object> map = hit.getSourceAsMap();

System.out.println(map);

}

}

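regexp query

Regular-expression query: finds content that matches the given regular expression. prefix, fuzzy, wildcard, and regexp queries are relatively inefficient.

# regexp query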

POST /sms-logs-index/sms-logs-type/_search

{

"query": {

"regexp": {

"mobile": "138[0-9]{8}"

}

}

}

@Test

public void regexpQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

SearchSourceBuilder builder = new SearchSourceBuilder();

builder.query(QueryBuilders.regexpQuery("mobile", "138[0-9]{8}"));

request.source(builder);

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

for (SearchHit hit : hits) {

Map<String, Object> map = hit.getSourceAsMap();

System.out.println(map);

}

}

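Deep pagination with scroll

ES limits from and size: by default their sum cannot exceed 10,000 (the index.max_result_window setting).

How a from/size query works in ES:

1. The user's keywords are analyzed into terms.

2. The terms are looked up in the term dictionary, yielding a set of document ids.

3. The matching data is fetched from each shard (slow).

4. The data is sorted by score (slow).

5. Based on from, part of the retrieved data is discarded.

How scroll queries data in ES:

1. The user's keywords are analyzed into terms.

2. The terms are looked up in the term dictionary, yielding a set of document ids.

3. The document ids are stored in an ES context (think of it as ES memory).

4. The requested size of documents is fetched from ES, and the ids of the fetched documents are removed from the context.

5. To get the next page, read it directly from the ES context, repeating steps 4 and 5. (With from/size, steps 1 through 5 are run again from the start.)

Because the context is a snapshot taken by the first request, scroll is not suitable for real-time queries.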
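The difference between the two step lists above can be sketched with an in-memory toy model: from/size repeats the sort on every request and throws away the first from hits, while a scroll context sorts once and then hands out page after page. The class ScrollSketch and its methods are hypothetical and only mimic the behaviour described; they say nothing about how ES stores the context internally.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.Deque;
import java.util.List;

class ScrollSketch {
    // from/size: every request sorts everything again and discards the first `from` hits.
    static List<Integer> fromSizePage(List<Integer> fees, int from, int size) {
        List<Integer> sorted = new ArrayList<>(fees);
        sorted.sort(Comparator.reverseOrder());
        int start = Math.min(from, sorted.size());
        int end = Math.min(start + size, sorted.size());
        return new ArrayList<>(sorted.subList(start, end));
    }

    // scroll: sort once, keep the remaining hits in a context, return one page per call.
    static class ScrollContext {
        private final Deque<Integer> remaining = new ArrayDeque<>();

        ScrollContext(List<Integer> fees) {
            List<Integer> sorted = new ArrayList<>(fees);
            sorted.sort(Comparator.reverseOrder());
            remaining.addAll(sorted);
        }

        // Hits that were handed out are removed from the context.
        List<Integer> nextPage(int size) {
            List<Integer> page = new ArrayList<>();
            while (page.size() < size && !remaining.isEmpty()) {
                page.add(remaining.poll());
            }
            return page;
        }
    }

    public static void main(String[] args) {
        List<Integer> fees = Arrays.asList(20, 5, 50, 10, 30);
        System.out.println(fromSizePage(fees, 2, 2)); // second page via from/size
        ScrollContext ctx = new ScrollContext(fees);
        List<Integer> page;
        while (!(page = ctx.nextPage(2)).isEmpty()) {
            System.out.println(page);                 // pages served from the context
        }
    }
}
```

In this model, changes made to fees after the ScrollContext was created are never seen by later pages, which mirrors why scroll does not suit real-time queries.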

# scroll query; scroll=1m keeps the matched document ids in the ES context for 1 minute

POST /sms-logs-index/sms-logs-type/_search?scroll=1m

{

"query": {

"match_all": {

}

},

"size": 2,

"sort": [

{

"fee": {

"order": "desc" # descending

}

}

]

}

# Fetch the second page of the scroll

POST /_search/scroll

{

"scroll_id": "DnF1ZXJ5VGhlbkZldGNoAwAAAAAAAAVPFmlBcmZIZ3pwVEVpOUVEZGV3SmFCbmcAAAAAAAAFURZpQXJmSGd6cFRFaTlFRGRld0phQm5nAAAAAAAABVAWaUFyZkhnenBURWk5RURkZXdKYUJuZw==", # the "_scroll_id" returned by the previous scroll request

"scroll":"1m" # keep the context alive in ES for 1 minute

}

# Delete the scroll context from ES

DELETE /_search/scroll/DnF1ZXJ5VGhlbkZldGNoAwAAAAAAAAVPFmlBcmZIZ3pwVEVpOUVEZGV3SmFCbmcAAAAAAAAFURZpQXJmSGd6cFRFaTlFRGRld0phQm5nAAAAAAAABVAWaUFyZkhnenBURWk5RURkZXdKYUJuZw==

@Test

public void scrollQueryTest() throws IOException {

// Create the SearchRequest

SearchRequest request = new SearchRequest(index);

request.types(type);

// Configure the scroll with a keep-alive of 1 minute

request.scroll(TimeValue.timeValueMinutes(1L));

SearchSourceBuilder builder = new SearchSourceBuilder();

// Fetch 2 documents per page

builder.size(2);

builder.sort("fee", SortOrder.DESC);

builder.query(QueryBuilders.matchAllQuery());

request.source(builder);

// Get the returned scrollId

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

String scrollId = search.getScrollId();

System.out.println("First page:");

for (SearchHit hit : search.getHits().getHits()) {

Map<String, Object> map = hit.getSourceAsMap();

System.out.println(map);

}

SearchHit[] searchHits = null;

do {

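// Fetch each following page in a loop, keeping the context alive for 1 minute

SearchScrollRequest scrollRequest = new SearchScrollRequest(scrollId);

scrollRequest.scroll(TimeValue.timeValueMinutes(1L));

SearchResponse response = client.scroll(scrollRequest, RequestOptions.DEFAULT);

// The scroll id may change between requests, so always use the latest one

scrollId = response.getScrollId();

searchHits = response.getHits().getHits();

System.out.println("Next page:");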

for (SearchHit searchHit : searchHits) {

System.out.println(searchHit.getSourceAsMap());

}

} while (searchHits.length > 0);

// Clear the scroll context

ClearScrollRequest clearScrollRequest = new ClearScrollRequest();

clearScrollRequest.addScrollId(scrollId);

ClearScrollResponse result = client.clearScroll(clearScrollRequest, RequestOptions.DEFAULT);

System.out.println(result.isSucceeded());

}

delete-by-query

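Deletes a large number of documents matched by a query such as term or match. If the documents to delete make up most of the index, delete-by-query is not recommended, because it deletes the documents one by one.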

# delete-by-query

POST /sms-logs-index/sms-logs-type/_delete_by_query

{

"query": {

"range":{

"fee":{

"gte": 10,

"lte": 20

}

}

}

}

@Test

public void deleteByQueryTest() throws IOException {

DeleteByQueryRequest deleteByQueryRequest = new DeleteByQueryRequest(index);

deleteByQueryRequest.types(type);

// Specify the query condition

deleteByQueryRequest.setQuery(QueryBuilders.rangeQuery("fee").gte(10).lte(20));

BulkByScrollResponse response = client.deleteByQuery(deleteByQueryRequest, RequestOptions.DEFAULT);

System.out.println(response);

}

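Compound queries

bool query

A compound filter that combines several query conditions with boolean logic.

must: all conditions combined under must have to match (and).

must_not: none of the conditions under must_not may match (not).

should: conditions combined under should are or-ed together (or). Note that when the same bool query also contains must or filter clauses, should clauses are optional by default and only raise the score; set minimum_should_match to 1 to require at least one of them.

# bool query

# province is 武汉 or 北京, and the operator is not China Unicom

# smsContent contains both 中国 and 平安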

POST /sms-logs-index/sms-logs-type/_search

{

"query": {

"bool": {

"should": [

{

"term": {

"province": {

"value": "北京"

}

}

},

{

"term": {

"province": {

"value": "武汉"

}

}

}

],

"must_not": [

{

"term": {

"operatorId": {

"value": "2" # China Unicom is identified by 2

}

}

}

],

"must": [

{

"match": {

"smsContent": "中国"

}

},

{

"match": {

"smsContent": "平安"

}

}

]

}

}

}

@Test

public void boolQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

// Specify the query conditions

SearchSourceBuilder builder = new SearchSourceBuilder();

BoolQueryBuilder boolQuery = QueryBuilders.boolQuery();

// province is 武汉 or 北京, and the operator is not China Unicom

// smsContent contains both 中国 and 平安

boolQuery.should(QueryBuilders.termQuery("province", "武汉"));

boolQuery.should(QueryBuilders.termQuery("province", "北京"));

boolQuery.mustNot(QueryBuilders.termQuery("operatorId", 2));

boolQuery.must(QueryBuilders.matchQuery("smsContent", "中国"));

boolQuery.must(QueryBuilders.matchQuery("smsContent", "平安"));

builder.query(boolQuery);

request.source(builder);

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

for (SearchHit hit : search.getHits().getHits()) {

System.out.println(hit.getSourceAsMap());

}

}
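The and/or/not reading of must, should, and must_not can be sketched with plain Java predicates. This is a simplified model under stated assumptions: documents are Map<String, String> values, term is a hypothetical exact-match helper, and at least one should clause is always required (in ES that corresponds to setting minimum_should_match to 1). Scoring is ignored entirely.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

class BoolQuerySketch {
    // Exact-match condition on one field, standing in for a term query.
    static Predicate<Map<String, String>> term(String field, String value) {
        return doc -> value.equals(doc.get(field));
    }

    // must: all have to match; mustNot: none may match; should: at least one has to match.
    static Predicate<Map<String, String>> bool(
            List<Predicate<Map<String, String>>> must,
            List<Predicate<Map<String, String>>> should,
            List<Predicate<Map<String, String>>> mustNot) {
        return doc -> {
            for (Predicate<Map<String, String>> p : must) {
                if (!p.test(doc)) return false;
            }
            for (Predicate<Map<String, String>> p : mustNot) {
                if (p.test(doc)) return false;
            }
            if (should.isEmpty()) return true;
            for (Predicate<Map<String, String>> p : should) {
                if (p.test(doc)) return true;
            }
            return false;
        };
    }

    // Helper that builds a tiny document with two fields.
    static Map<String, String> doc(String province, String operatorId) {
        Map<String, String> d = new HashMap<>();
        d.put("province", province);
        d.put("operatorId", operatorId);
        return d;
    }

    public static void main(String[] args) {
        Predicate<Map<String, String>> query = bool(
                Collections.emptyList(),
                Arrays.asList(term("province", "北京"), term("province", "武汉")),
                Collections.singletonList(term("operatorId", "2")));
        System.out.println(query.test(doc("北京", "1"))); // matches a should clause, not excluded
        System.out.println(query.test(doc("北京", "2"))); // excluded by must_not
        System.out.println(query.test(doc("上海", "1"))); // matches no should clause
    }
}
```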

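boosting query

The boosting query lets us influence the _score of the results.

positive: only documents that match the positive query are returned in the result set.

negative: if a document matches positive and also matches negative, its _score is lowered.

negative_boost: the coefficient applied to such documents; it must be less than 1.0.

How the score is calculated at query time:

The more often the keyword appears in a document, the higher the score.

The shorter the matched content, the higher the score.

The search keyword is analyzed as well; the more of its terms are found in the term dictionary, the higher the score.

# boosting query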

POST /sms-logs-index/sms-logs-type/_search

{

"query":{

"boosting": {

"positive":{

# the result set to match

"match": {

"smsContent":"收货安装"

}

},

"negative": {

# documents from the positive result set that also match this have their score multiplied by negative_boost

"match": {

"smsContent":"王五"

}

},

"negative_boost": 0.5

}

}

}

@Test

public void boostingQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

SearchSourceBuilder builder = new SearchSourceBuilder();

// Specify the query conditions

BoostingQueryBuilder boostingQuery = QueryBuilders.boostingQuery(QueryBuilders.matchQuery("smsContent", "收货安装"),

QueryBuilders.matchQuery("smsContent", "王五")).negativeBoost(0.5f);

builder.query(boostingQuery);

request.source(builder);

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

for (SearchHit hit : search.getHits().getHits()) {

System.out.println(hit.getSourceAsMap());

}

}
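The effect of negative_boost on a single hit comes down to one multiplication, sketched below. The method boostedScore and its parameters are hypothetical names used for illustration; the positive score itself would come from ES.

```java
class BoostingSketch {
    // A hit keeps its positive score unless it also matches the negative query,
    // in which case the score is multiplied by negativeBoost (between 0 and 1).
    static double boostedScore(double positiveScore, boolean matchesNegative, double negativeBoost) {
        if (negativeBoost < 0.0 || negativeBoost > 1.0) {
            throw new IllegalArgumentException("negativeBoost must be between 0 and 1");
        }
        return matchesNegative ? positiveScore * negativeBoost : positiveScore;
    }

    public static void main(String[] args) {
        System.out.println(boostedScore(2.0, false, 0.5)); // unchanged
        System.out.println(boostedScore(2.0, true, 0.5));  // halved
    }
}
```

A document that matches the negative query is therefore still returned; it is only ranked lower.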

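filter query

A query computes a relevance score for each document from the query conditions and sorts by that score; the result is not cached.

A filter finds the documents that match the conditions without computing a score, and frequently used filters are cached.

# filter query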

POST /sms-logs-index/sms-logs-type/_search

{

"query": {

"bool": {

"filter": [

{

"term": {

"corpName":"公司D"

}

},

{

"range": {

"fee":{

"lte":50

}

}

}

]

}

}

}

@Test

public void filterQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

SearchSourceBuilder builder = new SearchSourceBuilder();

// Specify the query conditions

BoolQueryBuilder boolQueryBuilder = QueryBuilders.boolQuery().filter(QueryBuilders.termQuery("corpName", "公司D"))

.filter(QueryBuilders.rangeQuery("fee").lte(50));

builder.query(boolQueryBuilder);

request.source(builder);

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

for (SearchHit hit : search.getHits().getHits()) {

System.out.println(hit.getSourceAsMap());

}

}

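Highlight query

Highlighting shows the user's keywords in a special style so the user can see why a result was returned. The highlighted data is itself a field of the document, returned separately under highlight. ES provides the highlight property at the same level as query, with these parameters:

1. fragment_size: how many characters of highlighted text to return

2. pre_tags: the opening tag

3. post_tags: the closing tag

4. fields: the fields to return in highlighted form

# Highlight query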

POST /sms-logs-index/sms-logs-type/_search

{

"query": {

"match": {

"smsContent": "短信"

}

},

"highlight": {

"fields": {

"smsContent": {

}

},

"pre_tags": "<font color='red'>",

"post_tags": "</font>",

"fragment_size":10

}

}

@Test

public void highLightQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

// Specify the query conditions

SearchSourceBuilder builder = new SearchSourceBuilder();

builder.query(QueryBuilders.matchQuery("smsContent", "短信"));

HighlightBuilder highlightBuilder = new HighlightBuilder();

highlightBuilder.field("smsContent", 10)

.preTags("<font color='red'>")

.postTags("</font>");

builder.highlighter(highlightBuilder);

request.source(builder);

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

for (SearchHit hit : search.getHits().getHits()) {

System.out.println(hit.getHighlightFields().get("smsContent"));

}

}

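Aggregation queries

ES aggregations are similar to aggregate queries in MySQL, but considerably more powerful.

Aggregation syntax

POST /index/type/_search

{

# short for aggregations

"aggs": {

"aggregation name": {

"agg_type": {

"property":"value"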

}

}

}

}

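Distinct count

Distinct count, i.e. cardinality: the values of the specified field in the matched documents are de-duplicated, and the distinct values are counted. The count is approximate for high-cardinality fields.

# Distinct-count aggregation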

POST /sms-logs-index/sms-logs-type/_search

{

"aggs": {

"dedupQuery": {

# name of this aggregation

"cardinality": {

"field": "province"

}

}

}

}

@Test

public void cardinalityQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

// Specify the aggregation

SearchSourceBuilder builder = new SearchSourceBuilder();

builder.aggregation(AggregationBuilders.cardinality("dedupQuery")

.field("province"));

request.source(builder);

// Run the query

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

Cardinality dedupQuery = (Cardinality) search.getAggregations().get("dedupQuery");

System.out.println(dedupQuery.getValue());

}

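Range aggregation

Counts the documents that fall within given ranges. Range aggregations work on ordinary numeric values, on dates, and on IP addresses.

# Numeric range aggregation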

POST /sms-logs-index/sms-logs-type/_search

{

"aggs": {

"numQuery": {

"range": {

"field": "fee",

"ranges": [

{

"to": 5

},

{

"from": 5, # >=

"to": 10 # <, the upper bound itself is excluded

},

{

"from": 10

}

]

}

}

}

}

# Date range aggregation

POST /sms-logs-index/sms-logs-type/_search

{

"aggs": {

"timeQuery": {

"date_range": {

"field": "createDate",

"format": "yyyy",

"ranges": [

{

"to": 2000

},

{

"from": 2000

}

]

}

}

}

}

# IP range aggregation

POST /sms-logs-index/sms-logs-type/_search

{

"aggs": {

"ipQuery": {

"ip_range": {

"field": "ipAddr",

"ranges": [

{

"to": "192.168.1.5"

},

{

"from": "192.168.1.5"

}

]

}

}

}

}

@Test

public void rangeTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

// Specify the aggregation

SearchSourceBuilder builder = new SearchSourceBuilder();

builder.aggregation(AggregationBuilders.range("numQuery")

.field("fee")

.addUnboundedTo(5)

.addRange(5, 10)

.addUnboundedFrom(10));

request.source(builder);

// Run the query

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

Range range = search.getAggregations().get("numQuery");

for (Range.Bucket bucket : range.getBuckets()) {

System.out.println(bucket.getKeyAsString());

System.out.println(bucket.getDocCount());

}

}
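The bucket boundaries of a range aggregation (from is inclusive, to is exclusive) can be checked with a small in-memory sketch. The class RangeBucketsSketch and its count method are hypothetical; a null bound stands for an open-ended bucket.

```java
import java.util.Arrays;
import java.util.List;

class RangeBucketsSketch {
    // Counts the values in the bucket [from, to); a null bound means "unbounded".
    static long count(List<Double> values, Double from, Double to) {
        long n = 0;
        for (double v : values) {
            boolean aboveFrom = from == null || v >= from; // lower bound is inclusive
            boolean belowTo = to == null || v < to;        // upper bound is exclusive
            if (aboveFrom && belowTo) {
                n++;
            }
        }
        return n;
    }

    public static void main(String[] args) {
        List<Double> fees = Arrays.asList(3.0, 5.0, 7.0, 10.0, 20.0, 50.0);
        System.out.println(count(fees, null, 5.0)); // values below 5
        System.out.println(count(fees, 5.0, 10.0)); // 5 <= value < 10
        System.out.println(count(fees, 10.0, null)); // values from 10 upwards
    }
}
```

Because every value lands in exactly one of the three buckets, the bucket counts add up to the number of values.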

Stats aggregation

Computes the maximum, minimum, average, sum of squares, and other statistics of a field, using extended_stats.

# Stats aggregation

POST /sms-logs-index/sms-logs-type/_search

{

"aggs": {

"agg": {

"extended_stats": {

"field": "fee"

}

}

}

}

@Test

public void extendedStatsQueryTest() throws IOException {

SearchRequest request = new SearchRequest(index);

request.types(type);

// Specify the aggregation

SearchSourceBuilder builder = new SearchSourceBuilder();

builder.aggregation(AggregationBuilders.extendedStats("agg")

.field("fee"));

request.source(builder);

// Run the query

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

ExtendedStats stats = search.getAggregations().get("agg");

System.out.println(String.format("max value: %s, min value: %s", stats.getMax(), stats.getMin()));

}

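Geo search by latitude and longitude

ES provides the geo_point data type, which stores latitude and longitude.

Create the index

# Create an index with the fields name and location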

PUT /map

{

"settings": {

"number_of_shards": 5,

"number_of_replicas": 1

},

"mappings": {

"map": {

"properties": {

"name":{

"type":"text"

},

"location":{

"type":"geo_point"

}

}

}

}

}

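Geo query types

geo_distance: search by straight-line distance

geo_bounding_box: two points define a rectangle; returns all data inside the rectangle

geo_polygon: several points define a polygon; returns the data inside the polygon

# geo_distance query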

POST /map/map/_search

{

"query": {

"geo_distance": {

"location": {

# the center point

"lon":116.724066,

"lat":39.952307

},

"distance":20000, # the radius (in meters when no unit is given)

"distance_type":"arc" # the search area is a circle

}

}

}

# geo_bounding_box query

POST /map/map/_search

{

"query": {

"geo_bounding_box": {

"location": {

"top_left": {

# top-left corner

"lon":116.421358,

"lat":39.959903

},

"bottom_right": {

# bottom-right corner

"lon":116.403414,

"lat":39.924091

}

}

}

}

}

# geo_polygon query

POST /map/map/_search

{

"query": {

"geo_polygon": {

"location": {

"points": [

{

"lon":116.421358,

"lat":39.959903

},

{

"lon":116.403414,

"lat":39.924091

},

{

"lon":116.327826,

"lat":39.902406

}

]

}

}

}

}

@Test

public void geoPolygonQueryTest() throws IOException {

String index = "map";

String type = "map";

SearchRequest request = new SearchRequest(index);

request.types(type);

// Specify the query

SearchSourceBuilder builder = new SearchSourceBuilder();

builder.query(QueryBuilders.geoPolygonQuery("location", new ArrayList<GeoPoint>() {

{

add(new GeoPoint(39.959903, 116.421358));

add(new GeoPoint(39.924091, 116.403414));

add(new GeoPoint(39.902406, 116.327826));

}}));

request.source(builder);

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

for (SearchHit hit : search.getHits().getHits()) {

System.out.println(hit.getSourceAsMap());

}

}
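For geo_distance, the straight-line check can be approximated with the haversine formula on a sphere. The sketch below assumes a mean Earth radius of 6,371 km and uses hypothetical names (GeoDistanceSketch, haversineMeters, withinDistance); the distance computation inside ES may differ in detail.

```java
class GeoDistanceSketch {
    // Mean Earth radius in meters; treating the Earth as a sphere is an approximation.
    static final double EARTH_RADIUS_M = 6_371_000.0;

    // Great-circle distance between two latitude/longitude points, in meters.
    static double haversineMeters(double lat1, double lon1, double lat2, double lon2) {
        double dLat = Math.toRadians(lat2 - lat1);
        double dLon = Math.toRadians(lon2 - lon1);
        double a = Math.sin(dLat / 2) * Math.sin(dLat / 2)
                + Math.cos(Math.toRadians(lat1)) * Math.cos(Math.toRadians(lat2))
                * Math.sin(dLon / 2) * Math.sin(dLon / 2);
        return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(a));
    }

    // True if the point lies within `meters` of the center, as in a geo_distance filter.
    static boolean withinDistance(double centerLat, double centerLon,
                                  double lat, double lon, double meters) {
        return haversineMeters(centerLat, centerLon, lat, lon) <= meters;
    }

    public static void main(String[] args) {
        // Center taken from the geo_distance example, point from the polygon example.
        double d = haversineMeters(39.952307, 116.724066, 39.959903, 116.421358);
        System.out.println(d);
        System.out.println(withinDistance(39.952307, 116.724066, 39.959903, 116.421358, 20000));
    }
}
```

Note the argument order: latitude comes first here, as it does in the GeoPoint constructor used in the test above, whereas the JSON examples list lon before lat.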