`/etc/profile`

```bash
export JAVA_HOME=/opt/java/jdk-11.0.5/
export CLASSPATH=$JAVA_HOME/lib
export PATH=$PATH:$JAVA_HOME/bin
```
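After editing `/etc/profile`, run `source /etc/profile` or log in again so the variables take effect. The sketch below is one way to confirm that `JAVA_HOME` points at a JDK before moving on. `check_java_home` is a hypothetical helper and is not part of Hadoop or the JDK. It only checks that an executable `bin/java` exists.

```bash
# Hypothetical helper (not part of Hadoop or the JDK): check that a JAVA_HOME
# candidate contains an executable java launcher. Defaults to $JAVA_HOME.
check_java_home() {
  local home=${1:-$JAVA_HOME}
  if [[ -z $home ]]; then
    echo "JAVA_HOME is not set" >&2
    return 1
  fi
  if [[ ! -x "$home/bin/java" ]]; then
    echo "no executable java under $home/bin" >&2
    return 1
  fi
  echo "JAVA_HOME OK: $home"
}
```

If the check passes, `check_java_home && java -version` should then report the JDK 11 build.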

`/home/hadoop/.bashrc`

```bash
export JAVA_HOME=/opt/java/jdk-11.0.5/
export CLASSPATH=$JAVA_HOME/lib
export PATH=$PATH:$JAVA_HOME/bin

#HADOOP VARIABLES START
export HADOOP_INSTALL=/home/bigdata/hadoop/
export PATH=$PATH:$HADOOP_INSTALL/bin
export PATH=$PATH:$HADOOP_INSTALL/sbin
export HADOOP_MAPRED_HOME=$HADOOP_INSTALL
export HADOOP_COMMON_HOME=$HADOOP_INSTALL
export HADOOP_HDFS_HOME=$HADOOP_INSTALL
export YARN_HOME=$HADOOP_INSTALL
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_INSTALL/lib/native
# the native libraries (libhadoop.so) live in lib/native, not lib
export HADOOP_OPTS="-Djava.library.path=$HADOOP_INSTALL/lib/native"
#HADOOP VARIABLES END
```
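Once `~/.bashrc` is reloaded with `source ~/.bashrc`, you can check that `HADOOP_INSTALL` points at an unpacked Hadoop distribution. The sketch below uses a hypothetical helper, `check_hadoop_install`, which Hadoop itself does not require. It assumes the standard tarball layout: `bin/hdfs`, `sbin/start-dfs.sh`, `sbin/start-yarn.sh` and `etc/hadoop/`.

```bash
# Hypothetical helper: sanity-check an unpacked Hadoop tarball.
# Defaults to $HADOOP_INSTALL; returns 1 if anything expected is missing.
check_hadoop_install() {
  local dir=${1:-$HADOOP_INSTALL}
  local missing=0 f
  if [[ -z $dir ]]; then
    echo "HADOOP_INSTALL is not set" >&2
    return 1
  fi
  for f in bin/hdfs sbin/start-dfs.sh sbin/start-yarn.sh; do
    if [[ ! -x "$dir/$f" ]]; then
      echo "missing or not executable: $dir/$f" >&2
      missing=1
    fi
  done
  if [[ ! -d "$dir/etc/hadoop" ]]; then
    echo "missing config directory: $dir/etc/hadoop" >&2
    missing=1
  fi
  return $missing
}
```

If it passes, `hadoop version` should print the installed release.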

`$HADOOP_INSTALL/etc/hadoop/hadoop-env.sh`

```bash
export JAVA_HOME=/opt/java/jdk-11.0.5/
export HADOOP_HOME=/home/bigdata/hadoop/
export PATH=$PATH:$HADOOP_HOME/bin
```

`$HADOOP_INSTALL/etc/hadoop/yarn-env.sh`

```bash
export JAVA_HOME=/opt/java/jdk-11.0.5/
```
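`JAVA_HOME` is repeated in `hadoop-env.sh` and `yarn-env.sh` because the start scripts launch the daemons over ssh. Those non-interactive shells may not read `~/.bashrc`, and the daemons can then fail with a "JAVA_HOME is not set" error. To make these edits repeatable, here is a sketch of a hypothetical `upsert_export` helper (not a Hadoop tool). It replaces an existing `export NAME=...` line or appends one, and leaves commented-out defaults such as `# export JAVA_HOME=` untouched.

```bash
# Hypothetical helper: ensure FILE exports NAME=VALUE. Every existing
# uncommented "export NAME=" line is replaced; otherwise one line is appended.
upsert_export() {
  local file=$1 name=$2 value=$3
  local line="export ${name}=${value}"
  if [[ -z $file || -z $name ]]; then
    echo "usage: upsert_export FILE NAME VALUE" >&2
    return 2
  fi
  touch "$file" || return 1
  if grep -q "^[[:space:]]*export ${name}=" "$file"; then
    # Rewrite through a temp file so this does not depend on GNU "sed -i".
    # Note: awk -v expands backslash escapes, so VALUE should not contain "\".
    awk -v prefix="export ${name}=" -v line="$line" '
      { s = $0; sub(/^[ \t]+/, "", s) }
      index(s, prefix) == 1 { print line; next }
      { print }
    ' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
  else
    printf '%s\n' "$line" >> "$file"
  fi
}
```

For example, run `upsert_export "$HADOOP_INSTALL/etc/hadoop/hadoop-env.sh" JAVA_HOME /opt/java/jdk-11.0.5/`, then repeat it with `yarn-env.sh`.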

`$HADOOP_INSTALL/etc/hadoop/core-site.xml`

```xml
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/home/bigdata/hadoopTmp</value>
    <description>A base for other temporary directories.</description>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
```
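The `*-site.xml` files are plain `<configuration>` documents made of `<property>` name/value pairs, so they can also be generated from the shell. The hypothetical `hadoop_conf_xml` function below is a minimal sketch of that idea. It takes alternating names and values, escapes `&`, `<` and `>`, and prints a complete file to stdout. It does not emit `<description>` elements.

```bash
# Hypothetical helpers: escape XML special characters in a value.
xml_escape() {
  printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

# Print a Hadoop-style configuration file from NAME VALUE pairs.
hadoop_conf_xml() {
  if (( $# == 0 || $# % 2 != 0 )); then
    echo "usage: hadoop_conf_xml NAME VALUE [NAME VALUE ...]" >&2
    return 1
  fi
  printf '<?xml version="1.0"?>\n<configuration>\n'
  while (( $# > 0 )); do
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
      "$(xml_escape "$1")" "$(xml_escape "$2")"
    shift 2
  done
  printf '</configuration>\n'
}
```

For example, `hadoop_conf_xml hadoop.tmp.dir file:/home/bigdata/hadoopTmp fs.defaultFS hdfs://localhost:9000 > "$HADOOP_INSTALL/etc/hadoop/core-site.xml"` would overwrite `core-site.xml` with the two properties above. `hdfs getconf -confKey fs.defaultFS` then shows the value Hadoop actually reads.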

`$HADOOP_INSTALL/etc/hadoop/hdfs-site.xml`

```xml
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/home/bigdata/hadoopTmp/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/home/bigdata/hadoopTmp/dfs/data</value>
  </property>
</configuration>
```
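The NameNode and DataNode directories are given as `file:` URIs. `hdfs namenode -format` and the DataNode normally create them themselves, provided the user running Hadoop can write under `/home/bigdata/hadoopTmp`. Creating them beforehand makes a permission problem visible before startup. A minimal sketch with two hypothetical helpers, assuming only local `file:/...` or `file:///...` URIs:

```bash
# Hypothetical helper: turn a local "file:" URI from the config into a path.
# Handles file:/a and file:///a; URIs with a host part are not supported.
uri_to_path() {
  local uri=$1
  case $uri in
    file://*) printf '%s\n' "${uri#file://}" ;;
    file:*)   printf '%s\n' "${uri#file:}" ;;
    *)        printf '%s\n' "$uri" ;;
  esac
}

# Hypothetical helper: create each directory named by a URI argument.
prepare_dfs_dirs() {
  local uri dir
  for uri in "$@"; do
    dir=$(uri_to_path "$uri")
    mkdir -p "$dir" || return 1
    echo "ready: $dir"
  done
}
```

For this configuration: `prepare_dfs_dirs file:/home/bigdata/hadoopTmp/dfs/name file:/home/bigdata/hadoopTmp/dfs/data`.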

`$HADOOP_INSTALL/etc/hadoop/yarn-site.xml`

```xml
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <!-- the key embeds the service name, so it is mapreduce_shuffle, not mapreduce.shuffle -->
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>127.0.0.1:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>127.0.0.1:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>127.0.0.1:8031</value>
  </property>
</configuration>
```
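Every ResourceManager address above is bound to `127.0.0.1`, so only clients on the same machine can reach it. That suits this single-node setup. After YARN has been started with `start-yarn.sh` or `start-all.sh`, the hypothetical helpers below check that the three ports accept connections. They rely on bash's `/dev/tcp` redirection and the coreutils `timeout` command. This is an illustration, not a Hadoop tool.

```bash
# Hypothetical helper: succeed if something accepts TCP connections on
# HOST:PORT, using bash's /dev/tcp redirection with a 2-second limit.
port_open() {
  local host=$1 port=$2
  if [[ -z $host || -z $port ]]; then
    echo "usage: port_open HOST PORT" >&2
    return 2
  fi
  timeout 2 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}

# Hypothetical helper: report the three ResourceManager ports from yarn-site.xml.
check_rm_ports() {
  local host=${1:-127.0.0.1} port status=0
  for port in 8032 8030 8031; do
    if port_open "$host" "$port"; then
      echo "$host:$port listening"
    else
      echo "$host:$port not reachable"
      status=1
    fi
  done
  return $status
}
```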

```bash
$ hdfs namenode -format   # initialize (format) HDFS; only needed once, do not repeat it later
```
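Formatting again is not just unnecessary but harmful once data exists. Each format writes a new clusterID into the NameNode directory, while the DataNode directory keeps the old one, and the DataNode then refuses to start. A small guard, sketched here as a hypothetical `format_namenode_once` function, formats only when the NameNode directory has no `current/VERSION` file yet. Pass it the plain path behind `dfs.namenode.name.dir`, without the `file:` prefix.

```bash
# Hypothetical helper: run "hdfs namenode -format" only if NAME_DIR has not
# been formatted yet (a formatted NameNode directory holds current/VERSION).
format_namenode_once() {
  local name_dir=$1
  if [[ -z $name_dir ]]; then
    echo "usage: format_namenode_once NAME_DIR" >&2
    return 2
  fi
  if [[ -f "$name_dir/current/VERSION" ]]; then
    echo "already formatted: $name_dir (skipping)"
    return 0
  fi
  hdfs namenode -format
}
```

For this configuration: `format_namenode_once /home/bigdata/hadoopTmp/dfs/name`.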

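```bash
$ start-dfs.sh   # start HDFS only (NameNode, DataNode, SecondaryNameNode)
$ start-all.sh   # start HDFS and YARN together (start-dfs.sh plus start-yarn.sh)
```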

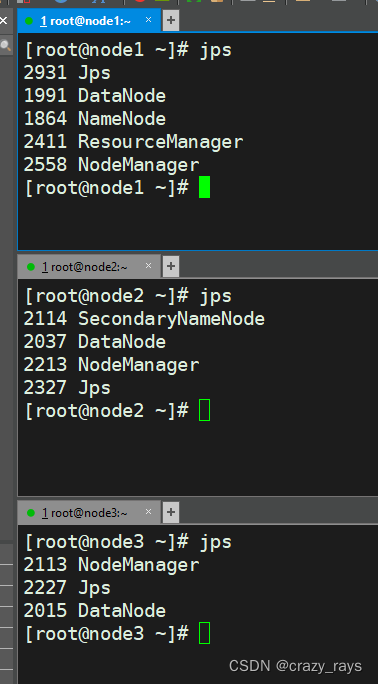

```bash
$ jps   # list running Java processes to confirm the Hadoop daemons are up
```
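With the configuration above, `jps` should list `NameNode`, `DataNode` and `SecondaryNameNode` after `start-dfs.sh`. Once YARN is running it should also list `ResourceManager` and `NodeManager`. The hypothetical `missing_daemons` filter below reads `jps` output and prints any expected daemon that is absent, which makes a daemon that died during startup easy to spot.

```bash
# Hypothetical helper: read "PID Name" lines (jps output) on stdin and print
# every daemon name given as an argument that is not running.
# Returns 1 if anything is missing.
missing_daemons() {
  local running d status=0
  running=$(awk '{ print $2 }')
  for d in "$@"; do
    if ! grep -qxF -- "$d" <<<"$running"; then
      echo "$d"
      status=1
    fi
  done
  return $status
}
```

Usage: `jps | missing_daemons NameNode DataNode SecondaryNameNode ResourceManager NodeManager`. For any daemon that is reported missing, its log under `$HADOOP_INSTALL/logs/` usually explains why.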

##### Issue: starting Hadoop as root

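When the daemons are started as root, Hadoop 3 aborts unless a user is defined for each daemon. It reports, for example, that no `HDFS_NAMENODE_USER` is defined. Add the following to `/etc/profile`: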

```bash
export HDFS_NAMENODE_USER=root
export HDFS_DATANODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
export YARN_RESOURCEMANAGER_USER=root
export YARN_NODEMANAGER_USER=root
```
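`/etc/profile` is read by login shells only, so log in again or run `source /etc/profile` before starting Hadoop. The hypothetical check below lists which of the five variables are still unset in the current shell.

```bash
# Hypothetical helper: print each root-start *_USER variable that is unset
# or empty in this shell; returns 1 if any is missing.
missing_root_user_vars() {
  local v missing=0
  for v in HDFS_NAMENODE_USER HDFS_DATANODE_USER HDFS_SECONDARYNAMENODE_USER \
           YARN_RESOURCEMANAGER_USER YARN_NODEMANAGER_USER; do
    if [[ -z ${!v:-} ]]; then
      echo "$v"
      missing=1
    fi
  done
  return $missing
}
```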