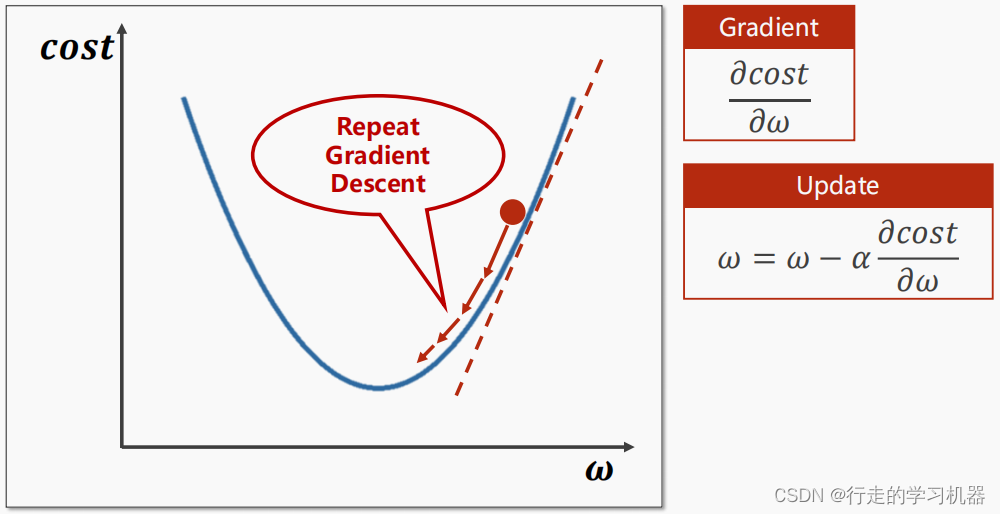

Iterative optimization

Example:

for input, target in dataset:
    optimizer.zero_grad()
    output = model(input)
    loss = loss_fn(output, target)
    loss.backward()
    optimizer.step()

import torch

import torchvision
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
from torch.utils.data import DataLoader

# CIFAR-10 test split (10,000 images), converted to tensors
dataset = torchvision.datasets.CIFAR10("../data", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=1)

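class XuZhenyu(nn.Module):
    def __init__(self, *args, **kwargs) -> None:
        super().__init__(*args, **kwargs)
        # Input: 3 x 32 x 32 CIFAR-10 images
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),   # 32 x 32 x 32
            MaxPool2d(2),                  # 32 x 16 x 16
            Conv2d(32, 32, 5, padding=2),  # 32 x 16 x 16
            MaxPool2d(2),                  # 32 x 8 x 8
            Conv2d(32, 64, 5, padding=2),  # 64 x 8 x 8
            MaxPool2d(2),                  # 64 x 4 x 4
            Flatten(),                     # 64 * 4 * 4 = 1024
            Linear(1024, 64),
            Linear(64, 10),                # one score per class
        )

    def forward(self, x):
        x = self.model1(x)
        return x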
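The `1024` passed to the first `Linear` layer follows from the layer sizes: padding of 2 with a 5x5 kernel keeps the spatial size, and each `MaxPool2d(2)` halves it, so a 32x32 image ends up as 64 channels of 4x4. As a quick sanity check, the convolutional part can be run on a dummy batch. This is only a sketch; the names `features`, `dummy_batch` and `flat_shape` are made up for illustration and are not part of the notes above.

```python
import torch
from torch import nn

# Same convolution/pooling stack as in the model, stopping at Flatten
features = nn.Sequential(
    nn.Conv2d(3, 32, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Conv2d(32, 32, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Conv2d(32, 64, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Flatten(),
)

# One fake CIFAR-10 sized image: batch of 1, 3 channels, 32 x 32 pixels
dummy_batch = torch.zeros(1, 3, 32, 32)

with torch.no_grad():
    flat = features(dummy_batch)

flat_shape = tuple(flat.shape)
print(flat_shape)  # (1, 1024)
```

If the input size or any layer changes, rerunning a check like this shows the new value to pass to `Linear`.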

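loss = nn.CrossEntropyLoss()
xzy = XuZhenyu()
optim = torch.optim.SGD(xzy.parameters(), lr=0.01)

for epoch in range(20):
    running_loss = 0.0
    for data in dataloader:
        imgs, targets = data
        outputs = xzy(imgs)
        result_loss = loss(outputs, targets)
        optim.zero_grad()        # clear gradients left over from the previous step
        result_loss.backward()   # compute gradients for every parameter
        optim.step()             # update the parameters
        # Accumulate the loss over the epoch; .item() keeps a plain float
        running_loss = running_loss + result_loss.item()
    # Total loss for this epoch
    print(running_loss)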
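Why the loop calls `zero_grad()` before every `backward()`: PyTorch adds each new gradient onto whatever is already stored in `.grad`, so without clearing, gradients from earlier batches leak into the current update. The sketch below illustrates this on a single scalar parameter. The helper `grad_after_two_backwards` is hypothetical and exists only for this demonstration; `optimizer.step()` is left out on purpose so the parameter keeps its value.

```python
import torch

def grad_after_two_backwards(call_zero_grad: bool) -> float:
    """Run backward() twice on loss = w**2 and return the gradient stored on w."""
    w = torch.tensor(3.0, requires_grad=True)
    optimizer = torch.optim.SGD([w], lr=0.01)
    for _ in range(2):
        if call_zero_grad:
            optimizer.zero_grad()  # drop the gradient from the previous pass
        loss = w ** 2              # d(loss)/dw = 2 * w = 6
        loss.backward()            # adds the new gradient onto w.grad
        # optimizer.step() is deliberately omitted so w stays at 3.0
    return w.grad.item()

grad_with_clear = grad_after_two_backwards(call_zero_grad=True)
grad_without_clear = grad_after_two_backwards(call_zero_grad=False)
print(grad_with_clear, grad_without_clear)  # 6.0 12.0
```

With clearing, the stored gradient is that of the latest pass only (6.0); without it, the two passes add up (12.0).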