3-5 Improving Model Performance: Normalization


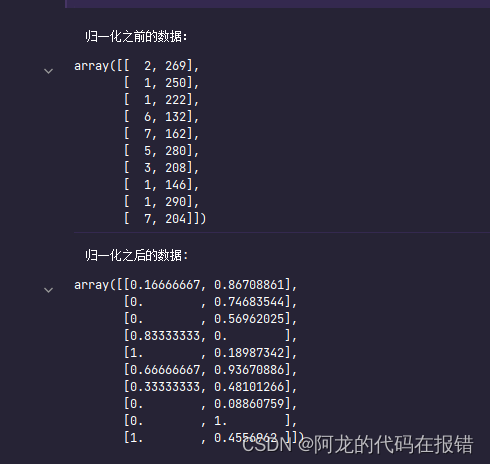

Examples

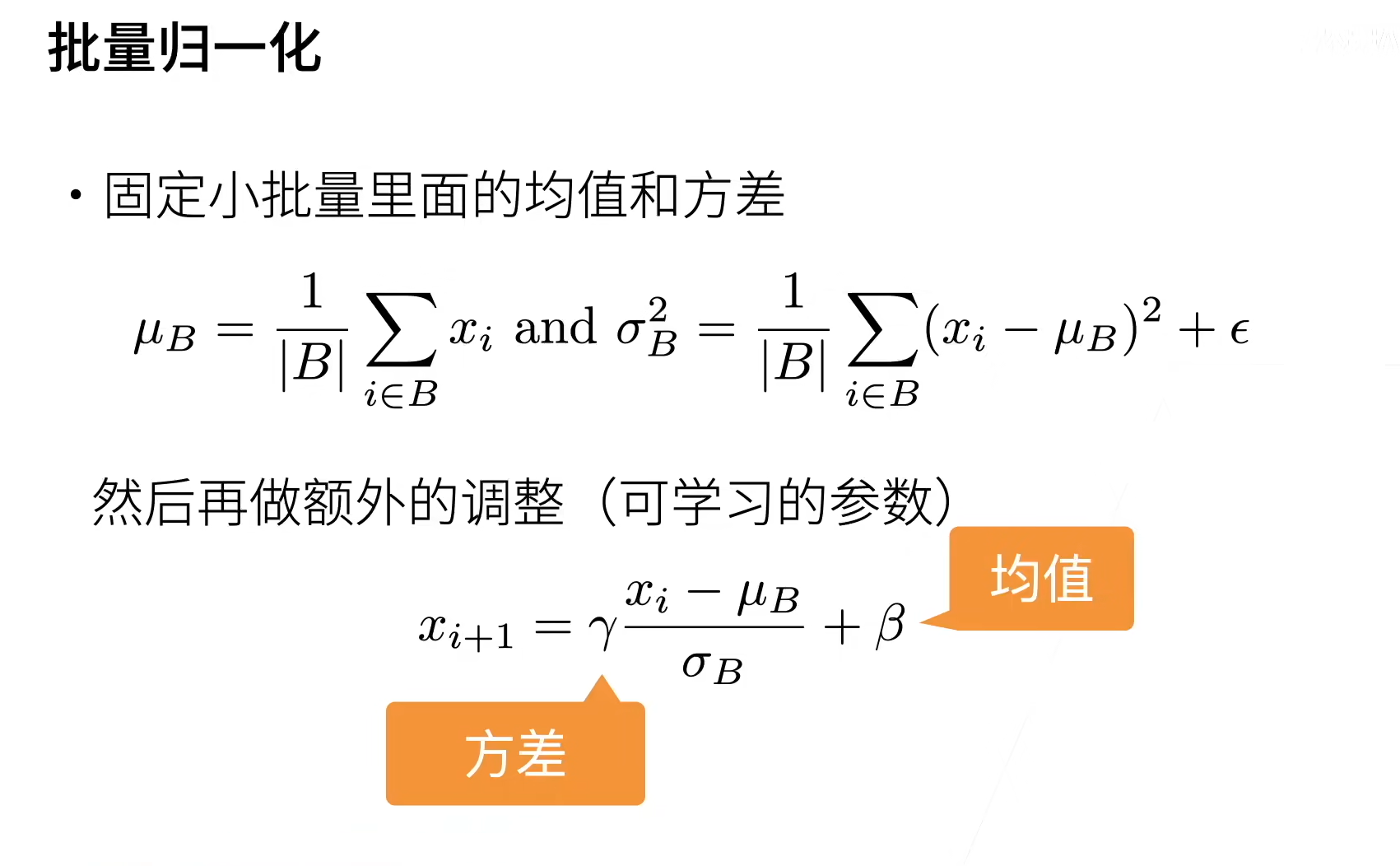

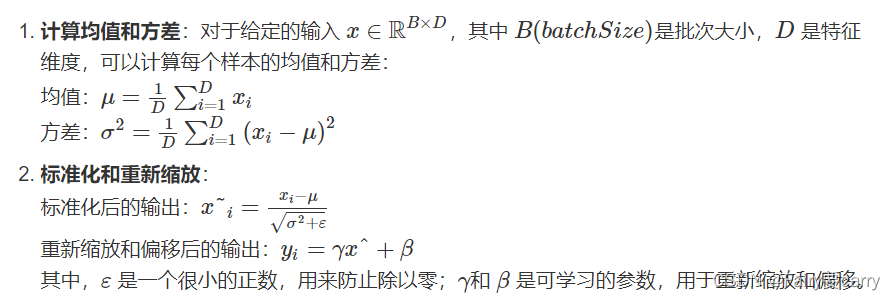

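1. Batch Normalization (BN)

Use case: commonly used in image classification. During training, BN normalizes each channel using the mean and variance computed over the current mini-batch (across the batch and spatial dimensions). This speeds up convergence and stabilizes training. At inference time it uses running averages of those statistics, collected during training, instead.

Code example: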

```python
import torch
import torch.nn as nn

# A simple CNN that uses batch normalization.
# Assumes single-channel 28x28 inputs (e.g. MNIST-style images):
# 28 -> 26 -> 24 after the two 3x3 convolutions, then 12 after 2x2 max pooling.
class SimpleCNN(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 32, kernel_size=3)
        self.bn1 = nn.BatchNorm2d(32)  # normalizes each of the 32 channels over the batch
        self.conv2 = nn.Conv2d(32, 64, kernel_size=3)
        self.bn2 = nn.BatchNorm2d(64)
        self.fc1 = nn.Linear(64 * 12 * 12, 128)
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.bn1(self.conv1(x))
        x = nn.functional.relu(x)
        x = self.bn2(self.conv2(x))
        x = nn.functional.relu(x)
        x = nn.functional.max_pool2d(x, 2)
        x = x.flatten(1)  # (N, 64, 12, 12) -> (N, 64*12*12)
        x = nn.functional.relu(self.fc1(x))
        x = self.fc2(x)
        return x

# Initialize the model
model = SimpleCNN()

# Print the model structure
print(model)
```
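The following is a minimal sketch that recomputes by hand what `nn.BatchNorm2d` does in training mode. For each channel, the mean and the biased variance are taken over the batch and spatial dimensions (N, H, W), and the result is compared with PyTorch's output. The tensor shapes are arbitrary illustration choices. `eps=1e-5` and `momentum=0.1` are PyTorch's defaults.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(8, 3, 5, 5)  # (N, C, H, W): 8 samples, 3 channels

bn = nn.BatchNorm2d(3)  # affine weight=1, bias=0 at initialization
bn.train()              # training mode: normalize with the current batch's statistics
y_torch = bn(x)

# Manual batch norm: one mean/variance per channel, over the batch and spatial dims
eps = 1e-5
mean = x.mean(dim=(0, 2, 3), keepdim=True)                 # shape (1, C, 1, 1)
var = ((x - mean) ** 2).mean(dim=(0, 2, 3), keepdim=True)  # biased variance
y_manual = (x - mean) / torch.sqrt(var + eps)
print(torch.allclose(y_torch, y_manual, atol=1e-5))

# The forward pass in training mode also updated the running mean that eval mode uses:
# running_mean = (1 - momentum) * running_mean + momentum * batch_mean, starting from zeros
expected_running_mean = 0.9 * torch.zeros(3) + 0.1 * x.mean(dim=(0, 2, 3))
print(torch.allclose(bn.running_mean, expected_running_mean, atol=1e-6))
```

Because the statistics come from the batch, the same sample can be normalized differently depending on which other samples share its batch. This is why `model.eval()` matters before validation or inference.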

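2. Layer Normalization (LN)

Use case: commonly used in text classification and in RNN/LSTM networks. For each individual sample, it normalizes across all neurons (features) of a layer, rather than across the samples in a batch. Its behavior therefore does not depend on the batch size.

Code example: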

```python
import torch
import torch.nn as nn

# A simple fully connected network that uses layer normalization
class SimpleFC(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(20, 50)
        self.ln1 = nn.LayerNorm(50)  # normalizes the 50 features of each sample
        self.fc2 = nn.Linear(50, 10)

    def forward(self, x):
        x = self.ln1(self.fc1(x))
        x = nn.functional.relu(x)
        x = self.fc2(x)
        return x

# Initialize the model
model = SimpleFC()

# Print the model structure
print(model)
```
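The claim that layer normalization works per sample, independent of the batch, can be checked directly. This minimal sketch does two things. First, it recomputes `nn.LayerNorm(50)` by hand, using the mean and biased variance over the last dimension of each sample. Second, it shows that a sample's output is the same whether it is normalized alone or inside a batch. The shapes are arbitrary illustration choices.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(4, 50)  # 4 samples, 50 features each

ln = nn.LayerNorm(50)   # weight=1, bias=0 at initialization; eps defaults to 1e-5
y_torch = ln(x)

# Manual layer norm: statistics over the features of each sample (last dim)
eps = 1e-5
mean = x.mean(dim=-1, keepdim=True)                 # shape (4, 1)
var = ((x - mean) ** 2).mean(dim=-1, keepdim=True)  # biased variance
y_manual = (x - mean) / torch.sqrt(var + eps)
print(torch.allclose(y_torch, y_manual, atol=1e-5))

# Batch independence: normalizing sample 0 on its own gives the same result
y_single = ln(x[:1])
print(torch.allclose(y_single, y_torch[:1], atol=1e-5))
```

Unlike BN, `nn.LayerNorm` keeps no running statistics, so it behaves identically in `train()` and `eval()` mode.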

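3. Instance Normalization (IN)

Use case: commonly used in generative adversarial networks (GANs) and style transfer. It normalizes each channel of each sample independently, over the spatial dimensions only. This suits cases where individual samples differ greatly from one another.

Code example: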

```python
import torch
import torch.nn as nn

# A simple CNN that uses instance normalization.
# Assumes single-channel 28x28 inputs (e.g. MNIST-style images):
# 28 -> 26 -> 24 after the two 3x3 convolutions, then 12 after 2x2 max pooling.
class SimpleINN(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 32, kernel_size=3)
        self.in1 = nn.InstanceNorm2d(32)  # normalizes each channel of each sample separately
        self.conv2 = nn.Conv2d(32, 64, kernel_size=3)
        self.in2 = nn.InstanceNorm2d(64)
        self.fc1 = nn.Linear(64 * 12 * 12, 128)
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.in1(self.conv1(x))
        x = nn.functional.relu(x)
        x = self.in2(self.conv2(x))
        x = nn.functional.relu(x)
        x = nn.functional.max_pool2d(x, 2)
        x = x.flatten(1)  # (N, 64, 12, 12) -> (N, 64*12*12)
        x = nn.functional.relu(self.fc1(x))
        x = self.fc2(x)
        return x

# Initialize the model
model = SimpleINN()

# Print the model structure
print(model)
```
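Instance normalization computes its statistics separately for every (sample, channel) pair, over the spatial dimensions only. Below is a minimal sketch that compares a hand-written version against `nn.InstanceNorm2d`. By default that layer has no learnable affine parameters and keeps no running statistics (`affine=False`, `track_running_stats=False`). The input shape is an arbitrary choice.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(2, 3, 6, 6)  # (N, C, H, W)

inorm = nn.InstanceNorm2d(3)  # defaults: affine=False, track_running_stats=False, eps=1e-5
y_torch = inorm(x)

# Manual instance norm: one mean/variance per sample and per channel, over H and W only
eps = 1e-5
mean = x.mean(dim=(2, 3), keepdim=True)                 # shape (N, C, 1, 1)
var = ((x - mean) ** 2).mean(dim=(2, 3), keepdim=True)  # biased variance
y_manual = (x - mean) / torch.sqrt(var + eps)
print(torch.allclose(y_torch, y_manual, atol=1e-5))

# Every (sample, channel) slice now has (numerically) zero mean
print(y_torch.mean(dim=(2, 3)).abs().max().item())
```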

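4. Group Normalization (GN)

Use case: commonly used when the training batch size is very small, where the per-batch statistics that BN relies on become noisy. It divides the channels into groups and normalizes the channels within each group, separately for each sample.

Code example: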

```python
import torch
import torch.nn as nn

# A simple CNN that uses group normalization.
# Assumes single-channel 28x28 inputs (e.g. MNIST-style images):
# 28 -> 26 -> 24 after the two 3x3 convolutions, then 12 after 2x2 max pooling.
class SimpleGNN(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 32, kernel_size=3)
        self.gn1 = nn.GroupNorm(4, 32)  # 32 channels split into 4 groups of 8 channels
        self.conv2 = nn.Conv2d(32, 64, kernel_size=3)
        self.gn2 = nn.GroupNorm(8, 64)  # 64 channels split into 8 groups of 8 channels
        self.fc1 = nn.Linear(64 * 12 * 12, 128)
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.gn1(self.conv1(x))
        x = nn.functional.relu(x)
        x = self.gn2(self.conv2(x))
        x = nn.functional.relu(x)
        x = nn.functional.max_pool2d(x, 2)
        x = x.flatten(1)  # (N, 64, 12, 12) -> (N, 64*12*12)
        x = nn.functional.relu(self.fc1(x))
        x = self.fc2(x)
        return x

# Initialize the model
model = SimpleGNN()

# Print the model structure
print(model)
```
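Group normalization splits the C channels of each sample into G groups and normalizes over each group's channels and spatial positions. The following minimal sketch reproduces `nn.GroupNorm(4, 32)` from the example above by reshaping to (N, G, C/G, H, W). The helper `group_norm_manual` is a hypothetical name used only for illustration. The sketch also checks two limiting cases:

- With a single group, GN normalizes over all of (C, H, W) per sample, like a LayerNorm over those dimensions.
- With one group per channel, it matches instance normalization.

The input size is arbitrary. At initialization GroupNorm's affine parameters are weight=1 and bias=0, so they do not affect the comparison.

```python
import torch
import torch.nn as nn

def group_norm_manual(x, num_groups, eps=1e-5):
    """Hypothetical helper: group norm without the affine step."""
    n, c, h, w = x.shape
    g = x.reshape(n, num_groups, c // num_groups, h, w)
    mean = g.mean(dim=(2, 3, 4), keepdim=True)                 # one mean per (sample, group)
    var = ((g - mean) ** 2).mean(dim=(2, 3, 4), keepdim=True)  # biased variance
    return ((g - mean) / torch.sqrt(var + eps)).reshape(n, c, h, w)

torch.manual_seed(0)
x = torch.randn(2, 32, 5, 5)  # batch size 2: GN statistics do not depend on it

# 32 channels in 4 groups of 8, as in SimpleGNN
gn = nn.GroupNorm(4, 32)
print(torch.allclose(gn(x), group_norm_manual(x, 4), atol=1e-5))

# One group: normalize over all of (C, H, W) per sample, like LayerNorm over those dims
ln = nn.LayerNorm([32, 5, 5], elementwise_affine=False)
print(torch.allclose(nn.GroupNorm(1, 32)(x), ln(x), atol=1e-5))

# One group per channel: same as InstanceNorm2d
inorm = nn.InstanceNorm2d(32)
print(torch.allclose(nn.GroupNorm(32, 32)(x), inorm(x), atol=1e-5))
```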

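Afterword

Each of these normalization techniques has its own characteristics and typical use cases, and choosing a suitable one can noticeably improve training behavior and model performance. To show the effect of normalization more clearly, we can pick a harder task and a deeper network. For example, we can train a deeper convolutional neural network (CNN) on the CIFAR-10 dataset and compare training and validation performance with and without normalization.

CIFAR-10 is a dataset of 32x32 color images in 10 classes (50,000 training and 10,000 test images). We train two deep CNNs, one with Batch Normalization (BN) and one without. Using the training and validation loss and accuracy printed after every epoch, we compare how fast they train and converge and what final accuracy they reach.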

```python
# -*- coding: UTF-8 -*-
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision
import torchvision.transforms as transforms
from torch.utils.data import DataLoader, Subset

# Deep CNN without batch normalization
class DeepCNN_NoBN(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 64, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(64, 128, kernel_size=3, padding=1)
        self.conv3 = nn.Conv2d(128, 256, kernel_size=3, padding=1)
        # 32x32 input halved by three 2x2 poolings: 32 -> 16 -> 8 -> 4
        self.fc1 = nn.Linear(256 * 4 * 4, 512)
        self.fc2 = nn.Linear(512, 10)

    def forward(self, x):
        x = nn.functional.relu(self.conv1(x))
        x = nn.functional.max_pool2d(x, 2)
        x = nn.functional.relu(self.conv2(x))
        x = nn.functional.max_pool2d(x, 2)
        x = nn.functional.relu(self.conv3(x))
        x = nn.functional.max_pool2d(x, 2)
        x = x.flatten(1)  # (N, 256, 4, 4) -> (N, 256*4*4)
        x = nn.functional.relu(self.fc1(x))
        x = self.fc2(x)
        return x

# The same network with batch normalization after each convolution
class DeepCNN_BN(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 64, kernel_size=3, padding=1)
        self.bn1 = nn.BatchNorm2d(64)
        self.conv2 = nn.Conv2d(64, 128, kernel_size=3, padding=1)
        self.bn2 = nn.BatchNorm2d(128)
        self.conv3 = nn.Conv2d(128, 256, kernel_size=3, padding=1)
        self.bn3 = nn.BatchNorm2d(256)
        self.fc1 = nn.Linear(256 * 4 * 4, 512)
        self.fc2 = nn.Linear(512, 10)

    def forward(self, x):
        x = nn.functional.relu(self.bn1(self.conv1(x)))
        x = nn.functional.max_pool2d(x, 2)
        x = nn.functional.relu(self.bn2(self.conv2(x)))
        x = nn.functional.max_pool2d(x, 2)
        x = nn.functional.relu(self.bn3(self.conv3(x)))
        x = nn.functional.max_pool2d(x, 2)
        x = x.flatten(1)
        x = nn.functional.relu(self.fc1(x))
        x = self.fc2(x)
        return x

# Training and validation loop
def train_and_validate(model, train_loader, val_loader, epochs=20):
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(model.parameters(), lr=0.001)
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model.to(device)

    for epoch in range(epochs):
        # Training phase: BN layers use batch statistics and update their running averages
        model.train()
        train_loss, correct = 0.0, 0
        for inputs, targets in train_loader:
            inputs, targets = inputs.to(device), targets.to(device)
            optimizer.zero_grad()
            outputs = model(inputs)
            loss = criterion(outputs, targets)
            loss.backward()
            optimizer.step()
            train_loss += loss.item()
            _, predicted = outputs.max(1)
            correct += predicted.eq(targets).sum().item()
        train_loss /= len(train_loader)
        train_accuracy = 100. * correct / len(train_loader.dataset)

        # Validation phase: BN layers use their running statistics; no gradients needed
        model.eval()
        val_loss, correct = 0.0, 0
        with torch.no_grad():
            for inputs, targets in val_loader:
                inputs, targets = inputs.to(device), targets.to(device)
                outputs = model(inputs)
                loss = criterion(outputs, targets)
                val_loss += loss.item()
                _, predicted = outputs.max(1)
                correct += predicted.eq(targets).sum().item()
        val_loss /= len(val_loader)
        val_accuracy = 100. * correct / len(val_loader.dataset)

        print(f"Epoch: {epoch + 1}, Train Loss: {train_loss:.4f}, Train Acc: {train_accuracy:.2f}%, "
              f"Val Loss: {val_loss:.4f}, Val Acc: {val_accuracy:.2f}%")

def main():
    # CIFAR-10 preprocessing
    mean, std = (0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010)
    # Training: random augmentation + normalization
    train_transform = transforms.Compose([
        transforms.RandomHorizontalFlip(),
        transforms.RandomCrop(32, padding=4),
        transforms.ToTensor(),
        transforms.Normalize(mean, std),
    ])
    # Validation/test: no random augmentation, so evaluation is deterministic
    eval_transform = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize(mean, std),
    ])

    # Two views of the same training images: augmented for training, plain for validation
    train_full = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=train_transform)
    val_full = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=eval_transform)
    test_dataset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=eval_transform)

    # Split the training set 80/20 into training and validation subsets (fixed seed)
    indices = torch.randperm(len(train_full), generator=torch.Generator().manual_seed(0)).tolist()
    train_size = int(0.8 * len(train_full))
    train_dataset = Subset(train_full, indices[:train_size])
    val_dataset = Subset(val_full, indices[train_size:])

    # Data loaders; num_workers=0 avoids multiprocessing issues
    train_loader = DataLoader(train_dataset, batch_size=128, shuffle=True, num_workers=0)
    val_loader = DataLoader(val_dataset, batch_size=128, shuffle=False, num_workers=0)
    test_loader = DataLoader(test_dataset, batch_size=128, shuffle=False, num_workers=0)  # created but not used in this comparison

    # Initialize the models
    model_no_bn = DeepCNN_NoBN()
    model_bn = DeepCNN_BN()

    print("Training model without Batch Normalization")
    train_and_validate(model_no_bn, train_loader, val_loader)

    print("\nTraining model with Batch Normalization")
    train_and_validate(model_bn, train_loader, val_loader)

if __name__ == '__main__':
    main()
```
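The script builds `test_loader` but never uses it, so the comparison stops at validation accuracy. One way to also report final test accuracy is a small evaluation helper like the hypothetical `evaluate` below, which is a sketch and not part of the original code. It switches the model to `eval()` mode. This matters for the BN model, because BatchNorm then uses its running statistics instead of the statistics of each test batch. The usage part runs on a tiny synthetic `TensorDataset` with a small stand-in `nn.Sequential` model, so it needs no CIFAR-10 download. In the script, you would call something like `evaluate(model_bn, test_loader)` after training.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

def evaluate(model, loader):
    """Hypothetical helper: returns (average batch loss, accuracy in %) on a loader."""
    device = next(model.parameters()).device  # evaluate wherever the model already lives
    criterion = nn.CrossEntropyLoss()
    model.eval()  # BatchNorm uses running statistics; Dropout would be disabled
    total_loss, correct = 0.0, 0
    with torch.no_grad():
        for inputs, targets in loader:
            inputs, targets = inputs.to(device), targets.to(device)
            outputs = model(inputs)
            total_loss += criterion(outputs, targets).item()
            correct += outputs.argmax(dim=1).eq(targets).sum().item()
    return total_loss / len(loader), 100.0 * correct / len(loader.dataset)

# Usage on synthetic data (random 3x32x32 "images", 10 classes) instead of CIFAR-10
torch.manual_seed(0)
fake_data = TensorDataset(torch.randn(64, 3, 32, 32), torch.randint(0, 10, (64,)))
fake_loader = DataLoader(fake_data, batch_size=16)
tiny_model = nn.Sequential(  # small stand-in for DeepCNN_BN
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.BatchNorm2d(8),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(8, 10),
)
loss, acc = evaluate(tiny_model, fake_loader)
print(f"Test Loss: {loss:.4f}, Test Acc: {acc:.2f}%")
```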
