import os

import random

from functools import partial

import numpy as np

import paddle

from scipy import stats

from paddlenlp.data import Pad, Tuple

from paddlenlp.datasets import load_dataset

from paddlenlp.transformers import AutoModel, AutoTokenizer

import paddle.nn as nn

import paddle.nn.functional as F

import utils

dropout = 0.2
save_dir = './checkpoints/zwqa/'
batch_size = 16
epochs = 5
max_seq_length = 64
output_emb_size = 256
dup_rate = 0.3
train_set_file = './datasets/data/train.csv'
device = 'gpu'
seed = 1000
model_name_or_path = 'rocketqa-zh-dureader-query-encoder'
margin = 0.1
scale = 10.

paddle.set_device(device)

def set_seed(seed):
    # Seed the Python, NumPy, and Paddle random number generators.
    random.seed(seed)
    np.random.seed(seed)
    paddle.seed(seed)

set_seed(seed)

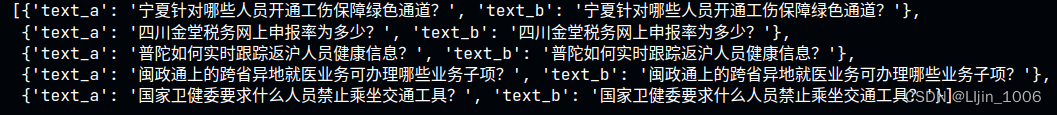

def read_simcse_text(data_path):
    # Yield every line twice, as both text_a and text_b.
    with open(data_path, "r", encoding="utf-8") as f:
        for line in f:
            data = line.rstrip()
            yield {"text_a": data, "text_b": data}

train_ds = load_dataset(read_simcse_text, data_path=train_set_file, lazy=False)

train_ds[:5]

pretrained_model = AutoModel.from_pretrained(
    model_name_or_path,
    hidden_dropout_prob=dropout,
    attention_probs_dropout_prob=dropout,
)

tokenizer = AutoTokenizer.from_pretrained(model_name_or_path)

def convert_example(example, tokenizer, max_seq_length=512, do_evaluate=False):
    result = []
    for key, text in example.items():
        if "label" in key:
            # Evaluation data: pass the label through unchanged.
            result += [example["label"]]
        else:
            # Training data: encode the text into token ids and segment ids.
            encoded_inputs = tokenizer(text=text, max_length=max_seq_length, truncation=True)
            input_ids = encoded_inputs["input_ids"]
            token_type_ids = encoded_inputs["token_type_ids"]
            result += [input_ids, token_type_ids]
    return result

trans_func = partial(convert_example, tokenizer=tokenizer, max_seq_length=max_seq_length)

trans_func(train_ds[0])  # start and end special tokens are added around the text

batchify_fn = lambda samples, fn=Tuple(
    Pad(axis=0, pad_val=tokenizer.pad_token_id, dtype="int64"),  # query_input
    Pad(axis=0, pad_val=tokenizer.pad_token_type_id, dtype="int64"),  # query_segment
    Pad(axis=0, pad_val=tokenizer.pad_token_id, dtype="int64"),  # title_input
    Pad(axis=0, pad_val=tokenizer.pad_token_type_id, dtype="int64"),  # title_segment
): fn(samples)

def create_dataloader(dataset, mode="train", batch_size=1, batchify_fn=None, trans_fn=None):
    if trans_fn:
        dataset = dataset.map(trans_fn)
    # Shuffle only while training.
    shuffle = mode == "train"
    if mode == "train":
        batch_sampler = paddle.io.DistributedBatchSampler(dataset, batch_size=batch_size, shuffle=shuffle)
    else:
        batch_sampler = paddle.io.BatchSampler(dataset, batch_size=batch_size, shuffle=shuffle)
    return paddle.io.DataLoader(
        dataset=dataset, batch_sampler=batch_sampler, collate_fn=batchify_fn, return_list=True
    )

# Build the training DataLoader.
train_data_loader = create_dataloader(
    train_ds,
    mode='train',
    batch_size=batch_size,
    batchify_fn=batchify_fn,
    trans_fn=trans_func,
)

# Print the first batch only, as a sanity check.
for i in train_data_loader:
    print(i)
    break

dropout=0.

dropout if dropout is not None else 0.1
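
# A minimal sketch, not part of the original pipeline, of why the fallback
# expression above tests `is not None` instead of relying on truthiness.
# `resolve_dropout` and `resolve_dropout_truthy` are hypothetical helpers
# introduced only for this illustration.
def resolve_dropout(rate=None, default=0.1):
    # Only a missing value (None) falls back to the default; 0.0 is kept.
    return rate if rate is not None else default


def resolve_dropout_truthy(rate=None, default=0.1):
    # A truthiness test treats 0.0 as missing and silently replaces it.
    return rate if rate else default


print(resolve_dropout(0.0))         # 0.0
print(resolve_dropout_truthy(0.0))  # 0.1
print(resolve_dropout(None))        # 0.1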

class SimCSE(nn.Layer):
    def __init__(self, pretrained_model, dropout=None, margin=0.0, scale=20, output_emb_size=None):
        super().__init__()
        self.ptm = pretrained_model  # pretrained encoder
        # `dropout is not None` is not the same as the truthiness of `dropout`:
        # with dropout=0., `dropout` is falsy but `dropout is not None` is True.
        self.dropout = nn.Dropout(dropout if dropout is not None else 0.1)
        self.output_emb_size = output_emb_size
        # If output_emb_size > 0, add a linear projection to that size.
        # The None check keeps the default argument from raising a TypeError.
        if output_emb_size is not None and output_emb_size > 0:
            weight_attr = paddle.ParamAttr(initializer=paddle.nn.initializer.TruncatedNormal(std=0.02))
            self.emb_reduce_linear = paddle.nn.Linear(768, output_emb_size, weight_attr=weight_attr)
        self.margin = margin
        self.scale = scale

    @paddle.jit.to_static(
        input_spec=[
            paddle.static.InputSpec(shape=[None, None], dtype="int64"),
            paddle.static.InputSpec(shape=[None, None], dtype="int64"),
        ]
    )
    def get_pooled_embedding(
        self, input_ids, token_type_ids=None, position_ids=None, attention_mask=None, with_pooler=True
    ):
        # Note: cls_embedding is the pooled embedding with tanh activation.
        sequence_output, cls_embedding = self.ptm(input_ids, token_type_ids, position_ids, attention_mask)
        if with_pooler is False:  # if the pooler output is not used, take the [CLS] output as the pooled output