Docker Swarm Cluster Management

Resource List

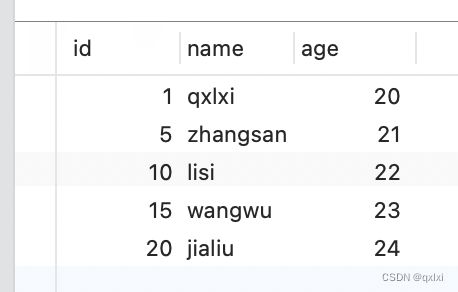

| OS | Spec | Hostname | IP | Required Software |
|---|---|---|---|---|
| CentOS 7.9 | 2 CPU / 4 GB RAM | manager | 192.168.93.101 | Docker 26.1.4 |
| CentOS 7.9 | 2 CPU / 4 GB RAM | worker01 | 192.168.93.102 | Docker 26.1.4 |
| CentOS 7.9 | 2 CPU / 4 GB RAM | worker02 | 192.168.93.103 | Docker 26.1.4 |

Basic Environment Setup

- Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

- Disable SELinux (the kernel security mechanism)

setenforce 0

sed -i "s/^SELINUX=.*/SELINUX=disabled/g" /etc/selinux/config

- Set the hostname (run each command on its corresponding host)

# On manager
hostnamectl set-hostname manager

# On worker01
hostnamectl set-hostname worker01

# On worker02
hostnamectl set-hostname worker02

- Map hostnames to IP addresses in /etc/hosts (on all hosts)

cat >> /etc/hosts << EOF

192.168.93.101 manager

192.168.93.102 worker01

192.168.93.103 worker02

EOF
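Running `cat >> /etc/hosts` a second time appends duplicate lines. The sketch below adds each mapping only if that exact line is missing. Taking the target file as a parameter (defaulting to /etc/hosts) is an assumption made so the function can be tried on a scratch file first.

```shell
#!/usr/bin/env bash
# Idempotently append the cluster's "IP hostname" mappings to a hosts file.
# Assumption: the file path is passed as $1 (default /etc/hosts).
add_swarm_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry
  for entry in "192.168.93.101 manager" \
               "192.168.93.102 worker01" \
               "192.168.93.103 worker02"; do
    # -q quiet, -x whole-line match, -F fixed string (dots are not regex wildcards)
    if ! grep -qxF -- "$entry" "$hosts_file" 2>/dev/null; then
      printf '%s\n' "$entry" >> "$hosts_file"
    fi
  done
}
```

Run `add_swarm_hosts` as root on every host. Calling it again leaves the file unchanged.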

一、安装Docker

- Install and configure Docker on all hosts. The manager host is used for the demonstration below

[root@manager ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@manager ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@manager ~]# yum clean all && yum makecache

[root@manager ~]# yum -y install docker-ce docker-ce-cli containerd.io

# Start Docker and enable it at boot

[root@manager ~]# systemctl start docker

[root@manager ~]# systemctl enable docker

# Check the Docker version

[root@manager ~]# docker -v

Docker version 26.1.4, build 5650f9b

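# Configure a Docker registry mirror (accelerator). Use > rather than >> so an existing daemon.json is replaced instead of having a second JSON object appended to it

[root@manager ~]# cd /etc/docker/

[root@manager docker]# cat > daemon.json << EOF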

{

"registry-mirrors": ["https://8xpk5wnt.mirror.aliyuncs.com"]

}

EOF

[root@manager docker]# systemctl restart docker
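The same configuration step can be wrapped in a small function and reused on every node. It is a minimal sketch. It overwrites daemon.json with only the `registry-mirrors` key, so merge by hand if the file already holds other settings. The directory and mirror parameters are assumptions; their defaults are the path and URL used above.

```shell
#!/usr/bin/env bash
# Write a daemon.json containing a single registry mirror.
# ">" overwrites the file, so repeated runs still leave valid JSON.
# Assumption: $1 = config dir (default /etc/docker), $2 = mirror URL.
write_daemon_json() {
  local dir="${1:-/etc/docker}"
  local mirror="${2:-https://8xpk5wnt.mirror.aliyuncs.com}"
  mkdir -p "$dir"
  cat > "$dir/daemon.json" <<EOF
{
  "registry-mirrors": ["$mirror"]
}
EOF
}
# Usage on each node (as root):
#   write_daemon_json && systemctl restart docker
```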

2. Deploy a Docker Swarm Cluster

- Once Docker is installed, you can create a Docker Swarm cluster directly with the docker swarm commands (Swarm mode is built into Docker)

2.1 Create the Docker Swarm Cluster

- Command format: docker swarm init --advertise-addr <manager-IP>

- Here, --advertise-addr specifies the IP address of the manager node in the Swarm cluster. Worker nodes that join later must be able to reach this address.

# On the manager host, run the following command to create a Swarm cluster

[root@manager ~]# docker swarm init --advertise-addr 192.168.93.101

Swarm initialized: current node (aa0o6ufowgxxn20choza7nsba) is now a manager.

To add a worker to this swarm, run the following command:

# Run the following command on a node to add it to the cluster as a worker

docker swarm join --token SWMTKN-1-52hwnksbwzair59tbw2canzs501wpbu6dcu2med0cve8vyzcxu-3cefz89okcgwr24e82fd33mla 192.168.93.101:2377

# To add a manager node to the cluster, follow the instruction below

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

# Re-display the join instructions for worker nodes

[root@manager ~]# docker swarm join-token worker

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-52hwnksbwzair59tbw2canzs501wpbu6dcu2med0cve8vyzcxu-3cefz89okcgwr24e82fd33mla 192.168.93.101:2377

# Re-display the join instructions for manager nodes (note that the token differs from the worker token)

[root@manager ~]# docker swarm join-token manager

To add a manager to this swarm, run the following command:

docker swarm join --token SWMTKN-1-52hwnksbwzair59tbw2canzs501wpbu6dcu2med0cve8vyzcxu-e4x6v2n0mkjxq40y23awx5iim 192.168.93.101:2377
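Instead of copying the join command by hand, you can build it from the token alone. `docker swarm join-token -q <role>` prints only the token and must run on a manager. This is a sketch. The manager address parameter is an assumption, and 2377 is Swarm's default management port, as seen in the output above.

```shell
#!/usr/bin/env bash
# Print a complete "docker swarm join" command for the given role (worker|manager).
# Assumption: $2 is the manager address (default 192.168.93.101); port 2377 is the default.
print_join_cmd() {
  local role="${1:-worker}" manager_addr="${2:-192.168.93.101}"
  local token
  token=$(docker swarm join-token -q "$role") || return 1
  printf 'docker swarm join --token %s %s:2377\n' "$token" "$manager_addr"
}
```

If a token leaks, for example in a published article, `docker swarm join-token --rotate worker` issues a new worker token and invalidates the old one for future joins.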

2.2 Add Worker Nodes to the Swarm Cluster

- On the two worker nodes, worker01 and worker02, run the following command to join them to the Swarm cluster

# Note: every cluster has its own join token. Use the worker token printed by your own manager above

[root@worker01 ~]# docker swarm join --token SWMTKN-1-52hwnksbwzair59tbw2canzs501wpbu6dcu2med0cve8vyzcxu-3cefz89okcgwr24e82fd33mla 192.168.93.101:2377

This node joined a swarm as a worker.

[root@worker02 ~]# docker swarm join --token SWMTKN-1-52hwnksbwzair59tbw2canzs501wpbu6dcu2med0cve8vyzcxu-3cefz89okcgwr24e82fd33mla 192.168.93.101:2377

This node joined a swarm as a worker.

- After the commands finish, run docker info on the manager node to view the Swarm cluster information

[root@manager ~]# docker info

Client: Docker Engine - Community

Version: 26.1.4

Context: default

Debug Mode: false

Plugins:

buildx: Docker Buildx (Docker Inc.)

Version: v0.14.1

Path: /usr/libexec/docker/cli-plugins/docker-buildx

compose: Docker Compose (Docker Inc.)

Version: v2.27.1

Path: /usr/libexec/docker/cli-plugins/docker-compose

Server:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 26.1.4

Storage Driver: overlay2

Backing Filesystem: xfs

Supports d_type: true

Using metacopy: false

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

Cgroup Driver: cgroupfs

Cgroup Version: 1

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local splunk syslog

##################################################################

# Swarm is currently active

Swarm: active

# ID of this (manager) node

NodeID: aa0o6ufowgxxn20choza7nsba

# Is this a manager node? Yes

Is Manager: true

# Cluster ID

ClusterID: bmvz1t6x9kcidk1jsnuwdh3q2

# One manager node

Managers: 1

# Three nodes in total (by default the manager node can also run tasks like a worker)

Nodes: 3

##################################################################

Data Path Port: 4789

Orchestration:

Task History Retention Limit: 5

Raft:

Snapshot Interval: 10000

Number of Old Snapshots to Retain: 0

Heartbeat Tick: 1

Election Tick: 10

Dispatcher:

Heartbeat Period: 5 seconds

CA Configuration:

Expiry Duration: 3 months

Force Rotate: 0

Autolock Managers: false

Root Rotation In Progress: false

Node Address: 192.168.93.101

Manager Addresses:

192.168.93.101:2377

Runtimes: io.containerd.runc.v2 runc

Default Runtime: runc

Init Binary: docker-init

containerd version: d2d58213f83a351ca8f528a95fbd145f5654e957

runc version: v1.1.12-0-g51d5e94

init version: de40ad0

Security Options:

seccomp

Profile: builtin

Kernel Version: 3.10.0-1160.71.1.el7.x86_64

Operating System: CentOS Linux 7 (Core)

OSType: linux

Architecture: x86_64

CPUs: 2

Total Memory: 3.682GiB

Name: manager

ID: 5443edc1-fd3f-4a36-a647-ea0a5424e886

Docker Root Dir: /var/lib/docker

Debug Mode: false

Experimental: false

Insecure Registries:

127.0.0.0/8

Registry Mirrors:

https://8xpk5wnt.mirror.aliyuncs.com/

Live Restore Enabled: false
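When you only need the Swarm part of this output, `docker info --format` with a Go template is shorter. The field names below correspond to the Swarm section shown above. This is a minimal sketch. On the manager above it would be expected to report an active state, manager=true, 3 nodes and 1 manager.

```shell
#!/usr/bin/env bash
# Print a one-line Swarm summary using docker info's Go-template output.
swarm_summary() {
  docker info --format 'state={{.Swarm.LocalNodeState}} manager={{.Swarm.ControlAvailable}} nodes={{.Swarm.Nodes}} managers={{.Swarm.Managers}}'
}
```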

2.3 View the Detailed Status of Swarm Nodes

- The docker node ls command shows the detailed status of every node in the Swarm cluster

[root@manager ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

aa0o6ufowgxxn20choza7nsba * manager Ready Active Leader 26.1.4

t20vr5utpq9s72ukrnzihdj88 worker01 Ready Active 26.1.4

tncaekyw2synma931269f3pzn worker02 Ready Active 26.1.4

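# In the output above, AVAILABILITY indicates whether the Swarm scheduler may assign tasks to a node. It has three possible values:

Active: the scheduler can assign tasks to the node

Pause: the scheduler assigns no new tasks to the node, but its existing tasks keep running

Drain: the scheduler assigns no new tasks to the node, stops its existing tasks, and reschedules them onto other available nodes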
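To spot nodes that are not accepting work, you can filter `docker node ls` output on the AVAILABILITY column. The sketch below uses the `--format` placeholders `.Hostname` and `.Availability` and must run on a manager. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# List nodes whose AVAILABILITY is Pause or Drain (anything other than Active).
non_active_nodes() {
  docker node ls --format '{{.Hostname}} {{.Availability}}' |
    awk '$2 != "Active" { print $1 ": " $2 }'
}
```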

- To view the status of a single node, run the following command. It works only on a manager node

# View details of the manager node

[root@manager ~]# docker node inspect manager

[

{

"ID": "aa0o6ufowgxxn20choza7nsba",

"Version": {

"Index": 9

},

"CreatedAt": "2024-06-10T00:24:09.721501544Z",

"UpdatedAt": "2024-06-10T00:24:10.247087948Z",

"Spec": {

"Labels": {},

"Role": "manager",

"Availability": "active"

},

"Description": {

"Hostname": "manager",

"Platform": {

"Architecture": "x86_64",

"OS": "linux"

},

"Resources": {

"NanoCPUs": 2000000000,

"MemoryBytes": 3953922048

},

"Engine": {

"EngineVersion": "26.1.4",

"Plugins": [

{

"Type": "Log",

"Name": "awslogs"

},

{

"Type": "Log",

"Name": "fluentd"

},

{

"Type": "Log",

"Name": "gcplogs"

},

{

"Type": "Log",

"Name": "gelf"

},

{

"Type": "Log",

"Name": "journald"

},

{

"Type": "Log",

"Name": "json-file"

},

{

"Type": "Log",

"Name": "local"

},

{

"Type": "Log",

"Name": "splunk"

},

{

"Type": "Log",

"Name": "syslog"

},

{

"Type": "Network",

"Name": "bridge"

},

{

"Type": "Network",

"Name": "host"

},

{

"Type": "Network",

"Name": "ipvlan"

},

{

"Type": "Network",

"Name": "macvlan"

},

{

"Type": "Network",

"Name": "null"

},

{

"Type": "Network",

"Name": "overlay"

},

{

"Type": "Volume",

"Name": "local"

}

]

},

"TLSInfo": {

"TrustRoot": "-----BEGIN CERTIFICATE-----\nMIIBazCCARCgAwIBAgIUTybzspUWdYbOAJRfnyDau2d5050wCgYIKoZIzj0EAwIw\nEzERMA8GA1UEAxMIc3dhcm0tY2EwHhcNMjQwNjEwMDAxOTAwWhcNNDQwNjA1MDAx\nOTAwWjATMREwDwYDVQQDEwhzd2FybS1jYTBZMBMGByqGSM49AgEGCCqGSM49AwEH\nA0IABMkANStcqGO2+B2FOm5mLk1T55oj2zBIZTtoYLCqtRljcjKMHcu8f2QgI3Nu\nrO5WJ+lkCMEd5Mtaqbz5dCOuJ+mjQjBAMA4GA1UdDwEB/wQEAwIBBjAPBgNVHRMB\nAf8EBTADAQH/MB0GA1UdDgQWBBTiicu9VqQiX/OQxmwOygMlaQ5tbDAKBggqhkjO\nPQQDAgNJADBGAiEA4G2vH7RU3qL9aFkVjn5qVvXRPKGo5EQEPsNTObYjDN0CIQD8\ncH9CxBcO+gOk1N2K/iSJsPHnN2n9qWRIm1HXd1fGOA==\n-----END CERTIFICATE-----\n",

"CertIssuerSubject": "MBMxETAPBgNVBAMTCHN3YXJtLWNh",

"CertIssuerPublicKey": "MFkwEwYHKoZIzj0CAQYIKoZIzj0DAQcDQgAEyQA1K1yoY7b4HYU6bmYuTVPnmiPbMEhlO2hgsKq1GWNyMowdy7x/ZCAjc26s7lYn6WQIwR3ky1qpvPl0I64n6Q=="

}

},

"Status": {

"State": "ready",

"Addr": "192.168.93.101"

},

"ManagerStatus": {

"Leader": true,

"Reachability": "reachable",

"Addr": "192.168.93.101:2377"

}

}

]

# View details of the worker01 node

[root@manager ~]# docker node inspect worker01

# View details of the worker02 node

[root@manager ~]# docker node inspect worker02

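3. Docker Swarm Management

3.1 Scenario Overview

- In an enterprise, managing a Docker Swarm cluster matters more than installing it. The company requires its cloud engineers to handle day-to-day Docker Swarm administration, including but not limited to node management, service management, network management, and volume management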

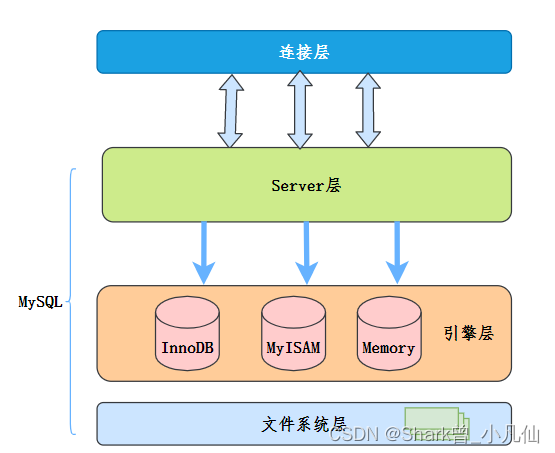

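3.2 Nodes in Docker Swarm

- A Docker host can initialize a new Swarm cluster or join an existing one. A Docker host participating in this way is called a node of the Swarm cluster

Nodes in a Swarm cluster are either manager nodes or worker nodes

Manager nodes manage the Swarm cluster: they perform orchestration and cluster management and keep the swarm in its desired state. If a cluster has several manager nodes, they automatically elect a leader that carries out orchestration

Worker nodes execute tasks: manager nodes dispatch a service's tasks to worker nodes, which run them. By default, manager nodes also act as worker nodes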

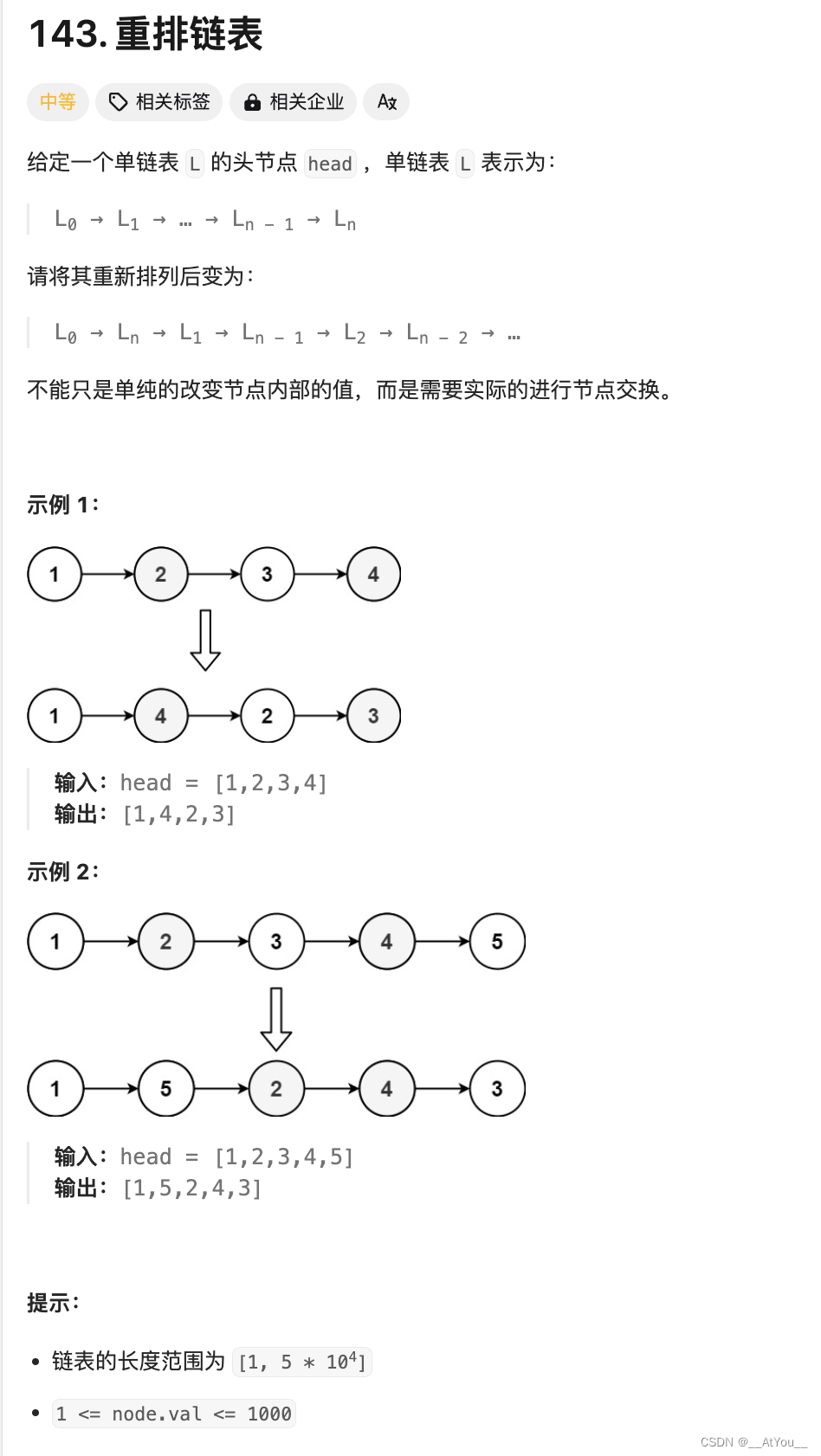

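3.3 Services and Tasks

A task is the smallest scheduling unit in a Swarm cluster and corresponds to a single container

A service is a set of tasks and defines the tasks' properties. A service runs in one of two modes:

- Replicated service: runs a specified number of tasks, distributed across the nodes according to scheduling rules

- Global service: runs exactly one task on every available node

The mode is chosen with the --mode option of docker service create

Services must be deployed from a manager node. The figure below shows the relationship among Service, Task, and Container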
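The two modes can be compared side by side with a short sketch. `--mode` defaults to `replicated`. A `global` service runs one task on every node eligible for work, so nodes in Drain are skipped. The service names `web-replicated` and `web-global` are hypothetical.

```shell
#!/usr/bin/env bash
# Create the same nginx image once as a replicated and once as a global service.
# Run on a manager node. Service names are illustrative only.
create_mode_examples() {
  docker service create --mode replicated --replicas 2 --name web-replicated nginx
  docker service create --mode global --name web-global nginx
}
```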

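4. Docker Swarm Node Management

4.1 Changing Node Availability

Swarm supports a group of manager nodes to provide high availability (HA), and keeping state consistent among these managers is essential. As mentioned above, **a node's AVAILABILITY has three states: Active, Pause, and Drain.** To change a node, set its AVAILABILITY to the corresponding value with the Docker CLI. Common changes include:

- Make a manager node perform management duties only

- Take a node down for maintenance by setting its AVAILABILITY to Drain

- Pause a node so that it receives no new tasks

- Restore an unavailable or paused node

# For example, set the manager node's AVAILABILITY to Drain (accept no tasks) so that it performs management duties only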

[root@manager ~]# docker node update --availability drain manager

manager

[root@manager ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

############################################################################################################

aa0o6ufowgxxn20choza7nsba * manager Ready Drain Leader 26.1.4

############################################################################################################

t20vr5utpq9s72ukrnzihdj88 worker01 Ready Active 26.1.4

tncaekyw2synma931269f3pzn worker02 Ready Active 26.1.4

# The manager node can no longer be assigned new tasks, so it runs no service containers and acts purely as a manager
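The drain-then-restore maintenance cycle described above can be wrapped in one helper. It is a sketch with a hypothetical function name. Setting a node back to Active does not move existing tasks back to it automatically. New or rescheduled tasks can land there, and `docker service update --force <service>` redistributes a service's tasks.

```shell
#!/usr/bin/env bash
# Start or end maintenance on a node (run on a manager).
# start -> Drain (tasks are moved elsewhere); end -> Active (node may receive tasks again).
node_maintenance() {
  local action="$1" node="$2"
  case "$action" in
    start) docker node update --availability drain "$node" ;;
    end)   docker node update --availability active "$node" ;;
    *)     echo "usage: node_maintenance start|end <node>" >&2; return 2 ;;
  esac
}
```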

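4.2 Adding Label Metadata

- In production, node hardware may differ: some nodes suit CPU-intensive applications, others I/O-intensive ones. Swarm lets you attach label metadata to each node and, based on those labels, schedule a service onto a chosen group of nodes

The command format for adding a label is:

- docker node update --label-add <key>[=<value>] <node>

# Example 1: worker01 is located in the data center named GM-IDC-01, so add the label "GM-IDC-01" to worker01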

[root@manager ~]# docker node update --label-add GM-IDC-01 worker01

worker01

# Check whether the label was added to worker01

[root@manager ~]# docker node inspect worker01

[

{

"ID": "t20vr5utpq9s72ukrnzihdj88",

"Version": {

"Index": 22

},

"CreatedAt": "2024-06-10T00:31:04.564510894Z",

"UpdatedAt": "2024-06-10T01:08:23.261990244Z",

"Spec": {

"Labels": {

"GM-IDC-01": ""

},

## Remaining output omitted
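A label only matters once a service uses it in a placement constraint. The sketch below uses a key=value label so the constraint reads naturally. The label key `idc` and the service name `web-idc` are hypothetical. The walkthrough above used the bare key GM-IDC-01, whose value is the empty string.

```shell
#!/usr/bin/env bash
# Label a node with idc=<name>, then create a service that may only run on nodes
# carrying that label. Key "idc" and service "web-idc" are illustrative assumptions.
pin_service_to_idc() {
  local node="$1" idc="$2"
  docker node update --label-add "idc=$idc" "$node"
  docker service create --name web-idc --replicas 2 \
    --constraint "node.labels.idc==$idc" nginx
}
```

With `pin_service_to_idc worker01 GM-IDC-01`, only worker01 matches, so both replicas would be expected to run there.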

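4.3 Promoting and Demoting Nodes

As mentioned earlier, nodes in a Swarm cluster are either managers or workers. In production, you can change a node's role as needed. Common operations:

- Worker to manager: promotion

- Manager to worker: demotion

# Example 2: promote both worker01 and worker02 to manager nodes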

[root@manager ~]# docker node promote worker01 worker02

Node worker01 promoted to a manager in the swarm.

Node worker02 promoted to a manager in the swarm.

# The MANAGER STATUS column of the two former worker nodes now shows Reachable

[root@manager ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

aa0o6ufowgxxn20choza7nsba * manager Ready Drain Leader 26.1.4

t20vr5utpq9s72ukrnzihdj88 worker01 Ready Active Reachable 26.1.4

tncaekyw2synma931269f3pzn worker02 Ready Active Reachable 26.1.4

# Example 3: demote the previously promoted worker01 and worker02 with the following command

[root@manager ~]# docker node demote worker01 worker02

Manager worker01 demoted in the swarm.

Manager worker02 demoted in the swarm.

# After demotion, the MANAGER STATUS column of the two nodes is empty again

[root@manager ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

aa0o6ufowgxxn20choza7nsba * manager Ready Drain Leader 26.1.4

t20vr5utpq9s72ukrnzihdj88 worker01 Ready Active 26.1.4

tncaekyw2synma931269f3pzn worker02 Ready Active 26.1.4
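Promotion matters because managers use Raft, which needs a majority, floor(n/2)+1, of managers reachable. This sketch counts managers with the `role` filter and prints the resulting quorum. With the three managers of Example 2, one manager may fail. With the single manager used elsewhere in this article, none may. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Show the manager count, the Raft quorum size, and how many managers may fail.
manager_quorum() {
  local n
  n=$(docker node ls --filter role=manager -q | wc -l)
  n=$((n + 0))   # normalize any whitespace padding from wc
  echo "managers=$n quorum=$(( n / 2 + 1 )) tolerated_failures=$(( (n - 1) / 2 ))"
}
```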

4.4 Leaving the Swarm Cluster

- For a manager node to leave the Swarm cluster, run docker swarm leave on it:

[root@manager ~]# docker swarm leave

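# The error below is expected: this node is the swarm's only manager, and removing the last manager erases all swarm state, so Docker refuses without confirmation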

Error response from daemon: You are attempting to leave the swarm on a node that is participating as a manager. Removing the last manager erases all current state of the swarm. Use `--force` to ignore this message.

# To leave anyway, add the --force option

[root@manager ~]# docker swarm leave --force

Node left the swarm.

- Likewise, for a worker node to leave the Swarm cluster, run docker swarm leave on that worker

[root@worker01 ~]# docker swarm leave

Node left the swarm.

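# This works even after the manager has already left. Afterwards, the worker can join another, newly created Swarm cluster as needed. Note that a sole manager that left with --force cannot rejoin its old cluster, because that cluster's state was erased. A worker that left can rejoin with docker swarm join and the corresponding token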
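When a worker leaves while a manager is still running, the manager keeps listing that node with STATUS Down. The stale entry is removed on the manager with `docker node rm`. The sketch below removes every node reported as Down. The function name is hypothetical. Review `docker node ls` before running it, because a node that is only temporarily unreachable also shows as Down.

```shell
#!/usr/bin/env bash
# Remove nodes whose STATUS is Down from the swarm's node list (run on a manager).
remove_down_nodes() {
  docker node ls --format '{{.ID}} {{.Status}}' |
    awk '$2 == "Down" { print $1 }' |
    while read -r id; do
      docker node rm "$id"
    done
}
```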

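5. Docker Swarm Service Management

5.1 Creating a Service

- Use docker service create to create a Docker service

# Example 4: create a service named web from the nginx image with 2 replicas, as follows

# Re-create the cluster (the previous one was removed in 4.4)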

[root@manager ~]# docker swarm init --advertise-addr 192.168.93.101

Swarm initialized: current node (h4wy4loy8d63ufojudngef1fu) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-37t9a5b5y21u5aah9o3l5hwrt8aujgso18iavcnw3egewfekao-b66serglaiqs6tzhm9whi10xf 192.168.93.101:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

# Set the manager node to Drain so that it accepts no tasks

[root@manager ~]# docker node update --availability drain manager

manager

# Make both worker nodes leave the old cluster

[root@worker01 ~]# docker swarm leave

Node left the swarm.

[root@worker02 ~]# docker swarm leave

Node left the swarm.

# Join both worker nodes to the new cluster

[root@worker01 ~]# docker swarm join --token SWMTKN-1-37t9a5b5y21u5aah9o3l5hwrt8aujgso18iavcnw3egewfekao-b66serglaiqs6tzhm9whi10xf 192.168.93.101:2377

This node joined a swarm as a worker.

[root@worker02 ~]# docker swarm join --token SWMTKN-1-37t9a5b5y21u5aah9o3l5hwrt8aujgso18iavcnw3egewfekao-b66serglaiqs6tzhm9whi10xf 192.168.93.101:2377

This node joined a swarm as a worker.

# View the cluster status

[root@manager ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

h4wy4loy8d63ufojudngef1fu * manager Ready Active Leader 26.1.4

tfgwzuhvc9og2hhq57r4m85bi worker01 Ready Active 26.1.4

buu4v3t6ljcyjgci68d28l65w worker02 Ready Active 26.1.4

# Create the service

[root@manager ~]# docker service create --replicas 2 --name web nginx

45jowgy1comocs5oez1u0352l

overall progress: 2 out of 2 tasks

1/2: running

2/2: running

verify: Service 45jowgy1comocs5oez1u0352l converged

# List the services currently deployed in the cluster

[root@manager ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

45jowgy1como web replicated 2/2 nginx:latest

# List the tasks of a given service and the nodes they run on

[root@manager ~]# docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

1hx3e8z6ehj6 web.1 nginx:latest worker02 Running Running 27 seconds ago

uhsw5wew3sae web.2 nginx:latest worker01 Running Running 47 seconds ago

# The output shows that the web service's tasks run on worker01 and worker02, together with their current state. You can now run docker ps on the worker nodes to see the containers that were started

[root@worker01 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

032941efc659 nginx:latest "/docker-entrypoint.…" About a minute ago Up About a minute 80/tcp web.2.uhsw5wew3sae254swcuv3kecy

[root@worker02 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

67494c75dc9c nginx:latest "/docker-entrypoint.…" About a minute ago Up About a minute 80/tcp web.1.1hx3e8z6ehj61ldcnm48tluk8
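With more replicas than nodes, counting tasks per node is easier than reading the `docker service ps` table. This sketch filters on `desired-state=running` and prints only the `.Node` column before counting. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Count running tasks of a service per node (run on a manager).
tasks_per_node() {
  local service="$1"
  docker service ps "$service" --filter desired-state=running --format '{{.Node}}' |
    sort | uniq -c | awk '{ print $2 ": " $1 }'
}
```

For the web service created above, `tasks_per_node web` would be expected to report one task on each worker.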

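5.2 Showing Service Details

- Service details can be displayed in two ways: as JSON or in a human-readable format

5.2.1 JSON Format

- The following command displays the web service's details in JSON format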

[root@manager ~]# docker service inspect web

[

{

"ID": "q7muz8icza1akh0dmuxem7tn3",

"Version": {

"Index": 22

},

"CreatedAt": "2024-06-10T02:03:11.039981869Z",

"UpdatedAt": "2024-06-10T02:03:11.039981869Z",

"Spec": {

"Name": "web",

"Labels": {},

"TaskTemplate": {

"ContainerSpec": {

"Image": "nginx:latest@sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31",

"Init": false,

"StopGracePeriod": 10000000000,

"DNSConfig": {},

"Isolation": "default"

},

"Resources": {

"Limits": {},

"Reservations": {}

},

"RestartPolicy": {

"Condition": "any",

"Delay": 5000000000,

"MaxAttempts": 0

},

"Placement": {

"Platforms": [

{

"Architecture": "amd64",

"OS": "linux"

},

{

"OS": "linux"

},

{

"OS": "linux"

},

{

"Architecture": "arm64",

"OS": "linux"

},

{

"Architecture": "386",

"OS": "linux"

},

{

"Architecture": "mips64le",

"OS": "linux"

},

{

"Architecture": "ppc64le",

"OS": "linux"

},

{

"Architecture": "s390x",

"OS": "linux"

}

]

},

"ForceUpdate": 0,

"Runtime": "container"

},

"Mode": {

"Replicated": {

"Replicas": 2

}

},

"UpdateConfig": {

"Parallelism": 1,

"FailureAction": "pause",

"Monitor": 5000000000,

"MaxFailureRatio": 0,

"Order": "stop-first"

},

"RollbackConfig": {

"Parallelism": 1,

"FailureAction": "pause",

"Monitor": 5000000000,

"MaxFailureRatio": 0,

"Order": "stop-first"

},

"EndpointSpec": {

"Mode": "vip"

}

},

"Endpoint": {

"Spec": {}

}

}

]

5.2.2 Human-Readable Format

- The following command displays the web service's details in a human-readable format

[root@manager ~]# docker service inspect --pretty web

ID: q7muz8icza1akh0dmuxem7tn3

Name: web

Service Mode: Replicated

Replicas: 2

Placement:

UpdateConfig:

Parallelism: 1

On failure: pause

Monitoring Period: 5s

Max failure ratio: 0

Update order: stop-first

RollbackConfig:

Parallelism: 1

On failure: pause

Monitoring Period: 5s

Max failure ratio: 0

Rollback order: stop-first

ContainerSpec:

Image: nginx:latest@sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31

Init: false

Resources:

Endpoint Mode: vip
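A third option is to extract individual fields with `--format` and a Go template. The field paths here follow the JSON in 5.2.1 (`Spec.Mode.Replicated.Replicas`, `Spec.TaskTemplate.ContainerSpec.Image`). This sketch applies to replicated services only. For a global service, `Spec.Mode.Replicated` is absent and the template would fail.

```shell
#!/usr/bin/env bash
# Print name, replica count and image of a replicated service on one line.
service_summary() {
  local service="$1"
  docker service inspect --format \
    '{{.Spec.Name}} replicas={{.Spec.Mode.Replicated.Replicas}} image={{.Spec.TaskTemplate.ContainerSpec.Image}}' \
    "$service"
}
```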

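5.3 Scaling a Service Out and In

When services must stay highly available, you may need to scale them out or in. The command format is shown below. Whether the service is scaled out or in depends on how the new total number of tasks compares with the current one

docker service scale <service>=<total number of tasks>

# Example 5: scale the web service deployed earlier from 2 replicas to 3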

[root@manager ~]# docker service scale web=3

web scaled to 3

overall progress: 3 out of 3 tasks

1/3: running

2/3: running

3/3: running

verify: Service web converged

# Check the result of scaling with docker service ps web

[root@manager ~]# docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

1hx3e8z6ehj6 web.1 nginx:latest worker02 Running Running 8 minutes ago

uhsw5wew3sae web.2 nginx:latest worker01 Running Running 8 minutes ago

15jt1khj5ggc web.3 nginx:latest worker01 Running Running 41 seconds ago

# The output shows that worker01 now runs two replicas of the web service. To scale in, set the replica count below the current number. The surplus replicas are removed

[root@manager ~]# docker service scale web=1

web scaled to 1

overall progress: 1 out of 1 tasks

1/1: running

verify: Service web converged

[root@manager ~]# docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

1hx3e8z6ehj6 web.1 nginx:latest worker02 Running Running 10 minutes ago
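In scripts, it helps to validate the target count before calling `docker service scale`. The sketch below accepts only non-negative integers, and 0 is allowed, which stops all tasks while keeping the service. `docker service update --replicas <n> <service>` is an equivalent way to set the count. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Scale a replicated service after checking that the count is a non-negative integer.
scale_service() {
  local service="$1" count="$2"
  case "$count" in
    ''|*[!0-9]*) echo "replica count must be a non-negative integer: '$count'" >&2; return 2 ;;
  esac
  docker service scale "${service}=${count}"
}
```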

5.4 Removing a Service

- Command format: docker service rm <service name>

# Example 6: remove the web service and all of its tasks from the cluster

[root@manager ~]# docker service rm web

web

[root@manager ~]# docker service ps web

no such service: web

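5.5 Rolling Updates

- When creating a service, the --update-delay option sets the interval between task updates. With --update-delay 10s as below, Swarm updates one task, waits 10 seconds after it succeeds, and then updates the next. If a task fails to update, the Swarm scheduler pauses the rollout (the default failure action)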

[root@manager ~]# docker service create --replicas 3 --name redis --update-delay 10s redis:4.0.4

zsdc8ket1754piiz479tf622a

overall progress: 3 out of 3 tasks

1/3: running

2/3: running

3/3: running

verify: Service zsdc8ket1754piiz479tf622a converged

[root@manager ~]# docker service ps redis

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

gfvq0se0amfw redis.1 redis:4.0.4 worker02 Running Running 25 seconds ago

onyfq787ajql redis.2 redis:4.0.4 worker01 Running Running 23 seconds ago

57klpkbthm60 redis.3 redis:4.0.4 worker02 Running Running 25 seconds ago

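# Update the image version used by a deployed service's containers. Example 7: update the redis service's image from 4.0.4 to 4.0.5. The old 4.0.4 containers are not deleted but only shut down, and they remain in the task history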

[root@manager ~]# docker service update --image redis:4.0.5 redis

redis

overall progress: 3 out of 3 tasks

1/3: running

2/3: running

3/3: running

verify: Service redis converged

[root@manager ~]# docker service ps redis

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

nt53g2a5dnbe redis.1 redis:4.0.5 worker02 Running Running 38 seconds ago

gfvq0se0amfw \_ redis.1 redis:4.0.4 worker02 Shutdown Shutdown about a minute ago

zcipt652sett redis.2 redis:4.0.5 worker01 Running Running 27 seconds ago

onyfq787ajql \_ redis.2 redis:4.0.4 worker01 Shutdown Shutdown 27 seconds ago

j0jkmibnnn3t redis.3 redis:4.0.5 worker01 Running Running about a minute ago

57klpkbthm60 \_ redis.3 redis:4.0.4 worker02 Shutdown Shutdown about a minute ago
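The default failure action is to pause, as the UpdateConfig output in 5.2 shows. A stricter variant rolls back automatically when an updated task fails. This sketch sets the update policy explicitly on the same `docker service update` call. `docker service rollback redis` reverts to the previous service spec by hand. The function name and its default image argument are illustrative.

```shell
#!/usr/bin/env bash
# Rolling update of the redis service: 1 task at a time, 10s between batches,
# automatic rollback if an updated task fails.
rolling_update_redis() {
  local image="${1:-redis:4.0.5}"
  docker service update \
    --image "$image" \
    --update-parallelism 1 \
    --update-delay 10s \
    --update-failure-action rollback \
    redis
}
# Manual rollback to the previous spec:
#   docker service rollback redis
```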

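5.6 Adding a Custom Overlay Network

- Lets containers of different services communicate with each other by name

- In a Swarm cluster, an overlay network can connect one or more services. To use one, first create an overlay network on a manager node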

[root@manager ~]# docker network create --driver overlay my-network

6mmvljs63afzic7o9ojivctpw

# After creating the overlay network named my-network, attach a service to it with the --network option when creating the service

[root@manager ~]# docker service create --replicas 3 --network my-network --name web nginx

166p2g47svuf48eho8ijqj9la

overall progress: 3 out of 3 tasks

1/3: running

2/3: running

3/3: running

verify: Service 166p2g47svuf48eho8ijqj9la converged

# Containers on other nodes of the Swarm cluster that also use my-network can all communicate with each other over this overlay network
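An existing service can also join the network later with `--network-add`, which triggers a rolling update of that service's tasks. The sketch below creates the overlay network only if `docker network inspect` cannot find it, then attaches the service. The function name is hypothetical. Once attached, services on the same overlay network can reach each other by service name through Docker's embedded DNS.

```shell
#!/usr/bin/env bash
# Ensure an overlay network exists, then attach an existing service to it (run on a manager).
ensure_overlay_and_attach() {
  local net="$1" service="$2"
  if ! docker network inspect "$net" >/dev/null 2>&1; then
    docker network create --driver overlay "$net"
  fi
  docker service update --network-add "$net" "$service"
}
# Example: ensure_overlay_and_attach my-network redis
```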

5.7 Creating and Using Volumes

- Command format: docker volume create <volume name>

# Create a volume

[root@manager ~]# docker volume create product-kgc

product-kgc

# List volumes

[root@manager ~]# docker volume ls

DRIVER VOLUME NAME

local product-kgc

# Use the volume created above (mount product-kgc at /usr/share/nginx/html in the service's container)

[root@manager ~]# docker service create --mount type=volume,src=product-kgc,dst=/usr/share/nginx/html --replicas 1 --name kgc-web-01 nginx

4zobmebf2wuzoonvtt4a53z5r

overall progress: 1 out of 1 tasks

1/1: running

verify: Service 4zobmebf2wuzoonvtt4a53z5r converged

# View the volume's details

[root@manager ~]# docker volume inspect product-kgc

[

{

"CreatedAt": "2024-06-10T10:32:36+08:00",

"Driver": "local",

"Labels": null,

# Where the volume actually stores its data

"Mountpoint": "/var/lib/docker/volumes/product-kgc/_data",

"Name": "product-kgc",

"Options": null,

"Scope": "local"

}

]

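# Check whether the data is visible inside the container:

# Run the following commands on the worker node that runs the task (worker01 here). A local volume exists per node: when the task started on worker01, Docker created a volume named product-kgc on that node as well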

[root@worker01 ~]# cd /var/lib/docker/volumes/product-kgc/_data/

[root@worker01 _data]# mkdir test01 test02

# List the running containers

[root@worker01 _data]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

##################################################################

e844eee8ef04 nginx:latest "/docker-entrypoint.…" 4 minutes ago Up 4 minutes 80/tcp kgc-web-01.1.3bclleosvtp9vk4qv2cgl873x

##################################################################

932f42fdab17 nginx:latest "/docker-entrypoint.…" 9 minutes ago Up 9 minutes 80/tcp web.2.wrfkjb6cevf22g4ds963ich91

47d7ce4ecb54 redis:4.0.5 "docker-entrypoint.s…" 13 minutes ago Up 13 minutes 6379/tcp redis.2.zcipt652settbxk9r9gto5mz9

c7fed90dceda redis:4.0.5 "docker-entrypoint.s…" 14 minutes ago Up 14 minutes 6379/tcp redis.3.j0jkmibnnn3txrvupa4htej29

# Enter the container

[root@worker01 _data]# docker exec -it kgc-web-01.1.3bclleosvtp9vk4qv2cgl873x bash

root@e844eee8ef04:/# ls /usr/share/nginx/html/

50x.html index.html test01 test02

# As verified above, directories created in the volume's directory on the host show up at the corresponding path inside the container
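Because a `local` volume lives on a single node, its data is not replicated to the other nodes. The first step when checking data is to find where the task runs. This sketch prints the node(s) running a service's tasks and the volume's Mountpoint on the current host. The function name is hypothetical. The arguments correspond to `kgc-web-01` and `product-kgc` above.

```shell
#!/usr/bin/env bash
# Show which node(s) run a service's tasks and where a local volume lives on this host.
volume_location() {
  local service="$1" volume="$2"
  echo "task node(s): $(docker service ps "$service" --filter desired-state=running --format '{{.Node}}' | paste -sd, -)"
  echo "mountpoint here: $(docker volume inspect --format '{{.Mountpoint}}' "$volume")"
}
# Example: volume_location kgc-web-01 product-kgc
```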

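# Besides volume, bind is another commonly used mount type. The source directory must already exist on every node that may run the task, so create it on all three nodes: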

[root@manager ~]# mkdir -p /var/vhost/www/aa

[root@worker01 ~]# mkdir -p /var/vhost/www/aa

[root@worker02 ~]# mkdir -p /var/vhost/www/aa

# Create the kgc-web-02 service with 2 replicas

[root@manager ~]# docker service create --replicas 2 --mount type=bind,src=/var/vhost/www/aa,dst=/usr/share/nginx/html --name kgc-web-02 nginx

utulrkf6xxy9fpkpf16f33e36

overall progress: 2 out of 2 tasks

1/2: running

2/2: running

verify: Service utulrkf6xxy9fpkpf16f33e36 converged

# Verify that the data is visible inside the container

# Run the following on a worker node

[root@worker01 ~]# touch /var/vhost/www/aa/1

# List all running containers

[root@worker01 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

##################################################################

13baa8bb60de nginx:latest "/docker-entrypoint.…" About a minute ago Up About a minute 80/tcp kgc-web-02.2.neo42xgzu6dzet1g6w1qnwbqm

##################################################################

e844eee8ef04 nginx:latest "/docker-entrypoint.…" 11 minutes ago Up 11 minutes 80/tcp kgc-web-01.1.3bclleosvtp9vk4qv2cgl873x

932f42fdab17 nginx:latest "/docker-entrypoint.…" 16 minutes ago Up 16 minutes 80/tcp web.2.wrfkjb6cevf22g4ds963ich91

47d7ce4ecb54 redis:4.0.5 "docker-entrypoint.s…" 21 minutes ago Up 21 minutes 6379/tcp redis.2.zcipt652settbxk9r9gto5mz9

c7fed90dceda redis:4.0.5 "docker-entrypoint.s…" 22 minutes ago Up 22 minutes 6379/tcp redis.3.j0jkmibnnn3txrvupa4htej29

[root@worker01 ~]# docker exec -it kgc-web-02.2.neo42xgzu6dzet1g6w1qnwbqm bash

root@13baa8bb60de:/# ls /usr/share/nginx/html/

1
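A bind mount maps a directory of whichever node runs the task, so each replica sees its own node's copy. The file `1` created on worker01 is visible only to the kgc-web-02 replica running on worker01. If the source directory is missing on a node, tasks scheduled there fail to start. This sketch creates the directory on every node in one pass. It assumes passwordless root SSH from the manager to every host, which this article does not set up. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Create a bind-mount source directory on each listed host over SSH.
# Assumption: root SSH access to every host without a password prompt.
prepare_bind_source() {
  local dir="$1"; shift
  local host
  for host in "$@"; do
    ssh "root@$host" "mkdir -p '$dir'" || return 1
  done
}
# Usage: prepare_bind_source /var/vhost/www/aa manager worker01 worker02
```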
