import os
import json

import requests
import pandas as pd

# Target URL to crawl

# Write a pandas DataFrame to an Excel file
def write_to_excel(result, path, sheet_name):
    if os.path.exists(path):
        # The file already exists: merge with the saved rows and drop exact duplicates
        Original_df = pd.read_excel(path, sheet_name=sheet_name)
        result = pd.concat([Original_df, result], axis=0, join='outer')
        result = result.drop_duplicates()
    # The context manager saves and closes the workbook on exit
    # (ExcelWriter.save() was removed in pandas 2.0)
    with pd.ExcelWriter(path) as writer:
        result.to_excel(writer, sheet_name=sheet_name, index=False)

def crawl(step, start_point, Save_path):

"""step为每批次爬取的页数,开始页数,保存路径"""

df = pd.DataFrame()

count = 0

# step为每批次爬取的页数,group_num是能够爬取完整step的组数

group_num = int(2465 / step)

start_group = int(start_point / step)#1/50=40

# 保存获取失败的网页页码

failure_get = []

for group in range(start_group, group_num + 1):

# 当能够获取完整step时,循环最大值设置为step

if group != group_num:

max = step + 1

else:

# 当不能够获取完整的step时,循环最大值设置为剩余获取页数

max = 2465 - step * group_num

for m in range(1, max):

j = group * step + m

try:

print(f'正在爬取第{j}页')

url = f'https://mci.cnki.net//statistics/query?q=&start={j}&size=20'

User_Agent = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36'

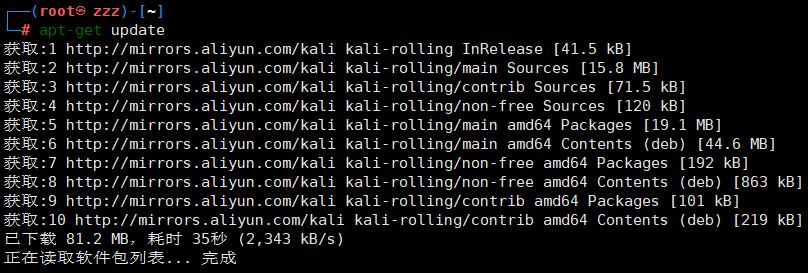

cookies = 'cangjieConfig_CHKD2=%7B%22status%22%3Atrue%2C%22startTime%22%3A%222021-12-27%22%2C%22endTime%22%3A%222022-06-24%22%2C%22type%22%3A%22mix%22%2C%22poolSize%22%3A%2210%22%2C%22intervalTime%22%3A10000%2C%22persist%22%3Afalse%7D; SID=012053; Ecp_ClientId=b230620141100392435; Ecp_IpLoginFail=230620219.137.5.106'

headers_list = {'User-Agent': User_Agent, 'Cookie': cookies}

# 解决代理报错问题

proxies = {"http": None, "https": None}

issue_url_resp = requests.get(url=url, proxies=proxies, headers=headers_list)

print()

for i in range(0, len(json.loads(issue_url_resp.text)['data']['data'])):

df.loc[count, 'Chinese_title'] = json.loads(issue_url_resp.text)['data']['data'][i]['title']

df.loc[count, 'English_title'] = json.loads(issue_url_resp.text)['data']['data'][i]['entitle']

df.loc[count, 'url'] = json.loads(issue_url_resp.text)['data']['data'][i]['url']

count = count + 1

except:

print(f'第{j}页爬取失败!!!!!!!!!!!!')

failure_get.append(j)

write_to_excel(df, Save_path, 'result')

print(failure_get)

if __name__ == '__main__':
    Save_path = r'C:\Users\Childers\中国知网关键词.xlsx'
    # Page to resume from (the page that got no response on the previous run)
    start_point = 1
    crawl(50, start_point, Save_path)
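
The batching arithmetic used by `crawl` can be checked on its own, without touching the network. The following is a minimal sketch, assuming pages are numbered from 1 to 2465 as the script implies; `batch_pages` is a hypothetical helper that is not part of the original script.

```python
def batch_pages(total_pages, step, start_point=1):
    """Yield one list of page numbers per batch of at most `step` pages.

    Hypothetical helper: batch `group` covers pages
    group*step + 1 .. group*step + step, and the final batch is cut
    off at total_pages.
    """
    # Resume from the batch that contains start_point
    start_group = start_point // step
    for first in range(start_group * step + 1, total_pages + 1, step):
        last = min(first + step - 1, total_pages)
        yield list(range(first, last + 1))


batches = list(batch_pages(2465, 50))
print(len(batches))                      # 50
print(batches[-1][0], batches[-1][-1])   # 2451 2465
```

Stepping `range` by `step` and clamping the last page with `min` avoids a separate branch for the final, partial batch.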
