Ensemble Learning Case Study 1 (Happiness Prediction)

https://github.com/datawhalechina/team-learning-data-mining/blob/master/EnsembleLearning/CH6-%E9%9B%86%E6%88%90%E5%AD%A6%E4%B9%A0%E4%B9%8B%E6%A1%88%E4%BE%8B%E5%88%86%E4%BA%AB/%E9%9B%86%E6%88%90%E5%AD%A6%E4%B9%A0%E6%A1%88%E4%BE%8B%E5%88%86%E6%9E%901/%E9%9B%86%E6%88%90%E5%AD%A6%E4%B9%A0-%E6%A1%88%E4%BE%8B%E5%88%86%E6%9E%901.ipynb

Background

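This case study is a baseline for a data-mining competition on happiness prediction. The competition data come from the official Chinese General Social Survey (CGSS) results and contain 139 feature dimensions. These include individual variables (gender, age, region, occupation, health, marital status, political affiliation, etc.), family variables (parents, spouse, children, family capital, etc.) and social attitudes (fairness, trust, public services, etc.).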

Data

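The task is to predict individual happiness from these 139 features using just over 8,000 samples. The target takes the values 1, 2, 3, 4 and 5, where 1 is the lowest and 5 the highest happiness. Because there are many variables and some of them are related in complex ways, the data come in two versions, a complete one and an abridged one. You can start with the abridged version to get familiar with the task and then switch to the complete version to extract more information; here I use the complete version directly. The competition also provides an index file that lists, for every variable, the corresponding survey question and the meaning of its values, and a survey file containing the original questionnaire as background reference.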

Evaluation metric

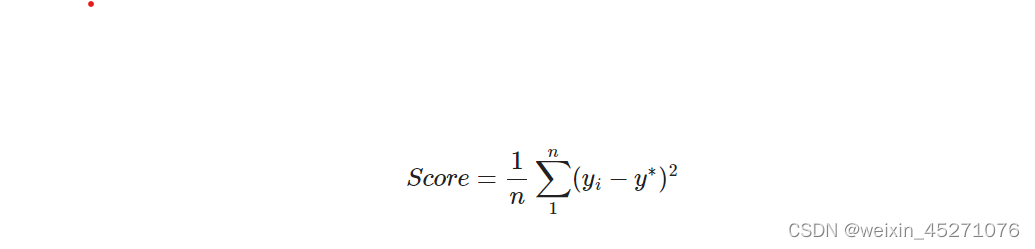

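The final evaluation metric is the mean squared error (MSE):

$$\text{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

where $y_i$ is the true happiness score of sample $i$, $\hat{y}_i$ is its prediction and $n$ is the number of samples.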
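As a quick check of the formula, the sketch below computes the MSE by hand with NumPy on a few made-up labels and predictions; the numbers are illustrative only. scikit-learn's `mean_squared_error`, imported below, computes the same quantity.

```python
import numpy as np

# Made-up true happiness scores (1-5) and model predictions, for illustration only
y_true = np.array([4, 3, 5, 2])
y_pred = np.array([3.5, 3.0, 4.0, 2.5])

# MSE = (1/n) * sum((y_i - y_hat_i)^2)
squared_errors = (y_true - y_pred) ** 2   # [0.25, 0.0, 1.0, 0.25]
mse = squared_errors.mean()
print(mse)  # 0.375
```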

import os

import time

import pandas as pd

import numpy as np

import seaborn as sns

from sklearn.linear_model import LogisticRegression

from sklearn.svm import SVC, LinearSVC

from sklearn.ensemble import RandomForestClassifier

from sklearn.neighbors import KNeighborsClassifier

from sklearn.naive_bayes import GaussianNB

from sklearn.linear_model import Perceptron

from sklearn.linear_model import SGDClassifier

from sklearn.tree import DecisionTreeClassifier

from sklearn import metrics

from datetime import datetime

import matplotlib.pyplot as plt

from sklearn.metrics import roc_auc_score, roc_curve, mean_squared_error,mean_absolute_error, f1_score

import lightgbm as lgb

import xgboost as xgb

from sklearn.ensemble import RandomForestRegressor as rfr

from sklearn.ensemble import ExtraTreesRegressor as etr

from sklearn.linear_model import BayesianRidge as br

from sklearn.ensemble import GradientBoostingRegressor as gbr

from sklearn.linear_model import Ridge

from sklearn.linear_model import Lasso

from sklearn.linear_model import LinearRegression as lr

from sklearn.linear_model import ElasticNet as en

from sklearn.kernel_ridge import KernelRidge as kr

from sklearn.model_selection import KFold, StratifiedKFold,GroupKFold, RepeatedKFold

from sklearn.model_selection import train_test_split

from sklearn.model_selection import GridSearchCV

from sklearn import preprocessing

import logging

import warnings

warnings.filterwarnings('ignore')  # suppress warnings

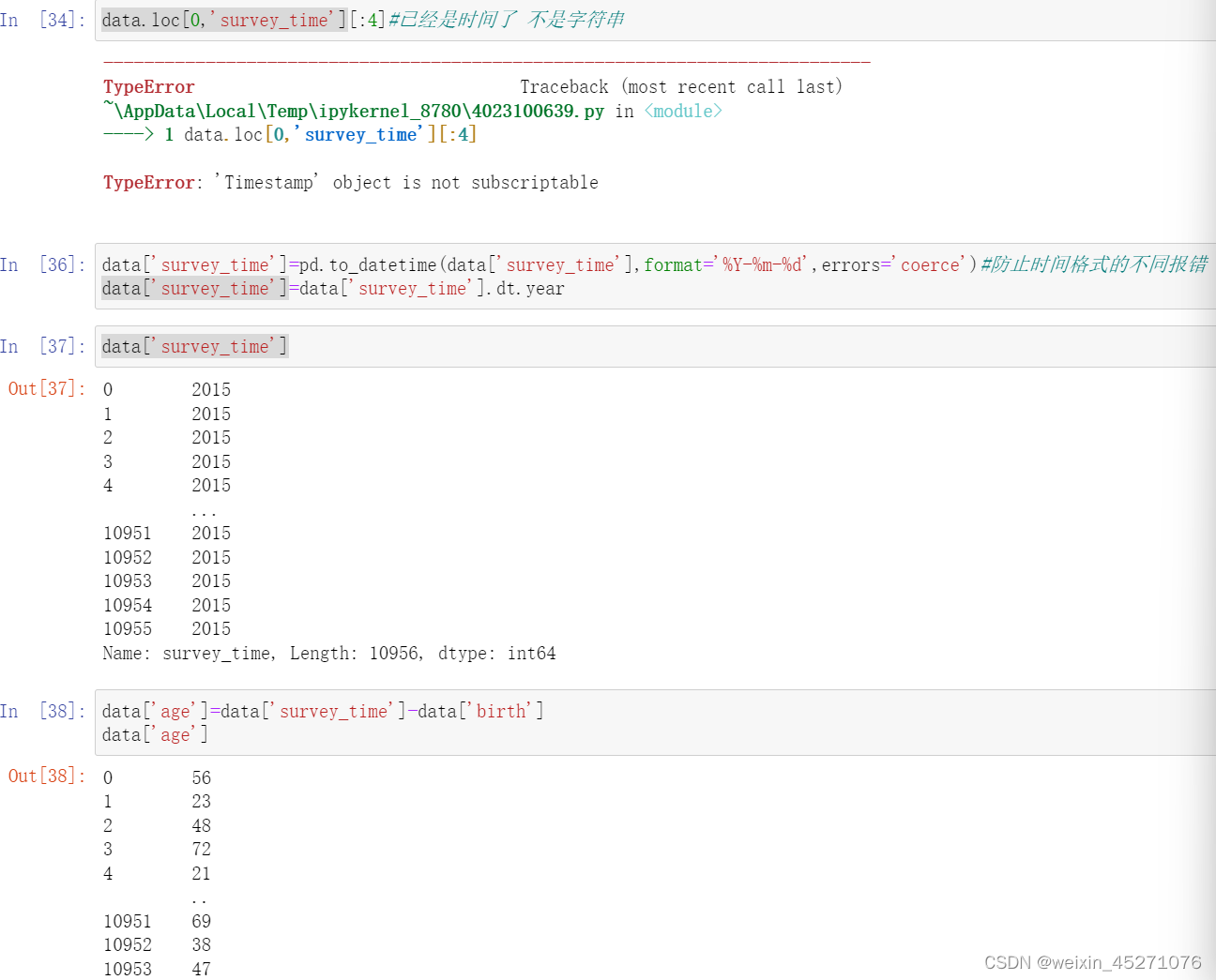

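# parse_dates=['survey_time'] parses the survey_time column as datetime
# encoding='latin-1' is a superset of ASCII and can decode any byte, which avoids decode errors
# the r"..." raw strings keep the Windows backslashes from being read as escape sequences
train=pd.read_csv(r"D:\caicai_sklearn\others\happyiness_datasets\happiness_train_complete.csv",
                  parse_dates=['survey_time'],encoding='latin-1')
test=pd.read_csv(r"D:\caicai_sklearn\others\happyiness_datasets\happiness_test_complete.csv",
                 parse_dates=['survey_time'],encoding='latin-1')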

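train=train[train['happiness']!=-8].reset_index(drop=True)  # drop rows whose happiness label is the invalid code -8
# reset_index(drop=True): drop=True discards the old index instead of inserting it into the table as a new 'index' column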

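train_data_copy=train.copy()
target_col='happiness'
target=train_data_copy[target_col]
del train_data_copy[target_col]  # remove the target column
train_shape=train_data_copy.shape[0]  # number of training rows, used later to split data back into train and test
data=pd.concat([train_data_copy,test],axis=0,ignore_index=True)
# with ignore_index=True the original indexes are discarded and the concatenated frame gets a fresh default integer index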
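Concatenating train and test lets every later step (group means, one-hot categories) see the same rows, and a row count such as `train_shape` splits the result back apart. The minimal sketch below shows this round trip on two toy frames; the column names and values are hypothetical.

```python
import pandas as pd

# Toy stand-ins for the training features (target already removed) and the test set
train_x = pd.DataFrame({"id": [1, 2, 3], "income": [100, 200, 300]})
test_x = pd.DataFrame({"id": [4, 5], "income": [150, 250]})

train_shape = train_x.shape[0]  # number of training rows
# ignore_index=True gives the combined frame a fresh 0..n-1 index
data = pd.concat([train_x, test_x], axis=0, ignore_index=True)

# After feature engineering on `data`, split it back by row position
train_part = data.iloc[:train_shape]
test_part = data.iloc[train_shape:]
print(data.index.tolist())  # [0, 1, 2, 3, 4]
```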

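# make features: +5 new columns
# the CSV contains negative codes -1, -2, -3 and -8; treat them as problematic values but do not drop them
# note: the type(x)==int check only counts integer values; codes stored as floats are skipped
def getres1(row):  # number of negative values in the row
    return len([x for x in row.values if type(x)==int and x<0])
def getres2(row):  # number of -8 codes
    return len([x for x in row.values if type(x)==int and x==-8])
def getres3(row):  # number of -1 codes
    return len([x for x in row.values if type(x)==int and x==-1])
def getres4(row):  # number of -2 codes
    return len([x for x in row.values if type(x)==int and x==-2])
def getres5(row):  # number of -3 codes
    return len([x for x in row.values if type(x)==int and x==-3])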

# count the negative codes in each row
data['neg1'] = data[data.columns].apply(lambda row:getres1(row),axis=1)
data.loc[data['neg1']>20,'neg1'] = 20  # cap at 20 (smoothing)

data['neg2'] = data[data.columns].apply(lambda row:getres2(row),axis=1)

data['neg3'] = data[data.columns].apply(lambda row:getres3(row),axis=1)

data['neg4'] = data[data.columns].apply(lambda row:getres4(row),axis=1)

data['neg5'] = data[data.columns].apply(lambda row:getres5(row),axis=1)
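The row-wise `apply` above is slow on wide tables. A vectorized alternative is sketched below on a toy frame. Note one assumed difference: it compares every numeric column, so unlike the original `type(x)==int` check it also counts negative codes stored in float columns (NaN compares as False and is never counted).

```python
import pandas as pd

# Toy survey rows; -1, -2, -3 and -8 are the special negative codes
df = pd.DataFrame({
    "q1": [1, -8, 3],
    "q2": [-1, -8, 2],
    "q3": [-2, 4, -3],
})

num = df.select_dtypes(include="number")  # only numeric columns can hold the codes
counts = pd.DataFrame({
    "neg1": (num < 0).sum(axis=1).clip(upper=20),  # all negative values, capped at 20
    "neg2": (num == -8).sum(axis=1),
    "neg3": (num == -1).sum(axis=1),
    "neg4": (num == -2).sum(axis=1),
    "neg5": (num == -3).sum(axis=1),
})
print(counts)
```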

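# Fill missing values: here I fill them in with fillna(value), choosing value case by case.
# For example, most missing entries are treated as 0, the number of family members as 1,
# and family income as 66365, the mean income over all families.
# Part of the implementation is shown below; fill values can also be chosen from domain knowledge: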

data['work_status']=data['work_status'].fillna(0)

data['work_yr'] = data['work_yr'].fillna(0)

data['work_manage'] = data['work_manage'].fillna(0)

data['work_type'] = data['work_type'].fillna(0)

data['edu_yr'] = data['edu_yr'].fillna(0)

data['edu_status'] = data['edu_status'].fillna(0)

data['s_work_type'] = data['s_work_type'].fillna(0)

data['s_work_status'] = data['s_work_status'].fillna(0)

data['s_political'] = data['s_political'].fillna(0)

data['s_hukou'] = data['s_hukou'].fillna(0)

data['s_income'] = data['s_income'].fillna(0)

data['s_birth'] = data['s_birth'].fillna(0)

data['s_edu'] = data['s_edu'].fillna(0)

data['s_work_exper'] = data['s_work_exper'].fillna(0)

data['minor_child'] = data['minor_child'].fillna(0)

data['marital_now'] = data['marital_now'].fillna(0)

data['marital_1st'] = data['marital_1st'].fillna(0)

data['social_neighbor']=data['social_neighbor'].fillna(0)

data['social_friend']=data['social_friend'].fillna(0)

data['hukou_loc']=data['hukou_loc'].fillna(1)  # the minimum code is 1 (location of household registration)

data['family_income']=data['family_income'].fillna(66365)  # mean computed after removing problematic values
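The many per-column `fillna` calls can also be written as a single `DataFrame.fillna` call with a dict mapping each column to its fill value. The sketch below shows this on a toy frame that uses three of the columns and fill values above.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "work_status": [np.nan, 2.0, np.nan],
    "hukou_loc": [np.nan, 3.0, 1.0],
    "family_income": [50000.0, np.nan, 80000.0],
})

# One fill value per column, mirroring the individual fillna calls above
fill_values = {"work_status": 0, "hukou_loc": 1, "family_income": 66365}
df = df.fillna(value=fill_values)
print(df)
```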

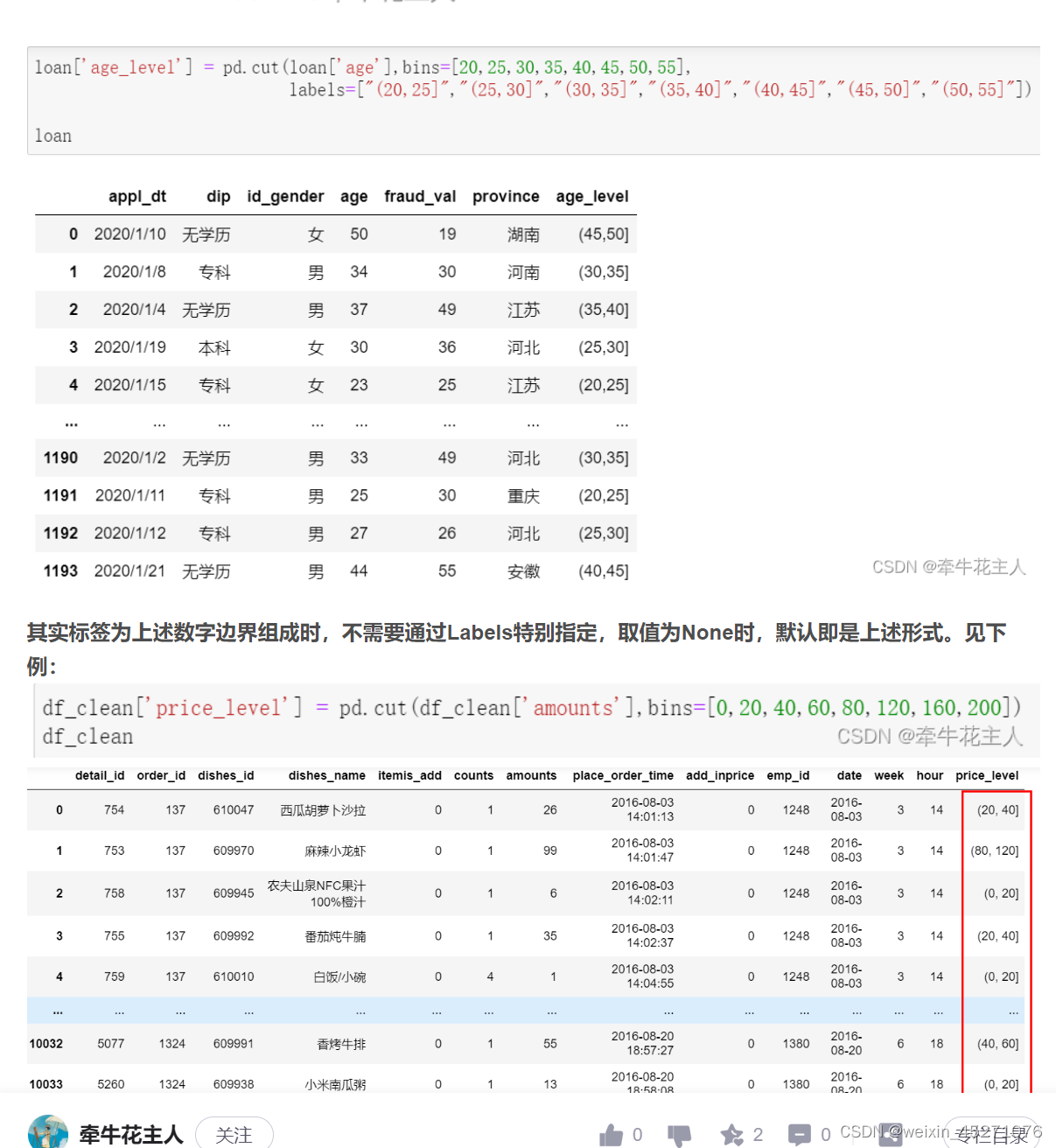

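bins=[0,17,26,34,50,63,100]  # hand-chosen age bins
# assumes data['age'] already exists; the step that derives it is not shown in the text
data['age_bin']=pd.cut(data['age'],bins,labels=[0,1,2,3,4,5])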
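By default `pd.cut` builds right-closed intervals (0, 17], (17, 26], …, (63, 100]. An age of 17 therefore lands in bin 0, 18 in bin 1, and an age of 0 (or above 100) falls outside every interval and becomes NaN. The toy ages below illustrate this.

```python
import pandas as pd

bins = [0, 17, 26, 34, 50, 63, 100]
ages = pd.Series([0, 17, 18, 26, 40, 63, 64, 100])
age_bin = pd.cut(ages, bins, labels=[0, 1, 2, 3, 4, 5])  # right=True is the default
print(age_bin.tolist())  # age 0 is outside (0, 17], so its bin is NaN
```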


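Feature augmentation

In this step, we analyze the relationships between features further in order to augment the data. After some thought, I added the following features: age at first marriage, age at the most recent marriage, whether the respondent has remarried, spouse's age, age gap with the spouse, various income ratios (income relative to the spouse's income, expected income in ten years relative to current income, etc.), income relative to floor area (including expected income in ten years and other variants), social class (expected class in ten years, family class at age 14, etc.), and leisure, satisfaction and trust indices. In addition, I normalized several indicators within the same province, city and county, e.g. the mean income within each province or city and each individual's indicators relative to others in the same province, city or county. I also compared people of the same age, i.e. their income, health and so on relative to their peers. The code is as follows: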

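# age at first marriage (feature 147)
data['marital_1stbir'] = data['marital_1st'] - data['birth']
# age at the most recent marriage (148)
data['marital_nowtbir'] = data['marital_now'] - data['birth']
# remarried? non-zero when the most recent marriage differs from the first (149)
data['mar'] = data['marital_nowtbir'] - data['marital_1stbir']
# spouse's age at the most recent marriage (150)
data['marital_sbir'] = data['marital_now']-data['s_birth']
# age gap with the spouse, equal to s_birth - birth (151)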

data['age_'] = data['marital_nowtbir'] - data['marital_sbir']
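The arithmetic of these marriage features can be checked on a single hypothetical respondent, as sketched below. It also shows that `age_` reduces to `s_birth - birth`. As a caveat that follows from the code above, respondents whose marriage years were filled with 0 get large negative values for these features.

```python
import pandas as pd

# Hypothetical respondent: born 1980, spouse born 1983, first married in 2005, most recently in 2010
d = pd.DataFrame({"birth": [1980], "s_birth": [1983],
                  "marital_1st": [2005], "marital_now": [2010]})

d["marital_1stbir"] = d["marital_1st"] - d["birth"]    # 25: age at first marriage
d["marital_nowtbir"] = d["marital_now"] - d["birth"]   # 30: age at the latest marriage
d["mar"] = d["marital_nowtbir"] - d["marital_1stbir"]  # 5: non-zero, so remarried
d["marital_sbir"] = d["marital_now"] - d["s_birth"]    # 27: spouse's age at the latest marriage
d["age_"] = d["marital_nowtbir"] - d["marital_sbir"]   # 3: equals s_birth - birth
print(d.iloc[0].to_dict())
```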

# income ratios 151+7=158

data['income/s_income'] = data['income']/(data['s_income']+1)  # s_income: income of the spouse or cohabiting partner

data['income+s_income'] = data['income']+(data['s_income']+1)

data['income/family_income'] = data['income']/(data['family_income']+1)

data['all_income/family_income'] = (data['income']+data['s_income'])/(data['family_income']+1)

data['income/inc_exp'] = data['income']/(data['inc_exp']+1)

data['family_income/m'] = data['family_income']/(data['family_m']+0.01)

data['income/m'] = data['income']/(data['family_m']+0.01)

# income / floor-area ratios 158+4=162

data['income/floor_area'] = data['income']/(data['floor_area']+0.01)

data['all_income/floor_area'] = (data['income']+data['s_income'])/(data['floor_area']+0.01)

data['family_income/floor_area'] = data['family_income']/(data['floor_area']+0.01)

data['floor_area/m'] = data['floor_area']/(data['family_m']+0.01)

#class 162+3=165

data['class_10_diff'] = (data['class_10_after'] - data['class'])

data['class_diff'] = data['class'] - data['class_10_before']

data['class_14_diff'] = data['class'] - data['class_14']

# leisure index 166

leisure_fea_lis = ['leisure_'+str(i) for i in range(1,13)]

data['leisure_sum'] = data[leisure_fea_lis].sum(axis=1) #skew

# public-service satisfaction index 167

public_service_fea_lis = ['public_service_'+str(i) for i in range(1,10)]

data['public_service_sum'] = data[public_service_fea_lis].sum(axis=1) #skew

# trust index 168

trust_fea_lis = ['trust_'+str(i) for i in range(1,14)]

data['trust_sum'] = data[trust_fea_lis].sum(axis=1) #skew

#province mean 168+13=181

data['province_income_mean'] = data.groupby(['province'])['income'].transform('mean').values

data['province_family_income_mean'] = data.groupby(['province'])['family_income'].transform('mean').values

data['province_equity_mean'] = data.groupby(['province'])['equity'].transform('mean').values

data['province_depression_mean'] = data.groupby(['province'])['depression'].transform('mean').values

data['province_floor_area_mean'] = data.groupby(['province'])['floor_area'].transform('mean').values

data['province_health_mean'] = data.groupby(['province'])['health'].transform('mean').values

data['province_class_10_diff_mean'] = data.groupby(['province'])['class_10_diff'].transform('mean').values

data['province_class_mean'] = data.groupby(['province'])['class'].transform('mean').values

data['province_health_problem_mean'] = data.groupby(['province'])['health_problem'].transform('mean').values

data['province_family_status_mean'] = data.groupby(['province'])['family_status'].transform('mean').values

data['province_leisure_sum_mean'] = data.groupby(['province'])['leisure_sum'].transform('mean').values

data['province_public_service_sum_mean'] = data.groupby(['province'])['public_service_sum'].transform('mean').values

data['province_trust_sum_mean'] = data.groupby(['province'])['trust_sum'].transform('mean').values
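`groupby(...).transform('mean')` returns one value per row, namely the mean of that row's group, so the result can be assigned directly as a new column (unlike `.agg`, which returns one row per group). The toy sketch below computes a province mean and the per-person ratio used in the "relative to the same province" features.

```python
import pandas as pd

df = pd.DataFrame({"province": [1, 1, 2, 2, 2],
                   "income": [100.0, 300.0, 50.0, 100.0, 150.0]})

# transform('mean') broadcasts each group's mean back onto the group's rows
df["province_income_mean"] = df.groupby(["province"])["income"].transform("mean")
# each person's income relative to the mean of the same province
df["income/province"] = df["income"] / df["province_income_mean"]
print(df)
```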

#city mean 181+13=194

data['city_income_mean'] = data.groupby(['city'])['income'].transform('mean').values  # group by city

data['city_family_income_mean'] = data.groupby(['city'])['family_income'].transform('mean').values

data['city_equity_mean'] = data.groupby(['city'])['equity'].transform('mean').values

data['city_depression_mean'] = data.groupby(['city'])['depression'].transform('mean').values

data['city_floor_area_mean'] = data.groupby(['city'])['floor_area'].transform('mean').values

data['city_health_mean'] = data.groupby(['city'])['health'].transform('mean').values

data['city_class_10_diff_mean'] = data.groupby(['city'])['class_10_diff'].transform('mean').values

data['city_class_mean'] = data.groupby(['city'])['class'].transform('mean').values

data['city_health_problem_mean'] = data.groupby(['city'])['health_problem'].transform('mean').values

data['city_family_status_mean'] = data.groupby(['city'])['family_status'].transform('mean').values

data['city_leisure_sum_mean'] = data.groupby(['city'])['leisure_sum'].transform('mean').values

data['city_public_service_sum_mean'] = data.groupby(['city'])['public_service_sum'].transform('mean').values

data['city_trust_sum_mean'] = data.groupby(['city'])['trust_sum'].transform('mean').values

#county mean 194 + 13 = 207

data['county_income_mean'] = data.groupby(['county'])['income'].transform('mean').values

data['county_family_income_mean'] = data.groupby(['county'])['family_income'].transform('mean').values

data['county_equity_mean'] = data.groupby(['county'])['equity'].transform('mean').values

data['county_depression_mean'] = data.groupby(['county'])['depression'].transform('mean').values

data['county_floor_area_mean'] = data.groupby(['county'])['floor_area'].transform('mean').values

data['county_health_mean'] = data.groupby(['county'])['health'].transform('mean').values

data['county_class_10_diff_mean'] = data.groupby(['county'])['class_10_diff'].transform('mean').values

data['county_class_mean'] = data.groupby(['county'])['class'].transform('mean').values

data['county_health_problem_mean'] = data.groupby(['county'])['health_problem'].transform('mean').values

data['county_family_status_mean'] = data.groupby(['county'])['family_status'].transform('mean').values

data['county_leisure_sum_mean'] = data.groupby(['county'])['leisure_sum'].transform('mean').values

data['county_public_service_sum_mean'] = data.groupby(['county'])['public_service_sum'].transform('mean').values

data['county_trust_sum_mean'] = data.groupby(['county'])['trust_sum'].transform('mean').values

# ratio relative to the province mean 207 + 13 = 220

data['income/province'] = data['income']/(data['province_income_mean'])

data['family_income/province'] = data['family_income']/(data['province_family_income_mean'])

data['equity/province'] = data['equity']/(data['province_equity_mean'])

data['depression/province'] = data['depression']/(data['province_depression_mean'])

data['floor_area/province'] = data['floor_area']/(data['province_floor_area_mean'])

data['health/province'] = data['health']/(data['province_health_mean'])

data['class_10_diff/province'] = data['class_10_diff']/(data['province_class_10_diff_mean'])

data['class/province'] = data['class']/(data['province_class_mean'])

data['health_problem/province'] = data['health_problem']/(data['province_health_problem_mean'])

data['family_status/province'] = data['family_status']/(data['province_family_status_mean'])

data['leisure_sum/province'] = data['leisure_sum']/(data['province_leisure_sum_mean'])

data['public_service_sum/province'] = data['public_service_sum']/(data['province_public_service_sum_mean'])

data['trust_sum/province'] = data['trust_sum']/(data['province_trust_sum_mean']+1)

# ratio relative to the city mean 220 + 13 = 233

data['income/city'] = data['income']/(data['city_income_mean'])

data['family_income/city'] = data['family_income']/(data['city_family_income_mean'])

data['equity/city'] = data['equity']/(data['city_equity_mean'])

data['depression/city'] = data['depression']/(data['city_depression_mean'])

data['floor_area/city'] = data['floor_area']/(data['city_floor_area_mean'])

data['health/city'] = data['health']/(data['city_health_mean'])

data['class_10_diff/city'] = data['class_10_diff']/(data['city_class_10_diff_mean'])

data['class/city'] = data['class']/(data['city_class_mean'])

data['health_problem/city'] = data['health_problem']/(data['city_health_problem_mean'])

data['family_status/city'] = data['family_status']/(data['city_family_status_mean'])

data['leisure_sum/city'] = data['leisure_sum']/(data['city_leisure_sum_mean'])

data['public_service_sum/city'] = data['public_service_sum']/(data['city_public_service_sum_mean'])

data['trust_sum/city'] = data['trust_sum']/(data['city_trust_sum_mean'])

# ratio relative to the county mean 233 + 13 = 246

data['income/county'] = data['income']/(data['county_income_mean'])

data['family_income/county'] = data['family_income']/(data['county_family_income_mean'])

data['equity/county'] = data['equity']/(data['county_equity_mean'])

data['depression/county'] = data['depression']/(data['county_depression_mean'])

data['floor_area/county'] = data['floor_area']/(data['county_floor_area_mean'])

data['health/county'] = data['health']/(data['county_health_mean'])

data['class_10_diff/county'] = data['class_10_diff']/(data['county_class_10_diff_mean'])

data['class/county'] = data['class']/(data['county_class_mean'])

data['health_problem/county'] = data['health_problem']/(data['county_health_problem_mean'])

data['family_status/county'] = data['family_status']/(data['county_family_status_mean'])

data['leisure_sum/county'] = data['leisure_sum']/(data['county_leisure_sum_mean'])

data['public_service_sum/county'] = data['public_service_sum']/(data['county_public_service_sum_mean'])

data['trust_sum/county'] = data['trust_sum']/(data['county_trust_sum_mean'])

#age mean 246+ 13 =259

data['age_income_mean'] = data.groupby(['age'])['income'].transform('mean').values

data['age_family_income_mean'] = data.groupby(['age'])['family_income'].transform('mean').values

data['age_equity_mean'] = data.groupby(['age'])['equity'].transform('mean').values

data['age_depression_mean'] = data.groupby(['age'])['depression'].transform('mean').values

data['age_floor_area_mean'] = data.groupby(['age'])['floor_area'].transform('mean').values

data['age_health_mean'] = data.groupby(['age'])['health'].transform('mean').values

data['age_class_10_diff_mean'] = data.groupby(['age'])['class_10_diff'].transform('mean').values

data['age_class_mean'] = data.groupby(['age'])['class'].transform('mean').values

data['age_health_problem_mean'] = data.groupby(['age'])['health_problem'].transform('mean').values

data['age_family_status_mean'] = data.groupby(['age'])['family_status'].transform('mean').values

data['age_leisure_sum_mean'] = data.groupby(['age'])['leisure_sum'].transform('mean').values

data['age_public_service_sum_mean'] = data.groupby(['age'])['public_service_sum'].transform('mean').values

data['age_trust_sum_mean'] = data.groupby(['age'])['trust_sum'].transform('mean').values

# relative to peers of the same age 259 + 13 = 272

data['income/age'] = data['income']/(data['age_income_mean'])

data['family_income/age'] = data['family_income']/(data['age_family_income_mean'])

data['equity/age'] = data['equity']/(data['age_equity_mean'])

data['depression/age'] = data['depression']/(data['age_depression_mean'])

data['floor_area/age'] = data['floor_area']/(data['age_floor_area_mean'])

data['health/age'] = data['health']/(data['age_health_mean'])

data['class_10_diff/age'] = data['class_10_diff']/(data['age_class_10_diff_mean'])

data['class/age'] = data['class']/(data['age_class_mean'])

data['health_problem/age'] = data['health_problem']/(data['age_health_problem_mean'])

data['family_status/age'] = data['family_status']/(data['age_family_status_mean'])

data['leisure_sum/age'] = data['leisure_sum']/(data['age_leisure_sum_mean'])

data['public_service_sum/age'] = data['public_service_sum']/(data['age_public_service_sum_mean'])

data['trust_sum/age'] = data['trust_sum']/(data['age_trust_sum_mean'])
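The mean and ratio blocks above repeat one pattern for four grouping keys and 13 value columns. The sketch below, built around a hypothetical helper `add_group_features`, generates the same column names with two loops. Two assumed differences from the explicit code: the column order differs slightly, and the `+1` that the original adds only to the denominator of `trust_sum/province` is not reproduced.

```python
import pandas as pd

def add_group_features(data, group_cols, value_cols):
    """Hypothetical helper: add '<g>_<col>_mean' and '<col>/<g>' columns for every pair."""
    for g in group_cols:                     # e.g. 'province', 'city', 'county', 'age'
        for col in value_cols:               # e.g. 'income', 'health', ...
            data[f"{g}_{col}_mean"] = data.groupby([g])[col].transform("mean")
    for g in group_cols:
        for col in value_cols:
            data[f"{col}/{g}"] = data[col] / data[f"{g}_{col}_mean"]
    return data

# Toy usage with two grouping keys and two value columns (the article uses 13 value columns)
toy = pd.DataFrame({"province": [1, 1, 2], "age": [30, 40, 30],
                    "income": [100.0, 300.0, 50.0], "health": [4.0, 2.0, 3.0]})
toy = add_group_features(toy, ["province", "age"], ["income", "health"])
print(sorted(c for c in toy.columns if "/" in c))
```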

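cat_fea = ['survey_type','gender','nationality','edu_status','political','hukou','hukou_loc','work_exper','work_status','work_type',
           'work_manage','marital','s_political','s_hukou','s_work_exper','s_work_status','s_work_type','f_political','f_work_14',
           'm_political','m_work_14']  # categorical features to one-hot encode; features already coded as 0/1 need no one-hot encoding
# use_feature (the 263 retained feature columns) is not defined in the code shown here
noc_fea = [clo for clo in use_feature if clo not in cat_fea]  # the non-categorical features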

onehot_data = data[cat_fea].values

enc = preprocessing.OneHotEncoder(categories = 'auto')

oh_data=enc.fit_transform(onehot_data).toarray()

oh_data.shape  # shape after one-hot encoding

X_train_oh = oh_data[:train_shape,:]

X_test_oh = oh_data[train_shape:,:]

X_train_oh.shape  # the training part

X_train_383 = np.column_stack([data[:train_shape][noc_fea].values,X_train_oh])  # non-categorical columns first, then the one-hot columns

X_test_383 = np.column_stack([data[train_shape:][noc_fea].values,X_test_oh])

X_train_383.shape
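The encode-then-split steps above can be reproduced on a toy frame, as sketched below. Fitting the encoder on the combined train+test data means it sees every category value, so both matrices get the same one-hot columns. The column names and the split point are hypothetical.

```python
import numpy as np
import pandas as pd
from sklearn import preprocessing

data = pd.DataFrame({
    "gender": [1, 2, 1, 2],                  # categorical
    "political": [1, 1, 2, 4],               # categorical
    "income": [100.0, 200.0, 300.0, 400.0],  # non-categorical
})
cat_fea = ["gender", "political"]
noc_fea = ["income"]
train_shape = 3  # the first 3 rows play the role of the training set

enc = preprocessing.OneHotEncoder(categories="auto")
oh_data = enc.fit_transform(data[cat_fea].values).toarray()  # one column per category value
print(oh_data.shape)  # (4, 5): 2 gender values + 3 political values

# non-categorical columns first, then the one-hot columns
X_train = np.column_stack([data.iloc[:train_shape][noc_fea].values, oh_data[:train_shape, :]])
X_test = np.column_stack([data.iloc[train_shape:][noc_fea].values, oh_data[train_shape:, :]])
print(X_train.shape, X_test.shape)  # (3, 6) (1, 6)
```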

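'''1. What column_stack does

np.column_stack stacks two or more 1-D or 2-D arrays side by side as columns, i.e. along the second axis. This is useful for combining several arrays into one larger array. Unlike np.hstack, which joins 1-D arrays end to end into a longer 1-D array, column_stack first turns each 1-D input into a column vector and then stacks the columns.

2. Parameters

column_stack takes a single argument, a sequence (tuple or list) of the arrays to stack:

tup: the arrays to stack. They may be 1-D or 2-D, but they must all have the same first dimension (number of rows).

Internally column_stack is built on np.concatenate; for 2-D inputs it is equivalent to np.concatenate((a, b), axis=1), where a and b are the arrays being stacked.'''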
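A small usage example of the behavior described above: 1-D inputs become columns, mixed 1-D and 2-D inputs work as long as the row counts match, and for 2-D inputs the result equals `np.concatenate(..., axis=1)`.

```python
import numpy as np

a = np.array([1, 2, 3])                     # 1-D
b = np.array([4, 5, 6])                     # 1-D
M = np.array([[7, 8], [9, 10], [11, 12]])   # 2-D with 3 rows

cs = np.column_stack((a, b))      # 1-D inputs become columns -> shape (3, 2)
hs = np.hstack((a, b))            # 1-D inputs joined end to end -> shape (6,)
mixed = np.column_stack((a, M))   # 1-D and 2-D mixed -> shape (3, 3)

# For 2-D inputs, column_stack matches concatenate along axis 1
same = np.array_equal(np.column_stack((M, M)), np.concatenate((M, M), axis=1))
print(cs.shape, hs.shape, mixed.shape, same)  # (3, 2) (6,) (3, 3) True
```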

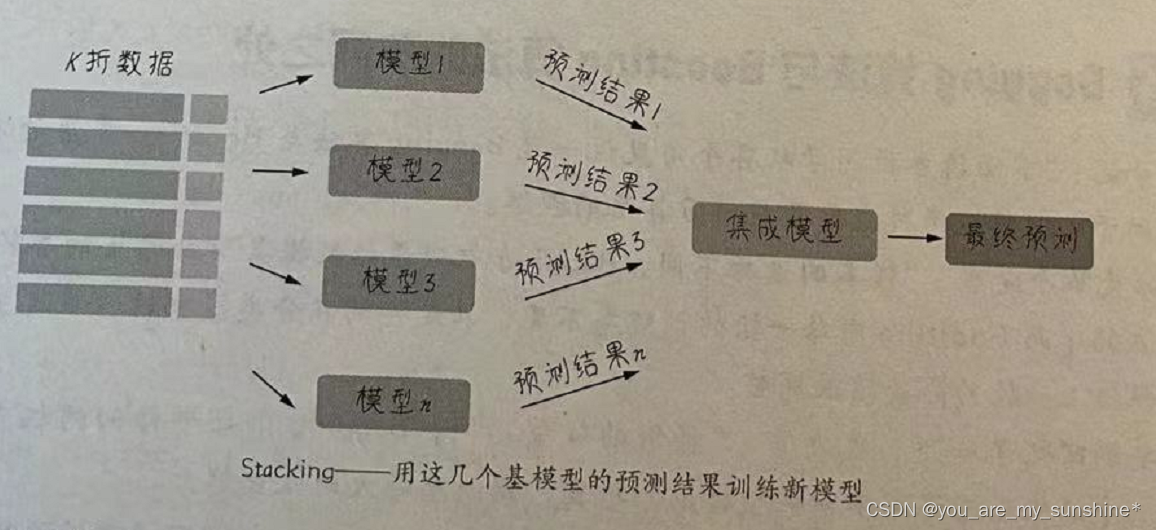

# Modeling

# First, train LightGBM on the original 263-dimensional features using 5-fold cross-validation:

# 1. LightGBM

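''' Key LightGBM parameters

2.1 Basic parameters

num_leaves (aliases num_leaf, max_leaves, max_leaf): default 31, the maximum number of leaves in one tree. This is the main parameter controlling model complexity; keep num_leaves smaller than 2^max_depth to avoid overfitting.

min_data_in_leaf: default 20, the minimum number of samples in a leaf. It helps against overfitting, and a good value depends on the number of training samples and on num_leaves. A large value prevents overly deep trees but may cause underfitting.

max_depth: limits the maximum tree depth, which helps prevent overfitting when the dataset is small. Trees still grow leaf-wise, and depth matters less for leaf-wise trees because there is no sensible mapping from the number of leaves to a depth.

2.2 Parameters for faster training

bagging_fraction (aliases sub_row, subsample): default 1.0; randomly selects a fraction of the rows without resampling. It only takes effect when bagging_freq is also set.

bagging_freq (alias subsample_freq): bagging frequency; 0 disables bagging, and k means bagging is performed every k iterations.

feature_fraction: randomly selects a fraction of the features for each tree (feature subsampling).

Use a smaller max_bin.

Use save_binary to speed up data loading in future runs.

2.3 Parameters for better accuracy

Use a larger max_bin (training may slow down).

Use a smaller learning_rate with a larger num_iterations.

Use a larger num_leaves (may cause overfitting).

Use more training data.

boosting: default gbdt, the boosting type. You can try dart (Dropouts meet Multiple Additive Regression Trees).

2.4 Parameters against overfitting

Use a smaller max_bin.

Use a smaller num_leaves.

min_data_in_leaf: default 20, the minimum number of samples in a leaf; a larger value helps against overfitting.

min_sum_hessian_in_leaf (aliases min_sum_hessian_per_leaf, min_sum_hessian, min_hessian): default 1e-3, the minimum sum of hessians in a leaf. Like min_data_in_leaf, it helps against overfitting.

Set bagging_fraction and bagging_freq to use bagging.

Set feature_fraction to use feature subsampling.

Use more training data.

Use regularization (lambda_l1, lambda_l2, min_gain_to_split).

max_depth: limit the maximum tree depth.

'''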
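Two of the rules of thumb above are easy to break silently: num_leaves should stay below 2^max_depth, and bagging_fraction does nothing while bagging_freq is 0. The sketch below is an overfitting-oriented parameter set with illustrative, untuned values, plus a hypothetical helper `check_params` (not a LightGBM function) that flags those two issues in plain Python.

```python
# Hypothetical overfitting-oriented LightGBM parameters (illustrative values, not tuned)
params = {
    "objective": "regression",
    "max_depth": 4,
    "num_leaves": 12,               # kept below 2 ** max_depth = 16
    "min_data_in_leaf": 40,         # larger leaves -> smoother trees
    "min_sum_hessian_in_leaf": 1e-2,
    "bagging_fraction": 0.7,        # row subsampling ...
    "bagging_freq": 1,              # ... only active when bagging_freq > 0
    "feature_fraction": 0.5,        # feature subsampling per tree
    "lambda_l1": 0.1,
    "lambda_l2": 0.2,
    "min_gain_to_split": 0.0,
    "max_bin": 127,                 # smaller than the default 255
}

def check_params(p):
    """Return a list of issues for the two rules of thumb described above."""
    issues = []
    if p.get("max_depth", -1) > 0 and p.get("num_leaves", 31) >= 2 ** p["max_depth"]:
        issues.append("num_leaves should be smaller than 2 ** max_depth")
    if p.get("bagging_fraction", 1.0) < 1.0 and p.get("bagging_freq", 0) == 0:
        issues.append("bagging_fraction has no effect while bagging_freq is 0")
    return issues

print(check_params(params))  # []
```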

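'''StratifiedKFold vs. KFold

K-fold cross-validation randomly splits the samples into K parts (usually of equal size). In each of K rounds, one part is held out as the validation set and the remaining K-1 parts are used for training, so every part serves as the validation set exactly once and K models are produced. The average of the K validation scores is then used as the performance estimate under K-fold CV.

Compared with a single train/validation split, this reduces the randomness that one particular split introduces into the estimate. However, because KFold splits at random, the class distribution of a fold can differ a lot from that of the whole dataset. In an extreme case, the training split of one fold may contain almost only label 0 while its validation split contains only label 1, so the model never learns the classes it is evaluated on. This is a real risk with KFold on imbalanced datasets, e.g. competition data where the positive class is very rare.

For imbalanced data, StratifiedKFold uses stratified sampling: every fold keeps the same class proportions as the original dataset. If the dataset has 4 classes in the ratio 2:3:3:2, each fold also has roughly a 2:3:3:2 ratio. StratifiedShuffleSplit() likewise keeps each class's proportion in every split equal to its proportion in the full dataset. Here the happiness labels are the discrete values 1 to 5, so StratifiedKFold can stratify on them even though the models below are regressors.'''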
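The imbalance problem described above can be made visible with 90 samples of class 0 followed by 10 of class 1. The sketch uses `shuffle=False` for KFold to exaggerate the effect; with shuffling, KFold's per-fold proportions merely fluctuate around the overall ratio, while StratifiedKFold keeps them fixed.

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold

# Imbalanced toy labels: 90 samples of class 0 followed by 10 of class 1
y = np.array([0] * 90 + [1] * 10)
X = np.zeros((len(y), 1))  # feature values are irrelevant for the split

def minority_share(splitter):
    """Fraction of class-1 samples in each validation fold."""
    return [float(y[val].mean()) for _, val in splitter.split(X, y)]

kf = minority_share(KFold(n_splits=5, shuffle=False))
skf = minority_share(StratifiedKFold(n_splits=5, shuffle=True, random_state=0))
print(kf)   # [0.0, 0.0, 0.0, 0.0, 0.5]: every class-1 sample ends up in the last fold
print(skf)  # each fold holds 2 of the 10 class-1 samples, a 0.1 share
```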

lgb_263_param={
    'num_leaves':7  # maximum number of leaves in one tree
    ,'min_data_in_leaf':20
    ,'objective':'regression'
    ,'max_depth':-1  # maximum tree depth; default -1, and a value below 0 means no limit
    ,'learning_rate':0.003
    ,'boosting':'gbdt'  # use the GBDT algorithm
    ,'feature_fraction':0.18  # each tree is built on a random 18% of the features
    ,'bagging_freq':1  # perform bagging every bagging_freq iterations; 0 disables bagging
    ,'bagging_fraction':0.55  # float in [0.0, 1.0], default 1.0; below 1.0, each iteration trains on a random subset of rows drawn without replacement (e.g. 0.8 means 80% of the rows before each tree)
    ,'bagging_seed':14  # random seed for bagging, default 3
    ,'metric':'mse'
    ,'lambda_l1':0.1  # L1 regularization coefficient, default 0
    ,'lambda_l2':0.2  # L2 regularization coefficient, default 0
    ,'verbosity':-1
}

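folds=StratifiedKFold(n_splits=5,shuffle=True,random_state=4)
# X_train_263, X_test_263 (NumPy arrays of the 263 features) and y_train (the training labels, i.e. target)
# are not defined in the code shown here
oof_lgb_263=np.zeros(len(X_train_263))  # out-of-fold predictions for the training set
predictions_lgb_263 = np.zeros(len(X_test_263))  # test predictions averaged over the folds
for fold_, (trn_idx, val_idx) in enumerate(folds.split(X_train_263, y_train)):
    print("fold n°{}".format(fold_+1))
    trn_data = lgb.Dataset(X_train_263[trn_idx], y_train[trn_idx])
    val_data = lgb.Dataset(X_train_263[val_idx], y_train[val_idx])  # train:val = 4:1
    num_round = 10000
    # LightGBM 4.x removed the verbose_eval / early_stopping_rounds arguments of lgb.train;
    # the same behavior is configured through callbacks (the log below came from the older arguments)
    lgb_263 = lgb.train(lgb_263_param, trn_data, num_round, valid_sets=[trn_data, val_data],
                        callbacks=[lgb.log_evaluation(500),    # print the metrics every 500 rounds
                                   lgb.early_stopping(800)])  # stop if the validation score has not improved for 800 rounds
    oof_lgb_263[val_idx] = lgb_263.predict(X_train_263[val_idx], num_iteration=lgb_263.best_iteration)
    predictions_lgb_263 += lgb_263.predict(X_test_263, num_iteration=lgb_263.best_iteration) / folds.n_splits
print("CV score: {:<8.8f}".format(mean_squared_error(target, oof_lgb_263)))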

fold n°1

Train until valid scores didn't improve in 800 rounds.

[500] training's l2: 0.501938 valid_1's l2: 0.533425

[1000] training's l2: 0.452726 valid_1's l2: 0.499756

[1500] training's l2: 0.426169 valid_1's l2: 0.485816

[2000] training's l2: 0.407667 valid_1's l2: 0.479159

[2500] training's l2: 0.393298 valid_1's l2: 0.475593

[3000] training's l2: 0.38095 valid_1's l2: 0.473463

[3500] training's l2: 0.370048 valid_1's l2: 0.472461

[4000] training's l2: 0.360177 valid_1's l2: 0.472099

[4500] training's l2: 0.351047 valid_1's l2: 0.471627

[5000] training's l2: 0.342613 valid_1's l2: 0.471167

[5500] training's l2: 0.334567 valid_1's l2: 0.470571

[6000] training's l2: 0.326888 valid_1's l2: 0.470352

[6500] training's l2: 0.319634 valid_1's l2: 0.470425

[7000] training's l2: 0.312596 valid_1's l2: 0.470277

[7500] training's l2: 0.305676 valid_1's l2: 0.470359

Early stopping, best iteration is:

[6730] training's l2: 0.316274 valid_1's l2: 0.470175

fold n°2

Train until valid scores didn't improve in 800 rounds.

[500] training's l2: 0.506635 valid_1's l2: 0.515786

[1000] training's l2: 0.456749 valid_1's l2: 0.481037

[1500] training's l2: 0.43009 valid_1's l2: 0.467209

[2000] training's l2: 0.411874 valid_1's l2: 0.459684

[2500] training's l2: 0.397771 valid_1's l2: 0.455079

[3000] training's l2: 0.385867 valid_1's l2: 0.451832

[3500] training's l2: 0.375203 valid_1's l2: 0.449445

[4000] training's l2: 0.365454 valid_1's l2: 0.44783

[4500] training's l2: 0.356243 valid_1's l2: 0.446691

[5000] training's l2: 0.347721 valid_1's l2: 0.445975

[5500] training's l2: 0.339731 valid_1's l2: 0.445269

[6000] training's l2: 0.332021 valid_1's l2: 0.444835

[6500] training's l2: 0.324729 valid_1's l2: 0.44425

[7000] training's l2: 0.317772 valid_1's l2: 0.444009

[7500] training's l2: 0.311109 valid_1's l2: 0.443739

[8000] training's l2: 0.30449 valid_1's l2: 0.443386

[8500] training's l2: 0.298101 valid_1's l2: 0.443137

[9000] training's l2: 0.292145 valid_1's l2: 0.44313

Early stopping, best iteration is:

[8324] training's l2: 0.300343 valid_1's l2: 0.443033

fold n°3

Train until valid scores didn't improve in 800 rounds.

[500] training's l2: 0.505526 valid_1's l2: 0.519868

[1000] training's l2: 0.456471 valid_1's l2: 0.481007

[1500] training's l2: 0.430593 valid_1's l2: 0.46361

[2000] training's l2: 0.412584 valid_1's l2: 0.453949

[2500] training's l2: 0.398557 valid_1's l2: 0.448174

[3000] training's l2: 0.386372 valid_1's l2: 0.44438

[3500] training's l2: 0.375676 valid_1's l2: 0.44174

[4000] training's l2: 0.365675 valid_1's l2: 0.439735

[4500] training's l2: 0.356566 valid_1's l2: 0.438129

[5000] training's l2: 0.347892 valid_1's l2: 0.437464

[5500] training's l2: 0.339832 valid_1's l2: 0.43662

[6000] training's l2: 0.332046 valid_1's l2: 0.436186

[6500] training's l2: 0.324662 valid_1's l2: 0.43595

[7000] training's l2: 0.317693 valid_1's l2: 0.435917

[7500] training's l2: 0.310908 valid_1's l2: 0.435647

[8000] training's l2: 0.30429 valid_1's l2: 0.435682

Early stopping, best iteration is:

[7464] training's l2: 0.311372 valid_1's l2: 0.435602

fold n°4

Train until valid scores didn't improve in 800 rounds.

[500] training's l2: 0.506569 valid_1's l2: 0.515015

[1000] training's l2: 0.456554 valid_1's l2: 0.479715

[1500] training's l2: 0.429392 valid_1's l2: 0.466167

[2000] training's l2: 0.41121 valid_1's l2: 0.460294

[2500] training's l2: 0.396948 valid_1's l2: 0.456717

[3000] training's l2: 0.384836 valid_1's l2: 0.454312

[3500] training's l2: 0.374174 valid_1's l2: 0.452828

[4000] training's l2: 0.36424 valid_1's l2: 0.45174

[4500] training's l2: 0.35519 valid_1's l2: 0.451167

[5000] training's l2: 0.346832 valid_1's l2: 0.450534

[5500] training's l2: 0.33868 valid_1's l2: 0.449806

[6000] training's l2: 0.331086 valid_1's l2: 0.449443

[6500] training's l2: 0.323667 valid_1's l2: 0.448818

[7000] training's l2: 0.316767 valid_1's l2: 0.448549

[7500] training's l2: 0.31012 valid_1's l2: 0.448251

[8000] training's l2: 0.303621 valid_1's l2: 0.447867

[8500] training's l2: 0.297443 valid_1's l2: 0.447644

[9000] training's l2: 0.291354 valid_1's l2: 0.447408

[9500] training's l2: 0.285561 valid_1's l2: 0.447243

[10000] training's l2: 0.2798 valid_1's l2: 0.447077

fold n°5

Train until valid scores didn't improve in 800 rounds.

[500] training's l2: 0.505541 valid_1's l2: 0.522032

[1000] training's l2: 0.455765 valid_1's l2: 0.485519

[1500] training's l2: 0.428977 valid_1's l2: 0.471416

[2000] training's l2: 0.41045 valid_1's l2: 0.464337

[2500] training's l2: 0.39606 valid_1's l2: 0.461031

[3000] training's l2: 0.383816 valid_1's l2: 0.459759

[3500] training's l2: 0.3729 valid_1's l2: 0.458638

[4000] training's l2: 0.362829 valid_1's l2: 0.458508

[4500] training's l2: 0.353462 valid_1's l2: 0.458464

[5000] training's l2: 0.344711 valid_1's l2: 0.458295

[5500] training's l2: 0.336685 valid_1's l2: 0.458603

Early stopping, best iteration is:

[5021] training's l2: 0.34437 valid_1's l2: 0.458202

CV score: 0.45081752
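The loop above follows the usual out-of-fold (OOF) pattern: each training row is predicted exactly once by the fold model that did not see it, the MSE of those OOF predictions is the CV score, and test predictions are averaged over the fold models. The sketch below reproduces the pattern on synthetic data. It swaps in scikit-learn's `Ridge` for LightGBM so that it runs with scikit-learn alone, and uses `KFold` because its synthetic target is continuous.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold

# Synthetic regression data standing in for X_train_263 / y_train / X_test_263
rng = np.random.default_rng(0)
X_train = rng.normal(size=(200, 5))
y_train = X_train @ np.array([1.0, -0.5, 0.0, 2.0, 0.3]) + rng.normal(scale=0.1, size=200)
X_test = rng.normal(size=(50, 5))

folds = KFold(n_splits=5, shuffle=True, random_state=4)
oof = np.zeros(len(X_train))         # each row is filled once, by the model that did not train on it
predictions = np.zeros(len(X_test))  # test predictions averaged over the 5 fold models

for trn_idx, val_idx in folds.split(X_train, y_train):
    model = Ridge(alpha=1.0).fit(X_train[trn_idx], y_train[trn_idx])
    oof[val_idx] = model.predict(X_train[val_idx])
    predictions += model.predict(X_test) / folds.n_splits

cv_mse = mean_squared_error(y_train, oof)
print("CV score: {:<8.8f}".format(cv_mse))
```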

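'''1. pd.set_option('expand_frame_repr', False)

True lets a wide DataFrame wrap across several lines when printed; False disables wrapping.

2. pd.set_option('display.max_rows', 10)

pd.set_option('display.max_columns', 10)

These set the maximum number of rows and columns to display; beyond that, pandas shows an ellipsis. max_columns counts DataFrame columns. With many columns and wrapping disabled, the output can become hard to read.

'''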

pd.set_option('display.max_columns', None)  # show all columns
pd.set_option('display.max_rows', None)  # show all rows
pd.set_option('display.max_colwidth', 100)  # show up to 100 characters per value (default 50)
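Settings made with `pd.set_option` stay in effect for the rest of the session, so showing all rows can later print huge frames. The sketch below uses `pd.option_context` to apply options only inside a `with` block and `pd.reset_option` to restore a default.

```python
import pandas as pd

default_colwidth = pd.get_option("display.max_colwidth")  # 50 in a fresh session

# Options set through option_context apply only inside the with-block
with pd.option_context("display.max_columns", None, "display.max_colwidth", 100):
    inside = pd.get_option("display.max_colwidth")  # 100 here
after = pd.get_option("display.max_colwidth")       # restored when the block exits

# set_option changes the setting globally until it is reset
pd.set_option("display.max_rows", None)
unlimited_rows = pd.get_option("display.max_rows")  # None
pd.reset_option("display.max_rows")                 # back to the default (60)
```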

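# df is assumed to be a DataFrame with 'feature' and 'importance' columns, sorted by importance in descending order;
# the code that builds it is not shown here
plt.figure(figsize=(14,28))
sns.barplot(x='importance',y='feature',data=df.head(50))  # x, y: column names in df; plots the 50 most important features
plt.tight_layout()
# plt.tight_layout() automatically adjusts the spacing between subplots and the margins around them so that titles,
# axis labels, tick labels and other text do not overlap or get clipped, giving a more compact layout.
# It ignores invisible subplots; with several subplots, it helps avoid both overlaps and overly large gaps between them.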
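The text does not show how `df` was built. With a LightGBM booster, `Booster.feature_name()` and `Booster.feature_importance()` would supply the two columns. The sketch below uses a scikit-learn random forest as a stand-in so that it runs without LightGBM; the data and feature names are synthetic and hypothetical.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(0)
X = rng.normal(size=(300, 4))
y = 3 * X[:, 0] + 0.5 * X[:, 1] + rng.normal(scale=0.1, size=300)  # f0 matters most
feature_names = ["f0", "f1", "f2", "f3"]  # hypothetical names

model = RandomForestRegressor(n_estimators=50, random_state=0).fit(X, y)
df = (pd.DataFrame({"feature": feature_names, "importance": model.feature_importances_})
        .sort_values("importance", ascending=False)
        .reset_index(drop=True))
print(df)
# df.head(50) can then be passed to sns.barplot(x='importance', y='feature', data=...)
```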

##### xgb_263

#xgboost

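# the log below was produced with 'objective': 'reg:linear' and 'silent': True; current XGBoost deprecates
# 'reg:linear' (same loss as 'reg:squarederror') and ignores 'silent' (see the warnings), so both are replaced here
xgb_263_params = {'eta': 0.02,  # learning rate
                  'max_depth': 6,
                  'min_child_weight': 3,  # minimum sum of instance weights (hessian) required in a child
                  'gamma': 0,  # minimum loss reduction required to split a node
                  'subsample': 0.7,  # fraction of rows randomly sampled for each tree
                  'colsample_bytree': 0.3,  # fraction of columns (features) randomly sampled for each tree
                  'lambda': 2,  # L2 regularization on leaf weights
                  'objective': 'reg:squarederror',
                  'eval_metric': 'rmse',
                  'verbosity': 0,
                  'nthread': -1}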
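XGBoost is evaluated here with RMSE, while the competition metric and the LightGBM log (`l2`) use MSE, which is simply RMSE squared. The sketch below converts the best validation RMSE values that the XGBoost training log reports for folds 1 to 4 into MSE so that the two models can be compared on the same scale.

```python
import numpy as np

# Best validation RMSE values reported in the XGBoost log for folds 1-4
best_valid_rmse = np.array([0.70521, 0.69403, 0.66178, 0.66358])
best_valid_mse = best_valid_rmse ** 2  # MSE = RMSE ** 2
print(best_valid_mse.round(4))
```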

folds = StratifiedKFold(n_splits=5, shuffle=True, random_state=2019)
oof_xgb_263 = np.zeros(len(X_train_263))
predictions_xgb_263 = np.zeros(len(X_test_263))
for fold_, (trn_idx, val_idx) in enumerate(folds.split(X_train_263, y_train)):
    print("fold n°{}".format(fold_+1))
    trn_data = xgb.DMatrix(X_train_263[trn_idx], y_train[trn_idx])
    val_data = xgb.DMatrix(X_train_263[val_idx], y_train[val_idx])
    watchlist = [(trn_data, 'train'), (val_data, 'valid_data')]
    xgb_263 = xgb.train(dtrain=trn_data, num_boost_round=3000, evals=watchlist, early_stopping_rounds=600, verbose_eval=500, params=xgb_263_params)
    # ntree_limit / best_ntree_limit were removed in XGBoost 2.0; iteration_range keeps the trees up to the best iteration
    best_range = (0, xgb_263.best_iteration + 1)
    oof_xgb_263[val_idx] = xgb_263.predict(xgb.DMatrix(X_train_263[val_idx]), iteration_range=best_range)
    predictions_xgb_263 += xgb_263.predict(xgb.DMatrix(X_test_263), iteration_range=best_range) / folds.n_splits
print("CV score: {:<8.8f}".format(mean_squared_error(target, oof_xgb_263)))

fold n°1

[19:14:55] WARNING: /Users/travis/build/dmlc/xgboost/src/objective/regression_obj.cu:170: reg:linear is now deprecated in favor of reg:squarederror.

[19:14:55] WARNING: /Users/travis/build/dmlc/xgboost/src/learner.cc:480:

Parameters: { silent } might not be used.

This may not be accurate due to some parameters are only used in language bindings but

passed down to XGBoost core. Or some parameters are not used but slip through this

verification. Please open an issue if you find above cases.

[0] train-rmse:3.40426 valid_data-rmse:3.38329

Multiple eval metrics have been passed: ‘valid_data-rmse’ will be used for early stopping.

Will train until valid_data-rmse hasn’t improved in 600 rounds.

[500] train-rmse:0.40805 valid_data-rmse:0.70588

[1000] train-rmse:0.27046 valid_data-rmse:0.70760

Stopping. Best iteration:

[663] train-rmse:0.35644 valid_data-rmse:0.70521

[19:15:46] WARNING: /Users/travis/build/dmlc/xgboost/src/objective/regression_obj.cu:170: reg:linear is now deprecated in favor of reg:squarederror.

fold n°2

[19:15:46] WARNING: /Users/travis/build/dmlc/xgboost/src/objective/regression_obj.cu:170: reg:linear is now deprecated in favor of reg:squarederror.

[19:15:46] WARNING: /Users/travis/build/dmlc/xgboost/src/learner.cc:480:

Parameters: { silent } might not be used.

This may not be accurate due to some parameters are only used in language bindings but

passed down to XGBoost core. Or some parameters are not used but slip through this

verification. Please open an issue if you find above cases.

[0] train-rmse:3.39811 valid_data-rmse:3.40788

Multiple eval metrics have been passed: ‘valid_data-rmse’ will be used for early stopping.

Will train until valid_data-rmse hasn’t improved in 600 rounds.

[500] train-rmse:0.40719 valid_data-rmse:0.69456

[1000] train-rmse:0.27402 valid_data-rmse:0.69501

Stopping. Best iteration:

[551] train-rmse:0.39079 valid_data-rmse:0.69403

[19:16:31] WARNING: /Users/travis/build/dmlc/xgboost/src/objective/regression_obj.cu:170: reg:linear is now deprecated in favor of reg:squarederror.

fold n°3

[19:16:31] WARNING: /Users/travis/build/dmlc/xgboost/src/objective/regression_obj.cu:170: reg:linear is now deprecated in favor of reg:squarederror.

[19:16:31] WARNING: /Users/travis/build/dmlc/xgboost/src/learner.cc:480:

Parameters: { silent } might not be used.

This may not be accurate due to some parameters are only used in language bindings but

passed down to XGBoost core. Or some parameters are not used but slip through this

verification. Please open an issue if you find above cases.

[0] train-rmse:3.40181 valid_data-rmse:3.39295

Multiple eval metrics have been passed: ‘valid_data-rmse’ will be used for early stopping.

Will train until valid_data-rmse hasn’t improved in 600 rounds.

[500] train-rmse:0.41334 valid_data-rmse:0.66250

Stopping. Best iteration:

[333] train-rmse:0.47284 valid_data-rmse:0.66178

fold n°4

[0] train-rmse:3.40240 valid_data-rmse:3.39012

Multiple eval metrics have been passed: ‘valid_data-rmse’ will be used for early stopping.

Will train until valid_data-rmse hasn’t improved in 600 rounds.

[500] train-rmse:0.41021 valid_data-rmse:0.66575

[1000] train-rmse:0.27491 valid_data-rmse:0.66431

Stopping. Best iteration:

[863] train-rmse:0.30689 valid_data-rmse:0.66358

fold n°5

[0] train-rmse:3.39347 valid_data-rmse:3.42628

Multiple eval metrics have been passed: ‘valid_data-rmse’ will be used for early stopping.

Will train until valid_data-rmse hasn’t improved in 600 rounds.

[500] train-rmse:0.41704 valid_data-rmse:0.64937

[1000] train-rmse:0.27907 valid_data-rmse:0.64914

Stopping. Best iteration:

[598] train-rmse:0.38625 valid_data-rmse:0.64856

CV score: 0.45559329
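The XGBoost log reports a validation RMSE per fold at the best iteration, while `CV score` is the MSE over all out-of-fold predictions, which is the competition metric. As a rough consistency check, squaring the five best-iteration RMSE values printed above and averaging them should land near the reported CV score. This check assumes that the out-of-fold predictions were made at each fold's best iteration and that the five folds are close to equal in size. It is a back-of-the-envelope sketch built only from the logged numbers, not part of the original pipeline.

```python
# Best-iteration validation RMSE of each fold, copied from the log above
fold_rmse = [0.70521, 0.69403, 0.66178, 0.66358, 0.64856]

# Square each RMSE to recover that fold's MSE, then average over folds.
# With (near-)equal fold sizes this approximates the MSE over all out-of-fold rows.
fold_mse = [r ** 2 for r in fold_rmse]
approx_cv_mse = sum(fold_mse) / len(fold_mse)

print("per-fold MSE:", [round(m, 5) for m in fold_mse])
print("approx. CV MSE: {:.5f}".format(approx_cv_mse))
```

The average comes out at about 0.4556, which matches the reported CV score of 0.45559329 to four decimal places. A small remainder is expected because the folds are not exactly equal in size and the printed RMSE values are rounded.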

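# RandomForestRegressor (random forest)
folds = KFold(n_splits=5, shuffle=True, random_state=2019)
oof_rfr_263 = np.zeros(len(X_train_263))          # out-of-fold predictions on the training set
predictions_rfr_263 = np.zeros(len(X_test_263))   # test-set predictions, averaged over the folds

for fold_, (trn_idx, val_idx) in enumerate(folds.split(X_train_263, y_train)):
    print("fold n°{}".format(fold_+1))
    tr_x = X_train_263[trn_idx]
    tr_y = y_train[trn_idx]
    rfr_263 = rfr(n_estimators=1600, max_depth=9, min_samples_leaf=9, min_weight_fraction_leaf=0.0,
                  max_features=0.25, verbose=1, n_jobs=-1)  # n_jobs=-1: build trees in parallel on all CPU cores
    # verbose sets the verbosity of joblib, which runs the parallel tree building:
    # verbose = 0 prints nothing
    # verbose = 1 prints periodic "Done N tasks | elapsed" progress lines (see the log below)
    # larger values print progress messages more often
    # predict() reuses the same verbose/n_jobs settings, so each predict call also logs its progress
    rfr_263.fit(tr_x, tr_y)
    oof_rfr_263[val_idx] = rfr_263.predict(X_train_263[val_idx])
    predictions_rfr_263 += rfr_263.predict(X_test_263) / folds.n_splits

print("CV score: {:<8.8f}".format(mean_squared_error(target, oof_rfr_263)))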

fold n°1

[Parallel(n_jobs=-1)]: Using backend ThreadingBackend with 8 concurrent workers.

[Parallel(n_jobs=-1)]: Done 34 tasks | elapsed: 0.6s

[Parallel(n_jobs=-1)]: Done 184 tasks | elapsed: 2.6s

[Parallel(n_jobs=-1)]: Done 434 tasks | elapsed: 6.5s

[Parallel(n_jobs=-1)]: Done 784 tasks | elapsed: 11.8s

[Parallel(n_jobs=-1)]: Done 1234 tasks | elapsed: 18.9s

[Parallel(n_jobs=-1)]: Done 1600 out of 1600 | elapsed: 25.6s finished

[Parallel(n_jobs=8)]: Using backend ThreadingBackend with 8 concurrent workers.

[Parallel(n_jobs=8)]: Done 34 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 184 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 434 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 784 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 1234 tasks | elapsed: 0.2s

[Parallel(n_jobs=8)]: Done 1600 out of 1600 | elapsed: 0.2s finished

[Parallel(n_jobs=8)]: Using backend ThreadingBackend with 8 concurrent workers.

[Parallel(n_jobs=8)]: Done 34 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 184 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 434 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 784 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 1234 tasks | elapsed: 0.2s

[Parallel(n_jobs=8)]: Done 1600 out of 1600 | elapsed: 0.2s finished

fold n°2

[Parallel(n_jobs=-1)]: Using backend ThreadingBackend with 8 concurrent workers.

[Parallel(n_jobs=-1)]: Done 34 tasks | elapsed: 0.6s

[Parallel(n_jobs=-1)]: Done 184 tasks | elapsed: 2.8s

[Parallel(n_jobs=-1)]: Done 434 tasks | elapsed: 6.9s

[Parallel(n_jobs=-1)]: Done 784 tasks | elapsed: 12.9s

[Parallel(n_jobs=-1)]: Done 1234 tasks | elapsed: 21.0s

[Parallel(n_jobs=-1)]: Done 1600 out of 1600 | elapsed: 27.5s finished

[Parallel(n_jobs=8)]: Using backend ThreadingBackend with 8 concurrent workers.

[Parallel(n_jobs=8)]: Done 34 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 184 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 434 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 784 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 1234 tasks | elapsed: 0.2s

[Parallel(n_jobs=8)]: Done 1600 out of 1600 | elapsed: 0.2s finished

[Parallel(n_jobs=8)]: Using backend ThreadingBackend with 8 concurrent workers.

[Parallel(n_jobs=8)]: Done 34 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 184 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 434 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 784 tasks | elapsed: 0.2s

[Parallel(n_jobs=8)]: Done 1234 tasks | elapsed: 0.2s

[Parallel(n_jobs=8)]: Done 1600 out of 1600 | elapsed: 0.3s finished

fold n°3

[Parallel(n_jobs=-1)]: Using backend ThreadingBackend with 8 concurrent workers.

[Parallel(n_jobs=-1)]: Done 34 tasks | elapsed: 0.6s

[Parallel(n_jobs=-1)]: Done 184 tasks | elapsed: 3.4s

[Parallel(n_jobs=-1)]: Done 434 tasks | elapsed: 7.6s

[Parallel(n_jobs=-1)]: Done 784 tasks | elapsed: 13.7s

[Parallel(n_jobs=-1)]: Done 1234 tasks | elapsed: 21.0s

[Parallel(n_jobs=-1)]: Done 1600 out of 1600 | elapsed: 26.9s finished

[Parallel(n_jobs=8)]: Using backend ThreadingBackend with 8 concurrent workers.

[Parallel(n_jobs=8)]: Done 34 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 184 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 434 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 784 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 1234 tasks | elapsed: 0.2s

[Parallel(n_jobs=8)]: Done 1600 out of 1600 | elapsed: 0.2s finished

[Parallel(n_jobs=8)]: Using backend ThreadingBackend with 8 concurrent workers.

[Parallel(n_jobs=8)]: Done 34 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 184 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 434 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 784 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 1234 tasks | elapsed: 0.2s

[Parallel(n_jobs=8)]: Done 1600 out of 1600 | elapsed: 0.2s finished

fold n°4

[Parallel(n_jobs=-1)]: Using backend ThreadingBackend with 8 concurrent workers.

[Parallel(n_jobs=-1)]: Done 34 tasks | elapsed: 0.8s

[Parallel(n_jobs=-1)]: Done 184 tasks | elapsed: 3.5s

[Parallel(n_jobs=-1)]: Done 434 tasks | elapsed: 7.9s

[Parallel(n_jobs=-1)]: Done 784 tasks | elapsed: 13.3s

[Parallel(n_jobs=-1)]: Done 1234 tasks | elapsed: 20.6s

[Parallel(n_jobs=-1)]: Done 1600 out of 1600 | elapsed: 26.1s finished

[Parallel(n_jobs=8)]: Using backend ThreadingBackend with 8 concurrent workers.

[Parallel(n_jobs=8)]: Done 34 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 184 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 434 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 784 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 1234 tasks | elapsed: 0.2s

[Parallel(n_jobs=8)]: Done 1600 out of 1600 | elapsed: 0.2s finished

[Parallel(n_jobs=8)]: Using backend ThreadingBackend with 8 concurrent workers.

[Parallel(n_jobs=8)]: Done 34 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 184 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 434 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 784 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 1234 tasks | elapsed: 0.2s

[Parallel(n_jobs=8)]: Done 1600 out of 1600 | elapsed: 0.2s finished

fold n°5

[Parallel(n_jobs=-1)]: Using backend ThreadingBackend with 8 concurrent workers.

[Parallel(n_jobs=-1)]: Done 34 tasks | elapsed: 0.6s

[Parallel(n_jobs=-1)]: Done 184 tasks | elapsed: 2.7s

[Parallel(n_jobs=-1)]: Done 434 tasks | elapsed: 6.8s

[Parallel(n_jobs=-1)]: Done 784 tasks | elapsed: 12.2s

[Parallel(n_jobs=-1)]: Done 1234 tasks | elapsed: 19.2s

[Parallel(n_jobs=-1)]: Done 1600 out of 1600 | elapsed: 25.1s finished

[Parallel(n_jobs=8)]: Using backend ThreadingBackend with 8 concurrent workers.

[Parallel(n_jobs=8)]: Done 34 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 184 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 434 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 784 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 1234 tasks | elapsed: 0.2s

[Parallel(n_jobs=8)]: Done 1600 out of 1600 | elapsed: 0.2s finished

[Parallel(n_jobs=8)]: Using backend ThreadingBackend with 8 concurrent workers.

[Parallel(n_jobs=8)]: Done 34 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 184 tasks | elapsed: 0.0s

[Parallel(n_jobs=8)]: Done 434 tasks | elapsed: 0.1s

[Parallel(n_jobs=8)]: Done 1234 tasks | elapsed: 0.2s

[Parallel(n_jobs=8)]: Done 1600 out of 1600 | elapsed: 0.3s finished

CV score: 0.47804209
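The random-forest loop follows the same K-fold pattern as the XGBoost loop. Every training row receives exactly one out-of-fold prediction from the fold model that did not see it, and the test-set predictions of the five fold models are averaged. The sketch below shows that pattern in plain NumPy so it can run without the competition data. It is illustrative only. `kfold_oof`, `fit_ols` and `predict_ols` are hypothetical helpers. An ordinary least-squares model stands in for `RandomForestRegressor`, and the data are synthetic. The shuffling uses NumPy's own generator, so the fold assignment will not match `KFold(n_splits=5, shuffle=True, random_state=2019)`.

```python
import numpy as np

def kfold_oof(X, y, X_test, fit, predict, n_splits=5, seed=2019):
    """Return out-of-fold predictions for X and fold-averaged predictions for X_test."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))              # shuffle rows before splitting
    folds = np.array_split(idx, n_splits)      # near-equal validation folds
    oof = np.zeros(len(X))
    test_pred = np.zeros(len(X_test))
    for k, val_idx in enumerate(folds):
        # train on every fold except the k-th one
        trn_idx = np.concatenate([f for j, f in enumerate(folds) if j != k])
        model = fit(X[trn_idx], y[trn_idx])
        oof[val_idx] = predict(model, X[val_idx])        # each row predicted exactly once
        test_pred += predict(model, X_test) / n_splits   # average over fold models
    return oof, test_pred

# Hypothetical stand-in model: ordinary least squares with an intercept
def fit_ols(X, y):
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def predict_ols(coef, X):
    return coef[0] + X @ coef[1:]

# Synthetic data in place of X_train_263 / y_train / X_test_263
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + 3.0 + rng.normal(scale=0.1, size=200)
X_test = rng.normal(size=(50, 3))

oof, test_pred = kfold_oof(X, y, X_test, fit_ols, predict_ols)
cv_mse = np.mean((oof - y) ** 2)   # same quantity the notebook prints as "CV score"
print("CV score: {:<8.8f}".format(cv_mse))
```

Because the metric is MSE (lower is better), the random forest's CV score of 0.47804209 is worse than the 0.45559329 reported for the XGBoost model above.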