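Visual SLAM Study Log [7-2]: Visual Odometry, Feature-Point Method, Code Walkthrough

1. OpenCV feature extraction and matching: orb_cv.cpp

This part is explained in detail in another blog post: click to jump

2. Hand-written ORB features: orb_self.cpp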

#include <opencv2/opencv.hpp>

#include <string>

#include <nmmintrin.h>

#include <chrono>

using namespace std;

string first_file = "./1.png";

string second_file = "./2.png";

typedef vector<uint32_t> DescType;

void ComputeORB(const cv::Mat &img, vector<cv::KeyPoint> &keypoints, vector<DescType> &descriptors);

void BfMatch(const vector<DescType> &desc1, const vector<DescType> &desc2, vector<cv::DMatch> &matches);

int main(int argc, char **argv) {

cv::Mat first_image = cv::imread(first_file, 0);

cv::Mat second_image = cv::imread(second_file, 0);

assert(first_image.data != nullptr && second_image.data != nullptr);

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

vector<cv::KeyPoint> keypoints1;

cv::FAST(first_image, keypoints1, 40);

vector<DescType> descriptor1;

ComputeORB(first_image, keypoints1, descriptor1);

vector<cv::KeyPoint> keypoints2;

vector<DescType> descriptor2;

cv::FAST(second_image, keypoints2, 40);

ComputeORB(second_image, keypoints2, descriptor2);

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "extract ORB cost = " << time_used.count() << " seconds. " << endl;

vector<cv::DMatch> matches;

t1 = chrono::steady_clock::now();

BfMatch(descriptor1, descriptor2, matches);

t2 = chrono::steady_clock::now();

time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "match ORB cost = " << time_used.count() << " seconds. " << endl;

cout << "matches: " << matches.size() << endl;

cv::Mat image_show;

cv::drawMatches(first_image, keypoints1, second_image, keypoints2, matches, image_show);

cv::imshow("matches", image_show);

cv::imwrite("matches.png", image_show);

cv::waitKey(0);

cout << "done." << endl;

return 0;

}

#pragma region ORB_pattern[256 * 4]: 256 point pairs (p, q) picked at random within about [-13, 12] of the keypoint, used to build the keypoint's descriptor vector

int ORB_pattern[256 * 4] = {

8, -3, 9, 5,

4, 2, 7, -12,

-11, 9, -8, 2,

7, -12, 12, -13,

2, -13, 2, 12,

1, -7, 1, 6,

-2, -10, -2, -4,

-13, -13, -11, -8,

-13, -3, -12, -9,

10, 4, 11, 9,

-13, -8, -8, -9,

-11, 7, -9, 12,

7, 7, 12, 6,

-4, -5, -3, 0,

-13, 2, -12, -3,

-9, 0, -7, 5,

12, -6, 12, -1,

-3, 6, -2, 12,

-6, -13, -4, -8,

11, -13, 12, -8,

4, 7, 5, 1,

5, -3, 10, -3,

3, -7, 6, 12,

-8, -7, -6, -2,

-2, 11, -1, -10,

-13, 12, -8, 10,

-7, 3, -5, -3,

-4, 2, -3, 7,

-10, -12, -6, 11,

5, -12, 6, -7,

5, -6, 7, -1,

1, 0, 4, -5,

9, 11, 11, -13,

4, 7, 4, 12,

2, -1, 4, 4,

-4, -12, -2, 7,

-8, -5, -7, -10,

4, 11, 9, 12,

0, -8, 1, -13,

-13, -2, -8, 2,

-3, -2, -2, 3,

-6, 9, -4, -9,

8, 12, 10, 7,

0, 9, 1, 3,

7, -5, 11, -10,

-13, -6, -11, 0,

10, 7, 12, 1,

-6, -3, -6, 12,

10, -9, 12, -4,

-13, 8, -8, -12,

-13, 0, -8, -4,

3, 3, 7, 8,

5, 7, 10, -7,

-1, 7, 1, -12,

3, -10, 5, 6,

2, -4, 3, -10,

-13, 0, -13, 5,

-13, -7, -12, 12,

-13, 3, -11, 8,

-7, 12, -4, 7,

6, -10, 12, 8,

-9, -1, -7, -6,

-2, -5, 0, 12,

-12, 5, -7, 5,

3, -10, 8, -13,

-7, -7, -4, 5,

-3, -2, -1, -7,

2, 9, 5, -11,

-11, -13, -5, -13,

-1, 6, 0, -1,

5, -3, 5, 2,

-4, -13, -4, 12,

-9, -6, -9, 6,

-12, -10, -8, -4,

10, 2, 12, -3,

7, 12, 12, 12,

-7, -13, -6, 5,

-4, 9, -3, 4,

7, -1, 12, 2,

-7, 6, -5, 1,

-13, 11, -12, 5,

-3, 7, -2, -6,

7, -8, 12, -7,

-13, -7, -11, -12,

1, -3, 12, 12,

2, -6, 3, 0,

-4, 3, -2, -13,

-1, -13, 1, 9,

7, 1, 8, -6,

1, -1, 3, 12,

9, 1, 12, 6,

-1, -9, -1, 3,

-13, -13, -10, 5,

7, 7, 10, 12,

12, -5, 12, 9,

6, 3, 7, 11,

5, -13, 6, 10,

2, -12, 2, 3,

3, 8, 4, -6,

2, 6, 12, -13,

9, -12, 10, 3,

-8, 4, -7, 9,

-11, 12, -4, -6,

1, 12, 2, -8,

6, -9, 7, -4,

2, 3, 3, -2,

6, 3, 11, 0,

3, -3, 8, -8,

7, 8, 9, 3,

-11, -5, -6, -4,

-10, 11, -5, 10,

-5, -8, -3, 12,

-10, 5, -9, 0,

8, -1, 12, -6,

4, -6, 6, -11,

-10, 12, -8, 7,

4, -2, 6, 7,

-2, 0, -2, 12,

-5, -8, -5, 2,

7, -6, 10, 12,

-9, -13, -8, -8,

-5, -13, -5, -2,

8, -8, 9, -13,

-9, -11, -9, 0,

1, -8, 1, -2,

7, -4, 9, 1,

-2, 1, -1, -4,

11, -6, 12, -11,

-12, -9, -6, 4,

3, 7, 7, 12,

5, 5, 10, 8,

0, -4, 2, 8,

-9, 12, -5, -13,

0, 7, 2, 12,

-1, 2, 1, 7,

5, 11, 7, -9,

3, 5, 6, -8,

-13, -4, -8, 9,

-5, 9, -3, -3,

-4, -7, -3, -12,

6, 5, 8, 0,

-7, 6, -6, 12,

-13, 6, -5, -2,

1, -10, 3, 10,

4, 1, 8, -4,

-2, -2, 2, -13,

2, -12, 12, 12,

-2, -13, 0, -6,

4, 1, 9, 3,

-6, -10, -3, -5,

-3, -13, -1, 1,

7, 5, 12, -11,

4, -2, 5, -7,

-13, 9, -9, -5,

7, 1, 8, 6,

7, -8, 7, 6,

-7, -4, -7, 1,

-8, 11, -7, -8,

-13, 6, -12, -8,

2, 4, 3, 9,

10, -5, 12, 3,

-6, -5, -6, 7,

8, -3, 9, -8,

2, -12, 2, 8,

-11, -2, -10, 3,

-12, -13, -7, -9,

-11, 0, -10, -5,

5, -3, 11, 8,

-2, -13, -1, 12,

-1, -8, 0, 9,

-13, -11, -12, -5,

-10, -2, -10, 11,

-3, 9, -2, -13,

2, -3, 3, 2,

-9, -13, -4, 0,

-4, 6, -3, -10,

-4, 12, -2, -7,

-6, -11, -4, 9,

6, -3, 6, 11,

-13, 11, -5, 5,

11, 11, 12, 6,

7, -5, 12, -2,

-1, 12, 0, 7,

-4, -8, -3, -2,

-7, 1, -6, 7,

-13, -12, -8, -13,

-7, -2, -6, -8,

-8, 5, -6, -9,

-5, -1, -4, 5,

-13, 7, -8, 10,

1, 5, 5, -13,

1, 0, 10, -13,

9, 12, 10, -1,

5, -8, 10, -9,

-1, 11, 1, -13,

-9, -3, -6, 2,

-1, -10, 1, 12,

-13, 1, -8, -10,

8, -11, 10, -6,

2, -13, 3, -6,

7, -13, 12, -9,

-10, -10, -5, -7,

-10, -8, -8, -13,

4, -6, 8, 5,

3, 12, 8, -13,

-4, 2, -3, -3,

5, -13, 10, -12,

4, -13, 5, -1,

-9, 9, -4, 3,

0, 3, 3, -9,

-12, 1, -6, 1,

3, 2, 4, -8,

-10, -10, -10, 9,

8, -13, 12, 12,

-8, -12, -6, -5,

2, 2, 3, 7,

10, 6, 11, -8,

6, 8, 8, -12,

-7, 10, -6, 5,

-3, -9, -3, 9,

-1, -13, -1, 5,

-3, -7, -3, 4,

-8, -2, -8, 3,

4, 2, 12, 12,

2, -5, 3, 11,

6, -9, 11, -13,

3, -1, 7, 12,

11, -1, 12, 4,

-3, 0, -3, 6,

4, -11, 4, 12,

2, -4, 2, 1,

-10, -6, -8, 1,

-13, 7, -11, 1,

-13, 12, -11, -13,

6, 0, 11, -13,

0, -1, 1, 4,

-13, 3, -9, -2,

-9, 8, -6, -3,

-13, -6, -8, -2,

5, -9, 8, 10,

2, 7, 3, -9,

-1, -6, -1, -1,

9, 5, 11, -2,

11, -3, 12, -8,

3, 0, 3, 5,

-1, 4, 0, 10,

3, -6, 4, 5,

-13, 0, -10, 5,

5, 8, 12, 11,

8, 9, 9, -6,

7, -4, 8, -12,

-10, 4, -10, 9,

7, 3, 12, 4,

9, -7, 10, -2,

7, 0, 12, -2,

-1, -6, 0, -11

};

#pragma endregion

void ComputeORB(const cv::Mat &img, vector<cv::KeyPoint> &keypoints, vector<DescType> &descriptors) {

const int half_patch_size = 8;

const int half_boundary = 16;

int bad_points = 0;

for (auto &kp: keypoints) {

if (kp.pt.x < half_boundary || kp.pt.y < half_boundary ||

kp.pt.x >= img.cols - half_boundary || kp.pt.y >= img.rows - half_boundary) {

bad_points++;

descriptors.push_back({});

continue;

}

float m01 = 0, m10 = 0;

for (int dx = -half_patch_size; dx < half_patch_size; ++dx)

{

for (int dy = -half_patch_size; dy < half_patch_size; ++dy)

{

uchar pixel = img.at<uchar>(kp.pt.y + dy, kp.pt.x + dx);

m10 += dx * pixel;  // first-order moment along x

m01 += dy * pixel;  // first-order moment along y

}

}

float m_sqrt = sqrt(m01 * m01 + m10 * m10) + 1e-18;

float sin_theta = m01 / m_sqrt;

float cos_theta = m10 / m_sqrt;

DescType desc(8, 0);

for (int i = 0; i < 8; i++) {

uint32_t d = 0;

for (int k = 0; k < 32; k++) {

int idx_pq = i * 32 + k;  // 32 point pairs per 32-bit word, 8 words cover all 256 pairs

cv::Point2f p(ORB_pattern[idx_pq * 4], ORB_pattern[idx_pq * 4 + 1]);

cv::Point2f q(ORB_pattern[idx_pq * 4 + 2], ORB_pattern[idx_pq * 4 + 3]);

cv::Point2f pp = cv::Point2f(cos_theta * p.x - sin_theta * p.y, sin_theta * p.x + cos_theta * p.y)

+ kp.pt;

cv::Point2f qq = cv::Point2f(cos_theta * q.x - sin_theta * q.y, sin_theta * q.x + cos_theta * q.y)

+ kp.pt;

if (img.at<uchar>(pp.y, pp.x) < img.at<uchar>(qq.y, qq.x)) {

d |= 1u << k;  // unsigned literal so that k == 31 does not shift into the sign bit

}

}

desc[i] = d;

}

descriptors.push_back(desc);

}

cout << "bad/total: " << bad_points << "/" << keypoints.size() << endl;

}

void BfMatch(const vector<DescType> &desc1, const vector<DescType> &desc2, vector<cv::DMatch> &matches) {

const int d_max = 40;

for (size_t i1 = 0; i1 < desc1.size(); ++i1) {

if (desc1[i1].empty()) continue;

cv::DMatch m(static_cast<int>(i1), 0, 256.0f);  // explicit cast avoids a narrowing conversion from size_t

for (size_t i2 = 0; i2 < desc2.size(); ++i2) {

if (desc2[i2].empty()) continue;

int distance = 0;

for (int k = 0; k < 8; k++) {

distance += _mm_popcnt_u32(desc1[i1][k] ^ desc2[i2][k]);

}

if (distance < d_max && distance < m.distance) {

m.distance = distance;

m.trainIdx = i2;

}

}

if (m.distance < d_max) {

matches.push_back(m);

}

}

}
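BfMatch above relies on `_mm_popcnt_u32` from `<nmmintrin.h>`, which needs SSE4.2 support at compile time and at run time. As a minimal sketch for platforms without that intrinsic, the same Hamming distance over the 8 x 32-bit descriptor can be computed with `std::bitset`. The helper name `HammingDistance` is hypothetical and is not part of the original program.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Same descriptor layout as in orb_self.cpp: 8 words of 32 bits = 256 bits.
typedef std::vector<uint32_t> DescType;

// Hypothetical helper: counts the bits that differ between two descriptors.
// Assumes both descriptors have the same number of words.
int HammingDistance(const DescType &a, const DescType &b) {
    assert(a.size() == b.size());
    int distance = 0;
    for (std::size_t k = 0; k < a.size(); ++k) {
        // XOR leaves a 1 wherever the two words disagree; count() tallies them.
        distance += static_cast<int>(std::bitset<32>(a[k] ^ b[k]).count());
    }
    return distance;
}
```

Inside the inner loop of BfMatch, `HammingDistance(desc1[i1], desc2[i2])` could stand in for the eight `_mm_popcnt_u32` calls. It is likely slower than the intrinsic, so it only makes sense where portability matters more than speed.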

3. Solving camera motion with the epipolar constraint: pose_estimation_2d2d.cpp

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/features2d/features2d.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/calib3d/calib3d.hpp>

#include<chrono>

using namespace std;

using namespace cv;

void find_feature_matches(

const Mat &img_1, const Mat &img_2,

std::vector<KeyPoint> &keypoints_1,

std::vector<KeyPoint> &keypoints_2,

std::vector<DMatch> &matches);

void pose_estimation_2d2d(

std::vector<KeyPoint> keypoints_1,

std::vector<KeyPoint> keypoints_2,

std::vector<DMatch> matches,

Mat &R, Mat &t);

Point2d pixel2cam(const Point2d &p, const Mat &K);

int main(int argc, char **argv) {

chrono::steady_clock::time_point t1=chrono::steady_clock::now();

Mat img_1 = imread("/home/rxz/slambook2/ch7/1.png", CV_LOAD_IMAGE_COLOR);

Mat img_2 = imread("/home/rxz/slambook2/ch7/2.png", CV_LOAD_IMAGE_COLOR);

assert(img_1.data && img_2.data && "Can not load images!");

vector<KeyPoint> keypoints_1, keypoints_2;

vector<DMatch> matches;

find_feature_matches(img_1, img_2, keypoints_1, keypoints_2, matches);

cout << "Found " << matches.size() << " matched point pairs in total" << endl;

Mat R, t;

pose_estimation_2d2d(keypoints_1, keypoints_2, matches, R, t);

Mat t_x =

(Mat_<double>(3, 3) << 0, -t.at<double>(2, 0), t.at<double>(1, 0),

t.at<double>(2, 0), 0, -t.at<double>(0, 0),

-t.at<double>(1, 0), t.at<double>(0, 0), 0);

cout << "t^R=" << endl << t_x * R << endl;

Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

for (DMatch m: matches) {

Point2d pt1 = pixel2cam(keypoints_1[m.queryIdx].pt, K);

Mat y1 = (Mat_<double>(3, 1) << pt1.x, pt1.y, 1);

Point2d pt2 = pixel2cam(keypoints_2[m.trainIdx].pt, K);

Mat y2 = (Mat_<double>(3, 1) << pt2.x, pt2.y, 1);

Mat d = y2.t() * t_x * R * y1;

cout << "epipolar constraint residual of the matched pair = " << d << endl;

}

chrono::steady_clock::time_point t2=chrono::steady_clock::now();

chrono::duration<double>time_used=chrono::duration_cast<chrono::duration<double>>(t2-t1);

cout<<"total run time: "<<time_used.count()<<" seconds"<<endl;

return 0;

}

void find_feature_matches(const Mat &img_1, const Mat &img_2,

std::vector<KeyPoint> &keypoints_1,

std::vector<KeyPoint> &keypoints_2,

std::vector<DMatch> &matches) {

Mat descriptors_1, descriptors_2;

Ptr<FeatureDetector> detector = ORB::create();

Ptr<DescriptorExtractor> descriptor = ORB::create();

Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("BruteForce-Hamming");

detector->detect(img_1, keypoints_1);

detector->detect(img_2, keypoints_2);

descriptor->compute(img_1, keypoints_1, descriptors_1);

descriptor->compute(img_2, keypoints_2, descriptors_2);

vector<DMatch> match;

matcher->match(descriptors_1, descriptors_2, match);

double min_dist = 10000, max_dist = 0;

for (int i = 0; i < descriptors_1.rows; i++) {

double dist = match[i].distance;

if (dist < min_dist) min_dist = dist;

if (dist > max_dist) max_dist = dist;

}

printf("-- Max dist : %f \n", max_dist);

printf("-- Min dist : %f \n", min_dist);

for (int i = 0; i < descriptors_1.rows; i++) {

if (match[i].distance <= max(2 * min_dist, 30.0)) {

matches.push_back(match[i]);

}

}

}

Point2d pixel2cam(const Point2d &p, const Mat &K) {

return Point2d

(

(p.x - K.at<double>(0, 2)) / K.at<double>(0, 0),

(p.y - K.at<double>(1, 2)) / K.at<double>(1, 1)

);

}

void pose_estimation_2d2d(std::vector<KeyPoint> keypoints_1,

std::vector<KeyPoint> keypoints_2,

std::vector<DMatch> matches,

Mat &R, Mat &t) {

Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

vector<Point2f> points1;

vector<Point2f> points2;

for (int i = 0; i < (int) matches.size(); i++) {

points1.push_back(keypoints_1[matches[i].queryIdx].pt);

points2.push_back(keypoints_2[matches[i].trainIdx].pt);

}

Mat fundamental_matrix;

fundamental_matrix = findFundamentalMat(points1, points2, CV_FM_8POINT);

cout << "fundamental_matrix is " << endl << fundamental_matrix << endl;

Point2d principal_point(325.1, 249.7);

double focal_length = 521;

Mat essential_matrix;

essential_matrix = findEssentialMat(points1, points2, focal_length, principal_point);

cout << "essential_matrix is " << endl << essential_matrix << endl;

Mat homography_matrix;

homography_matrix = findHomography(points1, points2, RANSAC, 3);

cout << "homography_matrix is " << endl << homography_matrix << endl;

recoverPose(essential_matrix, points1, points2, R, t, focal_length, principal_point);

cout << "R is " << endl << R << endl;

cout << "t is " << endl << t << endl;

}

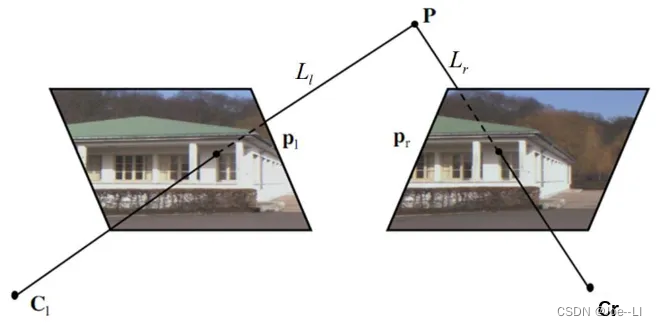
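The loop in `main` above checks the epipolar constraint y2^T * t^ * R * y1 ≈ 0 for every match, where y1 and y2 are normalized camera coordinates. The following is a minimal sketch of the same check in plain C++ without OpenCV, so the arithmetic is visible. The names `Vec3`, `Mat3`, `Skew`, `Mul`, `Dot` and `EpipolarResidual` are hypothetical helpers, not part of the original program.

```cpp
#include <array>
#include <cmath>

typedef std::array<double, 3> Vec3;
typedef std::array<Vec3, 3> Mat3;  // row-major 3x3 matrix

// Skew-symmetric matrix t^ with the property t^ * v == t x v.
Mat3 Skew(const Vec3 &t) {
    Mat3 m;
    m[0] = {0.0, -t[2], t[1]};
    m[1] = {t[2], 0.0, -t[0]};
    m[2] = {-t[1], t[0], 0.0};
    return m;
}

// Matrix-vector product.
Vec3 Mul(const Mat3 &m, const Vec3 &v) {
    Vec3 r;
    for (int i = 0; i < 3; ++i) {
        r[i] = m[i][0] * v[0] + m[i][1] * v[1] + m[i][2] * v[2];
    }
    return r;
}

double Dot(const Vec3 &a, const Vec3 &b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Residual y2^T * t^ * R * y1; close to zero for a correct match and a
// correct (R, t), where y1 and y2 are normalized coordinates (x, y, 1).
double EpipolarResidual(const Mat3 &R, const Vec3 &t,
                        const Vec3 &y1, const Vec3 &y2) {
    return Dot(y2, Mul(Skew(t), Mul(R, y1)));
}
```

With R set to the identity, t = (0.1, 0, 0) and a point at (0.5, -0.2, 2.0) in the first camera frame, the normalized coordinates are y1 = (0.25, -0.1, 1) and y2 = (0.3, -0.1, 1), and the residual is approximately zero. A wrong match moves the residual away from zero.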

4. Triangulation: triangulation.cpp

#include <iostream>

#include <opencv2/opencv.hpp>

#include<chrono>

using namespace std;

using namespace cv;

void find_feature_matches(

const Mat &img_1, const Mat &img_2,

std::vector<KeyPoint> &keypoints_1,

std::vector<KeyPoint> &keypoints_2,

std::vector<DMatch> &matches);

void pose_estimation_2d2d(

const std::vector<KeyPoint> &keypoints_1,

const std::vector<KeyPoint> &keypoints_2,

const std::vector<DMatch> &matches,

Mat &R, Mat &t);

void triangulation(

const vector<KeyPoint> &keypoint_1,

const vector<KeyPoint> &keypoint_2,

const std::vector<DMatch> &matches,

const Mat &R, const Mat &t,

vector<Point3d> &points

);

inline cv::Scalar get_color(float depth) {

float up_th = 50, low_th = 10, th_range = up_th - low_th;

if (depth > up_th) depth = up_th;

if (depth < low_th) depth = low_th;

return cv::Scalar(255 * depth / th_range, 0, 255 * (1 - depth / th_range));

}

Point2f pixel2cam(const Point2d &p, const Mat &K);

int main(int argc, char **argv) {

if (argc != 3) {

cout << "usage: triangulation img1 img2" << endl;

return 1;

}

chrono::steady_clock::time_point t1=chrono::steady_clock::now();

Mat img_1 = imread(argv[1], CV_LOAD_IMAGE_COLOR);

Mat img_2 = imread(argv[2], CV_LOAD_IMAGE_COLOR);

vector<KeyPoint> keypoints_1, keypoints_2;

vector<DMatch> matches;

find_feature_matches(img_1, img_2, keypoints_1, keypoints_2, matches);

cout << "Found " << matches.size() << " matched point pairs in total" << endl;

Mat R, t;

pose_estimation_2d2d(keypoints_1, keypoints_2, matches, R, t);

vector<Point3d> points;

triangulation(keypoints_1, keypoints_2, matches, R, t, points);

Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

Mat img1_plot = img_1.clone();

Mat img2_plot = img_2.clone();

for (int i = 0; i < matches.size(); i++) {

float depth1 = points[i].z;

cout << "depth of match " << i << ": " << depth1 << endl;

Point2d pt1_cam = pixel2cam(keypoints_1[matches[i].queryIdx].pt, K);

cv::circle(img1_plot, keypoints_1[matches[i].queryIdx].pt, 2, get_color(depth1), 2,8,0);

Mat pt2_trans = R * (Mat_<double>(3, 1) << points[i].x, points[i].y, points[i].z) + t;

float depth2 = pt2_trans.at<double>(2, 0);

cv::circle(img2_plot, keypoints_2[matches[i].trainIdx].pt, 2, get_color(depth2), 2);

}

cv::imshow("img 1", img1_plot);

cv::imshow("img 2", img2_plot);

chrono::steady_clock::time_point t2=chrono::steady_clock::now();

chrono::duration<double>time_used=chrono::duration_cast<chrono::duration<double>>(t2-t1);

cout<<"time spent by project: "<<time_used.count()<<"second"<<endl;

cv::waitKey();

return 0;

}

void find_feature_matches(const Mat &img_1, const Mat &img_2,

std::vector<KeyPoint> &keypoints_1,

std::vector<KeyPoint> &keypoints_2,

std::vector<DMatch> &matches) {

Mat descriptors_1, descriptors_2;

Ptr<FeatureDetector> detector = ORB::create();

Ptr<DescriptorExtractor> descriptor = ORB::create();

Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("BruteForce-Hamming");

detector->detect(img_1, keypoints_1);

detector->detect(img_2, keypoints_2);

descriptor->compute(img_1, keypoints_1, descriptors_1);

descriptor->compute(img_2, keypoints_2, descriptors_2);

vector<DMatch> match;

matcher->match(descriptors_1, descriptors_2, match);

double min_dist = 10000, max_dist = 0;

for (int i = 0; i < descriptors_1.rows; i++) {

double dist = match[i].distance;

if (dist < min_dist) min_dist = dist;

if (dist > max_dist) max_dist = dist;

}

printf("-- Max dist : %f \n", max_dist);

printf("-- Min dist : %f \n", min_dist);

for (int i = 0; i < descriptors_1.rows; i++) {

if (match[i].distance <= max(2 * min_dist, 30.0)) {

matches.push_back(match[i]);

}

}

}

void pose_estimation_2d2d(

const std::vector<KeyPoint> &keypoints_1,

const std::vector<KeyPoint> &keypoints_2,

const std::vector<DMatch> &matches,

Mat &R, Mat &t) {

Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

vector<Point2f> points1;

vector<Point2f> points2;

for (int i = 0; i < (int) matches.size(); i++) {

points1.push_back(keypoints_1[matches[i].queryIdx].pt);

points2.push_back(keypoints_2[matches[i].trainIdx].pt);

}

Point2d principal_point(325.1, 249.7);

int focal_length = 521;

Mat essential_matrix;

essential_matrix = findEssentialMat(points1, points2, focal_length, principal_point);

recoverPose(essential_matrix, points1, points2, R, t, focal_length, principal_point);

cout<<"R matrix:"<<endl<< R <<endl;

}

void triangulation(

const vector<KeyPoint> &keypoint_1,

const vector<KeyPoint> &keypoint_2,

const std::vector<DMatch> &matches,

const Mat &R, const Mat &t,

vector<Point3d> &points) {

Mat T1 = (Mat_<float>(3, 4) <<

1, 0, 0, 0,

0, 1, 0, 0,

0, 0, 1, 0);

Mat T2 = (Mat_<float>(3, 4) <<

R.at<double>(0, 0), R.at<double>(0, 1), R.at<double>(0, 2), t.at<double>(0, 0),

R.at<double>(1, 0), R.at<double>(1, 1), R.at<double>(1, 2), t.at<double>(1, 0),

R.at<double>(2, 0), R.at<double>(2, 1), R.at<double>(2, 2), t.at<double>(2, 0)

);

Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

vector<Point2f> pts_1, pts_2;

for(int i=0;i<matches.size();i++)

{

DMatch m=matches[i];

pts_1.push_back(pixel2cam(keypoint_1[m.queryIdx].pt, K));

pts_2.push_back(pixel2cam(keypoint_2[m.trainIdx].pt, K));

}

Mat pts_4d;

cv::triangulatePoints(T1, T2, pts_1, pts_2, pts_4d);

for (int i = 0; i < pts_4d.cols; i++) {

Mat x = pts_4d.col(i);

x /= x.at<float>(3, 0);

Point3d p(

x.at<float>(0, 0),

x.at<float>(1, 0),

x.at<float>(2, 0)

);

points.push_back(p);

}

}

Point2f pixel2cam(const Point2d &p, const Mat &K) {

return Point2f

(

(p.x - K.at<double>(0, 2)) / K.at<double>(0, 0),

(p.y - K.at<double>(1, 2)) / K.at<double>(1, 1)

);

}
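The listing above delegates the actual triangulation to `cv::triangulatePoints`. To show the idea behind it, here is a minimal sketch that recovers the depth s1 of a point in the first camera from the relation s2 * x2 = s1 * R * x1 + t. Left-multiplying by x2^ removes s2 and gives s1 * (x2^ R x1) + x2^ t = 0, which is solved for s1 in the least-squares sense. This is an illustration only and is not how OpenCV implements the function; all helper names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

typedef std::array<double, 3> Vec3;
typedef std::array<Vec3, 3> Mat3;  // row-major 3x3 matrix

Vec3 Cross(const Vec3 &a, const Vec3 &b) {
    Vec3 r = {a[1] * b[2] - a[2] * b[1],
              a[2] * b[0] - a[0] * b[2],
              a[0] * b[1] - a[1] * b[0]};
    return r;
}

Vec3 Mul(const Mat3 &m, const Vec3 &v) {
    Vec3 r;
    for (int i = 0; i < 3; ++i) {
        r[i] = m[i][0] * v[0] + m[i][1] * v[1] + m[i][2] * v[2];
    }
    return r;
}

double Dot(const Vec3 &a, const Vec3 &b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Depth s1 of the point in camera 1, given normalized coordinates x1, x2
// and the motion (R, t) that maps camera-1 coordinates into camera 2.
double DepthInFirstView(const Mat3 &R, const Vec3 &t,
                        const Vec3 &x1, const Vec3 &x2) {
    Vec3 a = Cross(x2, Mul(R, x1));  // x2^ * R * x1
    Vec3 b = Cross(x2, t);           // x2^ * t
    double aa = Dot(a, a);
    // Without translation (or with x2 parallel to R * x1) depth is unobservable.
    assert(aa > 1e-12 && "degenerate configuration: no parallax");
    return -Dot(a, b) / aa;          // least-squares solution of s1 * a + b = 0
}
```

For R equal to the identity, t = (0.1, 0, 0), x1 = (0.25, -0.1, 1) and x2 = (0.3, -0.1, 1), the recovered depth is approximately 2. Because t from `recoverPose` is only known up to scale, depths computed this way share that unknown scale.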

5. PnP: pose_estimation_3d2d.cpp

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/features2d/features2d.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/calib3d/calib3d.hpp>

#include <Eigen/Core>

#include <g2o/core/base_vertex.h>

#include <g2o/core/base_unary_edge.h>

#include <g2o/core/sparse_optimizer.h>

#include <g2o/core/block_solver.h>

#include <g2o/core/solver.h>

#include <g2o/core/optimization_algorithm_gauss_newton.h>

#include <g2o/solvers/dense/linear_solver_dense.h>

#include <sophus/se3.hpp>

#include <chrono>

#include <iomanip>

using namespace std;

using namespace cv;

void find_feature_matches(

const Mat &img_1, const Mat &img_2,

std::vector<KeyPoint> &keypoints_1,

std::vector<KeyPoint> &keypoints_2,

std::vector<DMatch> &matches);

Point2d pixel2cam(const Point2d &p, const Mat &K);

typedef vector<Eigen::Vector2d, Eigen::aligned_allocator<Eigen::Vector2d>> VecVector2d;

typedef vector<Eigen::Vector3d, Eigen::aligned_allocator<Eigen::Vector3d>> VecVector3d;

void bundleAdjustmentG2O(

const VecVector3d &points_3d,

const VecVector2d &points_2d,

const Mat &K,

Sophus::SE3d &pose

);

void bundleAdjustmentGaussNewton(

const VecVector3d &points_3d,

const VecVector2d &points_2d,

const Mat &K,

Sophus::SE3d &pose

);

int main(int argc, char **argv) {

if (argc != 4) {

cout << "usage: pose_estimation_3d2d img1 img2 depth1" << endl;

return 1;

}

Mat img_1 = imread(argv[1], CV_LOAD_IMAGE_COLOR);

Mat img_2 = imread(argv[2], CV_LOAD_IMAGE_COLOR);

assert(img_1.data && img_2.data && "Can not load images!");

vector<KeyPoint> keypoints_1, keypoints_2;

vector<DMatch> matches;

find_feature_matches(img_1, img_2, keypoints_1, keypoints_2, matches);

cout << "Found " << matches.size() << " matched point pairs in total" << endl;

Mat d1 = imread(argv[3], CV_LOAD_IMAGE_UNCHANGED);

Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

vector<Point3f> pts_3d;

vector<Point2f> pts_2d;

for (DMatch m:matches) {

ushort d = d1.ptr<unsigned short>(int(keypoints_1[m.queryIdx].pt.y))[int(keypoints_1[m.queryIdx].pt.x)];

if (d == 0)

continue;

float dd = d / 5000.0;

Point2d p1 = pixel2cam(keypoints_1[m.queryIdx].pt, K);

pts_3d.push_back(Point3f(p1.x * dd, p1.y * dd, dd));

pts_2d.push_back(keypoints_2[m.trainIdx].pt);

}

cout << "3d-2d pairs: " << pts_3d.size() << endl;

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

Mat r, t;

solvePnP(pts_3d, pts_2d, K, Mat(), r, t, false);

Mat R;

cv::Rodrigues(r, R);

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "solve pnp in opencv cost time: " << time_used.count() << " seconds." << endl;

cout << "R=" << endl << R << endl;

cout << "t=" << endl << t << endl;

VecVector3d pts_3d_eigen;

VecVector2d pts_2d_eigen;

for (size_t i = 0; i < pts_3d.size(); ++i) {

pts_3d_eigen.push_back(Eigen::Vector3d(pts_3d[i].x, pts_3d[i].y, pts_3d[i].z));

pts_2d_eigen.push_back(Eigen::Vector2d(pts_2d[i].x, pts_2d[i].y));

}

cout << "calling bundle adjustment by gauss newton" << endl;

Sophus::SE3d pose_gn;

t1 = chrono::steady_clock::now();

bundleAdjustmentGaussNewton(pts_3d_eigen, pts_2d_eigen, K, pose_gn);

t2 = chrono::steady_clock::now();

time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "solve pnp by gauss newton cost time: " << time_used.count() << " seconds." << endl;

cout << "calling bundle adjustment by g2o" << endl;

Sophus::SE3d pose_g2o;

t1 = chrono::steady_clock::now();

bundleAdjustmentG2O(pts_3d_eigen, pts_2d_eigen, K, pose_g2o);

t2 = chrono::steady_clock::now();

time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "solve pnp by g2o cost time: " << time_used.count() << " seconds." << endl;

return 0;

}

void find_feature_matches(const Mat &img_1, const Mat &img_2,

std::vector<KeyPoint> &keypoints_1,

std::vector<KeyPoint> &keypoints_2,

std::vector<DMatch> &matches) {

Mat descriptors_1, descriptors_2;

Ptr<FeatureDetector> detector = ORB::create();

Ptr<DescriptorExtractor> descriptor = ORB::create();

Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("BruteForce-Hamming");

detector->detect(img_1, keypoints_1);

detector->detect(img_2, keypoints_2);

descriptor->compute(img_1, keypoints_1, descriptors_1);

descriptor->compute(img_2, keypoints_2, descriptors_2);

vector<DMatch> match;

matcher->match(descriptors_1, descriptors_2, match);

double min_dist = 10000, max_dist = 0;

for (int i = 0; i < descriptors_1.rows; i++) {

double dist = match[i].distance;

if (dist < min_dist) min_dist = dist;

if (dist > max_dist) max_dist = dist;

}

printf("-- Max dist : %f \n", max_dist);

printf("-- Min dist : %f \n", min_dist);

for (int i = 0; i < descriptors_1.rows; i++) {

if (match[i].distance <= max(2 * min_dist, 30.0)) {

matches.push_back(match[i]);

}

}

}

Point2d pixel2cam(const Point2d &p, const Mat &K) {

return Point2d

(

(p.x - K.at<double>(0, 2)) / K.at<double>(0, 0),

(p.y - K.at<double>(1, 2)) / K.at<double>(1, 1)

);

}

void bundleAdjustmentGaussNewton(

const VecVector3d &points_3d,

const VecVector2d &points_2d,

const Mat &K,

Sophus::SE3d &pose) {

typedef Eigen::Matrix<double, 6, 1> Vector6d;

const int iterations = 10;

double cost = 0, lastCost = 0;

double fx = K.at<double>(0, 0);

double fy = K.at<double>(1, 1);

double cx = K.at<double>(0, 2);

double cy = K.at<double>(1, 2);

for (int iter = 0; iter < iterations; iter++) {

Eigen::Matrix<double, 6, 6> H = Eigen::Matrix<double, 6, 6>::Zero();

Vector6d b = Vector6d::Zero();

cost = 0;

for (int i = 0; i < points_3d.size(); i++) {

Eigen::Vector3d pc = pose * points_3d[i];

double inv_z = 1.0 / pc[2];

double inv_z2 = inv_z * inv_z;

Eigen::Vector2d proj(fx * pc[0] / pc[2] + cx, fy * pc[1] / pc[2] + cy);

Eigen::Vector2d e = points_2d[i] - proj;

cost += e.squaredNorm();

Eigen::Matrix<double, 2, 6> J;

J << -fx * inv_z,

0,

fx * pc[0] * inv_z2,

fx * pc[0] * pc[1] * inv_z2,

-fx - fx * pc[0] * pc[0] * inv_z2,

fx * pc[1] * inv_z,

0,

-fy * inv_z,

fy * pc[1] * inv_z2,

fy + fy * pc[1] * pc[1] * inv_z2,

-fy * pc[0] * pc[1] * inv_z2,

-fy * pc[0] * inv_z;

H += J.transpose() * J;

b += -J.transpose() * e;

}

Vector6d dx;

dx = H.ldlt().solve(b);

if (isnan(dx[0])) {

cout << "result is nan!" << endl;

break;

}

if (iter > 0 && cost >= lastCost) {

cout << "cost: " << cost << ", last cost: " << lastCost << endl;

break;

}

pose = Sophus::SE3d::exp(dx) * pose;

lastCost = cost;

cout << "iteration " << iter << " cost=" << std::setprecision(12) << cost << endl;

if (dx.norm() < 1e-6) {

break;

}

}

cout << "pose by g-n: \n" << pose.matrix() << endl;

}

class VertexPose : public g2o::BaseVertex<6, Sophus::SE3d> {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

virtual void setToOriginImpl() override {

_estimate = Sophus::SE3d();

}

virtual void oplusImpl(const double *update) override {

Eigen::Matrix<double, 6, 1> update_eigen;

update_eigen << update[0], update[1], update[2], update[3], update[4], update[5];

_estimate = Sophus::SE3d::exp(update_eigen) * _estimate;

}

virtual bool read(istream &in) override { return true; }

virtual bool write(ostream &out) const override { return true; }

};

class EdgeProjection : public g2o::BaseUnaryEdge<2, Eigen::Vector2d, VertexPose> {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

EdgeProjection(const Eigen::Vector3d &pos, const Eigen::Matrix3d &K) : _pos3d(pos), _K(K) {}

virtual void computeError() override {

const VertexPose *v = static_cast<VertexPose *> (_vertices[0]);

Sophus::SE3d T = v->estimate();

Eigen::Vector3d pos_pixel = _K * (T * _pos3d);

pos_pixel /= pos_pixel[2];

_error = _measurement - pos_pixel.head<2>();

}

virtual void linearizeOplus() override {

const VertexPose *v = static_cast<VertexPose *> (_vertices[0]);

Sophus::SE3d T = v->estimate();

Eigen::Vector3d pos_cam = T * _pos3d;

double fx = _K(0, 0);

double fy = _K(1, 1);

double cx = _K(0, 2);

double cy = _K(1, 2);

double X = pos_cam[0];

double Y = pos_cam[1];

double Z = pos_cam[2];

double Z2 = Z * Z;

_jacobianOplusXi

<< -fx / Z, 0, fx * X / Z2, fx * X * Y / Z2, -fx - fx * X * X / Z2, fx * Y / Z,

0, -fy / Z, fy * Y / (Z * Z), fy + fy * Y * Y / Z2, -fy * X * Y / Z2, -fy * X / Z;

}

virtual bool read(istream &in) override { return true; }

virtual bool write(ostream &out) const override { return true; }

private:

Eigen::Vector3d _pos3d;

Eigen::Matrix3d _K;

};

void bundleAdjustmentG2O(

const VecVector3d &points_3d,

const VecVector2d &points_2d,

const Mat &K,

Sophus::SE3d &pose) {

typedef g2o::BlockSolver<g2o::BlockSolverTraits<6, 3>> BlockSolverType;

typedef g2o::LinearSolverDense<BlockSolverType::PoseMatrixType> LinearSolverType;

auto solver = new g2o::OptimizationAlgorithmGaussNewton(

g2o::make_unique<BlockSolverType>(g2o::make_unique<LinearSolverType>()));

g2o::SparseOptimizer optimizer;

optimizer.setAlgorithm(solver);

optimizer.setVerbose(true);

VertexPose *vertex_pose = new VertexPose();

vertex_pose->setId(0);

vertex_pose->setEstimate(Sophus::SE3d());

optimizer.addVertex(vertex_pose);

Eigen::Matrix3d K_eigen;

K_eigen <<

K.at<double>(0, 0), K.at<double>(0, 1), K.at<double>(0, 2),

K.at<double>(1, 0), K.at<double>(1, 1), K.at<double>(1, 2),

K.at<double>(2, 0), K.at<double>(2, 1), K.at<double>(2, 2);

int index = 1;

for (size_t i = 0; i < points_2d.size(); ++i) {

auto p2d = points_2d[i];

auto p3d = points_3d[i];

EdgeProjection *edge = new EdgeProjection(p3d, K_eigen);

edge->setId(index);

edge->setVertex(0, vertex_pose);

edge->setMeasurement(p2d);

edge->setInformation(Eigen::Matrix2d::Identity());

optimizer.addEdge(edge);

index++;

}

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

optimizer.setVerbose(true);

optimizer.initializeOptimization();

optimizer.optimize(10);

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "optimization costs time: " << time_used.count() << " seconds." << endl;

cout << "pose estimated by g2o =\n" << vertex_pose->estimate().matrix() << endl;

pose = vertex_pose->estimate();

}
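Both optimizers in this section minimize the reprojection error of 3D points that `main` builds from the depth image via `pixel2cam` and the scale factor 5000 used in the listing. The following is a minimal sketch of that round trip in plain C++: back-project a pixel with its raw depth, project it again, and measure the squared pixel error. The types `Intrinsics`, `Point3`, `Pixel` and the function names are hypothetical and not part of the original program.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

struct Intrinsics { double fx, fy, cx, cy; };
struct Point3 { double x, y, z; };
struct Pixel { double u, v; };

// Back-project a pixel with a raw 16-bit depth value into camera coordinates.
// The divisor 5000 is the depth scale used in the listing above.
Point3 BackProject(const Pixel &px, uint16_t raw_depth, const Intrinsics &K) {
    assert(raw_depth != 0);  // 0 marks a missing depth measurement
    double z = raw_depth / 5000.0;
    Point3 p = {(px.u - K.cx) / K.fx * z, (px.v - K.cy) / K.fy * z, z};
    return p;
}

// Pinhole projection of a camera-frame point into pixel coordinates.
Pixel Project(const Point3 &p, const Intrinsics &K) {
    Pixel px = {K.fx * p.x / p.z + K.cx, K.fy * p.y / p.z + K.cy};
    return px;
}

// Squared reprojection error; its sum over all points is the cost that
// Gauss-Newton and g2o reduce in this section.
double SquaredReprojectionError(const Pixel &observed, const Point3 &p,
                                const Intrinsics &K) {
    Pixel proj = Project(p, K);
    double du = observed.u - proj.u;
    double dv = observed.v - proj.v;
    return du * du + dv * dv;
}
```

With the intrinsics from the listing (fx = 520.9, fy = 521.0, cx = 325.1, cy = 249.7), a pixel at (400, 300) with raw depth 10000 back-projects to a point at z = 2.0, and projecting that point again gives a squared error of approximately zero. In the real problem the point is first transformed by the current pose estimate, which is what makes the error nonzero.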

6. ICP: pose_estimation_3d3d.cpp

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/features2d/features2d.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/calib3d/calib3d.hpp>

#include <Eigen/Core>

#include <Eigen/Dense>

#include <Eigen/Geometry>

#include <Eigen/SVD>

#include <g2o/core/base_vertex.h>

#include <g2o/core/base_unary_edge.h>

#include <g2o/core/block_solver.h>

#include <g2o/core/optimization_algorithm_gauss_newton.h>

#include <g2o/core/optimization_algorithm_levenberg.h>

#include <g2o/solvers/dense/linear_solver_dense.h>

#include <chrono>

#include <sophus/se3.hpp>

using namespace std;

using namespace cv;

void find_feature_matches(

const Mat &img_1, const Mat &img_2,

std::vector<KeyPoint> &keypoints_1,

std::vector<KeyPoint> &keypoints_2,

std::vector<DMatch> &matches

);

Point2d pixel2cam(const Point2d &p, const Mat &K);

void pose_estimation_3d3d(

const vector<Point3f> &pts1,

const vector<Point3f> &pts2,

Mat &R, Mat &t

);

void bundleAdjustment(

const vector<Point3f> &points_3d,

const vector<Point3f> &points_2d,

Mat &R, Mat &t

);

class VertexPose : public g2o::BaseVertex<6, Sophus::SE3d> {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

virtual void setToOriginImpl() override {

_estimate = Sophus::SE3d();

}

virtual void oplusImpl(const double *update) override {

Eigen::Matrix<double, 6, 1> update_eigen;

update_eigen << update[0], update[1], update[2], update[3], update[4], update[5];

_estimate = Sophus::SE3d::exp(update_eigen) * _estimate;

}

virtual bool read(istream &in) override {}

virtual bool write(ostream &out) const override {}

};

class EdgeProjectXYZRGBDPoseOnly : public g2o::BaseUnaryEdge<3, Eigen::Vector3d, VertexPose> {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

EdgeProjectXYZRGBDPoseOnly(const Eigen::Vector3d &point) : _point(point) {}

virtual void computeError() override {

const VertexPose *pose = static_cast<const VertexPose *> ( _vertices[0] );

_error = _measurement - pose->estimate() * _point;

}

virtual void linearizeOplus() override {

VertexPose *pose = static_cast<VertexPose *>(_vertices[0]);

Sophus::SE3d T = pose->estimate();

Eigen::Vector3d xyz_trans = T * _point;

_jacobianOplusXi.block<3, 3>(0, 0) = -Eigen::Matrix3d::Identity();

_jacobianOplusXi.block<3, 3>(0, 3) = Sophus::SO3d::hat(xyz_trans);

}

bool read(istream &in) { return true; }

bool write(ostream &out) const { return true; }

protected:

Eigen::Vector3d _point;

};

int main(int argc, char **argv) {

if (argc != 5) {

cout << "usage: pose_estimation_3d3d img1 img2 depth1 depth2" << endl;

return 1;

}

Mat img_1 = imread(argv[1], CV_LOAD_IMAGE_COLOR);

Mat img_2 = imread(argv[2], CV_LOAD_IMAGE_COLOR);

vector<KeyPoint> keypoints_1, keypoints_2;

vector<DMatch> matches;

find_feature_matches(img_1, img_2, keypoints_1, keypoints_2, matches);

cout << "Found " << matches.size() << " matched point pairs in total" << endl;

Mat depth1 = imread(argv[3], CV_LOAD_IMAGE_UNCHANGED);

Mat depth2 = imread(argv[4], CV_LOAD_IMAGE_UNCHANGED);

Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

vector<Point3f> pts1, pts2;

for (DMatch m:matches) {

ushort d1 = depth1.ptr<unsigned short>(int(keypoints_1[m.queryIdx].pt.y))[int(keypoints_1[m.queryIdx].pt.x)];

ushort d2 = depth2.ptr<unsigned short>(int(keypoints_2[m.trainIdx].pt.y))[int(keypoints_2[m.trainIdx].pt.x)];

if (d1 == 0 || d2 == 0) {

continue;

}

Point2d p1 = pixel2cam(keypoints_1[m.queryIdx].pt, K);

Point2d p2 = pixel2cam(keypoints_2[m.trainIdx].pt, K);

float dd1 = float(d1) / 5000.0;

float dd2 = float(d2) / 5000.0;

pts1.push_back(Point3f(p1.x * dd1, p1.y * dd1, dd1));

pts2.push_back(Point3f(p2.x * dd2, p2.y * dd2, dd2));

}

cout << "3d-3d pairs: " << pts1.size() << endl;

Mat R, t;

pose_estimation_3d3d(pts1, pts2, R, t);

cout << "ICP via SVD results: " << endl;

cout << "R = " << R << endl;

cout << "t = " << t << endl;

cout << "R_inv = " << R.t() << endl;

cout << "t_inv = " << -R.t() * t << endl;

cout << "calling bundle adjustment" << endl;

bundleAdjustment(pts1, pts2, R, t);

cout << "verifying p1 = R * p2 + t" << endl;

for (int i = 0; i < 5 && i < (int) pts1.size(); i++) {

cout << "p1 = " << pts1[i] << endl;

cout << "p2 = " << pts2[i] << endl;

cout << "(R*p2+t) = " <<

R * (Mat_<double>(3, 1) << pts2[i].x, pts2[i].y, pts2[i].z) + t

<< endl;

cout << endl;

}

}

void find_feature_matches(const Mat &img_1, const Mat &img_2,

std::vector<KeyPoint> &keypoints_1,

std::vector<KeyPoint> &keypoints_2,

std::vector<DMatch> &matches) {

Mat descriptors_1, descriptors_2;

Ptr<FeatureDetector> detector = ORB::create();

Ptr<DescriptorExtractor> descriptor = ORB::create();

Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("BruteForce-Hamming");

detector->detect(img_1, keypoints_1);

detector->detect(img_2, keypoints_2);

descriptor->compute(img_1, keypoints_1, descriptors_1);

descriptor->compute(img_2, keypoints_2, descriptors_2);

vector<DMatch> match;

matcher->match(descriptors_1, descriptors_2, match);

double min_dist = 10000, max_dist = 0;

for (int i = 0; i < descriptors_1.rows; i++) {

double dist = match[i].distance;

if (dist < min_dist) min_dist = dist;

if (dist > max_dist) max_dist = dist;

}

printf("-- Max dist : %f \n", max_dist);

printf("-- Min dist : %f \n", min_dist);

for (int i = 0; i < descriptors_1.rows; i++) {

if (match[i].distance <= max(2 * min_dist, 30.0)) {

matches.push_back(match[i]);

}

}

}

Point2d pixel2cam(const Point2d &p, const Mat &K) {

return Point2d(

(p.x - K.at<double>(0, 2)) / K.at<double>(0, 0),

(p.y - K.at<double>(1, 2)) / K.at<double>(1, 1)

);

}

void pose_estimation_3d3d(const vector<Point3f> &pts1,

const vector<Point3f> &pts2,

Mat &R, Mat &t) {

Point3f p1, p2;

int N = pts1.size();

for (int i = 0; i < N; i++) {

p1 += pts1[i];

p2 += pts2[i];

}

p1 = Point3f(Vec3f(p1) / N);

p2 = Point3f(Vec3f(p2) / N);

vector<Point3f> q1(N), q2(N);

for (int i = 0; i < N; i++) {

q1[i] = pts1[i] - p1;

q2[i] = pts2[i] - p2;

}

// W = sum_i q1_i * q2_i^T, built from the de-centred coordinates

Eigen::Matrix3d W = Eigen::Matrix3d::Zero();

for (int i = 0; i < N; i++) {

W += Eigen::Vector3d(q1[i].x, q1[i].y, q1[i].z) * Eigen::Vector3d(q2[i].x, q2[i].y, q2[i].z).transpose();

}

cout << "W=" << W << endl;

Eigen::JacobiSVD<Eigen::Matrix3d> svd(W, Eigen::ComputeFullU | Eigen::ComputeFullV);

Eigen::Matrix3d U = svd.matrixU();

Eigen::Matrix3d V = svd.matrixV();

cout << "U=" << U << endl;

cout << "V=" << V << endl;

// With W = U * S * V^T, the rotation estimate is R = U * V^T

Eigen::Matrix3d R_ = U * (V.transpose());

if (R_.determinant() < 0) {

R_ = -R_;

}

Eigen::Vector3d t_ = Eigen::Vector3d(p1.x, p1.y, p1.z) - R_ * Eigen::Vector3d(p2.x, p2.y, p2.z);

R = (Mat_<double>(3, 3) <<

R_(0, 0), R_(0, 1), R_(0, 2),

R_(1, 0), R_(1, 1), R_(1, 2),

R_(2, 0), R_(2, 1), R_(2, 2)

);

t = (Mat_<double>(3, 1) << t_(0, 0), t_(1, 0), t_(2, 0));

}

void bundleAdjustment(

const vector<Point3f> &pts1,

const vector<Point3f> &pts2,

Mat &R, Mat &t) {

typedef g2o::BlockSolverX BlockSolverType;

typedef g2o::LinearSolverDense<BlockSolverType::PoseMatrixType> LinearSolverType;

auto solver = new g2o::OptimizationAlgorithmLevenberg(

g2o::make_unique<BlockSolverType>(g2o::make_unique<LinearSolverType>()));

g2o::SparseOptimizer optimizer;

optimizer.setAlgorithm(solver);

optimizer.setVerbose(true);

VertexPose *pose = new VertexPose();

pose->setId(0);

pose->setEstimate(Sophus::SE3d());

optimizer.addVertex(pose);

for (size_t i = 0; i < pts1.size(); i++) {

EdgeProjectXYZRGBDPoseOnly *edge = new EdgeProjectXYZRGBDPoseOnly(

Eigen::Vector3d(pts2[i].x, pts2[i].y, pts2[i].z));

edge->setVertex(0, pose);

edge->setMeasurement(Eigen::Vector3d(

pts1[i].x, pts1[i].y, pts1[i].z));

edge->setInformation(Eigen::Matrix3d::Identity());

optimizer.addEdge(edge);

}

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

optimizer.initializeOptimization();

optimizer.optimize(10);

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "optimization costs time: " << time_used.count() << " seconds." << endl;

cout << endl << "after optimization:" << endl;

cout << "T=\n" << pose->estimate().matrix() << endl;

Eigen::Matrix3d R_ = pose->estimate().rotationMatrix();

Eigen::Vector3d t_ = pose->estimate().translation();

R = (Mat_<double>(3, 3) <<

R_(0, 0), R_(0, 1), R_(0, 2),

R_(1, 0), R_(1, 1), R_(1, 2),

R_(2, 0), R_(2, 1), R_(2, 2)

);

t = (Mat_<double>(3, 1) << t_(0, 0), t_(1, 0), t_(2, 0));

}
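The 3D points fed to ICP in main() come from a back-projection step: each matched pixel is normalised with the intrinsics (as in pixel2cam) and then scaled by its depth. Below is a minimal sketch of that step in plain C++ with no OpenCV dependency. The names `Intrinsics`, `Point3` and `backProject` are hypothetical and do not appear in the listing. The default `depth_scale` of 5000 simply mirrors the `/ 5000.0` in main(); reading it as "raw depth units per metre" is an assumption (it is the convention of the TUM RGB-D data).

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical plain structs standing in for the 3x3 matrix K and cv::Point3f.
struct Intrinsics { double fx, fy, cx, cy; };
struct Point3 { double x, y, z; };

// Back-project pixel (u, v) with a raw 16-bit depth reading into the camera frame.
// depth_scale is assumed to be raw units per metre (5000 mirrors the listing).
// Returns false when the depth reading is 0, i.e. there is no valid measurement.
bool backProject(double u, double v, std::uint16_t raw_depth,
                 const Intrinsics &K, Point3 &out,
                 double depth_scale = 5000.0) {
  if (raw_depth == 0) return false;          // same rejection rule as in main()
  const double z = raw_depth / depth_scale;  // depth after scaling
  out.x = (u - K.cx) / K.fx * z;             // normalised x times depth
  out.y = (v - K.cy) / K.fy * z;             // normalised y times depth
  out.z = z;
  return true;
}
```

With the intrinsics from the listing, `Intrinsics K{520.9, 521.0, 325.1, 249.7}`, a pixel at the principal point with raw depth 5000 back-projects to (0, 0, 1). Folding the zero-depth check into the function keeps invalid points from ever reaching pts1 and pts2.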